Last week I shared my first review of the PLM Roadmap / PDT Fall 2020 conference, organized by CIMdata and Eurostep. Having now digested most of the content in detail, I can state this was the best conference of 2020. In my first post, the topics I shared were mainly the consultant’s view of digital thread and digital twin concepts.

This time, I want to focus on the content presented by the various Aerospace & Defense working groups, who shared their findings and lessons learned (so far) on topics like the Multi-view BOM, Supply Chain Collaboration, and MBSE Data Interoperability.

These sessions were nicely wrapped with presentations from Alberto Ferrari (Raytheon), discussing the digital thread between PLM and Simulation Lifecycle Management and Jeff Plant (Boeing) sharing their Model-Based Engineering strategy.

I believe these insights are crucial, although there might be people in the field who will question whether this research is essential. Isn’t there an easier way to achieve the same results?

Nicely formulated by Ilan Madjar as a comment to my first post:

Ilan makes a good point about simplifying the ideas for the masses to make it work. The majority of companies probably do not have the bandwidth to invest in and understand the future benefits of a digital thread or digital twins.

This does not mean that these topics should not be studied. If your business is in a small, simple eco-system and wants to work in a connected mode, you can choose a vendor and a few custom interfaces.

However, suppose you work in a global industry with an extensive network of partners, suppliers, and customers.

In that case, you cannot rely on ad-hoc interfaces or a single vendor. You need to invest in standards; you need to study common best practices to drive methodology, standards, and vendors to align.

This process of standardization is crucial if you want to have a sustainable, connected enterprise. In the end, the push from these companies will lead to standards that smaller companies can adhere or connect to.

The future is about Connected through Standards, as discussed in part 1 and further in this post. Let’s go!

Global Collaboration – Defining a baseline for data exchange processes and standards

Katheryn Bell (Pratt & Whitney Canada) presented the progress of the A&D Global Collaboration workgroup. As you can see from the project timeline, they have reached the phase to look towards the future.

Katheryn mentioned the need to standardize terminology as the first point of attention. I am fully aligned with that point; without a standardized terminology framework, people will misunderstand each other.

This happens even more in smaller businesses that sometimes just pick up (buzz) terms without a full understanding.

Several years ago, I talked with a PLM implementer who told me that their implementation focus was on systems engineering. After some more explanation, it appeared that, in reality, they were attempting configuration management. Here the confusion was massive. Still, a standard, common terminology is crucial in our domain, even if it seems academic.

The group has been analyzing interoperability standards and standards for long-term archiving and retrieval (LOTAR), and has also been studying the ISO 44001 standard on collaborative business relationship management systems.

In the Q&A session, Katheryn explained that the biggest problem to solve with collaboration was the risk of working with the wrong version of data between disciplines and suppliers.

Of course, such errors can lead to huge costs if they are discovered late (or too late). As some of the big OEMs work with thousands of suppliers, you can imagine it is not an issue easily discovered in a more ad-hoc environment.

The move to a standardized Technical Data Package based on a Model-Based Definition is one of the initiatives in this domain to reduce these types of errors.
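
To make the wrong-version risk a bit more tangible, here is a minimal Python sketch of a check a supplier could run before starting work. Everything in it is my own assumption for illustration: the `PackageItem` structure, the part numbers, and the idea of comparing local revisions against a manifest released by the OEM are hypothetical and not taken from the working group's material.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PackageItem:
    """One entry in a (hypothetical) technical data package manifest."""
    part_number: str
    revision: str


def find_stale_items(released, local):
    """Map part numbers to (local revision, released revision) where they differ.

    Items missing from the local copy are reported with a local revision of None.
    """
    local_by_part = {item.part_number: item.revision for item in local}
    stale = {}
    for item in released:
        local_rev = local_by_part.get(item.part_number)
        if local_rev != item.revision:
            stale[item.part_number] = (local_rev, item.revision)
    return stale


# Illustrative data only: part numbers and revisions are made up.
released_manifest = [
    PackageItem("BRACKET-100", "C"),
    PackageItem("HOUSING-200", "B"),
    PackageItem("SEAL-300", "A"),
]
supplier_copy = [
    PackageItem("BRACKET-100", "B"),  # supplier still holds the old revision
    PackageItem("HOUSING-200", "B"),
]

stale_items = find_stale_items(released_manifest, supplier_copy)
for part, (have, want) in stale_items.items():
    print(f"{part}: local revision {have}, released revision {want}")
```

With thousands of suppliers, the point is less the check itself and more that it only works when everybody agrees on what a package and a revision are, which is exactly where the standards come in.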

You can find the proceedings from the Global Collaboration working group here.

Connect, Trace, and Manage Lifecycle of Models, Simulation and Linked Data: Is That Easy?

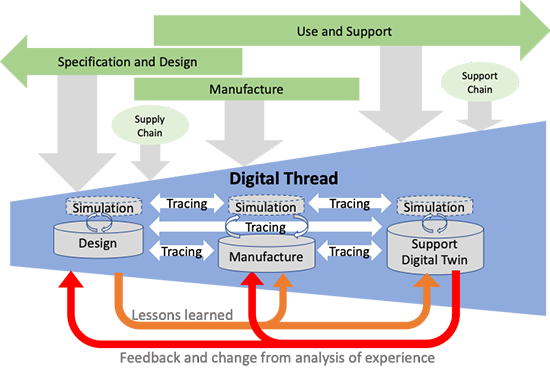

I loved how Alberto Ferrari (Raytheon) described the value of a model-based digital thread in his presentation, positioning it in a targeted enterprise.

Click on the image and discover how business objectives, processes and models go together supported by a federated infrastructure.

Alberto’s presentation was a kind of mind map of how I imagine the future, and it is a pity if you have not had the chance to see his session.

Alberto also focused on the importance of various simulation capabilities combined with simulation lifecycle management. For Alberto, they are essential to implement digital twins. Besides focusing on standards, Alberto pleads for semantic integration and an open service architecture, and stresses the importance of DevSecOps.

Enough food for thought; as Alberto mentioned, he presented the corporate vision, not the current state.

More A&D Action Groups

There were two more interesting specialized sessions where teams from the A&D action groups provided a status update.

Brandon Sapp (Boeing) and Ian Parent (Pratt & Whitney) shared the activities and progress on Minimum Model-Based Definition (MBD) for Type Design Certification.

As Brandon mentioned, MBD is already a widely used capability; however, MBD is still maturing and evolving. I believe that is also one of the reasons why MBD is not yet accepted in mainstream PLM. Smaller organizations will wait; however, can your company afford to wait?

More information about their progress can be found here.

Mark Williams (Boeing) reported from the A&D Model-Based Systems Engineering action group their first findings related to MBSE Data Interoperability, focusing on an Architecture Model Exchange Solution. A topic interesting to follow as the promise of MBSE is that it is about connected information shared in models. As Mark explained, data exchange standards for requirements and behavior models are mature, readily available in the tools, and easily adopted. Exchanging architecture models has proven to be very difficult. I will not dive into more details, respecting the audience of this blog.

For those interested in their progress, more information can be found here.

Model-Based Engineering @ Boeing

In this conference, the participation of Boeing was significant through the various action groups. As the cherry on the cake, there was Jeff Plant‘s session, giving an overview of what is happening at Boeing. Jeff is Boeing’s director of engineering practices, processes, and tools.

In his introduction, Jeff mentioned that Boeing has more than 160,000 employees in over 65 countries. They are working with more than 12,000 suppliers globally. These suppliers can be manufacturing, service or technology partners. Therefore, as you can imagine and as discussed by others during the conference, streamlined collaboration and traceability are crucial.

The now-famous MBE Diamond symbol illustrates the model-based information flows in the virtual world and the physical world based on the systems engineering approach. Like Katheryn Bell did in her session related to Global Collaboration, Jeff started explaining the importance of a common language and taxonomy needed if you want to standardize processes.

Zoom in on the Boeing MBE Taxonomy, and you will discover the clarity it brings for the company.

I was not aware of the ISO 23247 standard concerning the Digital Twin framework for manufacturing, aiming to apply industry standards to the model-based definition of products and process planning. A standard certainly to follow as it brings standardization on top of existing standards.

As Jeff noted: A practical standard for implementation in a company of any size. In my opinion, mandatory for a sustainable, connected infrastructure.

Jeff presented the slide below, showing their standardization internally around federated platforms.

This slide closely resembles the future platform vision I have been sharing since 2017 when discussing PLM’s future at PLM conferences and explaining the differences between Coordinated and Connected – see also my presentation here on Slideshare.

You can zoom in on the picture to see the similarities. For me, the differences were interesting to observe. In Jeff’s diagram, the product lifecycle at the top indicates the platform of (central) interest during each lifecycle stage, suggesting a linear process again.

In reality, the flow of information through feedback loops will be there too.

The second exciting detail is that these federated architectures should be based on strong interoperability standards. Jeff is urging other companies, academics and vendors to invest and come to industry standards for Model-Based System Engineering practices. The time is now to act on this domain.

It reminded me again of Marc Halpern’s message mentioned in my previous post (part 1) that we should be worried about vendor alliances offering an integrated end-to-end data flow based on their solutions. This would lead to an immense vendor lock-in if these interfaces are not based on strong industry standards.

Therefore, don’t watch from the sideline; it is the voice (and effort) of the companies that can drive standards.

Finally, during the Q&A part, Jeff made an interesting point explaining Boeing is making a serious investment, as you can see from their participation in all the action groups. They have made the long-term business case.

The team is confident that the business case for such an investment is firm and stable; however, long-term investments like these, without direct results, might come under pressure when the business is under pressure.

The virtual fireside chat

The conference ended with a virtual fireside chat, from which I picked up an interesting point that Marc Halpern brought in. Marc mentioned a survey Gartner has done with companies in fast-moving industries related to the benefits of PLM. Companies reported improvements in accuracy and product development. They saw much less of a reduction in time to market or cost. After analysis, Gartner believes the real issue is related to collaboration processes and supply chain practices. Here lead times did not change, nor did the number of changes.

Marc believes that this topic will really show benefits in the future with cloud and connected suppliers. This reminded me of an article published by McKinsey called The case for digital reinvention. In this article, the authors indicated that only 2 % of the companies interviewed were investing in a digital supply chain. At the same time, the expected benefits in this area would have the most significant ROI.

The good news: there is consistency, and we know where to focus for early results.

Conclusion

It was a great conference as here we could see digital transformation in action (groups). Where vendor solutions often provide a sneak preview of the future, we saw people working on creating the right foundations based on standards. My appreciation goes to all the active members in the CIMdata A&D action groups as they provide the groundwork for all of us – sooner or later.

After the series about “Learning from the past,” it is time to start looking toward the future. I learned from several discussions that I probably work most of the time with advanced companies. I believe this should motivate companies that lag behind to look into the future even more.

If you look into the future for your company, you need new or better business outcomes. That should be the driver for your company. A company does not need PLM or a Digital Twin. A company might want to reduce its time to market and improve collaboration between all stakeholders. These objectives can be realized by different ways of working and an IT infrastructure to allow these processes to become digital and connected.

That is the “game”. Coming back to the future of PLM. We do not need a discussion about definitions; I leave this to the academics and vendors. We will see the same applies to the concept of a Digital Twin.

My statement: The digital twin is not new. Everybody can have their own digital twin as long as you interpret the definition differently. Does this sound like the PLM definition?

The definition

I like to follow the Gartner definition:

A digital twin is a digital representation of a real-world entity or system. The implementation of a digital twin is an encapsulated software object or model that mirrors a unique physical object, process, organization, person, or other abstraction. Data from multiple digital twins can be aggregated for a composite view across a number of real-world entities, such as a power plant or a city, and their related processes.

As you see, not a narrow definition. Now we will look at the different types of interpretations.

Single-purpose siloed Digital Twins

- Simple – data only

One of the most straightforward applications of a digital twin is, for example, my Garmin Connect environment. My device registers performance parameters (speed, cadence, power, heartbeat, location) when cycling. Then, after every trip, I can analyze my performance. I can see changes in my overall performance; compare my performance with others in my category (weight, age, sex).

Based on that, I can decide if I want to improve my performance. My personal business goal is to maintain and improve my overall performance, knowing I cannot stop aging by upgrading my body.
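
As an illustration of how little is needed for such a data-only twin, here is a minimal Python sketch. It is not how Garmin Connect works; the `RideSample` structure, the sample values, and the two helper functions are assumptions of mine to show the principle of recording parameters and comparing rides afterwards.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RideSample:
    """One recorded moment of a ride (hypothetical structure)."""
    speed_kmh: float
    cadence_rpm: float
    power_w: float
    heart_rate_bpm: float


FIELDS = ("speed_kmh", "cadence_rpm", "power_w", "heart_rate_bpm")


def summarize(samples):
    """Average each recorded parameter over one ride."""
    return {name: mean(getattr(s, name) for s in samples) for name in FIELDS}


def compare(previous, latest):
    """Difference per parameter; positive means the latest ride was higher."""
    return {name: latest[name] - previous[name] for name in FIELDS}


# Made-up sample data for two rides.
ride_last_month = [
    RideSample(26.0, 82.0, 180.0, 148.0),
    RideSample(28.0, 86.0, 200.0, 152.0),
]
ride_today = [
    RideSample(28.0, 85.0, 195.0, 147.0),
    RideSample(30.0, 89.0, 215.0, 151.0),
]

delta = compare(summarize(ride_last_month), summarize(ride_today))
for name, change in delta.items():
    print(f"{name}: {change:+.1f}")
```

There is no model of the rider here, only data and a comparison, which is why I call this the simple case.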

On November 4th, 2020, I am participating in the (almost virtual) Digital Twin conference organized by Bits&Chips in the Netherlands. In the context of human performance, I look forward to Natal van Riel’s presentation: Towards the metabolic digital twin – for sure, this direction is not simple. Natal is a full professor at the Technical University in Eindhoven, the “smart city” in the Netherlands.

- Medium – data and operating models

Many connected devices in the world use the same principle. An airplane engine, an industrial robot, a wind turbine, a medical device, and a train carriage; all track the performance based on this connection between physical and virtual, based on some sort of digital connectivity.

The business case here is also monitoring performance, predicting maintenance, and upgrading the product when needed.

This is the domain of Asset Lifecycle Management, a practice that has existed for decades. Based on financial and performance models, the optimal balance between maintaining and overhauling has to be found. Repairs are disruptive and can be extremely costly. A manufacturing site that cannot produce can cost millions per day. Connecting data between the physical and the virtual model allows us to have real-time insights and be proactive. It becomes a digital twin.
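
The balance between maintaining and running on can be sketched in a few lines of Python. This is a deliberately naive illustration under my own assumptions: the cost figures, the single failure probability (which in practice would come from a model fed by the connected asset), and the function names are all hypothetical.

```python
def expected_cost_of_waiting(failure_probability, repair_cost,
                             unplanned_downtime_days, loss_per_day):
    """Expected cost if we keep running and accept the risk of a breakdown."""
    breakdown_cost = repair_cost + unplanned_downtime_days * loss_per_day
    return failure_probability * breakdown_cost


def planned_maintenance_cost(service_cost, planned_downtime_days, loss_per_day):
    """Cost of stopping now for a planned service."""
    return service_cost + planned_downtime_days * loss_per_day


def recommend(failure_probability):
    """Compare both options using made-up cost figures."""
    loss_per_day = 1_000_000  # a site that cannot produce
    waiting = expected_cost_of_waiting(
        failure_probability,
        repair_cost=500_000,
        unplanned_downtime_days=5,
        loss_per_day=loss_per_day,
    )
    planned = planned_maintenance_cost(
        service_cost=200_000,
        planned_downtime_days=1,
        loss_per_day=loss_per_day,
    )
    return "maintain now" if planned < waiting else "keep running"


print(recommend(0.125))  # low estimated risk
print(recommend(0.25))   # higher estimated risk
```

The real-time connection matters because the failure probability is no longer a yearly guess; it can be updated every time new data arrives from the asset.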

- Advanced – data and connected 3D model

The digital twin we see the most in marketing videos is a virtual twin, using a 3D representation for understanding and navigation. The 3D representation provides a Virtual Reality (VR) environment with connected data. When pointing at the virtual components, information might appear, or some animation might take place.

Building such a virtual representation is a significant effort; therefore, there needs to be a serious business case.

The simplest business case is to use the virtual twin for training purposes. A flight simulator provides a virtual environment and behavior as if you are flying in a physical airplane – the behavior model behind the simulator should match the real behavior as closely as possible. However, as it is a model, it will never be 100 % reality and requires updates when new findings or product changes appear.

A virtual model of a platform or plant can be used for training on Standard Operating Procedures (SOPs). In the physical world, there is no place or time to conduct such training. Here the complexity might be lower. There is a 3D Model; however, serious updates can only be expected after a major maintenance or overhaul activity.

These practices are not new either and are used in places where physical training cannot be done.

More challenging is the Augmented Reality (AR) use case. Here the virtual model, most of the time a lightweight 3D model, connects to real-time data coming from other sources. For example, AR can be used when an engineer has to service a machine. The AR environment might project actual data from the machine and indicate service points and service procedures.

The positive side of the business case is clear for such an opportunity: ensuring service engineers always work with the right information in a real-time context. In reality, the main obstacles to implementing AR are access to the data, the presentation of the data, and keeping the data in the AR environment in line with reality.

And although there are 3D Models in use, they are, to my knowledge, always created in siloes, not yet connected to their design sources. Have a look at the Digital Twin conference from Bits&Chips, as mentioned before.

Several of the cases mentioned above will be discussed here. The conference’s target is to share real cases concluded by Q & A sessions, crucial for a virtual event.

Connected Virtual Twins along the product lifecycle

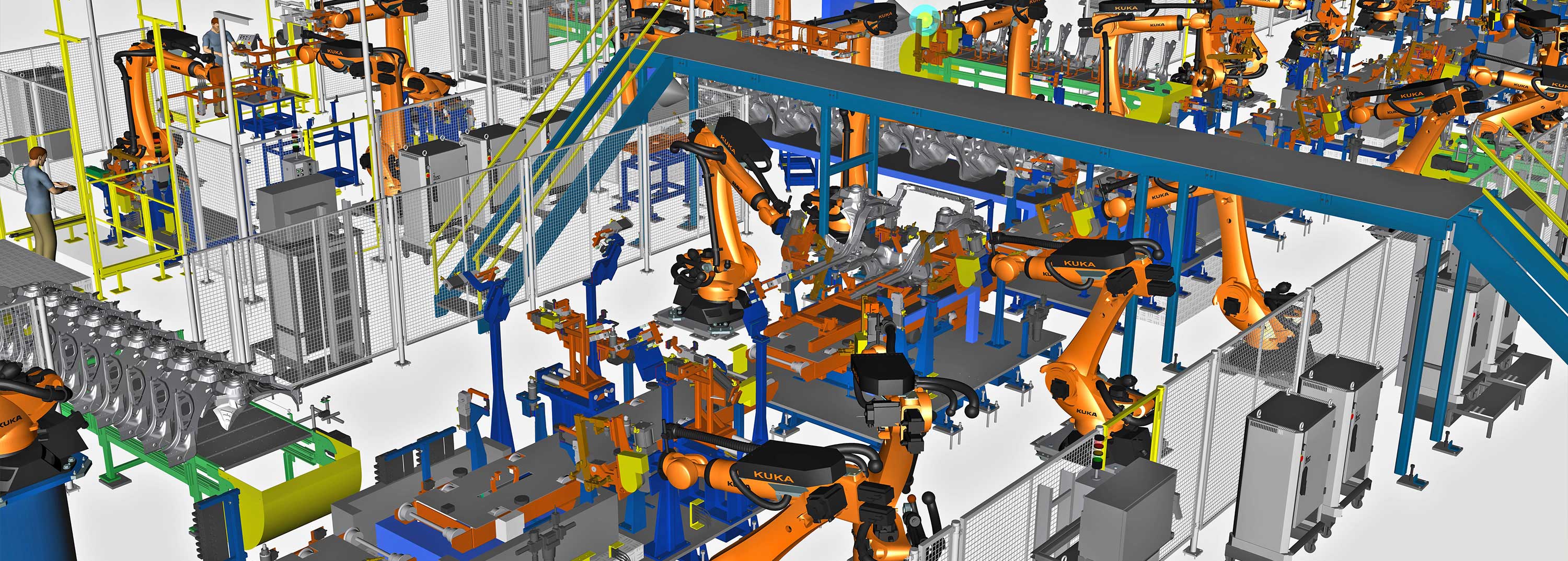

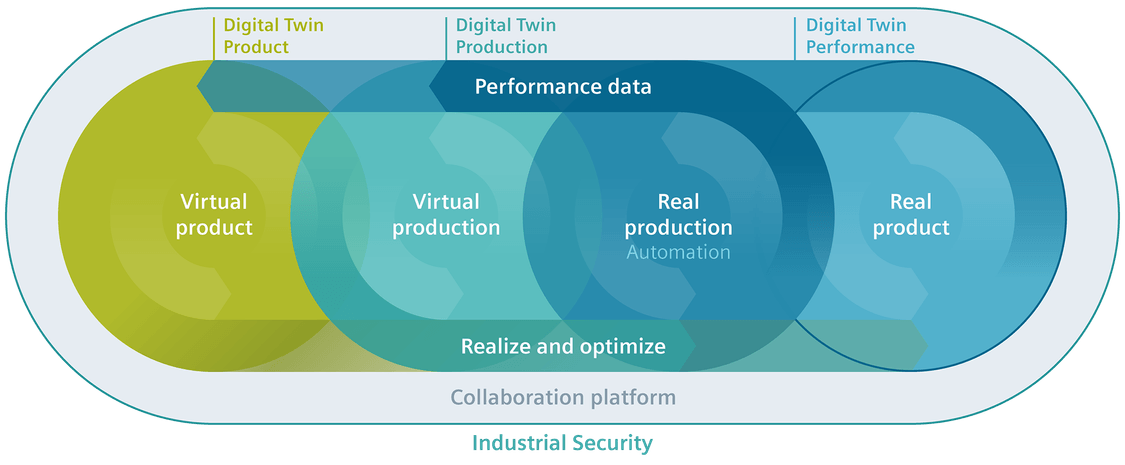

So far, we have been discussing the virtual twin concept, where we connect a product/system/person in the physical world to a virtual model. Now let us zoom in on the virtual twins relevant for the early parts of the product lifecycle, the manufacturing twin, and the development twin. This image from Siemens illustrates the concept:

On their slides, they imagine a completely integrated framework, which is the future vision. Let us first zoom in on the individual connected twins.

The digital production twin

This is the area of virtual manufacturing and creating a virtual model of the manufacturing plant. Virtual manufacturing planning is not a new topic. DELMIA (Dassault Systèmes) and Tecnomatix (Siemens) have long offered virtual manufacturing planning solutions.

At that time, the business case was based on the fact that the definition of a manufacturing plant and process done virtually allows you to optimize the plant before investing in physical assets.

This saves money, as there is no costly prototype phase to optimize production. In a virtual world, you can perform many trade-off studies without extra costs. That was the past (and, for many companies, still the current situation).

With the need to be more flexible in manufacturing to address individual customer orders without increasing the overhead of delivering these customer-specific solutions, there is a need for a configurable plant that can produce these individual products (batch size 1).

This is where the virtual plant model comes into the picture again. Instead of having a virtual model to define the ultimate physical plant, now the virtual model remains an active model to propose and configure the production process for each of these individual products in the physical plant.

This is partly what Industry 4.0 is about. Using a model-based approach to configure the plant and its assets in a connected manner. The digital production twin drives the execution of the physical plant. The factory has to change from a static factory to a dynamic “smart” factory.
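
To show what "the model configures the plant for each order" could mean in its simplest form, here is a small Python sketch. The plant model, the station names, the product options, and the rule that testing always comes last are all hypothetical assumptions of mine, not a description of any vendor's solution.

```python
# Hypothetical plant model: which station performs which operation.
PLANT_MODEL = {
    "cut_frame": "laser_cell_1",
    "weld_frame": "robot_cell_2",
    "paint": "paint_booth",
    "mount_motor": "assembly_line_a",
    "final_test": "test_bench",
}

# Hypothetical product rules: operations every order needs,
# plus extra operations triggered by customer options.
BASE_OPERATIONS = ["cut_frame", "weld_frame"]
OPTION_OPERATIONS = {
    "custom_color": ["paint"],
    "electric_drive": ["mount_motor"],
}
CLOSING_OPERATION = "final_test"  # assumed to always come last


def plan_order(options):
    """Return the (operation, station) steps for one individual order."""
    operations = list(BASE_OPERATIONS)
    for option in options:
        operations.extend(OPTION_OPERATIONS[option])
    operations.append(CLOSING_OPERATION)
    return [(op, PLANT_MODEL[op]) for op in operations]


# Batch size 1: every order gets its own routing from the same model.
for step in plan_order(["custom_color", "electric_drive"]):
    print(step)
```

The sketch only works because the product options and the plant capabilities are both available as structured, configurable data, which brings us to the main challenge.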

In the domain of Industry 4.0, companies are reporting progress. However, in my experience, the main challenge is still that the product source data is not yet built in a model-based, configurable manner and therefore requires manual rework. This is the area of Model-Based Definition, and I have written about this aspect several times. Latest post: Model-Based: Connecting Engineering and Manufacturing.

The business case for this type of digital twin, of course, is to be able to deliver customer-specific products with extremely competitive speed and reduced cost compared to standard. It could be your company’s survival strategy. As it is hard to predict the future, as we see from COVID-19, it is still crucial to anticipate the future instead of waiting.

The digital development twin

Before a product gets manufactured, there is a product development process. In the past, this was purely mechanical with some electronic components. Nowadays, many companies are actually manufacturing systems, as the software controlling the product plays a significant role. In this context, the model-based systems engineering approach is the upcoming approach to defining and testing a system virtually before committing to the physical world.

Model-Based Systems Engineering can define a single complex product and perform all kinds of analyses on the system even before there is a physical system in place. I will explain more about model-based systems engineering in future posts. In this context, I want to stress that having a model-based system engineering environment combined with modularity (do not confuse it with model-based) is a solid foundation for dealing with unique custom products. Solutions can be configured and validated against their requirements already during the engineering phase.

The business case for the digital development twin is easy to make. Shorter time to market, improved and validated quality, and reduced engineering hours and costs compared to traditional ways of working. To achieve these results, for sure, you need to change your ways of working and the tools you are using. So it won’t be that easy!

For those interested in Industry 4.0 and the Model-Based System Engineering approach, join me at the upcoming PLM Road Map 2020 and PDT 2020 conference on 17-18-19 November. As you can see from the agenda, there is a lot of attention to the Digital Twin and Model-Based approaches.

Three digital half-days with hopefully a lot to learn while staying with our feet on the ground. In particular, I am looking forward to Marc Halpern’s keynote speech: Digital Thread: Be Careful What you Wish For, It Just Might Come True.

Conclusion

It has been very noisy on the internet related to product features and technologies, probably due to COVID-19 and therefore disrupted interactions between all of us – vendors, implementers and companies trying to adjust their future. The Digital Twin concept is an excellent framing for a concept that everyone can relate to. Choose your business case and then look for the best matching twin.

I believe we are almost at the end of learning from the past. We have seen how, from an initial serial CAD-driven approach with PDM, we evolved to PLM-managed structures, the EBOM and the MBOM. Or to illustrate this statement, look at the image below, where I use a Tech-Clarity image from Jim Brown.

The image on the right describes perfectly the complementary roles of PLM and ERP. The image on the left shows the typical PDM-approach. PDM feeding ERP in a linear process. The image on the right, I believe it is from 2004, shows the best practice before digital transformation. PLM is supporting product innovation in an iterative approach, pushing released information to ERP for execution.

As I think in images, I like the concept of a circle for PLM and an arrow for ERP. I am always using those two images in discussions with my customers when we want to understand if a particular activity should be in the PLM or ERP-domain.

Ten years ago, the PLM-domain was conceptually further extended by introducing support for products in operations and service. Similar to the EBOM (engineering) and the MBOM (manufacturing), the SBOM (service) was introduced to support product information for products in operation. In theory, a fully connected circle.

Asset Lifecycle Management

At the same time, I was promoting PLM-practices for owners/operators to enhance Asset Lifecycle Management. My first post on this topic, from June 2010, was called PLM for Asset Lifecycle Management and Asset Development, and it introduces this approach.

Conceptually, the SBOM and Asset Lifecycle Management have a lot in common. There is a designed product, in this case, an asset (plant, machine) running in the field, and we need to make sure operators have the latest information about the asset. And in case of asset changes, which can be a maintenance operation, a repair or a complete overhaul, we need to be sure the changes are based on the correct information from the as-built environment. This requires full configuration management.

Asset changes can be based on extensive projects that need to be treated like new product development projects, with a staged approach that can take weeks, months, sometimes years. These activities are typical activities performed in PLM-systems, not in MRO-systems that are designed to manage the actual operation. Again here we see the complementary roles of PLM (iterative) and MRO (execution).

Since 2008, I have worked a lot in this environment, mainly in the nuclear and process industry. If you want to learn more about this aspect of PLM, I recommend looking at the PLMpartner website, where Bjørn Fidjeland, in cooperation with SharePLM, published a course on Plant Information Management. We worked together in several projects, and Bjørn has made a great effort to describe the logical model to be used instead of a function-feature story.

Ten years ago, we were not calling this concept the “Digital Twin,” as the aim was to provide end-to-end support of asset information from engineering, procurement, and construction towards operation in a coordinated manner. The breaking point in the relation between the EPCs and Owner/Operators is the data-handover – how much of your IP can/do you expose and what is needed. Nowadays, we would call striving for end-to-end data continuity the Digital Thread.

Hot from the press in this context, CIMdata just published a commentary, Managing the Digital Thread in Global Value Chains, describing Eurostep’s ShareAspace capabilities and experiences in managing an end-to-end information flow (Digital Thread) in a heterogeneous environment based on exchange standards like ISO 10303-239 PLCS. Their solution is based on what I consider a more modern approach for managing digital continuity compared to the traditional approach I described before. Compare the two images in this paragraph. The first image represents the old/current way with a disconnected handover; the second represents the ShareAspace connected approach based on a real digital thread.

The Service BOM

As discussed with Asset Lifecycle Management, there is a disconnect between the engineering disciplines and operations in the field, looking from the point of view of an Asset owner/operator.

Now when we look from the perspective of a manufacturing company that produces assets to be serviced, we can identify a different dataflow and a new structure, the Service BOM (SBOM).

The SBOM provides information on how a product needs to be serviced. What are the parts that require service, and what are the service kits that are possible for that product? For that reason, service engineering should be done in parallel to product engineering. When designing a product, the engineer needs to identify which are the wearing parts (these always require service in time) and which parts might be serviceable.

There are different ways to look at the SBOM. Conceptually, the SBOM could be created in close relation with the EBOM. At the moment you define your product, you also should specify how the product will be serviced. See the image below.

From this example, it is clear that part standardization and modularization have a considerable benefit for services downstream. What if you have only one serviceable part that applies to many products? The number of parts to have in stock will be strongly reduced, compared to having many similar parts that each fit only a single product.
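To make the stock argument concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `EbomLine` class, the `service_class` values, and the pump part names are hypothetical and do not come from any PLM-system. It derives a simple SBOM from EBOM lines that engineering has marked as wearing or serviceable, and then counts how many distinct service parts need to be stocked with and without standardization.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EbomLine:
    part: str
    # Hypothetical classification set during design:
    # "wear" (always needs service in time), "serviceable", or "none".
    service_class: str = "none"


def derive_sbom(ebom):
    """Keep only the EBOM parts that engineering marked as relevant for service."""
    return [line.part for line in ebom if line.service_class != "none"]


def parts_to_stock(eboms):
    """Distinct service parts needed to support all products in the portfolio."""
    stock = set()
    for ebom in eboms.values():
        stock.update(derive_sbom(ebom))
    return stock


# Illustrative data only: three products, each with its own seal and bearing.
product_specific = {
    name: [
        EbomLine(f"housing-{name}"),
        EbomLine(f"seal-{name}", "wear"),
        EbomLine(f"bearing-{name}", "serviceable"),
    ]
    for name in ("A", "B", "C")
}

# The same three products after standardizing the seal and the bearing.
standardized = {
    name: [
        EbomLine(f"housing-{name}"),
        EbomLine("seal-std", "wear"),
        EbomLine("bearing-std", "serviceable"),
    ]
    for name in ("A", "B", "C")
}

print(len(parts_to_stock(product_specific)))  # 6 distinct service parts
print(len(parts_to_stock(standardized)))      # 2 distinct service parts
```

In this toy portfolio, standardization reduces the stock list from six service parts to two. A real SBOM would, of course, also carry service kits, quantities, and links to service documentation.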

Depending on the type of product, the SBOM can be generic, serving many products in the field. In that case, the company has to deal with catalogs, to be defined in PLM. Or the SBOM can be aligned with the As-Built of a capital product in the field. In that case, the concepts of Asset Lifecycle Management apply. Click on the image to see a clear picture.

The SBOM on its own, in such an environment, will have links to specific documents, service instructions, operating manuals.

If your PLM-system allows it, extending the EBOM and MBOM with an SBOM is not a complex effort. What is crucial to understand is that the SBOM has its own lifecycle, which can even last longer than the active product sold. So sometimes, manufacturing specifications related to service parts need to be maintained too, creating a link between the SBOM and potential MBOM(s).

ECM = Enterprise Change Management

When I discussed ECM in my previous post in the context of Engineering Change Management, I got the feedback that nowadays, everyone talks about Enterprise Change Management. Engineering Change Management is old school.

In the past, and even in a 2014 benchmark, a customer had two change management systems, one in PLM and one in ERP, and companies were looking into connecting these two processes. Like the BOM-interaction between PLM and ERP, this is, technology-wise, never a real problem.

The real problem in such situations was to come to a logical flow of events. Many times the company insisted that every change should start from the ERP-system, “as we like to standardize.” This means that even an engineering change had to be registered first in the ERP-system.

Luckily the reach of PLM has grown. PLM is no longer the engineering tool (IT-system thinking). PLM has become the information backbone for product information all along the product lifecycle. Having the MBOM and SBOM available through a PLM-infrastructure allows organizations to streamline their processes.

And in this modern environment, enterprise change management might take place mostly in a PLM-infrastructure. The PLM-infrastructure providing a digital thread, as the Aras picture above illustrates, provides the full traceability to support configuration management.

However, we still have to remember that configuration management and engineering change management, first of all, are based on methodology and processes. Next, the combination of tools to be used will vary.

I like to conclude this topic with a quote from Lee Perrin’s comment on my previous blog post:

I would add that aerospace companies implemented CM, to avoid fatal consequences to their companies, but also to their flying customers.

PLM provides the framework within which to carry out Configuration Management. CM can indeed be carried out without PLM, as was done in the old paper-based days. As you have stated, PLM makes the whole CM process much more efficient. I think more transparent too.

Conclusion

After nine posts around the theme Learning from the past to understand the future, I walked through the history of CAD, PDM and PLM in a fast mode, pointing to practices and friction points. In the blogging space, it is hard to find this information as most blog posts are coming from software vendors explaining why their tool is needed. Hopefully, this series has helped many of you to understand a broader context. Now I want to focus on the future again in my upcoming blog posts.

Still, feel free to contact me and discuss methodology topics.

In the series learning from the past to understand the future, we have almost reached the current state of PLM before digitization became visible. In the last post, I introduced the value of having the MBOM preparation inside a PLM-system, so manufacturing engineering can benefit from early visibility and richer product context when preparing the manufacturing process.

Does everyone need an MBOM?

It is essential to realize that you do not need an EBOM and a separate MBOM in case of an Engineering To Order primary process. The target of ETO is to deliver a unique customer product with no time to lose. Therefore, engineering can design with a manufacturing process in mind.

The need for an MBOM comes when:

- You are selling a specific product over a more extended period of time. The engineering definition, in that case, needs to be as little as possible dependent on supplier-specific parts.

- You are delivering your portfolio based on modules. Modules need to be stable for as long as possible, therefore independent of where they are manufactured and of supplier-specific parts. The better you can define your modules, the more customers you can reach over time.

- You have multiple manufacturing locations around the world, allowing you to source locally and manufacture based on local plant-specific resources. I described these options in the previous post.

The challenge for all companies that want to move from ETO to BTO/CTO is the fact that they need to change their methodology – building for the future while supporting the past. This is typically something to be analyzed per company on how to deal with the existing legacy and installed base.

Configurable EBOM and MBOM

In some previous posts, I mentioned that it is efficient to have a configurable EBOM. This means that various options and variants are managed in the same EBOM-structure that can be filtered based on configuration parameters (date effectivity/version identifier/time baseline). A configurable EBOM is often called a 150 % EBOM.

The MBOM can also be configurable, as a manufacturing plant might have almost common manufacturing steps for different product variants. By using the same process and a filtered MBOM, you will manufacture the specific product version. In that case, we can talk about a 120 % MBOM.

Note: the freedom of configuration in the EBOM is generally higher than the options in the configurable MBOM.
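As an illustration of what "filtering" a configurable BOM means, here is a minimal Python sketch. It assumes a very simple data model that is not taken from any PLM-system: each BOM line carries hypothetical option codes and a date effectivity range, and `resolve_bom` reduces the overloaded (150 %) structure to one specific (100 %) BOM. The part names, option codes, and dates are invented for the example.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class BomLine:
    part: str
    quantity: int = 1
    # Hypothetical option codes; an empty set means the line applies to all variants.
    options: frozenset = frozenset()
    # Date effectivity; the defaults mean "always valid".
    effective_from: date = date.min
    effective_to: date = date.max


def resolve_bom(lines, selected_options, on_date):
    """Filter an overloaded (150 %) BOM down to one specific (100 %) BOM."""
    resolved = []
    for line in lines:
        in_effect = line.effective_from <= on_date <= line.effective_to
        matches_variant = not line.options or bool(line.options & selected_options)
        if in_effect and matches_variant:
            resolved.append(line)
    return resolved


# Illustrative 150 % EBOM: two regional motor variants and a controller
# that is replaced by a new version on 1 July 2020.
ebom_150 = [
    BomLine("frame"),
    BomLine("motor-230V", options=frozenset({"EU"})),
    BomLine("motor-110V", options=frozenset({"US"})),
    BomLine("controller-v1", effective_to=date(2020, 6, 30)),
    BomLine("controller-v2", effective_from=date(2020, 7, 1)),
]

eu_bom = resolve_bom(ebom_150, {"EU"}, date(2020, 9, 1))
print([line.part for line in eu_bom])  # ['frame', 'motor-230V', 'controller-v2']
```

The same function could filter a 120 % MBOM; the difference would be in the data, where fewer lines carry option codes because the freedom of configuration is lower.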

The real business change for EBOM/MBOM

So far, we have discussed the EBOM/MBOM methodology. It is essential to realize this methodology only brings value when the organization will be adapted to benefit from the new possibilities.

One of the recurring errors in PLM implementations is that users of the system get an extended job scope, without giving them the extra time to perform these activities. Meanwhile, other persons downstream might benefit from these activities. However, they will not complain. I realized that already in 2009, I mentioned such a case: Where is my PLM ROI, Mr. Voskuil?

Now let us look at the recommended business changes when implementing an EBOM/MBOM-strategy:

- Working in a single, shared environment for engineering and manufacturing preparation is the first step to take.

Working in a PLM-system is not a problem for engineers who are used to the complexity of a PDM-system. For manufacturing engineers, a PLM-environment will be completely new. Manufacturing engineers might prepare their bill of process first in Excel and ultimately enter the complete details in their ERP-system. ERP-systems are not known for their user-friendliness. However, their interfaces are often so rigid that it is not difficult to master the process. Excel, on the other side, is extremely flexible but not connected to anything else.

And now, this new PLM-system requires people to work in a more user-friendly environment with limited freedom. This is a significant shift in working methodology. This means manufacturing engineers need to be trained and supported over several months. Changing habits and keeping people motivated takes energy and time. In reality, where is the budget for these activities? See my 2016 post: PLM and Cultural Change Management – too expensive?

- From sequential to concurrent

Once your manufacturing engineers are able to work in a PLM-environment, they are able to start the manufacturing definition before the engineering definition is released. Manufacturing engineers can participate in design reviews having the information in their environment available. They can validate critical manufacturing steps and discuss with engineers potential changes that will reduce the complexity or cost for manufacturing. As these changes will be done before the product is released, the cost of change is much lower. After all, having engineering and manufacturing working partially in parallel will reduce time to market.

One of the leading business drivers for many companies is introducing products or enhancements to the market. Bringing engineering and manufacturing preparation together also means that the PLM-system can no longer be an engineering tool under the responsibility of the engineering department.

The responsibility for PLM needs to be at a level higher in the organization to ensure well-balanced choices. A higher level in the organization automatically means more attention for business benefits and less attention for functions and features.

From technology to methodology – interface issues?

The whole EBOM/MBOM-discussion often has become a discussion related to a PLM-system and an ERP-system. Next, the discussion diverted to how these two systems could work together, changing the mindset to the complexity of interfaces instead of focusing on the logical flow of information.

In an earlier PI Event in München in 2016, I led a focus group related to the PLM and ERP interaction. The discussion was not about technology; it was all about the logical flow of information, from initial creation towards formal usage in a product definition (EBOM/MBOM).

What became clear from this workshop and other customer engagements is that people are often locked in their siloed way of thinking. Proposed information flows are based on system capabilities, not on the ideal flow of information. This is often the reason why a PLM/ERP-interface becomes complicated and expensive. System integrators do not want to push for organizational change, they prefer to develop an interface that adheres to the current customer expectations.

SAP has always been promoting that they do not need an interface between engineering and manufacturing as their data management starts from the EBOM. They forgot to mention that they have a difficult time (and almost no intention) to manage the early ideation and design phase. As a Dutch SAP country manager once told me: “Engineers are resources that do not want to be managed.” This remark says all about the mindset of ERP.

After looking back at successful PLM-implementations, I can tell the PLM-ERP interface has never been a technical issue once the methodology is transparent. The foundation is that a company agrees on the logical data flow from ideation through engineering towards design.

It is not about owning data and where to store it in a single system. It is about federated data sets that exist in different systems and that are complementary but connected, requiring data governance and master data management.
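A federated approach can be sketched in a few lines of Python. The sketch below is only an illustration under stated assumptions: the two dictionaries stand in for a PLM-system and an ERP-system, the item numbers and attributes are invented, and the shared item number plays the role of the governed master data key. Each system keeps its own complementary attributes; the connected view is composed at read time, and a simple governance check reports where the two data sets are not connected.

```python
# Hypothetical stand-ins for two systems, each owning its own attributes.
plm_items = {
    "100-001": {"description": "Pump housing", "revision": "C", "lifecycle": "Released"},
    "100-002": {"description": "Impeller", "revision": "A", "lifecycle": "In Work"},
}
erp_items = {
    "100-001": {"standard_cost": 125.0, "plant": "NL01"},
    "100-003": {"standard_cost": 8.5, "plant": "NL01"},
}


def federated_view(item_number):
    """Combine both records at read time; neither system copies the other's data."""
    view = {"item_number": item_number}
    view.update(plm_items.get(item_number, {}))
    view.update(erp_items.get(item_number, {}))
    return view


def governance_gaps():
    """Report items where the two data sets are not (yet) connected."""
    released = {
        number for number, item in plm_items.items() if item["lifecycle"] == "Released"
    }
    return {
        "released_but_missing_in_erp": sorted(released - set(erp_items)),
        "in_erp_but_unknown_to_plm": sorted(set(erp_items) - set(plm_items)),
    }


print(federated_view("100-001"))
print(governance_gaps())
```

In this toy data set, item 100-003 exists in ERP without a counterpart in PLM. Finding and resolving such gaps is exactly the kind of work that data governance and master data management need to cover, regardless of the interface technology.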

The SAP-Siemens partnership

In the context of the previous paragraph, the messaging around the recently announced partnership between SAP and Siemens made me curious. Almost everyone has shared an opinion about the partnership. There is a lot of speculation, and many questions were imaginarily answered by as many blog posts in the field. Last week Stan Przybylinski shared CIMdata’s interpretations in a webinar Putting the SAP-Siemens Partnership In Context, which was, in my opinion, the most in-depth analysis I have seen.

For what it is worth, my analysis:

- First of all, the partnership is a merger of slide decks at this moment, aiming to show to a potential customer that in the SAP/Siemens-combination, you find everything you need. A merger of slides does not mean everything works together.

- It is a merger of two different worlds. You can call SAP a real data platform with connected data, whereas Siemens’ offering is based on the Teamcenter backbone, providing a foundation for a coordinated approach. In the coordinated approach, the data flexibility is lower. For that reason, Mendix is crucial to make Siemens’ portfolio behave like a connected platform too.

You can read my doubts about having a coordinated and connected system working together (see image above). It was my #1 identified challenge for this decade: PLM 2020 – PLM the next decade (before COVID-19 became a pandemic and illustrated we need to work connected).

- The fact that SAP will sell TC PLM and Siemens will sell SAP PPM seems like a loser’s statement, meaning our SAP PLM is probably not good enough, or our TC PPM capabilities are not good enough. In reality, I believe they both should remain, and the partnership should work on logical data flows with data residing in two locations – the federated approach. This is how platforms reside next to each other instead of the single black hole.

- The fact that standard interfaces will be developed between the two systems is a subtle sales argument with relatively low value. As I wrote in the “from technology to methodology”-paragraph, the challenges are in the organizational change within companies. Technology is not the issue, although system integrators also need to make a living.

- What I believe makes sense is that both SAP and Siemens have to realize their Industry 4.0 end-to-end capabilities. It has been a German vision for several years now, and it is an excellent vision to strive for. Now it is time to build the two platforms working together. This will be a significant technical challenge, mainly for Siemens, as its foundation is based on a coordinated backbone.

- The biggest challenge, not only for this partnership, is the organizational change within companies that want to build an end-to-end connected solution. In particular, in companies with a vast legacy, the targeted industries by the partnership, the chasm between coordinated legacy data and intended connected data is enormous. Technology will not fix it; perhaps it will ease the pain a little.

Conclusion

With this post, we have reached the foundation of the item-centric approach for PLM, where the EBOM and MBOM are managed in a real-time context. Organizational change is the biggest inhibitor to move forward. The SAP-Siemens partnership is a sales/marketing approach to create a simplified view for the future at C-level discussions.

Let us watch carefully what happens in reality.

Next time, potentially, the dimension of change management and configuration management in an item-centric approach.

Or perhaps Martijn Dullaart will show us the way before, following up on his tricky poll question.

This time a short post (for me) as I am in the middle of the series “Learning from the past to understand the future” and currently collecting information for next week’s post. However, recently Rob Ferrone, the original Digital Plumber, pointed me to an interesting post from Scott Taylor, the Data Whisperer.

In code: The Virtual Dutchman discovered the Data Whisperer thanks to the original Digital Plumber.

Scott’s article with the title: “Data Management Hasn’t Failed, but Data Management Storytelling Has” matches precisely the discussion we have in the PLM community.

Please read his article, and just replace the words Data Management by PLM, and it could have been written for our community. In a way, PLM is a specific application of data management, so not a real surprise.

Scott’s conclusions give food for thought in the PLM community:

To win over business stakeholders, Data Management leadership must craft a compelling narrative that builds urgency, reinvigorates enthusiasm, and evangelizes WHY their programs enable the strategic intentions of their enterprise. If the business leaders whose support and engagement you seek do not understand and accept the WHY, they will not care about the HOW. When communicating to executive leadership, skip the technical details, the feature functionality, and the reference architecture and focus on:

- Establishing an accessible vocabulary

- Harmonizing to a common voice

- Illuminating the business vision

When you tell your Data Management story with that perspective, it can end happily ever after.

It all resonates well with what I described in the PLM ROI Myth – it is clear that when people hear the word Myth, they have a bad connotation, same btw for PLM.

The fact that we still need to learn storytelling is because most of us are so much focused on technology and sometimes on discovering the new name for PLM in the future.

Last week I pointed to a survey from the PLMIG (PLM Interest Group) and XLifecycle, inviting you to help define the future definition of PLM.

You are still welcome here: Towards a digital future: the evolving role of PLM in the future digital world.

Also, I saw a great interview with Martin Eigner on Minerva PLM TV, interviewed by Jennifer Moore. Martin is well known in the PLM world and has done foundational work for our community. According to Jennifer, he is considered The Godfather of PLM. This title fits nicely in today’s post. Those who have seen his presentations in recent years will remember Martin is talking about SysLM (System Lifecycle Management) as the future for PLM.

It is an interesting recording to watch – click on the image above to see it. Martin explains nicely why we often do not get the positive feedback from PLM implementations – starting at minute 13 for those who cannot wait.

In the interview, you will discover we often talk too much about our discipline capabilities where the real discussion should be talking business. Strategy and objectives are discussed and decided at the management level of a company. By using storytelling, we can connect to these business objectives.

The end result is that a company will more likely understand why to invest significantly in PLM, as PLM is now part of its competitiveness and future continuity.

Conclusion

I shared links to two interesting posts from the last weeks. Studying them will help you to create a broader view. We have to learn to tell the right story. People do not want PLM – they have personal objectives. Companies have business objectives, and they might lead to the need for a new and changing PLM. Connecting to the management in an organization, therefore, is crucial.

Next week, again more about learning from the past to understand the future.

Life goes on, and I hope you are all staying safe while thinking about the future. Interesting in the context of the future, there was a recent post from Lionel Grealou with the title: Towards PLM 4.0: Hyperconnected Asset Performance Management Framework.

Lionel gave a kind of evolutionary path for PLM: the path from PLM 1.0 (PDM) ending in a PLM 4.0 definition. Read the article or click on the image to see an enlarged version to understand the logical order. It is interesting to note that PLM 4.0 is the end target; for sure, there is a wishful mind-mapping with Industry 4.0.

When seeing this diagram, it reminded me of Marc Halpern’s diagram that he presented during the PDT 2015 conference. Without much fantasy, you can map your company to one of the given stages and understand what the logical next step would be. To map Lionel’s model with Marc’s model, I would state PLM 4.0 aligns with Marc’s column Collaborating.

In the discussion related to Lionel’s post, I stated two points. First, an observation that most of the companies that I know remain in PLM 1.0 or 2.0, or in Marc’s diagram, they are still trying to reach the level of Integrating.

Why is it so difficult to move to the next stage?

Oleg Shilovitsky, in a reaction to Lionel’s post, confirmed this. In Why did manufacturing stuck in PLM 1.0 and PLM 2.0? Oleg points to several integration challenges, functional and technical. His take is that new technologies might be the answer to move to PLM 3.0, as you can read from his conclusion.

What is my conclusion?

There are many promising technologies, but integration is remaining the biggest problem for manufacturing companies in adopting PLM 3.0. The companies are struggling to expand upstream and downstream. Existing vendors are careful about the changes. At the same time, very few alternatives can be seen around. Cloud structure, new data management, and cloud infrastructure can simplify many integration challenges and unlock PLM 3.0 for future business upstream and especially downstream. Just my thoughts…

Completely disconnected from Lionel’s post, Angad Sorte from Plural Nordic AS wrote a LinkedIn post: Why PLM does not get attention from your CEO. Click on the image to see an enlarged version, that also neatly aligns with Industry 4.0. Coincidence, or do great minds think alike? Phil Collins would sing: It is in the air tonight

Angad’s post is about the historical framing of PLM as a system, an engineering tool versus a business strategy. Angad believes once you have a clear definition, it will be easier to explain the next steps for the business. The challenge here is: Do we need, or do we have, a clear definition of PLM? It is a topic that I do not want to discuss anymore due to the variety of opinions and interpretations. An exact definition will never lead to a CEO stating, “Now I know why we need PLM.”

I believe there are enough business proof points WHY companies require a PLM-infrastructure as part of a profitable business. Depending on the organization, it might be just a collection of tools, and people do the work. Perhaps this is the practice in small enterprises?

In larger enterprises, the go-to-market strategy, the information needs, and related processes will drive the justification for PLM. But always in the context of a business transformation. Strategic consultancy firms are excellent in providing strategic roadmaps for their customers, indicating the need for a PLM-infrastructure as part of that.

Most of the time, they do not dive deeper, because implementation requires other resources.

What needs to be done in PLM 1.0 to 4.0 per level/stage is well described in all the diagrams on a high-level. The WHAT-domain is the domain of the PLM-vendors and implementers. They know what their tools and skillsets can do, and they will help the customer to implement such an environment.

The big illusion of all the evolutionary diagrams is that they give a false impression of evolution. Moving to the next level is not just switching on new or more technology and involving more people.

So the big question is HOW and WHEN to make progress.

HOW to make progress

In the past four years, I have learned that digital transformation in the domain of PLM is NOT an evolution. It is disruptive as the whole foundation for PLM changes. If you zoom in on the picture on the left, you will see the data model on the left, and the data model on the right is entirely different.

On the left side of the chasm, we have a coordinated environment based on data-structures (items, folders, tasks) to link documents.

On the right side of the chasm, we have a connected environment based on federated data elements and models (3D, Logical, and Simulation models).

I have been discussing this topic in the past two years at various PLM conferences and a year ago in my blog: The Challenges of a connected ecosystem for PLM

If you are interested in learning more about this topic, register for the upcoming virtual PLM Innovation Forum organized by TECHNIA. Registration is for free, and you will be able to watch the presentation, either live or recorded for 30 days.

At this moment, the detailed agenda has not been published, and I will update the link once the session is visible. My presentation will not only focus on HOW a digital transformation, including PLM, can be executed, but also explain why NOW is the moment.

NOW to make progress

When the COVID19-related lockdown started, most of us thought that after the lockdown, we would be back in business as soon as possible. Now that we understand the impact of the virus on our society, it is clear that we need to re-invent ourselves for a sustainable future and be more resilient.

It is now time to act and think differently, as due to the lockdown, most of us have time to think. Are you and your company looking forward to creating a better future? Or will you and your company run the same non-sustainable rat race of the past and be caught by the next crisis?

McKinsey has been publishing several articles related to the impact of COVID19, and I found the article Beyond coronavirus: The path to the next normal very insightful.

As McKinsey never talks about PLM, I want to guide you to think about a more sustainable business.

Use a modern PLM-infrastructure, practices, and tools to remain competitive, meanwhile creating new or additional business models. Realizing concepts such as digital twins and AR/VR-based business models requires an internal transition in your company, the jump from coordinated to connected. Therefore, start investigating and experimenting with these new ways of working, and learn fast. This is why we created the PLM Green Alliance as a platform to share and discuss.

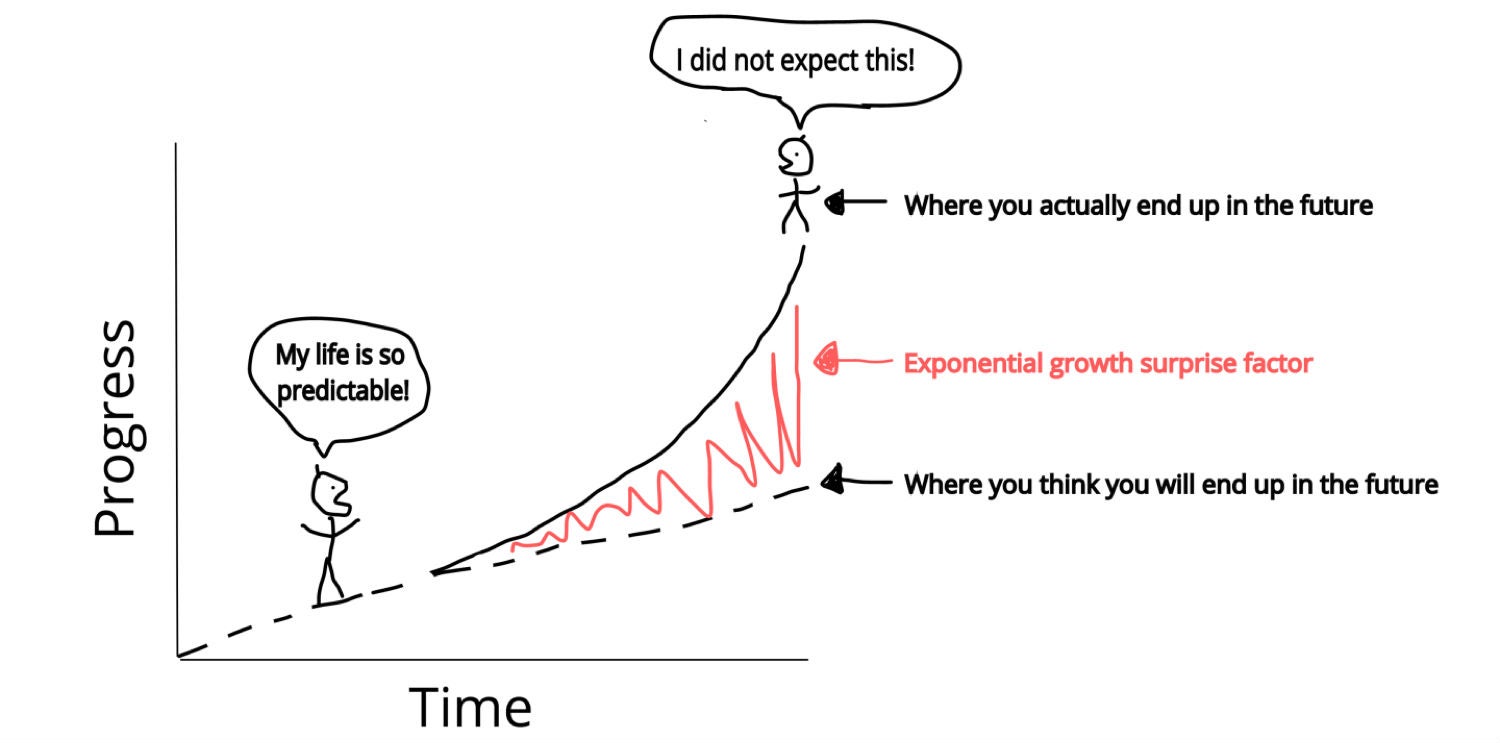

If you believe there is no need to be fast, I recommend you watch Rebecka Carlsson’s presentation at the PLMIF event. The title of her presentation: Exponential Tech in Sustainability. Rebecka will share insights for business development about how companies can upgrade to new business models based on the new opportunities that come with sustainability and exponential tech.

I recommend her presentation because she addresses the aspect of exponential thinking nicely. Rebecka states we are “programmed” to think local-linear as mankind. Exponential thinking goes beyond our experience. It is something we were not used to doing until, with the COVID19-virus, we discovered the exponential growth of the number of infections.

Finally, and this I read this morning, Jan Bosch wrote an interesting post: Why Agile Matters, talking about the fact that during the design and delivery of the product to the market, the environment and therefore the requirements might change. Read his post, unless as Jan states:

Concluding, if you’re able to perfectly predict the optimal set of requirements for a system or product years ahead of the start of production or deployment and if you’re able to accurately predict the effect of each requirement on the user, the customer and the quality attributes of the system, then you don’t need Agile.

What I like about Jan’s post is the fact that we should anticipate changing requirements. This statement, combined with Rebecka’s call for being ready for exponential change and an emerging need for sustainability, might help you discuss in your company what a modern New Product Introduction process might look like, including requirements for a sustainable future that might come in later (per current situation) or can become a practice for the future.

Conclusion

Now is the disruptive moment to break with the old ways of working. Develop plans for the new Beyond-COVID19-society. Force yourselves to work in more sustainable modes (digital/virtual), develop sustainable products or services (a circular economy), and keep on learning. Perhaps we will meet virtually during the upcoming PLM Innovation Forum?

Note: You have reached the end of this post, which means you took the time to read it all. Now if you LIKE or DISLIKE the content, share it in a comment. Digital communication is the future. Just chasing for Likes is a skin-deep society. We need arguments.

Looking forward to your feedback.

Meanwhile, two weeks of a partial lockdown have passed here in the Netherlands, and we have at least another 3 weeks to go according to the Dutch government. The good thing in our country is that decisions and measures are based on the advice of experts, as we cannot rely on politicians as experts.

I realize that despite the discomfort for me, for many other people in other countries, it is a tragedy. My mental support to all of you, wherever you are.

So what has happened since Time to Think (and act differently)?

All Hands On Deck

In the past two weeks, it has become clear that a global pandemic such as this one requires an “All Hands On Deck” mentality to support the need for medical supplies and in particular respiration devices, so-called ventilators. These devices are needed to save the lives of profoundly affected people. I have great respect for the “hands” that are doing the work in infectious environments.

Due to time pressure, innovative thinking is required to reach quick results in many countries. Companies and governmental organizations have created consortia to address the urgent need for ventilators. You will not see so much PR from these consortia as they are too busy doing the real work.

Still, many of the commercial participants share marketing messages about why and how they contribute to these activities.

One of the most promoted capabilities is PLM collaboration on the cloud, as there is a need for real-time collaboration between people that are under lockdown. They have no time for setting up environments and learning new tools to use for collaboration.

For me, these are grand experiments: can a group of almost untrained people cooperate fast in a new environment?

For sure, offering free cloud software, PLM, online CAD or 3D Printing, seems like a positive and compassionate gesture from these vendors. However, this is precisely the wrong perception in our PLM-world – the difficulty with PLM does not necessarily lie in the tools.

It is about learning to collaborate outside your silo.

Instead of “wait till I am done” it should become “this is what I have so far – use it for your progress”. This is a behavior change.

Do we have time for behavioral changes at this moment? Time will tell if the myth will become a reality so fast.

A lot of thinking

The past two weeks were weeks of thinking and talking a lot with PLM-interested persons around the globe using virtual meetings.

As long as the lockdowns last, I will keep offering free-of-charge PLM coaching for individuals who want to understand the future of PLM.

Through all these calls, I really became THE VirtualDutchman in many of these meetings (thanks Jagan for the awareness).

I realized that there is a lot of value in virtual meetings, in particular with the video option on. I believe, though, that video works best when you have met before, as most of my current meetings were with people I had already met face-to-face. Hence, you already know each other’s facial expressions.

I am a big fan of face-to-face meetings as I learned in the past 20 years that despite all the technology and methodology issues, the human factor is essential. We are not rational people; we live and decide by emotions.

Still, I conclude that in the future, I could do with less travel, as I see the benefits from current virtual meetings.

Fewer face-to-face meetings will help me to work on a more sustainable future, as I am aware of the impact flying has on the environment. Also, talking with other people, there is the notion that after the lockdowns, virtual conferencing might become more and more a best practice. Good for the climate, the environment, and time savings – bad for traditional industries like airlines, taxis, and hotels. I will not say goodbye to travel 100 %, but I will reduce it.

A Virtual PLM conference!