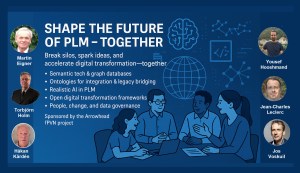

Together with Håkan Kårdén, we had the pleasure of bringing together 32 passionate professionals on November 4th to explore the future of PLM (Product Lifecycle Management) and ALM (Asset Lifecycle Management), inspired by insights from four leading thinkers in the field. Please click on the image for more details.

The meeting had two primary purposes.

- Firstly, we aimed to create an environment where these concepts could be discussed and presented to a broader audience, comprising academics, industrial professionals, and software developers. The group’s feedback could serve as a benchmark for the speakers.

- The second goal was to bring people together and create a networking opportunity, either during the PLM Roadmap/PDT Europe conference, the day after, or through meetings established after this workshop.

Personally, it was a great pleasure to meet some people in person whose LinkedIn articles I had admired and read.

The meeting was sponsored by the Arrowhead fPVN project, a project I discussed in a previous blog post related to the PLM Roadmap/PDT Europe 2024 conference last year. Together with the speakers, we have begun working on a more in-depth paper that describes the similarities and the lessons learned that are relevant. This activity will take some time.

Therefore, this post only includes the abstracts from the speakers and links to their presentations. It concludes with a few observations from some attendees.

Reasoning Machines: Semantic Integration in Cyber-Physical Environments

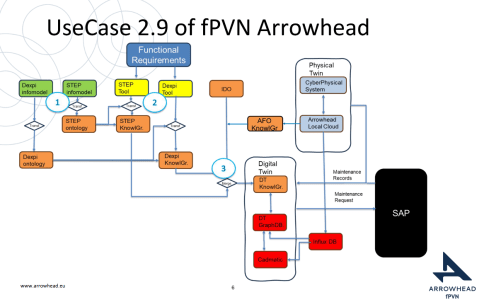

Torbjörn Holm / Jan van Deventer: The presentation discussed the transition from requirements to handover and operations, emphasizing the role of knowledge graphs in unifying standards and technologies for a flexible product value network.

The presentation outlines the phases of the product and production lifecycle, including requirements, specification, design, build-up, handover, and operations. It raises a question about unifying these phases and their associated technologies and standards, emphasizing that the most extended phase, which involves operation, maintenance, failure, and evolution until retirement, should be the primary focus.

It also discusses seamless integration, outlining a partial list of standards and technologies categorized into three sections: “Modelling & Representation Standards,” “Communication & Integration Protocols,” and “Architectural & Security Standards.” Each section contains a table listing various technology standards, their purposes, and references. Additionally, the presentation includes a “Conceptual Layer Mapping” table that details the different layers (Knowledge, Service, Communication, Security, and Data), along with examples, functions, and references.

The presentation outlines an approach for utilizing semantic technologies to ensure interoperability across heterogeneous datasets throughout a product’s lifecycle. Key strategies include using OWL 2 DL for semantic consistency, aligning domain-specific knowledge graphs with ISO 23726-3, applying W3C Alignment techniques, and leveraging Arrowhead’s microservice-based architecture and Framework Ontology for scalable and interoperable system integration.
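To make the idea of aligned knowledge graphs a bit more tangible, here is a minimal sketch in plain Python. It is not the presenters' implementation and it is not an OWL 2 DL reasoner. The prefixes `kgA:` and `kgB:`, the property names and the alignment table are all made up for illustration; they only show how an alignment between two vocabularies lets you query facts from both sources as if they described one object.

```python
# Two tiny, hypothetical knowledge graphs as (subject, predicate, object) triples.
# The identifiers and properties are invented; they are not real DEXPI or STEP terms.
KG_A = {
    ("kgA:Pump_P101", "rdf:type", "kgA:CentrifugalPump"),
    ("kgA:Pump_P101", "kgA:hasNominalFlow", "120 m3/h"),
}
KG_B = {
    ("kgB:Item_4711", "rdf:type", "kgB:Product"),
    ("kgB:Item_4711", "kgB:hasSupplier", "ACME"),
}

# Hypothetical alignment: identifiers declared to denote the same real-world thing
# (similar in spirit to an owl:sameAs statement).
ALIGNMENT = {"kgB:Item_4711": "kgA:Pump_P101"}


def canonical(term, alignment):
    """Follow alignment links until a term without a further mapping is reached."""
    seen = set()
    while term in alignment and term not in seen:
        seen.add(term)
        term = alignment[term]
    return term


def merge(graphs, alignment):
    """Merge graphs, rewriting aligned subjects and objects to their canonical term."""
    merged = set()
    for graph in graphs:
        for s, p, o in graph:
            merged.add((canonical(s, alignment), p, canonical(o, alignment)))
    return merged


def describe(graph, subject):
    """Return all (predicate, object) pairs known about a subject, sorted."""
    return sorted((p, o) for s, p, o in graph if s == subject)


merged = merge([KG_A, KG_B], ALIGNMENT)
facts = describe(merged, "kgA:Pump_P101")
for predicate, value in facts:
    print(predicate, value)
```

After the merge, a question about the pump returns both the engineering data from the first graph and the supplier from the second, without either source having to adopt the other's identifiers.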

The software architecture presented comprises three main sections, “Functional Requirements,” “Physical Twin,” and “Digital Twin,” each containing various interconnected components. Today, the architecture includes several aligned Knowledge Graphs (KG): a DEXPI KG, a STEP (ISO 10303) KG, and an Arrowhead Framework KG, with the CFIHOS Semantics Ontology in progress.

👉The presentation: W3C Major standard interoperability_Paris

Beyond Handover: Building Lifecycle-Ready Semantic Interoperability

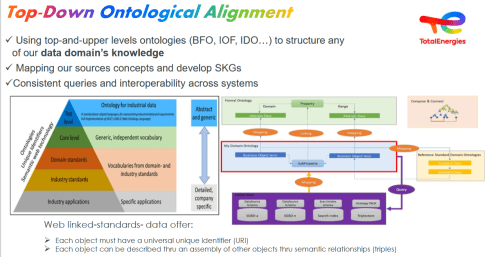

Jean-Charles Leclerc argued that industrial data standards must evolve beyond the narrow scope of handover and static interoperability. To truly support digital transformation, they must embrace lifecycle semantics or, at the very least, be designed for future extensibility.

This shift enables technical objects and models to be reused, orchestrated, and enriched across internal and external processes, unlocking value for all stakeholders and managing the temporal evolution of properties throughout the lifecycle. A key enabler is the “pattern of change”, a dynamic framework that connects data, knowledge, and processes over time. It allows semantic models to reflect how things evolve, not just how they are delivered.

By grounding semantic knowledge graphs (SKGs) in such rigorous logic and aligning them with W3C standards, we ensure they are both robust and adaptable. This approach supports sustainable knowledge management across domains and disciplines, bridging engineering, operations, and applications.

Ultimately, it’s not just about technology; it’s about governance.

Being Sustainab’OWL (Web Ontology Language) by Design! means building semantic ecosystems that are reliable, scalable, and lifecycle-ready by nature.

Additional Insight: From Static Models to Living Knowledge

To transition from static information to living knowledge, organizations must reassess how they model and manage technical data. Lifecycle-ready interoperability means enabling continuous alignment between evolving assets, processes, and systems. This requires not only semantic precision but also a governance framework that supports change, traceability, and reuse, turning standards into operational levers rather than compliance checkboxes.

👉The presentation: Beyond Handover – Building Lifecycle Ready Semantic Interoperability

The first two presentations had a lot in common as they both come from the Asset Lifecycle Management domain and focus on an infrastructure to support assets over a long lifetime. This is particularly visible in the usage and references to standards such as DEXPI, STEP, and CFIHOS, which are typical for this domain.

How can we achieve our vision of PLM – the Single Source of Truth?

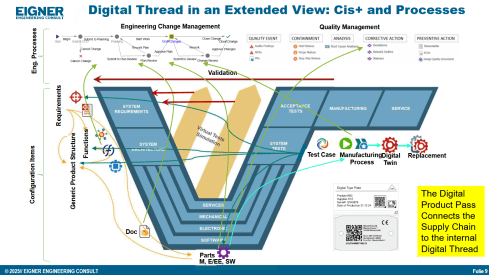

Martin Eigner stated that Product Lifecycle Management (PLM) has long promised to serve as the Single Source of Truth for organizations striving to manage product data, processes, and knowledge across their entire value chain. Yet, realizing this vision remains a complex challenge.

Achieving a unified PLM environment requires more than just implementing advanced software systems—it demands cultural alignment, organizational commitment, and seamless integration of diverse technologies. Central to this vision is data consistency: ensuring that stakeholders across engineering, manufacturing, supply chain, and service have access to accurate, up-to-date, and contextualized information along the Product Lifecycle. This involves breaking down silos, harmonizing data models, and establishing governance frameworks that enforce standards without limiting flexibility.

Emerging technologies and methodologies, such as Extended Digital Thread, Digital Twins, cloud-based platforms, and Artificial Intelligence, offer new opportunities to enhance collaboration and integrated data management.

However, their success depends on strong change management and a shared understanding of PLM as a strategic enabler rather than a purely technical solution. By fostering cross-functional collaboration, investing in interoperability, and adopting scalable architectures, organizations can move closer to a trustworthy single source of truth. Ultimately, realizing the vision of PLM requires striking a balance between innovation and discipline—ensuring trust in data while empowering agility in product development and lifecycle management.

👉The presentation: Martin – Workshop PLM Future 04_10_25

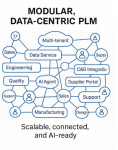

The Future is Data-Centric, Semantic, and Federated … Is your organization ready?

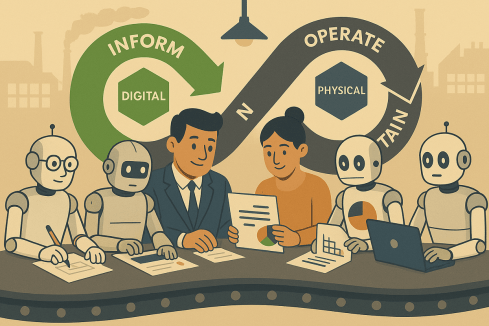

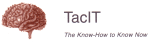

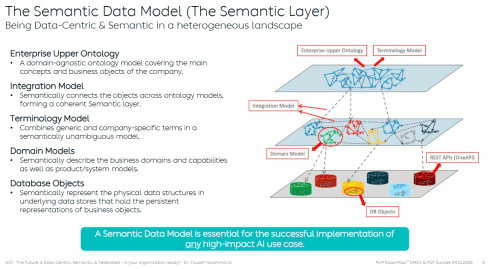

Yousef Hooshmand, who is currently working at NIO as PLM & R&D Toolchain Lead Architect, discussed the must-have relations between a data-centric approach, semantic models and a federated environment as the image below illustrates:

Why This Matters for the Future?

- Engineering is under unprecedented pressure: products are becoming increasingly complex, customers are demanding personalization, and development cycles must be accelerated to meet these demands. Traditional, siloed methods can no longer keep up.

- The way forward is a data-centric, semantic, and federated approach that transforms overwhelming complexity into actionable insights, reduces weeks of impact analysis to minutes, and connects fragmented silos to create a resilient ecosystem.

- This is not just an evolution, but a fundamental shift that will define the future of systems engineering. Is your organization ready to embrace it?
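The claim about impact analysis is easier to appreciate with a small sketch. The Python below is only an illustration under my own assumptions: the item identifiers and the `AFFECTS` links are hypothetical, and a real federated environment would resolve these links across several systems. It shows why connected data turns impact analysis into a graph traversal instead of a manual search through documents.

```python
from collections import deque

# Hypothetical "affects" links between lifecycle items (requirement, parts, BOM, test).
AFFECTS = {
    "REQ-12": ["PART-A"],
    "PART-A": ["ASSY-1", "TEST-7"],
    "PART-B": ["ASSY-1"],
    "ASSY-1": ["BOM-2025"],
    "TEST-7": [],
    "BOM-2025": [],
}


def impacted_by(start, links):
    """Breadth-first traversal: everything reachable downstream of a changed item."""
    seen = set()
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for nxt in links.get(current, []):
            if nxt != start and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return sorted(seen)


impacted = impacted_by("REQ-12", AFFECTS)
print(impacted)
```

Changing requirement `REQ-12` in this toy data touches the part, its assembly, the BOM and a test, while `PART-B` is left alone. The hard part in practice is not the traversal but having trustworthy links in the first place.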

👉The presentation: The Future is Data-Centric, Semantic, and Federated.

Some first impressions

👉 Bhanu Prakash Ila from Tata Consultancy Services – you can find his original comment in this LinkedIn post

Key points:

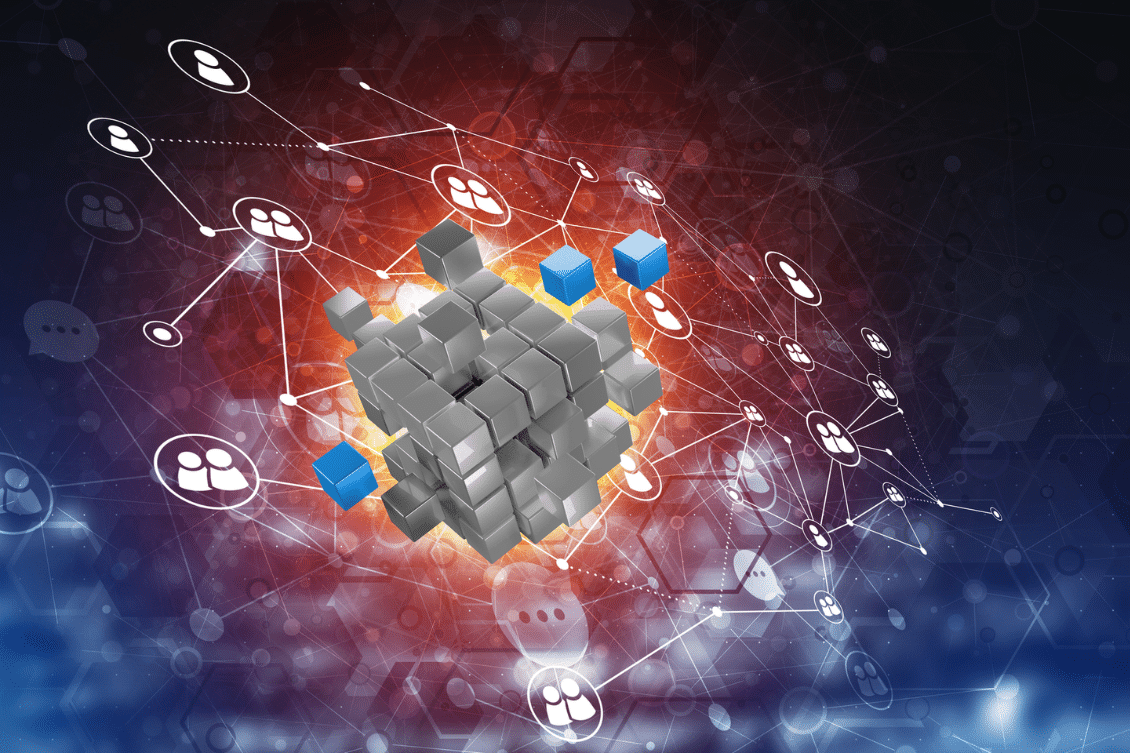

- Traditional PLM architectures struggle with the fundamental challenge of managing increasingly complex relationships between product data, process information, and enterprise systems.

- Ontology-Based Semantic Models – The Way Forward for PLM Digital Thread Integration: Ontology-based semantic models address this by providing explicit, machine-interpretable representations of domain knowledge that capture both concepts and their relationships. These lay the foundations for AI-related capabilities.
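As a small illustration of what “explicit, machine-interpretable” means, the Python sketch below encodes a few concepts and one relationship type and lets the machine draw a conclusion nobody typed in. The class names and instances are hypothetical, and a real ontology would be expressed in a language such as OWL and processed by a reasoner; this only mimics the simplest form of subclass inference.

```python
# Hypothetical concept hierarchy: each class points to its direct parent class.
SUBCLASS_OF = {
    "CentrifugalPump": "Pump",
    "Pump": "Equipment",
    "Valve": "Equipment",
}

# Hypothetical instances with their most specific class.
INSTANCE_OF = {"P-101": "CentrifugalPump", "V-17": "Valve"}


def all_classes(item):
    """Return the item's class followed by all inherited parent classes."""
    classes = []
    cls = INSTANCE_OF.get(item)
    while cls is not None and cls not in classes:
        classes.append(cls)
        cls = SUBCLASS_OF.get(cls)
    return classes


def instances_of(target):
    """Find every instance that is a member of the target class, directly or by inheritance."""
    return sorted(item for item in INSTANCE_OF if target in all_classes(item))


print(all_classes("P-101"))
print(instances_of("Equipment"))
```

Nobody stated that `P-101` is a piece of equipment; that follows from the model. This kind of derived knowledge is what later AI-related capabilities can build on.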

It’s clear that as AI, semantic technologies, and data intelligence mature, the way we think and talk about PLM must evolve too – from system-centric to value-driven, from managing data to enabling knowledge and decisions.

A quick & temporary conclusion

Typically, I conclude my blog posts with a summary. However, this time the conclusion is not there yet. There is work to be done to align concepts and understand for which industry they are most applicable. Using standards or avoiding standards as they move too slowly for the business is a point of ongoing discussion. The takeaway for everyone in the workshop was that data without context has no value. Ontologies, semantic models and domain-specific methodologies are mandatory for modern data-driven enterprises. You cannot avoid this learning path by just installing a graph database.

First, an important announcement. In the last two weeks, I have finalized preparations for the upcoming Share PLM Summit in Jerez on 27-28 May. With the Share PLM team, we have been working on a non-typical PLM agenda. Share PLM, like me, focuses on organizational change management and the HOW of PLM implementations; there will be more emphasis on the people side.

Often, PLM implementations are either IT-driven or business-driven to address a need, and, yes, the people who need to work with the result come last. Time and budget are spent on technology and process definitions, and then people get trained. Often this is limited to train-the-trainer, as there is no budget or time left to let the organization adapt, and rapid ROI is expected.

This approach neglects that PLM implementations are enablers for business transformation. Instead of doing things slightly more efficiently, significant gains can be made by doing things differently, starting with the people and their optimal new way of working, and then providing the best tools.

The conference aims to start with the people, sharing human-related experiences and enabling networking between people – not only about the industry practices (there will be sessions and discussions on this topic too).

If you are curious about the details, listen to the podcast recording we published last week to understand the difference – click on the image on the left.

And if you are interested and have the opportunity, join us and meet some great thought leaders and others with this shared interest.

Why is modern PLM a dream?

If you are connected to the LinkedIn posts in my PLM feed, you might have the impression that everyone is gearing up for modern PLM. Articles often created with AI support spark vivid discussions. Before diving into them with my perspective, I want to set the scene by explaining what I mean by modern PLM and traditional PLM.

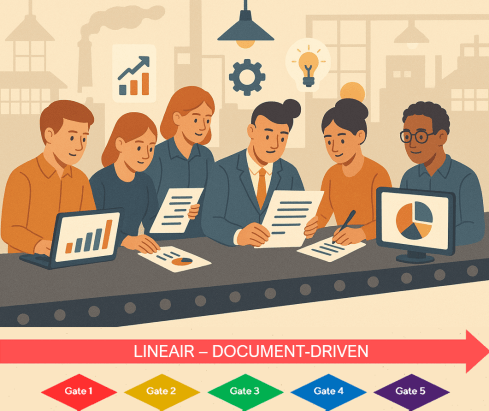

Traditional PLM

Traditional PLM is often associated with implementing a PLM system, mainly serving engineering. Downstream engineering data usage is usually pushed manually or through interfaces to other enterprise systems, like ERP, MES and service systems.

Traditional PLM is closely connected to the coordinated way of working: a linear process based on passing documents (drawings) and datasets (BOMs). Historically, CAD integrations have been the most significant characteristic of these systems.

The coordinated approach fits people working within their authoring tools and, through integrations, sharing data. The PLM system becomes a system of record, and working in a system of record is not designed to be user-friendly.

Unfortunately, most PLM implementations in the field are based on this approach and are sometimes characterized as advanced PDM.

You recognize traditional PLM thinking when people talk about the single source of truth.

Modern PLM

When I talk about modern PLM, it is no longer about a single system. Modern PLM starts from a business strategy implemented by a data-driven infrastructure. The strategy part remains a challenge at the board level: how do you translate PLM capabilities into business benefits – the WHY?

More on this challenge will be discussed later, as in our PLM community, most discussions are IT-driven: architectures, ontologies, and technologies – the WHAT.

For the WHAT, there seems to be a consensus that modern PLM is based on a federated

I think this article from Oleg Shilovitsky, “Rethinking PLM: Is It Time to Move Beyond the Monolith?”, AND the discussion thread in this post are must-reads. I will not quote the content here again.

After reading Oleg’s post and the comments, come back here.

The reason for this approach: It is a perfect example of the connected approach. Instead of collecting all the information inside one post (book?), the information can be accessed by following digital threads. It also illustrates that in a connected environment, you do not own the data; the data comes from accountable people.

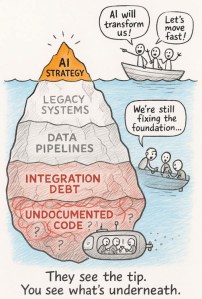

Building such a modern infrastructure is challenging when your company depends mainly on its legacy—the people, processes and systems. Where to change, how to change and when to change are questions that should be answered at the top and require a vision and evolutionary implementation strategy.

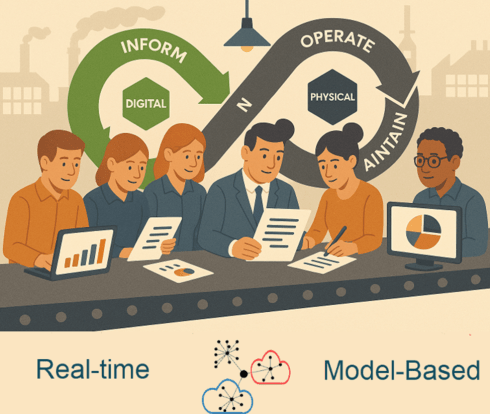

A company should build a layer of connected data on top of the coordinated infrastructure to support users in their new business roles. Implementing a digital twin has significant business benefits if the twin is used to connect with real-time stakeholders from both the virtual and physical worlds.

But there is more than digital threads with real-time data. On top of this infrastructure, a company can run all kinds of modeling tools, automation and analytics. I noticed that in our PLM community, we might focus too much on the data and not enough on the importance of combining it with a model-based business approach. For more details, read my recent post: Model-based: the elephant in the room.

Again, there are no quotes from the article; you know how to dive deeper into the connected topic.

Despite the considerable legacy pressure, there are already companies implementing a coordinated and connected approach. An excellent description of a potential approach comes from Yousef Hooshmand’s paper: From a Monolithic PLM Landscape to a Federated Domain and Data Mesh.

You might recognize modern PLM thinking when people talk about the nearest source of truth and the single source of change.

Is Intelligent PLM the next step?

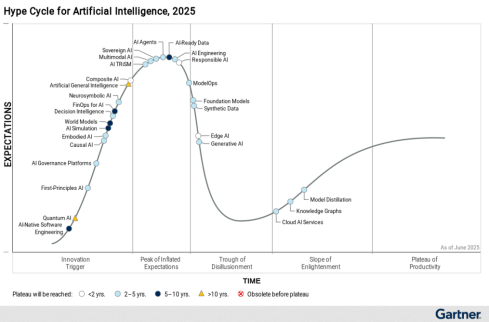

So far in this article, I have not mentioned AI as the solution to all our challenges. I see an analogy here with the introduction of the smartphone. 2008 was the moment that platforms were introduced, mainly for consumers. Airbnb, Uber, Amazon, Spotify, and Netflix have appeared and disrupted the traditional ways of selling products and services.

The advantage of these platforms is that they were all built data-driven from the start and do not suffer from legacy issues.

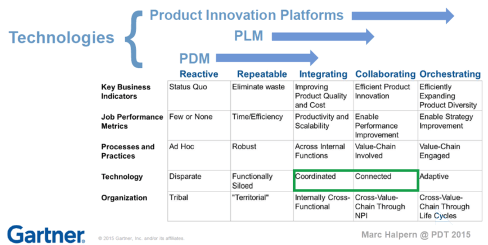

In our PLM domain, it took more than 10 years for platforms to become a topic of discussion for businesses. The 2015 PLM Roadmap/PDT conference was the first step in discussing the Product Innovation Platform – see my The Weekend after PDT 2015 post.

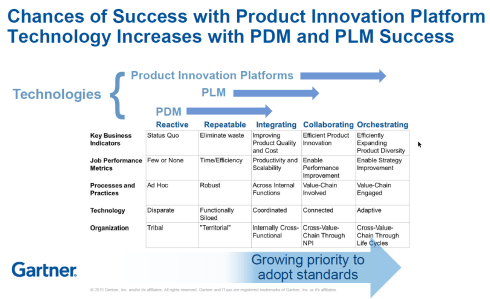

At that time, Peter Bilello shared the CIMdata perspective, Marc Halpern (Gartner) showed my favorite positioning slide (below), and Martin Eigner presented, according to my notes, this digital trend in PLM in his session: “What becomes different for PLM/SysLM?”

2015 Marc Halpern – the Product Innovation Platform (PIP)

While concepts started to become clearer, businesses mainly remained the same. The coordinated approach is the most convenient, as you do not need to reshape your organization. And then came the LLMs that changed everything.

Suddenly, it became possible for organizations to unlock knowledge hidden in their company and make it accessible to people.

Without drastically changing the organization, companies could now improve people’s performance and output (theoretically); therefore, it became a topic of interest for management. One big challenge for reaping the benefits is the quality of the data and information accessed.

I will not dive deeper into this topic today, as Benedict Smith, in his article Intelligent PLM – CFO’s 2025 Vision, did all the work, and I am very much aligned with his statements. It is a long read (7000 words) and a great starting point for discovering the aspects of Intelligent PLM and the connection to the CFO.

You might recognize intelligent PLM thinking when people and AI agents talk about the most likely truth.

Conclusion

Are you interested in these topics and their meaning for your business and career? Join me at the Share PLM conference, where I will discuss “The dilemma: Humans cannot transform—help them!” Time to work on your dreams!

In the last two weeks, I had some interesting observations and discussions related to the need to have a (PLM) vision. I placed the word PLM between brackets, as PLM is no longer an isolated topic in an organization. A PLM strategy should align with the business strategy and vision.

To be clear, if you or your company wants to survive in the future, you need a sustainable vision and a matching strategy, as the times they are a-changin’, again!

I love the text: “Don’t criticize what you can’t understand” – a timeless quote.

First, there was Rob Ferrone’s article: Multi-view. Perspectives that shape PLM – a must-read to understand who to talk to about which dimension of PLM – and it is worth browsing through the comments too – there you will find the discussions, and it helps you to understand the PLM players.

Note: it is time that AI-generated images become more creative 😉

Next, there is still the discussion started by Gareth Webb, Digital Thread and the Knowledge Graph, further stirred by Oleg Shilovitsky.

Based on the likes and comments, it is clearly a topic that creates interaction – people are thinking and talking about it – the Digital Thread as a Service.

One of the remaining points in this debate is still the HOW and WHEN companies decide to implement a Digital Thread, a Knowledge Graph and other modern data concepts.

So far, my impression is that most companies implement their digital enhancements (threads/graphs) in a bottom-up approach, not driven by a management vision but more like band-aids or in places where it fits well, without a strategy or vision.

The same week, we, Beatriz Gonzáles and I, recorded a Share PLM podcast session with Paul Kaiser from MHP Americas as a guest. Paul is the head of the Digital Core & Technology department, where he leads management and IT consulting services focused on end-to-end business transformation.

During our discussion, Paul mentioned the challenge in engagements when the company has no (PLM) vision. These companies expect external consultants to formulate and implement the vision – a recipe for failure due to wrong expectations.

The podcast can be found HERE, and the session inspired me to write this post.

“We just want to be profitable”.

I believe it is a typical characteristic of small and medium enterprises that people are busy with their day-to-day activities. In addition, these companies rarely appoint new top management, which could shake up the company in a positive direction. These companies evolve …..

You often see a stable management team with members who grew up with the company and now monitor and guide it, watching its finances and competition. They know how the current business is running.

Based on these findings, there will be classical efficiency plans, i.e., cutting costs somewhere, dropping some non-performing products, or investing in new technology that they cannot resist. Still, minor process changes and fundamental organizational changes are not expected.

Most of the time, the efficiency plans provide single-digit benefits.

Everyone is happy when the company feels stable and profitable, even if the margins are under pressure. The challenge for this type of company without a vision is that they navigate in the dark when the outside world changes – like nowadays.

The world is changing drastically.

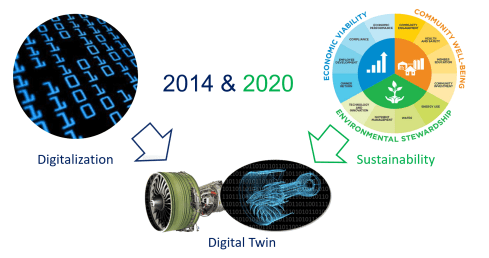

Since 2014, I have advocated for digital transformation in the PLM domain and explained it simply using the statement: From Coordinated to Connected, which already implies much complexity.

This means moving from documents/files to datasets and models, from a linear delivery model to a DevOps model, from waterfall to agile, and many other From-To statements.

Moving From-To is a transformational journey, which means you will learn and adapt to new ways of working during the journey. Still, the journey should have a target, directed by a vision.

However, not many companies have started this journey because they just wanted to be profitable.

“Why should we go in an unknown direction?”

With the emergence of sustainability regulations, e.g., GHG and ESG reporting, carbon taxes, material reporting, and the Digital Product Passport, which goes beyond RoHS and REACH and applies to many more industries, there came the realization that there is a need to digitize the product lifecycle processes and data beyond documents. Manual analysis and validation are too expensive and unreliable.

At this stage, there is already a visible shift between companies that have proactively implemented a digitally connected infrastructure and companies that still see compliance with regulations as an additional burden. The first group brings products to the market faster and more sustainably than the second group because sustainability is embedded in their product lifecycle management.

And just when companies felt they could manage the transition from Coordinated to Coordinated and Connected, there was the fundamental disruption of embedded AI in everything, including the PLM domain.

- Large Language Models (LLMs) can go through all the structured and unstructured data, providing real-time access to information that would take experts years of learning. Suddenly, everyone can behave like an experienced expert.

- The rigidity of traditional databases can be complemented by graph databases, which visualize knowledge that can be added and discovered on the fly without IT experts. Suddenly, an enterprise is no longer a collection of interfaced systems but a digital infrastructure where data flows – some call it Digital Thread as a Service (DTaaS).

- Suddenly, people feel overwhelmed by complexity, leading to fear and doing nothing, a killing attitude.

I cannot predict what will happen in the next 5 to 10 years, but I am sure the current change is one we have never seen before. Be prepared and flexible to act—to be on top of the wave, you need the skills to get there.

Building the vision

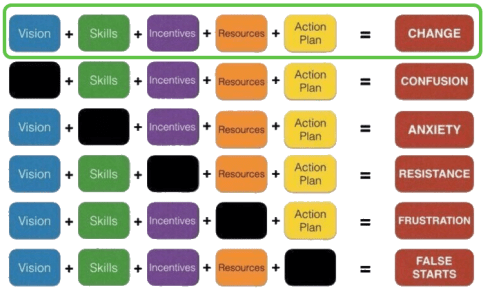

The image below might not be new to you, but it illustrates how companies could manage a complex change.

I will focus only on the first two elements, Vision and Skills, as they are the two elements we as individuals can influence. The other elements are partly related to financial and business constraints.

Vision and Skills are closely related: you can have a fantastic vision, but to realize it, you need a strategy driven by relevant skills to define and implement the vision. With the upcoming AI, traditional knowledge-based skills will suddenly no longer be a guarantee for future jobs.

AI brings a new dimension for everyone working in a company. To remain relevant, you must develop your unique human skills that make you different from robots or libraries. The importance of human skills might not be new, but now it has become apparent with the explosion of available AI tools.

Look at this 2013 table about predicted skills for the future – You can read the details in their paper, The Future of Employment, by Carl Benedikt Frey & Michael Osborne(2013) – click on the image to see the details.

In my 2015 PLM lectures, I joked when showing this image that my job as a PLM coach was secured, because a PLM coach is a recreational therapist and firefighter combined.

It has become a reality, and many of my coaching engagements nowadays focus on explaining and helping companies formulate and understand their possible path forward. Helping them align and develop a vision of progressing in a volatile world – the technology is there, the skills and the vision are often not yet there.

Combining business strategy with in-depth PLM concepts is a relatively unique approach in our domain. Many of my peers have other primary goals, as Rob Ferrone’s article Multi-view. Perspectives that shape PLM explains.

And then there is …..

The Share PLM Summit 2025

Modern times need new types of information building and sharing, and therefore, I am eager to participate in the upcoming Share PLM Summit at the end of May in Jerez (Spain).

See the link to the event here: The Share PLM Summit 2025 – with the theme: Where People Take Center Stage to Drive Human-Centric Transformations in PLM and Lead the Future of Digital Innovation.

In my lecture, I will focus on how humans can participate in/anticipate this digital AI-based transformation. But even more, I look forward to the lectures and discussions with other peers, as more people-centric thought leaders and technology leaders will join us:

Quoting Oleg Shilovitsky:

PLM was built to manage data, but too often, it makes people work for the data instead of working the other way around. At Share PLM Summit 2025, I’ll discuss how PLM must evolve from rigid, siloed systems to intelligent, connected, and people-centric data architectures.

We need both, and I hope to see you at the end of May at this unique PLM conference.

Conclusion

We are at a decisive point of the digital transformation, as AI will challenge people’s skills, knowledge and existing ways of working. Combined with a turbulent world order, we need to prepare to be flexible and resilient. Therefore, instead of focusing on current best practices, we need to prepare for the future – a vision developed by skilled people. How will you or your company work on that? Join us if you have questions or ideas.

In the past two weeks, I had several discussions with peers in the PLM domain about their experiences.

I met some of them face-to-face again after a long time at the LiveWorx 2023 event. See my review of the event here: The Weekend after LiveWorx 2023.

And there were several interactions on LinkedIn, leading to a more extended discussion thread (an example of a digital thread?) or a Zoom discussion (a so-called 2D conversation).

To complete the story, I also participated in two PLM podcasts from Share PLM, where we interviewed Johan Mikkelä (currently working at FLSmidth) and, in the second episode, Issam Darraj (presently working at ABB) about their PLM experiences. Less a discussion, more a dialogue, trying to grasp the non-documented aspects of PLM. We are looking for your feedback on these podcasts too.

All these discussions led to a reconfirmation that if you are a PLM practitioner, you need a broad skillset to address the business needs, translate them into people and process activities relevant to the industry and ultimately implement the proper collection of tools.

As a sneak preview of the podcast sessions, we asked both Johan and Issam about the importance of the tools. I will not disclose their answers here; you have to listen.

Let’s look at some of the discussions.

NOTE: Just before pushing the Publish button, Oleg Shilovitsky published this blog article PLM Project Failures and Unstoppable PLM Playbook. I will comment on his points at the end of this post. It is all part of the extensive discussion.

PLM, LinkedIn and complexity

The most popular discussions on LinkedIn are often related to the various types of Bills of Materials (eBOM, mBOM, sBOM), Part numbering schemes (intelligent or not), version and revision management and the famous FFF discussions.

This post: PLM and Configuration Management Best Practices: Working with Revisions, from Andreas Lindenthal, was a recent example that triggered others to react.

I had some offline discussions on this topic last week, and I noticed Frédéric Zeller wrote his post with the title PLM, LinkedIn and complexity, starting his post with (quote):

I am stunned by the average level of posts on the PLM on LinkedIn.

I’m sorry, but in 2023 :

- Part Number management (significant, non-significant) should no longer be a problem.

- Revision management should no longer be a question.

- Configuration management theory should no longer be a question.

- Notions of EBOMs, MBOMs … should no longer be a question.

So why are there still problems on these topics?

You can see from the 40+ comments that this statement created a lot of reactions, including mine. Apparently, these topics touch many people worldwide, and there is no simple, single answer to any of them. And there are so many other topics relevant to PLM.

Later, in a one-hour Zoom session with Frédéric, we discussed the importance of the right PLM data model.

I also wrote a series about the (traditional) PLM data model: The importance of a (PLM) data model.

Frédéric is more of a PLM architect; we even discussed the wording related to the EBOM and the MBOM, a topic I feel comfortable discussing after many years of seeing the attempts that failed and the dreams people had. And this was only one aspect of PLM.

You also find the discussion related to a PLM certification in the same thread. How would you certify a person as a PLM expert?

There are so many dimensions to PLM. Even more important, the PLM from 10-15 years ago (more of a system discussion) is no longer the PLM of today (a strategy and an infrastructure).

This is a crucial difference. Learning to use a PLM tool and implement it is not the same as building a PLM strategy for your company. It is Tools, Process, People versus Process, People, Tools and Data.

Time for Methodology workshops?

I recently discussed with several peers what we could do to assist people looking for best-practice discussions and lessons learned. There is a need, but how do we organize them, as we cannot expect this to be voluntary work?

In the past, I suggested MarketKey, the organizer of the PI DX events, extend its theme workshops. For example, instead of a 45-min Focus group with a short introduction to a theme (e.g., eBOM-mBOM, PLM-ERP interfaces), make these sessions last at least half a day and be independent of the PLM vendors.

Apparently, it did not fit in the PI DX programming; half a day would potentially stretch the duration of the conference and more and more, we see two days of meetings as the maximum. Longer becomes difficult to justify even if the content might have high value for the participants.

I observed a similar situation last year in combination with the PLM Roadmap/PDT Europe conference in Gothenburg. Here we had a half-day workshop before the conference, led by Erik Herzog (SAAB Aeronautics) and Judith Crockford (Eurostep), to discuss concepts related to federated PLM – read more in this post: The week after PLM Roadmap/PDT Europe 2022.

It reminded me of an MDM workshop before the 2015 Event, led by Marc Halpern from Gartner. Unfortunately, the federated PLM discussion remained a pretty Swedish initiative, and the follow-up did not reach a wider audience.

And then there are the Aerospace and Defense PLM action groups, moderated by CIMdata. It is great that they publish their findings (look here), although the best lessons are learned during the workshops.

However, I also believe the A&D industry cannot be compared to a mid-market machinery manufacturing company. Therefore, it is helpful for a smaller audience only.

And here, I inserted a paragraph dedicated to Oleg’s recent post, PLM Project Failures and Unstoppable PLM Playbook – starting with a quote:

How to learn to implement PLM? I wrote about it in my earlier article – PLM playbook: how to learn about PLM? While I’m still happy to share my knowledge and experience, I think there is a bigger need in helping manufacturing companies and, especially PLM professionals, with the methodology of how to achieve the right goal when implementing PLM. Which made me think about the Unstoppable PLM playbook ©.

I found a similar passion for helping companies to adopt PLM while talking to Helena Gutierrez. Over many conversations during the last few months, we talked about how to help manufacturing companies with PLM adoption. The unstoppable PLM playbook is still a work in progress, but we want to start talking about it to get your feedback and start the conversation.

It is an excellent confirmation of the fact that there is a need for education and that the education related to PLM on the Internet is not good enough.

As a former teacher in Physics, I do not believe in the Unstoppable PLM Playbook, even if it is a branded name. Many books are written by specific authors, giving their perspectives based on their (academic) knowledge.

Are they useful? I believe only in the context of a classroom discussion where the applicability can be discussed.

Therefore, my question to vendor-neutral global players like CIMdata, Eurostep, Prostep, SharePLM, TCS and others: are you willing to pick up this request? Or are there other entities that I missed? Please leave your thoughts in the comments. I will be happy to assist in organizing them.

There are many more future topics to discuss and document too.

- What about the potential split of a PLM infrastructure between Systems of Record & Systems of Engagement?

- What about the Digital Thread, a more and more accepted theme in discussions, but what is the standard definition?

- Is it traceability as some vendors promote it, or is it the continuity of data, directly usable in various contexts – the DevOps approach?

Who likes to discuss methodology?

When asking myself this question, I see the analogy with standards. So let’s look at the various players in the PLM domain – sorry for the immense generalization.

Strategic consultants: standards are essential, but spare me the details.

Vendors: standards are limiting the unique capabilities of my products

Implementers: two types – those who understand and use standards as they see the long-term benefits, and those who avoid standards as they introduce complexity.

Companies: they love standards if they can be implemented seamlessly.

Universities: they love to explore standards and help to set the standards even if they are not scalable

Just replace standards with methodology, and you see the analogy.

We like to discuss the methodology.

As I mentioned in the introduction, I started to work with Share PLM on a series of podcasts where we interview PLM experts in the field that have experience with the people, the process, the tools and the data side. Through these interviews, you will realize PLM is complex and has become even more complicated when you consider PLM a strategy instead of a tool.

We hope these podcasts might be a starting point for further discussion – either through direct interactions or through contributions to the podcast. If you have PLM experts in your network who can explain the complexity of PLM from various angles and have the experience, please let us know – it is time to share.

Conclusion

By switching gears, I noticed that PLM has become complex. Too complex for a single person to master. With an aging traditional PLM workforce (like me), it is time to consolidate the best practices of the past and discuss the best practices for the future. There are no simple answers, as every industry is different. Help us to energize the PLM community – your thoughts/contributions?

Imagine you are a supplier working for several customers, such as big OEMs or smaller companies. In Dec 2020, I wrote about PLM and the Supply Chain because it was an underexposed topic in many companies. Suppliers need their own PLM and IP protection and work as efficiently as possible with their customers, often the OEMs.

Most PLM implementations start by creating the ideal internal collaboration between functions in the enterprise, historically starting with R&D and Engineering and next expanding to Manufacturing, Services and Marketing, most of the time in this logical order.

In these implementations, people are not paying much attention to the total value chain, customers and suppliers. And that was one of the interesting findings at that time, supported by surveys from Gartner and McKinsey:

- Gartner: Companies reported improvements in the accuracy of product data and product development as the main benefit of their PLM implementation. They did not see so much of a reduced time to market or reduced product development costs. After analysis, Gartner believes the real issue is related to collaboration processes and supply chain practices. Here the lead times did not change, nor did the number of changes.

- McKinsey: In their article, The Case for Digital Reinvention, digital supply chains were mentioned as the area with the highest potential ROI; however, as the image below shows, it was the area with the lowest investment at that time.

In 2020 we were in the middle of broken supply chains and wishful thinking related to digital transformation, all due to COVID-19.

Meanwhile, the further digitization in PLM (systems of engagement) and the new topic, Sustainability of the supply chain, became visible.

Therefore, it is time to take stock again, also driven by discussions in the past few weeks.

The old “connected” approach (lose-lose).

A preferred way for OEMs in the past was to have the Supplier or partner directly work in their PLM environment. The OEM could keep control of the product development process and the incremental maturity of the BOM, where the Supplier could connect their part data and designs to the OEM environment.

The advantage for the OEM is clear – direct visibility of the supplier data when available. The benefit for the Supplier could also be immediate visibility of the broader context of the part they are responsible for.

However, the disadvantages for a supplier are more significant. Working in the OEM environment exposes all your IP and hinders knowledge capitalization from the Supplier. Not a big thing for perhaps a tier 3 supplier; however, the more advanced the products from the Supplier are, the higher the need to have its own PLM environment.

Therefore, the old connected approach is a lose-lose relationship, in particular for the Supplier, and even for the OEM (which ends up with less knowledgeable suppliers).

The modern “connected” approach (wins t.b.d.)

In this situation, the target infrastructure is a digital infrastructure, where datasets are connected in real-time, providing the various stakeholders in engagement access to a filtered set of data relevant to their roles.

In my terminology, I refer to them as Systems of Engagement, as the target is that all stakeholders work in this environment.
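
As a minimal sketch of what "a filtered set of data relevant to their roles" could look like, the Python snippet below keeps one connected dataset and derives a role-specific view from it. The role names, the field names and the `ROLE_FIELDS` mapping are hypothetical and chosen for illustration only; a real System of Engagement would likely enforce this in its access layer rather than in application code.

```python
from typing import Any

# Hypothetical mapping of stakeholder roles to the fields they may see.
ROLE_FIELDS: dict[str, set[str]] = {
    "engineer": {"part_number", "description", "material", "cad_model"},
    "supplier": {"part_number", "description", "material"},
    "purchasing": {"part_number", "description", "target_cost"},
}


def view_for_role(dataset: list[dict[str, Any]], role: str) -> list[dict[str, Any]]:
    """Return a copy of the dataset containing only the fields allowed for the role."""
    allowed = ROLE_FIELDS.get(role, set())  # unknown roles see no fields
    return [{k: v for k, v in item.items() if k in allowed} for item in dataset]


# One shared dataset (illustrative values); every stakeholder gets a filtered view.
parts = [
    {
        "part_number": "P-100",
        "description": "Bracket",
        "material": "Aluminium",
        "cad_model": "bracket.step",
        "target_cost": 4.20,
    },
]

supplier_view = view_for_role(parts, "supplier")
```

The design choice here is that the data is stored once and the filtering happens at read time, so all stakeholders work against the same source instead of exchanged copies.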

The counterpart of Systems of Engagement is the Systems of Record, which provides a product baseline, manufacturing baseline, and configuration baseline of information consumed by other disciplines.

These baselines are often called Bills of Information, and the traditional PLM system has been designed as a System of Record. Major Bills of Information are the eBOM and the mBOM; sometimes people also talk about the sBOM (service BOM).

Typical examples of Systems of Engagement I have seen in alphabetical order are:

- Arena Solutions has long-term experience in BOM collaboration between engineering teams, suppliers and contract manufacturers.

- CATENA-X might be a strange player in this list, as CATENA-X is more a German Automotive consortium targeting digital collaboration between stakeholders, ensuring security and IP protection.

- Colab is a provider of cloud-based collaboration software allowing design teams and suppliers to work in real time together.

- OnShape – a cloud-based collaborative product design environment for dispersed engineering teams and partners.

- OpenBOM – a SaaS solution focusing on BOM collaboration connected to various CAD systems along with design teams and their connected suppliers

These are some of the Systems of Engagement I am aware of. They focus on specific value streams that can improve the targeted time to market and product introduction efficiency. In companies with no extensive additional PLM infrastructure, they can become crucial systems of engagement.

The main challenge for these systems of engagement is how they will connect to traditional Systems of Record – the classical PLM systems that we know in the market (Aras, Dassault, PTC, Siemens).

Image on the left from a presentation done by Erik Herzog from SAAB at last year’s CIMdata/PDT conference.

You can read more about this here.

When you establish a digitally connected mix of Systems of Engagement and Systems of Record in your organization, you will see overall benefits. My earlier thoughts, in general, are here: Time to split PLM?

The almost Connected approach

As I mentioned, in most companies, it is already challenging to manage their internal System of Record, which is needed for current operations and the traceability of information. In addition, most of the data stored in these systems is document-driven, not designed for real-time collaboration. So how would these companies collaborate with their suppliers?

The Model-Based Enterprise

In the bigger image below, I am referring to an image published by Jennifer Herron from her book Re-use Your CAD, where she describes the various stages of interaction between engineering, manufacturing and the extended enterprise.

Her mission is to promote and educate organizations in moving to a Model-Based Definition and, in the long term, to a Model-Based Enterprise.

The ultimate target of information exchange in this diagram is that the OEM and the Supplier remain separate entities yet exchange Digital Product Definition Packages and TDPs over the web (electronically). In this exchange, we have a mix of systems of engagement and systems of record on the OEM and Supplier sides.

Depending on the type of industry, in my ecosystem of companies, many suppliers are still at level 2, dreaming or pushed to become level 3, illustrating there is a difficult job to do – learning new practices. And why would you move to the next level?

Every step can have significant benefits, as reported by companies that did this.

So what’s stopping your company from moving ahead? People, Processes, Skills, Work Pressure? It is one of the most common excuses: “We are too busy, no time to improve”.

A supply chain collaboration hub

On March 21, I discussed with Magnus Färneland from Eurostep their cloud-based PLM collaboration hub, ShareAspace. You can read the interview here: PLM and Supply Chain Collaboration

I believe this concept can be compelling for a connected enterprise. The OEM and the Supplier share (or connect) only the data they want to share, preferably based on the PLCS data schema (ISO 10303-239).

In a primitive approach, this can be BOM structures with related files; however, it could become a real model-based connection hub in the advanced mode.
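
To make the "primitive approach" concrete, here is a minimal Python sketch of a hub in which a party publishes only the BOM lines (with related files) it chooses to share, and the partner reads only what was published. It does not implement the PLCS schema or ShareAspace; the `BomLine` and `CollaborationHub` classes, their methods and the sample data are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class BomLine:
    part_number: str
    quantity: int
    files: tuple[str, ...] = ()  # related files, e.g. drawings or models


@dataclass
class CollaborationHub:
    """Hypothetical hub: holds only what each party explicitly publishes."""

    _published: dict[str, list[BomLine]] = field(default_factory=dict)

    def publish(self, party: str, lines: list[BomLine]) -> None:
        self._published.setdefault(party, []).extend(lines)

    def read(self, party: str) -> list[BomLine]:
        # Return a copy so readers cannot alter the published list.
        return list(self._published.get(party, []))


# The supplier keeps its full BOM in its own PLM environment ...
supplier_bom = [
    BomLine("S-001", 1, ("assembly.step",)),
    BomLine("S-002", 4),  # internal detail, not shared
]

# ... and publishes only the lines it wants the OEM to see.
hub = CollaborationHub()
hub.publish("supplier", [line for line in supplier_bom if line.part_number == "S-001"])

oem_sees = hub.read("supplier")
```

In this sketch the supplier's IP stays in its own environment; the hub never receives the unshared lines, which is the key difference from working directly in the OEM's PLM system.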

Now you ask yourself why this solution is not booming.

In my opinion, there are several points to consider:

- Who designs, operates and maintains the collaboration hub? It is likely not the suppliers, and when the OEM takes ownership, they might believe there is no need for the extra hub; just use the existing PLM infrastructure.

- Could a third party find a niche market for this? Eurostep has already been working on this for many years, but adoption of the concept seems higher in the BIM or Asset Management domains. Here the owner/operator sees the importance of a collaboration hub.

A final remark: we are still far from a connected enterprise; concepts like Catena-X and others need to become mature to serve as a foundation. There is a lot of technology out there – now we need the skilled people and tested practices to use the right technology and tune solution concepts.

Sustainability demands a connected enterprise.

I focused on the Supplier dilemma this time because it is one of the crucial aspects of a circular economy and sustainable product development.

Only by using virtual models of the To-Be products/systems can we seriously optimize them. Virtual models and Digital Twins do not run on documents; they require accurate data from anywhere connected.

You can read more details in my post earlier this year: MBSE and Sustainability or look at the PLM and Sustainability recording on our PLM Global Green Alliance YouTube channel.

Conclusion

Due to various discussions I recently had in the field, it became clear that the topic of supplier integration in a best-connected manner is one of the most important topics to address in the near future. We can no longer focus on our company as an isolated entity – value streams implemented in a connected manner become a must.

And now I am going to enjoy LiveWorx in Boston, learning, discussing and understanding more about what PTC is doing and planning in the context of digital transformation and sustainability. More about that in my next post: The week(end) after LiveWorx 2023 (to come)

Happy New Year to all of you, and may this year be a year of progress in understanding and addressing the challenges ahead of us.

To help us focus, I selected three major domains I will explore further this year. These domains are connected – of course – as nothing is isolated in a world of System Thinking. Also, I wrote about these domains in the past, as, usually, nothing happens out of the blue.

Meanwhile, there are a lot of discussions related to Artificial Intelligence (AI), in particular ChatGPT (OpenAI). But can AI provide the answers? I believe not, as AI is mainly about explicit knowledge, the knowledge you can define by (learning) algorithms.

Expert knowledge, often called Tacit knowledge, is the knowledge of the expert, combining information from different domains into innovative solutions.

I started my company, TacIT, in 1999 because I thought (and still think) that Tacit knowledge is the holy grail for companies.

Let’s see with OpenAI how far we get ……

Digitization of the PLM domain

The PLM domain is suffering from its legacy data (documents), legacy processes (linear – mechanical focus) and legacy people (siloed). The statement is a generalization.

More details can be found in my blog series: The road to model-based and connected PLM.

So why should companies move to a model-based and connected approach for their PLM infrastructure?

There are several reasons why companies may want to move to a model-based and connected approach for their Product Lifecycle Management (PLM) infrastructure:

- Increased efficiency: A model-based approach allows for creating a digital twin of the product, which can be used to simulate and test various design scenarios, reducing the need for physical prototypes and testing. This can lead to faster and more efficient product development.

- Improved collaboration: A connected PLM infrastructure allows for better collaboration between different teams and departments, as all product-related information is stored in a central location and can be accessed by authorized personnel. This can improve communication and decision-making within the organization.

- Enhanced visibility: A model-based PLM system provides a single source of truth for all product-related data, giving management a clear and comprehensive view of the product development process. This can help identify bottlenecks and areas for improvement.

- Reduced risk: By keeping all product-related information in a centralized location, the risk of data loss or inconsistencies is reduced. This can help ensure that the product is developed in accordance with regulatory requirements and company standards.

- Increased competitiveness: A model-based and connected PLM infrastructure can help companies bring new products to market faster and with fewer errors, giving them a competitive advantage in their industry.

The text in italics was created by ChatGPT. After three learning cycles, this was the best answer I got. What we are missing in this answer is the innovative and transformative part that modern PLM can bring. Where is the concept of different ways of working, and new business models, both drivers for digitalization in many businesses?

Expert knowledge related to Federated PLM (or Killing the PLM Monolith) is something you will not find through AI. This is, for me, the most interesting part to explore.

We see the need but lack a common understanding of the HOW.

Algorithms will not innovate; for that, you need Tacit intelligence & Curiosity instead of Artificial Intelligence. More exploration of Federated PLM this year.

PLM and Sustainability

Last year as part of the PLM Global Green Alliance, we spoke with six different PLM solution providers to understand their sustainability goals, targets, and planned support for Sustainability. All of them confirmed Sustainability has become an important issue for their customers in 2022. Sustainability is on everyone’s agenda.

Why is PLM important for Sustainability?

PLM is important for Sustainability because a PLM helps organizations manage the entire lifecycle of a product, from its conception and design to its manufacture, distribution, use, and disposal. PLM can be important for Sustainability because it can help organizations make more informed decisions about the environmental impacts of their products and take steps to minimize those impacts throughout the product’s lifecycle.

For example, using PLM, an organization can consider the environmental impacts of the materials that are used in a product, the energy consumption of the manufacturing process, the product’s end-of-life disposal, and other factors that may affect its overall Sustainability. By considering these factors early in the design process, organizations can make more sustainable choices that reduce the environmental impact of their products over their lifecycle.

In addition, PLM can help organizations track and measure the Sustainability of their products over time, allowing them to continuously improve and optimize their products for Sustainability. This can be particularly important for organizations that are looking to meet regulatory requirements or consumer demand for more sustainable products.

Again, not a wrong answer, but there is no mention of supporting the concepts of the circular economy, or of the potential of using digital twins at the various lifecycle stages of a product, either to perform trade-off studies in the virtual world or to analyze the product's behavior from the physical world in the virtual world for optimization.

This is (again), for me, the most interesting part to explore.

We see the need but lack the sense of urgency on the NOW.

More on Sustainability this year.

PLM Education at all levels

Historically, PLM was framed as an “engineering thing” or a system needed for R&D/Engineering. Modern PLM is much broader and, unfortunately, harder to grasp. I support the CIMdata definition:

CIMdata defines PLM as:

- A strategic business approach that applies a consistent set of business solutions that support the collaborative creation, management, dissemination, and use of product definition information

- Supporting the extended enterprise (customers, design and supply partners, etc.)

- Spanning from concept to end of life of a product or plant

- Integrating people, processes, business systems, and information

And even here, I would say this definition is challenging as the scope is extensive and could touch a lot of activities inside an enterprise. As I wrote last year in my series of posts related to PLM and Education, there is:

- Strategy education (what are the trends/potential of digital transformation in the business domain related to the company’s products)

- Methodology/Academic Education (independent from tools, what are the best practices, where they reside, potential lessons learned and pitfalls to avoid)

- Organisational Change Management (the Education and support needed to enable a business transformation – modern PLM is a transformation enabler)

By asking ChatGPT for its opinion, we learn the following:

Product Lifecycle Management (PLM) education refers to training and development programs that focus on teaching individuals how to effectively manage the lifecycle of a product within an organization. This can include a wide range of topics, such as product design, development, and engineering; supply chain management; quality control and assurance; and product retirement and disposal.

Some specific types of PLM education that a company may need to provide to its employees include:

- Introduction to PLM: This type of training is designed to provide a general overview of PLM concepts and practices and may be suitable for employees who are new to the field or who have limited experience with PLM.

- PLM software training: Many companies use specialized PLM software to manage their product lifecycle processes. Employees who will be using this software will need training on how to use it effectively.

- Industry-specific PLM training: Depending on the industry in which a company operates, there may be specific PLM practices and regulations that employees need to be familiar with. For example, a company in the aerospace industry may need to provide training on PLM practices that are specific to the aerospace industry.

- Leadership and management training: For employees who are responsible for leading and managing PLM projects or teams, additional training in leadership and project management may be necessary.

Overall, the types of PLM education that a company needs will depend on the specific needs and goals of the organization, as well as the roles and responsibilities of its employees.

In general, we see from the answer that strategy and organizational change management beyond the tool are not considered.

This is precisely the area where a PLM Expert can help.

We see the need for Education, but we lack the willingness to invest in it.

Conclusion

It was an exciting exercise to combine my blogging thoughts with the answers from OpenAI. I am impressed by the given answers, knowing that the topics discussed about PLM are not obvious. On the other hand, I am not worried that AI will take over the job of the PLM consultant. As I mentioned before, the difference between Explicit Knowledge and Tacit Knowledge is clear, and business transformations will largely depend on the usage of Tacit knowledge.

I am curious about your experiences and will follow the topics mentioned in this post and write about them with great interest.

With great pleasure, I am writing this post, part of a tradition that started for me in 2014: posts starting with “The weekend after ….”, describing what happened during a PDT conference. Later, the event merged with CIMdata, becoming THE PLM event for discussions beyond marketing.

For many of us, this conference was the first time after COVID-19 in 2020. It was a 3D (In person) conference instead of a 2D (digital) conference. With approximately 160 participants, this conference showed that we wanted to meet and network in person and the enthusiasm and interaction were great.

The conference’s theme, Digital Transformation and PLM – a call for PLM Professionals to redefine and re-position the benefits and value of PLM, was quite open.

There are many areas where digitization affects the way to implement a modern PLM Strategy.

Now some of my highlights from day one. I needed to filter to stay within a maximum of about 1500 words. All the other sessions, including the sponsor vignettes, were informative too and added to the value of this conference.

Digital Skills Transformation - Often Forgotten Critical Element of Digital Transformation

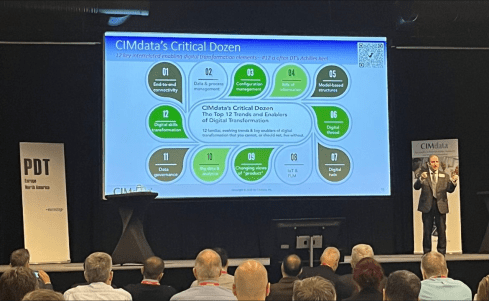

Day 1 started traditionally with the keynote from Peter Bilello, CIMdata’s president and CEO. In recent conferences, Peter has focused on explaining CIMdata’s critical dozen (image below). If you are unfamiliar with them, there is a webinar on November 10 where you can learn more about them.

All twelve are equally important; it is not a sequence of priorities. This time Peter spent more time on Organisational Change Management (OCM), number 12 of the critical dozen – or, as stated, the Digital Transformation’s Achilles heel. Although we always mention that people are important, in our implementation projects they often seem to be the topic that gets the least focus.

We all agree on the statement: People, Process, Tools & Data. Often the reality is that we start with the tools, try to build the processes and push the people in these processes. Is it a coincidence that even CIMdata puts Digital Skills transformation as number 12? An unconscious bias?

This time, the people side got full attention. Peter explained the need for a digital skills transformation framework to educate, guide and support people during a transformation. The concluding slide below says it all.

Transformation Journey and PLM & PDM Modernization to the Digital Future

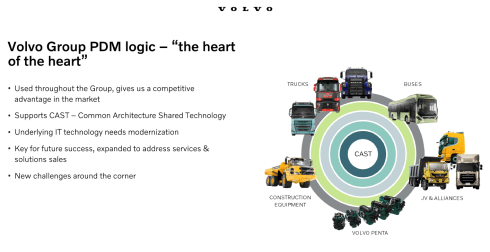

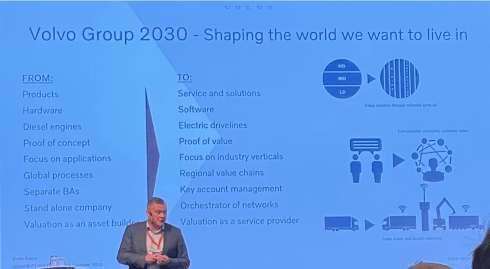

The second keynote of the day was from Josef Schiöler, Head of Core Platform Area PLM/PDM from the Volvo Group. Josef and his team have a huge challenge as they are working on a foundation for the future of the Volvo Group.

The challenge is that it will provide the foundation for new business processes and the various group members, as the image shows below:

As Josef said, it is really the heart of the heart, crucial for the future. Peter Bilello referred to this project as open-heart surgery while the person is still active, as the current business must go on too.

The picture below gives an impression of the size of the operation.

And as in any big transformation project, the Volvo Group has many questions to explore, as there is no existing blueprint to use.

To give you an impression:

- How to manage complex documentation with existing and new technology and solutions co-existing?
  (My take: the hybrid approach)

- How to realize benefits and user adoption with user experience principles in mind?
  (My take: Understand the difference between a system of engagement and a system of record)

- How to avoid seeing modernization as purely an IT initiative and secure that end-user value creation is visible while still keeping a focus on finalizing the technology transformation?
  (My take: think hybrid and focus first on the new systems of engagement that can grow)

- How to efficiently partner with software vendors to ensure vendor solutions fit well in the overall PLM/PDM enterprise landscape without heavy customization?
  (My take: push for standards and collaboration with other similar companies – they can influence a vendor)

Note: My takes are just a starting point of the conversation. There is a discussion in the PLM domain, which I described in my blog post: A new PLM paradigm.

The day before the conference, we had a ½ day workshop initiated by SAAB and Eurostep where we discussed the various angles of the so-called Federated PLM.

I will return to that topic soon after some consolidation with the key members of that workshop.

Steering future Engineering Processes with System Lifecycle Management

Patrick Schäfer’s presentation was different from what the title would suggest. Patrick is the IT Architect Engineering IT from ThyssenKrupp Presta AG. The company provides steering systems for the automotive industry, which is transforming from mechanical to autonomous driving, e-mobility, car-to-car connectivity, stricter safety, and environmental requirements.