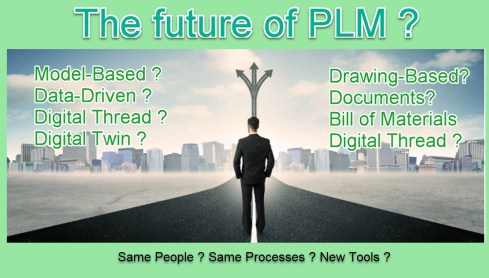

Wow, what a tremendous number of impressions to digest while traveling back from Jerez de la Frontera, where Share PLM held its first PLM conference. You might have seen the energy in the messages on LinkedIn, as this conference had a new and daring starting point: human-led transformations.

Look at what Jens Chemnitz, Linda Kangastie, Martin Eigner, Jakob Äsell or Oleg Shilovitsky had to say.

For over twenty years, I have attended all kinds of PLM events, either vendor-neutral or from specific vendors. None of these conferences created so many connections between the attendees and the human side of PLM implementation.

We can present perfect PLM concepts, architectures and methodologies, but the crucial success factor is the people—they can make or break a transformative project.

Here are some of the first highlights for those who missed the event and regret missing the vibe. I might follow up with a second post with more details. And sorry for the reduced quality: I am still enjoying Spain and refuse to use AI to generate this human-centric content.

The scenery

Approximately 75 people attended the event in Bodegas Fundador, a historic bodega in the center of Jerez. It is not a typical venue for PLM experts, but an excellent place for humans, with its Andalusian atmosphere. It was great to see companies like Razorleaf, Technia, Aras, XPLM and QCM sponsor the event, confirming their commitment. You cannot start a conference from scratch alone.

The next great differentiator was the diversity of the audience. Almost 50 % of the attendees were women, all working on the human side of PLM.

Another brilliant idea was to have the summit breakfast in the back of the stage area, so before the conference days started, you could mingle and mix with the people instead of having a lonely breakfast in your hotel.

Now, let’s go into some of the highlights; there were more.

A warm welcome from Share PLM

Beatriz Gonzalez, CEO and co-founder of Share PLM, kicked off the conference, explaining the importance of human-led transformations and organizational change management and sharing some of their best practices that have led to success for their customers.

You might have seen this famous image in the past, explaining why you must address people’s emotions.

Working with Design Sprints?

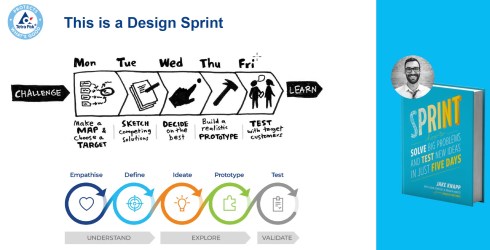

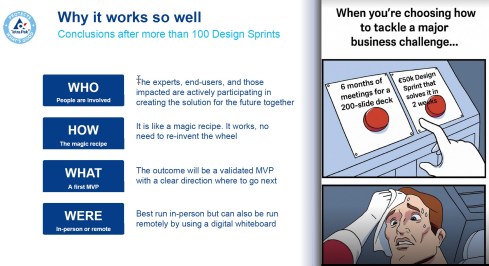

Have you ever heard of design sprints as a methodology for problem-solving within your company? If not, you should read the book by Jake Knapp, the creator of the Design Sprint.

Andrea Järvén, program manager at Tetra Pak, who works closely with the PLM team, recommended this to us. She explained how Tetra Pak successfully used design sprints to implement changes. You would use design sprints when development cycles run too looong, teams lose enthusiasm and focus, work is fragmented, and the challenges are too complex.

Instead of a big waterfall project, you run many small design sprints with the relevant stakeholders per sprint, coming step by step closer to the desired outcome.

The sprints are short: five days of full commitment from a team targeting a business challenge, where every day has a dedicated goal, as you can see from the image above.

It was an eye-opener, and I am eager to learn where this methodology can be used in the PLM projects I contribute to.

Unlocking Success: Building a Resilient Team for Your PLM Journey

Johan Mikkelä from FLSmidth shared a great story about the skills, capacities, and mindset needed for a PLM transformational project.

Johan brought up several topics to consider when implementing a PLM project based on his experiences.

One statement that resonated well with the audience of this conference was:

The more diversified your team is, the faster you can adapt to changes.

He mentioned that PLM projects feel like a marathon, and I believe it is true when you talk about a single project.

However, rather than a marathon, we should approach PLM activities as a never-ending project: a pleasant journey that is not about reaching the finish but about enjoying and observing step by step, and adjusting direction a little when needed.

Strategic Shift of Focus – a human-centric perspective

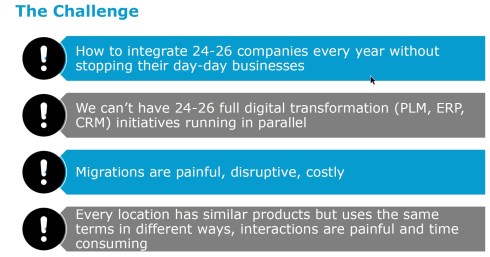

Besides great storytelling, Antonio Casaschi's PLM learning journey at Assa Abloy was a perfect example of why PLM theory and reality often do not match. With much energy and experience, he came to Assa Abloy to work on the PLM strategy.

He started his PLM strategy top-down, trying to rationalize the PLM infrastructure within Assa Abloy, where a big Teamcenter implementation in the past had left a bad perception. Antonio and his team were seen as the enemies disrupting the day-to-day life of the 200+ companies under the umbrella of Assa Abloy.

A logical lesson learned here is that aiming top-down for a common PLM strategy is impossible in a company that acquires six new companies per quarter.

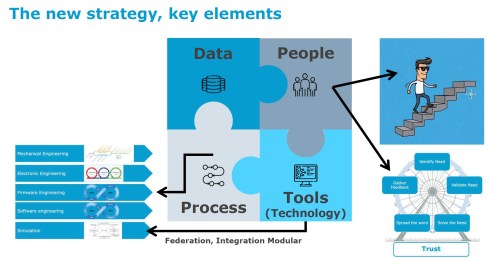

His final strategy is bottom-up: he and the team listen to and work with the end users in their native environments. They have now become trusted advisors, as they have broad PLM experience but focus on current user pains. With the proper interaction, his team of trusted advisors can help each individual company move towards a more efficient and future-focused infrastructure at its own pace.

The great lessons I learned from Antonio are:

- If your plan does not work out, be open to failure. Learn from your failures and aim for the next success.

- Human relations—I trust you, understand you, and know what to do—are crucial in such a complex company landscape.

Navigating Change: Lessons from My First Year as a Program Manager

Linda Kangastie from Valmet Technologies Oy in Finland shared her experiences within the company, from being a PLM key user to now being the program manager for the PAP Digi Roadmap, which covers PLM, sales tools, installed base, digitalization, process harmonization and change management, and business transformation: a considerable scope.

The recommendations she gave should be a checklist for most PLM projects – if you are missing one of them, ask yourself what you are missing:

- THE ROADMAP and THE BIG PICTURE – is your project supported by a vision and a related roadmap of milestones to achieve?

- Biggest Buy-in comes with money! – The importance of a proper business case describing the value of the PLM activities and working with use cases demonstrating the value.

- Identify the correct people in the organization – the people who help you win; find sparring partners in your organization and make sure you have a common language.

- Repetition – taking time to educate, learn new concepts and have informal discussions with people is a continuous process.

As you can see, there is no discussion about technology: it is about business and people.

Other speakers mentioned this topic too: in the end, it is about being honest and increasing trust.

The Future Is Human: Leading with Soul in a World of AI

Helena Gutierrez's keynote on day two was the one that touched me the most, as she shared her optimistic vision of a future where AI will allow us to use our time much more efficiently, combined, of course, with new ways of working and behaviors.

As an example, she showed how she had taken an academic paper by Martin Eigner and used an AI tool to transform the German paper into an English learning course, including quizzes. All of this took half a day, compared with the 3 to 4 days it would take the Share PLM team.

With the time we save on non-value-added work, we should not remain addicted to passive entertainment behind a flat screen. There is an opportunity to restore in-person human and social interactions in the areas and places where we want to satisfy our human curiosity.

I agree with her optimism. During Corona and the introduction of Teams and Zoom sessions, I saw people become resources who popped up behind a flat screen at designated times.

The real human world, with people talking in the corridors and at the coffee machine, was gone. These are the places where social interactions and innovation happen. Coffee stimulates our human brain; we are social beings, not resources.

Death on the Shop Floor: A PLM Murder Mystery

Rob Ferrone's theatre play was an original way of showing that everyone in the company does their best. The product was found dead, and Andrea Järvén alias Angie Neering, Oleg Shilovitsky alias Per Chasing, Patrick Willemsen alias Manny Facturing, Linda Kangastie alias Gannt Chartman and Antonio Casaschi alias Archie Tect were declared guilty or not guilty by the public jury, mainly based on the audience's prejudices.

You can watch the play here, thanks to Michael Finocchiaro:

According to Rob, what is most needed to solve the problems that allow products to die is a discipline that is currently missing: product data people, who care for the flow, speed, and quality of product data. Rob gave some examples from Quick Release projects he had worked on.

My takeaways from this presentation: you can make PLM stories fun, and, even more important, instead of improving data quality by pushing each individual to be more accurate (pushing seems easy, but we know the resulting quality), you should put a dedicated workforce in place with this responsibility. The ROI for these people is clear.

Note: I believe that once companies become more mature in working with data-driven tools and processes, AI will slowly take over the role of these product data people.

Conclusion

I greatly respect Helena Gutierrez and the Share PLM team. I appreciate how they demonstrated that organizing a human-centric PLM summit brings much more excitement than traditional technology- or industry-focused PLM conferences. Starting from the human side of the transformation, the audience was much more diverse and connected.

Closing the conference with a fantastic flamenco performance was perhaps another excellent demonstration of the human-centric approach. The raw performance, a combination of dance, music, and passion, went straight to the hearts of the audience. This is how PLM should be (not every day).

There is so much more to share. Meanwhile, you can read more highlights through Michael Finocchiaro's overview channel here.

Congratulations if you resisted the psychological and emotional pressure and did not buy anything on Black Friday. However, we must not forget that a large part of the world cannot afford this behavior, as they do not have the means to do so. For them, an ultimate Black Friday might be a dream, and a fast track to even greater challenges.

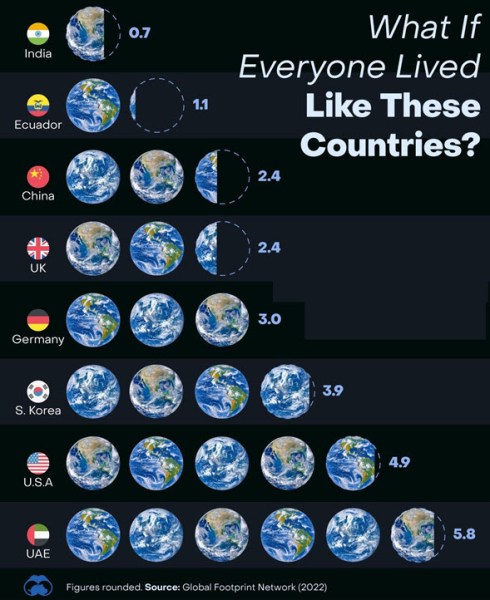

The image below shows the differences between our societies, all living on the same planet, and the unfairness of this situation.

The image is also a warning that we all have to act, as, step by step, we will reach the planet's resource boundaries.

Or we need more planets, and I understand a brilliant guy is already working on it. Let’s go to Mars and enjoy life there.

For those generations staying on this planet, there is only one option: we need to change our economy of unlimited growth and reconsider how we use our natural resources.

The circular economy?

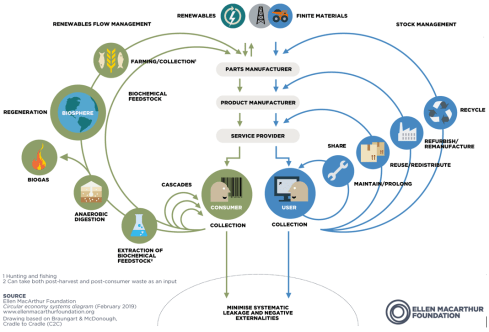

You are probably familiar with the butterfly diagram from the Ellen MacArthur Foundation, where we see the linear process: Take-Make-Use-Waste in the middle.

This approach should be replaced by more advanced regeneration loops on the left side and the five R's on the right (Reduce, Repair, Reuse, Refurbish and Recycle), as the ultimate goal is minimal leakage of the Earth's resources.

Closely related to the Circular Economy concept is the complementary Cradle-To-Cradle design approach. In this case, while designing our products, we also consider the end of life of a product as the start for other products to be created based on the materials used.

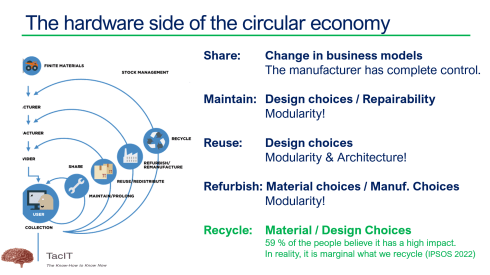

The CE butterfly diagram’s right side is where product design plays a significant role and where we, as a PLM community, should be active. Each loop has its own characteristics, and the SHARE loop is the one I focused on during the recent PLM Roadmap / PDT Europe conference in Gothenburg.

As you can see, the Maintain, Reuse, Refurbish and Recycle loops depend on product design strategies, in particular modularity, and, of course, on material choices.

It is important to note that the recycle loop is the most overestimated loop. We might contribute to recycling (glass, paper, plastic) in our daily lives; however, other materials, like composites, often with embedded electronics, have a much more significant impact.

Watch the funny meme in this post: “We did everything we could– we brought our own bags.”

The title of my presentation was: Products as a Service – The Ultimate Sustainable Economy?

You can find my presentation on SlideShare here.

Let’s focus on the remainder of the presentation’s topic: Product As A Service.

The Product Service System

Product as a Service might be the ultimate dream for an almost wasteless society. In that context, Ida Auken, a Danish member of parliament, gave a thought-provoking lecture at the 2016 World Economic Forum. Her lecture was summarized afterward as:

“In the future, you will own nothing and be happy.”

This theme was also picked up by conspiracy thinkers during the COVID pandemic, claiming that “they” were turning us into economic slaves and consumers. With Black Friday in mind, I do not think there is a conspiracy; it is the opposite.

Rather than implementing Product as a Service across our whole economy at once, a closer step might be Product Service Systems.

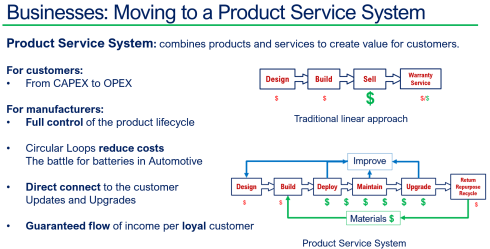

As the image shows, a product service system is a combination of providing a product with related services to create value for the customer.

In its ultimate form, the manufacturer owns the products and provides the services, keeping full control of the performance and materials during the product lifecycle. The benefit for the customer is that they do not need to invest upfront in the solution (CAPEX); they pay only when using it (OPEX).
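A small worked example may make the CAPEX versus OPEX difference concrete. The Python sketch below compares, from the customer's point of view, owning a product (a purchase price upfront plus yearly upkeep) with paying a usage fee per hour, and finds the first year in which paying per use becomes more expensive. All function names and figures are hypothetical assumptions for illustration, not data from the presentation, and the model deliberately ignores financing costs, residual value and inflation.

```python
from typing import Optional


def ownership_cost(purchase_price: float, yearly_upkeep: float, years: int) -> float:
    """Cumulative cost of owning: CAPEX upfront plus the customer's own upkeep."""
    return purchase_price + yearly_upkeep * years


def usage_cost(fee_per_hour: float, hours_per_year: float, years: int) -> float:
    """Cumulative cost in a Product Service System: OPEX, paid only per hour of use."""
    return fee_per_hour * hours_per_year * years


def break_even_year(purchase_price: float, yearly_upkeep: float,
                    fee_per_hour: float, hours_per_year: float,
                    horizon: int = 30) -> Optional[int]:
    """Return the first year in which pay-per-use costs more than owning,
    or None if that does not happen within the horizon (hypothetical model)."""
    for year in range(1, horizon + 1):
        if usage_cost(fee_per_hour, hours_per_year, year) > ownership_cost(
            purchase_price, yearly_upkeep, year
        ):
            return year
    return None


if __name__ == "__main__":
    # Hypothetical figures: 100,000 purchase price and 5,000 yearly upkeep,
    # versus a fee of 20 per hour for 1,000 hours of use per year.
    print(break_even_year(100_000, 5_000, 20, 1_000))  # prints 7
```

With these assumed numbers, paying per use stays cheaper for the first six years (100,000 / (20,000 - 5,000) is about 6.7 years); the longer a customer expects to use the product, the more the ownership model catches up. For the manufacturer, the same numbers show revenue arriving spread over time instead of at the moment of sale.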

A great example of this concept is Spotify or other streaming services. You do not pay for the disc/box anymore; you pay for the usage, and the model is a win-win for consumers (many titles) and producers (massive reach).

Although Product Service Systems will probably reach consumers later, the most significant potential currently lies in B2B business models, e.g., transportation as a service and special equipment usage as a service. Examples are popping up in various industries.

My presentation focused on three steps that manufacturing companies need to consider now and in the future when moving to a Product Service System.

Step 1: Get digitally connected to your product and customer

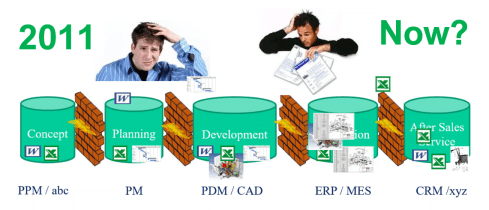

A foundational step companies must take is to create a digital infrastructure that supports all stakeholders in the product service offering. Currently, many companies have a siloed approach where each discipline, such as Marketing/Sales, R&D, Engineering and Manufacturing, has its own systems.

Digital Transformation in the PLM domain is needed here – where are you on this level?

But it is not only the technical silos that impede end-to-end visibility of information. If there are no business targets to create and maintain end-to-end information sharing, you cannot expect it to happen.

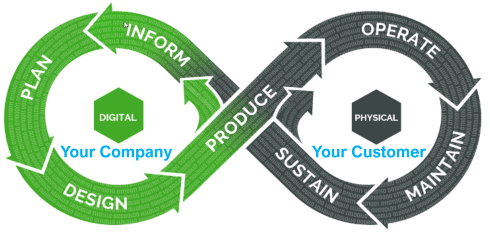

Therefore, companies should invest in the digitalization of their ways of working, implementing an end-to-end digital thread AND changing their linear New Product Development process into a customer-driven DevOp approach. The PTC image below shows how to imagine an end-to-end connected environment.

In a Product Service System, the customer is the solution user, and the solution provider is responsible for the uptime and improvement of the solution over time.

As an upcoming bonus and a must, companies need to use AI to run their Product Service System, as it will improve their understanding of customers and trends. Don't forget that AI (and Digital Twins) runs best on reliable data.

Step 2: From Product to Experience

A Product Service System is not business as usual by providing products with some additional services. Besides concepts such as Digital Thread and Digital Twins of the solution, there is also the need to change the company’s business model.

In the old way, customers buy the product; in the Product Service System, the customer becomes a user. We should align the company and business to become user-centric and keep the user inspired by the experience of the Product Service System.

In this context, there are two interesting articles to read:

- Jan Bosch: From Agile to Radical: Business Model

- Chris Seiler: How to escape the vicious circle in times of transformation?

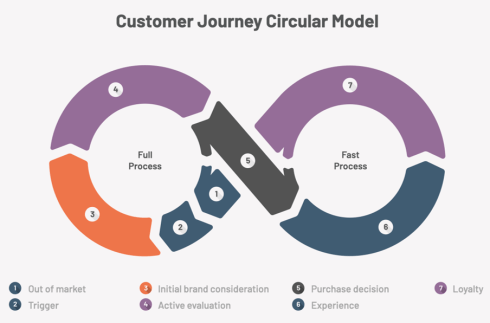

The change in business model means that companies should think about a circular customer journey.

As the company will remain the product owner, it is crucial to understand what happens when the customers stop using the service or how to ensure maintenance and upgrades.

In addition, to keep the customer satisfied, it remains vital to discover the customer KPIs and how additional services could potentially improve the relationship. Again, AI can help find relationships that are not yet digitally established.

Step 2, from product to experience, can already have a significant impact on organizations. The traditional salesperson's role will disappear and be replaced by excellence in marketing, services and product management.

This will not happen quickly as, besides the vision, there needs to be an evolutionary path to the new business model.

Therefore, companies must analyze their portfolio and start experimenting with a small product, converting it into a product service system. Starting simple allows companies to learn and be prepared for scaling up.

A Product Service System also influences a company’s cash flow as revenue streams will change.

When scaling up slowly, the company might be able to finance this transition itself. Another option, already happening, is for a third party to finance the Product Service System: think about car leasing, power by the hour, or some industrial equipment vendors.

Step 3: Towards a doughnut economy?

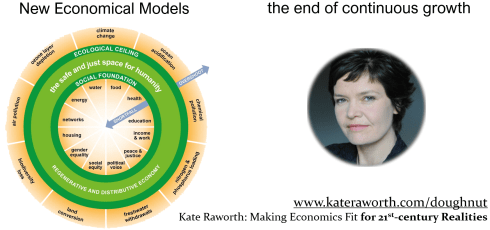

The last step is probably a giant step or even a journey. An economic mindset shift is needed from the ever-growing linear economy towards an economy flourishing for everyone within economic, environmental and social boundaries.

Unlimited growth is the biggest misconception on a planet reaching its borders. Either we need more planets, or we need to adjust our society.

In that context, I read the book “Doughnut Economics” by Kate Raworth, a recognized thought leader who explains how a future economic model, including a circular economy, can flourish, and you will be happy.

But we must abandon the old business models and habits – there will be a lot of resistance to change before people are forced to change. This change can take generations as the outside world will not change without a reason, and the established ones will fight for their privileges.

It is a logical process in which people and boundaries will learn to find a new balance. Will it be a Doughnut Economy, or have we overlooked some other bright concepts?

Conclusion

The week after Black Friday, and hopefully the month after all the Christmas presents, it is time to formulate your good intentions for 2025. As humans, we should consume less; as companies, we should steer towards a sustainable future by exploring the potential of the Product Service System and beyond.

It was a great pleasure to attend my favorite vendor-neutral PLM conference in Gothenburg this year: approximately 150 attendees, most of them with expertise in the PLM domain.

We had the opportunity to learn new trends, discuss reality, and meet our peers.

The theme of the conference was: Value Drivers for Digitalization of the Product Lifecycle, a topic I have been discussing in my recent blog posts, as we need to help and educate companies to understand the importance of digitalization for their business.

The two-day conference covered various lectures (view the agenda here), and of course AI was a topic in half of the lectures, giving the attendees a touch of reality.

In this first post, I will cover the main highlights of Day 1.

Value Drivers for Digitalization of the Product Lifecycle

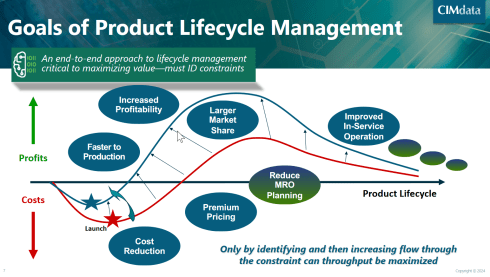

As usual, the conference started with Peter Bilello, president & CEO of CIMdata, stressing again that when implementing a PLM strategy, the maximum result comes from a holistic approach, meaning: look at the big picture and don't focus on just one topic.

It was interesting to see the classic graph (below) explaining the benefits of the end-to-end approach again. I believe it is still valid for most companies; however, as I shared in my session the next day, implementing the concepts of a Product Service System will require more of a DevOp type of graph (more next week).

Next, Peter went through the CIMdata’s critical dozen with some updates. You can look at the updated 2024 image here.

Some of the changes: Digital Thread and Digital Twin have been merged, as Digital Twins do not run on documents. And instead of focusing on Artificial Intelligence only, CIMdata introduced Augmented Intelligence, as we should also consider solutions that augment human activities, not just replace them.

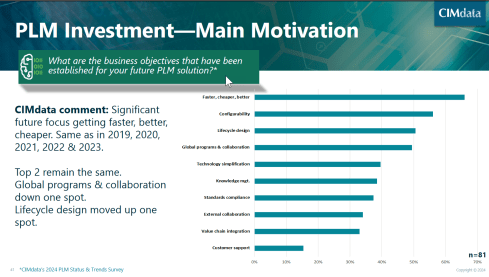

Peter also shared the results of a recent PLM survey where companies were asked about their main motivation for PLM investments. I found the result a little discouraging for several reasons:

The number one topic is still faster, cheaper and better: almost 65 % of the respondents see this as their priority. This illustrates that Sustainability has not yet reached a level of urgency; perhaps the topic is hidden in standards compliance.

Many of the companies with Sustainability in their mission should understand that a digital PLM infrastructure is the foundation for most initiatives, like Lifecycle Analysis (LCA). Sustainability is more than a part of standards compliance, if it was considered at all.

The second observation, disappointing for the understanding of PLM, is that only 15 % of the companies mentioned customer support. Again, connecting your products to your customers is the first step to a DevOp approach, and you need to be able to optimize your product offering to what the customer really wants.

Digital Transformation of the Value Chain in Pharma

The second keynote was from Anders Romare, Chief Digital and Information Officer at Novo Nordisk. Anders has been participating in the PDT conference in the past. See my 2016 PLM Roadmap/PDT Europe post, where Anders presented on behalf of Airbus: Digital Transformation through an e2e PLM backbone.

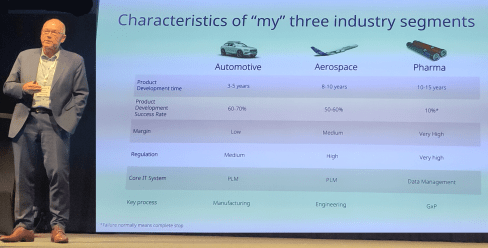

Anders started by sharing some of the main characteristics of the companies he has worked for: Volvo, Airbus and now Novo Nordisk. It is interesting to compare these characteristics, as they say a lot about each industry's focus. See below:

Anders is now responsible for digital transformation in Novo Nordisk, which is a challenge in a heavily regulated industry.

One of the focus areas for Novo Nordisk in 2024 is also Artificial Intelligence, as you can see from the image.

Like many others at this conference, Anders mentioned that AI can only be applied when it runs on top of accurate data.

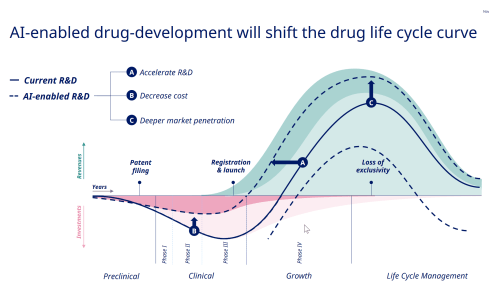

Understanding the potential of AI, they identified 59 areas where AI can create value for the business, and it is interesting to compare the traditional PLM curve Peter shared in his session with the potential AI-enabled drug-development curve as presented by Anders below:

Next, Anders shared some of the example cases of this exploration, and if you are interested in the details, visit their tech.life site.

Regarding the engineering framing of PLM, it was interesting to hear Anders, who had a long history in PLM before joining Novo Nordisk, reply to a question from the audience that he would never talk about PLM at the management level. This is very much aligned with my Don't mention the P** word post.

A Strategy for the Management of Large Enterprise PLM Platforms

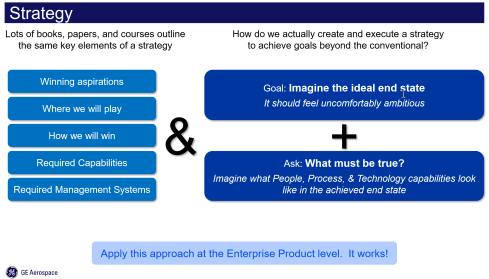

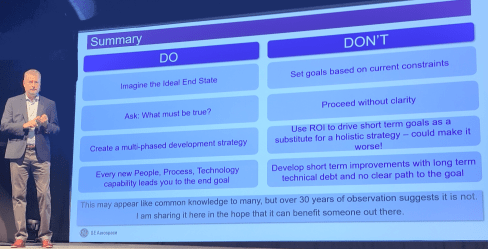

One of the highlights for me on Day 1 was Jorgen Dahl's presentation. Jorgen, a senior PLM director at GE Aerospace, shared their journey towards a single PLM approach, needed due to changes in the business. Addressing the need for a digital thread also comes with an increased need for uptime.

I like his strategy-to-execution approach, shown in the image below, as it contains the most important topics: the business vision and understanding, imagining the end state, and What must be True?

In my experience, the three blocks are iteratively connected. When describing the strategy, you might not be able to identify the required capabilities and management systems yet.

But then, when you start to imagine the ideal end state, you will have to consider them. And for companies, it is essential to be ambitious or, as Jorgen stated, uncomfortably ambitious: aim for 75 % to almost 100 % to be true. Also, asking What must be True? is an excellent way to involve people and let them creatively explore the next steps.

Note: This approach does not provide all the details, as it will be a multiyear journey of learning and adjusting towards the future. Therefore, the strategy must be aligned with the culture to avoid continuous top-down governance of the details. In that context, Jorgen stated:

“Culture is what happens when you leave the room.”

It is a more positive statement than Peter Drucker’s famous quote: “Culture eats strategy for breakfast.”

Jorgen’s concluding slide may look like common knowledge; however, I believe his easy-to-digest points will help all organizations step back, look at their initiatives, and identify where they can improve.

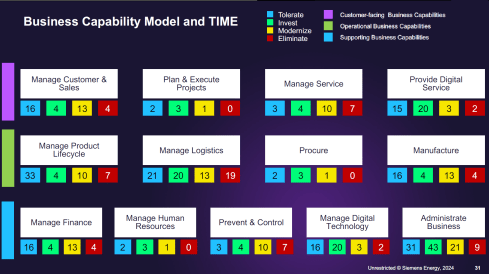

How a Business Capability Model and Application Portfolio Management Support Through Changing Times

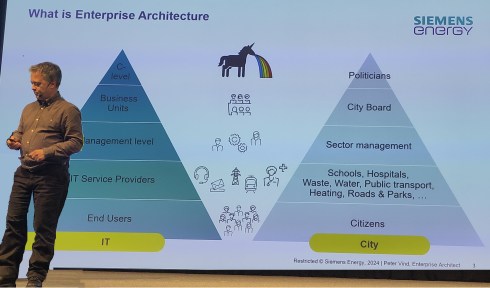

Peter Vind’s presentation connected nicely to the presentation from Jorgen Dahl. Peter, an enterprise architect at Siemens Energy, started by explaining where the enterprise architect fits in an organization, comparing the organization to a city.

In his entertaining session, he mentioned he has to deal with the unicorns at the C-level, who, like politicians in a city, sometimes have the most “innovative” ideas – can they be realized?

Peter explained how they used Business Capability Modeling as Siemens Energy went through various business stages: first the carve-out from Siemens AG and later the merger with Siemens Gamesa. Their challenge was to understand which capabilities remain and which are new or overlapping, during both the carve-out and the merger.

The business capability modeling leads to a classification of the applications used at different levels of the organization, such as customer-facing, operational, or supporting business capabilities.

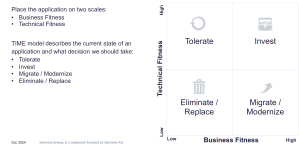

Next, for the lifecycle of the applications, the TIME approach (Tolerate, Invest, Migrate, Eliminate) was used, meaning that each application was assessed on its business fitness and its technical fitness. Click on the diagram to see the details.

The result could look like the mapping shown below: a comprehensive overview of where the action is.

It is a rational approach; however, Peter mentioned that we should also be aware of the HiPPOs in an organization. If there is a HiPPO (Highest Paid Person’s Opinion) in play, you might face a political battle too.

It was a great educational session illustrating the need for an Enterprise Architect, the value of business capability modeling and the TIME concept.
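To make the TIME idea a bit more tangible, here is a minimal sketch that maps each application to a quadrant based on two scores. The 0 to 1 scoring scale, the 0.5 threshold, the quadrant assignment (following one common reading of the TIME model) and the application names are all assumptions for illustration; this is not Siemens Energy’s actual method or data.

```python
from dataclasses import dataclass

# Assumption: both fitness scores are normalized to the range 0.0-1.0.
THRESHOLD = 0.5


@dataclass
class Application:
    name: str
    business_fitness: float   # how well the application supports business capabilities
    technical_fitness: float  # technical quality: stack, maintainability, risk


def time_category(app: Application, threshold: float = THRESHOLD) -> str:
    """Map an application to a TIME quadrant (one common interpretation)."""
    business_ok = app.business_fitness >= threshold
    technical_ok = app.technical_fitness >= threshold
    if business_ok and technical_ok:
        return "Invest"      # valuable and technically sound
    if business_ok:
        return "Migrate"     # valuable, but running on a weak technical base
    if technical_ok:
        return "Tolerate"    # technically fine, limited business value
    return "Eliminate"       # neither valuable nor technically sound


# Hypothetical portfolio, for illustration only.
portfolio = [
    Application("Legacy PDM", business_fitness=0.8, technical_fitness=0.2),
    Application("Enterprise PLM", business_fitness=0.9, technical_fitness=0.8),
    Application("Departmental BOM tool", business_fitness=0.3, technical_fitness=0.7),
    Application("Old spreadsheet macros", business_fitness=0.2, technical_fitness=0.1),
]

for app in portfolio:
    print(f"{app.name}: {time_category(app)}")
```

In practice, the scores would come from assessments by business and IT stakeholders, and, as Peter pointed out, a HiPPO can still overrule the outcome.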

And some more …

There were several other exciting presentations during day 1; however, as not all presentations are publicly available, I cannot discuss them in detail. The summaries below are based on my notes.

Driving Trade Compliance and Efficiency

Peter Sandeck, Director of Project Management at TE Connectivity, shared what they did to motivate engineers to endorse their Jurisdiction and Classification Assessment (JCA) process. Peter showed how, through a Minimum Viable Product (MVP) approach and by listening to the end-users, they reached a higher Customer Satisfaction (CSAT) score after several iterations of the solution developed for the JCA process.

This approach is an excellent example of an agile method in which engineers are involved. My remaining question is whether, in the short term, the same engineers will also be pushed to perform lifecycle assessments. That means more work; however, I believe that if you make it personal, the same MVP approach could work again.

Value of Model-Based Product Architecture

Jussi Sippola, Chief Expert, Product Architecture Management & Modularity at Wärtsilä, presented an excellent story related to the advantages of a more modular product architecture. Historically, products were delivered based on customer requirements through the order fulfillment process; now, in parallel, there is the portfolio management process, defining the platform of modules, features and options.

Jussi mentioned that they were able to reduce the number of parts by 50 % while still maintaining the same level of customer capabilities. In addition, thanks to modularity, they were able to reduce the production lead time by 40 % – essential numbers if you want to remain competitive.

Conclusion

Day 1 was a day where we learned a lot as an audience, and in addition, the networking time and dinner in the evening were precious for me and, I assume, also for many of the participants. In my next post, we will see more about new ways of working, the AI dream and Sustainability.

I have not written much new content recently, as I feel that so much has already been said and written on the conceptual side. A way to confuse people is to overload them with information. We see it in our daily lives and in our PLM domain.

With so much information, people become apathetic, and you will hear only the loudest and most straightforward solutions.

One desire may be that we should go back to the past when everything was easier to understand—are you sure about that?

This attitude has often led to companies doing nothing, not taking any risks, and just providing plasters and stitches when things become painful. Strategic decision-making is the key to avoiding this trap.

I just read this article in the Guardian: The German problem? It is an analog country in a digital world.

The article also describes the lessons learned from the UK (quote):

Britain was the dominant economic power in the 19th century on the back of the technologies of the first Industrial Revolution and found it hard to break with the old ways even when it should have been obvious that its coal and textile industries were in long-term decline.

As a result, Britain lagged behind its competitors. One of these was Germany, which excelled in advanced manufacturing and precision engineering.

Many technology concepts originated in Germany in the past, and even now we are talking about Industrie 4.0 and Catena-X as advanced concepts. But are they implemented? Have companies changed their culture and the ways of working required for a connected and digital enterprise?

Technology is not the issue.

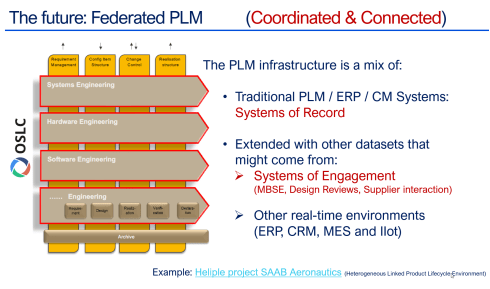

The current PLM concepts, which discuss a federated PLM infrastructure based on connected data, have become increasingly stable.

Perhaps people are using different terminologies and focusing on specific aspects of a business; however, all these (technical) discussions talk about similar business concepts:

- Prof. Dr. Jorg W. Fischer, managing partner at Steinbeis – Reshape Information Management (STZ-RIM), writes a lot about a modern data-driven infrastructure, mainly in the context of PLM and ERP. His recent article: The Freeway from PLM to ERP.

- Oleg Shilovitsky, CEO of OpenBOM, has a never-ending flow of information about data and infrastructure concepts and an understandable focus on BOMs. One of his recent articles: PLM 2030: Challenges and Opportunities of Data Lifecycle Management.

- Matthias Ahrens, enterprise architect at Forvia / Hella, often shares interesting concepts related to enterprise architecture relevant to PLM. His latest share: Think PLM beyond a chain of tools!

- Dr. Yousef Hooshmand, PLM lead at NIO, shared his academic white paper and experiences at Daimler and NIO through various presentations. His publication can be found here: From a Monolithic PLM Landscape to a Federated Domain and Data Mesh.

- Erik Herzog, technical fellow at SAAB Aeronautics, has been active for the past two years, sharing the concept of federated PLM applied in the Heliple project. His latest publication post: Heliple Federated PLM at the INCOSE International Symposium in Dublin

Several more people are sharing their knowledge and experience in the domain of modern PLM concepts, and you will see that technology is not the issue. The hype of AI may become an issue.

From IT focus to Business focus

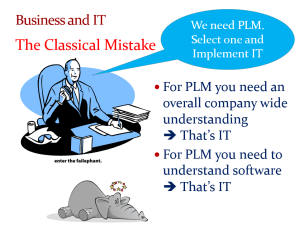

One issue I observed at several companies I worked with is that the PLM’s responsibility is inside the IT organization – click on the image to get the mindset.

This situation is a historical one, as in the traditional PLM mode, the focus was on the on-premise installation and maintenance of a PLM system. Topics like stability, performance and security are typical IT topics.

IT departments have often been considered cost centers, and their primary purpose is to keep costs low.

Does the slogan ONE CAD, ONE PLM or ONE ERP resonate in your company?

It is all a result of trying to standardize a company’s tools, which is not a bad approach in a coordinated enterprise where information is exchanged in documents and BOMs. Still, back in 2011, I already wrote about the tension between business and IT in my post “PLM and IT—love/hate relation?”

Now, modern PLM is about a connected infrastructure where accurate data is the #1 priority.

Most of the new processes will be implemented in value streams, where the data is created in SaaS solutions running in the cloud. In such environments, business should be leading, and of course, where needed, IT should support the overall architecture concepts.

In this context, I recommend an older but still valid article: The Changing Role of IT: From Gatekeeper to Business Partner.

This changing role for IT should come in parallel to the changing role for the PLM team. The PLM team needs to first focus on enabling the new types of businesses and value streams, not on features and capabilities. This change in focus means they become part of the value creation teams instead of a cost center.

In successful PLM implementations, I have seen the team report directly to the CEO, CTO or CIO, no longer as a subdivision of the larger IT organization.

Where is your PLM team?

Is it a cost center or a value-creation engine?

The role of business leaders

As mentioned before, with a PLM team reporting to the business, communication should transition from discussing technology and capabilities to focusing on business value.

I recently wrote about this need for a change in attitude in my post: PLM business first. The recommended flow is nicely represented in the section “Starting from the business.”

Image: Yousef Hooshmand.

Business leaders must realize that a change is needed due to upcoming regulations, like ESG and CSRD reporting, the Digital Product Passport and the need for product Life Cycle Analysis (LCA), which is more than just a change of tools.

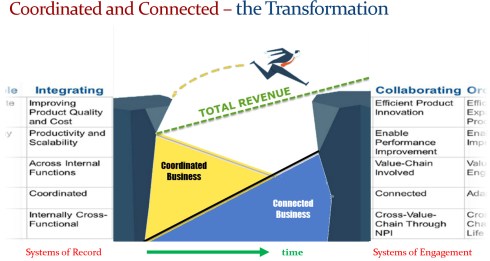

I have often referred to the diagram created by Mark Halpern from Gartner in 2015. Below, you can see an adjusted diagram for 2024, including AI.

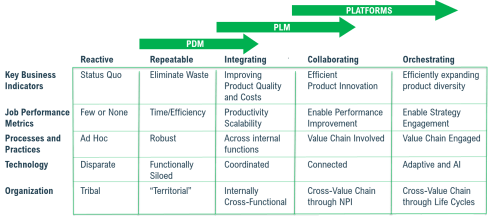

It looks like we are moving from Coordinated technology toward Connected technology. This seems easy to frame. However, my experience discussing this step in the past four to five years has led to the following four lessons learned:

- It is not a transition from Coordinated to Connected.

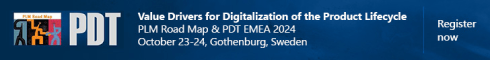

At this step, a company has to start in a hybrid mode – there will always remain Coordinated ways of working connected to Connected ways of working. This is the current discussion related to Federated PLM and the introduction of the terms System of Record (traditional systems / supporting linear ways of working) and Systems of Engagement (connected environments targeting real-time collaboration in their value chain) - It is not a matter of buying or deploying new tools.

Digital transformation is a change in ways of working and the skills needed. In traditional environments, where people work in a coordinated approach, they can work in their discipline and deliver when needed. People working in the connected approach have different skills. They work data-driven in a multidisciplinary mode. These ways of working require modern skills. Companies that are investing in new tools often hesitate to change their organization, which leads to frustration and failure. - There is no blueprint for your company.

Digital transformation in a company is a learning process, and therefore, the idea of a digital transformation project is a utopia. It will be a learning journey where you have to start small with a Minimum Viable Product approach. Proof of Concepts is a waste of time as they do not commit to implementing the solution. - The time is now!

The role of management is to secure the company’s future, which means having a long-term vision. And as it is a learning journey, the time is now to invest and learn using connected technology to be connected to coordinated technology. Can you avoid waiting to learn?

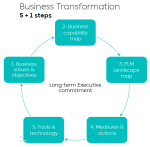

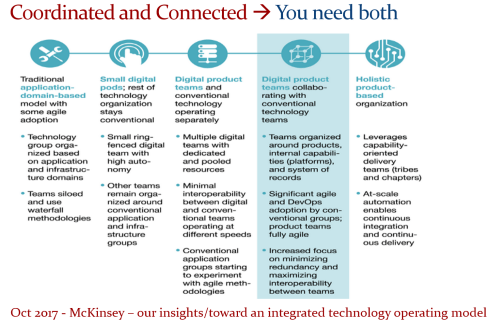

I have shared the image below several times as it is one of the best blueprints for describing the needed business transition. It originates from a McKinsey article that does not explicitly refer to PLM, again demonstrating it is first about a business strategy.

It is up to the management to master this process and apply it to their business in a timely manner. If not, the company and all its employees will be at risk of not having a sustainable business. Here, the word sustainable has a double meaning: sustainable for the company and its employees/shareholders, and sustainable for the outside world, the planet.

Want to learn and discuss more?

Currently, I am preparing my session for the upcoming PLM Roadmap/PDT Europe conference on 23 and 24 October in Gothenburg. As I mentioned in previous years, this conference is my preferred event of the year as it is vendor-independent, and all participants are active in the various phases of a PLM implementation.

If you want to attend the conference, look here for the agenda and registration. I look forward to discussing modern PLM and its relation to sustainability with you. More in my upcoming posts leading up to the conference.

Conclusion

Digital transformation in the PLM domain is progressing slowly in many companies because it is complex. It is not an easy next step, as companies have to deal with different types of processes and skills. Therefore, a different organizational structure is needed. A decision to start with a different business structure always begins at the management level, driven by business goals. The technology is there, waiting for the business to lead.

Those who have been following my blog posts over the past two years may have discovered that I consistently use the terms “System of Engagement” and “System of Record” in the context of a Coordinated and Connected PLM infrastructure.

Understanding the distinction between ‘System of Engagement‘ and ‘System of Record‘ is crucial for comprehending the type of collaboration and business purpose in a PLM infrastructure. When explored in depth, these terms will reveal the underlying technology.

The concept

A year ago, I had an initial discussion with three representatives of a typical system of engagement. I spoke with Andre Wegner from Authentise, MJ Smith from CoLab and Oleg Shilovitsky from OpenBOM. You can read and see the interview here: The new side of PLM? Systems of Engagement!

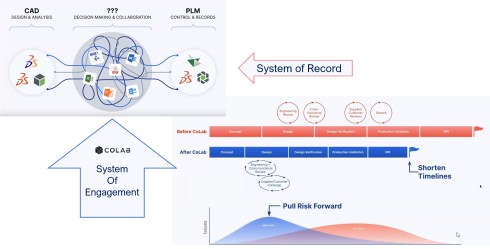

As a follow-up, I had a more detailed interview with Taylor Young, the Chief Strategy Officer of CoLab, early this year.

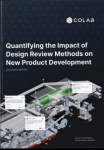

CoLab introduced the term Design Engagement System (DES), a new TLA. Based on a survey among 250 global engineering leaders, we discussed the business impact and value of their DES System of Engagement.

You can read the discussion here: Where traditional PLM fails.

The business benefits

I like that CoLab’s external messaging focuses on the business capabilities and opportunities, which reminded me of the old Steve Jobs recording: Don’t talk about the product!

There are so many discussions on LinkedIn about the usage of various technologies and concepts without a connection to the business. I’ll let you explore and decide.

It’s worth noting that while the ‘System of Engagement’ offers significant business benefits, it’s not a standalone solution. The right technology is crucial for translating these benefits into tangible business results.

This was a key takeaway from my follow-up discussion with MJ Smith, CMO at CoLab, about the difference between Configuration and Customization.

Why configurability?

Hello MJ, it has been a while since we spoke, and this time, I am curious to learn how CoLab fits in an enterprise PLM infrastructure, zooming in on the aspects of configuration and customization.

Using configurability, we can make a smaller number of features work for more use cases or business processes. Users do not want to learn and adopt many different features, and a system of engagement should make it easy to participate in a business process, even for infrequent or irregular users.

In design review, this means cross-functional teams and suppliers who are not the core users of CAD or PLM.

I agree, and for that reason, we see the discussion of Systems of Record (not user-friendly and working in a coordinated mode) and Systems of Engagement (focus on the end-user and working in a connected mode). How do you differentiate with CoLab?

From a technology perspective, as a System of Engagement, CoLab wants to eliminate complex, multi-step workflows that require users to navigate between 5-10+ different point solutions to complete a review.

For example:

- SharePoint for sending data

- CAD viewers for interrogating models

- PowerPoint for documenting markups – using screenshots

- Email or Teams meetings for discussing issues

- Spreadsheets for issue tracking

- Traditional PLM systems for consolidation

As mentioned before, the participants can be infrequent or irregular users from different companies. This gap exists today, with only 20% of suppliers and 49% of cross-functional teams providing valuable design feedback (see the 2023 report here). To prevent errors and increase design quality, NPD teams must capture helpful feedback from these SMEs, many of whom only participate in 2 to 3 design reviews each year.

Configuration and Customization

Back to the interaction between the System of Engagement (CoLab) and Systems of Record, in this case probably the traditional PLM system. I think it is important to first define the differences between Configuration and Customization.

These would be my definitions:

- Configuration involves setting up standard options and features in software to meet specific needs without altering the code, such as adjusting settings or using built-in tools.

- Customization involves modifying the software’s code or adding new features to tailor it more precisely to unique requirements, which can include creating custom scripts, plugins, or changes to the user interface.

Both configuration and customization activities can be complex depending on the system we are discussing.
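To make the two definitions tangible, here is a minimal sketch of a hypothetical design-review tool. Configuration changes settings that drive built-in behavior, while customization adds new code through an extension hook. All names (review_due_days, require_supplier_signoff, register_check, validate_review) are invented for illustration and do not reflect the API of CoLab or of any PLM vendor.

```python
from typing import Callable

# Configuration: built-in behavior driven by settings, no code changes needed.
DEFAULT_CONFIG = {
    "review_due_days": 10,
    "require_supplier_signoff": False,
}

# Customization: new code plugged into the tool through a (hypothetical) extension hook.
CUSTOM_CHECKS: list[Callable[[dict], list[str]]] = []


def register_check(check: Callable[[dict], list[str]]) -> Callable[[dict], list[str]]:
    """Register customer-specific code that runs on every review."""
    CUSTOM_CHECKS.append(check)
    return check


def validate_review(review: dict, config: dict) -> list[str]:
    """Return the issues found for a design review."""
    issues = []
    # Built-in rules, switched and tuned purely by configuration.
    if review["days_open"] > config["review_due_days"]:
        issues.append("review overdue")
    if config["require_supplier_signoff"] and not review["supplier_signed_off"]:
        issues.append("supplier sign-off missing")
    # Customer-specific rules, added as code.
    for check in CUSTOM_CHECKS:
        issues.extend(check(review))
    return issues


@register_check
def no_open_comments(review: dict) -> list[str]:
    # Example customization: block a review while comments are unresolved.
    return ["unresolved comments"] if review["open_comments"] else []


customer_config = {**DEFAULT_CONFIG, "require_supplier_signoff": True}
review = {"days_open": 12, "supplier_signed_off": False, "open_comments": 3}
print(validate_review(review, customer_config))
```

The configuration only touches data, so it survives upgrades easily; the custom check is code the customer or vendor has to maintain. If many customers asked for the same check, it could be promoted to a configuration flag.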

It’s also interesting to consider how configurability and customization can go hand in hand. What starts as a customization for one customer could become a configurable feature later.

For software providers like CoLab, the key is to stay close to your customers so that you can understand the difference between a niche use case – where customization may be the best solution – vs. something that could be broadly applicable.

In my definition of customization, I first thought of connecting to the various PLM and CAD systems. Are these interfaces standardized, or are they open to configuration and customization?

CoLab offers out-of-the-box integrations with PLM systems, including Windchill, Teamcenter, and 3DX Enovia. By integrating PLM with CoLab, companies can share files straight from PLM to CoLab without having to export or convert to a neutral format like STP.

By sharing CAD from PLM to CoLab, companies make it possible for non-PLM users – inside the company and outside (e.g., suppliers, customers) to participate in design reviews. This use case is an excellent example of how a system of engagement can be used as the connection point between two companies, each with its own system of record.

CoLab can also send data back to PLM. For example, you can see whether there is an open review on a part from within Windchill PLM and how many unresolved comments exist on a file shared with CoLab from PLM. Right now, there are some configurable aspects to our integrations – such as file-sharing controls for Windchill users.

We plan to invest more in the configurability of the PLM to DES interface. We will also invest in our REST API, which customers can use to build custom integrations if they like, instead of using our OOTB integrations.

To get an impression, look at this 90-second demo of CoLab’s Windchill integration for reference.

IP security is always a topic when companies interact with each other, in particular in a connected mode. Can you tell us more about how CoLab deals with IP protection?

CoLab has enterprise customers, like Schaeffler, implementing attribute-based access controls so that users can only access files in CoLab that they would otherwise have access to in Windchill.

We also have customers who integrate CoLab with their ERP system to auto-provision guest accounts for suppliers so they can participate in design reviews.

This means that the OEM is responsible for identifying which data is shared within CoLab. I am curious: Are these kinds of IP-sharing activities standardized because you have a configurable interface to the PLM/ERP, or is this still a customization?

I am asking because this point comes up in the Federated PLM Interest Group, where we discuss using OSLC as one of the connecting interfaces between the System of Record and the System of Engagement (Modules). It is still early days, as you can read in this article, but we see similarly encouraging results. Is this a topic of attention for CoLab, too?

The interface between CoLab and PLM is the same for every customer (not custom) but can be configured with attribute-based access controls. End users who have access must explicitly share files. Further access controls can also be put in place on the CoLab side to protect IP.

We are taking a similar approach to integration as outlined by OSLC. The OSLC concept is interesting to us, as it appears to provide a framework for better supporting concepts such as versions and variants. The interface delegation concepts are also of interest.
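The access model MJ describes combines two ideas: nothing is visible until an authorized user explicitly shares it, and attribute-based rules mirror what a user could access in the System of Record. A minimal sketch of such an attribute-based check follows; the attributes (programs, export_cleared, explicitly_shared) and the rules are hypothetical and do not represent CoLab’s or Windchill’s actual implementation or API.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    name: str
    programs: set[str] = field(default_factory=set)  # hypothetical: programs the user may access in PLM
    export_cleared: bool = False                     # hypothetical clearance attribute


@dataclass
class SharedFile:
    name: str
    program: str                     # attribute mirrored from the System of Record
    export_controlled: bool = False
    explicitly_shared: bool = False  # nothing is visible until an authorized user shares it


def can_view(user: User, f: SharedFile) -> bool:
    """Attribute-based check: every rule must pass before access is granted."""
    rules = [
        f.explicitly_shared,                                # shared by a user with access
        f.program in user.programs,                         # same scope as in the PLM system
        (not f.export_controlled) or user.export_cleared,   # extra IP-protection rule
    ]
    return all(rules)


engineer = User("engineer", programs={"Gearbox-X"}, export_cleared=True)
supplier = User("supplier", programs={"Gearbox-X"})
housing = SharedFile("housing.prt", program="Gearbox-X", explicitly_shared=True)
rotor = SharedFile("rotor.prt", program="Gearbox-X", export_controlled=True, explicitly_shared=True)

print(can_view(supplier, housing), can_view(supplier, rotor), can_view(engineer, rotor))
```

Expressing access as rules over attributes, rather than per-user lists, is what allows the same interface to be configured per customer instead of customized.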

Conclusion

It was great to dive deeper into the complementary value of CoLab as a typical System of Engagement. Their customers are end-users who want to collaborate efficiently during design reviews. By letting their customers work in a dedicated but connected environment, CoLab frees them from working in a traditional, more administrative PLM system.

Interfacing between these two environments will be an interesting topic to follow in the future. Will it be, for example, OSLC-based, or do you see other candidates to standardize?

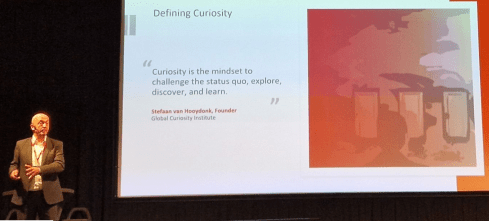

The past two weeks have been a fascinating journey, delving into the intersection of Curiosity, Innovation, and modern PLM. Whereas many PLM-related posts are about the best products and the best architectures, there is also the “soft” angle, people and culture, which I believe is the most important one to start from. Without the right people and the right mindset, every PLM implementation is bound to fail.

First, I worked with Stefaan van Hooydonk, the founder of the Global Curiosity Institute and author of the bestselling book The Workplace Curiosity Manifesto, on the article Curiosity as Guiding Principle for PLM Change, which explained the importance of Curiosity in the context of sustainable product development (PLM).

The intersection between Curiosity and modern PLM is Systems Thinking.

Systems Thinking: A Crucial 21st Century Skill for Sustainable Product Development, Driven by Curiosity.

Last week, I had the privilege of attending the CADCAM Lab conference in Ljubljana. In addition to my keynote, I was inspired by several presentations on the various aspects of digital transformation: the tools, possible enablement, and the needed mindset.

One of the highlights was the talk by Tanja Mohorič, the director for innovation culture and European projects at the Slovene corporation Hidria and director of the Slovene Automotive Cluster ACS. Tanja shared her insights on fostering Innovation, a crucial driver for a sustainable business, as companies need to innovate in order to remain relevant.

One of the intersections between Innovation and modern PLM is Curiosity.

Innovation is defined as the process of bringing about new ideas, methods, products, services, or solutions that have significant positive impact and value.

Let’s zoom in on these two themes.

Curiosity

I knew Stefaan from his keynote at the PLM Road Map / PDT Europe 2022 conference; you can read my review from his session here: The week after PLM Roadmap / PDT Europe 2022.

It was an eye-opener for many of us focusing on the PLM domain. Stefaan’s message is that Curiosity is not only a personal skill; it is also something of a company’s culture. And in this age of rapid change, companies that embrace a culture of openness are outperforming their peers.

This time, on Earth Day (April 22nd), Stefaan organized an interactive webinar titled “Curiosity and the Planet,” which addressed the need for new technologies and approaches to living in a sustainable future. With my Green PLM-twisted mind, I immediately saw the overlap and intersection between our missions.

We decided to write an article together on this topic, in which we described a pathway for companies that want to develop more sustainable products or solutions, using Curiosity as one of the means.

As companies need to find their path to the digitization of their PLM infrastructure due to regulations, ESG reporting, and potentially the introduction of digital product passports and the circular economy, they need to act fast in an area not familiar to them.

Here, a curious organization will outperform the traditional, controlled enterprise.

You can read the full article here: Curiosity as Guiding Principle for PLM Change.

I know that in our hasty society not everyone will read the article, although I think you should. For those who skip the details, I close this topic with a quote from the article:

We define Curiosity as the mindset to challenge the status quo, explore, discover and learn.

Curiosity is often considered a trait linked to an individual, as exemplified by the constant questions of children or scientists. Groups of people or organizations can also be curious collectively. Research from INSEAD studying the level of Curiosity across the executive team uncovered that these teams are superior in two distinct ways: first, they are better at future Innovation, and second, they are better at optimizing their current operations. Curiosity on the executive team leads not only to future success but also to better short-term business results. Such teams create the perfect environment for their teams to thrive.

Change, however, is hard, and people are often left to their own devices; they prefer to perpetuate the known past rather than invite an unknown future. Curiosity helps us lean into uncertainty. It encourages us to slow down and observe whether the status quo we hold dear is still relevant. Curiosity is the prime catalyst for change. It invites open questions.

Innovation

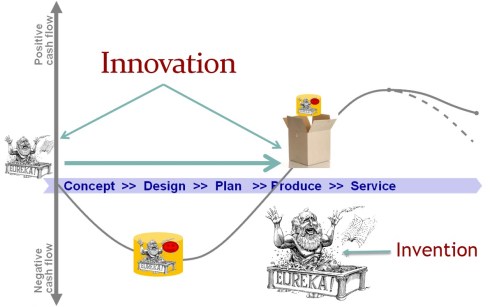

There is often confusion between Invention and Innovation. Whereas invention is the "Eureka" moment when a new idea takes shape, Innovation is the process of bringing new ideas, methods, products, services, or solutions to the market.

I presented this topic at the 2013 Product Innovation Conference in Berlin. The title of the presentation was PLM Loves Innovation, and you can find it here on SlideShare.

Looking back at the presentation, I realized we were thinking linearly.

Concepts such as an iterative approach, DevOps and a Minimum Viable Product (MVP) were not yet part of our thinking. Since then, digitization has changed how Innovation is brought to the market, which made Tanja Mohorič's presentation a welcome refresh of the mind.

Tanja's lecture was illustrated with various quotes, which you can find in her presentation. Here are a few examples:

If you really look closely, most overnight successes took a long time. (Steve Jobs)

If you read Steve Jobs’s history at Apple, you will discover it has been a long journey. Although we like to praise the hero, there were many other, less visible people and patents involved in bringing Apple’s Innovation to the market.

Innovation is the ability to convert ideas into invoices (Lewis Duncan)

What I like about this quote is that it also shows the importance of having a positive financial outcome. Bringing Innovation to the market is a matter of timing. If you are too early, there is no market for your product (yet), and if you are too late, the market share or margin is gone.

Minds are like parachutes – they only function when open (Thomas Dewar)

Curiosity and an open mind remain essential. The parachute quote is one to remember, especially if you work in a traditional, established company. The risk of conformity is high, and a "we know best" attitude might be killing the company, as we have seen in management examples like Kodak, Nokia, and others.

Tanja’s presentation addressing the elements that support Innovation and those that kill Innovation can be found here: INNOVATION AS A PRECONDITION TO SUCCESS_Tanja Mohorič.

I want to close with one of the essential images she shared, which aligns closely with how I believe companies should consider their future: not as an evolutionary path to survive but as a journey to be inspired.

Coaching

As the CADCAM Group is a significant implementer of the Dassault Systèmes portfolio, my presentation about digital transformation in the PLM Domain was focused on their terminology and capabilities. You can find my presentation on SlideShare here.

However, the HOW part of digital transformation is more or less independent of the software. Here, it is about people, digital skills and new ways of working, which can be challenging for an existing enterprise as the linear business must continue. You might have seen the diagram below from previous blog posts/presentations.

The challenge I discussed with a few companies was how to apply this model to their own organization.

First of all, I am still promoting McKinsey's approach described in their 2017 article Toward an integrated technology operating model, even though at first glance it does not mention PLM directly. The way you work in your business should reflect the way you work with PLM and vice versa.

Where the traditional application-domain-based model reflects the existing coordinated business, the transformation takes place by learning to work first in small pods and later in digital product teams.

It seems evident that these new teams will be staffed with young, digital-native people. However, it remains crucial that these teams are coached by experienced people who help the team benefit from their vast experience.

It is like soccer. Having eleven highly skilled young players does not make a team successful. Success depends on the combination of the trainer and the coach, and it requires continuous interaction throughout the season.

Therefore, a question for your organization: “Where are your coaches and trainers?”

I addressed this topic in my post: PLM 2020- The next decade (4 challenges), where the topic of changing organizations and retiring people became apparent.

As a rule of thumb, I would claim that someone with unique knowledge who will retire in two to three years should be given the role of coach instead of an operational mission. It may look less effective; however, it will contribute to a smooth knowledge transition from a coordinated enterprise to a coordinated and connected enterprise.

Conclusion

It was great to be inspired by some of the "soft" topics related to modern PLM. We like to discuss the usage of drawings, intelligent part numbers, the EBOM, MBOM, and SBOM, or a cloud infrastructure. However, I enjoyed discussing perhaps the most essential parts of a successful PLM implementation: the people, their motivation and their attitude toward Curiosity and Innovation, that is, their willingness to be inspired by the future.

What do you see as the most important topic to address in the future?

I attended the PDSVISION forum for the first time. It is a two-day PLM event in Gothenburg organized by PDSVISION, PTC's largest implementer in the Nordics, which is also active in North America, the UK, and Germany.

The theme of the conference was "Master your Digital Thread", a hot topic that has been discussed at various events, like the recent PLM Roadmap/PDT Europe conference in November 2023.

The event drew over 200 attendees, showing the commitment of participants, primarily from the Nordics, to knowledge sharing and learning.

The diverse representation included industry leaders like Vestas, pioneers in Sustainable Energy, and innovative startups like CorPower Ocean, who are dedicated to making wave energy reliable and competitive. Notably, the common thread among these diverse participants was their focus on sustainability, a growing theme in PLM conferences and an essential item on every board’s strategic agenda.

I enjoyed the structure and agenda of the conference. The first day was filled with lectures and inspiring keynotes. The second day was a day of interactive workshops divided into four tracks, which were of decent length so we could really dive into the topics. As you can imagine, I followed the sustainability track.

Here are some of my highlights of this conference.

Catching the Wind: A Digital Thread From Design to Service

Simon Saandvig Storbjerg, unfortunately presenting remotely, gave an overview of the PLM-related challenges that Vestas is addressing. Vestas, the undisputed market leader in wind energy, is indirectly responsible for 231 million tonnes of CO2 per year.

One of the challenges in wind energy is the growing complexity and need for variants. With continuous innovation and the increasing size of wind turbines, it is challenging to achieve economies of scale.

As an example, Simon shared data on the Lost Production Factor, which was around 5% in 2009, fell to 2% in 2017, and is now growing again. This trend applies not only to Vestas but to all wind turbine manufacturers, as variability is increasing.

Vestas is introducing modularity to address these challenges. Last year, I reported on their modularity journey in the context of the biannual North European Modularity meeting held at Vestas in Ringkøbing; you can read the post here.

Simon also addressed the importance of Model-Based Definition (MBD), which is crucial if you want to achieve digital continuity between engineering and manufacturing. In this industry in particular, involving the entire value chain in MBD is a challenge, even though the benefits are proven and known. Changing people's skills and processes remains difficult.

The Future of Product Design and Development

The session led by PTC's Mark Lobo, General Manager of the PLM Segment, and Brian Thompson, General Manager of the CAD Segment, brought clarity to the audience on the joint roadmap of Windchill and Creo.

Mark and Brian highlighted the benefits of a Model-Based Enterprise and Model-Based Definition, which are musts if you want to be more efficient in your company and value chain.

The WHY is known (see the benefits described in the image). Realizing these benefits requires new ways of working, something organizations need to implement anyway when aiming to realize a digital thread or digital twin.

In addition, Mark addressed PTC’s focus on Design for Sustainability and their partner network. In relation to materials science, the partnership with Ansys Granta MI is essential. It was presented later by Ansys and discussed on day two during one of the sustainability workshops.

Mark and Brian elaborated on PTC's SaaS journey: the future Atlas platform and the current status of Windchill+ and Creo+, including a smooth transition path for existing customers to the new architecture.

And, of course, there was the topic of Artificial Intelligence.

Mark explained that PTC is exploring AI in various areas of the product lifecycle. On the design side, this includes validating requirements, optimizing CAD models and streamlining change processes; downstream, quality and maintenance predictions, improved operations, and streamlined field services and service parts are also part of the PTC Copilot strategy.

PLM combined with AI is certainly a topic where the applicability and benefits for better decision-making can be high.

PLM Data Merge in the PTC Cloud: The Why & The How