Recently, I have been reading some interesting posts beyond all the technical discussions related to PLM and AI. Is PLM becoming obsolete? Are we heading to a new type of infrastructure based on MCP agents? Are these agents an example of new ways of collaboration?

Collaboration – it pops up everywhere!

Here is a quote from the article by Chad Jackson that triggered me:

The number one reason organizations deploy MBSE is not simulation or architecture development. It is enhanced collaboration and communication – at 67%. But here is the uncomfortable part.

Only 24% reported actually achieving collaboration as a business outcome. That is a 43-point gap between intent and result. Traceability is even worse — 48% deploy MBSE for it, 9% say they have realized it.

What if the problem is not that MBSE fails to deliver collaboration – but that most organizations never define what better collaboration looks like in measurable terms?

Chad Jackson’s article aligns with many other discussions I have had with companies about PLM (and MBSE) – it inspired me to focus this time on collaboration.

How do we measure collaboration?

My 2015 blog post has the same title: How do you measure collaboration? The post was written at a time when PLM collaboration had to compete with ERP execution stories. Often, engineering collaboration was considered an inefficient process to be fixed in the future, according to some ERP vendors.

ERP always had a strong voice at the management level—boxes on an org chart, reporting lines, clear ownership and KPIs flowing upward. You could see how the company was performing.

From the management side, accountability flows downward. The architecture of the organization mirrors the architecture of the product, and the architecture of the product mirrors the architecture of the organization.

We have known this for decades; it is Conway’s Law. Yet we are still surprised when silos emerge exactly where we designed them.

The Management Dilemma

In many of my engagements, the company’s management often struggles to understand the value of collaboration because there is no direct line between collaboration and immediate performance. Revenue can be measured. Cycle times can be measured. Defects can be measured. Even employee turnover can be measured.

But collaboration? What is the KPI?

It is a fair question. If something cannot be quantified, it becomes subjective and depends on gut feelings. And if it cannot be tied directly to quarterly results, it often becomes optional.

The problem is not that collaboration has no impact on performance – look at the introduction of email in companies. Did your company make a business case for that?

Still, it improved collaboration a lot, even though it sometimes became a burden with all the CC messages and epistles exchanged.

Collaboration has a deep and systemic impact. But that impact is indirect, delayed, and distributed. It reduces friction and can improve shared understanding and prevent expensive rework.

The return on investment on collaboration is real, but it does not show up as a clean, linear metric.

For a hierarchical and linearly structured organization, horizontal collaboration is often hard to “sell.”

Back to Conway’s Law

Organizational structure shapes communication patterns. Communication patterns shape systems.

If your organization is vertical, your product will be vertical. If your incentives are local, your decisions will be local. If your teams are isolated, your solutions will be fragmented.

You cannot expect horizontal behavior from a vertically optimized structure without friction.

Disconnected collaboration initiatives fail because they try to overlay horizontal tools on top of vertical incentives.

Introducing a new collaboration platform or shared workspace technology to incentivize collaboration is an example of this approach.

But the underlying structure remains untouched. People are still measured on local performance. Budgets are still allocated per department. Promotions still reward vertical success.

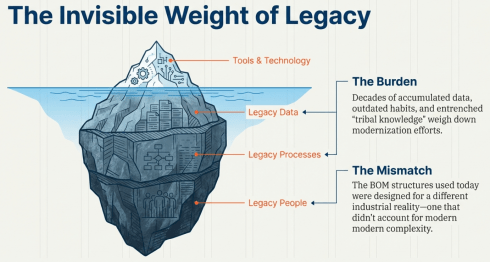

First question to ask in your company: Who is responsible for your PLM/collaboration infrastructure for non-transactional information?

Most likely, it sits in the IT or Engineering silo, rarely at a higher organizational level.

And then we are surprised when collaboration stalls?

The Myth of the Tool

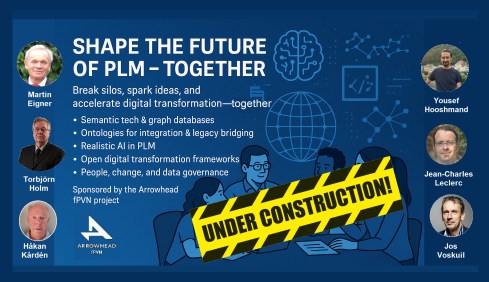

Whenever collaboration becomes a pain, people look for IT tools as a cure.

“We need better platforms.”

“We need integrated systems.”

and now:

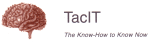

“We need AI – the AI agents will do the collaboration for us.”

Tools matter, but they are amplifiers. They amplify existing behavior. They do not create it. While finalizing this article, I saw this post from Dr. Sebastian Wernicke coming in, containing this quote:

Agents are software. Maturity is culture. And culture, inconveniently, doesn’t come with an install package.

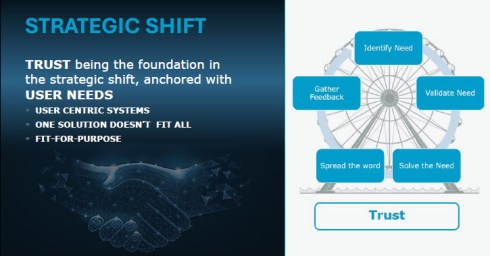

If trust is low, a collaboration platform becomes a battlefield. If incentives are misaligned, shared dashboards become weapons. If fear dominates, transparency becomes a threat.

Collaboration is not a software problem. It is a human problem. Which brings us to something that is rarely discussed in boardrooms: the intrinsic motivation of employees.

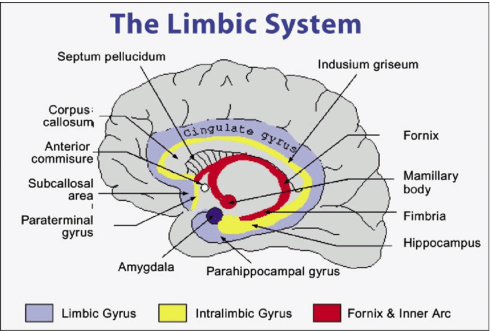

The Limbic Brain Is Always There

Beneath the rational layer of strategy and planning sits something older: the limbic system. The part of us that cares about belonging, safety, recognition, autonomy, and purpose.

Collaboration thrives when the limbic brain’s needs are met. It collapses when they are threatened.

- If people feel unsafe, they protect information!

- If they feel undervalued, they withdraw effort!

- If they feel controlled, they resist alignment!

You cannot mandate collaboration if the emotional system of the organization is defensive.

The question is not “How do we force collaboration?”

The question is “How do we create conditions where collaboration feels natural?”

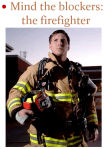

And that requires leaders to connect to the human, not just to the role or an artificial intelligence solution. They should be inspired by this iconic image from Share PLM:

Besides a difficult-to-quantify ROI, there is another reason why collaboration struggles to gain executive traction: it rarely creates immediate success.

It prevents future failure, and we humans generally do not prioritize prevention; think of our environmental, financial and potentially even health behavior. Although prevention has the lowest cost, fixing the damage afterwards is, most of the time, what lies in our nature.

For companies, it is easier to celebrate the hero who fixes a late-stage integration disaster than the quiet team that prevented it months earlier through cross-functional dialogue.

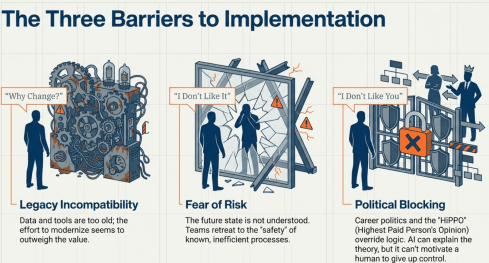

For me, the firefighters are the biggest challenge to successfully implementing a PLM infrastructure. The image comes from a 2014 presentation in which I discussed potential resistance to a successful PLM implementation.

In vertical systems, firefighting is visible. Prevention is silent, and therefore collaboration activities feel like a cost center rather than a strategic lever.

Where to Push, Where to Invest?

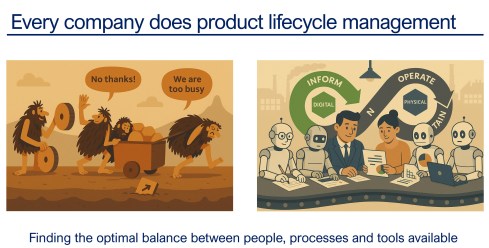

If you cannot directly measure collaboration, where should you push? Not in tools alone, slogans or one-off workshops. Invest in shared experiences.

When people meet outside their vertical silos, something subtle shifts. They see faces instead of functions. They understand constraints instead of assuming incompetence. They replace narratives with conversations.

Note: shared experiences are not the same as the planned online web meetings that became popular during and after COVID. Those meetings follow a rigid regime of enforced collaboration, are scheduled back-to-back in many companies, and most of the time lack the typical “coffee machine” experiences.

Also, when looking at events where people share experiences, there is a difference between a traditional vertical PLM/CM/IT/ERP conference, where specialists focus on one discipline, and a human-centric conference, where people share their experiences within an organization.

The Share PLM Summit in May last year was an eye-opener for me. Starting from the human perspective brought a lot of energy and willingness to discuss various insights – collaboration at its best.

Events, summits, workshops—when done well—create human connection. They remind participants that behind every deliverable sits a person trying to do meaningful work.

The focus on the human perspective is not soft. It is strategic because collaboration is not primarily about information exchange. It is about relationship quality and trust.

The Real Question

The question is not whether collaboration is valuable. The question is whether we are willing to adjust our vertical incentives to make it possible.

Because collaboration is not free. It requires time. It requires emotional energy. It requires psychological safety. It sometimes requires giving up local control for global benefit.

In systems terms, it requires shifting from local optimization to whole-system optimization.

That is uncomfortable.

But if our products are complex, interconnected, and rapidly evolving—as most are today—then vertical thinking alone is no longer sufficient. The world has become horizontal, even if our org charts have not.

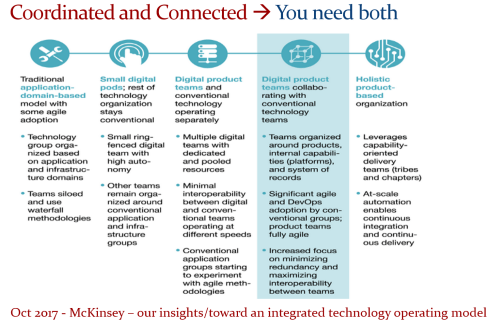

And perhaps the real challenge is not how to measure collaboration, but how to design organizations where collaboration is no longer something we need to sell at all. An article from McKinsey might inspire you in this transition, as it did for me: Toward an integrated technology operating model.

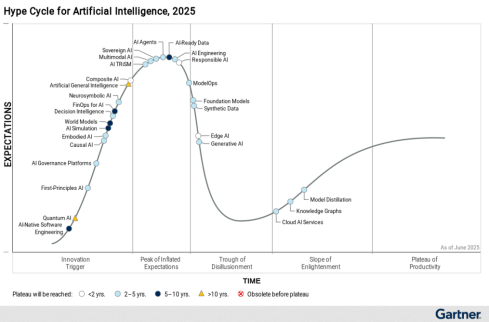

Beyond AI

While everyone talks and writes about AI, I do not believe AI will solve the collaboration issue. For sure, collaborating with AI agents will increase personal and organizational effectiveness, but it never touches our limbic brain, the irreplaceable part that makes us typically human and unique.

There will always be a need for that, unless we become numb and addicted to the AI environments. There are various studies popping up on how AI “untrains” our brain muscles, reduces patience and deep thinking. Finding a new human balance is crucial.

Conclusion

Triggered by Chad Jackson’s post about MBSE and collaboration, I took the time to deep-dive into the aspects of collaboration in the PLM domain. How do you manage collaboration?

Come and share your experiences at the upcoming Share PLM 2026 summit on 19-20 May in Jerez. The title of my keynote: Are Humans Still Resources? Agentic AI and the Future of Work and PLM.


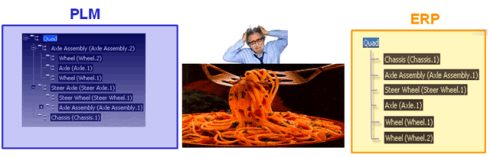

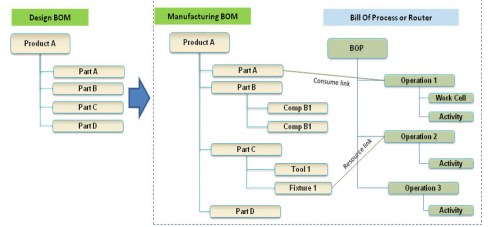

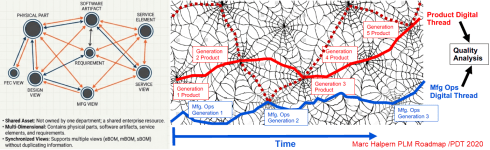

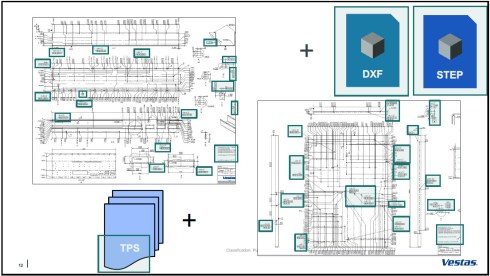

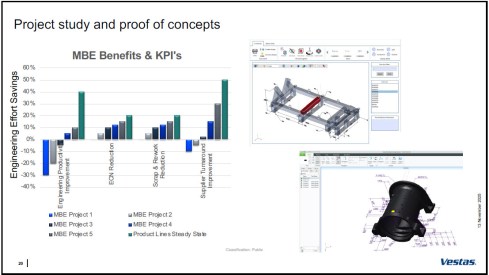

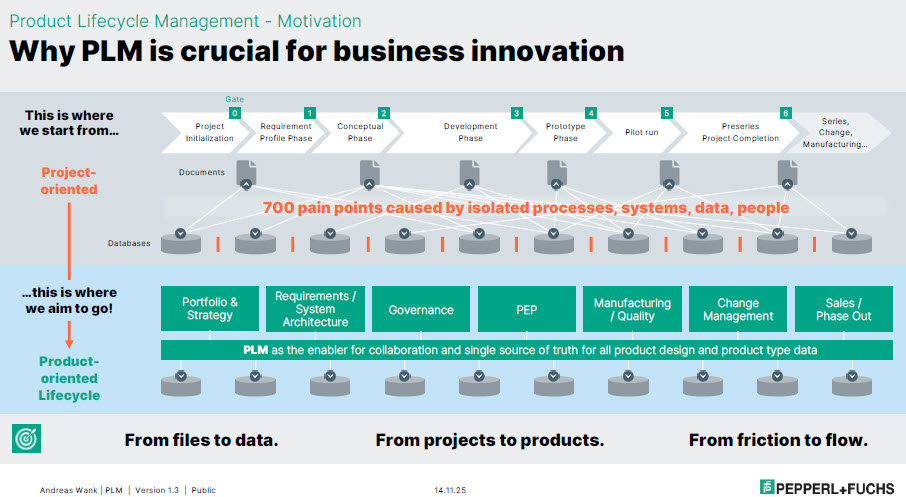

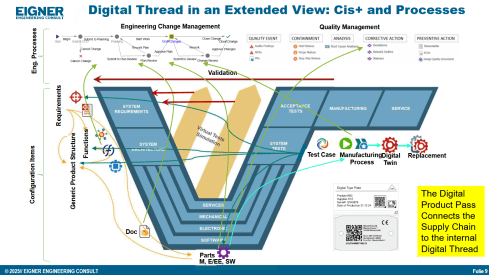

This definition needs to be resolved and adapted for a specific plant with its local suppliers and resources. PLM systems often support the transformation from the eBOM to a proposed mBOM, and if done more completely with a Bill of Process.

This definition needs to be resolved and adapted for a specific plant with its local suppliers and resources. PLM systems often support the transformation from the eBOM to a proposed mBOM, and if done more completely with a Bill of Process.

The challenge for these companies is that there is a lot of guesswork to be done, as the service business was not planned in their legacy business. A quick and dirty solution was to use the mBOM in ERP as the source of information. However, the ERP system usually does not provide any context information, such as where the part is located and what potential other parts need to be replaced—a challenging job for service engineers.

The challenge for these companies is that there is a lot of guesswork to be done, as the service business was not planned in their legacy business. A quick and dirty solution was to use the mBOM in ERP as the source of information. However, the ERP system usually does not provide any context information, such as where the part is located and what potential other parts need to be replaced—a challenging job for service engineers.

In early December, it became clear that Rich would no longer be able to support the PGGA for personal reasons. We respect his decision and thank Rich for the energy and private money he has put into setting up the website, pushing the moderators to remain active and publishing the newsletter every month. From the frequency of the newsletter over the last year, you might have noticed Rich struggled to be active.

In early December, it became clear that Rich would no longer be able to support the PGGA for personal reasons. We respect his decision and thank Rich for the energy and private money he has put into setting up the website, pushing the moderators to remain active and publishing the newsletter every month. From the frequency of the newsletter over the last year, you might have noticed Rich struggled to be active. product or start an alliance, the name can be excellent at the start, but later it might work against you. I believe we are facing this situation too with our PGGA (PLM Green Global Alliance)

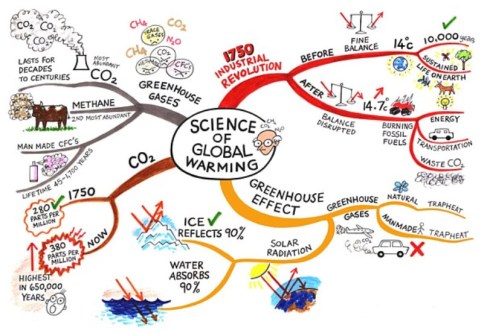

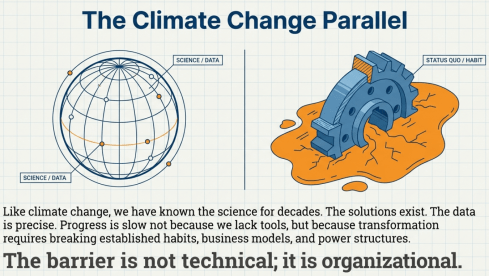

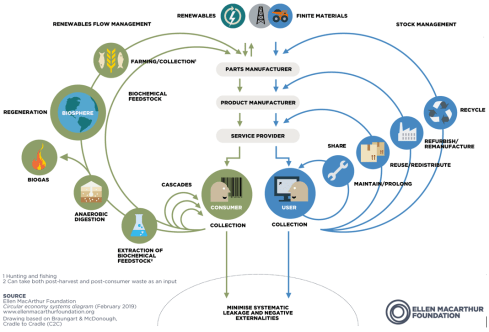

product or start an alliance, the name can be excellent at the start, but later it might work against you. I believe we are facing this situation too with our PGGA (PLM Green Global Alliance) Whether a business delivers products or services, most of the environmental impact is locked in during the design phase—often quoted at close to 80%. That makes design a strategic responsibility not only for engineering.

Whether a business delivers products or services, most of the environmental impact is locked in during the design phase—often quoted at close to 80%. That makes design a strategic responsibility not only for engineering.

Green has gradually acquired a negative connotation, weakened by early marketing hype and repeated greenwashing exposures. For many, green has lost its attractiveness.

Green has gradually acquired a negative connotation, weakened by early marketing hype and repeated greenwashing exposures. For many, green has lost its attractiveness.

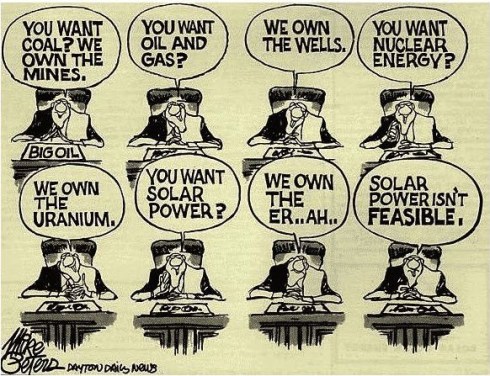

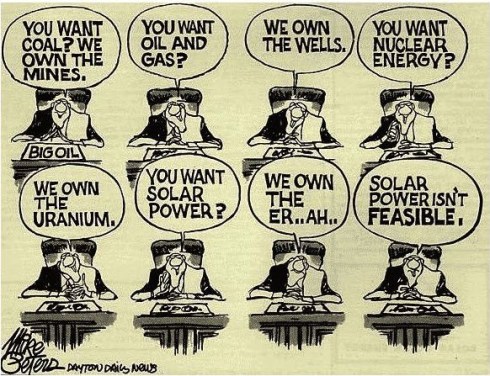

When reading or listening to the news, it seems that globalization is over and imperialism is back with a primary focus on economic control. For some countries, this means even control over people’s information and thoughts, by restricting access to information, deleting scientific data and meanwhile dividing humanity into good and bad people.

When reading or listening to the news, it seems that globalization is over and imperialism is back with a primary focus on economic control. For some countries, this means even control over people’s information and thoughts, by restricting access to information, deleting scientific data and meanwhile dividing humanity into good and bad people.

December is the last month when daylight is getting shorter in the Netherlands, and with the end of the year approaching, this is the time to reflect on 2025.

December is the last month when daylight is getting shorter in the Netherlands, and with the end of the year approaching, this is the time to reflect on 2025. It was already clear that AI-generated content was going to drown the blogging space. The result: Original content became less and less visible, and a self-reinforcing amount of general messages reduced further excitement.

It was already clear that AI-generated content was going to drown the blogging space. The result: Original content became less and less visible, and a self-reinforcing amount of general messages reduced further excitement. Therefore, if you are still interested in content that has not been generated with AI, I recommend subscribing to my blog and interacting directly with me through the comments, either on LinkedIn or via a direct message.

Therefore, if you are still interested in content that has not been generated with AI, I recommend subscribing to my blog and interacting directly with me through the comments, either on LinkedIn or via a direct message.

It was PeopleCentric first at the beginning of the year, with the

It was PeopleCentric first at the beginning of the year, with the

Who are going to be the winners? Currently, the hardware, datacenter and energy providers, not the AI-solution providers. But this can change.

Who are going to be the winners? Currently, the hardware, datacenter and energy providers, not the AI-solution providers. But this can change. Many of the current AI tools allow individuals to perform better at first sight. Suddenly, someone who could not write understandable (email) messages, draw images or create structured presentations now has a better connection with others—the question to ask is whether these improved efficiencies will also result in business benefits for an organization.

Many of the current AI tools allow individuals to perform better at first sight. Suddenly, someone who could not write understandable (email) messages, draw images or create structured presentations now has a better connection with others—the question to ask is whether these improved efficiencies will also result in business benefits for an organization. Looking back at the introduction of email with Lotus Notes, for example, email repositories became information siloes and did not really improve the intellectual behavior of people.

Looking back at the introduction of email with Lotus Notes, for example, email repositories became information siloes and did not really improve the intellectual behavior of people. As a result of this, some companies tried to reduce the usage of individual emails and work more and more in communities with a specific context. Also, due to COVID and improved connectivity, this led to the success of

As a result of this, some companies tried to reduce the usage of individual emails and work more and more in communities with a specific context. Also, due to COVID and improved connectivity, this led to the success of  For many companies, the chatbot is a way to reduce the number of people active in customer relations, either sales or services. I believe that, combined with the usage of LLMs, an improvement in customer service can be achieved. Or at least the perception, as so far I do not recall any interaction with a chatbot to be specific enough to solve my problem.

For many companies, the chatbot is a way to reduce the number of people active in customer relations, either sales or services. I believe that, combined with the usage of LLMs, an improvement in customer service can be achieved. Or at least the perception, as so far I do not recall any interaction with a chatbot to be specific enough to solve my problem.

Remember, the first 50 – 100 years of the Industrial Revolution made only a few people extremely rich.

Remember, the first 50 – 100 years of the Industrial Revolution made only a few people extremely rich.

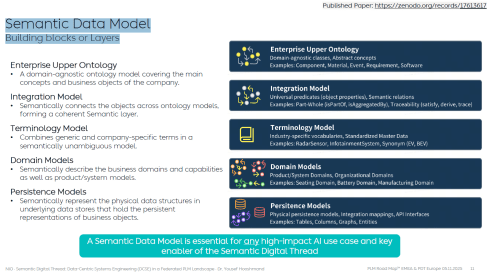

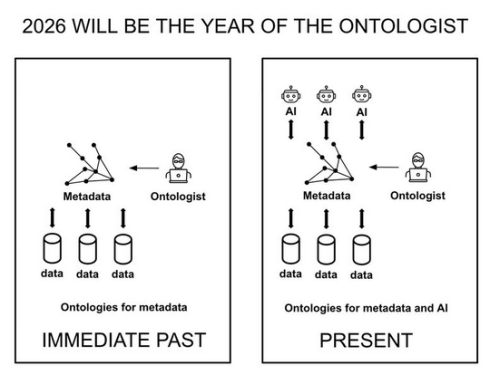

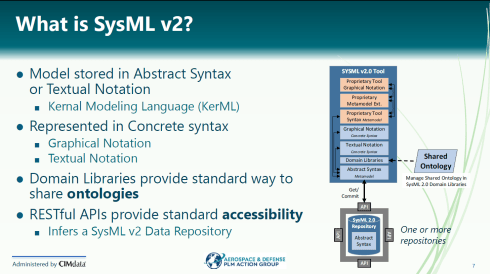

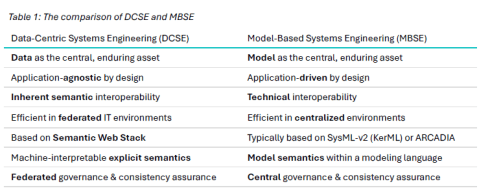

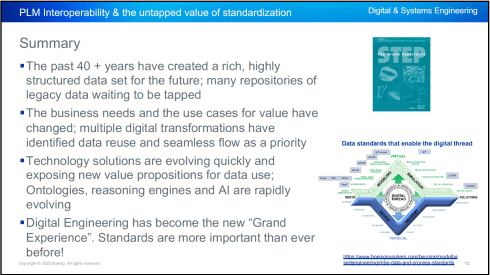

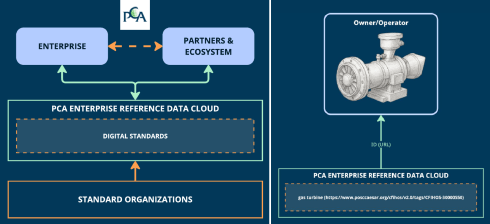

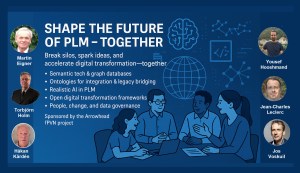

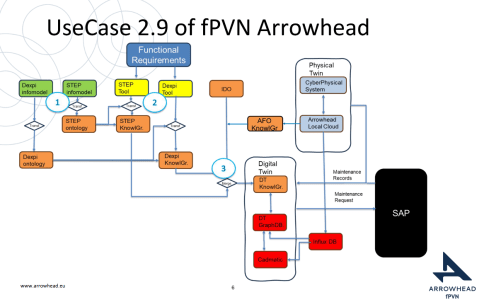

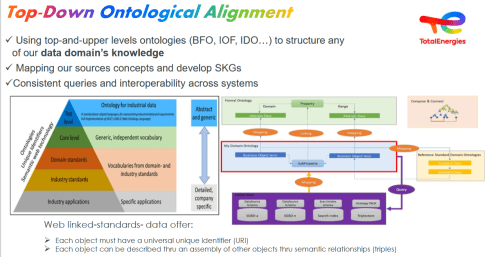

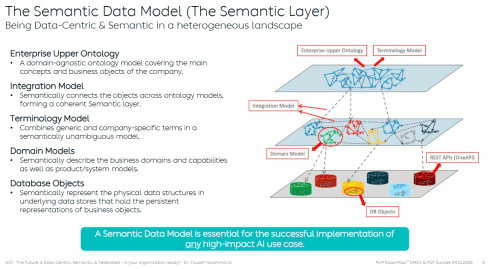

My conclusion from that post was that day 1 was a challenging day if you are a newbie in the domain of PLM and data-driven practices. We discussed and learned about relevant standards that support a digital enterprise, as well as the need for ontologies and semantic models to give data meaning and serve as a foundation for potential AI tools and use cases.

My conclusion from that post was that day 1 was a challenging day if you are a newbie in the domain of PLM and data-driven practices. We discussed and learned about relevant standards that support a digital enterprise, as well as the need for ontologies and semantic models to give data meaning and serve as a foundation for potential AI tools and use cases. Note: I try to avoid the abbreviation PLM, as many of us in the field associate PLM with a system, where, for me, the system is more of an IT solution, where the strategy and practices are best named as product lifecycle management.

Note: I try to avoid the abbreviation PLM, as many of us in the field associate PLM with a system, where, for me, the system is more of an IT solution, where the strategy and practices are best named as product lifecycle management.

Combined with the traditional dinner in the middle, it was again a great networking event to charge the brain. We still need the brain besides AI. Some of the highlights of day 1 in this post.

Combined with the traditional dinner in the middle, it was again a great networking event to charge the brain. We still need the brain besides AI. Some of the highlights of day 1 in this post.

However, as many of the other presentations on day 1 also stated: “data without context is worthless – then they become just bits and bytes.” For advanced and future scenarios, you cannot avoid working with ontologies, semantic models and graph databases.

However, as many of the other presentations on day 1 also stated: “data without context is worthless – then they become just bits and bytes.” For advanced and future scenarios, you cannot avoid working with ontologies, semantic models and graph databases.

The panel discussion at the end of day 1 was free of people jumping on the hype. Yes, benefits are envisioned across the product lifecycle management domain, but to be valuable, the foundation needs to be more structured than it has been in the past.

The panel discussion at the end of day 1 was free of people jumping on the hype. Yes, benefits are envisioned across the product lifecycle management domain, but to be valuable, the foundation needs to be more structured than it has been in the past. Probably, November 11th was not the best day for broad attendance, and therefore, we hope that the recording of this webinar will allow you to connect and comment on this post.

Probably, November 11th was not the best day for broad attendance, and therefore, we hope that the recording of this webinar will allow you to connect and comment on this post.

[…] (The following post from PLM Green Global Alliance cofounder Jos Voskuil first appeared in his European PLM-focused blog HERE.) […]

[…] recent discussions in the PLM ecosystem, including PSC Transition Technologies (EcoPLM), CIMPA PLM services (LCA), and the Design for…

Jos, all interesting and relevant. There are additional elements to be mentioned and Ontologies seem to be one of the…

Jos, as usual, you've provided a buffet of "food for thought". Where do you see AI being trained by a…

Hi Jos. Thanks for getting back to posting! Is is an interesting and ongoing struggle, federation vs one vendor approach.…