Last week, I participated in the annual 3DEXPERIENCE User Conference, organized by the ENOVIA and NETVIBES brands. With approximately 250 attendees, the 2-day conference on the High-Tech Campus in Eindhoven was fully booked.

My PDM/PLM career started in 1990 in Eindhoven.

First, I spent a significant part of my school life there, and later, I became a physics teacher in Eindhoven. Then, I got infected by CAD and data management, discovering SmarTeam, and the rest is history.

As I wrote in last year’s post, the 3DEXPERIENCE conference always feels like a reunion, as I have worked most of my career in the SmarTeam, ENOVIA, and 3DEXPERIENCE ecosystem.

Innovation Drivers in the Generative Economy

Stephane Declee and Morgan Zimmerman kicked off the conference with their keynote, talking about the business theme for 2024: the Generative Economy. Whereas the initial focus was on the Experience Economy and emotion, the Generative Economy also includes Sustainability. It is a clever move, as the word Sustainability, like Digital Transformation, has become such a generic term. The Generative Economy clearly explains that the aim is to be sustainable for the planet.

Stephane and Morgan talked about the importance of the virtual twin, which is different from digital twins. A virtual twin typically refers to a broader concept that encompasses not only the physical characteristics and behavior of an object or system but also its environment, interactions, and context within a virtual or simulated world. Virtual Twins are crucial to developing sustainable solutions.

Morgan concluded the session by describing the characteristics of the data-driven 3DEXPERIENCE platform and its AI fundamentals, illustrating all the facets of the mix of a System of Record (traditional PLM) and Systems of Engagement (MODSIM).

3DEXPERIENCE for All at automation.eXpress

Daniel Schöpf, CEO and founder of automation.eXpress GmbH, passionately explained why, for his business, the 3DEXPERIENCE platform is the only environment for product development, collaboration and sales.

Automation.eXpress is a young but typical Engineering To Order company building special machinery and services in dedicated projects, which means that every project, from sales to delivery, requires a lot of communication.

For that reason, Daniel insisted that all employees communicate using the 3DEXPERIENCE platform on the cloud. So, there are no separate emails, chats, or other siloed systems.

Everyone should work connected to the project and the product, as they need to deliver projects as quickly and efficiently as possible.

Daniel made this decision based on his 20 years of experience with traditional ways of working: the coordinated approach. Now, starting from scratch in a new company without legacy, Daniel chose the connected approach, an ideal fit for his organization, using the cloud as a scalable solution, an essential criterion for a startup company.

My conclusion is that this example shows the unique situation of an inspired leader with 20 years of experience in this business who, rather than choosing ways of working from the past, starts a new company in the same industry based on a modern platform approach instead of individual traditional tools.

Augment Me Through Innovative Technology

Dr. Cara Antoine gave an inspiring keynote based on her own life experience and lessons learned from working in various industries: at a major oil & gas company and at major high-tech hardware and software brands. Currently, she is an EVP and the Chief Technology, Innovation & Portfolio Officer at Capgemini.

She explained how a life-threatening infection that caused blindness in one of her eyes inspired her to find ways to augment herself to keep on functioning.

With that, she drew a parallel with humanity, which has continuously augmented itself from prehistoric times until now, at an ever-increasing speed of change.

The current augmentation is the digital revolution. Digital technology is coming, and you need to be prepared to survive: it is Innovate or Abdicate.

Dr. Cara went on to stress the need to invest in innovation (me: it was not better in the past 😉), of course with an economic purpose; however, it should go hand in hand with social progress (gender diversity) and with creating a sustainable planet (innovation is needed here).

Besides the focus on innovation drivers, Dr. Cara always connected her message to personal interaction. Her recently published book Make it Personal describes the importance of personal interaction, even if the topics can be very technical or complex.

I read the book with great pleasure, and it was one of the cornerstones of the panel discussion that followed.

It is all about people…

It might be strange to have a session like this in an ENOVIA/NETVIBES User Conference; however, it is another illustration that we are not just talking about technology and tools.

I was happy to introduce and moderate this panel discussion, also using the iconic Share PLM image, which is close to my heart.

The panelists, Dr. Cara Antoine, Daniel Schöpf, and Florens Wolters, have each actively led transformational initiatives within their companies.

We discussed questions related to culture, personal leadership and involvement, and concluded with many insights, including “Create chemistry, identify a passion, empower diversity, and make a connection, as it could make or break your relationship.”

And it is about processes.

Another trend I discovered is that cloud-based business platforms, like the 3DEXPERIENCE platform, shift the focus from discussing functions and features in tools to establishing platform-based environments, where the focus is more on data-driven and connected processes.

Some examples:

Data Driven Quality at Suzlon Energy Ltd.

Florens Wolters, who also participated in the panel discussion “It is all about people…”, explained how he took the lead in reimagining the Suzlon Energy Quality Management System using the 3DEXPERIENCE platform and ENOVIA: from a disconnected, fragmented, document-driven Quality Management System with many findings in 2020 to a fully integrated, data-driven management system with zero findings in 2023.

It illustrates that a modern data-driven approach in a connected environment brings higher value to the organization, as all stakeholders in the addressed solution work within an integrated, real-time environment. No time is wasted searching for related information.

Of course, organizational change management is needed to convince people to stop working in their favorite siloed system, which might be dedicated to the job but is not designed for a connected future.

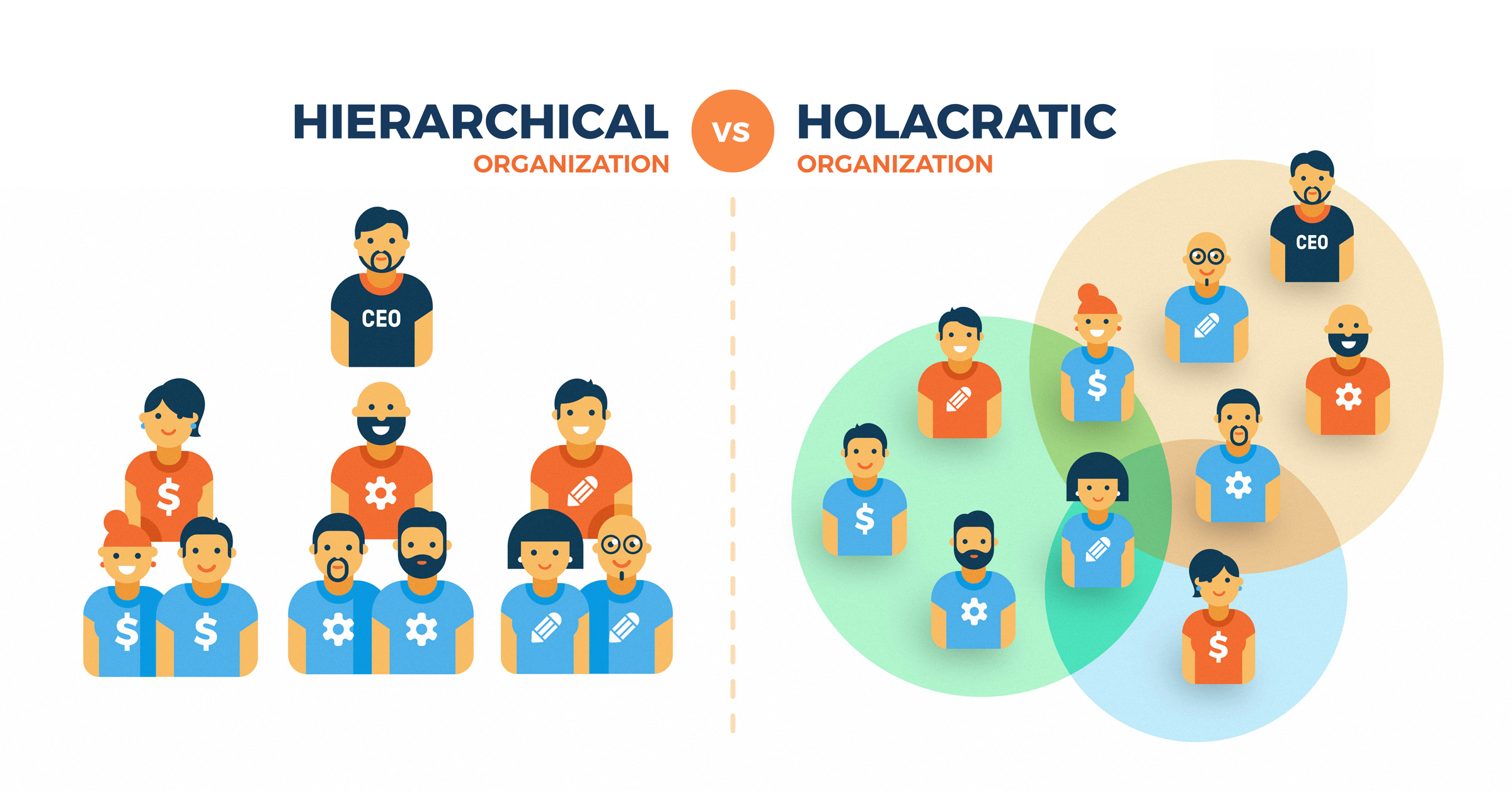

The image above was also part of the “It is all about people” session.

Enterprise Virtual Twin at Renault Group

Renault’s presentation was also an exciting surprise. Last year, they shared the scope of the Renaulution project at the conference (see also my post: The week after the 3DEXPERIENCE conference 2023).

There, Renault mentioned that they would start using the 3DEXPERIENCE platform as an enterprise business platform instead of a traditional engineering tool.

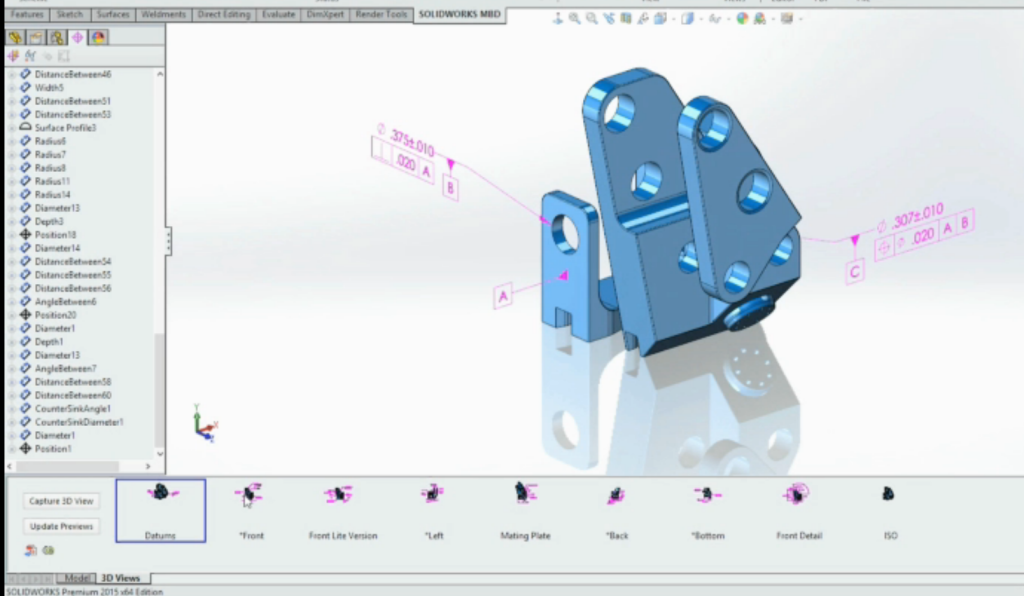

Their presentation this year, related to their Engineering Virtual Twin, was an example of that. Instead of using their document-based SCR (Système de Conception Renault – the Renault Design System), with over 1000 documents describing processes connected to over a hundred KPIs, they have modeled their whole business architecture and processes in UAF using a Systems of Systems approach.

The image above shows Franck Gana, Renault’s engineering – transformation chief officer, explaining the approach. We could write an entire article about the details of how, again, the 3DEXPERIENCE platform can be used to provide a real-time virtual twin of the actual business processes, ensuring everyone is working on the same referential.

Bringing Business Collaboration to the Next Level with Business Experiences

To conclude this section about the shifting focus toward people and processes instead of system features, Alizée Meissonnier Aubin and Antoine Gravot introduced a new offering from 3DS, the marketplace for Business Experiences.

According to an HBR article, workers switch between applications an average of 1200 times per day, spending 9% of their time reorienting themselves after toggling.

1200 is a high number and a plea for working in a collaboration platform instead of siloed systems (the Systems of Engagement, in my terminology – data-driven, real-time connected). The story has been told before by Daniel Schöpf, Florens Wolters and Franck Gana, who shared the benefits of working in a connected collaboration environment.

The announced marketplace will be a place where customers can download Business Experiences.

There was more…

There were several engaging presentations and workshops during the conference. But, as we reach 1500 words, I will mention just two of them, which I hope to come back to in a later post with more detail.

- Delivering Sustainable & Eco Design with the 3DS LCA Solution

Valentin Tofana is from Comau, an Italian multinational company in the automation industry that is committed to more sustainable products. In that context, Valentin shared his experiences and lessons learned when starting to use the 3DS LifeCycle Assessment tools on the 3DEXPERIENCE platform.

This session gave such a clear overview that we will come back to it with the PLM Green Global Alliance in a separate interview.

- Beyond PLM. Productivity is the Key to Sustainable Business

Neerav MEHTA from L&T Energy Hydrocarbon demonstrated how they have implemented a virtual twin of the plant, allowing everyone to navigate, collaborate and explore all activities related to the plant. I was already promoting this concept for Oil & Gas EPC companies in 2013 (PLM for all industries); at that time, it was an immense performance and integration challenge. Now, ten years later, thanks to the capabilities of the 3DEXPERIENCE platform, it has become a workable reality. Impressive.

Conclusion

Again, I learned a lot during these days, seeing the architecture of the 3DEXPERIENCE platform growing (image below). In addition, more and more companies are shifting their focus to real-time collaboration processes in the cloud on a connected platform. Their testimonies illustrate that to be sustainable in business, you have to augment yourself with digital.

Note: Dassault Systèmes did not cover any of my costs for attending this conference. I picked the topics close to my heart and was encouraged by all the conversations I had.

During my summer holiday in my “remote” office, I had the chance to digest what I recently read, heard, saw and discussed related to the future of PLM.

I noticed this year/last year that many companies are discussing or working on their future PLM. It is time to make progress after COVID, particularly in digitization.

As most companies want to avoid the risk of a “big bang”, they are exploring how to improve their businesses in an evolutionary way.

PLM is no longer a system

The most significant change I noticed in my discussions is the growing awareness that PLM is no longer covered by a single system.

More and more, PLM is considered a strategy, with which I fully agree. Therefore, implementing a PLM strategy requires holistic thinking and an infrastructure of different types of systems, where possible, digitally connected.

This trend is bad news for the PLM vendors as they continuously work on an end-to-end portfolio where every aspect of the PLM lifecycle is covered by one of their systems. The company’s IT department often supports the PLM vendors, as IT does not like a diverse landscape.

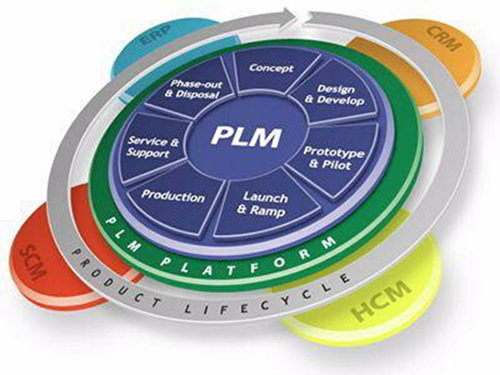

The main question is: “Every PLM Vendor has a rich portfolio on PowerPoint mentioning all phases of the product lifecycle.

However, are these capabilities implementable in an economical and user-friendly manner by actual companies, or do PLM players need to change their strategy?”

A question I will try to answer in this post.

The future of PLM

I have discussed several observed changes related to the effects of digitization in my recent blog posts, referencing others who have studied these topics in their organizations.

Some of the posts to refresh your memory are:

- Time to split PLM?

- People, Processes, Data and Tools?

- The rise and the fall of the BOM?

- The new side of PLM? Systems of Engagement!

To summarize, these posts discussed the following points:

The As Is:

- The traditional PLM systems are examples of a System of Record, not designed to be end-user friendly but designed to have a traceable baseline for manufacturing, service and product compliance.

- The traditional PLM systems are tuned to a mechanical product introduction and release process in a coordinated manner, with a focus on BOM governance.

- The legacy information is stored in BOM structures and related specification files.

System of Record (ENOVIA image 2014)

The To Be:

- We are not talking about a PLM system anymore; a traditional System of Record will be digitally connected to different Systems of Engagement / Domains / Products, which have their own optimized environment for real-time collaboration.

- The BOM structures remain essential for the hardware part; however, overarching structures are needed to manage software and hardware releases for a product. These structures depend on connected datasets.

- To support digital twins at the various lifecycle stages (design, manufacturing, operations), product data needs to be based on and consumed by models.

- A future PLM infrastructure is hybrid, based on a Single Source of Change (SSoC) and an Authoritative Source of Truth (ASoT) instead of a Single Source of Truth (SSoT).

Various Systems of Engagement
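The Single Source of Change and Authoritative Source of Truth ideas in the To Be points are architectural principles, not a product feature. As a rough illustration only, here is a minimal Python sketch, assuming a simple rule: each data type has exactly one authoritative system, only that system may change it, and every other connected system reads that same version. The class `FederatedRegistry` and the system names are hypothetical and do not represent any vendor's API.

```python
from dataclasses import dataclass, field


@dataclass
class FederatedRegistry:
    """Hypothetical sketch of a hybrid PLM landscape (not a vendor API).

    Each data type is owned by exactly one authoritative system (ASoT);
    only that owner may change it (Single Source of Change).
    """

    # data type -> name of the system that is authoritative for it
    authority: dict[str, str]
    # item id -> latest authoritative version of the item
    records: dict[str, dict] = field(default_factory=dict)

    def submit_change(self, system: str, item_id: str, data_type: str, data: dict) -> None:
        owner = self.authority.get(data_type)
        if owner is None:
            raise KeyError(f"no authoritative source defined for {data_type!r}")
        if system != owner:
            # Non-owning systems may consume the data, but not change it.
            raise PermissionError(f"{system} is not the authoritative source for {data_type}")
        self.records[item_id] = {**data, "type": data_type, "source": owner}

    def read(self, item_id: str) -> dict:
        # Every connected system reads a copy of the same authoritative version.
        return dict(self.records[item_id])


registry = FederatedRegistry(authority={
    "mechanical_part": "PLM System of Record",
    "software_release": "ALM System of Engagement",
})
registry.submit_change("PLM System of Record", "P-100", "mechanical_part", {"rev": "B"})
print(registry.read("P-100")["rev"])  # prints B

try:
    # The ALM system consumes part data but may not change it.
    registry.submit_change("ALM System of Engagement", "P-100", "mechanical_part", {"rev": "C"})
except PermissionError as err:
    print(err)
```

The sketch deliberately does not try to hold everything in one system, as a Single Source of Truth would; it only enforces who may change what, while the data itself can live in different connected systems.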

Related podcasts

I relistened to two podcasts before writing this post, and I think they are a must-listen.

The Peer Check podcast from Colab episode 17 — The State of PLM in 2022 w/Oleg Shilovitsky. Adam and Oleg have a great discussion about the future of PLM.

Highlights: From System to Platform – the new normal. A Single Source of Truth doesn’t work anymore – it is about value streams. People in big companies fear making wrong PLM decisions, as these are seen as a significant risk to their careers.

There is no immediate need to change the current status quo.

The Share PLM Podcast – Episode 6: Revolutionizing PLM: Insights from Yousef Hooshmand. Yousef talked with Helena and me about proven ways to migrate an old PLM landscape to a modern PLM/Business landscape.

Highlights: The term Single Source of Change and the existing concepts of a hybrid PLM infrastructure based on his experiences at Daimler and now at NIO. Yousef stresses the importance of having the vision and the executive support to execute.

The time of “big bangs” is over, and Yousef provided links to relevant content, which you can find here in the comments.

In addition, I want to point to the experiences provided by Erik Herzog in the Heliple project using OSLC interfaces as the “glue” to connect (in my terminology) the Systems of Engagement and the Systems of Record.

If you are interested in these concepts and want to learn and discuss them with your peers, you can learn more during the upcoming CIMdata PLM Roadmap / PDT Europe conference.

In particular, look at the agenda for day two if you are interested in this topic.

The future for the PLM vendors

If you look at the messaging of the current PLM Vendors, none of them is talking about this federated concept.

Their messaging focuses more on the transition from on-premise to the cloud, offering their portfolio as SaaS.

I was slightly disappointed when I saw this article on Engineering.com provided by Autodesk: 5 PLM Best Practices from the Experiences of Autodesk and Its Customers.

The article is tool-centric, with statements that make sense and could be written by any PLM Vendor. However, Best Practice #1 Central Source of Truth Improves Productivity and Collaboration was the message that struck me. Collaboration comes from connecting people, not from the Single Source of Truth utopia.

I don’t believe PLM Vendors have to fear rapidly losing their installed base of companies using their PLM as a System of Record. There is so much legacy stored in these systems that might still be relevant. The existence of legacy information, often documents, makes a migration or swap to another vendor almost impossible and unaffordable.

The System of Record is incompatible with data-driven PLM capabilities

I would like to see clearer developments from the PLM Vendors toward a plug-and-play infrastructure for Systems of Engagement. Plug-and-play solutions could be based on a neutral partner collaboration hub like ShareAspace or on the Systems of Engagement I discussed recently in my post and interview: The new side of PLM? Systems of Engagement!

Plug-and-play Systems of Engagement require interface standards, and PLM Vendors will only move in this direction if customers push for it; this is the chicken-and-egg discussion. And probably, the current initiatives are too fragmented to arrive at a standard. However, don’t give up; keep building MVPs to learn and share.

Some people believe AI, with the examples we have seen with ChatGPT, will be the future direction without needing interface standards.

I am curious about your thoughts and experiences in that area and am willing to learn.

Talking about learning?

Besides reading posts and listening to podcasts, I also read an excellent book this summer. Martijn Dullaart, who often participates in PLM and CM discussions, decided to write a book based on the various discussions related to part (re-)identification (numbering, revisioning).

As Martijn states in the preface:

“I decided to write this book because, in my search for more knowledge on the topics of Part Re-Identification, Interchangeability, and Traceability, I could only find bits and pieces but not a comprehensive work that helps fundamentally understand these topics”.

I believe the book should become standard literature for engineering schools that deal with PLM and CM, for software vendors and implementers, and, last but not least, for companies that want to improve or clarify their change processes.

Martijn writes in an easily readable style and uses step-by-step examples to discuss the various options. There are even exercises at the end to use in a classroom or for your team to digest the content.

The good news is that the book is not about the past. You might also know Martijn for our joint discussion, The Future of Configuration Management, together with Maxime Gravel and Lisa Fenwick, on the impact of a model-based and data-driven approach to CM.

I plan to come back soon with a more dedicated discussion with Martijn. Meanwhile, start reading the book. Get your free chapter if needed by following the link at the bottom of this article.

I recommend buying the book as a paperback so you can navigate easily between the diagrams and the text.

Conclusion

The trend for federated PLM is becoming more and more visible as companies start implementing these concepts. The end of monolithic PLM is a threat and an opportunity for the existing PLM Vendors. Will they work towards an open plug-and-play future, or will they keep their portfolios closed? What do you think?

Last week, I had the opportunity to discuss the topic of Systems of Engagement in the context of the broader PLM landscape.

I spoke with Andre Wegner from Authentise and their product Threads, MJ Smith from CoLab and Oleg Shilovitsky from OpenBOM.

I invited all three of them to discuss their background, their target customers, the significance of real-time collaboration outside discipline silos, how they connect to existing PLM systems (Systems of Record), and, finally, whether company culture plays a role.

Listen to this almost 45-minute discussion here (save the m4a file first) or watch the discussion below on YouTube.

What I learned from this conversation

- Systems of Engagement are bringing value to small enterprises but also as complementary systems to traditional PLM environments in larger companies.

- Thanks to their SaaS approach, they are easy to install and use to fulfill a need that would take weeks/months to implement in a traditional PLM environment. They can be implemented at a department level or by connecting a value chain of people.

- Due to their real-time collaboration capabilities, these systems provide fast and significant benefits.

- Systems of Engagement represent the trend that companies want to move away from monolithic systems and focus on working with the correct data connected to the users. A topic I will explore in a future blog post.

I am curious to learn what you pick up from this conversation – are we missing other trends? Use the comments on this post.

Related to the company:

Visit Authentise.com

Related to the product:

Learn more about Collaborative Threads

Related to the reported benefits:

– Surgical robotics R&D team tracks 100% of their decisions and saves 150 hours in the first two weeks… doubling the effective size of their team:

Related to the company:

Visit Colabsoftware.com

Related to the product:

Raise the bar for your design conversations

Related to the reported benefits:

– How Mainspring used CoLab to achieve a 50% cost reduction redesign in half the time

– How Ford Pro Accelerated Time to Market by 30%

Related to the company:

Visit openbom.com

Related to the product:

Global Collaborative SaaS Platform For Industrial Companies

Related to reported benefits:

– OpenBOM makes the OKOS team 20% more efficient by helping to reduce inventory errors, costs, and streamlining supplier process

– VarTech Systems Optimizes Efficiency by Saving Two Hours of Engineering Time Daily with OpenBOM

Conclusion

I believe that Systems of Engagement are important for the digital transformation of a company.

They allow companies to learn what it means to work in a SaaS environment, potentially outside traditional company borders but with a focus on a specific value stream.

Thanks to their rapid deployment times, they help the company to grow its revenue even when the existing business is under threat due to newcomers.

The diagram below says it all. What are your favorite Systems of Engagement?

Hot from the press

Don’t miss the latest episode from the Share PLM podcast with Yousef Hooshmand – the discussion is very much connected to this discussion.

I was happy to present and participate at the 3DEXPERIENCE User Conference, held this year in Paris on 14-15 March. The conference was an evolution of the previous ENOVIA User conferences; this time, it was a joint event of the ENOVIA and NETVIBES brands.

The conference felt like a reunion to me. I have worked for over 25 years in the SmarTeam, ENOVIA and 3DEXPERIENCE ecosystem, and I met people I had worked with and had not seen for over fifteen years.

My presentation, Sustainability Demands Virtualization – and it should happen fast, explained the transformation from a coordinated (document-driven) to a connected (data-driven) enterprise.

There were 100+ attendees at the conference, mainly from Europe. Most of the presentations came from customers, while the breakout sessions gave the attendees a chance to dive deeper into the Dassault Systèmes portfolio.

Here are some of my impressions.

The power of ENOVIA and NETVIBES

I had a traditional view of the 3DEXPERIENCE platform based on my knowledge of ENOVIA, CATIA and SIMULIA, as many of my engagements were in the domain of MBSE or a model-based approach.

However, at this conference, I discovered the data intelligence side that Dassault Systèmes is bringing with its NETVIBES brand.

I would classify the ENOVIA part of the 3DEXPERIENCE platform as a traditional System of Record infrastructure (see Time to Split PLM?).

I discovered that adding NETVIBES on top of the 3DEXPERIENCE platform and other data sources significantly changes the potential scope. See the image below:

As we can see, the ontologies and knowledge graph layer make it possible to make sense of all the indexed data below, including the data from the 3DEXPERIENCE Platform, which provides a modern data-driven layer for its consumers and apps.

The applications on top of this layer, standard or developed, can be considered Systems of Engagement.

My curiosity now: will Dassault Systèmes keep supporting the “old” system of record approach – often based on BOM structures (see also my post: The Rise and Fall of the BOM) combined with the new data-driven environment? In that case, you would have both approaches within one platform.

The Virtual Twin versus the Digital Twin

It is interesting to note that Dassault Systèmes consistently differentiates between the definition of the Virtual Twin and the Digital Twin.

According to the 3DS.com website:

Digital Twins are simply a digital form of an object, a virtual version.

Unlike a digital twin prototype that focuses on one specific object, Virtual Twin Experiences let you visualize, model and simulate the entire environment of a sophisticated experience. As a result, they facilitate sustainable business innovation across the whole product lifecycle.

Understandably, Dassault Systèmes makes this differentiation. With the implementation of the Unified Product Structure, they can connect CAD geometry as datasets to other non-CAD datasets, like eBOM and mBOM data.

The Unified Product Structure was not the topic of this event, but it is worth noting.

REE Automotive

The presentation by Steve Atherton from REE Automotive was interesting because it showed an automotive startup that decided to go purely for the cloud.

REE Automotive is an Israeli technology company that designs, develops, and produces electric vehicle platforms. Their mission is to provide a modular and scalable electric vehicle platform that can be used by a wide range of industries, including delivery and logistics, passenger cars, and autonomous vehicles.

Steve Atherton is the PLM 3DEXPERIENCE lead for REE at the Engineering Centre in Coventry in the UK, where most of their designers are based. REE also has an R&D center in Tel Aviv, offshore support from India, and satellite offices in the US.

REE decided from the start to implement its PLM backbone in the cloud, a logical choice for such a globally spread company.

The cloud was also one of the conference’s central themes, and it was interesting to see a startup company like REE pushing for an end-to-end solution based in the cloud. Too often, startups choose traditional systems because their senior members bring their (legacy) PLM knowledge with them from their previous company.

The current challenge for REE is implementing the manufacturing processes (EBOM-MBOM) while complying as much as possible with the out-of-the-box best practices to make their cloud implementation future-proof.

Groupe Renault

Olivier Mougin, Head of PLM at Groupe RENAULT, talked about their Renaulution Virtual Twin (RVT) program. Renault has always been a strategic partner of Dassault Systèmes.

I remember them as one of the first references for the ENOVIA V6 backbone.

The Renaulution Virtual Twin ambition: from engineering to enterprise platform, is enormous, as you can see below:

Each of the three pillars has transformational aspects beyond traditional ways of working. For each pillar, Olivier explained the business drivers, expected benefits, and why a new approach is needed. I will not go into the details in this post.

However, you can see the transformation from an engineering backbone to an enterprise collaboration platform: the Renaulution!

Ahmed Lguaouzi, head of marketing at NETVIBES, reinforced the message, presenting the extended power of data intelligence on top of an engineering landscape as the target architecture.

Renault’s ambition is enormous – the ultimate dream of digital transformation for a company with a great legacy. The mission will challenge Renault and Dassault Systèmes to implement this vision, which can become a lighthouse for others.

3DS PLM Journey at MIELE

An exciting session close to my heart was the digital transformation story from MIELE, explained by André Lietz, head of the IT Products PLM @ Miele. As a long-time MIELE dishwasher owner, I was curious to learn about their future.

Miele has been a family-owned business since 1899, making high-end domestic and commercial equipment. They are a typical example of the power of German mid-market companies. Moreover, being family-owned gives them stability and the opportunity to develop a multi-year transformation roadmap without being distracted by investor demands every few years.

André, with his team, is responsible for developing the value chain inside the product development process (PDP), the operation of nearly 90 IT applications, and the strategic transformation of the overarching PLM Mission 2027+.

As the slide below illustrates, the team is working on four typical transformation drivers:

- Providing customers with connected, advanced products (increasing R&D complexity)

- Providing employees with a modern, digital environment (the war for digital talent)

- Providing sustainable solutions (addressing the whole product lifecycle)

- Improving internal end-to-end collaboration and information visibility (PLM digital transformation)

André talked about their DELMIA pilot plant/project and its benefits in connecting the EBOM and MBOM in the 3DEXPERIENCE platform. From my experience, this is a challenging topic, particularly in German companies, where SAP has dominated the BOM for over twenty years.
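To make the EBOM-MBOM connection a bit more concrete: one way to look at it is as a reconciliation between two views on the same parts, where every manufacturing step still points to the engineering parts it realizes. The Python sketch below illustrates this under simplifying assumptions (flat, leaf-level BOMs, quantities only). The part numbers, the `BomLine` type and the `reconcile` function are hypothetical; this is not how DELMIA or the 3DEXPERIENCE platform implements it.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class BomLine:
    part_id: str
    quantity: int


# Hypothetical engineering BOM, flattened to leaf parts: what the product consists of.
ebom = [BomLine("MOTOR-01", 1), BomLine("DRUM-02", 1), BomLine("SCREW-M4", 12)]

# Hypothetical manufacturing BOM: the same parts regrouped by assembly step,
# each line still referring to the EBOM part it consumes.
mbom = {
    "STEP-10 pre-assembly": [BomLine("MOTOR-01", 1), BomLine("SCREW-M4", 8)],
    "STEP-20 final assembly": [BomLine("DRUM-02", 1), BomLine("SCREW-M4", 4)],
}


def reconcile(ebom: list[BomLine], mbom: dict[str, list[BomLine]]) -> dict[str, int]:
    """Return part_id -> (EBOM quantity - MBOM quantity) for every mismatching part."""
    required = Counter()
    for line in ebom:
        required[line.part_id] += line.quantity
    consumed = Counter()
    for lines in mbom.values():
        for line in lines:
            consumed[line.part_id] += line.quantity
    parts = set(required) | set(consumed)
    # Counter returns 0 for unknown keys, so parts present on one side only are handled too.
    return {p: required[p] - consumed[p] for p in sorted(parts) if required[p] != consumed[p]}


print(reconcile(ebom, mbom))
```

An empty result means every engineering quantity is consumed in some manufacturing step; a positive number flags parts the MBOM still misses, a negative number parts it consumes in excess.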

I am curious to learn more about their progress in the upcoming years. The vision is there; the transformation is significant, but they have the time to succeed! This could become another digital transformation example.

And more …

Besides some educational sessions by Dassault Systèmes (Laurent Bertaud – NETVIBES data science), there were other interesting customer testimonies from Fernando Petre (IAR80 – Fly Again project), Christian Barlach (ISC Sustainable Construction) and Thelma Bonello (Methode Electronics – end-to-end BOM infrastructure). All sessions helped to get a better understanding of what is possible and what is being done in the domain of PLM.

Conclusion

I learned a lot during these days, particularly about the virtual twin strategy and the related capabilities of data intelligence. As the event was also a reunion with many people from my network, I discovered that we all aim at a digital transformation. We have a mission and a vision. The upcoming years will be crucial for implementing the mission and realizing the vision. It will be the early adopters like Renault pushing Dassault Systèmes to deliver. I hope to stay tuned. You too?

NOTE: Dassault Systèmes covered some of the expenses associated with my participation in this event but did not in any way influence the content of this post.

It has been busy recently in the context of the PLM Global Green Alliances (PGGA) series: PLM and Sustainability, where we interview PLM-related software vendors, discussing their sustainability mission and offering.

We talked with SAP, Autodesk, and Dassault Systèmes, and last week with Sustaira. This time, the discussion was with the team from Aras. Aras is known as a non-traditional PLM player, having the following slogan on their website:

It is a great opening statement for our discussion. Let’s discover more.

Aras

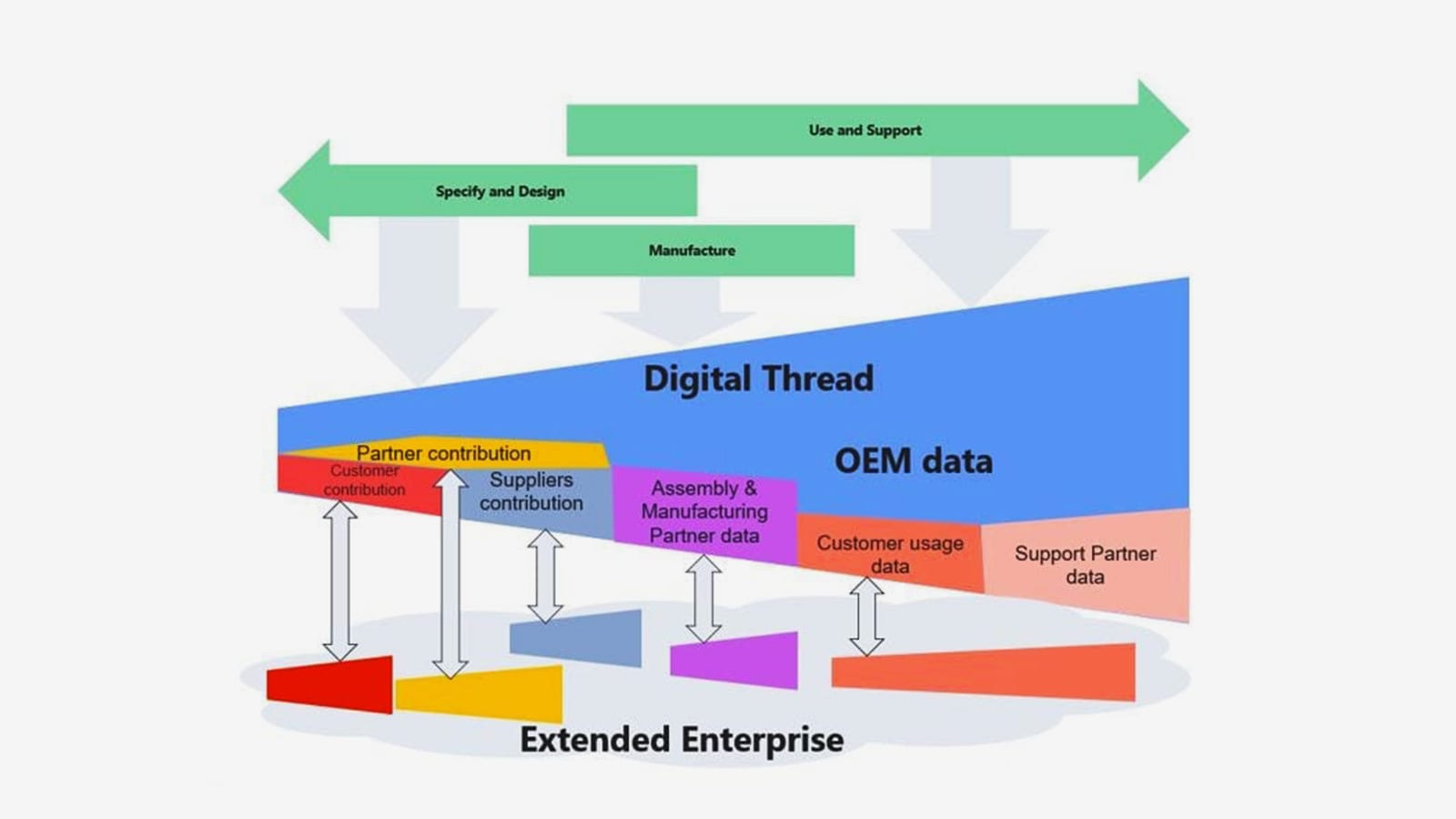

The discussion was with Patrick Willemsen, Director of Technical Community EMEA, and Matthias Fohrer, Director of Global Alliances EMEA at Aras. It was an interesting interview because, as we discussed, Aras focuses on the digital thread, connecting data from all sources with an infrastructure designed to support a company in its PLM domain.

As I mentioned in a previous blog post, PLM and Sustainability: if we want to work efficiently on Sustainability, we need a data-driven and connected infrastructure.

This made the discussion interesting to follow. Please watch or listen to the 30-minute conversation below.

Slides shown during the interview and additional company information can be found HERE.

What we have learned

There were several interesting points in our discussion where we were aligned; first of all, the sustainable value of bringing your solutions to the cloud.

We discussed Sustainability and the cloud, and this week it was interesting to read McKinsey’s post The green IT revolution: A blueprint for CIOs to combat climate change, which contains this quote:

“Moving to the cloud has more impact than optimizing data centers.” The article is quite applicable to Aras.

Next, I liked the message that it is all about collaboration between different parties.

As Matthias mentioned, nobody can do it on their own. According to Aras’ studies, 70% see Sustainability as an important area in which to improve. Partnerships are crucial, as well as digital connections between the stakeholders. It is a plea for systems thinking in a connected manner, linking to existing material libraries.

The third point on which we were aligned is that PLM and Sustainability are a learning journey. As Patrick explained, it is about embracing the circular economy and learning step by step.

Want to learn more?

Aras has published several white papers and surveys and hosted webinars related to Sustainability. Here are a few of them:

Aras Survey Challenges 2022: From Sustainability to Digitalization

White Paper: The Circular Economy as a Model for the Future

Webinar: Greener Business, PLM, Traceability, and Beyond

Webinar: How PLM Paves the Way for Sustainability

Blog: The Circular Economy as a Model for the Future

Conclusions

It is clear that Aras provides an infrastructure for a connected enterprise. They combine digital PLM capabilities with the option to extend their reach by supporting sustainability-related processes, like systems thinking and lifecycle assessments. And as they mention, no one can do it alone; we depend on collaboration and learning among all stakeholders.

One more week to go. Join us if you can!

After a short summer break with almost no mention of the word PLM, it is time to continue this series of posts exploring the future of “connected” PLM. For those who also started with a cleaned-up memory, here is a short recap:

In part 1, I rushed through more than 60 years of product development, starting from vellum drawings and ending with the current PLM best practice for product development, the item-centric approach.

In part 2, I painted a high-level picture of the future, introducing the concept of digital platforms, which, if connected wisely, could support the digital enterprise in all its aspects. The five platforms I identified start with the ERP and CRM platforms (the oldest domains).

Next come the MES and PIP platforms (modern domains to support manufacturing and product innovation in more detail) and the IoT platform (needed to support connected products and customers).

In part 3, I explained what data-driven means and how it is closely connected to a model-based approach. Here we abandon documents (electronic files) as active information carriers. Documents will remain, however, as reports, baselines, or information containers. In that post, I ended up with seven topics related to data-driven, which I will discuss in upcoming posts.

Hopefully, by describing these topics – and for sure, there are more related topics – we will better understand the connected future and make decisions to enable the future instead of freezing the past.

Topic 1 for this post:

Data-driven does not imply that there needs to be a single environment, a single database that contains all information. As I mentioned in my previous post, it will be about managing connected datasets in a federated way. It is no longer about owning the data; it is about access to reliable data.
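What "access instead of ownership" could look like is sketched below in Python, assuming each system keeps its own records and a federated view resolves an item at query time instead of copying everything into one database. The `DataSource` and `FederatedView` classes, the sample part data and the rule that the first source in the list wins for overlapping attributes are illustrative assumptions, not any vendor's API.

```python
from dataclasses import dataclass, field


@dataclass
class DataSource:
    """A hypothetical system that keeps ownership of its own records."""
    name: str
    records: dict[str, dict[str, object]] = field(default_factory=dict)

    def lookup(self, part_id: str) -> dict[str, object]:
        # Return a copy so consumers cannot modify the owning system's data.
        return dict(self.records.get(part_id, {}))


@dataclass
class FederatedView:
    """Resolves an item across sources at query time instead of storing it centrally."""
    sources: list[DataSource]

    def resolve(self, part_id: str) -> dict[str, tuple[object, str]]:
        view: dict[str, tuple[object, str]] = {}
        for source in self.sources:
            for attribute, value in source.lookup(part_id).items():
                # Assumed rule: the first source listed wins; remember who owns the value.
                view.setdefault(attribute, (value, source.name))
        return view


plm = DataSource("PIP", {"P-100": {"description": "Pump housing", "revision": "B"}})
erp = DataSource("ERP", {"P-100": {"cost": 12.5, "revision": "A"}})
view = FederatedView([plm, erp])
print(view.resolve("P-100"))
```

Each value carries the name of the system that owns it, so the consumer knows where the authoritative data lives. A real federation would also need access control, caching and a governance rule for conflicts such as the differing revisions in this example.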

Platform or a collection of systems?

One of the first (marketing) hurdles to take is understanding the difference between a data platform and a collection of systems that work together but are sold as a platform.

In 2017, CIMdata published an excellent whitepaper positioning the PIP (Product Innovation Platform): Product Innovation Platforms: Definition, Their Role in the Enterprise, and Their Long-Term Viability. CIMdata’s definition is extensive and covers the full scope of product innovation. Of course, you can also find platforms that start from a more focused process.

For example, look at OpenBOM (focus on BOM collaboration), Onshape (focus on CAD collaboration) or even Microsoft 365 (historically, document-based collaboration).

The idea behind a platform is that it provides basic capabilities connected to all stakeholders, inside and outside your company. In addition, to avoid limiting these capabilities, a platform should be open and able to connect with other data sources that might be available either locally or centrally.

From these characteristics, it is clear that the underlying infrastructure of a platform must be based on a multitenant SaaS infrastructure, still allowing local data to be connected and shielded for performance or IP reasons.

The picture below describes the business benefits of a Product Innovation Platform as imagined by Accenture in 2014.

Link to CIMdata’s 2014 commentary on Digital PLM HERE

Sometimes vendors sell their suite of systems as a platform. This is a marketing trick, because when you want to add functionality to your PLM infrastructure, you need to install a new system and create or use interfaces with the existing systems, which is not really a scalable environment.

In addition, sometimes, the collaboration between systems in such a marketing platform is managed through proprietary exchange (file) formats.

We have seen this practice in the construction industry before cloud connectivity became available. However, a so-called end-to-end solution that works on PowerPoint requires a lot of human intervention when implemented in real life.

Not a single environment

There has always been the debate:

“Do I use best-in-class tools, supporting the end-user of the software, or do I provide an end-to-end infrastructure with more generic tools on top of that, focusing on ease of collaboration?”

In the system approach, the focus was mostly on best-in-class tools, with PLM systems providing the data governance. A typical example is the item-centric approach. It reflects the current working culture: people working in their optimized silos, exchanging information between disciplines through (neutral) files.

The platform approach makes it possible to deliver an optimized user interface for the end-user through a dedicated app, assuming the data needed for such an app is accessible from the current platform or through other systems and platforms.

For a platform provider, it might be tempting to add every imaginable data element to its platform infrastructure. The challenge with this approach is whether all data should be stored in a central data environment (preferably cloud) or federated. And what about filtering IP?

In my post PLM and Supply Chain Collaboration, I described the concept of having an intermediate hub (ShareAspace) between enterprises to facilitate real-time data sharing, while carefully filtering which data is shared in the hub.
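The filtering idea can be illustrated with a small Python sketch, assuming a per-partner allow-list of attributes and a default of sharing nothing. The partner names, attributes, the `SHARING_POLICY` table and the `publish_to_hub` function are hypothetical; this is not ShareAspace's actual mechanism.

```python
from collections.abc import Mapping

# Hypothetical policy: which attributes each partner may see in the shared hub.
SHARING_POLICY: dict[str, set[str]] = {
    "supplier-a": {"part_id", "revision", "material", "mass_kg"},
    "customer-b": {"part_id", "revision"},
}


def publish_to_hub(item: Mapping[str, object], partner: str) -> dict[str, object]:
    """Return only the attributes the partner is allowed to see; unknown partners get nothing."""
    allowed = SHARING_POLICY.get(partner, set())
    return {key: value for key, value in item.items() if key in allowed}


item = {
    "part_id": "P-100",
    "revision": "B",
    "material": "AlSi10Mg",
    "mass_kg": 1.8,
    "cost": 12.5,                          # commercial data, never shared
    "process_notes": "internal know-how",  # IP, never shared
}
print(publish_to_hub(item, "customer-b"))
```

Defaulting to an empty allow-list means a partner that is not configured receives nothing, which is the safer failure mode when IP is involved.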

It should be clear that storing everything in one big platform is not the future. As I described in part 2, in the end, a company might implement a maximum of five connected platforms (CRM, ERP, PIP, IoT and MES). Each of these platforms could contain a core data model relevant to its part of the business. This does not mean there will be no other platforms in the future. Platforms focusing on supply chain collaboration, like ShareAspace or OpenBOM, will have a value proposition too. In the end, the long-term future is all about realizing a digital thread of information within the organization.

Will we ever reach a perfectly connected enterprise or society? Probably not, not because of technology but because of politics and human behavior. The connected enterprise might be the most efficient architecture, but will it be social, supporting all humanity? Predicting the future is impossible, as Yuval Harari described in his book 21 Lessons for the 21st Century. It is worth reading, although it remains a collection of ideas.

Proprietary data model or standards?

So far, when you are a software vendor developing a system, there has been no restriction on how you manage your data internally. In the domain of PLM, this means that every vendor has its own proprietary data model and behavior.

I have learned from my 25+ years of experience with systems that the original design of a product, combined with the vendor’s culture, defines the future roadmap. So even if a PLM vendor rewrote all its software to become data-driven, the ways of working and the assumptions would still be based on past experiences.

This makes it hard to arrive at unified data models and a methodology valid for our PLM domain. However, large enterprises like Airbus, Boeing and the major automotive suppliers have always pushed for standards, as they benefit the most from standardization.

The recent PDT conferences were an example of this, particularly the 2020 Fall conference, where several Aerospace & Defense PLM Action Groups reported their progress.

You can read my impression of this event in The weekend after PLM Roadmap / PDT 2020 – part 1 and The next weekend after PLM Roadmap PDT 2020 – part 2.

It would be interesting to see a Product Innovation Platform built on a data model aligned as much as possible with existing standards. It probably won’t happen, as a software vendor does not make money from being open and complying with standards. Still, companies should push their software vendors to support standards, as this is the only way to get larger connected eco-systems.

I do not believe in the toolkit approach where every company can build its own data model based on its current needs. I saw this flexibility with SmarTeam in the early days. However, it became an upgrade risk when new, overlapping capabilities were introduced that did not match the past implementations.

In addition, a flexible toolkit still requires a robust data model design done by experienced people who have learned from their mistakes.

The benefit of using standards is that they contain the lessons learned by the many people involved.

Conclusion

I did not enjoy writing this post much, as my primary PLM focus lies on people and methodology. Still, understanding future technologies is important. Therefore, this time, a not-so-exciting post. There is plenty to read on the internet about PLM technology; see some of the recent articles below. Enjoy!

Matthias Ahrens shared: Integrated Product Lifecycle Management (Google translated from German)

Oleg Shilovitsky wrote numerous articles related to technology; in this context:

3 Challenges of Unified Platforms and System Locking

SaaS PLM Acceleration Trends

Last week, I wrote about day 1 of the recent PLM Road Map & PDT Spring 2021 conference, which focused mainly on technology, although there were also interesting sessions exploring future methodologies for a digital enterprise. Day 2 started with two sessions related to people and methodology, which are indispensable when discussing PLM topics.

Designing and Keeping Great Teams

This keynote speech from Noshir Contractor, Professor of Behavioral Sciences in the McCormick School of Engineering & Applied Science, intrigued me because of its subtitle: Lessons from Preparing for Mars. What Can PLM Professionals Learn from This?

You might ask yourself: is a PLM implementation as difficult and as complex as a mission to Mars? With that question in mind, I followed Noshir’s presentation with great interest.

Noshir started by mentioning that many disruptive technologies have emerged in recent years, like Teams, Slack, Yammer and many more.

The interesting question he asked in the context of PLM is:

As the domain of PLM is all about optimizing collaboration, this is a fair question.

Noshir shared that what matters most is not people’s individual skills but who they know.

Measuring who they work with is more important than measuring who they are.

Based on this statement, Noshir showed some network patterns of different types of networks.

It is clear from these patterns how organizations communicate internally and/or externally. It would be an interesting exercise to perform in a company and to see if the analysis matches the perceived reality.

Noshir’s research was used by NASA to analyze and predict the right teams for a mission to Mars.

Noshir went further by proposing what PLM can learn from teams that are going into space. And here, I was not sure about the parallel. Is a PLM project comparable to a mission to Mars? I hope not! I have always advocated that a PLM implementation is a journey. Still, I never imagined that it could be a journey into the remote unknown.

Noshir explained that they had built tools based on their scientific model to describe and predict how teams could evolve over time. He believes that society can also benefit from these learnings. Many inventions from the past were driven by innovations coming from space programs.

I believe Noshir’s approach related to team analysis is much more critical for organizations with a mission. How do you build multidisciplinary teams?

The proposed methodology is probably best suited to a holacracy-based organization. Holacracy is an interesting concept for getting employees committed; however, it also demands a type of involvement that not every person can deliver. For me, coming back to PLM as a strategy to enable collaboration, the effectiveness of collaboration depends very much on the organizational culture and the structure that has been created.

DISRUPTION – EXTINCTION or still EVOLUTION?

We talk a lot about disruption because disruption is a painful process that you do not want to happen to yourself or your company. In the context of this conference’s theme, I discussed the awareness that disruptive technologies will change the PLM value equation.

A disruption like the switch from mini-computers to PCs (which killed DEC) or from Symbian to iOS (which killed Nokia) is therefore not likely to happen that fast. Still, there is a need to benefit from these new disruptive technologies.

My presentation focused on describing the path of evolution and the focus areas for the PLM community. Doing nothing means extinction; experimenting and learning towards the future provides an evolutionary path.

Starting from the acknowledgment that most of the data produced today is incompatible with the data needed in the future, I explained my theme: From Coordinated to Connected. As a PLM community, we should spend more time together in focus groups and conferences, describing and verifying methodology and best practices.

Nigel Shaw (EuroStep) and Mark Williams (Boeing) hinted in this direction during this conference (see day 1). Erik Herzog (SAAB Aeronautics) brought this topic to last year’s conference (see day 3). Outside this conference, I have comparable touchpoints with Martijn Dullaert when discussing the future of Configuration Management in relation to PLM.

In addition, this decade will probably be the most disruptive decade in human history, due to external forces pushing companies to change. Sustainability regulations from governments (the Paris agreement) and the implementation of circular economy concepts, combined with a positive and high Total Shareholder Return, will push companies to adapt more radically than before.

What is clear is that disruptive technologies and concepts, like Industry 4.0, Digital Thread and Digital Twin, can serve a purpose when implemented efficiently, ensuring the business becomes sustainable.

Due to the lack of end-to-end experience, we need focus groups and conferences to share progress and lessons learned. And we do not need isolated vendor success stories as references here, as they are often siloed again and lead to proprietary environments.

You can see my full presentation on SlideShare: DISRUPTION – EXTINCTION or still EVOLUTION?

Building a profitable Digital T(win) business

Beatrice Gasser, Technical, Innovation, and Sustainable Development Director from the Egis group, gave an exciting presentation related to the vision and implementation of digital twins in the construction industry.

The Egis group serves both as a consultancy firm and as an asset management organization. You can see a wide variety of activities on their website or have a look at their perspectives.

Historically, the construction industry has lagged behind, with low productivity due to fragmentation, risk aversion and, more recently, a growing lack of digital talent. In addition, some construction companies make their money from claims instead of from a smooth and profitable business model.

Without innovation in the construction industry, companies working the traditional way will lose market share and investor attention, as the BCG diagram I discussed in my session shows.

The digital twin of construction is an ideal concept for the future. It can be built in the design phase to align all stakeholders, validate and integrate solutions, and simulate the building’s operational scenarios at almost zero material cost. Egis estimates that by using a digital twin during construction, the engineering and construction costs of a building can be reduced by 15 to 25%.

More importantly, the digital twin can also be used to first simulate operations and optimize energy consumption. The connected digital twin of an existing building can serve as a new common data environment for future building stakeholders. This could be the asset owner, service companies, and even the regulatory authorities needing to validate the building’s safety and environmental impact.

Beatrice ended with five principles essential to establishing a digital twin.

I think the construction industry has vast potential to disrupt itself, faster than the traditional manufacturing industries, due to its current need to work in a best-connected manner.

Next, there is almost no legacy data to deal with for these companies. Every new construction or building is a unique project on its own. The key differentiators will be experience and efficient ways of working.

It is about the belief, the guts and the skilled people that can make it work – all for a more efficient and sustainable future.

Leveraging PLM and Cloud Technology for Market Success

Stan Przybylinski, Vice President of CIMdata, presented the results of CIMdata’s global survey on the cloud, completed in early 2021. Stan also characterized Industry 4.0 as a connected vision, with cloud and digital thread as enablers for implementing this vision.

The companies interviewed showed a lot of goodwill to make progress. CIMdata is also working with PLM vendors to better understand and describe the areas of benefit. I remain curious about who will come up with a realization and business case that is future-proof. This will define our new PLM Value Equation.

Conclusion

These were two exciting days with plenty of mentions of disruptive technologies. Our challenge in the PLM domain will be to give them a purpose. That purpose is likely driven by external factors related to the need for a sustainable future. Efficiency and effectiveness must come from learning to work in connected environments (digital twin, digital thread, Industry 4.0, Model-Based (Systems) Engineering).

Note: You might have seen the image below already – a nice link between sustainability and the mission to Mars

After “The PLM Doctor is IN #2,” here is again a written post in the category of PLM and complementary practices/domains.

After PLM and Configuration Lifecycle Management (CLM) (January 2021) and PLM and Configuration Management (CM) (February 2021), it is now time to address the third interesting topic:

PLM and Supply Chain collaboration.

In this post, I am speaking with Magnus Färneland from Eurostep, a company well known in my PLM ecosystem, through their involvement in standards (STEP and PLCS), the PDT conferences, and their PLM collaboration hub, ShareAspace.

Supply Chain collaboration

The interaction between OEMs and their suppliers has been a topic of particular interest to me. As a warm-up, read my post following the CIMdata/PDT Roadmap 2020 conference: PLM and the Supply Chain. In that post, I briefly touched on the Eurostep approach of having a Supply Chain Collaboration Hub. In the image from that post, the Collaboration Hub is positioned between two OEMs.

Recently, Eurostep shared a blog post in the same context, “3 Steps to remove data silos from your supply chain,” addressing the dream of many companies: moving from disconnected information silos towards a logical flow of data. This topic is relevant for all companies going through a digital transformation with their supply chain. So, let us hear it from Eurostep.

Eurostep – the company / the mission

First of all, can you give a short introduction to Eurostep as a company and the unique value you are offering to your clients?

Eurostep was founded in 1994 by several world-class experts on product data and information management. In the year 2000, we started developing ShareAspace. We took all the experience we had from working with collaboration in the extended enterprise, mixed it with our standards knowledge, and selected Microsoft as the technology for our software platform.

We now offer ShareAspace as a solution for product information collaboration in all three industry verticals where we are active: Manufacturing, Defense and AEC & Plant.

ShareAspace is based on an information standard called PLCS (ISO 10303-239). This means we have a data model covering the complete life cycle of a product, from requirements and conceptual design to an existing installed base. We have added what was needed, such as consolidation and security. Our partnership with Microsoft has also resulted in ShareAspace being available in Azure as a service (our Design to Manufacturing software).

Why a supply chain collaboration hub?

Currently, most suppliers work in a disconnected manner with their clients, sending files back and forth or having to work inside the OEM environment. What are the reasons to consider a supply chain collaboration hub or, as you call it, a product information collaboration solution?

The hub concept is not new per se. There are plenty of examples of file sharing hubs. Once you realize that sending files back and forth by email is a disaster for keeping control of your information being shared with suppliers, you would probably try out one of the available file-sharing alternatives.

However, after a while, you begin to realize that a file share can be quite time-consuming to keep up to date. Files are being changed. Files are being removed! Some files are enormous, and you realize that you only need a fraction of what is in them. References within one file to another file become corrupt because the other file has a new version. Etc. Etc.

This is about the time when you realize that you need the same control over the data you share with suppliers as you have in your internal systems, if not better.

A hub allows all partners to continue to use their internal tools and processes. It is also a more secure way of collaboration as the suppliers and partners are not let into the internal systems of the OEM.

Another significant side effect is that you only expose the data intended for external sharing in the hub, avoiding sharing too much or exposing sensitive internal data.

A hub also supports business flexibility, as partners are not hardwired to the OEM. Partners can change, and IT systems in the value chain can change, without impacting more than the single system’s connection to the hub.
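To make the hub argument a bit more tangible, here is a toy Python model. It is my own illustration, not ShareAspace’s API or data model: the names `Item`, `Hub`, the `shared` flag, and the two counting functions are hypothetical. It shows the points Magnus makes: each partner has a single connection to the hub, replacing a partner only touches that connection, and only data flagged for external sharing ever reaches the hub. The integration counts are general hub-and-spoke arithmetic (an assumption for illustration, not a Eurostep figure): a full point-to-point mesh of n systems needs n(n-1)/2 integrations, a hub needs n.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    item_id: str
    version: int
    shared: bool  # hypothetical flag: True only if intended for external sharing


@dataclass
class Hub:
    """Toy collaboration hub: stores only data explicitly published for sharing."""
    items: dict = field(default_factory=dict)
    partners: set = field(default_factory=set)

    def connect(self, partner: str) -> None:
        # Each partner system needs exactly one connection: to the hub.
        self.partners.add(partner)

    def disconnect(self, partner: str) -> None:
        # Replacing a partner only touches that partner's single connection.
        self.partners.discard(partner)

    def publish(self, partner: str, items: list) -> int:
        """Publish items to the hub; returns how many were actually shared."""
        if partner not in self.partners:
            raise PermissionError(f"{partner} is not connected to the hub")
        published = 0
        for item in items:
            if item.shared:  # internal-only data never leaves the owner's system
                self.items[item.item_id] = item
                published += 1
        return published


def integrations_point_to_point(n_systems: int) -> int:
    # Fully meshed: every pair of systems needs its own integration.
    return n_systems * (n_systems - 1) // 2


def integrations_via_hub(n_systems: int) -> int:
    # Hub-and-spoke: one integration per system.
    return n_systems


if __name__ == "__main__":
    hub = Hub()
    hub.connect("OEM")
    hub.connect("Supplier A")
    count = hub.publish("OEM", [Item("P-100", 2, True), Item("P-200", 1, False)])
    print(count)                            # 1: the internal-only item is not shared
    print(integrations_point_to_point(10))  # 45
    print(integrations_via_hub(10))         # 10
```

The design choice worth noting is that filtering happens at publication time, so the hub never holds internal data in the first place, which mirrors the "expose only what is intended for sharing" argument rather than relying on access rules afterwards.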

Should every company implement a supply chain collaboration hub?

Based on your experience, what types of companies should implement a supply chain collaboration hub and what are the expected benefits?

Large OEMs and 1st-tier suppliers certainly benefit from this, since they can involve hundreds, if not thousands, of suppliers. Sharing technical data across the supply chain from a dedicated hub will remove confusion, improve control of the shared data, and build trust with their partners.

With our cloud-based offering, we now also make it possible for mid-sized companies (around 200+ employees) to use ShareAspace. They may not have a well-adopted PLM system or face the issues of communicating complex specifications originating from several internal sources. However, they still need to be professional in dealing with suppliers.

The smallest client we have is a manufacturer of pool cleaners, a complex product with many suppliers. The company Weda [www.weda.se] has fewer than 10 employees, and they use ShareAspace as SaaS. With ShareAspace, they have improved their collaboration with suppliers, cut costs, and lowered inventory levels.

ShareAspace can really scale big. It serves as the collaboration solution for the UK’s two new Queen Elizabeth-class aircraft carriers. The carriers were built by a consortium that closed in early 2020.

ShareAspace is being used to hold the design data and other documentation from the consortium to be available to the multiple organizations (both inside and outside of the Ministry of Defence) that need controlled access.

What is the dependency on standards?

I always associate Eurostep with the PLCS (ISO 10303-239) standard, providing an information model for “hardware” products along the lifecycle. How important is this standard for you in the context of your ShareAspace offering?

Should everyone adopt this standard?

We have used PLCS to define the internal data schema in ShareAspace. This is an excellent starting point for capturing information from different systems and domains and still getting it to fit together. Why invent something new?