Three weeks ago, we published our first PLM Global Green Alliance interview discussing the relationship between PLM and Sustainability with the main vendors. We talked with Darren West from SAP.

You can find the interview here: PLM and Sustainability: talking with SAP.

When we published the interview, it was also the moment a Russian dictator started the invasion of Ukraine, making it difficult for me to focus on our sustainability mission, having friends in both countries.

Now, three weeks later, with even more horrifying news coming from Ukraine, my thoughts are with the heroic people there, who resist and fight for their lives to exist. And it is not only in Ukraine. Also, people suffering under other totalitarian regimes are fighting this unfair battle.

Meanwhile, another battle that concerns us all might get stalled if the conflict in Ukraine continues. This decade requires us to focus on the transition towards a sustainable planet, where the focus is on reducing carbon emissions. It is clear from the latest IPCC report, Impacts, Adaptation and Vulnerability, that we need to act.

Autodesk

Therefore, I am happy we can continue our discussion on PLM and Sustainability, this time with Autodesk. In the conversation with SAP, we discovered SAP’s strength lies in measuring the environmental impact of materials and production processes. However, most (environmental) impact-related decisions are made before the engineering & design phase.

Autodesk is a well-known software company in the Design & Manufacturing industry and the AEC (Architecture, Engineering and Construction) industry.

Autodesk was open to sharing its sustainability activities with us. We spoke with Zoé Bezpalko, Autodesk’s Sustainability Strategy Manager for the Design & Manufacturing Industries, and Jon den Hartog, Product Manager for Autodesk’s PDM and PLM solutions, so we were talking with the right people for our PLM Global Green Alliance.

Watch the 30-minute recording below, learn more about Autodesk’s sustainability goals and offerings, and get motivated to (re)act.

The slides shown in this presentation can be downloaded HERE

What we have learned

The interview showed that Autodesk is actively working on a sustainable future, both by acting internally and, even more important, by helping its customers to have a positive impact, using technologies like generative design and supporting more environmentally friendly building projects. We talked about the renovation project of our famous Dutch Afsluitdijk.

The second observation is that Autodesk is working on empowering the designer to make better decisions regarding material usage or reuse. Life Cycle Assessment done by engineers will be a future required skill. As we discussed, this bottom-up user empowerment should be combined with a company strategy.

Want to learn more?

As you can see from the image shown in the recording, there is a lot to learn about Autodesk Forge. Click on the image for your favorite link, or open the PDF connected to the recording for your sustainability plans.

And there is the link to the Autodesk sustainability hub: Autodesk.com/sustainability

Conclusion

This was a motivating session to see Autodesk acting on Sustainability, and they are encouraging their customers to act.

It is necessary that companies and consumers get motivated and supported for more sustainable products and activities. We look forward to coming back with Autodesk in a second round with the PLM vendors to discover and discuss progress.

To prevent software geeks from getting curious about the title: in this context, ALM means Asset Lifecycle Management. In 2008 I was active for SmarTeam, promoting PLM concepts relevant for Asset Lifecycle Management. The focus was on PLM being complementary to asset operation management (EAM – Enterprise Asset Management and MRO – Maintenance, Repair and Overhaul).

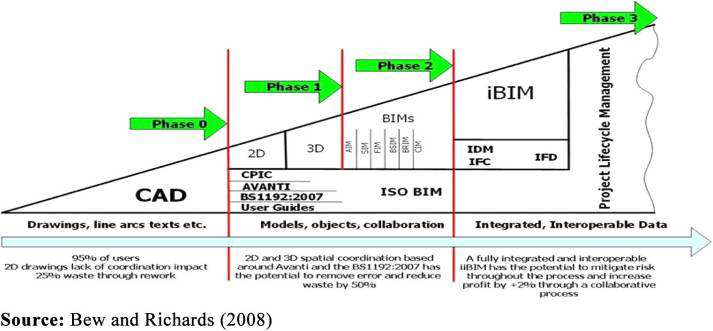

This topic has become relevant for me in the past two months, having discussed and seen (at PDT) the concepts of a model-based approach for assets and constructions. PLM, ALM, and BIM converge conceptually. Every year I give a one-day update from the field for students doing a master’s in PLM & BIM on top of their engineering/architectural background. Five years ago, there was no mention of BIM; now the ratio of BIM-oriented students has become significant. For me, it is always great to see young students willing to learn PLM or BIM on top of their own skillset. Read more about this particular Master class in French when you click on the logo to the left.

In 2012 I started to explain PLM benefits to EPC companies (Engineering, Procurement, Construction), targeting a more profitable and efficient delivery of their constructions (oil platform, plant, building, infrastructure). The simplified reasoning behind using PLM was related to more efficient and higher-quality multidisciplinary collaboration, reducing costly fixes during construction, and smoothing the intensive process of data handover.

More and more in the process industry, standards, like ISO 15926 (Process Industry) and ISO 19650 (BIM – mainly in the UK), became crucial. At that time, it was difficult to convince companies to focus on the horizontal-integrated process instead of dedicated, disconnected tools. Meanwhile, this has changed, thanks to the Digital Twin hype. Let’s have a look.

PLM and ALM

The initial value for using PLM concepts complementary to MRO systems came from the fact that MRO systems are mainly focusing on plant operations. You could compare these systems with ERP systems for manufacturing companies, focusing on execution and continuous operation. Scheduled maintenance and inspections are also driven by the MRO system. Typical MRO systems are Maximo and SAP PM. PLM could deliver configuration management, linking the design intent to the physical implementation, and therefore provide higher data quality, visibility, and traceability of the asset history.

In 2010, I shared these concepts in two posts: Asset Lifecycle Management using a PLM-system and PLM for Asset Lifecycle Management and Asset Development based on lessons learned with some (nuclear) plant owner/operators. They started to discover the need for configuration management to ensure data quality for operations. In 2010-2014 the business case for using PLM complementary to MRO was data quality and therefore reduced downtime when executing large maintenance programs (dependencies between the individual projects were not visible without PLM).

In MRO-systems, like in ERP-systems, the data for execution is based on information coming from various engineering sources – specifications, PFDs, P&IDs. Questions owner/operators ask themselves are:

- What are the designed operational settings?

- Are the asset parameters currently running as designed?

- What is the optimized maintenance period?

- Can we stretch maintenance intervals?

- Can we reduce inspections?

- Can we reduce downtime for maintenance and overhaul?

- What about predictive maintenance?

Most of these questions are answered by experts who use their tacit knowledge and experience to give the best answers so far. And when the answers were wrong, they were accepted as new learning points. Next time we won’t make this mistake, and the experts become even more knowledgeable.

Now, these questions could be answered if you can model your asset in a virtual environment. In the virtual world, you would use simulation models, logical models, and 3D Models to describe the asset. This is where Model-Based Systems Engineering practices are used. However, these models need to be calibrated based on reality. And that is where IoT and Asset Operation Monitoring come in, connecting physical behavior with virtual predicted behavior. You can read more about this relationship in my post: Will MBSE the new PLM instead of IoT?
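To make the calibration idea a bit more tangible, here is a minimal sketch in Python. Everything in it is an assumption for illustration only: the pump-like asset, the linear model (flow = gain × speed), the field names, the design value and the 5 % tolerance are all hypothetical and do not come from any specific MRO, IoT or PLM product. It fits the model parameter to measured readings and then answers the question "Are the asset parameters currently running as designed?"

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Reading:
    """One sensor sample from the asset in operation (hypothetical fields)."""
    speed_rpm: float
    flow_m3h: float


def calibrate_gain(readings: list[Reading]) -> float:
    """Least-squares fit of flow = gain * speed (line through the origin)."""
    numerator = sum(r.speed_rpm * r.flow_m3h for r in readings)
    denominator = sum(r.speed_rpm ** 2 for r in readings)
    if denominator == 0:
        raise ValueError("need at least one reading with non-zero speed")
    return numerator / denominator


def runs_as_designed(design_gain: float, calibrated_gain: float,
                     tolerance: float = 0.05) -> bool:
    """True when the calibrated gain is within a relative tolerance of design."""
    return abs(calibrated_gain - design_gain) / design_gain <= tolerance


DESIGN_GAIN = 0.50  # assumed design intent: m3/h per rpm (illustrative value)

# Illustrative readings; in practice these would come from asset monitoring.
readings = [
    Reading(1000, 450.0),
    Reading(1200, 540.0),
    Reading(1500, 675.0),
]

calibrated = calibrate_gain(readings)
print(f"calibrated gain: {calibrated:.3f}")
print("running as designed:", runs_as_designed(DESIGN_GAIN, calibrated))
```

In this made-up data set the asset delivers less flow than designed, so the check fails. The point is only the loop: design intent in the virtual model, measurements from the physical asset, and a comparison between the two.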

PLM and BIM

In 2014, I started to discuss PLM concepts with EPC-companies (Engineering, Procurement, and Construction), mainly in the Oil & Gas industry. Here excellent asset development tools (AVEVA, Intergraph, Bentley) are the standard, and as the purpose of an EPC company is to deliver a plant or platform, each software tool has its purpose and there is no lifecycle strategy. The value PLM could bring was providing a program overview (complementary with Primavera), standardization, multidisciplinary coordination and visibility across projects to capture knowledge.

Most of the time, the EPC companies did not see the value of optimizing themselves, as the current way of working was accepted in the process, even though their productivity and their cost of poor quality (fixing during construction/commissioning) were absurd (10-20 % of the project budget). Cultural change – think longer instead of fix later – was hard to explain. In the end, the EPC was not responsible for operations, so why bother that much?

My blog posts: PLM for all Industries and 2014 – the year that the construction industry did not discover PLM illustrate the challenge at that time. None of the EPCs and construction companies recognized that improving collaboration based on information continuity (not data-driven yet) could bring significant benefits, despite their relatively low profit margin (1-3 % is considered excellent). Breaking the silos is too.

Two recent trends, however, changed the status quo that existed.

First of all, more and more, the owner/operator does not want to be responsible for the maintenance and operations of the asset. The typical EPC-companies have now become DBO-companies (Design, Build and Operate). This requires lifecycle thinking from these companies, as most of the costs of an asset are incurred during its maintenance and operation phase.

Advanced Thinking (read: (Model-Based) Systems Engineering) can help these companies to shift their focus to a more sustainable design of the asset for the future and get rewarded for that. In the old EPC-model, the target was “just” to deliver as specified.

A second significant trend is the availability of cloud infrastructure for the construction world. A cloud infrastructure does not require considerable investment from the stakeholders in a construction project. By introducing BIM in a common data environment (CDE), an infrastructure comparable to PLM is created, and the Maintenance-and-Operate stakeholder is likely eager to have the full virtual definition there for the future.

Read more about BIM and CDE for example, here: CDE – strategic BIM process tool.

Of course, technology and standards are there to collaborate. Now it is up to the stakeholders involved to develop new skills for collaboration (learn or hire) and implement them through new ways of working. A learning process can never be pushed by a big-bang, so make sure your company operates in two modes while learning.

As I mentioned, the Maintenance-and-Operate stakeholders or, in traditional cases, the Owner/Operators are incredibly interested in a well-defined virtual model of the asset. This allows them to analyze and simulate the implementation of fixes and enhancements for the future with an optimum result. Again, we are talking about a digital twin of the asset here.

Conclusion

Even though the digital twin is at the top of the Gartner Hype Cycle, it has already become a vital principle to implement, in particular for substantial, critical assets. For these precious assets, minor inefficiencies in data continuity can still be afforded while learning. From the moment companies have established digital continuity between their virtual and physical assets, the Digital Twin concept can also be profitable (and required) for other industries, in particular when these companies want to deliver their products as a service.

Note: I have been talking a lot this year about the challenges of digital transformation, applied to PLM in particular. During PI PLMx London 2020 on February 3 and 4, I will lead a Think Tank session related to the challenge of connecting your PLM transformation to your executives’ vision (and budget). See you there?

Last week I shared the first impression from my favorite conference, the PLM Roadmap / PDT conference organized by CIMdata and Eurostep. You can read some of the highlights here: The weekend after PLM Roadmap / PDT 2019 Day 1.

Click on the logo to see the full agenda. In this post, I will focus on some of the highlights of day 2.

Chernobyl, The megaproject with the New Arch

Christophe Portenseigne from the Bouygues Construction Group shared with us his personal story about this megaproject, called Novarka. 33 years ago, in 1986, reactor #4 exploded and was confined with an object shelter within six months. This was done with heroic speed, and it was anticipated that the shelter would only last for 20 – 30 years. You can read about this project here.

The Novarka project was about creating a shelter for confinement of the radioactive dust and protection of the existing structures against external actions (wind, water, snow…) for the next 100 years!

Equally necessary, the inside of the arch would be a plant where people could work safely on the process of decommissioning the existing contaminated structures. You can read about the full project here at the Novarka website.

What impressed me the most were the personal stories of Christophe, taking us through some of the massive challenges that needed to be solved with innovative thinking. The project, with its high complexity, vast number of requirements, and many parties and stakeholders involved, closed in June 2019. As Christophe mentioned, this was a project to be proud of as it creates a kind of optimism that no matter how big the challenges are, with human ingenuity and effort, we can solve them.

A Model Factory for the Efficient Development of High Performing Vehicles

Eric Landel, expert leader for Numerical Modeling and Simulation at Renault, gave us an interesting insight into an aspect of digitalization that has become very valuable: the connection between design and simulation to develop products, in this case the Renault CLIO V, as much as possible in the virtual world. You need excellent simulation models to match future reality (and tests). The target of simulation was to get the highest safety test result in the Euro NCAP rating – 5 stars.

The Renault modeling factory implemented a digital loop (below) to ensure that at the end of the design/simulation, a robust design would exist. Eric mentioned that for the Clio, they did not build a prototype anymore. The first physical tests were done on cars coming from the plant. Despite the investment in simulation software, there was a considerable saving in crash part over cost before TGA (Tooling Go Ahead).

Combined with the savings, the process has been much faster than before. From 10 weeks for a simulation loop towards 4 weeks. The next target is to reduce this time to 1 week. A real example of digitization and a connected model-based approach.

From virtual prototype to hybrid twin

ESI’s sponsor session, Evolving from Virtual Prototype Testing to Hybrid Twin: Challenges & Benefits, was an excellent complementary session to the presentation from Renault.

PLM, MBSE and Supply chain – challenges and opportunities

Nigel Shaw’s presentation was one of my favorite presentations, as Nigel addressed the same topics that I have been discussing in the past years. His focus was on collaboration between the OEM and supplier with the various aspects of requirements management, configuration management, simulation and the different speeds of PLM (focus on mechanical) and ALM (focus on software).

How can such activities work in a digitally-connected environment instead of a document-based approach? Nigel looked into the various aspects of existing standards in their domains and their future. There is a direction to MBE (Model-Based Everything) but still topics to consider. See below:

I agree with Nigel: the future is model-based. When it arrives will be the issue for the market leaders.

The ISO AP239 ed3 Project and the Through Life Cycle Interoperability Challenge

Yves Baudier is from AFNET, a reference association in France regarding industry digitalization, digital threads, and digital processes for the Extended Enterprise/Supply chain. It is all about a digital future, and Yves’ presentation was about the interoperability challenge, mentioning three of my favorite points to consider:

- Data becoming more and more a strategic asset – as digitalization of Industry and Services, new services enabled by data analytics

- All engineering domains (from concept design to system end of life) need to develop a data-centric approach (not only model-centric) – an opportunity for PLM to cover the full life-cycle

- Effectiveness and efficiency of data interoperability through the life-cycle is now an essential industry requirement – e.g., “virtual product” and “digital twin” concepts

All the points are crucial for the domain of PLM.

In that context, Yves discussed the evolution of the ISO 10303-239 standard, also known as PLCS. The target with ISO AP239 ed3 is to become the standard for Aerospace and Defense for the full product lifecycle and, through this convergence, to be able to push IT/PLM vendors to comply, which is crucial for a digital enterprise.

Time for the construction / civil industry

Christophe Castaing, director of digital engineering at Egis, shared with us their solution framework to manage large infrastructure projects by focusing on both the Asset Information (BIM-based) and the collaborative processes between the stakeholders, all based on standards. It was a broad and in-depth presentation – too much to share in a blog post. To conclude (see also Christophe’s slide below): in the construction industry, there is more and more the desire to have a digital twin of a given asset (building/construction), creating the need for standard information models.

Pierre Benning, IT director from Bouygues Public Works, gave us an update on the MINnD project. MINnD stands for Modeling INteroperable INformation for sustainable INfrastructures in xD, a French research project dedicated to the deployment of BIM and digital engineering in the infrastructure sector. Whereas BIM started in the construction industry, there is a need for a similar digital modeling approach for civil infrastructure. In 2014 Christophe Castaing already reported on the activities of the MINnD project – see The weekend after PDT 2014. Now Pierre updated us on the activities for MINnD Season 2 – see below:

As you can see, there is again the interest in digital twins for operations and maintenance. Perhaps here, the civil infrastructure industry will be faster than traditional industries because of its enormous value. BIM and GIS reconciliation is a specific topic, as many civil infrastructures have a GIS aspect – road/train infrastructure, for example. The third bullet is evident to me. With digitization and the integration of contractors and suppliers, BIM and PLM will become more and more conceptually alike. The big difference at this moment: BIM has one standard framework, whereas PLM standards are still not in a consolidation stage.

Digital Transformation for PLM is not an evolution

If you have been following my blog in the past two years, you may have noticed that I am exploring ways to solve the transition from traditional, coordinated PLM processes towards future, connected PLM. In this session, I shared with the audience that digital transformation is disruptive for PLM and requires thinking in two modes.

Thinking in two modes is not what people like; however, organizations can run in two modes. Also, I shared some examples from digital transformation stories that illustrate there was no transformation, either failure or smoke and mirrors. You can download my presentation via SlideShare here.

Fireplace discussion: Bringing all the Trends Together, What’s next

We closed the day and the conference with a fireplace chat moderated by Dr. Ken Versprille from CIMdata, where we discussed, among other things, the increasing complexity of products and products as a service. We have seen during the sessions from BAE Systems Maritime and Bouygues Construction Group that we can do complex projects; however, when there is competition and time-to-deliver pressure, we do not manage the project so much as try to contain the potential risk. It was an interactive fireplace chat, giving us enough thoughts for next year.

Conclusion

Nothing to add to Håkan Kårdén’s closing tweet – I hope to see you next year.

This is my concluding post related to the various aspects of the model-driven enterprise. We went through Model-Based Systems Engineering (MBSE), where the focus was on using models (functional / logical / physical / simulations) to define complex products (systems). Next we discussed Model-Based Definition / Model-Based Enterprise (MBD/MBE), where the focus was on data continuity between engineering and manufacturing by using the 3D Model as a master for design, manufacturing and eventually service information.

And last time we looked at the Digital Twin from its operational side, where the Digital Twin was applied for collecting data from and tuning physical assets in operation, which is not a typical PLM domain in my opinion.

Now we will focus on two areas where the Digital Twin touches aspects of PLM – the most challenging and, I believe, the most over-hyped areas. These two areas are:

- The Digital Twin used to virtually define and optimize a new product/system or even a system of systems. For example, defining a new production line.

- The Digital Twin used to be the virtual replica of an asset in operation. For example, a turbine or engine.

Digital Twin to define a new Product/System

There might be some conceptual overlap if you compare the MBSE approach and the Digital Twin concept to define a new product or system to deliver. For me the differentiation would be that MBSE is used to master and define a complex system from the R&D point of view – unknown solution concepts – use hardware or software? Unknown constraints to be refined and optimized in an iterative manner.

In the Digital Twin concept, it is more about defining a system that should work in the field: how to combine various systems into a working solution, where each of the systems already has a pre-defined set of behavioral / operational parameters, which could be 3D related but also performance related.

You would define and analyze the new solution virtually to discover the ideal solution for performance, costs, feasibility and maintenance. Working in the context of a virtual model might take more time than traditional ways of working. However, once the models are in place, analyzing the solution and optimizing it takes hours instead of weeks, assuming the virtual model is based on a digital thread, not a sequential process of creating and passing documents/files. Virtual solutions allow a company to optimize the solution upfront instead of costly fixing during delivery, commissioning and maintenance.

Why aren’t we doing this already? It takes more skilled engineers instead of cheaper fixers downstream. The fact that we are used to fixing it later is also an inhibitor for change. Management needs to trust and understand the economic value instead of trying to reduce the number of engineers as they are expensive and hard to plan.

In the construction industry, companies are discovering the power of BIM (Building Information Model), introduced to enhance the efficiency and productivity of all stakeholders involved. Massive benefits can be achieved if the construction of the building and its future behavior and maintenance can be optimized virtually compared to fixing it in an expensive way in reality when issues pop up.

The same concept applies to process plants or manufacturing plants where you could virtually run the (manufacturing) process. If the design is done with all the behavior defined (hardware-in-the-loop and software-in-the-loop simulation), a solution can be virtually tested and rapidly delivered with no late discoveries and costly fixes.

Of course it requires new ways of working. Working with digitally connected models is not what engineers learn during their education; we have just started this journey. Therefore organizations should explore on a smaller scale how to create a full Digital Twin based on connected data, as this is the ultimate base for the next purpose.

Digital Twin to match a product/system in the field

When you explore the topic of a Digital Twin through the materials provided by the various software vendors, you see all kinds of previews of what is possible: Augmented Reality, Virtual Reality and more. All these presentations show that when you click somewhere in a 3D model space, relevant information pops up. Where does this relevant information come from?

Most of the time information is re-entered in a new environment, sometimes derived from CAD but all the metadata comes from people collecting and validating data. Not the type of work we promote for a modern digital enterprise. These inefficiencies are good for learning and demos but in a final stage a company cannot afford silos where data is collected and entered again disconnected from the source.

The main problem: legacy PLM information is stored in documents (drawings / Excel files) and is not intended to be shared downstream with full quality.

Read also: Why PLM is the forgotten domain in digital transformation.

If a company has already implemented an end-to-end Digital Twin to deliver the solution as described in the previous section, we can understand the data has been entered somewhere during the design and delivery process and thanks to a digital continuity it is there.

How many companies have done this already? For sure not the companies that have been in business for a long time, as their current silos and legacy processes do not cater for digital continuity. By appointing a Chief Digital Officer, the journey might start. The biggest risk is that the Chief Digital Officer will be running another silo in the organization.

So where does PLM support the concept of the Digital Twin operating in the field?

For me, the IoT part of the Digital Twin is not the core of a PLM. Defining the right sensors, controls and software are the first areas where IoT is used to define the measurable/controllable behavior of a Digital Twin. This topic has been discussed in the previous section.

The second part where PLM gets involved is twofold:

- Processing data from an individual twin

- Processing data from a collection of similar twins

Processing data from an individual twin

Data collected from an individual twin or collection of twins can be analyzed to extract or discover failure opportunities. An R&D organization is interested in learning what is happening in the field with their products. These analyses lead to better and more competitive solutions.

Predictive maintenance is not necessarily a part of that. When you know that certain parts will fail between 10,000 and 20,000 operating hours, you want to optimize the moment of providing service to reduce downtime of the process, and you do not want to replace parts way too early.

The R&D part related to predictive maintenance could be that R&D develops sensors inside this serviceable part that signal the need for maintenance in a much smaller time frame: maintenance needed within 100 hours instead of a bandwidth of 10,000 hours. Or R&D could develop new parts that need less service and guarantee a longer up-time.
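To make the difference between the two situations concrete, here is a minimal Python sketch. The 10,000 to 20,000 hour bandwidth and the 100-hour sensor warning come from the text above; the `ServiceWindow` class, the helper function and the assumed real failure moment of 17,500 hours are hypothetical and only serve as illustration.

```python
from dataclasses import dataclass


@dataclass
class ServiceWindow:
    """Operating-hour interval in which a part is expected to need service."""
    earliest_hours: float
    latest_hours: float

    @property
    def uncertainty_hours(self) -> float:
        # Width of the window: how imprecisely we know the service moment.
        return self.latest_hours - self.earliest_hours


def unused_life_if_replaced_early(window: ServiceWindow, actual_failure_hours: float) -> float:
    """Hours of part life given up when replacing at the start of the window."""
    if not window.earliest_hours <= actual_failure_hours <= window.latest_hours:
        raise ValueError("actual failure lies outside the service window")
    return actual_failure_hours - window.earliest_hours


# Statistical knowledge only: failure somewhere between 10,000 and 20,000 hours.
statistical = ServiceWindow(10_000, 20_000)

# Assumption for illustration: this part would really fail at 17,500 hours and
# an embedded sensor raises a warning 100 hours before that moment.
actual_failure = 17_500
sensor_based = ServiceWindow(actual_failure - 100, actual_failure)

print(statistical.uncertainty_hours)   # 10000
print(sensor_based.uncertainty_hours)  # 100
print(unused_life_if_replaced_early(statistical, actual_failure))   # 7500
print(unused_life_if_replaced_early(sensor_based, actual_failure))  # 100
```

In this made-up case, replacing the part at the safe start of the statistical window throws away 7,500 operating hours, while the sensor-based window costs at most 100.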

For an R&D department, the information from an individual Digital Twin might only be relevant if the Physical Twin is complex to repair and the downtime of each individual unit is too costly. Imagine a jet engine, a turbine in a power plant or similar. Here a Digital Twin will allow service and R&D to prepare maintenance and to simulate and optimize the actions for the physical world beforehand.

The five potential platforms of a digital enterprise

The second part R&D will be interested in is the behavior of similar products/systems in the field, combined with their environmental conditions. In this way, R&D can discover improvement points for the whole range and deliver incremental innovation. The challenge for this R&D organization is to find a logical placeholder in their PLM environment to collect commonalities related to the individual modules or components. This is not an ERP or MES domain.
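As a rough sketch of what collecting commonalities could look like, the Python below groups field observations from several twins by their position in a logical product structure and computes a failure rate per logical item, optionally filtered by an environmental condition. All names (`FieldEvent`, `logical_item`, the twin identifiers) and all data values are hypothetical; a real PLM environment would have its own data model.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldEvent:
    twin_id: str           # one physical product/system in the field
    logical_item: str      # placeholder in the logical product structure
    ambient_temp_c: float  # environmental condition when observed
    failed: bool


def failure_rate_by_item(events):
    """Return {logical_item: failures / observations} across all twins."""
    seen = defaultdict(int)
    failures = defaultdict(int)
    for event in events:
        seen[event.logical_item] += 1
        if event.failed:
            failures[event.logical_item] += 1
    return {item: failures[item] / seen[item] for item in seen}


# Made-up observations from three similar twins.
events = [
    FieldEvent("twin-001", "pump-module", 35.0, True),
    FieldEvent("twin-002", "pump-module", 12.0, False),
    FieldEvent("twin-003", "pump-module", 38.0, True),
    FieldEvent("twin-001", "control-unit", 35.0, False),
    FieldEvent("twin-002", "control-unit", 12.0, False),
]

rates = failure_rate_by_item(events)

# Same analysis restricted to hot environments (above 30 degrees Celsius).
hot_rates = failure_rate_by_item([e for e in events if e.ambient_temp_c > 30])

print(rates)
print(hot_rates)
```

The point of the sketch is the grouping key: the analysis is attached to the logical item, not to an individual serial number or a BOM line, which is why it needs a placeholder in the PLM environment rather than in ERP or MES.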

Concepts of a logical product structure are already known in the oil & gas, process and nuclear industries. In 2017 I wrote about PLM for Owners/Operators, mentioning that Bjorn Fidjeland has always been active in this domain. You can find his concepts at plmPartner here or as an eLearning course at SharePLM.

To conclude:

- This post is way too long (sorry)

- PLM is not dead – it evolves into one of the crucial platforms for the future – The Product Innovation Platform

- Current BOM-centric approach within PLM is blocking progress to a full digital thread

More to come after the holidays (a European habit) with additional topics related to the digital enterprise

The past year I have written about PLM in the context of digital transformation, relevant for companies that deliver products to the market. Some years ago, I advocated the value of a PLM infrastructure for EPC companies and Owners/Operators of a plant.

EPC stands for Engineering, Procurement, and Construction, a typical name for the often large, capital-intensive projects executed by a consortium of companies. Together they create buildings, platforms, plants, infrastructure and other one-off deliveries, which will be under control of the Owner/Operator after going live.

Some references:

2014 EPC related: The year the construction industry did not discover PLM

2013 Owner/Operators related: PLM for all industries?

As you can see from the dates, these are not my most recent posts. Meanwhile, EPC-based businesses are discovering the value of a PLM infrastructure. The main component for them is BIM (Building Information Model or Building Information Management), and they use cloud-based collaboration environments to be more cost-efficient. Slowly these companies are moving to a single repository of data supporting multidisciplinary collaboration around a BIM model, to guarantee continuity of data and better execution. I am positive about EPC companies that are discovering the value of PLM. It might be slightly different from classical product-selling companies, mainly because data ownership is different. In an EPC environment many companies are responsible for parts of the data, and each of them keeps the real knowledge as IP (Intellectual Property) for itself. They only “publish” deliverables. For companies that deliver products to the market, the OEM keeps responsibility for all relevant product information and has a different strategy.

I worked in the past with one of my peers, Bjorn Fidjeland (www.plmpartner.com), on PLM for EPCs and Owner/Operators. We share the same passion to bring PLM outside traditional industries. As Bjorn is now more active than I am in this domain, I recommend reading Bjorn's posts on this topic. For example:

EPC related 2016: Handover to logistics and supply chain in capital projects

Owner/Operators 2015: Plant Information Management – Information Structures

Bjorn provides a lot of details, which are important, as implementing PLM for EPCs or Owner/Operators requires different data structures. I wrote about these concepts in 2014 in two posts: PLM and/or SLM? post 1 and post 2. At that time I did not realize the virtual twin was becoming popular.

PLM complementary to EAM

The last year I have explored these concepts together with (potential) Owner/Operators of a plant, where PLM would be complementary to their EAM system. In the world of Owner/Operators, Enterprise Asset Management (EAM) software is the major software these companies use. You find some of the major EAM players here.

You will discover that all these software suites are good for plant operations, but they all struggle to support data consistency and quality, in particular when dealing with plant changes and efficient, high-quality plant information management. Versioning and status management, typical PLM capabilities, are often not there.

Owner/Operators have challenges with EAM environments as:

- EAM systems are designed to support an as-operated environment, assuming all data is correct. Support for Maintenance, Repair or Overhaul projects is often rudimentary and depends on document-driven processes. The primary business process of these companies is continuous production of, for example, electricity or chemicals. Therefore typical engineering projects to change or enhance the main production process do not have the same financial focus.

- A document-driven approach is the de facto standard common for these industries. Most of the time because the plant has been established through an EPC approach, which was 100 % document-driven due to the different disconnected disciplines/tools working at that time in the EPC project. As the asset information is stored and delivered in documents, most owners/operators keep the document-driven approach for future change projects.

Owners/operators can benefit significantly from a data-driven PLM system as complementary infrastructure to their EAM system. The PLM system will be the source for accurate asset information, manage the change and approvals for the assets, and ultimately push the newly released information to the EAM system. The PLM system will offer the full history and traceability of decisions made, which is important for regulatory bodies or insurance companies.
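The division of roles described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `PlmRepository`, `EamSystem`, the two-state `Status` and the pump tag "P-101" are hypothetical names, not the API of any real PLM or EAM product.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    IN_WORK = "in work"
    RELEASED = "released"


@dataclass
class AssetRevision:
    tag: str
    revision: int
    data: dict
    status: Status = Status.IN_WORK
    approved_by: Optional[str] = None


class EamSystem:
    """Holds only the latest released (as-operated) data per asset tag."""

    def __init__(self):
        self.as_operated = {}

    def receive(self, rev: AssetRevision) -> None:
        if rev.status is not Status.RELEASED:
            raise ValueError("only released revisions may reach the EAM system")
        self.as_operated[rev.tag] = dict(rev.data)


class PlmRepository:
    """Keeps every revision of an asset, with status and approver."""

    def __init__(self):
        self._history = {}

    def revise(self, tag: str, data: dict) -> AssetRevision:
        revisions = self._history.setdefault(tag, [])
        rev = AssetRevision(tag, len(revisions) + 1, dict(data))
        revisions.append(rev)
        return rev

    def release(self, rev: AssetRevision, approver: str, eam: EamSystem) -> None:
        # Approval changes the status, then the released data is pushed to EAM.
        rev.status = Status.RELEASED
        rev.approved_by = approver
        eam.receive(rev)

    def history(self, tag: str) -> list:
        return list(self._history.get(tag, []))


plm, eam = PlmRepository(), EamSystem()
r1 = plm.revise("P-101", {"type": "pump", "rated_flow_m3h": 120})
plm.release(r1, "lead engineer", eam)

# A change is being prepared in PLM; operations still see revision 1.
r2 = plm.revise("P-101", {"type": "pump", "rated_flow_m3h": 150})

print(eam.as_operated["P-101"]["rated_flow_m3h"])  # 120
print(len(plm.history("P-101")))                   # 2
```

The EAM side never sees in-work data, while the PLM side keeps both revisions with their status and approver, which is the traceability the paragraph above refers to.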

A data-driven approach for asset information allows owners/operators to benefit from efficient processes, strongly reducing the number of people required to process data (documents) and the time people working in maintenance and operations spend searching for data. I found a nice slide from IBM explaining the concept of PLM and EAM collaboration (see below):

The same benefits that modern digital enterprises gain from a data-driven approach will become available to owners/operators. Operational management is supported by the EAM system, combined with the real-time capabilities of a modern PLM system to analyze, design and deliver changes to the plant without a costly data conversion process (e.g. compiling new documents) and disconnected processes.

Moving to a virtual twin

Interestingly enough, the digital transformation is bringing the concepts of connecting engineering, manufacturing and operations together into an infrastructure of interacting digital platforms. Where owners/operators historically did not focus on optimizing the engineering process to build and maintain their assets, companies in the “classical” industries were not really focusing on how products behaved in the field after they were delivered. With digital continuity (the digital thread) and IoT, these “classical” companies can now connect to their products in the field. Their products become assets of information, and in case these companies change their business offering into leasing products and services, these assets become managed assets, like the assets owners/operators are managing.

The concept of a virtual twin (or digital twin; image proprietary of GE), where a virtual model-based environment is linked to one or more real instances in operations, is the dream of all industries. Preparing, simulating and verifying changes in a virtual world is so much more efficient and cheaper that it allows for higher product quality and, in the case of plant operators, higher safety, which will be the number one topic.

Conclusion

What I have learned so far from plant owners/operators is that they are struggling to grasp a modern digital enterprise concept as their current environment is not model-based but document-driven. Starting with PLM to complement their EAM system could be a first step to understand the value and business benefits of digital continuity. It requires a new way of thinking which is not a commodity at this time. It will happen in the next 5 to 10 years. Expect it to be driven by the realization of virtual twins in the industry and further BIM maturity. The future is model-based !!!

p.s. I am happy to announce WordPress provided a new feature to my blog. In the side panel you can now choose your language (based on Google Translate) if you have difficulties with English. Enjoy !

As a genuine Dutchman, I was able to spend time last month in the Netherlands, and I attended two interesting events. The first was BIMOpen2015, where I was invited to speak about what BIM could learn from PLM (see Dutch review here). The second was Where engineering meets supply chain, organized by two startup companies located in Yes!Delft, an incubator working closely with the technical university of Delft (Dutch announcement here).

Two different worlds and, as I realized later, they potentially have the same future. So let’s see what happened.

BIMopen 2015

BIMopen 2015 had the theme: From Design to Operations and the idea of the conference was to bring together construction companies (the builders) and the facility managers (the operators) and discuss the business value they see from BIM.

First I have to mention that BIM is a confusing TLA like PLM. So many interpretations of what BIM means. For me, when I talk about BIM I mean Building Information Management. In a narrower meaning, BIM is often considered as a Building Information Model – a model that contains all multidisciplinary information. The last definition does not deal with typical lifecycle operations, like change management, planning, and execution.

The BIMopen conference started with Ellen Joyce Dijkema from BDO consultants, who addressed the cost of failure and the concepts of lean thinking. The high cost of failure is known and accepted in the construction industry, where at the end of the year profitability can be 1 % of turnover (with a margin of +/- 3 %, so being profitable is hard).

Lean thinking requires a cultural change, which according to Ellen Joyce is an enormous challenge. According to a study done by Prof Dr. A. Cozijnsen, there is only a 19 % chance that a cultural change will be successful, compared to a 40 % chance of success for new technology and a 30 % chance for new work processes.

It is clear that changing culture is difficult, and in the construction industry it might be even harder. I had the feeling a large part of the audience did not grasp the opportunity or could not find a way to apply it to their own world.

My presentation about what BIM could learn from PLM was similar. Construction companies have to spend more time on upfront thinking instead of fixing it later (costly). In addition thinking about the whole lifecycle of a construction, also in operations can bring substantial revenue for the owner or operator of a construction. Where traditional manufacturing companies take the entire lifecycle into account, this is still not understood in the construction industry.

This point was illustrated by the fact that there was only one person in the audience whose primary interest was to learn what BIM could contribute to his job as facility manager, and halfway through the conference he still was not convinced BIM had any value for him.

A significant challenge for the construction industry is that there is no end-to-end ownership of data, therefore having a single company responsible for all the relevant and needed data does not exist. Ownership of data can result in legal responsibility at the end (if you know what to ask for) and in a risk shifting business like the construction industry companies try to avoid responsibility for anything that is not directly related to the primary activities.

Some larger companies during the conference like Ballast Nedam and HFB talked about the need to have a centralized database to collect all the data related to a construction (project). They were building these systems themselves, probably because they were not aware of PLM systems or did not see through the first complexity of a PLM system, therefore deciding a standard system will not be enough.

I believe this is short-term thinking as with a custom system you can get quick results and user acceptance (it works the way the user is asking for) however custom systems have always been a blockage for the future after 10-15 years as they are developed with a mindset from that time.

If you want to learn more about my thoughts, have a look at 2014 the year the construction industry did not discover PLM. I will write a new post at the end of the year with some positive trends. Construction companies are starting to realize the benefits of a centralized data-driven environment instead of shifting documents and risks.

The cloud might be an option they are looking for. Which brings me to the second event.

Engineering meets Supply Chain

This was more an interactive workshop / conference where two startups KE-Works and TradeCloud illustrated the individual value of their solution and how it could work in an integrated way. I had been in touch with KE-Works before because they are an example of the future trend, platform-thinking. Instead of having one (or two) large enterprise system(s), the future is about connecting data-centric services, where most of them can run in the cloud for scalability and performance.

KE-Works provides a real-time workflow for engineering teams based on knowledge rules. Their solution runs in the cloud but connects to systems used by their customers. One of their clients, Fokker Elmo, explained how they want to speed up their delivery process by investing in a knowledge library using KE-Works knowledge rules (an approach the construction industry could apply too).

In general, if you look at what KE-Works does, it is complementary to what PLM systems or platforms do. They add the rules for the flow of data, where PLM systems are more static and depend on predefined processes.

TradeCloud provides a real-time platform for the supply chain connecting purchasing and vendors through a data-driven approach instead of exchanging files and emails. TradeCloud again is another example of a collection of dedicated services, targeting, in this case, the bottom of the market. TradeCloud connects to the purchaser’s ERP and can also connect to the vendor’s system through web services.

The CADAC group, a large Dutch Autodesk solution provider, also showed their web-services-based solution connecting Autodesk Vault with TradeCloud to make sure the right drawings are available. The name of their solution, the “Cadac Organice Vault TradeCloud Adapter”, is more complicated than the solution itself.

What I saw that afternoon was three solution providers connected through the cloud and web services to support a part of a company’s business flow. I could imagine that by adding services from other companies like OnShape (CAD in the cloud), Kimonex (BOM Management for product design in the cloud) and probably 20 more candidates, you can already build and deliver a simplified business flow in an organization without having a single, large enterprise system in place that connects everything.

The Future

I believe this is the future and potentially a breakthrough for the construction industry. As the connections between the stakeholders can vary per project, having a configurable combination of business services supported by a cloud infrastructure enables an efficient flow of data.

As a PLM expert, you might think all these startups with their solutions are not good enough for the real world of PLM. And currently they are not – I agree. However disruption always comes unnoticed. I wrote about it in 2012 (The Innovators Dilemma and PLM)

Conclusion

Innovation happens when you meet people, observe and associate in areas outside your day-to-day business. For me, these two events connected some of the dots for the future. What do you think? Will a business process based on connected services become the future?

Sometimes we have to study carefully to see patterns. Have a look here at what is possible according to some scientists (click on the picture for the article).

A year ago I wrote a blog post questioning if the construction industry would learn from PLM practices in relation to BIM.

In that post, I described several lessons learned from other industries. Topics like:

- Working on a single, shared repository of on-line data (the Digital Mock Up). Continuity of data based on a common data model – not only 3D

- It is a mindset. People need to learn to share instead of own data

- Early validation and verification based on a virtual model. Working in the full context

- Planning and anticipation for service and maintenance during the design phase. Design with the whole lifecycle in mind (and being able to verify the design)

The comments on that blog post already demonstrated that the worlds of PLM and BIM are not 100 percent comparable and that there are some serious inhibitors preventing them from coming closer. One year later, let's see where we are:

BIM moving into VDC (or BLM ?)

The first trend that becomes visible is that people in the construction industry start to use more and more the term Virtual Design and Construction (VDC) instead of BIM (Building Information Model or Building Information Management?).

The good news here is that there is less ambiguity with the term VDC instead of BIM. Does this mean many BIM managers will change their job title? Probably not as most construction companies are still in the learning phase what a digital enterprise means for them.

Still, Virtual Design and Construction focuses a lot on the middle part of the full lifecycle of a construction. VDC does not necessarily connect the early concept phase and for sure almost neglects the operational phase. The last phase is often ignored as construction companies are not thinking (yet) about Repair & Maintenance contracts (the service economy).

And surprisingly, last week I saw a blog post from Dassault Systemes, where Dassault introduced the word BLM (Building Lifecycle Management). Related to this blog post also some LinkedIn discussions started. BLM, according to Dassault Systemes, is the combination of BIM and PLM – read this post here.

The challenge for construction companies, however, is to determine which related data sets they require and how to create this continuity of data. This brings us to one of the most important inhibitors.

Data Ownership

Where in other industries a clear product data owner exists, the ownership of data in EPC (Engineering, Procurement, Construction) companies, typical for the construction industry or oil & gas industry, is most of the time vague on purpose.

First of all, the owner of a construction often does not know which data could be relevant to maintain. And secondly, as soon as the owner asks for more detailed information, he will have to pay for that, raising the costs, which do not directly flow back as benefits, only later during the FM (Facility Management) / operational stage.

And let's imagine the owner could get all the data required. Then the owner is at risk, as having the information potentially makes you liable for mistakes and claims.

From discussions with construction owners I learned that their policy is not to aim for the full dataset related to a construction. It reduces the risk of being liable. Imagine Boeing and Airbus would follow this approach. This brings us to another important inhibitor.

A risk shifting business

The construction industry on its own is still a risk shifting business, where each party tries to pass the risk of cost of failure to another stakeholder in the pyramid. The most powerful owners / operators of the construction industry quickly push the risk down to their contractors and suppliers. And these companies then distribute the risk further down to their subcontractors.

If you do not accept the risk, you are no longer in the game. This is different from other industries and I have seen this approach in a few situations.

For example, I was dealing with an EPC company that wanted to implement PLM. The company expected that the PLM implementer would take a large part of the risk for the implementation, as they themselves always took the risk for their big customers when applying for a project. Here there was a clash of cultures, as PLM implementers have learned that the risk of a PLM implementation is vague because many soft values define its success. It is not a machine or platform that has to work after some time.

Another example was related to requirements management. Here the EPC company wanted to be clear and specific towards their customer. However, their customer reacted very strangely. Instead of being happy that the EPC company invested in more upfront thinking and analysis, the customer got annoyed as they were not used to being specific so early in the process. They told the EPC company, “if you have so many questions, probably you do not understand the business”.

So everyone in the EPC business is pushed to accept a higher risk and uncertainty than in other industries. However, the big reward is that you are allowed to have a cost of failure above 15 – 20 percent without feeling bad. With this percentage you would be out of business in other industries. And this brings us to another important inhibitor.

Accepted high cost of failure

As the industry accepts this high cost of failure, companies are not triggered to work differently or to redesign their processes in order to reduce the inefficiencies. The UK government mandates BIM Level 2 for their projects starting in 2016 and beyond, to reduce the costs caused by inefficiencies.

But will the UK government invest to facilitate and aim for data ownership? Probably not, as the aim of governments is not to be extremely economical. As I have learned, not being liable has a bigger value for governments than being more efficient. Being more efficient is the message to the outside world to keep the taxpayer satisfied.

It is hard to change this way of thinking. It requires a cultural change through the whole value chain. And cultural change is the “worst” thing that can happen to a company. The biggest inhibitor.

Cultural change

Cultural change is a point that touches all industries, and there is no difference between the construction industry and, for example, a classical discrete manufacturing company. Because of global competition and comparable products, other industries have already been forced to work differently in order to survive (and are still challenged).

The cultural change lies in people. We (the older generation) are educated and brought up in classical engineering models that reflect the post-Second World War best practices. Being important in a process is your job justification and job guarantee.

New paradigms, based on a digital world instead of a document-shifting world, need to be defined and matured and will make many classical data processing jobs redundant. Read this interesting article from the Economist: The Onrushing Wave

This is a challenge for every company. Ironically, the need to implement this cultural change is highest in the regions with the most legacy: Western Europe and the United States.

As these countries also have the highest labor cost, continuing to do the old stuff will reduce their competitiveness. The impact for construction companies is smaller, as the construction industry is still a local business; in the end, resources will not travel the globe to execute projects.

However, cheaper labor is becoming more and more available in every country. If companies want to utilize it, they need to change the process. They need to shift towards more thinking and knowledge in the early lifecycle to avoid the need for highly qualified people in the field to fix errors.

Sharing instead of owning

For me, the major purpose of PLM is to provide an infrastructure for people to share information in such a manner that others, not aware of the information, can still easily find and use it in the relevant context of their activities. The value: people will decide based on actual information and no longer reactively fix errors caused by a lack of understanding of the context.

The problem for the construction industry is that I have not seen any vendor focusing on sharing the big picture. Perhaps the BLM discussion will be a first step. For the major tool providers, like Autodesk and Bentley, their business focus is on the continuity of their tools, not on the continuity of data.

Last week I noticed a cloud-based Issue Management solution, delivered by Kubus. Issue Management is one of the typical and easy benefits a PLM infrastructure can deliver, in particular if issues can be linked to projects, construction parts, processes and customers. If this solution becomes successful, the extension might be to add more data elements to the cloud solution. The main question will remain: who owns the data? Have a look:

For continuity of data, you need standards and openness – IFC is one of the many standards needed in the full scope of collaboration. Other industries are further developed in their standards driven by end-user organizations instead of vendors. Companies should argue with their vendors that openness is a right, not a privilege.

Conclusion

A year ago, I was more optimistic about the construction industry adopting PLM practices. What I have learned this year, also based on feedback from others, is that we are not at the turning point yet. Change is difficult to achieve from one day to the next. Meanwhile, the parties in the construction industry value chain all have different objectives. Nobody will take the risk or can afford the risk.

I remain interested to see where the construction industry is heading.

What do you think: will 2015 be the year of a breakthrough?

This is for the moment the last post about the difference between files and a data-oriented approach. This time I will focus on the need for open exchange standards and the relation to proprietary systems. In my first post, I explained that a data-centric approach can bring many business benefits and pointed to background information for those who want to learn more in detail. In my second post, I gave the example of dealing with specifications.

It demonstrated that the real value of a data-centric approach comes at the moment the information changes over time. For a specification that is right the first time and never changes, there is less value to win with a data-centric approach. Moreover, aren’t we still dreaming that we do everything right the first time?

The specification example was based on dealing with text documents (sometimes called 1D information). The same benefits are valid for diagrams, schematics (2D information) and CAD models (3D information).

1D, 2D, 3D …

The challenge for a data-oriented approach is that information needs to be stored in data elements in a database, independent of an individual file format. For text, this might be easy to comprehend. Text elements are relatively simple to understand. Still, the OpenDocument standard for Office documents builds in the background on a lot of technical know-how and experience to make it widely acceptable. For 2D and 3D information this is less obvious, as this is the domain of the CAD vendors.

CAD vendors have various reasons not to store their information in a neutral format.

- First of all, and most important for their business, a neutral format would reduce the dependency on their products. Other vendors could work with these formats too, therefore reducing the potential market capture. You could say that, in a certain manner, the Autodesk 2D format DXF (and even DWG) has become a neutral format for 2D data, as many other vendors have applications that read and write information in the DXF data format. So far DXF is stored in a file, but you could also store DXF data inside a database and make it available as elements.

- This brings us to the second reason why using neutral data formats is not that evident for CAD vendors. It reduces their flexibility to change the format and optimize it for maximal performance. Commercially, the significant, immediate disadvantage of working in a neutral format is that it has not been designed for the particular needs of an individual application, and therefore any “intelligent” manipulations on the data are hard to achieve.
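To make the idea of storing 2D data as elements in a database a bit more tangible, here is a minimal sketch in Python using the built-in sqlite3 module. The entities, table layout and function names are my own simplified assumptions, only loosely modelled on DXF entity types; it is not a DXF parser and not how any CAD vendor stores data.

```python
import json
import sqlite3

# Hypothetical, simplified 2D entities, loosely modelled on DXF entity types.
ENTITIES = [
    {"handle": "A1", "type": "LINE", "layer": "WALLS",
     "data": {"start": [0, 0], "end": [5, 0]}},
    {"handle": "A2", "type": "LINE", "layer": "WALLS",
     "data": {"start": [5, 0], "end": [5, 3]}},
    {"handle": "A3", "type": "CIRCLE", "layer": "COLUMNS",
     "data": {"center": [2, 1], "radius": 0.3}},
]


def store_entities(conn, entities):
    """Store each entity as its own row instead of as part of one file."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS entity ("
        "handle TEXT PRIMARY KEY, type TEXT, layer TEXT, data TEXT)"
    )
    conn.executemany(
        "INSERT INTO entity VALUES (?, ?, ?, ?)",
        [(e["handle"], e["type"], e["layer"], json.dumps(e["data"]))
         for e in entities],
    )
    conn.commit()


def entities_on_layer(conn, layer):
    """Return (handle, type, data) for every entity on the given layer."""
    rows = conn.execute(
        "SELECT handle, type, data FROM entity WHERE layer = ? ORDER BY handle",
        (layer,),
    )
    return [(handle, kind, json.loads(data)) for handle, kind, data in rows]


conn = sqlite3.connect(":memory:")
store_entities(conn, ENTITIES)
walls = entities_on_layer(conn, "WALLS")
print(walls)
```

Once the elements live in a database, an individual wall line can be queried, linked or changed without opening and rewriting a complete drawing file.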

The same reasoning can be applied to 3D data, where different neutral formats exist (IGES, STEP, …). It is very difficult to identify a common 3D standard without losing many of the benefits that an individual 3D CAD format currently brings. For example, CATIA handles 3D CAD data in a completely different way than Creo does, and differently again from NX, SolidWorks, Solid Edge and Inventor, even though some of them might use the same CAD kernel.

However, it is not only about the geometry anymore; the shapes represent virtual objects that have metadata describing the objects. In addition, other related information exists, not necessarily coming from the design world, like tasks (planning), parts (physical), suppliers, resources and more.

PLM, ERP, systems and single source of truth

This brings us into the world of data management, in my world mainly PLM systems and ERP systems. An ERP system is already a data-centric application: the BOM is already available as metadata, as are all the scheduling and the interaction with resources, suppliers and financial transactions. Still, ERP systems store a lot of related documents and drawings, containing content that does not match their data model.

PLM systems have gradually become more and more data-centric, as their origin was around engineering data, mostly stored in files. In a data-centric approach, there is the challenge of exchanging data between a PLM system and an ERP system. Usually there is a need to share information between the two systems, mainly the items. Different definitions of an item on the PLM and ERP side make it hard to exchange information from one system to the other. That is the reason why there are many discussions around PLM and ERP integration and the BOM.

In the modern data-centric approach, however, we should think less and less in systems and more and more in business processes performed on actual data elements. This requires a company-wide, actually an enterprise-wide or industry-wide, data definition of all information that is relevant for the business processes. This leads to Master Data Management, the new required skill for enterprise solution architects.
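A small sketch in Python can illustrate why different item definitions make the exchange hard. Everything here is hypothetical: the attribute names, the rule that only released items are transferred, and the way the revision ends up in the material number are assumptions for illustration, not the data model of any specific PLM or ERP system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlmItem:
    """Engineering view of an item (hypothetical attributes)."""
    number: str
    revision: str
    description: str
    state: str  # for example "In Work" or "Released"


@dataclass(frozen=True)
class ErpItem:
    """Logistics view of the same item (hypothetical attributes)."""
    material_number: str
    description: str
    unit_of_measure: str
    procurement_type: str  # for example "make" or "buy"


def to_erp_item(item, unit_of_measure, procurement_type):
    """Map a PLM item to an ERP item.

    Assumptions: only released items are transferred, and the ERP side
    has no revision concept, so the revision is folded into the number.
    The logistics attributes are not known in PLM and must be supplied.
    """
    if item.state != "Released":
        raise ValueError(f"Item {item.number} is not released")
    return ErpItem(
        material_number=f"{item.number}-{item.revision}",
        description=item.description,
        unit_of_measure=unit_of_measure,
        procurement_type=procurement_type,
    )


housing = PlmItem("1000", "B", "Pump housing", "Released")
print(to_erp_item(housing, unit_of_measure="EA", procurement_type="make"))
```

Even in this toy example the mapping needs decisions that neither system can make alone: who supplies the logistics attributes, and what happens to the revision.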

The data-centric approach creates the impression that you can achieve a single source of the truth, as all objects are stored uniquely in a database. SAP solves the problem by stating that everything fits in their single database. In my opinion this is more of a black hole approach: everything gets inside, but even light cannot escape. Usability and reuse of information that was stored with the intention not to be found is the big challenge here.

Other PLM and ERP vendors have different approaches. Some choose a service bus architecture, where applications in the background link and synchronize common data elements from each application. There is some redundancy, but everything is connected. More and more PLM vendors focus on building a platform of connected data elements on top of which applications run, like the 3DExperience platform from Dassault Systèmes.
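The service bus idea can be sketched in a few lines of Python. This is an in-process toy under my own assumptions: the class names, the "item.changed" topic and the message layout are hypothetical, and a real enterprise service bus adds transport, transformation and error handling.

```python
from collections import defaultdict


class ServiceBus:
    """Minimal publish/subscribe bus (illustrative only)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, message):
        # Deliver the message to every application that subscribed.
        for handler in self._subscribers[topic]:
            handler(message)


class Application:
    """An application that keeps its own copy of the shared items."""

    def __init__(self, name, bus):
        self.name = name
        self.items = {}
        bus.subscribe("item.changed", self.on_item_changed)

    def on_item_changed(self, message):
        # Store a private copy: redundant, but kept in sync by the bus.
        self.items[message["number"]] = dict(message)


bus = ServiceBus()
plm = Application("PLM", bus)
erp = Application("ERP", bus)
bus.publish("item.changed", {"number": "1000", "description": "Pump housing"})
print(plm.items == erp.items)
```

Each application holds its own copy of the item, which is the redundancy mentioned above, while the bus keeps the copies connected.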

As users we are more and more used to platforms, as Google and Apple already provide them in the cloud for common use on our smartphones. The large number of apps run on shared data elements (contacts, locations …) and store additional proprietary data.

Platforms, Networks and standards