Our recent interviews this year with aPriori and SAP were with companies that had less of a focus on the traditional product design process and more of a focus on the (circular) manufacturing process. In these interviews, we discussed the importance of working with connected data in a shared (digital) thread.

This time, we, Mark Reisig and Jos Voskuil, were excited to talk with Siemens, not only a well-known PLM vendor but also a manufacturer of products and, therefore, having a close understanding of what is needed and can be achieved with their software solutions.

Siemens

As Siemens is such a broad enterprise, we were happy to speak with Ryan R. Rochelle, who focuses on Sustainable Production, Sustainable Manufacturing and Sustainable Industry within Siemens. In the interview, we discussed the importance of digital twins and the feedback loops between design and manufacturing. Despite some flaws in the network connection, we are happy to share an informative interview.

Enjoy listening and watching the next 33 minutes, talking with Ryan Rochelle.

You can download the images shown during the interview HERE

What I have learned

- Like all PLM vendors in this domain, Siemens talks about the importance of a circular economy and the need for digital threads and digital twins, confirming the need for all of us to invest in the digitization of the product lifecycle.

- Siemens is in a unique position as both the industrial user and software provider of its PLM suite, therefore having a unique feedback loop on the usability and applicability of its software in its industry.

- In the area of sustainability, they learn from both customers and internal customers. They are customer zero. Here, they observe a shift of engineering activities “to the left” to optimize processes, supply chain and manufacturing earlier. (<<PGGA>>: which aligns with our aPriori and Makersite interviews).

- Siemens’ SiGreen solution is an example of this unique position, being able to track the carbon footprint of products across the supply chain.

Want to learn more

- There is the Siemens Sustainable industries website

- How the Digital Enterprise helps attain sustainability

- The Journey to a Sustainability Lighthouse awarded by the World Economic Forum

Conclusion

We have been discussing the relationship between PLM and sustainability with relevant software vendors for over two years now. As we saw initially in 2022, a few companies were exploring the possibilities.

Now, with further regulations and advanced software capabilities, companies are starting to implement new capabilities to make their product development process and products more sustainable. Siemens, as a software provider and an industrial user of its tools, is leading this journey—is it time for your company to step up, too?

Again, a “The weekend after …” post related to my favorite event to which I have contributed since 2014.

Expectations were high this time from my side, in particular because we would have a serious discussion related to connected digital threads and federated PLM.

More about these topics in my post next week as all content is not yet available for sharing.

The conference was sold out this time, and during the breaks, you had to navigate through the people to find your network opportunities. Also, the participation of the main PLM players as sponsors illustrated that everyone wanted to benefit from this opportunity to meet and learn from their industry peers.

Looking back to the conference, there were two noticeable streams.

- The stream where people share their current PLM experiences; traditionally, the A&D action groups moderated by CIMdata are part of this stream. This part I will cover in this post.

- There were forward-looking presentations related to standards, ontologies, and federated PLM—all with an AI flavor. This part I will cover in my next post(s).

The connection between all these sessions was the Digital Thread. The conference’s theme was: The Digital Thread in a Heterogeneous, Extended Enterprise Reality. Let’s start the review with the highlights from the first stream.

Digital Thread: Why Should We Care?

As usual, Peter Bilello from CIMdata kicked off the conference by setting the scene. Peter started by clarifying the two definitions of the Digital Thread.

- The first is a communication framework that allows a connected data flow and integrated view of an asset’s data (i.e., its Digital Twin) throughout its lifecycle across traditionally siloed functional perspectives. In my terminology, this is the connected digital thread.

- The second is a network of connected information sources around the product lifecycle supporting traceability and decision-making. In my terminology, this is the coordinated digital thread, the most straightforward digital thread to achieve.
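To make the idea of a connected data flow a bit more tangible, here is a minimal sketch in Python. It is only an illustration under my own assumptions: the class name `DigitalThread`, the relation names and the artifact identifiers (`REQ-001`, `EBOM-100`, and so on) are hypothetical and do not come from Peter's presentation or from any PLM product. It treats lifecycle artifacts as nodes and typed links as edges, so that a traceability question becomes a simple graph walk.

```python
from collections import defaultdict, deque


class DigitalThread:
    """Toy digital thread: artifacts are nodes, typed links are edges."""

    def __init__(self):
        # source artifact id -> list of (relation, target artifact id)
        self._links = defaultdict(list)

    def link(self, source, relation, target):
        """Record a typed, directed link between two artifacts."""
        self._links[source].append((relation, target))

    def trace(self, start):
        """Return every artifact reachable from `start`, breadth-first."""
        seen, order, queue = {start}, [], deque([start])
        while queue:
            node = queue.popleft()
            for _relation, target in self._links[node]:
                if target not in seen:
                    seen.add(target)
                    order.append(target)
                    queue.append(target)
        return order


# Hypothetical artifacts from requirement to the twin of a delivered product.
thread = DigitalThread()
thread.link("REQ-001", "satisfied_by", "EBOM-100")
thread.link("EBOM-100", "realized_by", "MBOM-100")
thread.link("MBOM-100", "produced_as", "SERIAL-0001")
thread.link("SERIAL-0001", "monitored_by", "TWIN-0001")

# Which downstream artifacts are touched if REQ-001 changes?
impacted = thread.trace("REQ-001")
print(impacted)
```

In a coordinated digital thread, the same links would typically live in documents or spreadsheets that people have to follow by hand; the point of the sketch is only that connected data lets you ask the question directly.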

Peter recommends starting a digital thread by connecting at the beginning of product conceptualization, creating an environment where one can analyze the performance of the product portfolio and the product features and capabilities that need to be planned or how they perform in the field.

In addition, when defining the products, connect them with regulatory requirement databases, as these contain must-have requirements. This is a topic I addressed in my session too: besides the existing regulatory requirements, it is expected that in the upcoming years, due to environmental regulations, these requirements will increase, and it will be necessary to have them integrated with your digital thread.

Digital Threads require data governance and are the basis for the various digital twins. Peter discussed the multiple applications of the digital twin, primarily a relation between a virtual asset and a physical asset, except in the early concept phase.

The digital thread is still in the early phase of implementation at companies. A CIMdata survey showed that companies still focus primarily on implementing traditional PDM capabilities, although as the image above shows, there is a growing interest in short-term digital twin/thread implementations.

People, Process & Technology:

The Pillars of Digital Transformation Success

The second keynote was from Christine McMonagle, Director of Digital Engineering Systems at Textron Systems, a services and products supplier for the Aerospace and Defense industry. Christine leads the digital evolution in Textron Systems and presented nicely how a digital transformation should start from the people. Traditionally, this industry has enough budget on the OEM level, and therefore companies will not take a revolutionary approach when it comes to digital transformation.

Having your people at all levels involved and making them understand the need for change is crucial. A change does not happen top-down. You must educate people and understand what is possible and achievable to change – in the right direction. One of her concluding slides highlights the main points.

In the Q&A after Christine’s session, there was an interesting question related to the involvement of Human Resources (HR) in this project. There was a laugh that said it all – like in most companies, HR is not focusing on organizational change; they focus more on operational issues – the Human is considered a Resource.

Between the regular sessions, there were short sessions in which the sponsors Altium, Contact Software, Dassault Systemes, ESI, inensia, Modular Management, PTC, SAP, Share PLM and Sinequa could pitch their value offerings.

The Share PLM session, shortly after Christine’s presentation, was a nice continuation of the focus on people. I loved the Share PLM image to the left explaining why people do not engage with our dreams.

Learn how LEONI is achieving Digital Continuity in the Automotive Industry.

Tobias Bauer, head of Product Data Standardization at LEONI, talked about their FLOW project. FLOW is an acronym for Future Leoni Operating World. LEONI, well-known in the automotive industry, produces cable and network solutions, including cable harnesses.

Recently it has gone through a serious financial crisis and the need for restructuring. This makes it always challenging for a “visionary” PLM project. Tobias mentioned that after disappointing engagements with consultancy firms, they decided on a bottom-up approach to analyze existing processes using BPML. They agreed on a to-be state, fixing bottlenecks and streamlining the flow of information.

Tobias presented a smooth product data flow between their PLM system (PTC Windchill) and ERP (SAP S/4 HANA), clearly stating that the PLM system has become the controlled source of managing product changes.

Their key achievements reported so far were:

- faster BOM creation and routing (approx. 10x faster – from 2-3 days to ¼ day),

- better data consistency (fewer manual steps)

- complete traceability between the systems with PLM as the change management backbone.

The last point I would call the coordinated Digital Thread. The image below shows their current IT landscape in a simplified manner.

This solution might seem obvious for neutral PLM academics or experts, but it is an achievement to do this in an environment with SAP implemented. The eBOM-mBOM discussion is one of the most frequently held discussions – sometimes a battle.

Often, companies use their IT systems first and listen to the vendor’s experts to build integrations instead of starting from the natural business flow of information.

Aerospace & Defense Action groups outcomes

As usual, several Aerospace & Defense (A&D) action groups reported their progress during this conference. The A&D action groups are facilitated by CIMdata, and per topic, various OEMs and suppliers in the A&D industry study and analyze a particular topic, often inviting software vendors to demonstrate and discuss their capabilities with them.

Their activities and reports can be found on the A&D PLM Action page here. In the remainder of this post, I will briefly share the ones presented. For a real deep dive into the topics, I recommend finding the proceedings per topic on the A&D action page.

The Promise and Reality of the Digital Thread

James Roche from CIMdata presented insights from industry research on The Promise and Reality of the Digital Thread. A total of 90 persons completed an in-depth survey about the status and implementation of digital thread concepts in their company. It is clear that the digital thread is still in its early days in this industry, and it is mainly about the coordinated digital thread. The image below reflects the highlights of the survey.

A&D Industry Digital Twin and Digital Thread Standards

Robert Rencher from Boeing explained the progress of their Digital Twin/Digital Thread project, where they had investigated the applicable standards to support a Digital Twin/Digital Thread (Phase 4 out of 7 currently planned). The image below shows that various standards may apply depending on business perspectives.

Their current findings are:

- Digital twin standards overlap, which is most likely a function of standards bodies representing their respective standards as an ongoing development from a historical perspective.

- The limited availability of mature digital twin/thread standards requires greater attention by standards organizations.

- The concept of the digital twin continues to evolve. This dynamic will be a challenge to standards bodies.

- The digital twin and the digital thread are distinct aspects of digital transformation. The corresponding digital twin and digital thread standards will be distinctly different.

- Coordinating the development of the respective standards between the digital twin/thread is needed.

- The digital twin’s organization, definition, and enablement depend on data and information provided by the digital thread.

Roadmap for Enabling Global Collaboration

Robert Gutwein (Pratt & Whitney Canada) and Agnes Gourillon-Jandot (Safran Aircraft Engines) reported their progress on the Global Collaboration project. Collaboration is challenging because exchange methods can vary, exchanged information needs to be validated, and the exchange of information must be governed in the context of IP protection.

One of the focal points was to introduce an approach to define standardized supplier agreements that anticipate modern model-based exchanges and collaboration methods.

Robert & Agnes presented the 8-step guideline for the aerospace industry in specific terms, explicitly mentioning the ISO44001 standard as being generic for all industries. An impression of the eight steps and sub-steps can be found below:

The 8-step approach will be supported by a 3rd-party Collaboration Management System (CMS app), which is not mandatory but recommended for use. When an interaction depends on a specific tool, it cannot become an ISO standard. The purpose of the methodology and app is to help participants ensure that the collaboration between stakeholders contains all the necessary steps and people.

Model-based OEM/Supplier Collaboration Needs in Aviation Industry

Hartmut Hintze, working at Airbus Operations, presented the latest findings of the MBSE Data Interoperability working group and presented the model-based OEM/Supplier collaboration requirements and standards that need to be supported by the PLM/MBSE solution providers in the future. This collaboration goes beyond sharing CAD models, as you can see from the supplier engagement framework below:

As there are no standards-based tools, their first focus was looking into methodologies for model and behavior exchanges based on use cases. The use cases are then used to verify the state-of-the-art abilities of the various tools. At this moment, there is a focus on SysML V2 as a potential game-changer due to its new API support. As a relative novice on SysML, I cannot explain this topic in simpler words. I recommend that experts visit their presentations on the AD PAG publications page here.

Conclusions

The theme of the conference was related to the Digital Thread – and as you will discover, it is valid for everyone. Learn to see the difference between the coordinated Digital Thread and the connected Digital Thread. This time, there was a lot of information about the Aerospace and Defense Action Groups (AD PAG), which are a fundamental part of this conference. The A&D industry has always been leading in advanced PLM concepts. However, more advanced concepts will come in my next post when touching the connected Digital Thread in the context of federated PLM, and let’s not forget AI.

In the last few weeks, I thought I had a writer’s block, as I usually write about PLM-related topics close to my engagements.

Where are the always popular discussions related to EBOM or MBOM? Where is the Form-Fit-Function discussion or the traditional “meaningful numbers” discussions?

These topics always create a lot of interaction and discussion, as many of us have mature opinions.

However, last month I spent most of the time discussing the connection between digital PLM strategies and sustainability. With the Russian invasion of Ukraine, leading to high energy prices, combined with several climate disasters this year, people are aware that 2022 is not a year as usual. A year full of events that force us to rethink our current ways of living.

The notion of urgency

Sustainability for the planet and its people has all the focus currently. COP27 gives you the impression that governments are really serious. Are they? Read this post from Kimberley R. Miner, Climate Scientist at NASA, Polar Explorer & Professor.

She doubts if we really grasp the urgency needed to address climate change. Or are we just playing to be on stage? I agree with her doubts.

So what to do with my favorite EBOM-MBOM discussions?

Last week I attended an event organized by Dassault Systèmes in the Netherlands for their Dutch/Belgian customers.

The title of the event was: Sustainable innovation for a digital future. I expected a techy event. Click on the image to see the details.

When I asked my grandson, who had just started his Aerospace Engineering studies in Delft (NL) and is learning to work with CAD and PLM tools, to join me, he replied:

“Too many software demos”

It turned out that my grandson was wrong. The keynote speech from Ruud Veltenaar made most of the audience feel uncomfortable. He really pointed to the fact that we are aware of climate change and our impact on the planet, but in a way, we are paralyzed. Nothing new, but confronting and unexpected when going to a customer event.

Ruud’s message: Accept that we are at the end of an existing world order, and we should prepare for a new world order with the right moral leadership. It starts within yourself. Reflect on who you really are, where you are in your life path, and finally, what you want.

It sounds simple, and I can see it helps to step aside and reflect on these points.

Otherwise, you might feel we are in a rat race as shown below (recommended to watch).

The keynote was the foundation for a day of group and panel discussions on sustainability, in which we learned from the customers about their sustainability plans and experiences.

It showed that Dassault Systèmes, with its 2012 purpose (click on the link to see its history), Harmonizing Products, Nature and Life, is ahead of the curve (at least they were for me).

The event was energizing, and my grandson was wrong:

“No software – next time?”

The impact of legacies – data, processes & people

For those who haven’t read my previous post, The week after PLM Roadmap / PDT Europe 2022, I wrote about the importance of Heterogeneous and federated PLM, one of the discussions related to data-driven PLM.

Looking back, I have been writing about data-driven PLM since 2014, and few companies have made progress here. Understandable, first of all, due to legacy data, which is not in the right format or quality to support data-driven processes.

However, also here, legacy processes and legacy people are blocking the change. There is no blame here; it is difficult to change. You might have a visionary management team, but then it comes down to the execution of the strategy. The organizational structure and the existing people skills are creating more resistance than progress.

For that reason, I wrote this post in 2015: PLM and Global Warming, where I compared the progress we made within our PLM community with the lack of progress we are making in solving global warming. We know the problem, but we are unable to act due to the lack of feeling the urgency.

This blog post triggered Rich McFall to start, together with me, the PLM Global Green Alliance in 2018.

In my PLM Roadmap / PDT Europe session Sustainability and Data-driven PLM – the perfect storm, I raised the awareness that we need to speed up. We have 10, perhaps 15 years to implement radical changes, according to scientists, before we reach irreversible tipping points.

Why PLM and Sustainability?

Sustainability starts with the business strategy. How does your company want to contribute to a more sustainable future? The strategy to follow with probably the most impact is the concept of a circular economy – image below and more info here.

The idea behind the circular economy is to minimize the need for new finite materials (the right side) and to use only renewables for energy delivery. Implementing these principles clearly requires a more holistic design of products and services. Each loop should be analyzed and considered when delivering solutions to the market.

Therefore, a logical outcome of the circular economy would be transforming from selling products to the market towards a product-as-a-service model. In this case, the product manufacturer becomes responsible for the full product lifecycle and its environmental impact.

And here comes the importance of PLM. You can measure and tune your environmental impact during production in your ERP or MES environment. However, 80 % of the environmental impact is defined during the design phase, the domain of PLM. All these analyses together are called Life Cycle Analysis or Life Cycle Assessment (LCA), a practice that starts at the moment you start to think about a product or solution – a specialized systems thinking approach.

So how to define and select the right options for future products?

Virtual products / Digital Twins

This is where sustainability is pushing for digitization of the product lifecycle. Building and analyzing products in the virtual world is much cheaper than working with physical prototypes.

A model-based approach is important here, as it allows companies to deal efficiently with trade-off studies for each solution.

In addition, the choice and the behavior of materials also have an impact. These material properties will come from various databases, some based on hazardous substances, others on environmental parameters. Connecting these databases to the virtual model is crucial to remain efficient.

Imagine you need to manually collect and process these properties whenever studying an alternative. The manual process will be too costly (fewer trade-offs and not finding the optimum) and too slow (time-to-market impact).
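As a rough illustration of why connected material data matters for trade-off studies, consider the small Python sketch below. Everything in it is an assumption for the sake of the example: the `MATERIALS` table stands in for the connected material databases, the emission factors and hazard flags are made-up numbers (not real LCA data), and the function names are hypothetical. It simply scores each design alternative and filters out alternatives that use a flagged substance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Material:
    name: str
    kg_co2e_per_kg: float  # illustrative factor, NOT real LCA data
    hazardous: bool        # stand-in for a hazardous-substance lookup


# Hypothetical stand-in for the connected material databases.
MATERIALS = {
    "steel": Material("steel", 2.0, False),
    "aluminium": Material("aluminium", 8.0, False),
    "coated_alloy": Material("coated_alloy", 1.5, True),
}


def footprint(material_key, mass_kg):
    """Material-related footprint of one alternative in kg CO2e."""
    return MATERIALS[material_key].kg_co2e_per_kg * mass_kg


def best_alternative(alternatives):
    """Pick the lowest-footprint alternative that uses no hazardous material.

    `alternatives` maps a design name to (material key, mass in kg).
    """
    allowed = {
        name: footprint(material, mass)
        for name, (material, mass) in alternatives.items()
        if not MATERIALS[material].hazardous
    }
    return min(allowed, key=allowed.get), allowed


alternatives = {
    "A": ("steel", 10.0),
    "B": ("aluminium", 4.0),
    "C": ("coated_alloy", 9.0),
}
winner, scores = best_alternative(alternatives)
print(winner, scores)
```

With the properties available as data, adding a fourth or a fortieth alternative costs one more dictionary entry instead of another round of manual lookups.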

That’s why I am greatly interested in all the developments related to a federated PLM infrastructure. A monolithic system cannot be the solution for such a model-based environment. In my terminology, here we need an architecture with systems of engagement combined with system(s) of record.

I will publish more on this topic in the future.

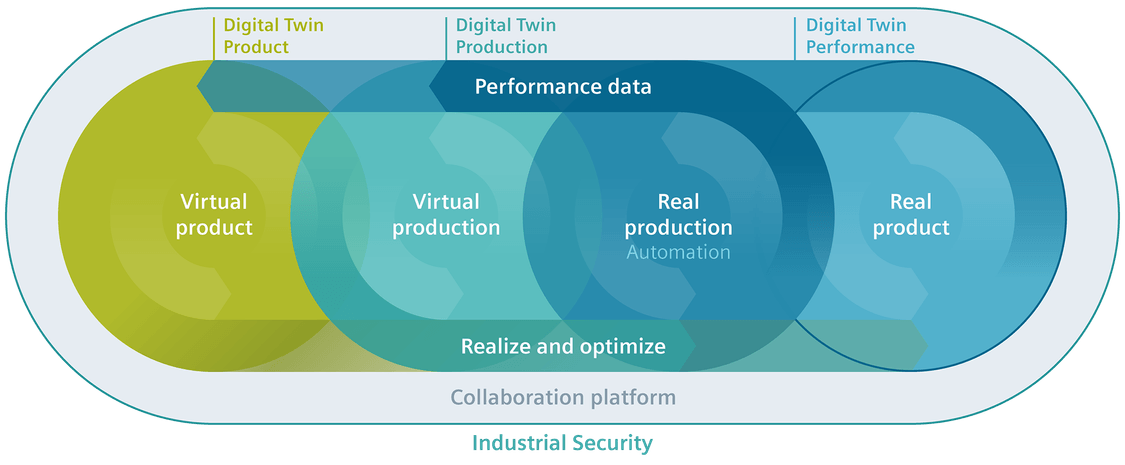

In the previous paragraphs, I wrote about the virtual product environment, which some companies call the virtual twin. However, besides the virtual twin, we also need several digital twins. These digital models allow us to monitor and optimize the production process, which can lead to design changes.

Also, monitoring the product in operation using a digital twin allows us to optimize the performance and execution of the solutions in the field.

The feedback from these digital twins will then help the company to improve the design and calibrate their simulation models. It should be a closed loop. You can find a more recent discussion related to the above image here.
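The closed loop can be sketched in a few lines of Python. This is a deliberately simplified example under my own assumptions: the simulation model is reduced to a single proportional relation (energy use = gain × load), the field measurements are invented numbers, and the function name `calibrate_gain` is hypothetical. The calibration itself is an ordinary least-squares fit of a line through the origin.

```python
def calibrate_gain(loads, measured):
    """Least-squares fit of `measured = gain * load` (line through the origin)."""
    numerator = sum(x * y for x, y in zip(loads, measured))
    denominator = sum(x * x for x in loads)
    return numerator / denominator


# Assumed design-time simulation parameter.
design_gain = 2.0

# Invented field data reported by the digital twin of a product in operation.
loads = [1.0, 2.0, 3.0]
measured_energy = [2.2, 4.4, 6.6]

# Feed the field data back into the simulation model.
calibrated_gain = calibrate_gain(loads, measured_energy)
drift = calibrated_gain - design_gain
print(f"calibrated gain: {calibrated_gain:.2f}, drift vs. design: {drift:+.2f}")
```

A real calibration would involve far richer models and data, but the loop is the same: measure in the field, compare with the prediction, and update the model that the next design decision relies on.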

Our mission

At this moment, sustainability is at the top of my personal agenda, and I hope for many of you. However, besides the choices we can make in our personal lives, there is also an area where we, as PLM interested parties, should contribute: The digitization of the product lifecycle as an enabler for a sustainable business.

Without mature concepts for a connected enterprise, implementing sustainable products and business processes will be a wish, not a strategy. So add digitization to your skillset and use it in the context of sustainability.

Conclusion

It might look like this PLM blog has become an environmental blog. This might be right, as the environmental impact of products and solutions is directly related to product lifecycle management. However, do not worry. In the upcoming time, I will focus on the aspects and experiences of a connected enterprise. I will leave the easier discussions (EBOM/MBOM/FFF/Smart Numbers) from a coordinated enterprise as they are. There is work to do shortly. Your thoughts?

Last week I wrote about the recent PLM Road Map & PDT Spring 2021 conference day 1, focusing mainly on technology. There were also interesting sessions related to exploring future methodologies for a digital enterprise. Now on Day 2, we started with two sessions related to people and methodology, indispensable when discussing PLM topics.

Designing and Keeping Great Teams

This keynote speech from Noshir Contractor, Professor of Behavioral Sciences in the McCormick School of Engineering & Applied Science, intrigued me as the subtitle states: Lessons from Preparing for Mars. What Can PLM Professionals Learn from This?

You might ask yourself, is a PLM implementation as difficult and as complex as a mission to Mars? I hoped, so I followed with great interest Noshir’s presentation.

Noshir started by mentioning that many disruptive technologies have emerged in recent years, like Teams, Slack, Yammer and many more.

The interesting question he asked in the context of PLM is:

As the domain of PLM is all about trying to optimize effective collaboration, this is a fair question.

Noshir shared with us that the most crucial point is not people’s individual skills but who they know.

Measuring who they work with is more important than who they are.

Based on this statement, Noshir showed some network patterns of different types of networks.

It is clear from these patterns how organizations communicate internally and/or externally. It would be an interesting exercise to perform in a company and to see if the analysis matches the perceived reality.
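For those who want to try such an exercise, a first rough cut does not need special tooling. The Python sketch below is my own simplification and not Noshir's model: the names, teams and working relationships are invented, and `tie_profile` is a hypothetical helper. It only counts how many working relationships stay inside a team and how many cross team boundaries.

```python
from collections import Counter

# Invented example data: who belongs to which team, and who works with whom.
team_of = {
    "ann": "design",
    "bob": "design",
    "cy": "manufacturing",
    "di": "manufacturing",
}
ties = [("ann", "bob"), ("ann", "cy"), ("cy", "di"), ("bob", "cy")]


def tie_profile(team_of, ties):
    """Count working relationships per team, split into internal and external."""
    internal, external = Counter(), Counter()
    for a, b in ties:
        if team_of[a] == team_of[b]:
            internal[team_of[a]] += 1
        else:
            # A cross-team tie counts once for each team involved.
            external[team_of[a]] += 1
            external[team_of[b]] += 1
    return internal, external


internal, external = tie_profile(team_of, ties)
for team in sorted(set(team_of.values())):
    print(team, "internal:", internal[team], "external:", external[team])
```

A team with many internal and few external ties looks like a silo; comparing such counts with how people perceive their own collaboration is exactly the kind of check suggested above.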

Noshir’s research was used by NASA to analyze and predict the right teams for a mission to Mars.

Noshir went further by proposing what PLM can learn from teams that are going into space. And here, I was not sure about the parallel. Is a PLM project comparable to a mission to Mars? I hope not! I have always advocated that a PLM implementation is a journey. Still, I never imagined that it could be a journey into the remote unknown.

Noshir explained that they had built tools based on their scientific model to describe and predict how teams could evolve over time. He believes that society can also benefit from these learnings. Many inventions from the past were driven by innovations coming from space programs.

I believe Noshir’s approach related to team analysis is much more critical for organizations with a mission. How do you build multidisciplinary teams?

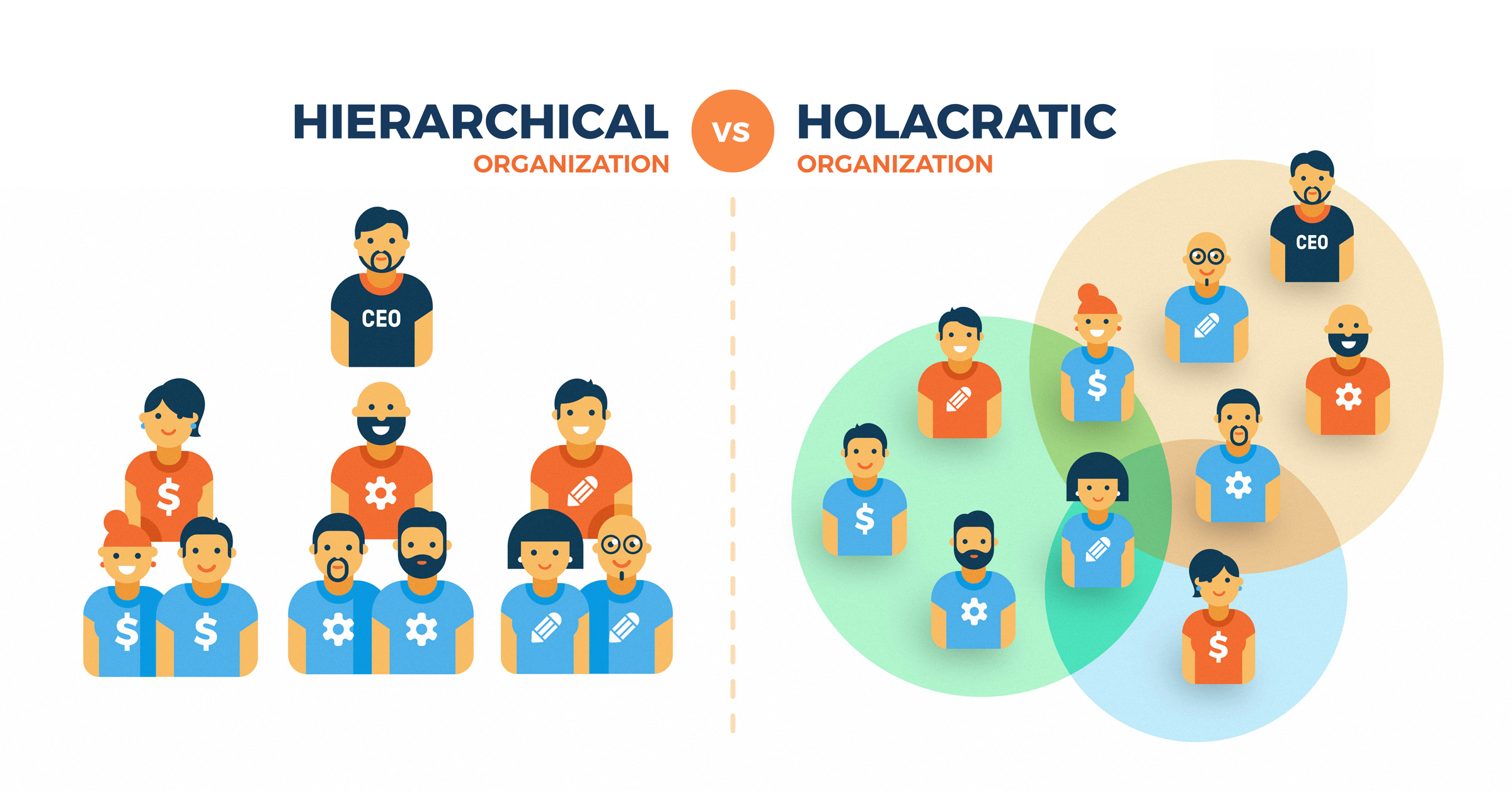

The proposed methodology is probably best for a holacracy-based organization. Holacracy is an interesting concept for companies to get their employees committed; however, it also demands a type of involvement that not every person can deliver. For me, coming back to PLM as a strategy to enable collaboration, the effectiveness of collaboration depends very much on the organizational culture and created structure.

DISRUPTION – EXTINCTION or still EVOLUTION?

We talk a lot about disruption because disruption is a painful process that you do not like to happen to yourself or your company. In the context of this conference’s theme, I discussed the awareness that disruptive technologies will be changing the PLM Value equation.

A disruption like the switch from mini-computers to PCs (which killed DEC) or from Symbian to iOS (which killed Nokia) is therefore not likely to happen that fast. Still, there is a need to benefit from these new disruptive technologies.

My presentation focused on describing the path of evolution and the focus areas for the PLM community. Doing nothing means extinction; experimenting and learning towards the future will provide an evolutionary way.

Starting from acknowledging that there is an incompatibility between the data mostly produced today and the data needed in the future, I explained my theme: From Coordinated to Connected. As a PLM community, we should spend more time together in focus groups and conferences, describing and verifying methodology and best practices.

Nigel Shaw (EuroStep) and Mark Williams (Boeing) hinted in this direction during this conference (see day 1). Erik Herzog (SAAB Aeronautics) brought this topic to last year’s conference (see day 3). Outside this conference, I have comparable touchpoints with Martijn Dullaert when discussing Configuration Management in the future in relation to PLM.

In addition, this decade will probably be the most disruptive decade humanity has known, due to external forces that push companies to change. Sustainability regulations from governments (the Paris agreement) and the implementation of circular economy concepts, combined with the positive and high Total Shareholder Return, will push companies to adapt more radically than before.

What is clear is that disruptive technologies and concepts, like Industry 4.0, Digital Thread and Digital Twin, can serve a purpose when implemented efficiently, ensuring the business becomes sustainable.

Due to the lack of end-to-end experience, we need focus groups and conferences to share progress and lessons learned. And we do not need to hear the isolated vendor success stories here as a reference, as they are often siloed again and lead to proprietary environments.

You can see my full presentation on SlideShare: DISRUPTION – EXTINCTION or still EVOLUTION?

Building a profitable Digital T(win) business

Beatrice Gasser, Technical, Innovation, and Sustainable Development Director from the Egis group, gave an exciting presentation related to the vision and implementation of digital twins in the construction industry.

The Egis group serves both as a consultancy firm and as an asset management organization. You can see a wide variety of activities on their website or have a look at their perspectives.

Historically, the construction industry has been lagging behind, with low productivity due to fragmentation, risk aversion and, more and more recently, the lack of digital talent. In addition, some construction companies make their money from claims instead of having a smooth and profitable business model.

Without innovation in the construction industry, companies working the traditional way would lose market share and investor-focused attention, as we can see from the BCG diagram I discussed in my session.

The digital twin of construction is an ideal concept for the future. It can be built in the design phase to align all stakeholders, validate and integrate solutions, and simulate the building’s operational scenarios at almost zero material cost. Egis estimates that by using a digital twin during construction, the engineering and construction costs of a building can be reduced by between 15 and 25%.

More importantly, the digital twin can also be used to first simulate operations and optimize energy consumption. The connected digital twin of an existing building can serve as a new common data environment for future building stakeholders. This could be the asset owner, service companies, and even the regulatory authorities needing to validate the building’s safety and environmental impact.

Beatrice ended with five principles essential to establish a digital twin, i.e

I think the construction industry has a vast potential to disrupt itself, faster than the traditional manufacturing industries, due to its current need to work in a best-connected manner.

Next, there is almost no legacy data to deal with for these companies. Every new construction or building is a unique project on its own. The key differentiators will be experience and efficient ways of working.

It is about the belief, the guts and the skilled people that can make it work – all for a more efficient and sustainable future.

Leveraging PLM and Cloud Technology for Market Success

Stan Przybylinski, Vice President of CIMdata, reported on their global survey related to the cloud, completed in early 2021. Stan also typified Industry 4.0 as a connected vision, with cloud and digital thread as enablers for implementing this vision.

The companies interviewed showed a lot of goodwill to make progress – click on the image to see the details. CIMdata is also working with PLM vendors to better learn and describe the areas of benefit. I remain curious about who will come up with a realization and business case that is future-proof. This will define our new PLM Value Equation.

Conclusion

These were two exciting days with plenty of mentions of disruptive technologies. Our challenge in the PLM domain will be to give them a purpose. A purpose is likely driven by external factors related to the need for a sustainable future. Efficiency and effectiveness must come from learning to work in connected environments (digital twin, digital thread, Industry 4.0, Model-Based (Systems) Engineering).

Note: You might have seen the image below already – a nice link between sustainability and the mission to Mars

Last week I shared my first review of the PLM Roadmap / PDT Fall 2020 conference, organized by CIMdata and Eurostep. Having digested now most of the content in detail, I can state this was the best conference of 2020. In my first post, the topics I shared were mainly the consultant’s view of digital thread and digital twin concepts.

This time, I want to focus on the content presented by the various Aerospace & Defense working groups who shared their findings, lessons-learned (so far) on topics like the Multi-view BOM, Supply Chain Collaboration, MBSE Data interoperability.

These sessions were nicely wrapped with presentations from Alberto Ferrari (Raytheon), discussing the digital thread between PLM and Simulation Lifecycle Management and Jeff Plant (Boeing) sharing their Model-Based Engineering strategy.

I believe these insights are crucial, although there might be people in the field who will question whether this research is essential. Is there not an easier way to achieve the same results?

Nicely formulated by Ilan Madjar as a comment to my first post:

Ilan makes a good point about simplifying the ideas for the masses to make it work. The majority of companies probably do not have the bandwidth to invest in and understand the future benefits of a digital thread or digital twins.

This does not mean that these topics should not be studied. If your business is in a small, simple eco-system and wants to work in a connected mode, you can choose a vendor and a few custom interfaces.

However, suppose you work in a global industry with an extensive network of partners, suppliers, and customers.

In that case, you cannot rely on ad-hoc interfaces or a single vendor. You need to invest in standards; you need to study common best practices to drive methodology, standards, and vendors to align.

This process of standardization is crucial if you want to have a sustainable, connected enterprise. In the end, the push from these companies will lead to standards that smaller companies can adhere to or connect to.

The future is about Connected through Standards, as discussed in part 1 and further in this post. Let’s go!

Global Collaboration – Defining a baseline for data exchange processes and standards

Katheryn Bell (Pratt & Whitney Canada) presented the progress of the A&D Global Collaboration workgroup. As you can see from the project timeline, they have reached the phase to look towards the future.

Katheryn mentioned the need to standardize terminology as the first point of attention. I am fully aligned with that point; without a standardized terminology framework, people will misunderstand each other.

This happens even more in smaller businesses, which sometimes just pick (buzz) terms without a full understanding.

Several years ago, I talked with a PLM implementer who told me that their implementation focus was on systems engineering. After some more explanation, it appeared that, in reality, they were attempting configuration management. Here the confusion was massive. A standard, common terminology is crucial in our domain, even if it seems academic.

The group has been analyzing interoperability standards and standards for long-term archiving and retrieval (LOTAR), but has also been studying the ISO 44001 standard related to collaborative business relationship management systems.

In the Q&A session, Katheryn explained that the biggest problem to solve with collaboration was the risk of working with the wrong version of data between disciplines and suppliers.

Of course, such errors can lead to huge costs if they are discovered late (or too late). As some of the big OEMs work with thousands of suppliers, you can imagine it is not an issue easily discovered in a more ad-hoc environment.

The move to a standardized Technical Data Package based on a Model-Based Definition is one of these initiatives in this domain to reduce these types of errors.

You can find the proceedings from the Global Collaboration working group here.

Connect, Trace, and Manage Lifecycle of Models, Simulation and Linked Data: Is That Easy?

I loved Alberto Ferrari‘s (Raytheon) presentation, in which he described the value of a model-based digital thread, positioning it in a targeted enterprise.

Click on the image and discover how business objectives, processes and models go together supported by a federated infrastructure.

Alberto’s presentation was a kind of mind map of how I imagine the future, and it is a pity if you have not had the chance to see his session.

Alberto also focused on the importance of various simulation capabilities combined with simulation lifecycle management. For Alberto, they are essential to implement digital twins. Besides focusing on standards, Alberto pleads for semantic integration and an open service architecture, stressing the importance of DevSecOps.

Enough food for thought; as Alberto mentioned, he presented the corporate vision, not the current state.

More A&D Action Groups

There were two more interesting specialized sessions where teams from the A&D action groups provided a status update.

Brandon Sapp (Boeing) and Ian Parent (Pratt & Whitney) shared the activities and progress on Minimum Model-Based Definition (MBD) for Type Design Certification.

As Brandon mentioned, MBD is already a widely used capability; however, MBD is still maturing and evolving. I believe that is also one of the reasons why MBD is not yet accepted in mainstream PLM. Smaller organizations will wait; however, can your company afford to wait?

More information about their progress can be found here.

Mark Williams (Boeing) reported from the A&D Model-Based Systems Engineering action group their first findings related to MBSE Data Interoperability, focusing on an Architecture Model Exchange Solution. A topic interesting to follow as the promise of MBSE is that it is about connected information shared in models. As Mark explained, data exchange standards for requirements and behavior models are mature, readily available in the tools, and easily adopted. Exchanging architecture models has proven to be very difficult. I will not dive into more details, respecting the audience of this blog.

For those interested in their progress, more information can be found here.

Model-Based Engineering @ Boeing

In this conference, the participation of Boeing was significant through the various action groups. As the cherry on the cake, there was Jeff Plant‘s session, giving an overview of what is happening at Boeing. Jeff is Boeing’s director of engineering practices, processes, and tools.

In his introduction, Jeff mentioned that Boeing has more than 160,000 employees in over 65 countries. They are working with more than 12,000 suppliers globally. These suppliers can be manufacturing, service or technology partnerships. Therefore, as you can imagine and as others discussed during the conference, streamlined collaboration and traceability are crucial.

The now-famous MBE Diamond symbol illustrates the model-based information flows in the virtual world and the physical world based on the systems engineering approach. Like Katheryn Bell did in her session related to Global Collaboration, Jeff started explaining the importance of a common language and taxonomy needed if you want to standardize processes.

Zoom in on the Boeing MBE Taxonomy, and you will discover the clarity it brings for the company.

I was not aware of the ISO 23247 standard concerning the Digital Twin framework for manufacturing, aiming to apply industry standards to the model-based definition of products and process planning. A standard certainly to follow as it brings standardization on top of existing standards.

As Jeff noted: A practical standard for implementation in a company of any size. In my opinion, mandatory for a sustainable, connected infrastructure.

Jeff presented the slide below, showing their standardization internally around federated platforms.

This slide closely resembles the future platform vision I have been sharing since 2017 when discussing PLM’s future at PLM conferences and explaining the differences between Coordinated and Connected – see also my presentation here on Slideshare.

You can zoom in on the picture to see the similarities. For me, the differences were interesting to observe. In Jeff’s diagram, the product lifecycle at the top indicates the platform of (central) interest during each lifecycle stage, suggesting a linear process again.

In reality, the flow of information through feedback loops will be there too.

The second exciting detail is that these federated architectures should be based on strong interoperability standards. Jeff is urging other companies, academics and vendors to invest and come to industry standards for Model-Based System Engineering practices. The time is now to act on this domain.

It reminded me again of Marc Halpern’s message mentioned in my previous post (part 1) that we should be worried about vendor alliances offering an integrated end-to-end data flow based on their solutions. This would lead to an immense vendor lock-in if these interfaces are not based on strong industry standards.

Therefore, don’t watch from the sideline; it is the voice (and effort) of the companies that can drive standards.

Finally, during the Q&A part, Jeff made an interesting point explaining Boeing is making a serious investment, as you can see from their participation in all the action groups. They have made the long-term business case.

The team is confident that the business case for such an investment is firm and stable. However, with such a long-term investment without direct results, these projects might come under pressure when the business is under pressure.

The virtual fireside chat

The conference ended with a virtual fireside chat from which I picked up an interesting point that Marc Halpern was bringing in. Marc mentioned a survey Gartner has done with companies in fast-moving industries related to the benefits of PLM. Companies reported improvements in accuracy and product development. They did not see so much a reduced time to market or cost reduction. After analysis, Gartner believes the real issue is related to collaboration processes and supply chain practices. Here lead times did not change, nor the number of changes.

Marc believes that this topic will really show benefits in the future with cloud and connected suppliers. This reminded me of an article published by McKinsey called The case for digital reinvention. In this article, the authors indicated that only 2% of the companies interviewed were investing in a digital supply chain. At the same time, the expected benefits in this area would have the most significant ROI.

The good news: there is consistency, and we know where to focus for early results.

Conclusion

It was a great conference as here we could see digital transformation in action (groups). Where vendor solutions often provide a sneak preview of the future, we saw people working on creating the right foundations based on standards. My appreciation goes to all the active members in the CIMdata A&D action groups as they provide the groundwork for all of us – sooner or later.

After the series about “Learning from the past,” it is time to start looking toward the future. I learned from several discussions that I probably work most of the time with advanced companies. I believe this should motivate companies that lag behind to look into the future even more.

If you look into the future for your company, you need new or better business outcomes. That should be the driver for your company. A company does not need PLM or a Digital Twin. A company might want to reduce its time to market and improve collaboration between all stakeholders. These objectives can be realized by different ways of working and an IT infrastructure to allow these processes to become digital and connected.

That is the “game”. Coming back to the future of PLM. We do not need a discussion about definitions; I leave this to the academics and vendors. We will see the same applies to the concept of a Digital Twin.

My statement: The digital twin is not new. Everybody can have their own digital twin, as long as everybody interprets the definition differently. Does this sound like the PLM definition?

The definition

I like to follow the Gartner definition:

A digital twin is a digital representation of a real-world entity or system. The implementation of a digital twin is an encapsulated software object or model that mirrors a unique physical object, process, organization, person, or other abstraction. Data from multiple digital twins can be aggregated for a composite view across a number of real-world entities, such as a power plant or a city, and their related processes.
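
To make the definition a bit more tangible, here is a minimal Python sketch of an "encapsulated software object" that mirrors one physical entity, plus a composite view across several twins. The class name `DigitalTwin`, the function `composite_view`, the turbine identifiers and the `power_kw` parameter are all hypothetical illustrations, not part of the Gartner definition or of any vendor product.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class DigitalTwin:
    """Encapsulated software object mirroring one unique real-world entity."""
    entity_id: str  # hypothetical identifier of the physical entity
    readings: dict[str, list[float]] = field(default_factory=dict)

    def record(self, parameter: str, value: float) -> None:
        """Store a measurement received from the physical entity."""
        self.readings.setdefault(parameter, []).append(value)

    def latest(self, parameter: str) -> float:
        """Return the most recent value of a parameter."""
        return self.readings[parameter][-1]


def composite_view(twins: list[DigitalTwin], parameter: str) -> float:
    """Aggregate one parameter across several twins (e.g. turbines in a plant)."""
    return mean(twin.latest(parameter) for twin in twins)


# Two hypothetical wind turbines, each with its own twin.
turbine_a = DigitalTwin("turbine-A")
turbine_b = DigitalTwin("turbine-B")
turbine_a.record("power_kw", 950.0)
turbine_b.record("power_kw", 1050.0)

# Composite view across the (hypothetical) plant.
plant_average_kw = composite_view([turbine_a, turbine_b], "power_kw")
```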

As you see, not a narrow definition. Now we will look at the different types of interpretations.

Single-purpose siloed Digital Twins

- Simple – data only

One of the most straightforward applications of a digital twin is, for example, my Garmin Connect environment. My device registers performance parameters (speed, cadence, power, heartbeat, location) when cycling. Then, after every trip, I can analyze my performance. I can see changes in my overall performance; compare my performance with others in my category (weight, age, sex).

Based on that, I can decide if I want to improve my performance. My personal business goal is to maintain and improve my overall performance, knowing I cannot stop aging by upgrading my body.

On November 4th, 2020, I am participating in the (almost virtual) Digital Twin conference organized by Bits&Chips in the Netherlands. In the context of human performance, I look forward to Natal van Riel’s presentation: Towards the metabolic digital twin – for sure, this direction is not simple. Natal is a full professor at the Technical University in Eindhoven, the “smart city” in the Netherlands.

- Medium – data and operating models

Many connected devices in the world use the same principle. An airplane engine, an industrial robot, a wind turbine, a medical device, and a train carriage all track their performance through this connection between physical and virtual, based on some sort of digital connectivity.

The business case here is also monitoring performance, predicting maintenance, and upgrading the product when needed.

This is the domain of Asset Lifecycle Management, a practice that has existed for decades. Based on financial and performance models, the optimal balance between maintaining and overhauling has to be found. Repairs are disruptive and can be extremely costly. A manufacturing site that cannot produce can cost millions per day. Connecting data between the physical and the virtual model allows us to have real-time insights and be proactive. It becomes a digital twin.
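
As a rough illustration of the balance between maintaining and waiting for a repair, the sketch below compares a planned maintenance cost with the expected cost of an unplanned failure. It assumes a simple expected-value model; the function names and all numbers are hypothetical and only serve to show the reasoning, not an actual Asset Lifecycle Management method.

```python
def expected_cost_of_waiting(failure_probability: float,
                             downtime_days: float,
                             downtime_cost_per_day: float,
                             repair_cost: float) -> float:
    """Expected cost if we keep running and repair only after a failure."""
    return failure_probability * (downtime_days * downtime_cost_per_day + repair_cost)


def should_maintain_now(failure_probability: float,
                        planned_maintenance_cost: float,
                        downtime_days: float,
                        downtime_cost_per_day: float,
                        repair_cost: float) -> bool:
    """Recommend planned maintenance when it is cheaper than the expected failure cost."""
    waiting = expected_cost_of_waiting(failure_probability, downtime_days,
                                       downtime_cost_per_day, repair_cost)
    return planned_maintenance_cost < waiting


# Illustrative numbers only: a 10% failure risk (as a twin might estimate it),
# 3 days of downtime at 1,000,000 per day, and a 500,000 repair bill.
decision = should_maintain_now(
    failure_probability=0.10,
    planned_maintenance_cost=200_000,
    downtime_days=3,
    downtime_cost_per_day=1_000_000,
    repair_cost=500_000,
)
```

In this sketch, the value of the connected twin is the failure probability: the better the real-time data, the better that estimate and the decision based on it.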

- Advanced – data and connected 3D model

The digital twin we see the most in marketing videos is a virtual twin, using a 3D representation for understanding and navigation. The 3D representation provides a Virtual Reality (VR) environment with connected data. When pointing at the virtual components, information might appear, or some animation might take place.

Building such a virtual representation is a significant effort; therefore, there needs to be a serious business case.

The simplest business case is to use the virtual twin for training purposes. A flight simulator provides a virtual environment and behavior as if you are flying a physical airplane – the behavior model behind the simulator should match the real behavior as closely as possible. However, as it is a model, it will never be 100% reality and requires updates when new findings or product changes appear.

A virtual model of a platform or plant can be used for training on Standard Operating Procedures (SOPs). In the physical world, there is no place or time to conduct such training. Here the complexity might be lower. There is a 3D Model; however, serious updates can only be expected after a major maintenance or overhaul activity.

These practices are not new either and are used in places where physical training cannot be done.

More challenging is the Augmented Reality (AR) use case. Here the virtual model, usually a lightweight 3D model, connects to real-time data coming from other sources. For example, AR can be used when an engineer has to service a machine. The AR environment might project actual data from the machine and indicate service points and service procedures.

The positive side of the business case is clear for such an opportunity, ensuring service engineers always work with the right information in a real-time context. The main obstacle to implementing AR, in reality, is the access to data, the presentation of the data and keeping the data in the AR environment matching the reality.

And although there are 3D Models in use, they are, to my knowledge, always created in silos, not yet connected to their design sources. Have a look at the Digital Twin conference from Bits&Chips, as mentioned before.

Several of the cases mentioned above will be discussed here. The conference’s target is to share real cases, concluded by Q&A sessions, which are crucial for a virtual event.

Connected Virtual Twins along the product lifecycle

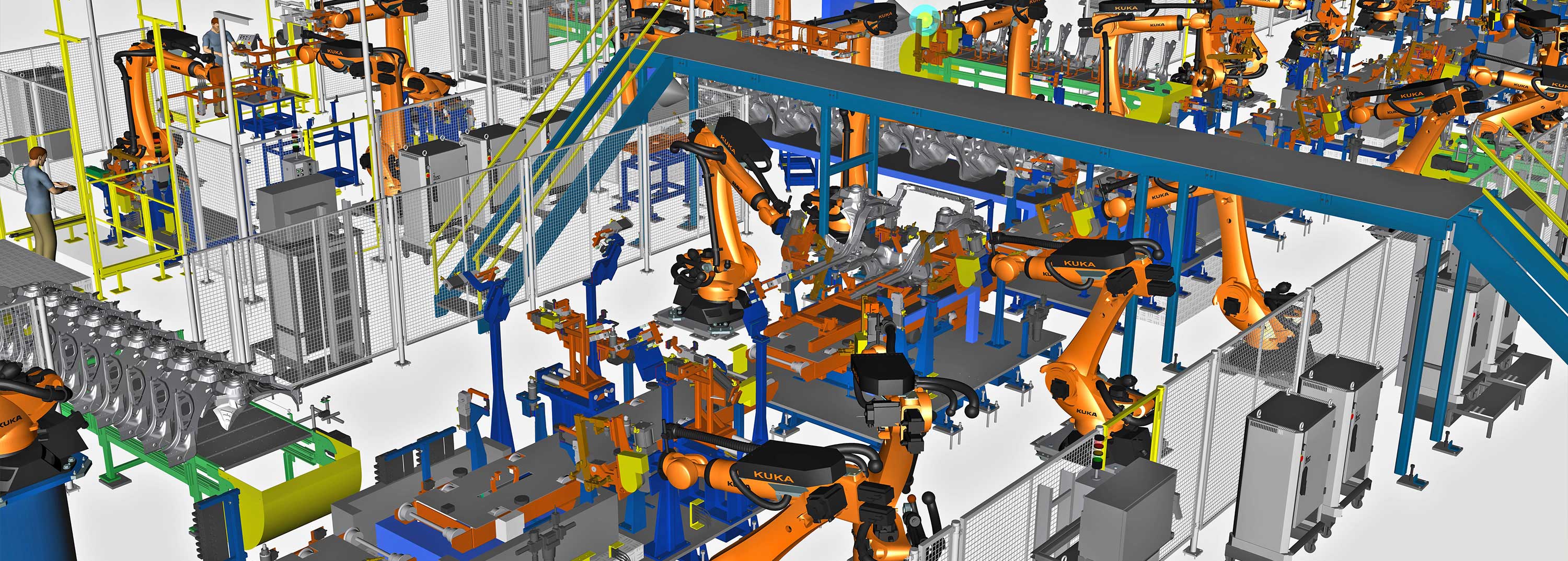

So far, we have been discussing the virtual twin concept, where we connect a product/system/person in the physical world to a virtual model. Now let us zoom in on the virtual twins relevant for the early parts of the product lifecycle, the manufacturing twin, and the development twin. This image from Siemens illustrates the concept:

On the slides, they envision a completely integrated framework, which is the future vision. Let us first zoom in on the individual connected twins.

The digital production twin

This is the area of virtual manufacturing and creating a virtual model of the manufacturing plant. Virtual manufacturing planning is not a new topic. DELMIA (Dassault Systèmes) and Tecnomatix (Siemens) have long offered virtual manufacturing planning solutions.

At that time, the business case was based on the fact that the definition of a manufacturing plant and process done virtually allows you to optimize the plant before investing in physical assets.

Saving money as there is no costly prototype phase to optimize production. In a virtual world, you can perform many trade-off studies without extra costs. That was the past (and, for many companies, still the current situation).

With the need to be more flexible in manufacturing to address individual customer orders without increasing the overhead of delivering these customer-specific solutions, there is a need for a configurable plant that can produce these individual products (batch size 1).

This is where the virtual plant model comes into the picture again. Instead of having a virtual model to define the ultimate physical plant, now the virtual model remains an active model to propose and configure the production process for each of these individual products in the physical plant.
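
As a minimal sketch of this idea, the snippet below treats the plant model as a list of stations with capabilities and derives a route for a single customer-specific order. The `Station` and `Order` classes, the station names and the operations are all hypothetical and only illustrate the principle; a real digital production twin would hold far richer data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Station:
    name: str
    capabilities: frozenset  # operations this (hypothetical) station can perform


@dataclass(frozen=True)
class Order:
    order_id: str
    operations: tuple  # required operations, in sequence


def plan_route(order, stations):
    """Return one station name per required operation, in order.

    Raises ValueError when the plant model has no station for an operation,
    signalling that the plant as modeled cannot build this variant.
    """
    route = []
    for op in order.operations:
        # Pick the first station in the model that offers the operation.
        match = next((s for s in stations if op in s.capabilities), None)
        if match is None:
            raise ValueError(f"no station can perform {op!r} for {order.order_id}")
        route.append(match.name)
    return route


# Illustrative plant model, not taken from any real factory.
PLANT = [
    Station("cell-A", frozenset({"cut", "drill"})),
    Station("cell-B", frozenset({"weld"})),
    Station("cell-C", frozenset({"paint", "assemble"})),
]

order = Order("SO-1001", ("cut", "weld", "paint", "assemble"))
print(plan_route(order, PLANT))
```

The point of the sketch is that the model stays active: every new order is planned against it, instead of the model being archived once the plant is built.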

This is partly what Industry 4.0 is about: using a model-based approach to configure the plant and its assets in a connected manner. The digital production twin drives the execution of the physical plant. The factory has to change from a static factory to a dynamic “smart” factory.

In the domain of Industry 4.0, companies are reporting progress. However, in my experience, the main challenge is still that the product source data is not yet built in a model-based, configurable manner. Therefore, it requires manual rework. This is the area of Model-Based Definition, and I have written about this aspect several times. Latest post: Model-Based: Connecting Engineering and Manufacturing

The business case for this type of digital twin, of course, is to be able to deliver customer-specific products with extremely competitive speed and reduced cost compared to standard. It could be your company’s survival strategy. Although it is hard to predict the future, as we see from COVID-19, it is still crucial to anticipate the future instead of waiting.

The digital development twin

Before a product gets manufactured, there is a product development process. In the past, this was purely mechanical with some electronic components. Nowadays, many companies are actually manufacturing systems, as the software controlling the product plays a significant role. In this context, model-based systems engineering is the upcoming approach to defining and testing a system virtually before committing to the physical world.

Model-Based Systems Engineering can define a single complex product and perform all kinds of analyses on the system even before there is a physical system in place. I will explain more about model-based systems engineering in future posts. In this context, I want to stress that having a model-based system engineering environment combined with modularity (do not confuse it with model-based) is a solid foundation for dealing with unique custom products. Solutions can be configured and validated against their requirements already during the engineering phase.
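
To make the combination of modularity and validation a bit more concrete, here is a small sketch that checks a configuration of modules against requirements before anything physical exists. The module catalog, attribute names and requirement limits are invented for illustration; real model-based systems engineering tools work with much richer models.

```python
import operator

# Hypothetical module catalog: each module contributes to system-level attributes.
MODULES = {
    "motor-S": {"power_kw": 5, "mass_kg": 20},
    "motor-L": {"power_kw": 12, "mass_kg": 45},
    "frame-light": {"power_kw": 0, "mass_kg": 30},
    "frame-heavy": {"power_kw": 0, "mass_kg": 60},
}

# Hypothetical requirements: (label, attribute, comparison, limit).
REQUIREMENTS = [
    ("REQ-1 power at least 10 kW", "power_kw", operator.ge, 10),
    ("REQ-2 mass at most 80 kg", "mass_kg", operator.le, 80),
]


def aggregate(config):
    """Sum every attribute over the modules in a configuration."""
    totals = {}
    for module_name in config:
        for attribute, value in MODULES[module_name].items():
            totals[attribute] = totals.get(attribute, 0) + value
    return totals


def failed_requirements(config):
    """Return the labels of all requirements the configuration violates."""
    totals = aggregate(config)
    return [
        label
        for label, attribute, check, limit in REQUIREMENTS
        if not check(totals[attribute], limit)
    ]


print(failed_requirements(["motor-L", "frame-light"]))  # valid configuration
print(failed_requirements(["motor-S", "frame-heavy"]))  # underpowered variant
```

Because the modules and the requirements are both explicit data, every customer-specific variant can be checked the same way during the engineering phase.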

The business case for the digital development twin is easy to make. Shorter time to market, improved and validated quality, and reduced engineering hours and costs compared to traditional ways of working. To achieve these results, for sure, you need to change your ways of working and the tools you are using. So it won’t be that easy!

For those interested in Industry 4.0 and the Model-Based Systems Engineering approach, join me at the upcoming PLM Road Map 2020 and PDT 2020 conference on 17-18-19 November. As you can see from the agenda, there is a lot of attention to the Digital Twin and Model-Based approaches.

Three digital half-days, hopefully with a lot to learn while keeping our feet on the ground. In particular, I am looking forward to Marc Halpern’s keynote speech: Digital Thread: Be Careful What you Wish For, It Just Might Come True

Conclusion

It has been very noisy on the internet related to product features and technologies, probably due to COVID-19 and therefore disrupted interactions between all of us – vendors, implementers and companies trying to adjust their future. The Digital Twin concept is an excellent framing for a concept that everyone can relate to. Choose your business case and then look for the best matching twin.

This is almost my last planned post related to the concepts of model-based. After having discussed Model-Based Systems Engineering (needed to develop complex products/systems including hardware and software) and Model-Based Definition (creating an efficient connection between Engineering and Manufacturing), my last post will be related to the most over-hyped topic: The Digital Twin

There are several reasons why the Digital Twin is over-hyped. One of the reasons is that the Digital Twin is not necessarily considered a PLM-related topic. Other vendors like SAP (the network of digital twins), Oracle (Digital Twins for IoT applications) and GE with their Predix platform also contributed to the hype related to the digital twin. The other reason is that the concept of Digital Twin is a great idea for marketers to shine above the clouds. A recent comment from Monica Schnitger says it all in her post 5 quick takeaways from Siemens Automation summit. Monica’s takeaway related to Digital Twin:

The whole digital twin concept is just starting to gain traction with automation users. In many cases, they don’t have a digital representation of the equipment on their lines; they may have some data from the equipment OEM or their automation contractors but it’s inconsistent and probably incomplete. The consensus seemed to be that this is a great idea but out of many attendees’ immediate reach. [But it is important to start down this path: model something critical, gather all the data you can, prove benefit then move on to a bigger project.]

Monica is making the same point I have mentioned several times. There is no digital representation and the existing data is inconsistent. Don’t wait: The importance of accurate data – act now !

What is a digital twin?

I think there are various definitions of the digital twin, and I do not want to get into a definition debate like we had before with the acronyms MBD/MBE (Model Based Definition/Enterprise – the confusion) or even the acronym PLM (classical PLM or digital PLM ?). Let’s agree on the following high-level statements:

- A digital twin is a virtual representation of a physical product

- The virtual part of the digital twin is defined by what you want to analyze, simulate, predict related to the physical product

- One physical product can have multiple digital twins; only in an ideal world is there potentially a unique digital twin for every physical product in the world

- When a product interacts with the environment, based on inputs and outputs, we normally call it a system. When I use Product, it will be most of the time a System, in particular in the context of a digital twin

Given the above statements, I will give some examples of digital twin concepts:

As a cyclist, I am active on platforms like Garmin and Strava, using a tracking device, heart monitor and a power meter. During every ride, my device plus the sensors measure my performance, and all the data is uploaded to the platform, providing me with a report of where I rode, how fast, and my heartbeat, cadence and power during the ride. On Strava I can see the Flybys (other digital twins that crossed my path and their performances), and I can see per segment how I performed compared to others, filtered by age, by level, etc.

This is the easiest part of a digital twin. Every individual can monitor and analyze their personal behavior and discover trends. Additionally, the platform owner has all the intelligence about all cyclists around the world, how they perform and what would be the best performance per location. And based on their Premium offering (where you pay) they can give you advanced advice on how you can improve. This is the Strava business model, bringing value to the individual while learning from the behavior of thousands. Note that in this scenario there is no 3D involved.

Another known digital twin story is related to plants in operation. In the past 10 years I have been advocating for Plant Lifecycle Management (PLM for Owner/Operators), describing the value of a virtual plant model using PLM capabilities combined with Maintenance, Repair and Overhaul (MRO) in order to reduce downtime. In a nuclear environment the usage of 3D verification, simulation and even control software in a virtual environment, can bring great benefit due to the fact that the physical twin is not always accessible and downtime can be up to several million per week.

The above examples provide two types of digital twins. I will discuss some characteristics in the next paragraphs.

Digital Twin – performance focus

Companies like GE and SAP focus a lot on the digital twin in relation to asset performance. By measuring the performance of assets and comparing it with that of similar assets, the collector of the data can sell predictive maintenance analysis, performance optimization guidance and potentially other value offerings to their customers.

Small improvements in the range of a few percent can have a big impact on the overall net results. The digital twin is crucial in this business model to build up knowledge, analyze and collect it, and sell the knowledge again. This type of scenario is the easiest one. You need products with sensors, and you need an infrastructure to collect the data and to extract and process information in such a way that it can be linked to a behavior model with parameters that influence the model.

Image SAP blogs
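
The chain from sensor data to a behavior model can be sketched in a few lines. The example below assumes a deliberately simple behavior model, a straight line relating load to temperature, fitted with ordinary least squares on fleet data; the readings, the tolerance and the function names are all made up for illustration and do not reflect how GE or SAP implement this.

```python
def fit_line(xs, ys):
    """Fit y = intercept + slope * x with ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def flag_deviations(readings, intercept, slope, tolerance):
    """Return the (load, temperature) readings that deviate from the model."""
    flagged = []
    for load, temperature in readings:
        expected = intercept + slope * load
        if abs(temperature - expected) > tolerance:
            flagged.append((load, temperature))
    return flagged


# Hypothetical fleet data: load in percent, temperature in degrees Celsius.
fleet_loads = [10, 20, 30, 40]
fleet_temps = [35, 45, 55, 65]
intercept, slope = fit_line(fleet_loads, fleet_temps)

# Hypothetical new readings from a single asset.
asset_readings = [(20, 46), (30, 70)]
print(flag_deviations(asset_readings, intercept, slope, tolerance=5))
```

The fitted parameters are the knowledge built up from the fleet; comparing an individual asset against them is what turns raw sensor data into a maintenance or optimization signal.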