This week there was an interesting discussion on LinkedIn initiated by Alex Bruskin from Senticore Technologies. I have known Alex for over 20 years, starting from the SmarTeam days and later through encounters in the PLM space. Alex is a real techie on the outside but also a person with a very creative mind to connect technology to business.

You can see his LinkedIn featured posts here to get an impression.

Where is PLM @ Startups?

This time Alex shared an observation from an event organized by the Pittsburgh Robotics Network, where he spoke with several startups.

His point, and I quote Alex:

Then, I spoke to a number of presenters there, explaining Senticore capabilities and listening to their situation around engineering/ manufacturing.

– many startups offered an add-on to other platforms => an autonomous module for UAV/helicopter/Vehicle. Some offered robotic components or entire robots (robot-dog).

– all startups use #solidworks , and none use #catia or #nx

– none of them have a PLM system nor an MES. I am 90% certain none of them have ERP, either. They all are apparently using #excel for all these purposes.

– only a handful of them are considering getting a PLM system in the near future.

Read the full post here and the comments below to get a broader insight into the topic.

The PLM Doctor knows it all.

The point reminded me of an episode I did together with Helena Gutierrez from Share PLM last year. She asked the same question to the PLM Doctor.

Do you think PLM is only for big corporations or can startups also benefit from it?

You can see the conversation here:

Meanwhile, the PLM Doctor is unemployed due to the lack of incoming questions.

When looking at startups, I could see two paths. One is the traditional path based on historical mechanical PLM, and a second (potential) approach which is based on understanding the future complexity of the startup offering.

There are two paths – path #1

The first evolutionary path, which you might have seen a few times before in my blog posts, is the one depicted by Marc Halpern from Gartner in 2015. At that time, we started discussing Product Innovation Platforms and the new generation of PLM. You can see Marc’s slide below, which is still valid for most situations.

In the slide above, you see the startup company on the left side.

Often the main purpose of a startup company is to be visible on the market with their concept as fast as possible. Startups are often driven by a small group of multifunctional people developing a solution. In this approach, there is no place for reflection on people and processes, as these are considered overhead.

Only when you target a strongly regulated environment, e.g., medical devices or aerospace, do you need to focus on the process too.

Therefore it is logical that most startup companies focus on the tools to develop their solution. A logical path, as what could you do without tools? Next, the choice of the tools will be, most of the time, driven by the team’s experience and available skills in the market.

Again, statistics show it is unlikely that advanced tools like NX or CATIA will be chosen for the design part; mid-market products like SolidWorks or Autodesk products are more likely. And for data management and reporting, the logical tools are the office tools: Excel, Word and Visio.

And don’t forget PowerPoint to sell the solution.

The role of investors is often also here to question investments that are not clearly understood or relevant at that time.

How a startup scales up very much depends on the choices they make for Repeatable business. This is the moment that a company starts to create its legacy. Processes and best practices need to be established, and what you often see is that seasoned people join the company. These people have proven their skills in the past, and most likely, they are willing to repeat this.

And here comes the risk – experienced people come with a much better holistic overview of the product lifecycle aspects. They know what critical steps are needed to move the company to an Integrated business. These experiences are crucial; however, they should not become the new single standard.

Implementing the past is not a guarantee for success in a digital and connected future.

Implementing their past experiences would focus too much on creating a System of Record (PLM 1.0), which is crucial for configuration management, change management and compliance. However, it would also create a productivity dip for those developing the product or solution.

This is the same dilemma that very small and medium enterprises face. They function reasonably well in a Repeatable business. How much should they invest in an Integrated or Collaborating business approach?

Following the evolution path described by Marc Halpern always brings you to the point where technology changes from Coordinated to Connected. This is a challenging and immature topic, which I have discussed in my blog posts and during conferences.

See: The Challenges of a connected ecosystem for PLM or this full series of posts: The road to model-based and connected PLM.

There are two paths – path #2

Another path that startups could follow is a more forward-looking path, understanding that you need a coordinated and connected approach in the long term. For the fastest execution, you would like to work in a multidisciplinary mode in real time, exactly the characteristic of a startup.

However, in path #2, the startup should have a longer-term vision. Instead of choosing the obvious tools, they should focus on their company’s most important value streams. They have the opportunity to select integrated domains that are based on a connected, often model-based approach. Some examples of these integrated domains:

- An MBSE environment focusing on real-time interaction related to product architecture and solution components (RFLP)

- A connected product design environment, where in real-time a virtual product can be created, analyzed, and optimized – connected software might be relevant here.

- A connected product realization environment where product engineering and suppliers work together in real time.

All three examples are typical Systems of Engagement. The big difference with individual tools is that they already focus on multidisciplinary collaboration on a data-driven, model-based approach.

In addition, having these systems in place allows the startup company to invest separately in a System of Record(s) environment when scaling up. This could be a traditional PLM system combined with a Configuration Management System or an Asset Management System.

System of Record choices, of course, depend on the industry needs and the usage of the product in the field. We should not consider one system that serves all; it is an infrastructure.

In the image below, you see the concept of this approach described by Erik Herzog from SAAB Aeronautics during the recent PLM Roadmap / PDT Europe conference. You can read more details of this approach in this post: The Week after PLM Roadmap PDT Europe.

Note: SAAB is not a startup; therefore, they must deal with their legacy. They are now working on business sustainable concepts for the future: Heterogeneous and federated PLM.

My opinion: The heterogeneous and federated approach is the ultimate target for any enterprise. I already mentioned the importance of connected environments regarding digital twins and sustainability. Material properties, process environmental impacts and product behavior coming from the field will only work efficiently if dealt with in a connected and federated manner.

Conclusion

The challenge for startups is that they often start without the knowledge and experience that multidisciplinary collaboration within a value stream is crucial for a connected future. This is a topic that I would like to explore further with startups and peers in my ecosystem. What do you think? What are your questions? Join the conversation.

After “The PLM Doctor is IN #2,” now again a written post in the category of PLM and complementary practices/domains.

After PLM and Configuration Lifecycle Management (CLM) (January 2021) and PLM and Configuration Management (CM) (February 2021), now it is time to address the third interesting topic:

PLM and Supply Chain collaboration.

In this post, I am speaking with Magnus Färneland from Eurostep, a company well known in my PLM ecosystem, through their involvement in standards (STEP and PLCS), the PDT conferences, and their PLM collaboration hub, ShareAspace.

Supply Chain collaboration

The interaction between OEMs and their suppliers has been a topic of particular interest to me. As a warm-up, read my post after CIMdata/PDT Roadmap 2020: PLM and the Supply Chain. In this post, I briefly touched on the Eurostep approach – having a Supply Chain Collaboration Hub. Below is an image from that post – in this case, the Collaboration Hub is positioned between two OEMs.

Recently Eurostep shared a blog post in the same context: 3 Steps to remove data silos from your supply chain addressing the dreams of many companies: moving from disconnected information silos towards a logical flow of data. This topic is well suited for all companies in the digital transformation process with their supply chain. So, let us hear it from Eurostep.

Eurostep – the company / the mission

First of all, can you give a short introduction to Eurostep as a company and the unique value you are offering to your clients?

Eurostep was founded in 1994 by several world-class experts on product data and information management. In the year 2000, we started developing ShareAspace. We took all the experience we had from working with collaboration in the extended enterprise, mixed it with our standards knowledge, and selected Microsoft as the technology for our software platform.

We now offer ShareAspace as a solution for product information collaboration in all three industry verticals where we are active: Manufacturing, Defense and AEC & Plant.

ShareAspace is based on an information standard called PLCS (ISO 10303-239). This means we have a data model covering the complete life cycle of a product from requirements and conceptual design to an existing installed base. We have added things needed, such as consolidation and security. Our partnership with Microsoft has also resulted in ShareAspace being available in Azure as a service (our Design to Manufacturing software).

Why a supply chain collaboration hub?

Currently, most suppliers work in a disconnected manner with their clients – sending files up and down or the need to work inside the OEM environment. What are the reasons to consider a supply chain collaboration hub or, as you call it, a product information collaboration solution?

The hub concept is not new per se. There are plenty of examples of file sharing hubs. Once you realize that sending files back and forth by email is a disaster for keeping control of your information being shared with suppliers, you would probably try out one of the available file-sharing alternatives.

However, after a while, you begin to realize that a file share can be quite time-consuming to keep up to date. Files are being changed. Files are being removed! Some files are enormous, and you realize that you only need a fraction of what is in the file. References within one file to another file become corrupt because the other file has a new version. Etc. Etc.

This is about the time when you realize that you need similar control of the data you share with suppliers as you have in your internal systems. If not better.

A hub allows all partners to continue to use their internal tools and processes. It is also a more secure way of collaboration as the suppliers and partners are not let into the internal systems of the OEM.

Another significant side effect of this is that you only expose the data in the hub intended for external sharing and avoid sharing too much or exposing internal sensitive data.

A hub is also suitable for business flexibility as partners are not hardwired with the OEM. Partners can change, and IT systems in the value chain can change without impacting more than the single system’s connection to the hub.
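The hub idea described above can be illustrated with a minimal Python sketch. It is not how ShareAspace works internally; the names `Item`, `CollaborationHub`, `publish`, `fetch` and the `shareable` flag are all hypothetical, chosen only to show that partners keep their own systems and that only data intended for external sharing ever reaches the hub.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    """A piece of product data in a partner's internal system (hypothetical shape)."""
    item_id: str
    version: int
    payload: str
    shareable: bool = False  # assumption: items are explicitly flagged for external sharing


@dataclass
class CollaborationHub:
    """Holds only what partners publish; it has no access to their internal systems."""
    published: dict = field(default_factory=dict)

    def publish(self, partner: str, items: list) -> int:
        """Copy one partner's shareable items into the hub; return how many were accepted."""
        accepted = [item for item in items if item.shareable]
        for item in accepted:
            # A newer version of the same item replaces the previously published one.
            self.published[(partner, item.item_id)] = item
        return len(accepted)

    def fetch(self, partner: str, item_id: str) -> Item:
        """Return the currently published version of a partner's item."""
        return self.published[(partner, item_id)]


oem_items = [
    Item("spec-001", 3, "pump interface specification", shareable=True),
    Item("cost-001", 1, "internal cost model"),  # stays inside the OEM
]
hub = CollaborationHub()
count = hub.publish("oem", oem_items)
```

Because every partner talks only to the hub, replacing a partner or an internal system changes one `publish` call rather than a web of point-to-point exchanges.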

Should every company implement a supply chain collaboration hub?

Based on your experience, what types of companies should implement a supply chain collaboration hub and what are the expected benefits?

The large OEMs and 1st tier suppliers certainly benefit from this since they can incorporate hundreds, if not thousands, of suppliers. Sharing technical data across the supply chain from a dedicated hub will remove confusion, improve control of the shared data, and build trust with their partners.

With our cloud-based offering, we now also make it possible for at least mid-sized companies (like 200+ employees) to use ShareAspace. They may not have a well-adopted PLM system or the issues of communicating complex specifications originating from several internal sources. Still, they need to be professional in dealing with suppliers.

The smallest client we have is a manufacturer of pool cleaners, a complex product with many suppliers. The company Weda [www.weda.se] has fewer than 10 employees, and they use ShareAspace as SaaS. With ShareAspace, they have improved their collaboration process with suppliers, cut costs and lowered inventory levels.

ShareAspace can really scale big. It serves as a collaboration solution for the two new Aircraft carriers in the UK, the QUEEN ELIZABETH class. The aircraft carriers were built by a consortium that was closed in early 2020.

ShareAspace is being used to hold the design data and other documentation from the consortium to be available to the multiple organizations (both inside and outside of the Ministry of Defence) that need controlled access.

What is the dependency on standards?

I always associate Eurostep with the PLCS (ISO 10303-239) standard, providing an information model for “hardware” products along the lifecycle. How important is this standard for you in the context of your ShareAspace offering?

Should everyone adapt to this standard?

We have used PLCS to define the internal data schema in ShareAspace. This is an excellent starting point for capturing information from different systems and domains and still getting it to fit together. Why invent something new?

However, we can import data in most formats, and it does not have to be according to a standard. When connecting to Teamcenter, Windchill, Enovia, SAP, Oracle, Maximo etc., it is more often in a proprietary format than according to any standards.

On the other hand, in some industries like Defense, standards-based data exchange is required and put into contracts. Sometimes it prescribes PLCS. For the plant industry, it could be CFIHOS or ISO15926.

Supply Chain Collaboration and digital transformation

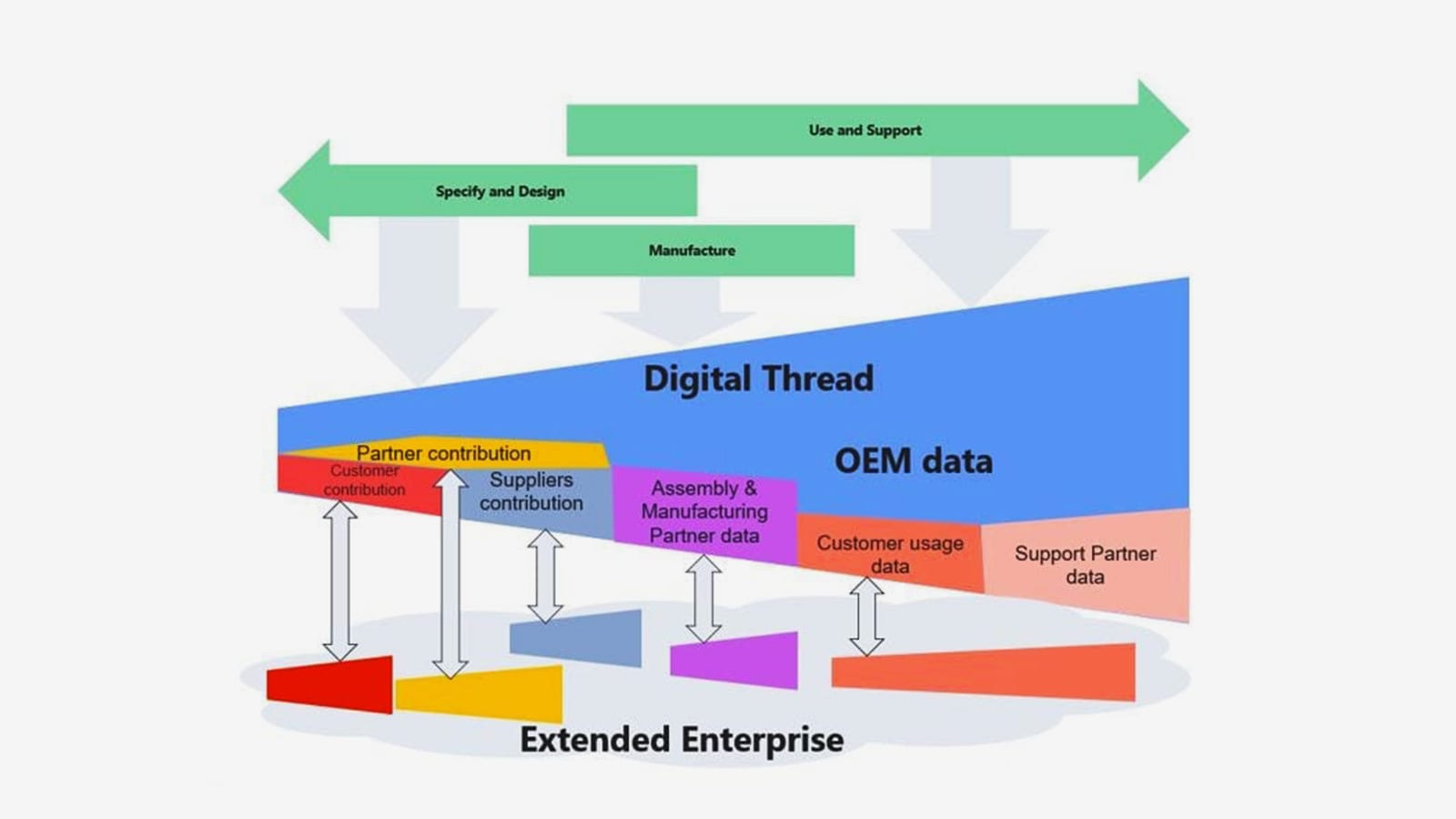

As stated at the beginning of this post, digital transformation is about connecting the information siloes through a digital thread. How important is this related to the supply chain?

Many companies have come a long way in improving their internal management of product data. But still, the exchange and sharing of data with the external world has considerable potential for improvement. Just look at the chaos everyone has experienced with emails, still used a lot, in finding the latest Word document or PowerPoint file. Imagine if you collaborate on a ship, a truck, a power plant, or a piece of complex infrastructure. FTP is not the answer, and for product data, Dropbox is not doing the trick.

A Digital Thread must support versions and changes in all directions, as changes are natural with reasonably advanced products. Much of the information created about or around a product is generated within the supply chain, like production parameters, test and inspection protocols, certifications, and more. Without an intelligent way of capturing this data, companies will continue to spend a fortune on administration trying to manage this manually.

As the Digital Thread extends across the value chain, a useful sharing tool is needed to allow for configuration management across the complete chain – ShareAspace is designed for this. The great thing with PLCS is that it gives a standard model for the Digital Thread covering several Digital Twins. PLCS adds the life cycle component, which is essential, and there is no alternative. Therefore, we are welcome with ShareAspace and PLCS to add capabilities to snapshot standards like IFC etc., that are outside the STEP series of standards.

Learning more

We discussed that a supply chain collaboration hub can have specific value to a company. Where can readers learn more?

There is a lot of information available. Of course, on our Eurostep website, you will find information under the tab Resources or on the ShareAspace website under the tab News.

Other sources are:

What I have learned

- I am surprised to see that the type of Supplier Collaboration Platform delivered by Eurostep is not a booming market. Where Time to Market is significantly impacted by how companies work with their suppliers, most companies still rely on the exchange of data packages.

- The most advanced exchanges are using a model-based definition if relevant. Traditional PLM Vendors will not develop such platforms as the platform needs to be agnostic in both directions.

- Having a recommended data model based on PLCS or a custom data model in case of a large OEM can provide such a collaboration hub. It is relatively easy to implement (as you do not change your own PLM) and relatively easy to scale (adding a new supplier is easy). For me, the supplier collaboration platform is a must in a modern, digitally connected enterprise.

Conclusion

A lot of marketing money is spent on “Digital Thread” or “Digital Continuity”. If you are looking at the full value chain of product development and operational support, there are still many manual hand-over processes with suppliers. A supplier collaboration hub might be the missing piece of the puzzle to realize a real digital thread or continuity.

Over the past year, I have written about PLM in the context of digital transformation, relevant for companies that deliver products to the market. Some years ago, I advocated the value of a PLM infrastructure for EPC companies and Owners/Operators of a plant.

EPC stands for Engineering, Procurement, and Construction, a typical name for often large, capital-intensive projects executed by a consortium of companies. Together they create buildings, platforms, plants, infrastructure and other one-off deliveries, which will be under the control of the Owner/Operator after going live.

Some references:

2014 EPC related: The year the construction industry did not discover PLM

2013 Owner/Operators related: PLM for all industries?

As you can see from the dates, these are not my most recent posts. Meanwhile, EPC-based businesses are discovering the value of a PLM infrastructure. The main component for them is BIM (Building Information Model or Building Information Management), and they use cloud-based collaboration environments to be more cost-efficient. Slowly these companies are moving to a single repository of data that supports multidisciplinary collaboration around a BIM model, to guarantee continuity of data and better execution. I am positive about EPC companies discovering the value of PLM. It might be slightly different from classical product-selling companies, mainly because data ownership is different. In an EPC environment, many companies are responsible for parts of the data, and each of them keeps the real knowledge as IP (Intellectual Property) for itself. They only “publish” deliverables. For companies that deliver products to the market, the OEM keeps responsibility for all relevant product information and has a different strategy.

In the past, I worked with one of my peers, Bjorn Fidjeland (www.plmpartner.com), on PLM for EPCs and Owner/Operators. We share the same passion to bring PLM outside traditional industries. As Bjorn is now more active than I am in this domain, I recommend reading Bjorn's posts on this topic. For example:

EPC related 2016: Handover to logistics and supply chain in capital projects

Owner/Operators 2015: Plant Information Management – Information Structures

Bjorn provides a lot of details, which are important as implementing PLM for EPCs or Owner/Operators requires different data structures. I wrote about these concepts in 2014 in two posts – PLM and/or SLM? post 1 and post 2. At that time, I did not realize the virtual twin was becoming popular.

PLM complementary to EAM

The last year I have explored these concepts together with (potential) Owner/Operators of a plant, where PLM would be complementary to their EAM system. In the world of Owner/Operators, Enterprise Asset Management (EAM) software is the major software these companies use. You find some of the major EAM players here.

You will discover that all these software suites are good for plant operations, but they all struggle to support data consistency and quality, in particular when dealing with plant changes and efficient, high-quality plant information management. Versioning and status management, typical PLM capabilities, are often not there.

Owner/Operators have challenges with EAM environments as:

- EAM systems are designed to support an as-operated environment, assuming all data is correct. Support for Maintenance, Repair or Overhaul projects is often rudimentary and depends on document-driven processes. The primary business process of these companies is continuous production of, for example, electricity or chemicals. Therefore, typical engineering projects to change or enhance the main production process do not have the same financial focus.

- A document-driven approach is the de facto standard common for these industries. Most of the time because the plant has been established through an EPC approach, which was 100 % document-driven due to the different disconnected disciplines/tools working at that time in the EPC project. As the asset information is stored and delivered in documents, most owners/operators keep the document-driven approach for future change projects.

Owners/operators can benefit significantly from a data-driven PLM system as complementary infrastructure to their EAM system. The PLM system will be the source for accurate asset information, manage the change and approvals for the assets and ultimately push the newly released information to the EAM system. The PLM system will offer the full history and traceability of decisions made, important for regulatory bodies or insurance companies.
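The division of roles described above can be sketched in a few lines of Python. This is only an illustration under assumptions: the classes `PlmSystem`, `EamSystem` and `AssetRevision`, the status values and the asset tag `P-101` are hypothetical and do not represent any vendor's API. The sketch shows the PLM side owning revisions, approvals and the audit trail, while the EAM side only ever receives released information.

```python
from dataclasses import dataclass, field


@dataclass
class AssetRevision:
    tag: str                   # hypothetical asset identifier
    revision: int
    data: dict
    status: str = "in_work"    # assumed lifecycle: in_work -> released


@dataclass
class EamSystem:
    """As-operated view: holds only the latest released data per asset."""
    as_operated: dict = field(default_factory=dict)

    def receive(self, rev):
        if rev.status != "released":
            raise ValueError("EAM accepts released revisions only")
        self.as_operated[rev.tag] = dict(rev.data, revision=rev.revision)


@dataclass
class PlmSystem:
    """Source of asset information: manages change, approval and history."""
    revisions: dict = field(default_factory=dict)  # tag -> list of AssetRevision
    history: list = field(default_factory=list)    # audit trail of decisions

    def propose_change(self, tag, data, author):
        revs = self.revisions.setdefault(tag, [])
        rev = AssetRevision(tag, len(revs) + 1, data)
        revs.append(rev)
        self.history.append((tag, rev.revision, "proposed", author))
        return rev

    def approve(self, rev, approver, eam):
        rev.status = "released"
        self.history.append((rev.tag, rev.revision, "released", approver))
        eam.receive(rev)  # push only after approval


plm, eam = PlmSystem(), EamSystem()
r1 = plm.propose_change("P-101", {"capacity_m3h": 50}, "engineer")
plm.approve(r1, "plant manager", eam)
r2 = plm.propose_change("P-101", {"capacity_m3h": 65}, "engineer")  # not yet approved
```

In this sketch the second change stays inside the PLM system until it is approved, so operations keep working with revision 1, and the `history` list records who proposed and who released each revision.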

A data-driven approach for asset information allows owners/operators to benefit from efficient processes, strongly reducing the number of people required to process data (documents) and the time people working in maintenance and operations spend searching for data. I found a nice slide from IBM explaining the concept of PLM and EAM collaboration – see below:

The same benefits that modern digital enterprises gain from a data-driven approach will become available for owner/operators. Operational management is supported by the EAM system combined with real-time capabilities provided by a modern PLM system to analyze, design and deliver changes to the plant without a costly data conversion process (e.g. compiling new documents) and disconnected processes.

Moving to a virtual twin

Interestingly enough, the digital transformation is bringing the concepts of connecting engineering, manufacturing and operations together into an infrastructure of interacting digital platforms. Where owners/operators historically did not focus on optimizing the engineering process to build and maintain their assets, companies in the “classical” industries were not really focusing on how products behaved in the field after they were delivered. With digital continuity (the digital thread) and IoT, these “classical” companies can now connect to their products in the field. Their products become assets of information, and in case these companies change their business offering into leasing products and services, these assets become managed assets, like the assets owner/operators are managing.

The concept of a virtual twin (or digital twin – image proprietary of GE), where a virtual model-based environment is linked to one or more real instances in operations, is the dream of all industries. Preparing, simulating and verifying changes in a virtual world is so much more efficient and cheaper that it allows for higher quality of products, and in the case of plant operators, higher safety will be the number one topic.

Conclusion

What I have learned so far from plant owners/operators is that they are struggling to grasp a modern digital enterprise concept as their current environment is not model-based but document-driven. Starting with PLM to complement their EAM system could be a first step to understand the value and business benefits of digital continuity. It requires a new way of thinking which is not a commodity at this time. It will happen in the next 5 to 10 years. Expect it to be driven by the realization of virtual twins in the industry and further BIM maturity. The future is model-based !!!

p.s. I am happy to announce WordPress provided a new feature to my blog. In the side panel you can now choose your language (based on Google Translate) if you have difficulties with English. Enjoy !

Coming back from holiday (a life without PLM), it is time to pick up blogging again. And like every start, the first step is to take stock of where we are now (with PLM) and where PLM is heading. Of course, it remains an opinion based on dialogues I had this year.

First, and perhaps this is biased, there is a hype in the LinkedIn groups and blogs that I follow, a kind of enthusiasm coming from OnShape and Oleg Shilovitsky's new company OpenBOM: the hype of cloud services for CAD/data management and BOM management.

Two years ago I discussed at some PLM conferences that PLM should not necessarily be linked to a single PLM system. The functionality of PLM might be delivered by a collection of services, most likely cloud-based, with these services together providing support for the product lifecycle. In 2014 I worked with Kimonex, an Israeli startup that developed a first online BOM solution, targeting early design collaboration. Their challenge was to find customers that wanted to start this unknown journey. Cloud-based meant real-time collaboration, and this is also what Oleg wrote about last week: Real-time collaborative edit is coming to CAD & PLM

Real-time collaboration is one of the characteristics of a digital enterprise, where, because information is stored as data, it can flow rapidly through an organization. Data can be combined and used by anyone in the organization in a certain context. This approach removes the barriers between PLM and ERP. In my opinion, there is no barrier between PLM and ERP. The barrier that companies create exists because people believe PLM is a system, and ERP is a system. This way of (system) thinking comes from the previous century.

So is the future about cloud-based, data-driven services for PLM?

In my opinion, systems are still the biggest inhibitor for modern PLM. Without any statistical analysis, based on my impressions and gut feelings, this is what I see:

- The majority of companies that say they DO PLM actually do PDM. They believe it is PLM because their vendor is a PLM company and they have bought a PLM system. However, in reality, the PLM system is still used as an advanced PDM system by engineering, which at the end pushes information (sometimes still manually) into the well-established ERP system. Check in your company which departments are working in the PLM system – anyone beyond engineering?

- There is a group of companies that have implemented PLM beyond their engineering department, connecting to their suppliers in the sourcing and manufacturing phase. Most of the time the OEMs force their suppliers to deliver data in an old-fashioned way or, sometimes more advanced, integrated in the OEM environment. In this case, the supplier has to work in two systems: their own PDM/PLM environment and the OEM environment. Not efficient, yet still the way traditional PLM vendors promote partner / supply chain integration.

This is an area where you might think that a services-based environment like OnShape or OpenBOM might help to connect the supply chain. I think so too. Still, before we reach this stage there are some hurdles to overcome:

Persistence of data

The current generation in management of companies older than 20 years grew up with the belief that “owning data” is the only way to stay relevant in business. Even open innovation is a sensitive topic. What happens with data your company does not own because it is in the cloud, in an environment you do not own (but share)? As long as companies insist on owning the data, a service-based PLM environment will not work.

A nice compromise at this time is ShareAspace from EuroStep. I wrote about ShareAspace last year when I attended sessions from Volvo Car (The weekend after PI Munich 2016). ShareAspace was used as middleware to map and connect between two PLM/PDM environments. In this way, persistence of data remains. The ShareAspace data model is based on PLCS, which is a standard in the core industries. And standards are, in my opinion, the second hurdle for a services-based approach.

Standards

A standard is often considered as overhead, and the reason for that is that often a few vendors dominate the market in a certain domain and therefore become THE standard. Similar to persistence of data, what is the value of data that you own but that you can only access through a vendor's particular format?

Good for the short term, but what about the long term? (Most of the time we do not think about the long term and consider interoperability problems as a given.) Also, a services-based PLM environment requires support for standards to avoid expensive interfaces and lack of long-term availability. Check in your company how important standards are when selecting and implementing PLM.

Conclusion

There is a nice hype for real-time collaboration through cloud solutions. For many existing companies this is not good enough, as there is a lot of history and the desire to own data. Young companies that discover the need for a modern services-based solution might be tempted to build such an environment. For these companies, long-term availability might be a topic to study.

Note: I just realized that if you are interested in persistency and standards you should attend PDT 2016 on 9 & 10 November in Paris. Another interesting post just published by Lionel Grealou, Single Enterprise BOM: Utopia vs Dystopia, also touches on this subject.

Someone notified me that not everyone subscribed to my blog will necessarily read my posts on LinkedIn. Therefore, in the upcoming weeks I will repost some of my more business-oriented posts from LinkedIn here too. This post was from July 3rd and is an introduction to all the methodology posts I am currently publishing.

The importance of a (PLM) data model

What makes it so hard to implement PLM in a correct manner and why is this often a mission impossible? I have been asking myself this question the past ten years again and again. For sure a lot has to do with the culture and legacy every organization has. Imagine if a company could start from scratch with PLM. How would they implement PLM nowadays?

My conclusion for both situations is that it all leads to a correct (PLM) data model, allowing companies to store their data in an object-oriented manner, reflecting the behavior the information objects have and the way they mature through their information lifecycle. If you make compromises here, it has an effect on your implementation, on the way processes are supported out-of-the-box by a PLM system, and on how information can be shared with other enterprise systems, in particular ERP. PLM is written in parentheses as I believe in the future we will not talk about PLM or ERP separately anymore – we will talk business.

Let me illustrate this academic statement.

A mid-market example

When I worked with SmarTeam in the nineties, the system was designed more as a PDM system than a PLM system. The principal objects were Projects, Documents, and Items. The Documents had a sub-grouping in Office documents and CAD documents. And the system had a single lifecycle which was very basic and designed for documents. Thanks to the flexibility of the system you could quickly implement a satisfactory environment for the engineering department. Problems (and customizations) came when you wanted to connect the data to the other departments in the company.

The sales and marketing department defines and sells products. Products were not part of the initial data model, so people misused the Project object for that. To connect to manufacturing, a BOM (Bill of Material) was needed. As the connected 3D CAD system generated a structure while saving the assemblies, people started to consider this structure as the EBOM. This might work if your projects are mechanical only.

However, a Document is not the same as a Part. A Document has a completely different behavior from a Part. Documents have continuous iterations, with a check-in/check-out mechanism, whereas the Part definition remains unchanged and meanwhile reaches a higher maturity.

The correct approach is to have the EBOM as a Part structure, where Parts connect to the Documents. And yes, Documents can also have a structure, but it is not a BOM. SmarTeam implemented this around 2004. Meanwhile, a lot of companies had implemented their own EBOM solution through customization that did not match this approach. This created a first level of legacy.
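To make the difference in behavior concrete, here is a minimal sketch in Python. The class names, maturity states and numbers are hypothetical and chosen for illustration only; they do not reflect SmarTeam's actual data model. It shows a Document that iterates through check-out/check-in, and a Part that keeps its definition, gains maturity, carries the EBOM structure and links to its Documents.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical maturity states; a real PLM system defines its own lifecycle.
PART_STATES = ["In Work", "Prototype", "Released"]


@dataclass
class Document:
    """A Document iterates: every check-in creates a new iteration."""
    number: str
    iteration: int = 1
    checked_out: bool = False

    def check_out(self) -> None:
        if self.checked_out:
            raise RuntimeError(f"{self.number} is already checked out")
        self.checked_out = True

    def check_in(self) -> None:
        if not self.checked_out:
            raise RuntimeError(f"{self.number} is not checked out")
        self.checked_out = False
        self.iteration += 1


@dataclass
class BomLine:
    """One EBOM relation: a child Part and its quantity."""
    child: "Part"
    quantity: int


@dataclass
class Part:
    """A Part keeps its definition and only gains maturity."""
    number: str
    state: str = PART_STATES[0]
    documents: List[Document] = field(default_factory=list)
    children: List[BomLine] = field(default_factory=list)

    def promote(self) -> None:
        # Move to the next maturity state, if there is one.
        idx = PART_STATES.index(self.state)
        if idx + 1 < len(PART_STATES):
            self.state = PART_STATES[idx + 1]

    def add_child(self, child: "Part", quantity: int) -> None:
        self.children.append(BomLine(child, quantity))


drawing = Document("DOC-001")
bracket = Part("PRT-100", documents=[drawing])
assembly = Part("PRT-200")
assembly.add_child(bracket, quantity=4)   # the EBOM is a Part structure

drawing.check_out()
drawing.check_in()    # the Document iterates
bracket.promote()     # the Part only matures
```

In this sketch the EBOM lives entirely in the Part-to-Part relations; the CAD structure would be a separate Document-to-Document structure, which is exactly why it should not be mistaken for a BOM.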

When SmarTeam implemented Part behavior, it became possible to create a multidisciplinary EBOM, and the next logical step was, of course, to connect the data to the ERP system. At that time, most implementations pushed the EBOM to the ERP system and let it live on there. ERP was the enterprise tool, SmarTeam the engineering tool. The information became disconnected in an IT manner. Applying changes and defining a manufacturing BOM was done manually in the ERP system and could only be done by (experienced) people who do not make mistakes.

The next challenge came when you wanted to automate the connection to ERP. In that case, it became apparent that the EBOM and MBOM should reside in the same system, in order to manage changes and to implement these changes quickly without too much human intervention. (See this old and still relevant post with comments: Where is the MBOM) And as the EBOM is usually created in the PLM system, the (commercial/emotional) PLM-ERP battle started. “Who owns the part definition” and “Who owns the MBOM definition” became the topics of many PLM implementations. The real questions should be: “Who is responsible for which attributes of the Part?” and “Who is responsible for which part of the MBOM definition?”, as data should be shared, not owned.
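The idea of responsibility per attribute, rather than ownership per system, can be sketched as follows. The attribute names and roles are hypothetical and only serve as an illustration: one shared Part record, where each attribute can be changed only by the discipline responsible for it.

```python
# Hypothetical attribute-to-role mapping, for illustration only.
RESPONSIBLE = {
    "description": "engineering",
    "material": "engineering",
    "make_or_buy": "manufacturing",
    "standard_cost": "finance",
}


class SharedPart:
    """One shared Part record; responsibility is defined per attribute."""

    def __init__(self, number):
        self.number = number
        self.attributes = {}

    def set_attribute(self, name, value, role):
        responsible = RESPONSIBLE.get(name)
        if responsible is None:
            raise KeyError(f"unknown attribute: {name}")
        if responsible != role:
            raise PermissionError(f"{role} is not responsible for {name}")
        self.attributes[name] = value


part = SharedPart("PRT-100")
part.set_attribute("material", "AlMg3", role="engineering")
part.set_attribute("make_or_buy", "buy", role="manufacturing")
```

Everybody reads the same record; the only thing that differs per discipline is which attributes they are allowed to change.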

The SmarTeam evolution shows how a changing scope and an incomplete/incorrect data model lead to costly rework when aligning to the mainstream. And this is happening with many implementations and other PLM systems, in particular when the path is to grow from PDM to PLM. An important question remains what is going to be mainstream in the future. More on that in my conclusion.

A complex enterprise example

In the recent years, I have been involved in several PLM discussions with large enterprises. These enterprises suffer from their legacy. Often the original data management was not defined in an object-oriented manner, and the implementation has been expanding with connected and disconnected systems like a big spaghetti bowl.

The main message most of the time is:

“Don’t touch the system as it works for us”.

The underlying message is:

“We would love to change to a modern approach, but we understand it will be a painful exercise and how will it impact profitability and execution of our company”

The challenge these companies have is that it is extremely hard to imagine the potential to-be situation and how it is affected by the legacy. In a project that I participated in several years ago, the company was migrating from a mainframe database towards a standard object-oriented (PLM) data model. The biggest pain was in mapping data towards the object-oriented data model. As the original mainframe database had all kinds of tables with flags and mixed Part & Document data, it was almost impossible to make a 100 % conversion. The other challenge was that knowledge of the old system had vaporized. The result at the end was a customized PLM data model, closer to current reality, still containing legacy “tricks” to assure compatibility.

All these enterprises have to go through such a painful exercise at some point in time. When is the best moment? When business is booming, nobody wants to slow down. When business is in a lower gear, costs and investments are minimized to keep the old engine running efficiently. I believe the latter would be the best moment to invest in making the transition, if you believe your business will still exist 10 years from now.

Back to the data model.

Businesses today should have a high-level, object-oriented data model describing the main information objects and their behavior in the organization. The term Master Data Management is related to this. How many companies have the time and skills to implement a future-oriented data model? And the data model must stay flexible for the future.

Compare it to your brain, which also stores information by its behavior, and by learning the brain understands what is logically related. The internal data model gets enriched while we learn.

Once you have a business data model, you are able to implement processes on top of it. Processes can change over time, therefore, avoid hard-coding specific processes in your enterprise systems. Like the brain, we can change our behavior (applying new processes) still it will be based on the data model stored inside our brain.
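One way to read “avoid hard-coding processes” is to keep the process as data next to a stable information object. The sketch below uses hypothetical state names and a hypothetical `advance` helper to illustrate the idea; it is not how any particular PLM system implements workflows.

```python
# Hypothetical process definitions: each state lists the states it may move to.
RELEASE_PROCESS = {
    "In Work": ["In Review"],
    "In Review": ["In Work", "Released"],
    "Released": [],
}

# A different process on the same data model; no code change is needed.
FAST_TRACK_PROCESS = {
    "In Work": ["Released"],
    "Released": [],
}


class InformationObject:
    """The stable part: the object and its current state."""

    def __init__(self, name, state="In Work"):
        self.name = name
        self.state = state


def advance(obj, new_state, process):
    """Move obj to new_state if the given process allows it."""
    allowed = process.get(obj.state, [])
    if new_state not in allowed:
        raise ValueError(f"{obj.state} -> {new_state} is not allowed")
    obj.state = new_state


item = InformationObject("PRT-100")
advance(item, "In Review", RELEASE_PROCESS)
advance(item, "Released", RELEASE_PROCESS)
```

Changing the process here means swapping the table that is passed in, while `InformationObject` and `advance` stay untouched.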

Conclusion:

A lot of enterprise PLM implementations are in a challenging situation due to legacy or an incomplete understanding and availability of an enterprise data model. Therefore cross-department implementations and connecting other systems are considered as a battle between systems and their proprietary capabilities.

The future will be based on business platforms, and realizing this takes years – imagine openness and usage of data standards. An interesting conference to attend in the near future for this purpose is the PDT2015 conference in Stockholm.

Meanwhile I also learned that a one-day Master Data Management workshop will be held before the PDT2015 conference starts on the 12th of October. A good opportunity to deep-dive for three days !

This time I would like to receive some feedback from my readers, as I believe the topic I am discussing here might be similar to a PLM / ERP discussion – a discussion between religions. For the past two years I have preached a more data-centric approach for PLM instead of file management, and related to this data-centric approach, the concept of a PLM platform (Business Platform in CIMdata's terms, Innovation Platform in Gartner's) becomes clear.

What´s the issue?

As I wrote in my earlier post (random PLM future thoughts), I realized that talking about platforms is not that straightforward when meeting companies with their history and terminology. Some claim they are already using a business platform; others have no clue what makes a platform different from their current PLM implementation. Therefore I will summarize the different approaches I have seen in my network and give a non-academic opinion as a base for discussion. Looking forward to your opinion.

The platform approach

My definition of a PLM platform:

- A central repository of data based on a core data model. Information is stored as data in a unique way

- On top of this repository, applications can run, using a subset of the overall data elements, providing dedicated functionality and a user interface to a particular user / role

- Access to the platform is provided through web-technology. Storage could be on the cloud.

- External applications and data can be connected through an open (standardized?) API embedded or federated

- The PLM platform can be a collection of services and functionality coming from various vendors / suppliers – the app store concept

- The platform approach is THE DREAM for business, being flexible to combine and edit data in any desired context in dedicated apps / environments

In the PLM world, Dassault Systèmes with their 3DExperience approach is following this trend, although here you might argue about the ease of adding external apps to this platform – is it open? Aras and Autodesk might also claim they have a PLM platform, where you might question the same, and whether the depth of the data model and the provided solutions on top of the data model are mature enough. Finally, SAP can also be considered as a platform, but I would not name it a PLM platform at this moment in time. An important question for me would be: how can we achieve openness of a PLM platform?

Your thoughts?

The PLM backbone approach

My definition of a PLM backbone:

- The core PLM functionality is provided by a single, proprietary PLM system

- Additional functionality that is not part of the core development (acquisitions) is connected to the backbone through proprietary interfaces

- External authoring tools are linked to the backbone through integrations or interfaces which could be developed by third parties

- External systems can interface to the PLM backbone through open interfaces

- The PLM backbone is THE DREAM for engineering, as historically this was the domain where PLM started to be implemented

I would consider Siemens and PTC (see picture) the best examples of a PLM backbone approach with their PLM portfolio. Teamcenter and Windchill are both rich PLM systems, further connected to several systems, covering the product lifecycle. I am not expert enough to state that the same conclusion is valid for Oracle's Agile, where I believe the backbone is bigger than the PLM system. What do you think? Will these PLM vendors also move to a platform approach? And what will be the platform?

The Service Bus approach

My understanding of the Service Bus (I am not an IT-expert):

- Service Bus has a standardized interface to request for data or to post data that needs to be stored in other systems

- The Service Bus approach reduces the amount of (custom) interfaces between systems by requiring standardized inputs and outputs per system

- Providing a user with information that is not entirely available in a single system, the service bus needs to acquire the data from other systems, which might not give a high-performance as expected by business people

- The Service Bus is the IT DREAM as it simplifies the complexity for IT to manage point-to-point solutions between systems and makes an upgrade strategy easier to support.
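The points above can be sketched in a few lines of Python. This is a toy, in-memory illustration under my own assumptions: `ServiceBus`, `pdm_lookup`, `erp_lookup` and the returned values are hypothetical stand-ins, not a real enterprise service bus product or API. Each system registers once with a standardized request interface, and a view for the user is assembled by asking the bus instead of building point-to-point interfaces.

```python
from typing import Callable, Dict


class ServiceBus:
    """Toy bus: every system registers once, with the same request interface."""

    def __init__(self) -> None:
        self._providers: Dict[str, Callable[[str], dict]] = {}

    def register(self, data_type: str, provider: Callable[[str], dict]) -> None:
        self._providers[data_type] = provider

    def request(self, data_type: str, key: str) -> dict:
        if data_type not in self._providers:
            raise LookupError(f"no system provides {data_type}")
        return self._providers[data_type](key)


# Hypothetical stand-ins for real systems connected to the bus.
def pdm_lookup(part_number: str) -> dict:
    return {"part": part_number, "revision": "B"}


def erp_lookup(part_number: str) -> dict:
    return {"part": part_number, "stock": 120}


def part_overview(bus: ServiceBus, part_number: str) -> dict:
    """Combine data from several systems; each request is a separate round trip."""
    view: dict = {}
    for data_type in ("engineering", "inventory"):
        view.update(bus.request(data_type, part_number))
    return view


bus = ServiceBus()
bus.register("engineering", pdm_lookup)
bus.register("inventory", erp_lookup)
overview = part_overview(bus, "PRT-100")
```

The loop in `part_overview` also hints at the performance concern mentioned above: the more systems a single user view depends on, the more round trips are needed before anything can be shown.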

From a very high-level view, the service bus approach has some similarities to a platform. The service bus concept allows business to select the systems they like the most (provided they connect to the service bus) – Image property of IBM.com

The main difference would be the persistence of information, where is the real data stored? I came across the service bus approach more often in the past, where the target was most of the time to integrate the PDM functionality (PLM as an enterprise solution was never in scope here).

For the Service Bus approach, I am curious to learn its relevance for future PLM implementations as the challenge would be to provide any user in the company with the relevant information in context. Is the service bus going to be replaced by the platform? Who would be the major players here?

The Business Intelligence approach

This method I discovered in project-centric companies (Oil & Gas companies, EPCs, Construction companies) but strangely enough also at some manufacturing companies, where I would assume integration of systems would bring large benefits.

- Each type of information is managed only in one single system avoiding interfaces or duplication of data.

- Only where needed, data will be pushed from one system to other systems

- Business Intelligence applications extract information from the relevant system and present this in context to the user, giving him/her a better understanding

- Business users will have to work in multiple systems to complete their tasks

- The BI approach is the ULTIMATE IT DREAM as it simplifies their work dramatically and shuts down business demands.

I have seen an example where IT dictated that for document management we use product ABC (well-known Content Management system). Next for internal documents we use SharePoint. For CAD, we use product PQR as much as possible (heavily adapted) or AutoCAD 2D (to support the minimum). For ERP, the standard system is XYZ (a famous ERP system – you do not lose your job by selecting them) and of course everyone uses Excel as a common interface of information between people.

It was impossible in this company to have a business view on the solution landscape. As you can imagine, this company’s margins are not (yet) under pressure as their industry is very conservative.

What do you think?

Is the future for PLM in platforms? If Yes, what about openness? Who are the candidates to offer such a platform? Or will lack of industry standards and openness block wider adoption? If No, will there be a massive PLM system in the future, connected to other enterprise systems (ERP/CRM)? Or will PLM be implemented as a collection of smaller systems communicating through an enterprise service bus?

I am looking forward to discussing the topic here and soon during the upcoming Product Innovation conference in Düsseldorf.

Enterprises that already have had a PDM/PLM system in place for several years should not implement the best practices. They have reached the level where the inhibitors of a monolithic, document-based environment are becoming clear.
