You are currently browsing the tag archive for the ‘Data Model’ tag.

So far, I have been discussing PLM experiences and best practices that have changed due to introducing electronic drawings and affordable 3D CAD systems for the mainstream. From vellum to PDM to item-centric PLM to manage product designs and manufacturing specifications.

So far, I have been discussing PLM experiences and best practices that have changed due to introducing electronic drawings and affordable 3D CAD systems for the mainstream. From vellum to PDM to item-centric PLM to manage product designs and manufacturing specifications.

Although the technology has improved, the overall processes haven’t changed so much. As a result, disciplines could continue to work in their own comfort zone, most of the time hidden and disconnected from the outside world.

Now, thanks to digitalization, we can connect and format information in real-time. Now we can provide every stakeholder in the company’s business to have almost real-time visibility on what is happening (if allowed). We have seen the benefits of platformization, where the benefits come from real-time connectivity within an ecosystem.

Now, thanks to digitalization, we can connect and format information in real-time. Now we can provide every stakeholder in the company’s business to have almost real-time visibility on what is happening (if allowed). We have seen the benefits of platformization, where the benefits come from real-time connectivity within an ecosystem.

Apple, Amazon, Uber, Airbnb are the non-manufacturing related examples. Companies are trying to replicate these models for other businesses, connecting the concept owner (OEM ?), with design and manufacturing (services), with suppliers and customers. All connected through information, managed in data elements instead of documents – I call it connected PLM

Vendors have already shared their PowerPoints, movies, and demos from how the future would be in the ideal world using their software. The reality, however, is that implementing such solutions requires new business models, a new type of organization and probably new skills.

Vendors have already shared their PowerPoints, movies, and demos from how the future would be in the ideal world using their software. The reality, however, is that implementing such solutions requires new business models, a new type of organization and probably new skills.

The last point is vital, as in schools and organizations, we tend to teach what we know from the past as this gives some (fake) feeling of security.

The reality is that most of us will have to go through a learning path, where skills from the past might become obsolete; however, knowledge of the past might be fundamental.

The reality is that most of us will have to go through a learning path, where skills from the past might become obsolete; however, knowledge of the past might be fundamental.

In the upcoming posts, I will share with you what I see, what I deduct from that and what I think would be the next step to learn.

I firmly believe connected PLM requires the usage of various models. Not only the 3D CAD model, as there are so many other models needed to describe and analyze the behavior of a product.

I hope that some of my readers can help us all further on the path of connected PLM (with a model-based approach). This series of posts will be based on the max size per post (avg 1500 words) and the ideas and contributes coming from you and me.

I hope that some of my readers can help us all further on the path of connected PLM (with a model-based approach). This series of posts will be based on the max size per post (avg 1500 words) and the ideas and contributes coming from you and me.

What is platformization?

In our day-to-day life, we are more and more used to direct interaction between resellers and services providers on one side and consumers on the other side. We have a question, and within 24 hours, there is an answer. We want to purchase something, and potentially the next day the goods are delivered. These are examples of a society where all stakeholders are connected in a data-driven manner.

We don’t have to create documents or specialized forms. An app or a digital interface allows us to connect. To enable this type of connectivity, there is a need for an underlying platform that connects all stakeholders. Amazon and Salesforce are examples for commercial activities, Facebook for social activities and, in theory, LinkedIn for professional job activities.

We don’t have to create documents or specialized forms. An app or a digital interface allows us to connect. To enable this type of connectivity, there is a need for an underlying platform that connects all stakeholders. Amazon and Salesforce are examples for commercial activities, Facebook for social activities and, in theory, LinkedIn for professional job activities.

The platform is responsible for direct communication between all stakeholders.

The same applies to businesses. Depending on the products or services they deliver, they could benefit from one or more platforms. The image below shows five potential platforms that I identified in my customer engagements. Of course, they have a PLM focus (in the middle), and the grouping can be made differently.

The 5 potential platforms

The ERP platform

is mainly dedicated to the company’s execution processes – Human Resources, Purchasing, Finance, Production scheduling, and potentially many more services. As platforms try to connect as much as possible all stakeholders. The ERP platform might contain CRM capabilities, which might be sufficient for several companies. However, when the CRM activities become more advanced, it would be better to connect the ERP platform to a CRM platform. The same logic is valid for a Product Innovation Platform and an ERP platform. Examples of ERP platforms are SAP and Oracle (and they will claim they are more than ERP)

is mainly dedicated to the company’s execution processes – Human Resources, Purchasing, Finance, Production scheduling, and potentially many more services. As platforms try to connect as much as possible all stakeholders. The ERP platform might contain CRM capabilities, which might be sufficient for several companies. However, when the CRM activities become more advanced, it would be better to connect the ERP platform to a CRM platform. The same logic is valid for a Product Innovation Platform and an ERP platform. Examples of ERP platforms are SAP and Oracle (and they will claim they are more than ERP)

Note: Historically, most companies started with an ERP system, which is not the same as an ERP platform. A platform is scalable; you can add more apps without having to install a new system. In a platform, all stored data is connected and has a shared data model.

The CRM platform

a platform that is mainly focusing on customer-related activities, and as you can see from the diagram, there is an overlap with capabilities from the other platforms. So again, depending on your core business and products, you might use these capabilities or connect to other platforms. Examples of CRM platforms are Salesforce and Pega, providing a platform to further extend capabilities related to core CRM.

a platform that is mainly focusing on customer-related activities, and as you can see from the diagram, there is an overlap with capabilities from the other platforms. So again, depending on your core business and products, you might use these capabilities or connect to other platforms. Examples of CRM platforms are Salesforce and Pega, providing a platform to further extend capabilities related to core CRM.

The MES platform

In the past, we had PDM and ERP and what happened in detail on the shop floor was a black box for these systems. MES platforms have become more and more important as companies need to trace and guide individual production orders in a data-driven manner. Manufacturing Execution Systems (and platforms) have their own data model. However, they require input from other platforms and will provide specific information to other platforms.

In the past, we had PDM and ERP and what happened in detail on the shop floor was a black box for these systems. MES platforms have become more and more important as companies need to trace and guide individual production orders in a data-driven manner. Manufacturing Execution Systems (and platforms) have their own data model. However, they require input from other platforms and will provide specific information to other platforms.

For example, if we want to know the serial number of a product and the exact production details of this product (used parts, quality status), we would use an MES platform. Examples of MES platforms (none PLM/ERP related vendors) are Parsec and Critical Manufacturing

The IoT platform

these platforms are new and are used to monitor and manage connected products. For example, if you want to trace the individual behavior of a product of a process, you need an IoT platform. The IoT platform provides the product user with performance insights and alerts.

these platforms are new and are used to monitor and manage connected products. For example, if you want to trace the individual behavior of a product of a process, you need an IoT platform. The IoT platform provides the product user with performance insights and alerts.

However, it also provides the product manufacturer with the same insights for all their products. This allows the manufacturer to offer predictive maintenance or optimization services based on the experience of a large number of similar products. Examples of IoT platforms (none PLM/ERP-related vendors) are Hitachi and Microsoft.

The Product Innovation Platform (PIP)

All the above platforms would not have a reason to exist if there was not an environment where products were invented, developed, and managed. The Product Innovation Platform PIP – as described by CIMdata -is the place where Intellectual Property (IP) is created, where companies decide on their portfolio and more.

All the above platforms would not have a reason to exist if there was not an environment where products were invented, developed, and managed. The Product Innovation Platform PIP – as described by CIMdata -is the place where Intellectual Property (IP) is created, where companies decide on their portfolio and more.

The PIP contains the traditional PLM domain. It is also a logical place to manage product quality and technical portfolio decisions, like what kind of product platforms and modules a company will develop. Like all previous platforms, the PIP cannot exist without other platforms and requires connectivity with the other platforms is applicable.

Look below at the CIMdata definition of a Product Innovation Platform.

You will see that most of the historical PLM vendors aiming to be a PIP (with their different flavors): Aras, Dassault Systèmes, PTC and Siemens.

Of course, several vendors sell more than one platform or even create the impression that everything is connected as a single platform. Usually, this is not the case, as each platform has its specific data model and combining them in a single platform would hurt the overall performance.

Of course, several vendors sell more than one platform or even create the impression that everything is connected as a single platform. Usually, this is not the case, as each platform has its specific data model and combining them in a single platform would hurt the overall performance.

Therefore, the interaction between these platforms will be based on standardized interfaces or ad-hoc connections.

Standard interfaces or ad-hoc connections?

Suppose your role and information needs can be satisfied within a single platform. In that case, most likely, the platform will provide you with the right environment to see and manipulate the information.

However, it might be different if your role requires access to information from other platforms. For example, it could be as simple as an engineer analyzing a product change who needs to know the actual stock of materials to decide how and when to implement a change.

However, it might be different if your role requires access to information from other platforms. For example, it could be as simple as an engineer analyzing a product change who needs to know the actual stock of materials to decide how and when to implement a change.

This would be a PIP/ERP platform collaboration scenario.

Or even more complex, it might be a product manager wanting to know how individual products behave in the field to decide on enhancements and new features. This could be a PIP, CRM, IoT and MES collaboration scenario if traceability of serial numbers is needed.

Or even more complex, it might be a product manager wanting to know how individual products behave in the field to decide on enhancements and new features. This could be a PIP, CRM, IoT and MES collaboration scenario if traceability of serial numbers is needed.

The company might decide to build a custom app or dashboard for this role to support such a role. Combining in real-time data from the relevant platforms, using standard interfaces (preferred) or using API’s, web services, REST services, microservices (for specialists) and currently in fashion Low-Code development platforms, which allow users to combine data services from different platforms without being an expert in coding.

Without going too much in technology, the topics in this paragraph require an enterprise architecture and vision. It is opportunistic to think that your existing environment will evolve smoothly into a digital highway for the future by “fixing” demands per user. Your infrastructure is much more likely to end up congested as spaghetti.

Without going too much in technology, the topics in this paragraph require an enterprise architecture and vision. It is opportunistic to think that your existing environment will evolve smoothly into a digital highway for the future by “fixing” demands per user. Your infrastructure is much more likely to end up congested as spaghetti.

In that context, I read last week an interesting post Low code: A promising trend or Pandora’s box. Have a look and decide for yourself

I am less focused on technology, more on methodology. Therefore, I want to come back to the theme of my series: The road to model-based and connected PLM. For sure, in the ideal world, the platforms I mentioned, or other platforms that run across these five platforms, are cloud-based and open to connect to other data sources. So, this is the infrastructure discussion.

In my upcoming blog post, I will explain why platforms require a model-based approach and, therefore, cause a challenge, particularly in the PLM domain.

It took us more than fifty years to get rid of vellum drawings. It took us more than twenty years to introduce 3D CAD for design and engineering. Still primarily relying on drawings. It will take us for sure one generation to switch from document-based engineering to model-based engineering.

It took us more than fifty years to get rid of vellum drawings. It took us more than twenty years to introduce 3D CAD for design and engineering. Still primarily relying on drawings. It will take us for sure one generation to switch from document-based engineering to model-based engineering.

Conclusion

In this post, I tried to paint a picture of the ideal future based on connected platforms. Such an environment is needed if we want to be highly efficient in designing, delivering, and maintaining future complex products based on hardware and software. Concepts like Digital Twin and Industry 4.0 require a model-based foundation.

In addition, we will need Digital Twins to reach our future sustainability goals efficiently. So, there is work to do.

Your opinion, Your contribution?

I believe we are almost at the end of learning from the past. We have seen how, from an initial serial CAD-driven approach with PDM, we evolved to PLM-managed structures, the EBOM and the MBOM. Or to illustrate this statement, look at the image below, where I use a Tech-Clarity image from Jim Brown.

The image on the right describes perfectly the complementary roles of PLM and ERP. The image on the left shows the typical PDM-approach. PDM feeding ERP in a linear process. The image on the right, I believe it is from 2004, shows the best practice before digital transformation. PLM is supporting product innovation in an iterative approach, pushing released information to ERP for execution.

As I think in images, I like the concept of a circle for PLM and an arrow for ERP. I am always using those two images in discussions with my customers when we want to understand if a particular activity should be in the PLM or ERP-domain.

As I think in images, I like the concept of a circle for PLM and an arrow for ERP. I am always using those two images in discussions with my customers when we want to understand if a particular activity should be in the PLM or ERP-domain.

Ten years ago, the PLM-domain was conceptually further extended by introducing support for products in operations and service. Similar to the EBOM (engineering) and the MBOM (manufacturing), the SBOM (service) was introduced to support product information for products in operation. In theory a full connected cicle.

Asset Lifecycle Management

At the same time, I was promoting PLM-practices for owners/operators to enhance Asset Lifecycle Management. My first post from June 2010 was called: PLM for Asset Lifecycle Management and Asset Development introduces this approach.

Conceptually the SBOM and Asset Lifecycle Management have a lot in common. There is a design product, in this case, an asset (plant, machine) running in the field, and we need to make sure operators have the latest information about the asset. And in case of asset changes, which can be a maintenance operation, a repair or complete overall, we need to be sure the changes are based on the correct information from the as-built environment. This requires full configuration management.

Conceptually the SBOM and Asset Lifecycle Management have a lot in common. There is a design product, in this case, an asset (plant, machine) running in the field, and we need to make sure operators have the latest information about the asset. And in case of asset changes, which can be a maintenance operation, a repair or complete overall, we need to be sure the changes are based on the correct information from the as-built environment. This requires full configuration management.

Asset changes can be based on extensive projects that need to be treated like new product development projects, with a staged approach that can take weeks, months, sometimes years. These activities are typical activities performed in PLM-systems, not in MRO-systems that are designed to manage the actual operation. Again here we see the complementary roles of PLM (iterative) and MRO (execution).

Since 2008, I have worked a lot in this environment, mainly in the nuclear and process industry. If you want to learn more about this aspect of PLM, I recommend looking at the PLMpartner website, where Bjørn Fidjeland, in cooperation with SharePLM, published a course on Plant Information Management. We worked together in several projects and Bjørn has done a great effort to describe the logical model to be used instead of a function-feature story.

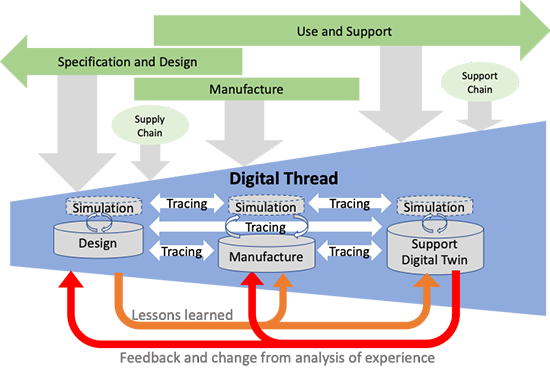

Ten years ago, we were not calling this concept the “Digital Twin,” as the aim was to provide end-to-end support of asset information from engineering, procurement, and construction towards operation in a coordinated manner. The breaking point in the relation between the EPCs and Owner/Operators is the data-handover – how much of your IP can/do you expose and what is needed. Nowadays, we would call striving for end-to-end data continuity the Digital Thread.

Hot from the press in this context, CIMdata just published a commentary Managing the Digital Thread in Global Value Chains describing Eurostep’s ShareAspace capabilities and experiences in managing an end-to-end information flow (Digital Thread) in a heterogeneous environment based on exchange standards like ISO 10303-239 PLCS. Their solution is based on what I consider a more modern approach for managing digital continuity compared to the traditional approach I described before. Compare the two images in this paragraph. The first image represents the old/current way with a disconnected handover, the second represents ShareAspace connected approach based on a real digital thread.

The Service BOM

As discussed with Asset Lifecycle Management, there is a disconnect between the engineering disciplines and operations in the field, looking from the point of view of an Asset owner/operator.

Now when we look from the perspective of a manufacturing company that produces assets to be serviced, we can identify a different dataflow and a new structure, the Service BOM (SBOM).

The SBOM provides information on how a product needs to be serviced. What are the parts that require service, and what are the service kits that are possible for that product? For that reason, service engineering should be done in parallel to product engineering. When designing a product, the engineer needs to identify which the wearing parts (always require service in time) and which parts might be serviceable.

The SBOM provides information on how a product needs to be serviced. What are the parts that require service, and what are the service kits that are possible for that product? For that reason, service engineering should be done in parallel to product engineering. When designing a product, the engineer needs to identify which the wearing parts (always require service in time) and which parts might be serviceable.

There are different ways to look at the SBOM. Conceptually, the SBOM could be created in close relation with the EBOM. At the moment you define your product, you also should specify how the product will be serviced. See the image below

From this example, it is clear that part standardization and modularization have a considerable benefit for services downstream. What if you have only one serviceable part that applies to many products? The number of parts to have in stock will be strongly reduced instead of having many similar parts that only fit in a single product?

Depending on the type of product, the SBOM can be generic, serving many products in the field. In that case, the company has to deal with catalogs, to be defined in PLM. Or the SBOM can be aligned with the As-Built of a capital product in the field. In that case, the concepts of Asset Lifecycle Management apply. Click on the image to see a clear picture.

Depending on the type of product, the SBOM can be generic, serving many products in the field. In that case, the company has to deal with catalogs, to be defined in PLM. Or the SBOM can be aligned with the As-Built of a capital product in the field. In that case, the concepts of Asset Lifecycle Management apply. Click on the image to see a clear picture.

![]() The SBOM on its own, in such an environment, will have links to specific documents, service instructions, operating manuals.

The SBOM on its own, in such an environment, will have links to specific documents, service instructions, operating manuals.

If your PLM-system allows it, extending the EBOM and MBOM with an SBOM is not a complex effort. What is crucial to understand is that the SBOM has its own lifecycle, which can even last longer than the active product sold. So sometimes, manufacturing specifications, related to service parts need to be maintained too, creating a link between the SBOM and potential MBOM(s).

ECM = Enterprise Change Management

When I discussed ECM in my previous post in the context of Engineering Change Management, I got the feedback that nowadays, everyone talks about Enterprise Change Management. Engineering Change Management is old school.

In the past, and even in a 2014 benchmark, a customer had two change management systems. One in PLM and one in ERP, and companies were looking into connecting these two processes. Like the BOM-interaction between PLM and ERP, this is technology-wise, never a real problem.

The real problem in such situations was to come to a logical flow of events. Many times the company insisted that every change should start from the ERP-system as we like to standardize. This means that even an engineering change had to be registered first in the ERP-system

The real problem in such situations was to come to a logical flow of events. Many times the company insisted that every change should start from the ERP-system as we like to standardize. This means that even an engineering change had to be registered first in the ERP-system

Luckily the reach of PLM has grown. PLM is no longer the engineering tool (IT-system thinking). PLM has become the information backbone for product information all along the product lifecycle. Having the MBOM and SBOM available through a PLM-infrastructure allows organizations to streamline their processes.

And in this modern environment, enterprise change management might take place mostly in a PLM-infrastructure. The PLM-infrastructure providing a digital thread, as the Aras picture above illustrates, provides the full traceability to support configuration management.

However, we still have to remember that configuration management and engineering change management, first of all, are based on methodology and processes. Next, the combination of tools to be used will vary.

However, we still have to remember that configuration management and engineering change management, first of all, are based on methodology and processes. Next, the combination of tools to be used will vary.

I like to conclude this topic with a quote from Lee Perrin’s comment on my previous blog post

I would add that aerospace companies implemented CM, to avoid fatal consequences to their companies, but also to their flying customers.

PLM provides the framework within which to carry out Configuration Management. CM can indeed be carried out without PLM, as was done in the old paper-based days. As you have stated, PLM makes the whole CM process much more efficient. I think more transparent too.

Conclusion

After nine posts around the theme Learning from the past to understand the future, I walked through the history of CAD, PDM and PLM in a fast mode, pointing to practices and friction points. In the blogging space, it is hard to find this information as most blog posts are coming from software vendors explaining why their tool is needed. Hopefully, these series have helped many of you to understand a broader context. Now I want to focus on the future again in my upcoming blog posts.

Still, feel free to contact me and discuss methodology topics.

Already five posts since we started looking at the roots of PLM, where every step illustrated that new technical capabilities could create opportunities for better practices. Alternatively, sometimes, these capabilities introduced complexity while maintaining old practices. Where the previous posts were design and engineering-centric, now I want to make the step moving to manufacturing-preparation and the MBOM. In my opinion, if you start to manage your manufacturing BOM in the context of your product design, you are in the scope of PLM.

Already five posts since we started looking at the roots of PLM, where every step illustrated that new technical capabilities could create opportunities for better practices. Alternatively, sometimes, these capabilities introduced complexity while maintaining old practices. Where the previous posts were design and engineering-centric, now I want to make the step moving to manufacturing-preparation and the MBOM. In my opinion, if you start to manage your manufacturing BOM in the context of your product design, you are in the scope of PLM.

For the moment, I will put two other related domains aside, i.e., Configuration Management and Configured Products. Note these domains are entirely different from each other.

Some data model principles

In part five, I introduced the need to have a split between a logical product definition and a technical EBOM definition. The logical product definition is more the system or modular structure to be used when configuring solutions for a customer. The technical EBOM definition is, most of the time, a stable engineering specification independent of how and where the product is manufactured. The manufacturing BOM (the MBOM) should represent how the product will be manufactured, which can vary per location and vary over time. Let us look in some of the essential elements of this data model

In part five, I introduced the need to have a split between a logical product definition and a technical EBOM definition. The logical product definition is more the system or modular structure to be used when configuring solutions for a customer. The technical EBOM definition is, most of the time, a stable engineering specification independent of how and where the product is manufactured. The manufacturing BOM (the MBOM) should represent how the product will be manufactured, which can vary per location and vary over time. Let us look in some of the essential elements of this data model

The Product

The logical definition of the product, which can also be a single component if you are a lower tier-supplier, has an understandable number, like 6030-10B. A customer needs to be able to order this product or part without a typo mistake. The product has features or characteristics that are used to sell the product. Usually, products do not have a revision, as it is a logical definition of a set of capabilities. Most of the time, marketing is responsible for product definition. This would be the sales catalog, which can be connected in a digital PLM environment. Like the PDM-ERP relation, there is a similar discussion related to where the catalog resides—more on the product side later in time.

The logical definition of the product, which can also be a single component if you are a lower tier-supplier, has an understandable number, like 6030-10B. A customer needs to be able to order this product or part without a typo mistake. The product has features or characteristics that are used to sell the product. Usually, products do not have a revision, as it is a logical definition of a set of capabilities. Most of the time, marketing is responsible for product definition. This would be the sales catalog, which can be connected in a digital PLM environment. Like the PDM-ERP relation, there is a similar discussion related to where the catalog resides—more on the product side later in time.

The EBOM

Related to the product or component in the logical definition, there is an actual EBOM, which represents the technical specification of the product. The image above shows the relation represented by the blue “current” link.

Note: not all systems will support such a data model, and often the marketing sides in managed disconnected from the engineering side. Either in Excel or in a specialized Product Line Engineering (PLE) tools.

Note: not all systems will support such a data model, and often the marketing sides in managed disconnected from the engineering side. Either in Excel or in a specialized Product Line Engineering (PLE) tools.

We discussed in the previous post that if you want to minimize maintenance, meaning fewer revisions on your EBOM, you should not embed manufacturer-specific parts in your EBOM.

The EBOM typically contains purchase parts and make parts. The purchased parts are sourced based on their specification, and you might have a single source in the beginning. The make parts are entirely under your engineering control, and you define where they are produced and by whom. For the rest, the EBOM might have functional groupings of modules and subassemblies that are defined for reuse by engineering.

The EBOM typically contains purchase parts and make parts. The purchased parts are sourced based on their specification, and you might have a single source in the beginning. The make parts are entirely under your engineering control, and you define where they are produced and by whom. For the rest, the EBOM might have functional groupings of modules and subassemblies that are defined for reuse by engineering.

Note: An EBOM is the place where multidisciplinary collaboration comes together. This post mainly deals with the mechanical part (as we are looking at the past)

Note: An EBOM is the place where multidisciplinary collaboration comes together. This post mainly deals with the mechanical part (as we are looking at the past)

Note: An EBOM can contain multiple valid configurations which you can filter based on a customer or market-specific demand. In this case, we talk about a Configured EBOM or a 150 % EBOM.

Note: An EBOM can contain multiple valid configurations which you can filter based on a customer or market-specific demand. In this case, we talk about a Configured EBOM or a 150 % EBOM.

The MBOM

The MBOM represents the way the unique product is going to be manufactured. This means the MBOM-structure will represent the manufacturing steps. For each EBOM-purchase-part, the approved manufacturer for that plant needs to be selected. For each make-part in the EBOM, if made in this plant per customer order, the EBOM parts need to be resolved by one or more manufacturing steps combined with purchased materials.

The MBOM represents the way the unique product is going to be manufactured. This means the MBOM-structure will represent the manufacturing steps. For each EBOM-purchase-part, the approved manufacturer for that plant needs to be selected. For each make-part in the EBOM, if made in this plant per customer order, the EBOM parts need to be resolved by one or more manufacturing steps combined with purchased materials.

Let us look at some examples:

The flat MBOM

Some companies do not have real machinery anymore in their plants, the product they deliver to the market is only assembled at the best financial location. This means that all MBOM-parts should arrive at the shop floor to be assembled there. As an example, we have plant A below.

Of course, this is a simplified version to illustrate the basics of the MBOM. The flat MBOM only makes sense if the product is straightforward to assemble. Based on the engineering specifications, the assembly drawing(s) people on the shop floor will know what to do.

The engineering definition specifies that the chassis needs to be painted, and fitting the axles requires grease. These quantities are not visible in the EBOM; they will appear in the MBOM. The quantities and the unit of measure are, of course, relevant here.

Note: The exact quantities for paint and grease might be adjusted in the MBOM when a series of Squads have been manufactured.

The MBOM and Bill of Process

Most of the time, a product is manufactured in several process steps. For that reason, the MBOM is closely related to the Bill of Process or the Routing definitions. The image below illustrates the relationship between an MBOM and the operations in a plant.

If we continue with our example of the Squad, let us now assume that the wheels and the axle are joined together in a work cell. In addition, the chassis is painted in a separate cell. The MBOM would look like the image below:

In the image, we see that the same Engineering definition now results in a different MBOM. A company can change the MBOM when optimizing the production, without affecting the engineering definition. In this MBOM, the Axle assembly might also be used in other squads manufactured by the company.

The MBOM and purchased parts

In the previous example, all components for the Squad were manufactured by the same company with the option to produce in Plant A or in Plant B. Now imagine the company also has a plant C in a location where they cannot produce the wheels and axle assembly. Therefore plant C has to “purchase” the Wheel-Axle assembly, and lucky for them plant B is selling the Wheel+Axle assembly to the market as a product.

In the previous example, all components for the Squad were manufactured by the same company with the option to produce in Plant A or in Plant B. Now imagine the company also has a plant C in a location where they cannot produce the wheels and axle assembly. Therefore plant C has to “purchase” the Wheel-Axle assembly, and lucky for them plant B is selling the Wheel+Axle assembly to the market as a product.

The MBOM for plant C would look like the image below:

For Plant C, they will order the right amount of the Wheel+Axle product, according to its specifications (HF-D240). How the Wheel+Axle product is manufactured is invisible for Plant C, the only point to check is if the Wheel+Axle product complies with the Engineering Definition and if its purchase price is within the target price range.

Why this simple EBOM-MBOM story?

For those always that have been active in the engineering domain, a better understanding of the information flow downstream to manufacturing is crucial. Historically this flow of information has been linear – and in many companies, it is still the fact. The main reason for that lies in the fact that engineering had their own system (PDM or PLM), and manufacturing has their own system (ERP).

For those always that have been active in the engineering domain, a better understanding of the information flow downstream to manufacturing is crucial. Historically this flow of information has been linear – and in many companies, it is still the fact. The main reason for that lies in the fact that engineering had their own system (PDM or PLM), and manufacturing has their own system (ERP).

Engineers did their best to provide the best engineering specification and release the data to ERP. In the early days, as discussed in Part 4, the engineering specification was most of the time based on a kind of hybrid BOM containing engineering and manufacturing parts already defined.

Next, manufacturing engineering uses the engineering specifications to define the manufacturing BOM in the ERP system. Based on the drawings and parts list, they create a preferred manufacturing process (MBOM and BOP) – most of the time, a manual process. Despite the effort done by engineering, there might be a need to change the product. A different shape or dimension make manufacturing more efficient or done with existing tooling. This means an iteration, which causes delays and higher engineering costs.

Next, manufacturing engineering uses the engineering specifications to define the manufacturing BOM in the ERP system. Based on the drawings and parts list, they create a preferred manufacturing process (MBOM and BOP) – most of the time, a manual process. Despite the effort done by engineering, there might be a need to change the product. A different shape or dimension make manufacturing more efficient or done with existing tooling. This means an iteration, which causes delays and higher engineering costs.

The first optimization invented was the PDM-ERP interface to reduce the manual work and introduction of typos/misunderstanding of data. This topic was “hot” between 2000 and 2010, and I visited many SmarTeam customers and implementers to learn and later explain that this is a mission impossible. The picture below says it all.

We have an engineering BOM (with related drawings). Through an interface, this EBOM will be restructured into a manufacturing BOM, thanks to all kinds of “clever” programming based on particular attributes. Discussed in Part 3

The result, however, was that the interface was never covering all situations and became the most expensive part of the implementation.

The result, however, was that the interface was never covering all situations and became the most expensive part of the implementation.

Good business for the implementing companies, bad for the perception of PDM/PLM.

The lesson learned from all these situations: If you have a PLM-system that can support both the EBOM and MBOM in the same environment, you do not need this complex interface anymore. You can still use some automation to move from an EBOM to an MBOM.

However, three essential benefits come from this approach

- Working in a single environment allows manufacturing engineers to work directly in the context of the EBOM, proposing changes to engineering in the same environment and perform manual restructuring on the MBOM as programming logic does not exist. Still, compare tools will ensure all EBOM-parts are resolved in the manufacturing definition.

- All product Intellectual Property is now managed in a single environment. There is no scattered product information residing in local ERP-systems. When companies moved towards multiple plants for manufacturing, there was the need for a centralized generic MBOM to be resolved for the local plant (local suppliers / local plant conditions). Having the generic MBOM and Bill of Process in PLM was the solution.

- When engineers and manufacturing engineers work in the same environment, manufacturing engineering can start earlier with the manufacturing process definition, providing early feedback to engineering even when the engineering specification has not been released. This approach allows real concurrent engineering, reducing time to market and cost significantly

Conclusion

Again 1600 words this time. We are now at the stage that connecting the EBOM and the MBOM in PLM has become a best practice in most standard PLM-systems. If implemented correctly, the interface to ERP is no longer on the critical path – the technology never has been the limitation – it is all about methodology.

Next time a little bit more on advanced EBOM/MBOM interactions

One week ago, Yoann Maingon wrote an innocent post with the question: Has FFF killed? The question was raised related to a 2014 problem at GM, where a changed part was causing fatal accidents.

The discussion started by Yoann and here my short extract. Assuming this problem was a configuration management issue and Yoann somehow indicated that the problem might be related to the fact that ERP-systems do not carry a revision on the part number – leading to an unnoticed change. Therefore, he assumes there is a disconnect between the PLM-side (where we have parts with multiple lifecycle states and revisions) and ERP (where we have an industrial lifecycle – prototype/production).

The discussion started by Yoann and here my short extract. Assuming this problem was a configuration management issue and Yoann somehow indicated that the problem might be related to the fact that ERP-systems do not carry a revision on the part number – leading to an unnoticed change. Therefore, he assumes there is a disconnect between the PLM-side (where we have parts with multiple lifecycle states and revisions) and ERP (where we have an industrial lifecycle – prototype/production).

He posted his thoughts, and then LinkedIn exploded (currently 116 comments), which means it is a topic that is of significant concern in our community. Next, if you read the comments, there are different viewpoints:

- What does FFF really imply?

- What about revisions of parts?

- What are the best practices?

Let’s investigate these viewpoints with some comments

What does FFF really imply?

When we talk about FFF in engineering, we mean Form, Fit and Function – the three primary characteristics to describe a part (source Wikipedia)

- Form refers to such characteristics as external dimensions, weight, size, and visual appearance of a part or assembly. This is the element of FFF that is most affected by an engineer’s aesthetic choices, including enclosure, chassis, and control panel, that become the outward “face” of the product.

- Fit refers to the ability of the part or feature to connect to, mate with, or join to another feature or part within an assembly. The “fit” allows the part to meet the required assembly tolerances to be useful.

- Function is a criterion that is met when the part performs its stated purpose effectively and reliably. In an electronics product, for example, a function can depend on the solid-state components used, the software or firmware, and quite often on the features of the electronics enclosure selected.

One of the comments in Yoann’s post referred to Safe/Unsafe as a potential functional characteristic. I think this addition is not needed. Safety should be a requirement for the part, not a characteristic.

One of the comments in Yoann’s post referred to Safe/Unsafe as a potential functional characteristic. I think this addition is not needed. Safety should be a requirement for the part, not a characteristic.

FFF was and still is an approach for engineers to decide if a new, improved version of the part would get a revision or needs a new part number.

I think before we dive deeper into the other viewpoints, it is crucial to define the part number a little more.

In a correct PLM data model, there are two types of part numbers. First, the internal part number that your company uses inside its engineering Bill of Materials to identify a part. This part number can be a meaningless part only to provide uniqueness inside the company.

In 2015 I wrote several posts related to best practices and data modeling for PLM. The most relevant posts to this discussion are here:

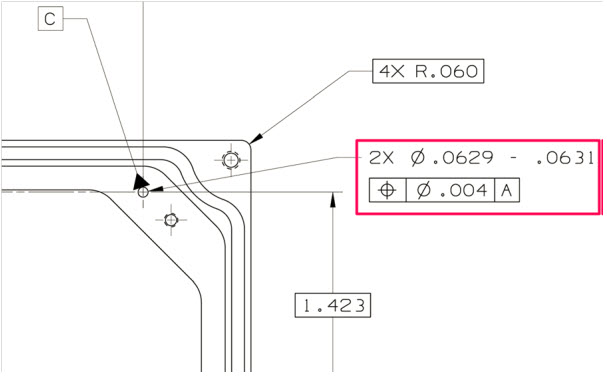

The part number can specify a part that needs to be manufactured according to specification, or it can be a part that needs to be purchased from an available supplier/manufacturer. The manufacturer part number is, most of the time, a meaningful number (6 – 7 characters) as these parts need to be ordered by your company. The manufacturer part number is the SKU for the manufacturer. As you can imagine in the manufacturer’s catalog, there isn’t a revision mentioned. In graphics, see the image below:

Your company might sell Product MP-323121 (note: the ID is meaningful to help the customer to order the product).

Internally there is a related EBOM that specifies the product. The EBOM top part is O122 (note: here, we can use a meaningless identifier as all is digitally connected).

For the manufacturing of O122, we need to resolve the EBOM according to its specifications. Therefore, for Part O124, the company needs to decide to purchase from their approved manufacturers either part ABC-21231 or XYZ-88818 (note: again, a meaningful ID as these companies are not digitally connected).

Now coming back to the FFF-discussion. For the orange parts, with a meaningful ID, no revision exists. However, if Assembly O122 is 100% FFF compatible, the Product ID MP-323121 will not change. It allows your company to optimize the EBOM and/or MBOM, meanwhile keeping 100% compatibility to the outside world. (note: the same principle applies to the two manufacturers for Part O124.)

In case Top Assembly O122 has new or changed parts – what should happen there?

At that moment, the definition has changed. The definitions, most of the time described in documents/drawings/models, are related information to the BOM. Therefore the Top Assembly O122 should get a new identifier. There is no need to name it a revision, it is a new data set in the PLM-system, again with a meaningless identifier as we are connected digitally,

At that moment, the definition has changed. The definitions, most of the time described in documents/drawings/models, are related information to the BOM. Therefore the Top Assembly O122 should get a new identifier. There is no need to name it a revision, it is a new data set in the PLM-system, again with a meaningless identifier as we are connected digitally,

What about revisions of parts?

Of course, the management of changes existed long before PLM-systems were introduced.

The specifications of a part were defined in drawings. The drawing contained all the information, not only the geometry definitions, but also specifications on how to manufacture the part.

The specifications of a part were defined in drawings. The drawing contained all the information, not only the geometry definitions, but also specifications on how to manufacture the part.

For complex products, a considerable set of consistently related drawings would be released to manufacturing. A release process with physical signatures on it.

At the same time, there was no discussion: the drawing represents the part. And as there was no digital connection, part numbers/drawing numbers were meaningful, often with the format of the drawing as part of the identifier.

In case changes were needed, for example, fixing a dimension or tolerance as discovered during manufacturing, the drawing had to be revised to remain consistent. First, in the original drawing, the issue or change was marked in red (redlining). Then engineering had to create a new version of the drawing.

In case changes were needed, for example, fixing a dimension or tolerance as discovered during manufacturing, the drawing had to be revised to remain consistent. First, in the original drawing, the issue or change was marked in red (redlining). Then engineering had to create a new version of the drawing.

Depending on the impact of change (here comes also the FFF-principle), people decided if a new part number was needed (FFF-change) or that the change only required an update of the drawing(s), meaning a revision. If the difference was small (for example, adding a missing annotation), it could be called a minor change, all to be reflected in the drawing number, which equals the part number in this approach. So, when we talk about revisions of parts, we are talking about a document change.

A lousy practice from that approach is also that often manufacturing just redlines a drawing and keeps the redlined drawing as their source. It is too time-consuming or difficult to update the source drawing(s) through a change process. Engineering is not aware of this change, and when a later change comes through from engineering, these “fixes” might be missed as there is no traceability.

Generic example of a PLM data model and its relationsWhen PLM-systems were introduced, of course, companies did not want to disrupt their existing ways of working. Therefore, they were asking the PLM-editors to enable revisions on parts and so the PLM-editors did (or do).

However, if you want to use the PLM-system in the best manner, you need to “decouple” the concept: part number equals drawing number, combined with the possibility to start using meaningless identifiers, as relations between parts and drawings are managed in the PLM-system through relational links.

Relevant post related to the PLM data model are:

- EBOM and (CAD) Documents

- Intelligent Part or Product numbers

- The impact of Non-Intelligent Part numbers

What are the best practices?

As some people mentioned in their comments to Yoann’s post, why do we have to answer this question as all is already well understood and described in best practices? I agree with that statement: Best Practices exist – so how to obtain them?

First, there is the whole framework of Configuration Management, which existed long before PLM-systems were introduced. If you follow their methodology, you can be (almost) guaranteed your information is consistent and correct. Configuration Management is crucial in areas where the impact of an error is enormous, like the GM-example Yoann referred to. Also, companies in the Aerospace and Defense industry are the ones that have strict configuration management in place.

First, there is the whole framework of Configuration Management, which existed long before PLM-systems were introduced. If you follow their methodology, you can be (almost) guaranteed your information is consistent and correct. Configuration Management is crucial in areas where the impact of an error is enormous, like the GM-example Yoann referred to. Also, companies in the Aerospace and Defense industry are the ones that have strict configuration management in place.

Configuration management does not come for free. It requires an investment in skills, potentially a change in ways of working, and requires an overhead. Manufacturing companies that are creating less “risky” products often focus more on optimizing (= reducing) the cost of their internal processes instead of investing in proper methodologies to manage consistency.

If you want to learn more about CM, investigate the Institute of Process Excellence (IPX), the founders of the CM2 framework for Enterprise Configuration Management, and much more. Note: Their knowledge does not come for free, which I can understand. However, it also creates a barrier for the company’s further investment in CM as this kind of strategic investments are hard to sell at the management level by individuals in a company.

In the context of CM, I advise you to follow Martijn Dullaart, who is quite active in our social community. His latest blog post related to this thread is: It’s about Interchangeability and Traceability

In the context of CM, I advise you to follow Martijn Dullaart, who is quite active in our social community. His latest blog post related to this thread is: It’s about Interchangeability and Traceability

With the introduction of PLM-system, these companies and the PLM-editors created the opportunity to implement configuration management in their system.

The data inside the system would be the “single version of the truth.” Unfortunately, this was most of the time, just a sales strategy, falsely giving the impression that information is under control now. Last year I wrote several posts related to the relation between PLM and CM, starting from PLM and Configuration Management – a happy marriage?

The data inside the system would be the “single version of the truth.” Unfortunately, this was most of the time, just a sales strategy, falsely giving the impression that information is under control now. Last year I wrote several posts related to the relation between PLM and CM, starting from PLM and Configuration Management – a happy marriage?

If you are interested in another resource for information related to these topics, have a look at the website from Jörg Eisenträger who also collected his best practices for PLM and CM for sharing (thanks Paul van der Ree for the link)

Don’t expect best practices from your PLM-vendors as their role is to sell software. It is the continuous discussion between:

- A PLM-system that forces companies to work according to embedded methodology (hard to sell/implement but idealistically correct)

And

- A flexible PLM-system that allows you to build and configure anything (easy to sell/challenging to implement correctly, depending on “wise” decisions)

The Future

Even though most companies are working drawing-centric, with or without a linked PLM-backbone for BOM-management, the next upcoming challenge is to evolve to model-based practices. The current CM-practices still talk about documents, although documents are already electronic datasets in that context. The future, however, in a model-based enterprise evolves related to connected models, 3D Models, but also simulation and software models, with different lifecycles and pace of change. For the model-based enterprise, we need to develop digital best practices that guarantee the same level of quality, however, executed and/or supported by (AI) Artificial Intelligence. AI is needed as human beings cannot physically analyze and understand all the impact of a change in such an environment.

Even though most companies are working drawing-centric, with or without a linked PLM-backbone for BOM-management, the next upcoming challenge is to evolve to model-based practices. The current CM-practices still talk about documents, although documents are already electronic datasets in that context. The future, however, in a model-based enterprise evolves related to connected models, 3D Models, but also simulation and software models, with different lifecycles and pace of change. For the model-based enterprise, we need to develop digital best practices that guarantee the same level of quality, however, executed and/or supported by (AI) Artificial Intelligence. AI is needed as human beings cannot physically analyze and understand all the impact of a change in such an environment.

Conclusion

The FFF-discussion illustrates that building a consistent framework within PLM is not an easy goal to achieve. My blog buddy Oleg Shilovitsky would claim that we consultants create the complexity. PLM-editors will never solve this complexity, it is up to your company’s mission to invest in knowledge to understand why and how to reduce the complexity. With this post and the related links and discussions, I hope more clarity will help you to make “wise” decisions.

In my previous post, the PLM blame game, I briefly mentioned that there are two delivery models for PLM. One approach based on a PLM system, that contains predefined business logic and functionality, promoting to use the system as much as possible out-of-the-box (OOTB) somehow driving toward a certain rigidness or the other approach where the PLM capabilities need to be developed on top of a customizable infrastructure, providing more flexibility. I believe there has been a debate about this topic over more than 15 years without a decisive conclusion. Therefore I will take you through the pros and cons of both approaches illustrated by examples from the field.

In my previous post, the PLM blame game, I briefly mentioned that there are two delivery models for PLM. One approach based on a PLM system, that contains predefined business logic and functionality, promoting to use the system as much as possible out-of-the-box (OOTB) somehow driving toward a certain rigidness or the other approach where the PLM capabilities need to be developed on top of a customizable infrastructure, providing more flexibility. I believe there has been a debate about this topic over more than 15 years without a decisive conclusion. Therefore I will take you through the pros and cons of both approaches illustrated by examples from the field.

PLM started as a toolkit

The initial cPDM/PLM systems were toolkits for several reasons. In the early days, scalable connectivity was not available or way too expensive for a standard collaboration approach. Engineering information, mostly design files, needed to be shared globally in an efficient manner, and the PLM backbone was often a centralized repository for CAD-data. Bill of Materials handling in PLM was often at a basic level, as either the ERP-system (mostly Aerospace/Defense) or home-grown developed BOM-systems(Automotive) were in place for manufacturing.

The initial cPDM/PLM systems were toolkits for several reasons. In the early days, scalable connectivity was not available or way too expensive for a standard collaboration approach. Engineering information, mostly design files, needed to be shared globally in an efficient manner, and the PLM backbone was often a centralized repository for CAD-data. Bill of Materials handling in PLM was often at a basic level, as either the ERP-system (mostly Aerospace/Defense) or home-grown developed BOM-systems(Automotive) were in place for manufacturing.

Depending on the business needs of the company, the target was too connect as much as possible engineering data sources to the PLM backbone – PLM originated from engineering and is still considered by many people as an engineering solution. For connectivity interfaces and integrations needed to be developed in a time that application integration frameworks were primitive and complicated. This made PLM implementations complex and expensive, so only the large automotive and aerospace/defense companies could afford to invest in such systems. And a lot of tuition fees spent to achieve results. Many of these environments are still operational as they became too risky to touch, as I described in my post: The PLM Migration Dilemma.

The birth of OOTB

Around the year 2000, there was the first development of OOTB PLM. There was Agile (later acquired by Oracle) focusing on the high-tech and medical industry. Instead of document management, they focused on the scenario from bringing the BOM from engineering to manufacturing based on a relatively fixed scenario – therefore fast to implement and fast to validate. The last point, in particular, is crucial in regulated medical environments.

Around the year 2000, there was the first development of OOTB PLM. There was Agile (later acquired by Oracle) focusing on the high-tech and medical industry. Instead of document management, they focused on the scenario from bringing the BOM from engineering to manufacturing based on a relatively fixed scenario – therefore fast to implement and fast to validate. The last point, in particular, is crucial in regulated medical environments.

At that time, I was working with SmarTeam on the development of templates for various industries, with a similar mindset. A predefined template would lead to faster implementations and therefore reducing the implementation costs. The challenge with SmarTeam, however, was that is was very easy to customize, based on Microsoft technology and wizards for data modeling and UI design.

This was not a benefit for OOTB-delivery as SmarTeam was implemented through Value Added Resellers, and their major revenue came from providing services to their customers. So it was easy to reprogram the concepts of the templates and use them as your unique selling points towards a customer. A similar situation is now happening with Aras – the primary implementation skills are at the implementing companies, and their revenue does not come from software (maintenance).

This was not a benefit for OOTB-delivery as SmarTeam was implemented through Value Added Resellers, and their major revenue came from providing services to their customers. So it was easy to reprogram the concepts of the templates and use them as your unique selling points towards a customer. A similar situation is now happening with Aras – the primary implementation skills are at the implementing companies, and their revenue does not come from software (maintenance).

The result is that each implementer considers another implementer as a competitor and they are not willing to give up their IP to the software company.

SmarTeam resellers were not eager to deliver their IP back to SmarTeam to get it embedded in the product as it would reduce their unique selling points. I assume the same happens currently in the Aras channel – it might be called Open Source however probably it is only high-level infrastructure.

Around 2006 many of the main PLM-vendors had their various mid-market offerings, and I contributed at that time to the SmarTeam Engineering Express – a preconfigured solution that was rapid to implement if you wanted.

Although the SmarTeam Engineering Express was an excellent sales tool, the resellers that started to implement the software began to customize the environment as fast as possible in their own preferred manner. For two reasons: the customer most of the time had different current practices and secondly the money come from services. So why say No to a customer if you can say Yes?

Although the SmarTeam Engineering Express was an excellent sales tool, the resellers that started to implement the software began to customize the environment as fast as possible in their own preferred manner. For two reasons: the customer most of the time had different current practices and secondly the money come from services. So why say No to a customer if you can say Yes?

OOTB and modules

Initially, for the leading PLM Vendors, their mid-market templates were not just aiming at the mid-market. All companies wanted to have a standardized PLM-system with as little as possible customizations. This meant for the PLM vendors that they had to package their functionality into modules, sometimes addressing industry-specific capabilities, sometimes areas of interfaces (CAD and ERP integrations) as a module or generic governance capabilities like portfolio management, project management, and change management.

Initially, for the leading PLM Vendors, their mid-market templates were not just aiming at the mid-market. All companies wanted to have a standardized PLM-system with as little as possible customizations. This meant for the PLM vendors that they had to package their functionality into modules, sometimes addressing industry-specific capabilities, sometimes areas of interfaces (CAD and ERP integrations) as a module or generic governance capabilities like portfolio management, project management, and change management.

The principles behind the modules were that they need to deliver data model capabilities combined with business logic/behavior. Otherwise, the value of the module would be not relevant. And this causes a challenge. The more business logic a module delivers, the more the company that implements the module needs to adapt to more generic practices. This requires business change management, people need to be motivated to work differently. And who is eager to make people work differently? Almost nobody, as it is an intensive coaching job that cannot be done by the vendors (they sell software), often cannot be done by the implementers (they do not have the broad set of skills needed) or by the companies (they do not have the free resources for that). Precisely the principles behind the PLM Blame Game.

OOTB modularity advantages

The first advantage of modularity in the PLM software is that you only buy the software pieces that you really need. However, most companies do not see PLM as a journey, so they agree on a budget to start, and then every module that was not identified before becomes a cost issue. Main reason because the implementation teams focus on delivering capabilities at that stage, not at providing value-based metrics.

The first advantage of modularity in the PLM software is that you only buy the software pieces that you really need. However, most companies do not see PLM as a journey, so they agree on a budget to start, and then every module that was not identified before becomes a cost issue. Main reason because the implementation teams focus on delivering capabilities at that stage, not at providing value-based metrics.

The second potential advantage of PLM modularity is the fact that these modules supposed to be complementary to the other modules as they should have been developed in the context of each other. In reality, this is not always the case. Yes, the modules fit nicely on a single PowerPoint slide, however, when it comes to reality, there are separate systems with a minimum of integration with the core. However, the advantage is that the PLM software provider now becomes responsible for upgradability or extendibility of the provided functionality, which is a serious point to consider.

The second potential advantage of PLM modularity is the fact that these modules supposed to be complementary to the other modules as they should have been developed in the context of each other. In reality, this is not always the case. Yes, the modules fit nicely on a single PowerPoint slide, however, when it comes to reality, there are separate systems with a minimum of integration with the core. However, the advantage is that the PLM software provider now becomes responsible for upgradability or extendibility of the provided functionality, which is a serious point to consider.

The third advantage from the OOTB modular approach is that it forces the PLM vendor to invest in your industry and future needed capabilities, for example, digital twins, AR/VR, and model-based ways of working. Some skeptic people might say PLM vendors create problems to solve that do not exist yet, optimists might say they invest in imagining the future, which can only happen by trial-and-error. In a digital enterprise, it is: think big, start small, fail fast, and scale quickly.

The third advantage from the OOTB modular approach is that it forces the PLM vendor to invest in your industry and future needed capabilities, for example, digital twins, AR/VR, and model-based ways of working. Some skeptic people might say PLM vendors create problems to solve that do not exist yet, optimists might say they invest in imagining the future, which can only happen by trial-and-error. In a digital enterprise, it is: think big, start small, fail fast, and scale quickly.

OOTB modularity disadvantages

Most of the OOTB modularity disadvantages will be advantages in the toolkit approach, therefore discussed in the next paragraph. One downside from the OOTB modular approach is the disconnect between the people developing the modules and the implementers in the field. Often modules are developed based on some leading customer experiences (the big ones), where the majority of usage in the field is targeting smaller companies where people have multiple roles, the typical SMB approach. SMB implementations are often not visible at the PLM Vendor R&D level as they are hidden through the Value Added Reseller network and/or usually too small to become apparent.

Most of the OOTB modularity disadvantages will be advantages in the toolkit approach, therefore discussed in the next paragraph. One downside from the OOTB modular approach is the disconnect between the people developing the modules and the implementers in the field. Often modules are developed based on some leading customer experiences (the big ones), where the majority of usage in the field is targeting smaller companies where people have multiple roles, the typical SMB approach. SMB implementations are often not visible at the PLM Vendor R&D level as they are hidden through the Value Added Reseller network and/or usually too small to become apparent.

Toolkit advantages

The most significant advantage of a PLM toolkit approach is that the implementation can be a journey. Starting with a clear business need, for example in modern PLM, create a digital thread and then once this is achieved dive deeper in areas of the lifecycle that require improvement. And increased functionality is only linked to the number of users, not to extra costs for a new module.

The most significant advantage of a PLM toolkit approach is that the implementation can be a journey. Starting with a clear business need, for example in modern PLM, create a digital thread and then once this is achieved dive deeper in areas of the lifecycle that require improvement. And increased functionality is only linked to the number of users, not to extra costs for a new module.

However, if the development of additional functionality becomes massive, you have the risk that low license costs are nullified by development costs.

The second advantage of a PLM toolkit approach is that the implementer and users will have a better relationship in delivering capabilities and therefore, a higher chance of acceptance. The implementer builds what the customer is asking for.

The second advantage of a PLM toolkit approach is that the implementer and users will have a better relationship in delivering capabilities and therefore, a higher chance of acceptance. The implementer builds what the customer is asking for.

However, as Henry Ford said, if I would ask my customers what they wanted, they would ask for faster horses.

Toolkit considerations

There are several points where a PLM toolkit can be an advantage but also a disadvantage, very much depending on various characteristics of your company and your implementation team. Let’s review some of them:

Innovative: a toolkit does not provide an innovative way of working immediately. The toolkit can have an infrastructure to deliver innovative capabilities, even as small demonstrations, the implementation, and methodology to implement this innovative way of working needs to come from either your company’s resources or your implementer’s skills.

Innovative: a toolkit does not provide an innovative way of working immediately. The toolkit can have an infrastructure to deliver innovative capabilities, even as small demonstrations, the implementation, and methodology to implement this innovative way of working needs to come from either your company’s resources or your implementer’s skills.

Uniqueness: with a toolkit approach, you can build a unique PLM infrastructure that makes you more competitive than the other. Don’t share your IP and best practices to be more competitive. This approach can be valid if you truly have a competing plan here. Otherwise, the risk might be you are creating a legacy for your company that will slow you down later in time.

Uniqueness: with a toolkit approach, you can build a unique PLM infrastructure that makes you more competitive than the other. Don’t share your IP and best practices to be more competitive. This approach can be valid if you truly have a competing plan here. Otherwise, the risk might be you are creating a legacy for your company that will slow you down later in time.

Performance: this is a crucial topic if you want to scale your solution to the enterprise level. I spent a lot of time in the past analyzing and supporting SmarTeam implementers and template developers on their journey to optimize their solutions. Choosing the right algorithms, the right data modeling choices are crucial.

Performance: this is a crucial topic if you want to scale your solution to the enterprise level. I spent a lot of time in the past analyzing and supporting SmarTeam implementers and template developers on their journey to optimize their solutions. Choosing the right algorithms, the right data modeling choices are crucial.

Sometimes I came into a situation where the customer blamed SmarTeam because customizations were possible – you can read about this example in an old LinkedIn post: the importance of a PLM data model

Experience: When you plan to implement PLM “big” with a toolkit approach, experience becomes crucial as initial design decisions and scope are significant for future extensions and maintainability. Beautiful implementations can become a burden after five years as design decisions were not documented or analyzed. Having experience or an experienced partner/coach can help you in these situations. In general, it is sporadic for a company to have internally experienced PLM implementers as it is not their core business to implement PLM. Experienced PLM implementers vary from size and skills – make the right choice.

Experience: When you plan to implement PLM “big” with a toolkit approach, experience becomes crucial as initial design decisions and scope are significant for future extensions and maintainability. Beautiful implementations can become a burden after five years as design decisions were not documented or analyzed. Having experience or an experienced partner/coach can help you in these situations. In general, it is sporadic for a company to have internally experienced PLM implementers as it is not their core business to implement PLM. Experienced PLM implementers vary from size and skills – make the right choice.

Conclusion

After writing this post, I still cannot write a final verdict from my side what is the best approach. Personally, I like the PLM toolkit approach as I have been working in the PLM domain for twenty years seeing and experiencing good and best practices. The OOTB-box approach represents many of these best practices and therefore are a safe path to follow. The undecisive points are who are the people involved and what is your business model. It needs to be an end-to-end coherent approach, no matter which option you choose.

A week ago I attended the joined CIMdata Roadmap and PDT Europe conference in Stuttgart as you can recall from last week’s post: The weekend after CIMdata Roadmap / PDT Europe 2018. As there was so much information to share, I had to split the report into two posts. This time the focus on the PDT Europe. In general, the PDT conferences have always been focusing on sharing experiences and developments related to standards. A topic you will not see at PLM Vendor conferences. Therefore, your chance to learn and take part if you believe in standards.

A week ago I attended the joined CIMdata Roadmap and PDT Europe conference in Stuttgart as you can recall from last week’s post: The weekend after CIMdata Roadmap / PDT Europe 2018. As there was so much information to share, I had to split the report into two posts. This time the focus on the PDT Europe. In general, the PDT conferences have always been focusing on sharing experiences and developments related to standards. A topic you will not see at PLM Vendor conferences. Therefore, your chance to learn and take part if you believe in standards.

This year’s theme: Collaboration in the Engineering and Manufacturing Supply Chain – the Extended Digital Thread and Smart Manufacturing. Industry 4.0 plays a significant role here.

Model-based X: What is it and what is the status?

I have seen Peter Bilello presenting this topic now several times, and every time there is a little more progress. The fact that there is still an acronym war illustrated that the various aspects of a model-based approach are not yet defined. Some critics will be stating that’s because we do not need model-based and it is only a vendor marketing trick again. Two comments here:

I have seen Peter Bilello presenting this topic now several times, and every time there is a little more progress. The fact that there is still an acronym war illustrated that the various aspects of a model-based approach are not yet defined. Some critics will be stating that’s because we do not need model-based and it is only a vendor marketing trick again. Two comments here:

- If you want to implement an end-to-end model-based approach including your customers and supply chain, you cannot avoid standard. More will become clear when you read the rest of this post. Vendors will not promote standards as it reduces their capabilities to deliver unique So standards must come from the market, not from the marketing.