In the past two weeks, I had several discussions with peers in the PLM domain about their experiences.

Some of them I met face-to-face again after a long time at the LiveWorx 2023 event. See my review of the event here: The Weekend after LiveWorx 2023.

And there were several interactions on LinkedIn, leading to a more extended discussion thread (an example of a digital thread?) or a Zoom discussion (a so-called 2D conversation).

To complete the story, I also participated in two PLM podcasts from Share PLM, where we interviewed Johan Mikkelä (currently working at FLSmidth) and, in the second episode, Issam Darraj (presently working at ABB) about their PLM experiences. Less a discussion, more a dialogue, trying to grasp the non-documented aspects of PLM. We are looking for your feedback on these podcasts too.

All these discussions led to a reconfirmation that if you are a PLM practitioner, you need a broad skillset to address the business needs, translate them into people and process activities relevant to the industry and ultimately implement the proper collection of tools.

As a sneak preview for the podcast sessions, we asked both Johan and Issam about the importance of the tools. I will not disclose their answers here; you have to listen.

Let’s look at some of the discussions.

NOTE: Just before pushing the Publish button, Oleg Shilovitsky published this blog article PLM Project Failures and Unstoppable PLM Playbook. I will comment on his points at the end of this post. It is all part of the extensive discussion.

PLM, LinkedIn and complexity

The most popular discussions on LinkedIn are often related to the various types of Bills of Materials (eBOM, mBOM, sBOM), Part numbering schemes (intelligent or not), version and revision management and the famous FFF discussions.

This post: PLM and Configuration Management Best Practices: Working with Revisions, from Andreas Lindenthal, was a recent example that triggered others to react.

I had some offline discussions on this topic last week, and I noticed Frédéric Zeller wrote his post with the title PLM, LinkedIn and complexity, starting his post with (quote):

I am stunned by the average level of posts on the PLM on LinkedIn.

I’m sorry, but in 2023 :

- Part Number management (significant, non-significant) should no longer be a problem.

- Revision management should no longer be a question.

- Configuration management theory should no longer be a question.

- Notions of EBOMs, MBOMs … should no longer be a question.

So why are there still problems on these topics?

You can see from the 40+ comments that this statement created a lot of reactions, including mine. Apparently, these topics touch many people worldwide, and there is no simple, single answer to any of them. And there are so many other topics relevant to PLM.

Talking later with Frederic for one hour in a Zoom session, we discussed the importance of the right PLM data model.

I also wrote a series about the (traditional) PLM data model: The importance of a (PLM) data model.

Frederic is more of a PLM architect; we even discussed the wording related to the EBOM and the MBOM. A topic that I feel comfortable discussing after many years of experience seeing the attempts that failed and the dreams people had. And this was only one aspect of PLM.

You also find the discussion related to a PLM certification in the same thread. How would you certify a person as a PLM expert?

There are so many dimensions to PLM. Even more important, the PLM from 10-15 years ago (more of a system discussion) is no longer the PLM of today (a strategy and an infrastructure).

This is a crucial difference. Learning to use a PLM tool and implement it is not the same as building a PLM strategy for your company. It is Tools, Process, People versus Process, People, Tools and Data.

Time for Methodology workshops?

I recently discussed with several peers what we could do to assist people looking for best-practice discussions and lessons learned. There is a need, but how can we organize this, as we cannot expect it to be voluntary work?

In the past, I suggested MarketKey, the organizer of the PI DX events, extend its theme workshops. For example, instead of a 45-min Focus group with a short introduction to a theme (e.g., eBOM-mBOM, PLM-ERP interfaces), make these sessions last at least half a day and be independent of the PLM vendors.

Apparently, it did not fit in the PI DX programming; half a day would potentially stretch the duration of the conference and more and more, we see two days of meetings as the maximum. Longer becomes difficult to justify even if the content might have high value for the participants.

I observed a similar situation last year in combination with the PLM Roadmap/PDT Europe conference in Gothenburg. Here we had a half-day workshop before the conference, led by Erik Herzog (SAAB Aeronautics) and Judith Crockford (Eurostep), to discuss concepts related to federated PLM – read more in this post: The week after PLM Roadmap/PDT Europe 2022.

It reminded me of an MDM workshop before the 2015 Event, led by Marc Halpern from Gartner. Unfortunately, the federated PLM discussion remained a pretty Swedish initiative, and the follow-up did not reach a wider audience.

And then there are the Aerospace and Defense PLM action groups, whose discussions are moderated by CIMdata. It is great that they published their findings (look here), although the best lessons are learned during the workshops.

However, I also believe the A&D industry cannot be compared to a mid-market machinery manufacturing company. Therefore, it is helpful for a smaller audience only.

And here, I inserted a paragraph dedicated to Oleg’s recent post, PLM Project Failures and Unstoppable PLM Playbook – starting with a quote:

How to learn to implement PLM? I wrote about it in my earlier article – PLM playbook: how to learn about PLM? While I’m still happy to share my knowledge and experience, I think there is a bigger need in helping manufacturing companies and, especially PLM professionals, with the methodology of how to achieve the right goal when implementing PLM. Which made me think about the Unstoppable PLM playbook ©.

I found a similar passion for helping companies to adopt PLM while talking to Helena Gutierrez. Over many conversations during the last few months, we talked about how to help manufacturing companies with PLM adoption. The unstoppable PLM playbook is still a work in progress, but we want to start talking about it to get your feedback and start the conversation.

It is an excellent confirmation of the fact that there is a need for education and that the education related to PLM on the Internet is not good enough.

As a former teacher in Physics, I do not believe in the Unstoppable PLM Playbook, even if it is a branded name. Many books are written by specific authors, giving their perspectives based on their (academic) knowledge.

Are they useful? I believe only in the context of a classroom discussion where the applicability can be discussed.

Therefore, my question to vendor-neutral global players like CIMdata, Eurostep, Prostep, SharePLM, TCS and others: are you willing to pick up this request? Or are there other entities that I missed? Please leave your thoughts in the comments. I will be happy to assist in organizing them.

There are many more future topics to discuss and document too.

- What about the potential split of a PLM infrastructure between Systems of Record & Systems of Engagement?

- What about the Digital Thread, a more and more accepted theme in discussions, but what is the standard definition?

- Is it traceability as some vendors promote it, or is it the continuity of data, directly usable in various contexts – the DevOps approach?

Who likes to discuss methodology?

When asking myself this question, I see the analogy with standards. So let’s look at the various players in the PLM domain – sorry for the immense generalization.

Strategic consultants: standards are essential, but spare me the details.

Vendors: standards are limiting the unique capabilities of my products.

Implementers: two types. Those who understand and use standards as they see the long-term benefits, and those who avoid standards as they introduce complexity.

Companies: they love standards if they can be implemented seamlessly.

Universities: they love to explore standards and help to set the standards even if they are not scalable.

Just replace standards with methodology, and you see the analogy.

We like to discuss the methodology.

As I mentioned in the introduction, I started to work with Share PLM on a series of podcasts where we interview PLM experts in the field that have experience with the people, the process, the tools and the data side. Through these interviews, you will realize PLM is complex and has become even more complicated when you consider PLM a strategy instead of a tool.

We hope these podcasts might be a starting point for further discussion – either through direct interactions or through contributions to the podcast. If you have PLM experts in your network who have the experience and can explain the complexity of PLM from various angles, please let us know – it is time to share.

Conclusion

By switching gears, I noticed that PLM has become complex. Too complex for a single person to master. With an aging traditional PLM workforce (like me), it is time to consolidate the best practices of the past and discuss the best practices for the future. There are no simple answers, as every industry is different. Help us to energize the PLM community – your thoughts/contributions?

In the previous seven posts, learning from the past to understand the future, we have seen the evolution from manual 2D drawing handling. Next came the emergence of ERP and CAD, followed by data management systems (PDM/PLM) and methodology (EBOM/MBOM) to create an infrastructure for product data from concept towards manufacturing.

Before discussing the extension to the SBOM-concept, I first want to discuss Engineering Change Management and Configuration Management.

ECM and CM – are they the same?

Often when you talk with people in my PLM bubble, the terms Change Management and Configuration Management are mixed or not well understood.

When talking about Change Management, we should clearly distinguish between OCM (Organizational Change Management) and ECM (Engineering Change Management). In this post, I will focus on Engineering Change Management (ECM).

When talking about Configuration Management, we also find two interpretations.

The first one is a methodology describing technically how, in your PLM/CAD-environment, you can most efficiently build connected data structures representing all product variations. This technology varies per PLM/CAD-vendor, and therefore I will not discuss it here. The other interpretation of Configuration Management is described on Wiki as follows:

Configuration management (CM) is a systems engineering process for establishing and maintaining consistency of a product’s performance, functional, and physical attributes with its requirements, design, and operational information throughout its life.

This is the area I will focus on this time.

And, as if great minds think alike and are synchronized, I was happy to see Martijn Dullaart’s recent blog post, referring to a poll and follow-up article on CM.

Here Martijn touches on precisely the topic I address in this post. I recommend reading his post, Configuration Management done right = Product-Centric first, and then continuing with the rest of this article.

Engineering Change Management

Initially, engineering change management was a departmental activity performed by engineering to manage the changes in a product’s definition. Other stakeholders are often consulted when preparing a change, which can be minor (affecting, for example, only engineering) or major (affecting engineering and manufacturing).

The way engineering change management has been implemented varies a lot. Over time, companies all around the world have defined their own change methodology, and there is a lot of commonality between these approaches. However, terminology such as revision, version, major change and minor change might all vary.

I described the generic approach for engineering change processes in my blog post: ECR / ECO for Dummies from 2010.

The fact that companies have defined their own engineering change processes is not an issue when it works and is done manually. The real challenge came with PDM/PLM-systems that need to provide support for engineering change management.

Do you leave the methodology 100 % open, or do you provide business logic?

I have seen implementations where an engineer with a right-click could release an assembly without any constraints. Related drawings might not exist, parts in the assembly are not released, and more. To obtain a reliable engineering change management process, the company had to customize the PLM-system to its desired behavior.

An excellent exercise for a system integrator, as there was always a discussion with end-users who do not want to be restricted in case of an emergency (“we will complete the definition later” / “too many clicks” / “do I have to approve 100 parts?”). In many cases, the system integrator kept on customizing the system to adapt to all wishes. Often the engineering change methodology on paper was not complete or turned out to contain contradictions when trying to digitize the processes.

For that reason, the PLM-vendors that aim to provide Out-Of-The-Box solutions have been trying to predefine certain behaviors in their system. For example, you cannot release a part, when its specifications (drawings/documents) are not released. Or, you cannot update a released assembly without creating a new revision.
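To make the idea of predefined behavior concrete, the two example rules can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the `Item` class, the `release` and `add_child` functions, and the single-letter revision scheme are hypothetical and do not represent the logic of any specific PLM-system.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Item:
    """A part, assembly, or document with a very simple lifecycle (hypothetical)."""
    number: str
    revision: str = "A"
    released: bool = False
    specifications: list[Item] = field(default_factory=list)  # drawings/documents
    children: list[Item] = field(default_factory=list)        # assembly content


class ReleaseError(Exception):
    """Raised when a predefined business rule blocks an action."""


def release(item: Item) -> None:
    """Rule 1: an item can only be released when its specifications are released."""
    blocking = [spec.number for spec in item.specifications if not spec.released]
    if blocking:
        raise ReleaseError(f"{item.number}: unreleased specifications {blocking}")
    item.released = True


def add_child(assembly: Item, child: Item) -> Item:
    """Rule 2: a released assembly is never updated in place.

    Returns the item that was actually changed: the assembly itself while it
    is still in work, or a new, unreleased revision once it has been released.
    """
    target = assembly
    if assembly.released:
        target = Item(
            number=assembly.number,
            revision=chr(ord(assembly.revision) + 1),  # A -> B, illustrative only
            specifications=list(assembly.specifications),
            children=list(assembly.children),
        )
    target.children.append(child)
    return target
```

Even in this toy version, the emergency argument from end-users is visible: every shortcut they ask for is an exception that has to be coded into `release` or `add_child`.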

These rules speed up the implementation; however, they require more OCM (Organizational Change Management), as naming and methodology probably have to change within the company. This is the continuous battle in PLM-implementations, in particular where the company has a strong legacy or lacks business understanding when implementing PLM.

There is an excellent webcast in this context on Minerva PLM TV – How to Increase IT Project Success with Organizational Change Management.

Click on the image or link to watch this recording.

Configuration Management

When we talk about configuration management, we have to think about managing the consistency of product data along the whole product lifecycle, as we have seen from the Wiki-definition before.

Configuration management existed long before we had IT-systems. Therefore, configuration management is more a collection of activities (see diagram above) to ensure that the information for any given product is consistent. Consistent during design, where requirements match product capabilities. Consistent with manufacturing, where the manufacturing process is based on the correct engineering specifications. And consistent with operations, meaning that we have the full definition of the product in the field, the As-Built, in correct relation to its engineering and manufacturing definition.

This consistency is crucial for products where the cost of an error can have a massive impact on the manufacturer. The first industries that invested heavily in configuration management were the Aerospace and Defense industries. Configuration management is needed in these industries as the products are usually complex, and failure can have a fatal impact on the company. Combined with many regulatory constraints, managing the configuration of a product and the impact of changes is a discipline on its own.

Other industries have also introduced configuration management nowadays. The nuclear power industry and the pharmaceutical industry use configuration management as part of their regulatory compliance. The automotive industry requires configuration management partly for compliance, mainly driven by quality targets. An accident or a recall can be costly for a car manufacturer. Other manufacturing companies all have their own configuration management strategies, mainly depending on their own risk assessment. Configuration management is a proactive discipline that costs money: time, people and potentially tools are needed to implement it. In my experience, many of these companies try to do “some” configuration management, always hoping that a real disaster will not (or cannot) happen. Proper configuration management allows you to perform reliable impact analysis for any change (image above).

What happens in the field?

When introducing PLM in mid-market companies, the dream often was that with the new PLM-system, configuration management would be there too.

Management believes the tools will fix the issue.

Partly because configuration management deals with a structured approach on how to manage changes, there was always confusion with engineering change management. Modern PLM-systems all have an impact analysis capability. However, most of the time, this impact analysis only reaches the content that is in the PLM-system. Configuration Management goes further.
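The limitation of system-bound impact analysis can be illustrated with a small sketch. The `WHERE_USED` links and item names below are invented for the example; the function simply walks the "where-used" relations that happen to be stored, which is roughly what an impact analysis inside one system can do.

```python
from collections import deque

# Hypothetical where-used links as stored in one system:
# item -> items that directly depend on it.
WHERE_USED = {
    "PRT-100": ["ASM-200", "DRW-100"],
    "ASM-200": ["PRODUCT-X"],
    "DRW-100": [],
    "PRODUCT-X": [],
}


def impacted_items(changed, where_used):
    """Breadth-first walk over the stored links, starting at the changed item."""
    seen = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for dependent in where_used.get(current, []):
            if dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)
    return seen


# Everything reachable from PRT-100 through the links this system knows about.
print(sorted(impacted_items("PRT-100", WHERE_USED)))
```

A work instruction, a supplier contract or a service manual that depends on `PRT-100` but lives in another system or on paper never appears in the result, because there is no link to follow. Closing that gap is a matter of process and discipline, not of the traversal itself.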

If you think that configuration management is crucial for your company, start educating yourselves first before implementing anything in a tool. There are several places where you can learn all about configuration management.

- Probably the best-known organization is IpX (Institute for Process Excellence), teaching the CM2 methodology. Have a look here: CM2 certification and courses

- Closely related to IpX, Martijn Dullaart shares his thoughts coming from the field as Lead Architect for Enterprise Configuration Management at ASML (one of the Dutch crown jewels) in his blog: MDUX

- CMstat, a configuration and data management solution provider, provides educational posts from their perspective. Have a look at their posts, for example, PLM or PDM or CM

- If you want to have a quick overview of Configuration Management in general, targeted for the mid-market, have a look at this (outdated) course: Training for Small and Medium Enterprises on CONFIGURATION MANAGEMENT. Good for self-study to get an understanding of the domain.

To summarize

In regulated industries, Configuration Management and PLM are a must to ensure compliance and quality. Configuration management and (engineering) change management are, first of all, required methodologies that guarantee the quality of your products. The more complex your products are, the higher the need for change and configuration management.

PLM-systems require embedded engineering change management – part of the PDM domain. Performing Engineering Change Management in a system is something many users do not like, as it feels like overhead. Too much administration or too many mouse clicks.

So far, there is no golden egg that performs engineering change management automatically. Perhaps in a data-driven environment, algorithms can speed up change management processes. Still, there is a need for human decisions.

The same applies to configuration management. If you have a PLM-system that connects all the data from concept, design, and manufacturing in a single environment, it does not mean you are performing configuration management. You need to have processes in place, and depending on your product and industry, the importance will vary.

Conclusion

In the first seven posts, we discussed the design and engineering practices, from CAD to EBOM, ending with the MBOM. Engineering Change Management and, in particular, Configuration Management are methodologies to ensure the consistency of data along the product lifecycle. These methodologies are connected and need to be fit for the future – more on this when we move to modern model-based approaches.

Closing note:

While finishing this blog post today, I read Jan Bosch’s post: Why you should not align. Jan touches on the same topic that I try to describe in my series Learning from the Past ….., as my intention is to make us aware that by holding on to practices from the past we are blocking our future. I highly recommend reading his post – a quote:

The problem is, of course, that every time you resist change, you get a bit behind. You accumulate some business, process and technical debt. You become a little less “fitting” to the environment in which you’re operating

In the series learning from the past to understand the future, we have almost reached the current state of PLM before digitization became visible. In the last post, I introduced the value of having the MBOM preparation inside a PLM-system, so manufacturing engineering can benefit from early visibility and richer product context when preparing the manufacturing process.

Does everyone need an MBOM?

It is essential to realize that you do not need an EBOM and a separate MBOM in case of an Engineering To Order primary process. The target of ETO is to deliver a unique customer product with no time to lose. Therefore, engineering can design with a manufacturing process in mind.

The need for an MBOM comes when:

- You are selling a specific product over a more extended period of time. The engineering definition, in that case, should depend as little as possible on supplier-specific parts.

- You are delivering your portfolio based on modules. Modules need to remain stable for as long as possible and therefore be independent of where they are manufactured and of supplier-specific parts. The better you can define your modules, the more customers you can reach over time.

- You have multiple manufacturing locations around the world, allowing you to source locally and manufacture based on local plant-specific resources. I described these options in the previous post.

The challenge for all companies that want to move from ETO to BTO/CTO is the fact that they need to change their methodology – building for the future while supporting the past. This is typically something to be analyzed per company on how to deal with the existing legacy and installed base.

Configurable EBOM and MBOM

In some previous posts, I mentioned that it is efficient to have a configurable EBOM. This means that various options and variants are managed in the same EBOM-structure, which can be filtered based on configuration parameters (date effectivity/version identifier/time baseline). A configurable EBOM is often called a 150 % EBOM.

The MBOM can also be configurable, as a manufacturing plant might have largely common manufacturing steps for different product variants. By using the same process and a filtered MBOM, you will manufacture the specific product version. In that case, we can talk about a 120 % MBOM.

Note: the freedom of configuration in the EBOM is generally higher than the options in the configurable MBOM.
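Filtering a configurable structure can be sketched as follows. This is a minimal illustration, assuming each BOM line carries an optional option code and a date-effectivity range; the `BomLine` fields, the `resolve` function and the sample parts are hypothetical and far simpler than what a PLM-system stores.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class BomLine:
    part: str
    quantity: int
    option: Optional[str] = None   # None means: used in every variant
    valid_from: date = date.min    # date effectivity, both ends inclusive
    valid_to: date = date.max


def resolve(bom: list[BomLine], selected_options: set[str], on_date: date) -> list[BomLine]:
    """Filter the overloaded (150 %) structure down to one specific (100 %) BOM."""
    return [
        line
        for line in bom
        if (line.option is None or line.option in selected_options)
        and line.valid_from <= on_date <= line.valid_to
    ]


# Hypothetical 150 % EBOM: two motor options and a display that was replaced.
EBOM_150 = [
    BomLine("FRAME", 1),
    BomLine("MOTOR-250W", 1, option="MOTOR_250W"),
    BomLine("MOTOR-500W", 1, option="MOTOR_500W"),
    BomLine("DISPLAY-V1", 1, valid_to=date(2022, 12, 31)),
    BomLine("DISPLAY-V2", 1, valid_from=date(2023, 1, 1)),
]

resolved = resolve(EBOM_150, {"MOTOR_500W"}, date(2023, 6, 1))
print([line.part for line in resolved])  # ['FRAME', 'MOTOR-500W', 'DISPLAY-V2']
```

The same filter could be applied to a configurable MBOM; the difference is mainly that fewer option codes would be allowed there.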

The real business change for EBOM/MBOM

So far, we have discussed the EBOM/MBOM methodology. It is essential to realize this methodology only brings value when the organization will be adapted to benefit from the new possibilities.

One of the recurring errors in PLM implementations is that users of the system get an extended job scope without being given the extra time to perform these activities. Meanwhile, other persons downstream might benefit from these activities. However, they will not complain. I realized that I already mentioned such a case in 2009: Where is my PLM ROI, Mr. Voskuil?

Now let us look at the recommended business changes when implementing an EBOM/MBOM-strategy:

- Working in a single, shared environment for engineering and manufacturing preparation is the first step to take.

Working in a PLM-system is not a problem for engineers who are used to the complexity of a PDM-system. For manufacturing engineers, a PLM-environment will be completely new. Manufacturing engineers might prepare their bill of process first in Excel and ultimately enter the complete details in their ERP-system. ERP-systems are not known for their user-friendliness. However, their interfaces are often so rigid that it is not difficult to master the process. Excel, on the other side, is extremely flexible but not connected to anything else.

And now, this new PLM-system requires people to work in a more user-friendly environment with limited freedom. This is a significant shift in working methodology, which means manufacturing engineers need to be trained and supported over several months. Changing habits and keeping people motivated takes energy and time. In reality, where is the budget for these activities? See my 2016 post: PLM and Cultural Change Management – too expensive?

- From sequential to concurrent

Once your manufacturing engineers are able to work in a PLM-environment, they are able to start the manufacturing definition before the engineering definition is released. Manufacturing engineers can participate in design reviews having the information in their environment available. They can validate critical manufacturing steps and discuss with engineers potential changes that will reduce the complexity or cost for manufacturing. As these changes will be done before the product is released, the cost of change is much lower. After all, having engineering and manufacturing working partially in parallel will reduce time to market.

One of the leading business drivers for many companies is introducing products or enhancements to the market. Bringing engineering and manufacturing preparation together also means that the PLM-system can no longer be an engineering tool under the responsibility of the engineering department.

The responsibility for PLM needs to be at a level higher in the organization to ensure well-balanced choices. A higher level in the organization automatically means more attention for business benefits and less attention for functions and features.

From technology to methodology – interface issues?

The whole EBOM/MBOM-discussion often has become a discussion related to a PLM-system and an ERP-system. Next, the discussion diverted to how these two systems could work together, changing the mindset to the complexity of interfaces instead of focusing on the logical flow of information.

At an earlier PI Event in München in 2016, I led a focus group related to the PLM and ERP interaction. The discussion was not about technology; it was all about the logical flow of information, from initial creation towards formal usage in a product definition (EBOM/MBOM).

What became clear from this workshop and other customer engagements is that people are often locked in their siloed way of thinking. Proposed information flows are based on system capabilities, not on the ideal flow of information. This is often the reason why a PLM/ERP-interface becomes complicated and expensive. System integrators do not want to push for organizational change; they prefer to develop an interface that adheres to the current customer expectations.

SAP has always been promoting that they do not need an interface between engineering and manufacturing as their data management starts from the EBOM. They forgot to mention that they have a difficult time managing (and almost no intention to manage) the early ideation and design phase. As a Dutch SAP country manager once told me: “Engineers are resources that do not want to be managed.” This remark says it all about the mindset of ERP.

Looking back at successful PLM-implementations, I can tell the PLM-ERP interface has never been a technical issue once the methodology is transparent. Agreement within the company on the logical data flow from ideation through engineering towards design is the foundation.

It is not about owning data and where to store it in a single system. It is about federated data sets that exist in different systems and that are complementary but connected, requiring data governance and master data management.

The SAP-Siemens partnership

In the context of the previous paragraph, the messaging around the recently announced partnership between SAP and Siemens made me curious. Almost everyone has shared an opinion about the partnership. There is a lot of speculation, and many questions were imaginarily answered by as many blog posts in the field. Last week Stan Przybylinski shared CIMdata’s interpretations in a webinar Putting the SAP-Siemens Partnership In Context, which was, in my opinion, the most in-depth analysis I have seen.

For what it is worth, my analysis:

- First of all, the partnership is a merger of slide decks at this moment, aiming to show to a potential customer that in the SAP/Siemens-combination, you find everything you need. A merger of slides does not mean everything works together.

- It is a merger of two different worlds. You can call SAP a real data platform with connected data, whereas Siemens’ offering is based on the Teamcenter backbone, providing a foundation for a coordinated approach. In the coordinated approach, the data flexibility is lower. For that reason, Mendix is crucial to make Siemens’ portfolio behave like a connected platform too.

You can read my doubts about having a coordinated and connected system working together (see image above). It was my #1 identified challenge for this decade: PLM 2020 – PLM the next decade (before COVID-19 became a pandemic and illustrated we need to work connected).

- The fact that SAP will sell TC PLM and Siemens will sell SAP PPM seems like a loser’s statement, meaning our SAP PLM is probably not good enough, or our TC PPM capabilities are not good enough. In reality, I believe they both should remain, and the partnership should work on logical data flows with data residing in two locations – the federated approach. This is how platforms reside next to each other instead of the single black hole.

- The fact that standard interfaces will be developed between the two systems is a subtle sales argument with relatively low value. As I wrote in the “from technology to methodology”-paragraph, the challenges are in the organizational change within companies. Technology is not the issue, although system integrators also need to make a living.

- What I believe makes sense is that both SAP and Siemens have to realize their Industry 4.0 end-to-end capabilities. It has been a German vision for several years now, and it is an excellent vision to strive for. Now it is time to build the two platforms working together. This will be a significant technical challenge, mainly for Siemens, as its foundation is based on a coordinated backbone.

- The biggest challenge, not only for this partnership, is the organizational change within companies that want to build an end-to-end connected solution. In particular, in companies with a vast legacy, the industries targeted by the partnership, the chasm between coordinated legacy data and intended connected data is enormous. Technology will not fix it; it can perhaps ease the pain a little.

Conclusion

With this post, we have reached the foundation of the item-centric approach for PLM, where the EBOM and MBOM are managed in a real-time context. Organizational change is the biggest inhibitor to move forward. The SAP-Siemens partnership is a sales/marketing approach to create a simplified view for the future at C-level discussions.

Let us watch carefully what happens in reality.

Next time potentially the dimension of change management and configuration management in an item-centric approach.

Or perhaps Martijn Dullaart will show us the way before, following up on his tricky poll question

One week ago, Yoann Maingon wrote an innocent post with the question: Has FFF killed? The question was raised related to a 2014 problem at GM, where a changed part was causing fatal accidents.

Here is my short extract of the discussion started by Yoann. Assuming this problem was a configuration management issue, Yoann indicated that the problem might be related to the fact that ERP-systems do not carry a revision on the part number – leading to an unnoticed change. Therefore, he assumes there is a disconnect between the PLM-side (where we have parts with multiple lifecycle states and revisions) and ERP (where we have an industrial lifecycle – prototype/production).

He posted his thoughts, and then LinkedIn exploded (currently 116 comments), which means it is a topic that is of significant concern in our community. Next, if you read the comments, there are different viewpoints:

- What does FFF really imply?

- What about revisions of parts?

- What are the best practices?

Let’s investigate these viewpoints with some comments.

What does FFF really imply?

When we talk about FFF in engineering, we mean Form, Fit and Function – the three primary characteristics to describe a part (source Wikipedia)

- Form refers to such characteristics as external dimensions, weight, size, and visual appearance of a part or assembly. This is the element of FFF that is most affected by an engineer’s aesthetic choices, including enclosure, chassis, and control panel, that become the outward “face” of the product.

- Fit refers to the ability of the part or feature to connect to, mate with, or join to another feature or part within an assembly. The “fit” allows the part to meet the required assembly tolerances to be useful.

- Function is a criterion that is met when the part performs its stated purpose effectively and reliably. In an electronics product, for example, a function can depend on the solid-state components used, the software or firmware, and quite often on the features of the electronics enclosure selected.

One of the comments in Yoann’s post referred to Safe/Unsafe as a potential functional characteristic. I think this addition is not needed. Safety should be a requirement for the part, not a characteristic.

FFF was and still is an approach for engineers to decide if a new, improved version of the part would get a revision or needs a new part number.

I think before we dive deeper into the other viewpoints, it is crucial to define the part number a little more.

In a correct PLM data model, there are two types of part numbers. First, the internal part number that your company uses inside its engineering Bill of Materials to identify a part. This part number can be a meaningless number, as it only needs to provide uniqueness inside the company.

In 2015 I wrote several posts related to best practices and data modeling for PLM. The most relevant posts to this discussion are here:

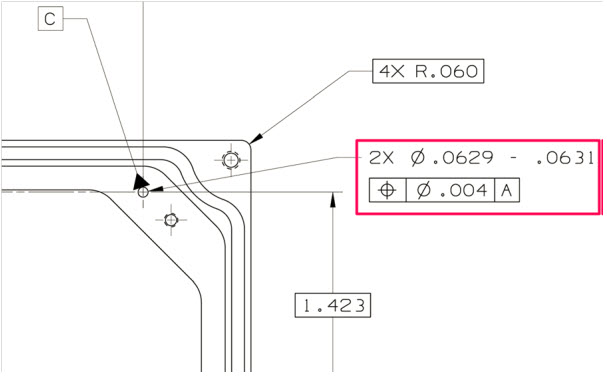

The part number can specify a part that needs to be manufactured according to specification, or it can be a part that needs to be purchased from an available supplier/manufacturer. The manufacturer part number is, most of the time, a meaningful number (6 – 7 characters) as these parts need to be ordered by your company. The manufacturer part number is the SKU for the manufacturer. As you can imagine in the manufacturer’s catalog, there isn’t a revision mentioned. In graphics, see the image below:

Your company might sell Product MP-323121 (note: the ID is meaningful to help the customer to order the product).

Internally there is a related EBOM that specifies the product. The EBOM top part is O122 (note: here, we can use a meaningless identifier as all is digitally connected).

For the manufacturing of O122, we need to resolve the EBOM according to its specifications. Therefore, for Part O124, the company needs to decide to purchase from their approved manufacturers either part ABC-21231 or XYZ-88818 (note: again, a meaningful ID as these companies are not digitally connected).

Now coming back to the FFF-discussion. For the orange parts, with a meaningful ID, no revision exists. However, if Assembly O122 is 100% FFF compatible, the Product ID MP-323121 will not change. It allows your company to optimize the EBOM and/or MBOM, meanwhile keeping 100% compatibility to the outside world. (note: the same principle applies to the two manufacturers for Part O124.)
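
The example above can be sketched as a small data model in Python. This is only an illustration under my own assumptions: the class names, the function `sourcing_options`, the manufacturer names “Manufacturer A/B”, and the placement of O124 directly under O122 are not taken from any PLM-system.

```python
from dataclasses import dataclass, field


@dataclass
class ManufacturerPart:
    mpn: str           # meaningful manufacturer part number, no revision
    manufacturer: str  # hypothetical name, for illustration only


@dataclass
class Part:
    internal_id: str  # meaningless identifier, unique inside the company
    children: list = field(default_factory=list)
    approved_sources: list = field(default_factory=list)


@dataclass
class Product:
    sales_id: str  # meaningful ID the customer uses to order
    ebom_top: Part


def sourcing_options(part):
    """Map `part` and every part below it that has approved sources
    to the list of approved manufacturer part numbers."""
    options = {}
    if part.approved_sources:
        options[part.internal_id] = [s.mpn for s in part.approved_sources]
    for child in part.children:
        options.update(sourcing_options(child))
    return options


o124 = Part("O124", approved_sources=[
    ManufacturerPart("ABC-21231", "Manufacturer A"),
    ManufacturerPart("XYZ-88818", "Manufacturer B"),
])
o122 = Part("O122", children=[o124])  # assumed: O124 sits directly under O122
product = Product("MP-323121", ebom_top=o122)

print(sourcing_options(product.ebom_top))
```

Note that only the identifiers facing the outside world (the product ID and the manufacturer part numbers) carry meaning; the internal IDs are just keys.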

In case Top Assembly O122 has new or changed parts – what should happen there?

At that moment, the definition has changed. The definitions, most of the time described in documents/drawings/models, are related information to the BOM. Therefore, the Top Assembly O122 should get a new identifier. There is no need to name it a revision; it is a new data set in the PLM-system, again with a meaningless identifier as we are connected digitally.

What about revisions of parts?

Of course, the management of changes existed long before PLM-systems were introduced.

The specifications of a part were defined in drawings. The drawing contained all the information, not only the geometry definitions, but also specifications on how to manufacture the part.

For complex products, a considerable set of consistently related drawings would be released to manufacturing, in a release process with physical signatures.

At the same time, there was no discussion: the drawing represents the part. And as there was no digital connection, part numbers/drawing numbers were meaningful, often with the format of the drawing as part of the identifier.

In case changes were needed, for example, fixing a dimension or tolerance as discovered during manufacturing, the drawing had to be revised to remain consistent. First, in the original drawing, the issue or change was marked in red (redlining). Then engineering had to create a new version of the drawing.

Depending on the impact of change (here comes also the FFF-principle), people decided if a new part number was needed (FFF-change) or that the change only required an update of the drawing(s), meaning a revision. If the difference was small (for example, adding a missing annotation), it could be called a minor change, all to be reflected in the drawing number, which equals the part number in this approach. So, when we talk about revisions of parts, we are talking about a document change.
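
The decision described above can be written down as a simple rule. The sketch below is my simplification in Python; the class `Change`, the flag `annotation_only` and the function `classify` are hypothetical names, and real change boards will weigh more criteria than these four booleans.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    NEW_PART_NUMBER = "new part number"
    REVISION = "revision"
    MINOR_CHANGE = "minor change"


@dataclass
class Change:
    affects_form: bool = False
    affects_fit: bool = False
    affects_function: bool = False
    annotation_only: bool = False  # hypothetical flag: small documentation fix


def classify(change):
    """Apply the FFF principle to decide how a change is identified."""
    if change.affects_form or change.affects_fit or change.affects_function:
        return Outcome.NEW_PART_NUMBER  # no longer interchangeable
    if change.annotation_only:
        return Outcome.MINOR_CHANGE     # e.g. adding a missing annotation
    return Outcome.REVISION             # drawing update, part stays compatible


print(classify(Change(affects_fit=True)).value)
print(classify(Change(annotation_only=True)).value)
print(classify(Change()).value)
```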

A lousy practice from that approach is also that often manufacturing just redlines a drawing and keeps the redlined drawing as their source. It is too time-consuming or difficult to update the source drawing(s) through a change process. Engineering is not aware of this change, and when a later change comes through from engineering, these “fixes” might be missed as there is no traceability.

Generic example of a PLM data model and its relations

When PLM-systems were introduced, of course, companies did not want to disrupt their existing ways of working. Therefore, they were asking the PLM-editors to enable revisions on parts and so the PLM-editors did (or do).

However, if you want to use the PLM-system in the best manner, you need to “decouple” the concept: part number equals drawing number, combined with the possibility to start using meaningless identifiers, as relations between parts and drawings are managed in the PLM-system through relational links.
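
As a minimal sketch of this decoupling, assume a tiny in-memory store in Python where parts and documents each get their own meaningless identifier and the relation between them is a separate link. The class `PlmStore`, its methods and the ID format are my assumptions for illustration, not how any specific PLM-system implements it.

```python
from collections import defaultdict
from itertools import count


class PlmStore:
    """Parts and documents have independent, meaningless identifiers."""

    def __init__(self):
        self._ids = count(1)
        self.parts = {}
        self.documents = {}
        self.describes = defaultdict(set)  # part id -> ids of describing documents

    def _new_id(self, prefix):
        return f"{prefix}{next(self._ids):06d}"

    def add_part(self, name):
        part_id = self._new_id("P")
        self.parts[part_id] = name
        return part_id

    def add_document(self, title):
        doc_id = self._new_id("D")
        self.documents[doc_id] = title
        return doc_id

    def link(self, part_id, doc_id):
        self.describes[part_id].add(doc_id)

    def documents_for(self, part_id):
        return sorted(self.documents[d] for d in self.describes.get(part_id, ()))


store = PlmStore()
bracket = store.add_part("Bracket")
drawing = store.add_document("Bracket drawing")
model = store.add_document("Bracket 3D model")
store.link(bracket, drawing)
store.link(bracket, model)

print(bracket, drawing, store.documents_for(bracket))
```

The part number says nothing about the drawing number anymore; the link carries the relation, so one part can be described by several documents.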

Relevant posts related to the PLM data model are:

- EBOM and (CAD) Documents

- Intelligent Part or Product numbers

- The impact of Non-Intelligent Part numbers

What are the best practices?

As some people mentioned in their comments to Yoann’s post, why do we have to answer this question as all is already well understood and described in best practices? I agree with that statement: Best Practices exist – so how to obtain them?

First, there is the whole framework of Configuration Management, which existed long before PLM-systems were introduced. If you follow its methodology, you can be (almost) guaranteed your information is consistent and correct. Configuration Management is crucial in areas where the impact of an error is enormous, like the GM-example Yoann referred to. Also, companies in the Aerospace and Defense industry are the ones that have strict configuration management in place.

Configuration management does not come for free. It requires an investment in skills, potentially a change in ways of working, and requires an overhead. Manufacturing companies that are creating less “risky” products often focus more on optimizing (= reducing) the cost of their internal processes instead of investing in proper methodologies to manage consistency.

If you want to learn more about CM, investigate the Institute of Process Excellence (IPX), the founders of the CM2 framework for Enterprise Configuration Management, and much more. Note: Their knowledge does not come for free, which I can understand. However, it also creates a barrier for the company’s further investment in CM, as these kinds of strategic investments are hard to sell at the management level by individuals in a company.

In the context of CM, I advise you to follow Martijn Dullaart, who is quite active in our social community. His latest blog post related to this thread is: It’s about Interchangeability and Traceability

With the introduction of PLM-systems, these companies and the PLM-editors created the opportunity to implement configuration management in their system.

The data inside the system would be the “single version of the truth.” Unfortunately, this was, most of the time, just a sales strategy, falsely giving the impression that information is under control now. Last year I wrote several posts related to the relation between PLM and CM, starting from PLM and Configuration Management – a happy marriage?

If you are interested in another resource for information related to these topics, have a look at the website from Jörg Eisenträger, who also collected his best practices for PLM and CM for sharing (thanks Paul van der Ree for the link).

Don’t expect best practices from your PLM-vendors as their role is to sell software. It is the continuous discussion between:

- A PLM-system that forces companies to work according to embedded methodology (hard to sell/implement but idealistically correct)

And

- A flexible PLM-system that allows you to build and configure anything (easy to sell/challenging to implement correctly, depending on “wise” decisions)

The Future

Even though most companies are working drawing-centric, with or without a linked PLM-backbone for BOM-management, the next upcoming challenge is to evolve to model-based practices. The current CM-practices still talk about documents, although documents are already electronic datasets in that context. The future in a model-based enterprise, however, revolves around connected models: 3D Models, but also simulation and software models, with different lifecycles and pace of change. For the model-based enterprise, we need to develop digital best practices that guarantee the same level of quality, however, executed and/or supported by Artificial Intelligence (AI). AI is needed as human beings cannot physically analyze and understand all the impact of a change in such an environment.

Conclusion

The FFF-discussion illustrates that building a consistent framework within PLM is not an easy goal to achieve. My blog buddy Oleg Shilovitsky would claim that we consultants create the complexity. PLM-editors will never solve this complexity; it is up to your company to invest in knowledge to understand why and how to reduce the complexity. With this post and the related links and discussions, I hope more clarity will help you to make “wise” decisions.

Last week I shared my thoughts related to my observation that the ROI of PLM is not directly visible or measurable, and I explained why. Also, I explained that the alignment of an organization requires a myth to make it happen. A majority of readers agreed with these observations. Some others either misinterpreted the headlines or twisted the story in favor of their opinion.

A few came from Oleg Shilovitsky, and as Oleg is quite open in his discussions, it allows me to follow up on his statements. Other people might share similar thoughts, but they haven’t had the time or opportunity to be vocal. Feel free to share your thoughts/experiences too.

Some misinterpretations from Oleg’s post: PLM circa 2020 – How to stop selling Myths

- The title “How to stop selling Myths” is the first misinterpretation.

We are not selling myths – more below.

- “Jos Voskuil’s recommendation is to create a myth. In his PLM ROI Myths article, he suggests that you should not work on a business case, value, or even technology” is the second misinterpretation. You still need a business case, you need value, and you need technology.

And I got some feedback from Lionel Grealou, whose post was a catalyst for me to write the PLM ROI Myth post. I agree I took some shortcuts based on his blog post. You can read his comments here. The misinterpretation is:

- “Good luck getting your CFO approve the business change or PLM investment based on some “myth” propaganda :-)” as it is the opposite, make your plan, support your plan with a business case and then use the myth to align

I am glad about these statements as they allow me to be more precise, avoiding misperceptions/myth-perceptions.

A Myth is bad

Some people might think that a myth is bad, as the myth is most of the time abstract. I think these people do not realize that there are a lot of myths that they are following; it is a typical social human behavior to respond to myths. Some myths:

- How can you be religious without believing in myths?

- In this country/world, you can become anything if you want?

- In the past, life was better

- I make this country great again

The reason human beings need myths is that without them, it is impossible to align people around abstract themes. Try for each of the myths above to create an end-to-end logical story based on factual and concrete information. Impossible!

Read Yuval Harari’s book Sapiens about the power of myths. Read Steven Pinker’s book Enlightenment Now to understand that statistics show a lot of current myths are false. However, this does not mean a myth is bad. Human beings are driven by social influences and myths – it is our brain.

Unless you have no social interaction, you are not immune to myths. Which brings me to quoting Oleg one more time:

“A long time ago when I was too naive and too technical, I thought that the best product (or technology) always wins. Well… I was wrong. “

I went through the same experience. Having studied physics and mathematics makes you think extremely logically, something I enjoyed while developing software. Later, when I started my journey as the virtualdutchman mediating in PLM implementations, I discovered that logic alone does not work in businesses. The majority of decisions are made based on “gut feelings”, still presented as reasonable cases.

Unless you have an audience of Vulcans, like Mr. Spock, you need to deal with the human brain. Consider the myth as the envelope to pass the PLM-project to the management. C-level acts by myths as so far I haven’t seen C-level management spending serious time on understanding PLM. I will end with a quote from Paul Empringham:

I sometimes wish companies would spend 6 months+ to educate themselves on what it takes to deliver incremental PLM success BEFORE engaging with software providers

You don’t need a business case

Lionel is also skeptical about some “Myth-propaganda” and I agree with him. The Myth is the envelope; inside, there needs to be something valuable: the strategy, the plan, and the business case. Here I want to stress one more time that most business cases for PLM focus on tool and collaboration efficiency and project the benefits from there. However, how well can we predict the future?

If you replace a process, let’s assume BOM-collaboration done with Excel, by BOM-collaboration based on an Excel-on-the-cloud-like solution, you can measure the differences, assuming you can measure people’s efficiency. I guess this is what Oleg means when he explains OpenBOM has a real business case.

However, if you change the intent for people to work differently, for example, consult your supplier or manufacturing earlier in the design process, you touch human behavior. Why should I consult someone before I finish my job? I am measured on output, not on collaboration or proactive response. Here is the real ROI challenge.

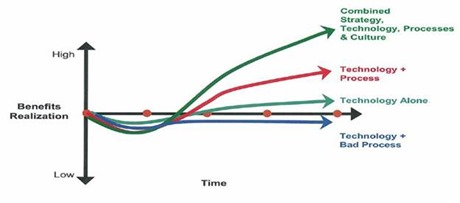

I have participated in dozens of business cases and at the end, they all look like the graph below:

The ROI is fantastic – after a little more than 2 years, we have a positive ROI, and the ROI only gets bigger. So if you trust the numbers, you would be a fool not to approve this project. Right?
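
The arithmetic behind such a graph is simple, which is part of its charm. As a hedged illustration in Python, with numbers I made up for this sketch (an investment of 500 and a constant net benefit of 20 per month; the function name `payback_month` is also mine), the break-even point is the first month where the cumulative result is no longer negative:

```python
from itertools import accumulate


def payback_month(initial_investment, monthly_net_benefits):
    """Return the first month in which the cumulative result is >= 0,
    or None if that never happens within the given horizon."""
    cumulative = accumulate(monthly_net_benefits, initial=-initial_investment)
    for month, value in enumerate(cumulative):
        if value >= 0:
            return month
    return None


# Hypothetical figures: invest 500, gain 20 per month for five years.
print(payback_month(500, [20] * 60))
```

The calculation itself is never the issue; the predicted monthly benefits are the assumption that everything depends on.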

And here comes the C-level gut-feeling. If I have a positive feeling (I follow the myth), then I will approve. If I do not like it, I will say I do not trust the numbers.

Needless to say that if there was a business case without ROI, we do not need to meet the C-level. Unless, and it happens incidentally, there was already a decision at C-level that we need PLM from Vendor X because we played golf together, we are condemned together or we believe the same myths.

In reality, the old Gartner graph of realized benefits says it all. The impact of culture, processes, and people can make or break a plan.

You do not need an abstract story for PLM

Some people believe PLM on its own is a myth. You just need the right technology, and people will start using it, spread it out, and see how business has improved. Sometimes email is used as an example. Email is popular because you can, with limited effort, collaborate with people, no matter where they are. Now twenty years later, companies are complaining about the lack of traceability, the lack of knowledge and understanding related to their products and processes.

PLM will always have the complexity of supporting traceability combined with real-time collaboration. If you focus only on traceability, people will complain that they are not counter clerks. If you focus solely on collaboration, you miss the knowledge build-up and traceability.

That’s why PLM is a mix of governance, optimized processes to guarantee quality and collaboration, combined with a methodology to tune the existing processes implemented in tools that allow people to be confident and efficient. You cannot translate a business strategy into a function-feature list for a tool.

Conclusion

Myths are part of the human social alignment of large groups of people. Whether a Myth is true or false, I will not judge. You can use the Myth as an envelope to package your business case. The business case should always be a combination of new ways of working (organizational change), optimized processes and finally, the best tools. A PLM tool-only business case is, in my opinion, far from realistic.

Now preparing for PI PLMx London on 3-4 February – discussing Myths, Single BOMs and the PLM Green Alliance

This time a post that has been on the table for a long time already – the importance of having established processes, in particular when implementing PLM. By nature, most people hate processes, as they might give the impression that personal creativity is limited, whereas large organizations love processes, as for them this is the way to guarantee a predictable performance. So let’s have a more in-depth look.

Where processes shine

In a transactional world, processes can be implemented like algorithms, assuming the data to be processed has the right quality. That is why MRP (Material Requirements Planning) and ERP (Enterprise Resource Planning) don’t have the mindset of personal creativity. It is about optimized execution driven by financial and quality goals.

When I started my career in the early days of data management, before it was called PDM/PLM, I learned that there is a need for communication related to product data. Terms such as revisions and versions started to pop up, combined with change processes. Some companies began to talk about configuration management.

Companies were not thinking PLM along the whole lifecycle. It was more PDM for engineering and ERP for manufacturing. Where PDM was ultimately a document-control environment, ERP was the execution engine relying on documented content, but not necessarily connected. Unfortunately, this is still the case at many companies, and it has to do with the mindset. Traditionally, a company’s performance has been measured based on financial reporting coming from the ERP system. Engineering was an unmanageable cost in the eyes of the manufacturing company’s management and ERP-software vendors.

In the middle of the nineties (previous century now!), I had a meeting with an ERP country manager to discuss a potential partnership. The challenge was that he had no clue about the value of, and complementary need for, PLM. Even after discussing with him the differences between iterative product development (with revisioning) and linear execution (on the released product), his statement was:

“Engineers are just resources that do not want to be managed, but we will get them”

Meanwhile, I can say this company has changed its strategy, giving PLM a space in their portfolio combined with excellent slides about what could be possible.

To conclude, for linear execution the meaning of processes is more or less close to algorithms and when there is no algorithm, the individual steps in place are predictable with their own KPIs.

Process certification

As I mentioned in the introduction, processes were established to guarantee a predictable outcome, in particular when it comes to quality. For that reason, in the previous century, when globalization started, companies were more or less forced to get ISO 900x certified. The idea behind these certifications was that a company had processes in place to guarantee an expected outcome and, for when these processes failed, procedures in place to fix the gaps. Companies were doing this because there was no social internet yet that could name and shame bad companies. Having ISO 900x certification would be the guarantee to deliver quality. From the same perspective, we could see configuration management as a system of best practices to guarantee that product information was always correct.

Certification was and is heaven for specialized external auditors and consultants. To get certified, you needed to invest people and time in describing your processes, and once these processes were defined, there were regular external audits to ensure the quality system was being followed. The beauty of this system – the described procedures were more or less “best intentions”, not enforced. When the auditor came, the company had to play some theater to show that processes were followed, the auditor would find some improvements for next year, and the management was happy that certification was passed.

This has changed early this century. In particular, mid-market companies were no longer motivated to keep up this charade. The quality process manual remained as a source of inspiration, but external audits were no longer needed. Companies were globally connected and reviewed, so reputation could be sourced easily.

The result: there are documented quality procedures, and there is a reality. The more disconnected employees became in a company due to mergers or growth, the more individual best practices became the way to deliver the right product and quality, combined with accepted errors and fixes downstream or later. The hidden cost of poor quality is still a secret within many companies. When you talk with employees, they all have examples where their company lost a lot of money due to quality mistakes. Yet in less regulated industries, there is no standard approach, like CAPA (Corrective and Preventive Actions), APQP or 8D, to solve it.

Configuration Management and Change Management processes

When it comes to managing the exact definition of a product, either an already manufactured product or products that are currently made, there is a need for Configuration Management. Before there were PLM systems, configuration management was done through procedures defining configurations based on references to documents with revisions and versions. In the aerospace industry, separate systems for configuration management were developed to ensure the exact configuration of an aircraft could be retrieved at any time. Less regulated industries used a more document-based procedural approach, as strict as possible. You can read about the history of configuration management and PLM in an earlier blog post: PLM and Configuration Management – a happy marriage?

With the introduction of PDM and PLM systems, more and more companies wanted to implement their configuration management, and in particular their change management, inside the system, as the changes are always related to product information that can reside in a PLM system. The change of a part can be proposed (ECR), analyzed and approved, leading to an implementation of the change (ECO), which is based on changed specifications, designs (3D Models / Drawings) and more. You can read the basics here: The Issue and ECR/ECO for Dummies (Reprise)
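
To make the ECR/ECO flow described above a bit more tangible, here is a minimal sketch in Python of a change object that can only move through predefined states. The state names, the transition table and the `ChangeRequest` class are illustrative assumptions, not taken from any particular PLM system; a real implementation would add roles, affected items and approvals.

```python
from enum import Enum


class ChangeState(Enum):
    # Hypothetical, simplified states for illustration only
    PROPOSED = "proposed"          # ECR raised
    IN_ANALYSIS = "in_analysis"    # impact of the change is analyzed
    APPROVED = "approved"          # ECR approved
    REJECTED = "rejected"          # ECR rejected, no ECO follows
    IMPLEMENTING = "implementing"  # ECO in progress
    RELEASED = "released"          # ECO completed


# Assumed transition table: which states may follow which
ALLOWED = {
    ChangeState.PROPOSED: {ChangeState.IN_ANALYSIS},
    ChangeState.IN_ANALYSIS: {ChangeState.APPROVED, ChangeState.REJECTED},
    ChangeState.APPROVED: {ChangeState.IMPLEMENTING},
    ChangeState.IMPLEMENTING: {ChangeState.RELEASED},
    ChangeState.REJECTED: set(),
    ChangeState.RELEASED: set(),
}


class ChangeRequest:
    """A change on a part that keeps a trace of every state it passed."""

    def __init__(self, part_number, description):
        self.part_number = part_number
        self.description = description
        self.state = ChangeState.PROPOSED
        self.history = [ChangeState.PROPOSED]

    def move_to(self, new_state):
        # Refuse any step that skips or reverses the predefined procedure
        if new_state not in ALLOWED[self.state]:
            raise ValueError(
                f"Cannot go from {self.state.value} to {new_state.value}"
            )
        self.state = new_state
        self.history.append(new_state)


if __name__ == "__main__":
    change = ChangeRequest("P-100", "Increase wall thickness")
    change.move_to(ChangeState.IN_ANALYSIS)
    change.move_to(ChangeState.APPROVED)
    change.move_to(ChangeState.IMPLEMENTING)
    print([state.value for state in change.history])
```

The `history` list is the traceability part: every step is recorded, and a step outside the agreed procedure raises an error instead of passing silently.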

The Challenge (= Problem) of Digital Processes

More and more companies are implementing change processes fully in PLM, and this is the point that creates the most friction for a PLM implementation. The beauty of digital change processes is that they can be foolproof. No change goes unnoticed, as everyone is forced to follow the predefined procedures, either a type of fast track in case of lightweight (= low risk) changes or the full change process when the product is already in a mature state.
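
The choice between a fast track and the full change process can be sketched as a simple routing rule. The sketch below is only an illustration under assumptions: the function name `select_change_route`, the risk labels and the set of "mature" lifecycle states are hypothetical, and I assume that a mature product always takes the full process, even for a low-risk change.

```python
# Hypothetical lifecycle states that count as "mature" in this sketch
MATURE_STATES = {"released", "in_production"}


def select_change_route(risk, lifecycle_state):
    """Return 'fast_track' or 'full_change' for a proposed change."""
    # Assumption: a product in a mature state always gets the full process
    if lifecycle_state in MATURE_STATES:
        return "full_change"
    # Lightweight (= low risk) changes on immature products go fast track
    if risk == "low":
        return "fast_track"
    # Everything else follows the full change process
    return "full_change"


if __name__ == "__main__":
    print(select_change_route("low", "in_design"))
    print(select_change_route("low", "released"))
    print(select_change_route("high", "in_design"))
```

Writing the rule down like this is exactly where the discussion in a company starts: who decides what "low risk" means, and which lifecycle states count as mature?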

As with the ISO 900x processes, the PLM implementer often plays the role of the consultancy firm that needs to advise the company on how to implement configuration management and change processes. The challenge here is that, most of the time, the company does not have a standard view of its change processes, and for sure the standard change management inside PLM is not identical to its processes.

Here the battle starts….

Management believes that digital change processes, preferably out-of-the-box, are crucial to implement, whereas users feel their job becomes more an administrative job than a creative job. Users that create information don’t want to be bothered with the decisions for numbering and revisioning.

They expect the system to do that easily for them – which does not happen, as old procedures, responsibilities, and methodologies do not align with the system. Users are not measured or challenged on data quality; they are measured on the work they deliver that is needed now. Let’s first get the work done before we make sure all is consistently defined in the PLM system.

Digital Transformation allows companies to redefine the responsibilities for users related to the data they produce. It is no longer a 3D Model or a drawing, but a complete data set with properties/attributes that can be shared and used for analysis and automation.

Conclusion

Implementing digital processes for PLM is the most painful, but required step for a successful implementation. As long as data and processes are not consistent, we can keep on dreaming about automation in PLM. Therefore, digital transformation inside PLM should focus on new methods and responsibilities to create a foundation for the future. Without an agreement on the digital processes there will be a growing inefficiency for the future.

Image: 21stcenturypublicservant.wordpress.com/