This week is busy for me as I am finalizing several essential activities related to my favorite hobby, product lifecycle management or is it PLM😉?

And most of these activities will result in lengthy blog posts, starting with:

“The week(end) after <<fill in the event>>”.

Here are the upcoming actions:

Click on each image if you want to see the details:

In this Future of PLM Podcast series, moderated by Michael Finocchiaro, we will continue the debate on how to position PLM (as a system or a strategy) and move away from an engineering framing. Personally, I never saw PLM as a system and started talking more and more about product lifecycle management (the strategy) versus PLM/PDM (the systems).

Note: the intention is to be interactive with the audience, so feel free to post questions/remarks in the comments, either upfront or during the event.

You might have seen in the past two weeks some posts and discussions I had with the Share PLM team about a unique offering we are preparing: the PLM Awareness program. From our field experience, PLM is too often treated as a technical issue, handled by a (too) small team.

We believe every PLM program should start by fostering awareness of what people can expect nowadays, given the technology, experiences, and possibilities available. If you want to work with motivated people, you have to involve them and give them all the proper understanding to start with.

Join us for the online event to understand the value and ask your questions. We are looking forward to your participation.

This is another event related to the future of PLM; however, this time it is an in-person workshop, where, inspired by four PLM thought leaders, we will discuss and work on a common understanding of what is required for a modern PLM framework. The workshop, sponsored by the Arrowhead fPVN project, will be held in Paris on November 4th, preceding the PLM Roadmap/PDT Europe conference.

We will not discuss the term PLM; we will discuss business drivers, supporting technologies and more. My role as a moderator of this event is to assist with the workshop, and I will share its findings with a broader audience that wasn’t able to attend.

Be ready to learn more in the near future!

If you have followed my blog posts for the past 10 years, you know this conference is always a place to get inspired, whether by leading companies across industries or by innovative and engaging new developments. This conference has always inspired me and helped me gain a better understanding of digital transformation in the PLM domain and how larger enterprises are addressing their challenges.

This time, I will conclude the conference with a lecture focusing on the challenging side of digital transformation and AI: we humans cannot transform ourselves, so we need help.

At the end of this year, we will “celebrate” our fifth anniversary of the PLM Green Global Alliance. When we started the PGGA in 2020, there was an initial focus on the impact of carbon emissions on the climate, and in the years that followed, climate disasters around the world caused serious damage to countries and people.

How could we, as a PLM community, support each other in developing and sharing best practices for innovative, lower-carbon products and processes?

In parallel, driven by regulations, there was also a need to improve current PLM practices to efficiently support ESG reporting, lifecycle analysis, and, soon, the Digital Product Passport. These regulations push for a modern data-driven infrastructure, and we discussed this with the major PLM vendors and related software or solution partners. See our YouTube channel @PLM_Green_Global_Alliance.

In this online Zoom event, we invite you to join us to discuss the topics mentioned in the announcement. Join us in this event and help us celebrate!

I am closing that week at the PTC/User Benelux event in Eindhoven, the Netherlands, with a keynote speech about digital transformation in the PLM domain. Eindhoven is the city where I grew up, completed my amateur soccer career, ran my first and only marathon, and started my career in PLM with SmarTeam. The city and location feel like home. I am looking forward to discussing and meeting with the PTC user community to learn how they experience product lifecycle management, or is it PLM😉?

With all these upcoming events, I did not have the time to focus on a new blog post; luckily, however, in the 10x PLM discussion started by Oleg Shilovitsky, there was an interesting comment from Rob Ferrone that triggered my mind. Quote:

The big breakthrough will come from 1. advances in human-machine interface and 2. less % of work executed by human in the loop. Copy/paste, typing, voice recognition are all significant limits right now. It’s like trying to empty a bucket of water through a drinking straw. When tech becomes more intelligent and proactive then we will see at least 10x.

This remark reminded me of one of my first blog posts in 2008, when I was trying to predict what PLM would look like in 2050. I thought it was a nice moment to read it (again). Enjoy!

PLM in 2050

As the year ends, I decided to take my crystal ball to see what would happen with PLM in the future. It felt like a virtual experience, and this is what I saw:

- Data is no longer replicated – every piece of information will have a Universal Unique ID, also known as a UUID. In 2020, this initiative became mature, thanks to the merger of some big PLM and ERP vendors, who brought this initiative to reality. This initiative dramatically reduced exchange costs in supply chains and led to bankruptcy for many companies that provided translation and exchange software.

- Companies store their data in ‘the cloud’ based on the concept outlined above. Only some old-fashioned companies still handle their own data storage and exchange, as they fear someone will access their data. Analysts compare this behavior with the situation in the year 1950, when people kept their money under a mattress, not trusting banks (and they were not always wrong).

- After 3D, a complete virtual world based on holography became the next step in product development and understanding. Thanks to the revolutionary quantum-3D technology, this concept could even be applied to life sciences. Before ordering a product, customers could first experience and describe their needs in a virtual environment.

- Finally, the cumbersome keyboard and mouse were replaced by voice and eye recognition. Initially, voice recognition and eye tracking were cumbersome. Information was captured by talking to the system and by recording eye movements during hologram analysis. This made the life of engineers so much easier, as while researching and talking, their knowledge was stored and tagged for reuse. No need for designers to send old-fashioned emails or type their design decisions for future reuse.

- Due to the hologram technology, the world became greener. People did not need to travel around the world, and the standard became virtual meetings with global teams (airlines discontinued business class). Even holidays can be experienced in the virtual world thanks to a Dutch initiative inspired by coffee. The whole IT infrastructure was powered by efficient solar energy, drastically reducing the amount of carbon dioxide.

- Then, with a shock, I noticed PLM no longer existed. Companies were focusing on their core business processes. Systems/terms like PLM, ERP, and CRM no longer existed. Some older people still remembered the battle between those systems over data ownership and the political discomfort this caused within companies.

- As people were working so efficiently, there was no need to work all week. There were community time slots when everyone was active, but 50 per cent of the time, people had time to recreate (to re-create or recreate was the question). Some older French and German designers remembered the days when they had only 10 weeks holiday per year, unimaginable nowadays.

As we still have more than 40 years to reach this future, I wish you all a successful and excellent 2009.

I am looking forward to being part of the green future next year.

After a summer holiday in the south of Greece, it is time to resume my activities. The south of Crete is largely an analogue environment, far from any digital hype.

Tempted by LinkedIn posts, I noticed the summer was full of memories, with Martin Eigner sharing 40 years of PLM experience, Oleg Shilovitsky sharing 30 years of PDM Evolution, and Michael Finocchiaro publishing posts on PLM vendors, CAD kernels, and more.

So where do I stand? While digesting all these historical experiences, I reflected on what we can learn from them and what we didn’t learn from them.

It started with technology.

From 1990 to 1999, I worked with mid-market companies, where data management was the most significant challenge. The introduction of MS Windows made data management more user-friendly, evolving from drawing management systems with version and status management capabilities.

Who remembers Automanager Workflow from Cyco, before SmarTeam came on the market?

For that reason, in the early days, PDM was an IT job. As the PDM system primarily dealt with engineering data, it was relatively easy to implement as an organizational change process. We transitioned from analogue to electronic in the department.

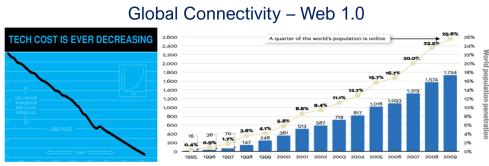

Connecting with other systems, particularly ERP, was a serious IT job and a financial challenge. The rapid decline in the cost of IT components, combined with the rapid growth of global connectivity, has created new opportunities for collaboration.

As part of the Dassault/IBM/SmarTeam organization, I explained and taught these new capabilities worldwide.

In 2008, my VirtualDutchman blog and coaching journey began, evolving from explanations of technology to modern methodologies, which led to organizational change and expectation management – skills not traditionally associated with IT.

Then came digital transformation

With growing connectivity, smartphones and Web 2.0 technology have led to more PLM-like discussions. PLM vendors expanded their scope and developed capabilities beyond mechanical engineering.

The expansion of capabilities was also the moment when the confusion about the term PLM reached its peak: a PLM strategy or a PLM system?

At the time, the two were largely considered the same in discussions and advertisements.

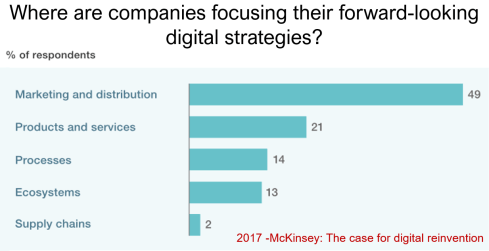

Meanwhile, digital transformation was occurring at the marketing and sales levels – companies invested in direct communication with their customers through the web.

Meanwhile, the internal ways of working for R&D, engineering, and manufacturing did not change significantly. Still, they were following linear processes, and despite the existence of 3D CAD, the 2D drawing remained the primary carrier of legal information between engineering, manufacturing, and suppliers.

Note: the option where the most benefits could be achieved – connected supply chains – had the lowest focus in 2017 – something that would change with COVID-19.

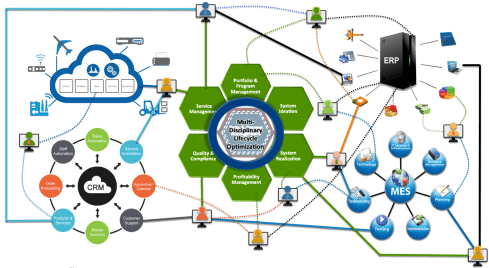

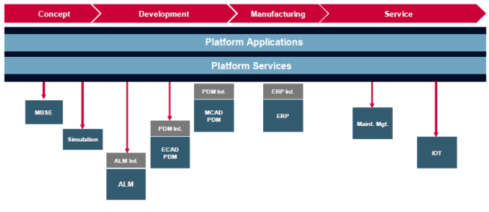

Fundamental digital transformation in the PLM domain occurred gradually. Aras came with its overlay approach (the platform), connecting various disciplines and enterprise systems. In contrast, Dassault Systèmes introduced its 3DEXPERIENCE platform, utilizing its own software brands as platform components.

Most PLM vendors rapidly countered Aras’ overlay approach with their low-code offerings based on Mendix, ThingWorx or Netvibes, to enable data flows beyond the traditional PDM scope. The Coordinated Digital Thread was born.

The good news is that PLM has now clearly become a strategy based on a federated system infrastructure. The single PLM system no longer exists, although many of us still use the term ‘PLM system’ to refer to the main component of a PLM infrastructure – the System of Record.

Moving to a federated PLM infrastructure is already a challenge for companies, not because of the available technology, but first of all because of the legacy data and, closely related to that, legacy processes and people skills.

Legacy is creating the inertia, not technology!

Next came the cloud – SaaS

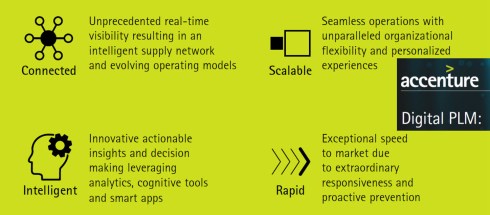

With the availability of cloud solutions that support real-time interactions between stakeholders, either within an enterprise or in a value chain, a new paradigm has emerged: the connected enterprise.

A connected enterprise no longer needs interfaces to transfer data from one system to another.

Instead, with apps and dashboards, combined data from different online sources is presented in a single, user-friendly working environment – a combination of the Systems of Record with the new environments, the Systems of Engagement.

The technology used to create dashboards and apps is based on modern data-driven technologies and principles (ontologies, graph databases, and the semantic web). The Connected Digital Thread was born.
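To make the idea of following a connected digital thread a bit more tangible, here is a minimal sketch in Python. It is not how any particular PLM platform works; it only assumes that datasets from different sources are linked as simple subject–relation–object statements, in the spirit of a graph database or the semantic web. All identifiers (`REQ-001`, `PART-100`, and so on) and relation names are invented for illustration.

```python
from collections import defaultdict, deque

# Hypothetical linked statements (subject, relation, object).
# In a real environment these would come from different online sources;
# here they are hard-coded purely for illustration.
TRIPLES = [
    ("REQ-001", "satisfied_by", "PART-100"),
    ("PART-100", "described_by", "CAD-100-A"),
    ("PART-100", "built_as", "SERIAL-0042"),
    ("SERIAL-0042", "monitored_by", "SENSOR-7"),
    ("REQ-002", "satisfied_by", "PART-200"),
]


def build_graph(triples):
    """Index the statements by subject so links can be followed."""
    graph = defaultdict(list)
    for subject, relation, target in triples:
        graph[subject].append((relation, target))
    return graph


def trace_thread(graph, start):
    """Breadth-first walk: collect every link reachable from `start`."""
    seen = {start}
    queue = deque([start])
    thread = []
    while queue:
        node = queue.popleft()
        for relation, target in graph.get(node, []):
            thread.append((node, relation, target))
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return thread


graph = build_graph(TRIPLES)
for link in trace_thread(graph, "REQ-001"):
    print(link)
```

The point of the sketch is that nothing is copied from one system to another: a dashboard or app only follows the links from a starting dataset, here from a requirement to the part, its CAD model, the physical item and its sensor.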

However, legacy systems play an essential role again, as some systems of engagement can be implemented in a complementary manner to the systems of record, allowing companies to work within an integrated technology model.

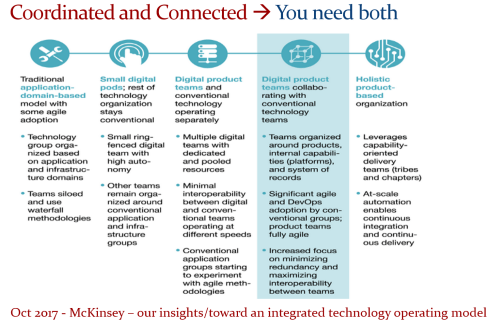

People will work in a particular mode, either coordinated or connected, but organizations can operate in both modes simultaneously. A story I have been sharing a lot – it is not about migrations but about an evolutionary approach towards an integrated technology model.

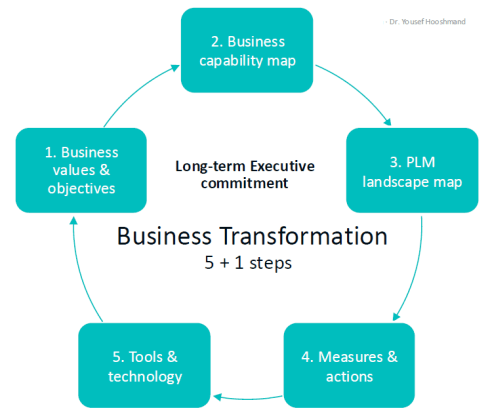

At this point, it becomes essential that business objectives drive the implementation of a PLM infrastructure. Of course, you hear me say we should start from the business; however, the big difference now is that a company should coordinate the technologies, systems, and tools it acquires to avoid isolated islands of information.

Follow Yousef Hooshmand‘s 5 + 1 business transformation steps.

An open SaaS infrastructure enables a company to let data flow almost in real-time. There is a lot of discussion related to data quality and governance, and if you have missed it, please read these three articles I created together with Rob Ferrone, the product Digital PLuMber:

- Data Quality and Data Governance – A hype? (part 1)

- Data Quality and Data Governance – the WHY and HOW (part 2)

- Building the Future: Data Quality and Governance in the Digital Age (part 3)

There are some great insights in this dialogue and the associated LinkedIn comments.

Despite the increasing availability of technology, it is the legacy of people, processes, and culture that is hindering progress.

Rob Ferrone had a shocking lightbulb moment 😲 in our discussion about the future of PLM, where the participants – see below – answered a question related to the importance of technology in our PLM domain – shocking also for me.

My thumb was up because modern technology matters! The question inspired Oleg Shilovitsky to write a whole blog post on this topic. If you’re truly shocked, read his post, where I agree with the content; the question is too simple to answer with a thumbs up/down.

As technology has become more accessible than before, you no longer need an IT department to establish a PLM infrastructure. And then indeed, the people and process side needs and deserves much more attention.

And now there is AI

If you haven’t read anything about AI recently, you must be living in an isolated location. Regardless of the business discussions you are following, it is all about the potential of AI.

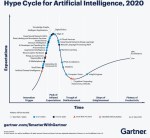

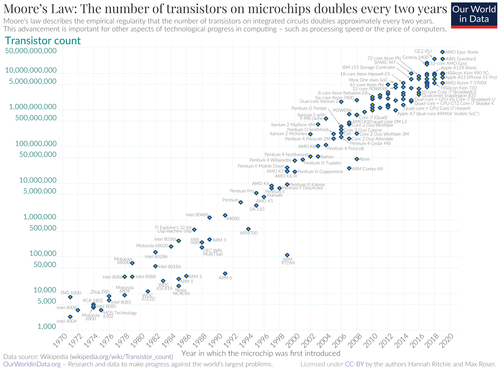

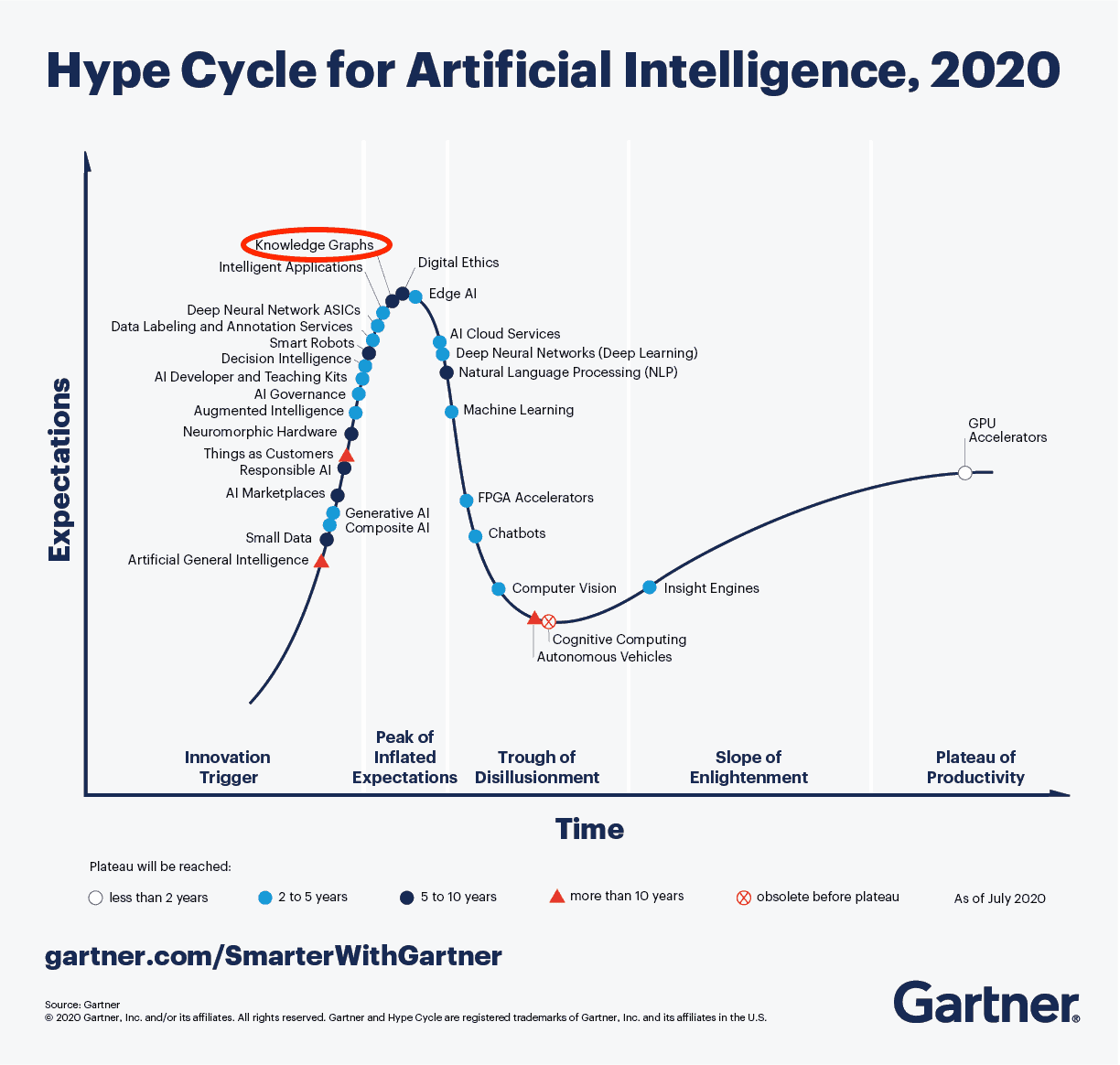

Although AI is not a new concept, the fact that various AI capabilities have now reached the end-user level is what drives the hype. Currently, I believe we are at the peak of the hype.

Last week, I participated in an interesting discussion in the series The Future of PLM, moderated by Michael Finocchiaro, this time talking with the analysts. Click on the link to see Michael’s excellent summary and access the recording of the event.

It was an interesting discussion for a little more than an hour, and the majority of our discussion was about the potential impact of AI on businesses. First, the impact AI can have on the traditional work of an analyst and next, the effects on the PLM domain.

I believe we agreed that AI at this moment is mainly providing higher user efficiency and performance, very much aligned with the interesting research I have been reading in the MIT NANDA report titled The GenAI Divide: STATE OF AI IN BUSINESS 2025.

The report’s interesting findings included high adoption of tools but low transformation. Despite significant investment in Generative AI (GenAI), most organizations are not achieving meaningful business transformation.

- 95% of organizations report zero return on GenAI investments.

- Only 5% of integrated AI pilots generate millions in value.

- 80% of organizations have explored or piloted tools like ChatGPT, but these primarily enhance individual productivity.

- 60% of organizations evaluated enterprise-grade systems, but only 20% reached the pilot stage, and just 5% reached production.

- Key barriers include brittle workflows, a lack of contextual learning, and operational misalignment.

Therefore, the question is – Is current AI the next bubble?

In 2014, I wrote about the lack of digital transformation in the PLM domain, and two images (below) from a report by The Economist could be used again. The report can be found here: The Onrushing Wave.

Click on the image to read the 2013 predictions.

I realized that my current job, as a recreational therapist and firefighter at the time, was not at risk, and that some of the predictions from 10 years ago had become a reality. Who is still bothered by telemarketers or retail salespersons?

However, many of the AI symptoms mentioned in the MIT NANDA report are similar to the hype surrounding digital transformation.

The only reservation I have now – will it take a decade before we understand and demonstrate the value of AI, or are we accelerating?

In this context, the upcoming PLM Roadmap/PDT Europe conference on 5 – 6 November will be interesting, as here we will discuss reality.

For those of you interested in more, there is a (free) workshop the day before the conference, where we will discuss with some thought leaders and experts from various companies what the future of PLM could look like – based on standards, AI tools and more. Click on the image below the conclusion.

Conclusion

The summertime was a nice moment to reflect, inspired by others in my network. What is clear is that there is a shift from technology towards people and change. The rapid expansion of AI tools, along with connected technologies, has created an overwhelming array of possibilities. Now it is time for business leadership to understand them and utilize them for significant business improvement, where the fear is that substantial change will always be slowed down by organizational inertia.

Just before or during the summer holidays, we were pleased to resume our interview series on PLM and Sustainability, where the PLM Green Global Alliance interviews PLM-related software vendors and service organizations, discussing their sustainability missions and offerings.

Following recent discussions in the PLM ecosystem, including PSC Transition Technologies (EcoPLM), CIMPA PLM services (LCA), and the Design for Sustainability working group (with multiple vendors & service partners), we now have the opportunity to catch up with Sustaira after almost three years.

In 2022, Sustaira was a startup company focused on building and providing data-driven, efficient support for sustainability reporting and analysis based on the Mendix platform, while engaging with their first potential customers. What has happened in those three years?

SUSTAIRA

Sustaira provides a sustainability management software platform that helps organizations track, manage, and report their environmental, social, and governance (ESG) performance through customizable applications and dashboards.

We spoke again with Vincent de la Mar, founder and CEO of Sustaira, and it was pretty clear from our conversation that they have evolved and grown in their business and value proposition for businesses. As you will discover by listening to the interview, they are not, per se, in the PLM domain.

Enjoy the 35-minute interview below.

Slides shown during the interview, combined with additional company information, can be found HERE.

What we have learned

- Sustaira is a modular, AI-driven sustainability platform. It offers approximately 150 “sustainability accelerators,” which are either complete Software as a Service (SaaS) products (such as carbon accounting, goal/KPI tracking, and disclosures) or adaptable SaaS products that allow for complete configuration of data models, logic, and user interfaces.

- Their strategy is based on three pillars:

  - providing an end-to-end sustainability platform (Ports of Jersey),

  - filling gaps in an enterprise architecture and business needs (Science-Based Target Initiatives),

  - co-creating new applications with partners (BCAF with Siemens Financial Services).

- The company has a pragmatic view on AI, and thanks to its scalable, data-driven Mendix platform, it can bring integrated value compared to niche applications that might become obsolete due to changing regulations and practices (e.g., dedicated CSRD apps).

- The Sustainability Global Alliance, in partnership with Capgemini, is a strategic alliance that benefits both parties, with a focus on AI & Sustainability.

- The strong partnership with Siemens Digital Solutions.

- Their monthly Sustainability and ESG Insights newsletter, also published in our PGGA group, already has 55,000 subscribers.

Want to learn more?

The following links provide more information related to Sustaira:

- About Sustaira:

- Sustaira’s sustainability marketplace

- Siemens and Sustaira partnership

- Capgemini and Sustaira partnership

- Customer Case Stories

- The Sustainability Insights LinkedIn Newsletter

- Navigating CSRD

- Content Hub (requires registration)

Conclusion

It was great to observe how Sustaira has grown over the past three years, establishing a broad portfolio of sustainability-related solutions for various types of businesses. Their relationship with Siemens Digital Solutions enables them to bring value and add capabilities to the Siemens portfolio, as their platform can be applied to any company that needs a complementary data-driven service related to sustainability insights and reporting.

Follow the news around this event – click on the image to learn more.

First, an important announcement. In the last two weeks, I have finalized preparations for the upcoming Share PLM Summit in Jerez on 27-28 May. With the Share PLM team, we have been working on a non-typical PLM agenda. Share PLM, like me, focuses on organizational change management and the HOW of PLM implementations; there will be more emphasis on the people side.

Often, PLM implementations are either IT-driven or business-driven to implement a need, and yes, the people who need to work with it come as the closing topic. Time and budget are spent on technology and process definitions, and people get trained. Often, this is limited to train-the-trainer, as there is no more budget or time to let the organization adapt, and rapid ROI is expected.

This approach neglects that PLM implementations are enablers for business transformation. Instead of doing things slightly more efficiently, significant gains can be made by doing things differently, starting with the people and their optimal new way of working, and then providing the best tools.

The conference aims to start with the people, sharing human-related experiences and enabling networking between people – not only about the industry practices (there will be sessions and discussions on this topic too).

If you are curious about the details, listen to the podcast recording we published last week to understand the difference – click on the image on the left.

And if you are interested and have the opportunity, join us and meet some great thought leaders and others with this shared interest.

Why is modern PLM a dream?

If you are connected to the LinkedIn posts in my PLM feed, you might have the impression that everyone is gearing up for modern PLM. Articles often created with AI support spark vivid discussions. Before diving into them with my perspective, I want to set the scene by explaining what I mean by modern PLM and traditional PLM.

Traditional PLM

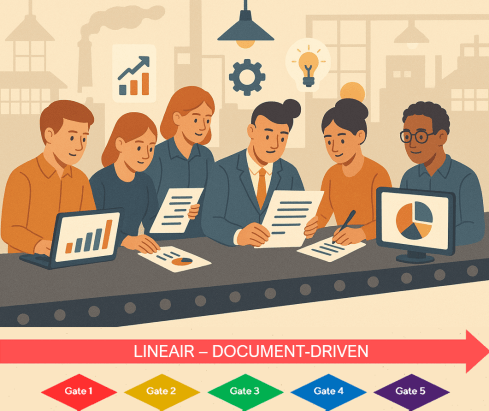

Traditional PLM is often associated with implementing a PLM system, mainly serving engineering. Downstream engineering data usage is usually pushed manually or through interfaces to other enterprise systems, like ERP, MES and service systems.

Traditional PLM is closely connected to the coordinated way of working: a linear process based on passing documents (drawings) and datasets (BOMs). Historically, CAD integrations have been the most significant characteristic of these systems.

The coordinated approach fits people working within their authoring tools and, through integrations, sharing data. The PLM system becomes a system of record, and working in a system of record is not designed to be user-friendly.

Unfortunately, most PLM implementations in the field are based on this approach and are sometimes characterized as advanced PDM.

You recognize traditional PLM thinking when people talk about the single source of truth.

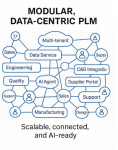

Modern PLM

When I talk about modern PLM, it is no longer about a single system. Modern PLM starts from a business strategy implemented by a data-driven infrastructure. The strategy part remains a challenge at the board level: how do you translate PLM capabilities into business benefits – the WHY?

More on this challenge will be discussed later, as in our PLM community, most discussions are IT-driven: architectures, ontologies, and technologies – the WHAT.

For the WHAT, there seems to be a consensus that modern PLM is based on a federated

I think this article from Oleg Shilovitsky, “Rethinking PLM: Is It Time to Move Beyond the Monolith?“ AND the discussion thread in this post is a must-read. I will not quote the content here again.

After reading Oleg’s post and the comments, come back here.

The reason for this approach: it is a perfect example of the connected approach. Instead of collecting all the information inside one post (book?), the information can be accessed by following digital threads. It also illustrates that in a connected environment, you do not own the data; the data comes from accountable people.

Building such a modern infrastructure is challenging when your company depends mainly on its legacy—the people, processes and systems. Where to change, how to change and when to change are questions that should be answered at the top and require a vision and evolutionary implementation strategy.

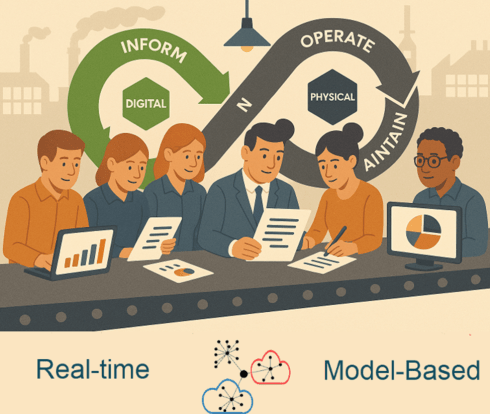

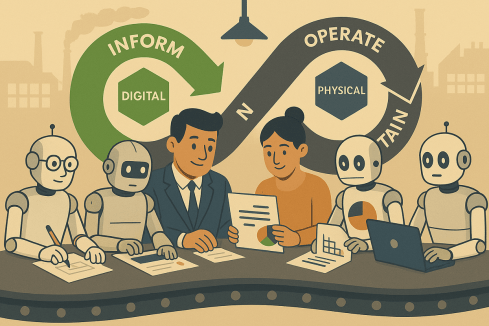

A company should build a layer of connected data on top of the coordinated infrastructure to support users in their new business roles. Implementing a digital twin has significant business benefits if the twin is used to connect with real-time stakeholders from both the virtual and physical worlds.

But there is more than digital threads with real-time data. On top of this infrastructure, a company can run all kinds of modeling tools, automation and analytics. I noticed that in our PLM community, we might focus too much on the data and not enough on the importance of combining it with a model-based business approach. For more details, read my recent post: Model-based: the elephant in the room.

Again, there are no quotes from the article; you know how to dive deeper into the connected topic.

Despite the considerable legacy pressure, there are already companies implementing a coordinated and connected approach. An excellent description of a potential approach comes from Yousef Hooshmand’s paper: From a Monolithic PLM Landscape to a Federated Domain and Data Mesh.

You might recognize modern PLM thinking when people talk about the nearest source of truth and the single source of change.

Is Intelligent PLM the next step?

So far in this article, I have not mentioned AI as the solution to all our challenges. I see an analogy here with the introduction of the smartphone. 2008 was the moment that platforms were introduced, mainly for consumers. Airbnb, Uber, Amazon, Spotify, and Netflix have appeared and disrupted the traditional ways of selling products and services.

The advantage of these platforms is that they were all created data-driven and do not suffer from legacy issues.

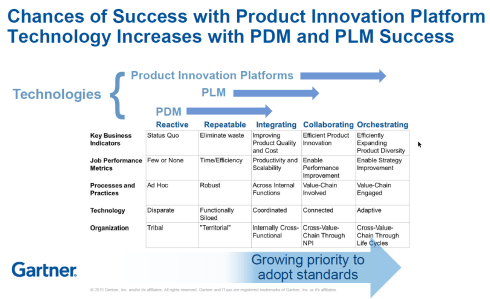

In our PLM domain, it took more than 10 years for platforms to become a topic of discussion for businesses. The 2015 PLM Roadmap/PDT conference was the first step in discussing the Product Innovation Platform – see my The Weekend after PDT 2015 post.

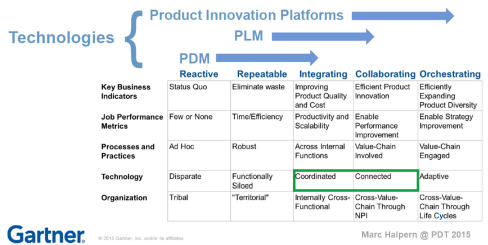

At that time, Peter Bilello shared the CIMdata perspective, Marc Halpern (Gartner) showed my favorite positioning slide (below), and Martin Eigner presented, according to my notes, this digital trend in PLM in his session: “What becomes different for PLM/SysLM?”

2015 Marc Halpern – the Product Innovation Platform (PIP)

While concepts started to become clearer, businesses mainly remained the same. The coordinated approach is the most convenient, as you do not need to reshape your organization. And then came the LLMs that changed everything.

Suddenly, it became possible for organizations to unlock knowledge hidden in their company and make it accessible to people.

Without drastically changing the organization, companies could now improve people’s performance and output (theoretically); therefore, it became a topic of interest for management. One big challenge for reaping the benefits is the quality of the data and information accessed.

I will not dive deeper into this topic today, as Benedict Smith, in his article Intelligent PLM – CFO’s 2025 Vision, did all the work, and I am very much aligned with his statements. It is a long read (7000 words) and a great starting point for discovering the aspects of Intelligent PLM and the connection to the CFO.

You might recognize intelligent PLM thinking when people and AI agents talk about the most likely truth.

Conclusion

Are you interested in these topics and their meaning for your business and career? Join me at the Share PLM conference, where I will discuss “The dilemma: Humans cannot transform—help them!” Time to work on your dreams!

Last week, I participated in the annual 3DEXPERIENCE User Conference, organized by the ENOVIA and NETVIBES brands. With approximately 250 attendees, the 2-day conference on the High-Tech Campus in Eindhoven was fully booked.

My PDM/PLM career started in 1990 in Eindhoven.

First, I spent a significant part of my school life there, and later, I became a physics teacher in Eindhoven. Then, I got infected by CAD and data management, discovering SmarTeam, and the rest is history.

As I wrote in last year’s post, the 3DEXPERIENCE conference always feels like a reunion, as I have worked most of my time in the SmarTeam, ENOVIA, and 3DEXPERIENCE ecosystem.

Innovation Drivers in the Generative Economy

Stephane Declee and Morgan Zimmerman kicked off the conference with their keynote, talking about the business theme for 2024: the Generative Economy. Where the initial focus was on the Experience Economy and emotion, the Generative Economy includes Sustainability. It is a clever move as the word Sustainability, like Digital Transformation, has become such a generic term. The Generative Economy clearly explains that the aim is to be sustainable for the planet.

Stephane and Morgan talked about the importance of the virtual twin, which is different from digital twins. A virtual twin typically refers to a broader concept that encompasses not only the physical characteristics and behavior of an object or system but also its environment, interactions, and context within a virtual or simulated world. Virtual Twins are crucial to developing sustainable solutions.

Morgan concluded the session by describing the characteristics of the data-driven 3DEXPERIENCE platform and its AI fundamentals, illustrating all the facets of the mix of a System of Record (traditional PLM) and Systems of Record (MODSIM).

3DEXPERIENCE for All at automation.eXpress

Daniel Schöpf, CEO and founder of automation.eXpress GmbH, gave a passionate story about why, for his business, the 3DEXPERIENCE platform is the only environment for product development, collaboration and sales.

Automation.eXpress is a young but typical Engineering To Order company building special machinery and services in dedicated projects, which means that every project, from sales to delivery, requires a lot of communication.

For that reason, Daniel insisted that all employees communicate using the 3DEXPERIENCE platform on the cloud. So, there are no separate emails, chats, or other siloed systems.

Everyone should work connected to the project and the product as they need to deliver projects as efficiently and fast as possible.

Daniel made this decision based on his 20 years of experience in traditional ways of working, the coordinated approach. Now, starting from scratch in a new company without a legacy, Daniel chose the connected approach, an ideal fit for his organization, and the cloud as a scalable solution, an essential criterion for a startup company.

My conclusion is that this example shows the unique situation of an inspired leader with 20 years of experience in this business who does not choose ways of working from the past but starts a new company in the same industry, but now based on a modern platform approach instead of individual traditional tools.

Augment Me Through Innovative Technology

Dr. Cara Antoine gave an inspiring keynote based on her own life experience and lessons learned from working in various industries, a major oil & gas company and major high-tech hardware and software brands. Currently, she is an EVP and the Chief Technology, Innovation & Portfolio Officer at Capgemini.

She explained how a life-threatening infection that caused blindness in one of her eyes inspired her to find ways to augment herself to keep on functioning.

With that, she drew a parallel with humanity, which has continuously been augmenting itself from prehistoric days until now, at an ever-increasing speed of change.

The current augmentation is the digital revolution. Digital technology is coming, and you need to be prepared to survive – it is Innovate or Abdicate.

Dr. Cara continued expressing the need to invest in innovation (me: it was not better in the past 😉 ) – and, of course, with an economic purpose; however, it should go hand in hand with social progress (gender diversity) and creating a sustainable planet (innovation is needed here).

Besides the focus on innovation drivers, Dr. Cara always connected her message to personal interaction. Her recently published book Make it Personal describes the importance of personal interaction, even if the topics can be very technical or complex.

I read the book with great pleasure, and it was one of the cornerstones of the panel discussion next.

It is all about people…

It might be strange to have a session like this in an ENOVIA/NETVIBES User Conference; however, it is another illustration that we are not just talking about technology and tools.

I was happy to introduce and moderate this panel discussion, also using the iconic Share PLM image, which is close to my heart.

The panelists, Dr. Cara Antoine, Daniel Schöpf, and Florens Wolters, each actively led transformational initiatives with their companies.

We discussed questions related to culture, personal leadership and involvement and concluded with many insights, including “Create chemistry, identify a passion, empower diversity, and make a connection, as it could make or break your relationship.”

And it is about processes.

Another trend I discovered is that cloud-based business platforms, like the 3DEXPERIENCE platform, switch the focus from discussing functions and features in tools to establishing platform-based environments, where the focus is more on data-driven and connected processes.

Some examples:

Data Driven Quality at Suzlon Energy Ltd.

Florens Wolters, who also participated in the panel discussion “It is all about people …”, explained how he took the lead to reimagine the Suzlon Energy Quality Management System using the 3DEXPERIENCE platform and ENOVIA. It went from a disconnected, fragmented, document-driven Quality Management System with many findings in 2020 to a fully integrated data-driven management system with zero findings in 2023.

It is an illustration that a modern data-driven approach in a connected environment brings higher value to the organization as all stakeholders in the addressed solution work within an integrated, real-time environment. No time is wasted to search for related information.

Of course, organizational change management is needed to convince people not to work in their favorite siloed system, which might be dedicated to the job but is not designed for a connected future.

The image to the left was also a part of the “It is all about people”- session.

Enterprise Virtual Twin at Renault Group

The presentation of Renault was also an exciting surprise. Last year, they shared the scope of the Renaulution project at the conference (see also my post: The week after the 3DEXPERIENCE conference 2023).

Here, Renault mentioned that they would start using the 3DEXPERIENCE platform as an enterprise business platform instead of a traditional engineering tool.

Their presentation today, which was related to their Engineering Virtual Twin, was an example of that. Instead of using their document-based SCR (Système de Conception Renault – the Renault Design System) with over 1000 documents describing processes connected to over a hundred KPIs, they have been modeling their whole business architecture and processes in UAF using a System of Systems approach.

The image above shows Franck Gana, Renault’s engineering – transformation chief officer, explaining the approach. We could write an entire article about the details of how, again, the 3DEXPERIENCE platform can be used to provide a real-time virtual twin of the actual business processes, ensuring everyone is working on the same referential.

Bringing Business Collaboration to the Next Level with Business Experiences

To conclude this section about the shifting focus toward people and processes instead of system features, Alizée Meissonnier Aubin and Antoine Gravot introduced a new offering from 3DS, the marketplace for Business Experiences.

According to an HBR article, workers switch between applications an average of 1200 times per day and spend 9% of their time reorienting themselves after toggling.

1200 is a high number and a plea for working in a collaboration platform (a System of Engagement in my terminology: data-driven and real-time connected) instead of siloed systems. The story has been told before by Daniel Schöpf, Florens Wolters and Franck Gana, who shared the benefits of working in a connected collaboration environment.
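To get a feeling for these two numbers, here is a small back-of-the-envelope calculation. The 1200 switches and the 9% come from the article quoted above; the 8-hour working day is my own assumption, so treat the outcome as an order of magnitude, not a measurement.

```python
# Figures quoted in the text: 1200 switches per day, 9% of time lost reorienting.
SWITCHES_PER_DAY = 1200
REORIENT_SHARE = 0.09
# Assumption (not from the article): an 8-hour working day.
WORKDAY_HOURS = 8


def reorientation_cost(workday_hours=WORKDAY_HOURS,
                       switches=SWITCHES_PER_DAY,
                       share=REORIENT_SHARE):
    """Return (minutes lost per day, seconds lost per switch)."""
    workday_seconds = workday_hours * 3600
    lost_seconds = workday_seconds * share
    return lost_seconds / 60, lost_seconds / switches


minutes_per_day, seconds_per_switch = reorientation_cost()
print(f"{minutes_per_day:.1f} minutes per day, "
      f"{seconds_per_switch:.2f} seconds per switch")
```

Under this assumption, the loss is roughly 43 minutes per person per day, or about two seconds for every switch. Small per toggle, significant per year.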

The announced marketplace will be a place where customers can download Business Experiences.

There was more …

There were several engaging presentations and workshops during the conference. But, as we reach 1500 words, I will mention just two of them, which I hope to come back to in a later post with more detail.

- Delivering Sustainable & Eco Design with the 3DS LCA Solution

Valentin Tofana is from Comau, an Italian multinational company in the automation domain that is committed to more sustainable products. In that context, Valentin shared his experiences and lessons learned from starting to use the 3DS LifeCycle Assessment tools on the 3DEXPERIENCE platform.

This session gave such a clear overview that we will come back with the PLM Green Global Alliance in a separate interview.

- Beyond PLM. Productivity is the Key to Sustainable Business

Neerav MEHTA from L&T Energy Hydrocarbon demonstrated how they have implemented a virtual twin of the plant, allowing everyone to navigate, collaborate and explore all activities related to the plant. I was promoting this concept in 2013, also for Oil & Gas EPC companies (see PLM for all industries); at that time, it was an immense performance and integration challenge. Now, ten years later, thanks to the capabilities of the 3DEXPERIENCE platform, it has become a workable reality. Impressive.

Conclusion

Again, I learned a lot during these days, seeing the architecture of the 3DEXPERIENCE platform growing (image below). In addition, more and more companies are shifting their focus to real-time collaboration processes in the cloud on a connected platform. Their testimonies illustrate that to be sustainable in business, you have to augment yourself with digital.

Note: Dassault Systemes did not cover any of the cost for me attending this conference. I picked the topics close to my heart and got encouraged by all the conversations I had.

We are happy to start the year with the next round of the PLM Green Global Alliance (PGGA) series: PLM and Sustainability. This year, we will speak with some new companies, and we will also revisit some of our previous guests to learn about their progress.

While we have talked with Aras, Autodesk, CIMdata, Dassault Systèmes, PTC, SAP, Sustaira and Transition Technologies PSC, there are still a lot of software companies with an exciting portfolio related to sustainability.

Therefore, we are happy to talk this time with Makersite, a company whose AI-powered Product Lifecycle Intelligence software, according to their home page, brings together your cost, environment, compliance, and risk data in one place to make smarter, greener decisions powered by the deepest understanding of your supply chain. Let’s explore.

Makersite

We were lucky to have a stimulating discussion with Neil D’Souza, Makersite’s CEO and founder, who was active in the field of sustainability for almost twenty years, even before it became a cool (or disputed) profession.

It was an exciting dialogue where we enjoyed realistic answers without all the buzzwords and marketing terms often used in the new domain of sustainability. Enjoy the 39 minutes of interaction below:

Slides shown during the interview combined with additional company information can be found HERE.

What we have learned

- Makersite’s mission, to enable manufacturers to make better products, faster, initially applied to economic parameters, can be easily extended with sustainability parameters. The power of Makersite is that it connects to enterprise systems and sources using AI, Machine Learning and algorithms to support reporting views on compliance, sustainability, costs and risk.

- Compliance and sustainability are the areas where I see a significant need for companies to invest. It is not a revolutionary business change but an extension of scope. We discussed this in the context of the stage-gate process, where sustainability parameters should be added at each gate.

- Neil has an exciting podcast, Five Lifes to Fifty, where he discusses the path to sustainable products with co-hosts Shelley Metcalfe and Jim Fava, and recently, they discussed sustainability in the context of the stage-gate process.

- Again, to move forward with sustainability, it is about creating the base and caring about the data internally to understand what’s happening, and from there, enable value engineering, including your supplier where possible (IP protection remains a topic) – confirming digital transformation (the connected way of working) is needed for business and sustainability.
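The "extension of scope" idea from the stage-gate discussion can be sketched in a few lines. This is only an illustration: the gate names, parameters and limits below are hypothetical and do not come from Makersite or from the interview.

```python
# Hypothetical economic criteria per gate (illustration only).
GATES = {
    "concept": {"cost_eur": 120.0},
    "design": {"cost_eur": 110.0},
    "launch": {"cost_eur": 100.0},
}

# Hypothetical sustainability criteria to add at each gate.
SUSTAINABILITY = {
    "concept": {"co2_kg": 15.0},
    "design": {"co2_kg": 12.0},
    "launch": {"co2_kg": 10.0},
}


def extend_gates(gates, extra):
    """Return new gate criteria with the extra parameters merged into each gate."""
    return {name: {**limits, **extra.get(name, {})}
            for name, limits in gates.items()}


def passes(gate_limits, measured):
    """A gate passes when every required value is known and within its limit."""
    return all(measured.get(key, float("inf")) <= limit
               for key, limit in gate_limits.items())


extended = extend_gates(GATES, SUSTAINABILITY)
design_ok = passes(extended["design"], {"cost_eur": 105.0, "co2_kg": 11.0})
design_missing_data = passes(extended["design"], {"cost_eur": 105.0})
```

Note the design choice in `passes`: a gate fails when a sustainability value is missing, which mirrors the point about caring for the data internally first.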

Want to learn more?

Here are some links to the topics discussed in our meeting:

- The Website – Makersite.io

- Makersite data foundation – makersite-data-foundation

- Makersite demo video – makersite-platform-demo

- Neil’s LinkedIn – neilsaviodsouza

Conclusions

With Makersite, we discovered an experienced company that used its experience in cost, compliance and risk analysis, including supply chains, to extend it to the domain of sustainability. As their technology partners page shows, they can be complementary in many industries and enterprises.

We will see another complementary solution soon in our following interview. Stay tuned.

This year started for me with a discussion related to federated PLM. A topic that I highlighted as one of the imminent trends of 2022. A topic relevant for PLM consultants and implementers. If you are working in a company struggling with PLM, this topic might be hard to introduce in your company.

Before going into the discussion’s topics and arguments, let’s first describe the historical context.

The traditional PLM frame.

Historically PLM has been framed first as a system for engineering to manage their product data. So you could call it PDM first. After that, PLM systems were introduced and used to provide access to product data, upstream and downstream. The most common usage was the relation with manufacturing, leading to EBOM and MBOM discussions.

IT landscape simplification often led to an infrastructure of siloed solutions – PLM, ERP, CRM and later, MES. IT was driving the standardization of systems and defining interfaces between systems. System capabilities were leading, not the flow of information.

As many companies are still in this stage, I would call it PLM 1.0

PLM 1.0 systems serve mainly as a System of Record for the organization, where disciplines consolidate their data in a given context, the Bills of Information. The Bill of Information then is again the place to connect specification documents, i.e., CAD models, drawings and other documents, providing a Digital Thread.
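For readers who prefer structure over words, here is a minimal sketch of a Bill of Information with connected documents. All class, field and part names are my own assumptions for illustration; they are not taken from any PLM system.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Document:
    doc_id: str
    kind: str       # e.g. "CAD model" or "drawing"
    revision: str


@dataclass
class BomItem:
    part_number: str
    description: str
    documents: List[Document] = field(default_factory=list)
    children: List["BomItem"] = field(default_factory=list)


def digital_thread(item, path=()):
    """Yield (path of part numbers, document) for every document under item."""
    here = path + (item.part_number,)
    for doc in item.documents:
        yield here, doc
    for child in item.children:
        yield from digital_thread(child, here)


# Hypothetical two-level structure.
bracket = BomItem("P-200", "Bracket",
                  documents=[Document("D-2", "drawing", "B")])
assembly = BomItem("P-100", "Assembly",
                   documents=[Document("D-1", "CAD model", "A")],
                   children=[bracket])
thread = list(digital_thread(assembly))
```

Walking the structure returns each document together with the path of items it belongs to, which is the coordinated version of a digital thread: the context lives in the structure, not in the document.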

The actual engineering work is done with specialized tools, MCAD/ECAD, CAE, Simulation, Planning tools and more. Therefore, each person could work in their discipline-specific environment and synchronize their data to the PLM system in a coordinated manner.

However, this interaction is not easy for some of the end-users. For example, the usability of CAD integrations with the PLM system is constantly debated.

Many of my implementation discussions with customers were in this context. For example, suppose your products are relatively simple, or your company is relatively small. In that case, the opinion is that the System of Record approach is overkill.

That’s why many small and medium enterprises do not see the value of a PLM backbone.

This could have been true until recently. However, the threats to this approach are digitization and regulations.

Customers, partners, and regulators all expect more accurate and fast responses on specific issues, preferably instantly. In addition, sustainability regulations might push your company to implement a System of Record.

PLM as a business strategy

For the past fifteen years, we have discussed PLM more as a business strategy implemented with business systems and an infrastructure designed for sharing. Therefore, I choose these words carefully to avoid overhanging the expression: PLM as a business strategy.

The reason for this prudence is that, in reality, I have seen many PLM implementations fail due to the ambiguity of PLM as a system or strategy. Many enterprises have previously selected a preferred PLM Vendor solution as a starting point for their “PLM strategy”.

One of the most neglected best practices.

In reality, this means there was no strategy but a hope that with this impressive set of product demos, the company would find a way to support its business needs. Instead of people, process and then tools to implement the strategy, most of the time, it was starting with the tools trying to implement the processes and transform the people. That is not really the definition of business transformation.

In my opinion, this is happening because, at the management level, decisions are made based on financials.

Developing a PLM-related business strategy requires management understanding and involvement at all levels of the organization.

This is often not the case; the middle management has to solve the connection between the strategy and the execution. By design, however, the middle management will not restructure the organization. By design, they will collect the inputs from the end users.

And it is clear what end users want – no disruption in their comfortable way of working.

Halfway conclusion:

Rebranding PLM as a business strategy has not really changed the way companies work. PLM systems remain a System of Record mainly for governance and traceability.

To understand the situation in your company, look at who is responsible for PLM.

- If IT is responsible, then most likely, PLM is not considered a business strategy but more an infrastructure.

- If engineering is responsible for PLM, then you are still in the early days of PLM, the engineering tools to be consulted by others upstream or downstream.

Only when PLM accountability is at the upper management level might it be a business strategy (assuming the upper management understands the details).

Connected is the game changer

Connecting all stakeholders in an engagement has been a game changer in the world. With the introduction of platforms and the smartphone as a connected device, consumers could suddenly benefit from direct responses to desired service requests (Spotify, iTunes, Uber, Amazon, Airbnb, Booking, Netflix, …).

The business change: connecting all stakeholders in real time to deliver rapid results.

The question was: what would be the game changer in PLM? The image below describes the 2014 Accenture description of digital PLM and its potential benefits.

Is connected PLM a utopia?

Marc Halpern from Gartner shared in 2015 the slide below that you might have seen many times before. Digital Transformation is really moving from a coordinated to a connected technology, it seems.

The image below gives an impression of an evolution.

I have been following this concept until I was triggered by a 2017 McKinsey publication: “Toward an integrated technology operating model”.

This was the first notion for me that the future should be hybrid, a combination of traditional PLM (system of record) complemented with teams that work digitally connected; McKinsey called them pods that become product-centric (multidisciplinary team focusing on a product) instead of discipline-centric (marketing/engineering/manufacturing/service)

In 2019 I wrote the post: The PLM migration dilemma supporting the “shocking” conclusion “Don’t think about migration when moving to data-driven, connected ways of working. You need both environments.”

One of the main arguments behind this conclusion was that legacy product data and processes were not designed to ensure data accuracy and quality on such a level that it could become connected data. As a result, converting documents into reliable datasets would be a costly, impossible exercise with no real ROI.

The second argument was that the outside world, customers, regulatory bodies and other non-connected stakeholders still need documents as standardized deliverables.

The conclusion led to the image below.

Systems of Record (left) and Systems of Engagement (right)

Splitting PLM?

In 2021 these thoughts became more mature through various publications and players in the PLM domain.

We saw the emergence of Systems of Engagement – I discussed OpenBOM, Colab and potentially Configit in the post: A new PLM paradigm. These systems can be characterized as connected solutions across the enterprise and value chain, focusing on a platform experience for the stakeholders.

These are all environments addressing the needs of a specific group of users as efficiently and as friendly as possible.

A System of Engagement will not fit naturally in a traditional PLM backbone, the System of Record.

Erik Herzog with SAAB Aerospace and Yousef Houshmand, at that time with Daimler, published papers that year related to “Federated PLM” or “The end of monolithic PLM.” They acknowledged that a company needs to focus on more than a single PLM solution. The presentation from Erik Herzog at the PLM Roadmap/PDT conference was interesting because Erik talked about the Systems of Engagement and the Systems of Record. He proposed using OSLC as the standard to connect these two types of PLM.

It was a clear example of an attempt to combine the two kinds of PLM.

And here comes my question: Do we need to split PLM?

When I look at PLM implementations in the field, almost all are implemented as a System of Record, an information backbone provided by a single-vendor PLM. The various disciplines deliver their content through interfaces to the backbone (Coordinated approach).

However, there is low usability or support for multidisciplinary collaboration; the PLM backbone is not designed for that.

Due to concepts of Model-Based Systems Engineering (MBSE) and Model-Based Definition (MBD), there are now solutions on the market that allow different disciplines to work jointly on connected datasets that can be manipulated using modeling software (1D, 2D, 3D, 4D,…).

These environments, often a mix of software and hardware tools, are the Systems of Engagement and provide speedy results with high quality in the virtual world. Digital Twins are running on Systems of Engagement, not on Systems of Record.

Systems of Engagement do not need to come from the same vendor, as they serve different purposes. But how do you explain this to your management, who wants simplicity? I can imagine the IT organization has a better understanding of this concept as, at the end of 2015, Gartner introduced the concept of the bimodal approach.

Their definition:

Mode 1 is optimized for areas that are more well-understood. It focuses on exploiting what is known. This includes renovating the legacy environment so it is fit for a digital world. Mode 2 is exploratory, potentially experimenting to solve new problems. Mode 2 is optimized for areas of uncertainty. Mode 2 often works on initiatives that begin with a hypothesis that is tested and adapted during a process involving short iterations.

No Conclusion – but a question this time:

At the management level, unfortunately, the “Single PLM” mindset still prevails most of the time due to a lack of understanding of the business. Clearly splitting your PLM seems the way forward. IT could be ready for this, but will the business realize this opportunity?

What are your thoughts?

In my previous post, “My PLM Bookshelf,” on LinkedIn, I shared some of the books that influenced my thinking related to PLM. As you can see in the LinkedIn comments, other people added their recommendations for PLM-related books to get inspired or more knowledgeable.

While reading a book is a personal activity, I now want to share with you how to get educated on PLM in a more interactive manner. In this post, I talk with Peter Bilello, President & CEO of CIMdata. If you haven’t heard about CIMdata and you are active in PLM, there is more to learn on their website HERE. Now let us focus on Education.

CIMdata

Peter, knowing CIMdata from its research, which is valid for the whole PLM community, I am curious to learn what kind of training CIMdata typically provides to its customers.

Jos, throughout much of CIMdata’s existence, we have delivered educational content to the global PLM industry. With a core business tenet of knowledge transfer, we began offering a rich set of PLM-related tutorials at our North American and pan-European conferences starting in the early 1990s.

Since then, we have expanded our offering to include a comprehensive set of assessment-based certificate programs in a broader PLM sense, for example, systems engineering and digital transformation-related topics. In total, we offer more than 30 half-day classes, all of which can be delivered in-person as a custom configuration for a specific client and through public virtual-live or in-person classes. We have certified more than 1,000 PLM professionals since the introduction in 2009 of this PLM Leadership offering.

Based on our experience, we recommend that an organization’s professional education strategy and plans address the organization’s specific processes and enabling technologies. This will help ensure that it drives the appropriate and consistent operations of its processes and technologies.

For that purpose, we expanded our consulting offering to include a comprehensive and strategic digital skills transformation framework. This framework provides an organization with a roadmap that can define the skills an organization’s employees need to possess to ensure a successful digital transformation.

In turn, this framework can be used as an efficient tool for the organization’s HR department to define its training and job progression programs that align with its overall transformation.

The success of training

We are both promoting the importance of education to our customers. Can you share with us an example where Education really made a difference? Can we talk about ROI in the context of training?

Jos, I fully agree. Over the years, we have learned that education and training are often minimized (i.e., sub-optimized). This is unfortunate and has usually led to failed or partially successful implementations.

In our view, both education and training are needed, along with strong organizational change management (OCM) and a quality assurance program during and after the implementation.

In our terms, education deals with the “WHY” and training with the “HOW”. Why do we need to change? Why do we need to do things differently? And then “HOW” to use new tools within the new processes.

We have seen far too many failed implementations where sub-optimized decisions were made due to a lack of understanding (i.e., a clear lack of education). We have also witnessed training and education being done too early or too late.

This leads to a reduced Return on Investment (ROI).

Therefore a well-defined skills transformation framework is critical for any company that wants to grow and thrive in the digital world. Finally, a skills transformation framework needs to be tied directly to an organization’s digital implementation roadmap and structure, state of the process, and technology maturity to maximize success.

Training for every size of the company?

When CIMdata conducts PLM training, is there a difference, for example, when working with a big global enterprise or a small and medium enterprise?

You might think the complexity might be similar; however, the amount of internal knowledge might differ. So how are you dealing with that?

We basically find that the amount of training/education required mostly depends on the implementation scope, meaning the scope of the proposed digital transformation and the current maturity level of the impacted user community.

It is important to measure the current maturity and establish appropriate metrics to measure the success of the training (e.g., are people, once trained, using the tools correctly?).

CIMdata has created a three-part PLM maturity model that allows an organization to understand its current PLM-related organizational, process, and technology maturity.

The PLM maturity model provides an important baseline for identifying and/or developing the appropriate courses for execution.

This also allows us, when we are supporting the definition of a digital skills transformation framework, to understand how the level of internal knowledge might differ within and between departments, sites, and disciplines, all of which helps define an organization-specific action plan, no matter its size.

Where is CIMdata training different?

Most of the time, PLM implementers also offer training for their prospects or customers. So, where is CIMdata training different?

For this, it is important to differentiate between education and training. So, CIMdata provides education (the why) and training and education strategy development and planning.
