Last week, I wrote about the first day of the crowded PLM Roadmap/PDT Europe conference.

You can still read my post here in case you missed it: A very long week after PLM Roadmap / PDT Europe 2025

My conclusion from that post was that day 1 was a challenging day if you are a newbie in the domain of PLM and data-driven practices. We discussed and learned about relevant standards that support a digital enterprise, as well as the need for ontologies and semantic models to give data meaning and serve as a foundation for potential AI tools and use cases.

This post will focus on the other aspects of product lifecycle management – the evolving methodologies and the human side.

Note: I try to avoid the abbreviation PLM, as many of us in the field associate PLM with a system. For me, the system is an IT solution, whereas the strategy and practices are best named product lifecycle management.

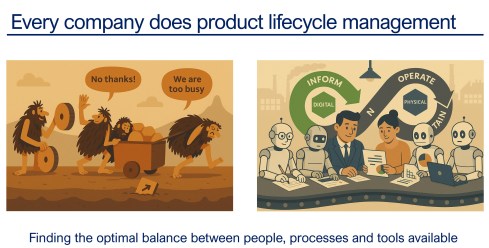

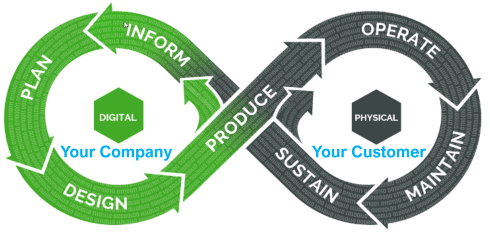

And as a reminder, I used the image above in other conversations. Every company does product lifecycle management; only the number of people, their processes, or their tools might differ. As Peter Bilello mentioned in his opening speech, the products are why the company exists.

Unlocking Efficiency with Model-Based Definition

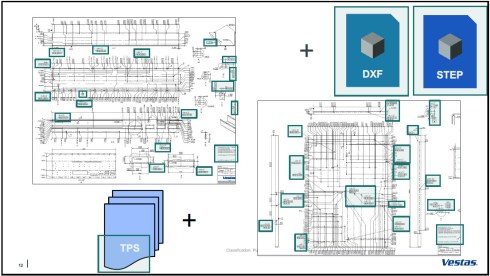

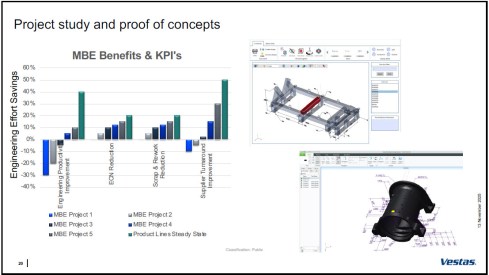

Day 2 started energetically with Dennys Gomes's keynote, which introduced model-based definition (MBD) at Vestas, a world-leading OEM for wind turbines.

Personally, I consider MBD as one of the stepping stones to learning and mastering a model-based enterprise, although do not be confused by the term “model”. In MBD, we use the 3D CAD model as the source to manage and support a data-driven connection among engineering, manufacturing, and suppliers. The business benefits are clear, as reported by companies that follow this approach.

However, it also involves changes in technology, methodology, skills, and even contractual relations.

Dennys started by sharing the analysis they conducted of the amount of information in current manufacturing drawings. The image below shows that only the information marked in green was used, so much of the time and effort spent creating the drawings was wasted.

It was an opportunity to explore model-based definition, and the team ran several pilots to learn how to handle MBD, improve their skills, methodologies, and tool usage. As mentioned before, it is a profound change to move from coordinated to connected ways of working; it does not happen by simply installing a new tool.

The image above shows the learning phases and the ultimate benefits accomplished. Besides moving to a model-based definition of the information, Dennys mentioned they used the opportunity to simplify and automate the generation of the information.

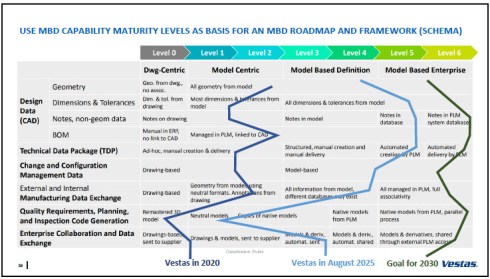

Vestas is on a clear path, and it is interesting to see their ambition in the MBD roadmap below.

An inspirational story, hopefully motivating other companies to make this first step to a model-based enterprise. Perhaps difficult at the beginning from the people’s perspective, but as a business, it is a profitable and required direction.

Bridging The Gap Between IT and Business

It was a great pleasure to listen again to Peter Vind from Siemens Energy, who first explained to the audience how to position the role of an enterprise architect in a company compared to society. He mentioned he has to deal with the unicorns at the C-level, who, like politicians in a city, sometimes have the most “innovative” ideas – can they be realized?

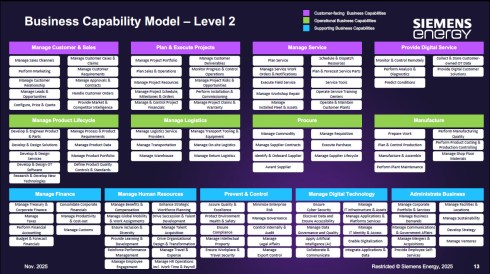

To answer these questions, Peter referred to the Business Capability Model (BCM) he uses as an Enterprise Architect.

Business Capabilities define ‘what’ a company needs to do to execute its strategy, are structured into logical clusters, and should be the foundation for the enterprise, on which both IT and business can come to a common approach.

The detailed image above is worth studying if you are interested in the levels and the mappings of the capabilities. The BCM approach was beneficial when the company became disconnected from Siemens AG, enabling it to rationalize its application portfolio.
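As a rough illustration of how a capability model can support application portfolio rationalization, here is a minimal Python sketch. It is not Siemens Energy's method: the capability names, application names, and the flat one-level structure are all hypothetical, chosen only to show the idea of mapping applications to capabilities and then looking for overlaps and gaps.

```python
from collections import defaultdict

# Hypothetical application portfolio: each application lists the business
# capabilities it supports. All names are invented for illustration.
portfolio = {
    "PLM-A": ["Manage Product Definition", "Manage Engineering Change"],
    "PDM-B": ["Manage Product Definition"],
    "ERP-C": ["Plan Production", "Manage Engineering Change"],
}

# Hypothetical capability model, flattened to a single level here.
capabilities = [
    "Manage Product Definition",
    "Manage Engineering Change",
    "Plan Production",
    "Manage Service Operations",
]


def map_capabilities(portfolio, capabilities):
    """Invert the portfolio: capability -> sorted list of supporting applications."""
    mapping = defaultdict(list)
    for app, supported in portfolio.items():
        for capability in supported:
            mapping[capability].append(app)
    return {c: sorted(mapping.get(c, [])) for c in capabilities}


coverage = map_capabilities(portfolio, capabilities)

# Capabilities served by several applications: candidates for rationalization.
overlaps = {c: apps for c, apps in coverage.items() if len(apps) > 1}

# Capabilities without any supporting application: gaps to investigate.
gaps = [c for c, apps in coverage.items() if not apps]

print("Overlaps:", overlaps)
print("Gaps:", gaps)
```

In practice, a real BCM has several levels and the mapping is maintained in an enterprise architecture tool; the point of the sketch is only that the capabilities, not the applications, are the stable reference for the analysis.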

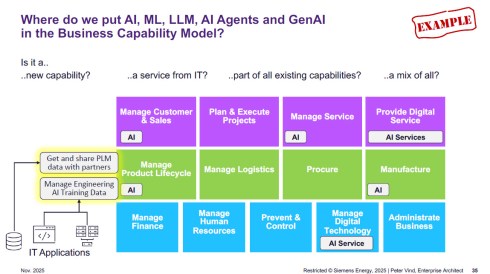

Next, Peter zoomed in on some of the examples of how a BCM and structured application portfolio management can help to rationalize the AI hype/demand – where is it applicable, where does AI have impact – and as he illustrated, it is not that simple. With the BCM, you have a base for further analysis.

Other future-relevant topics he shared included how to address the introduction of the digital product passport and how the BCM methodology supports the shift in business models toward a modern “Power-as-a-Service” model.

He concludes that having a Business Capability Model gives you a stable foundation for managing your enterprise architecture now and into the future. The BCM complements other methodologies that connect business strategy to (IT) execution. See also my 2024 post: Don’t use the P** word! – 5 lessons learned.

Holistic PLM in Action.

For companies struggling with their digital transformation in the PLM domain, Andreas Wank, Head of Smart Innovation at Pepperl+Fuchs SE, shared his journey so far. All the essential aspects of such a transformation were mentioned. Pepperl+Fuchs has a portfolio of approximately 15,000 products that combine hardware and software.

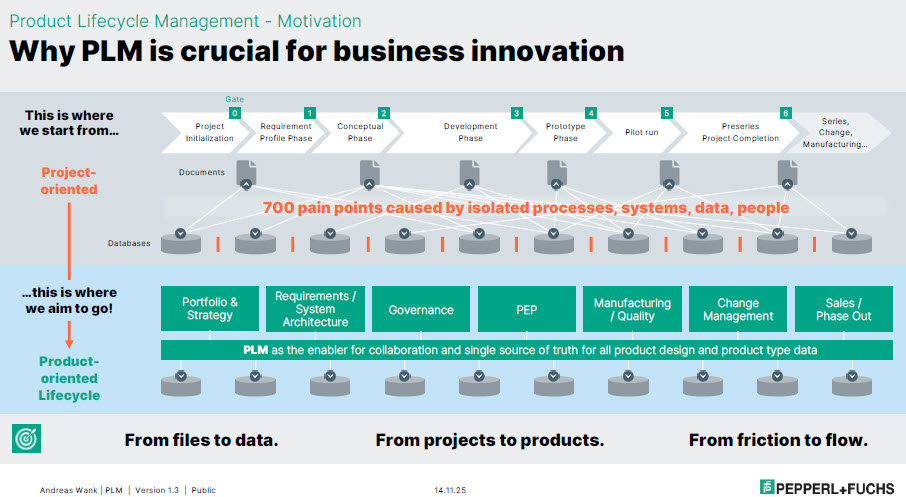

It started with the WHY. With such a massive portfolio, business innovation is under pressure without a PLM infrastructure. Too many changes, fragmented data, no single source of truth, and siloed ways of working lead to much rework, errors, and iterations that keep the company busy while missing the global value drivers.

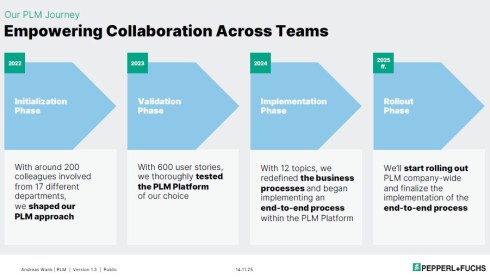

Next, the journey!

The above image is an excellent way to communicate the why, what, and how to a broader audience. All the main messages are in the image, which helps people align with them.

The first phase of the project, creating digital continuity, is also an excellent example of digital transformation in traditional document-driven enterprises. From files to data aligns with the From Coordinated to Connected theme.

Next, the focus was to describe these new ways of working with all stakeholders involved before starting the selection and implementation of PLM tools. This approach is so crucial, as one of my big lessons learned from the past is: “Never start a PLM implementation in R&D.”

If you start in R&D, the priority shifts away from the easy flow of data between all stakeholders; it becomes an R&D System that others will have to live with.

You never get a second first impression!

Pepperl+Fuchs spends a long time validating its PLM selection – something you might only see in privately owned companies that are not driven by shareholder demands, but take the time to prepare and understand their next move.

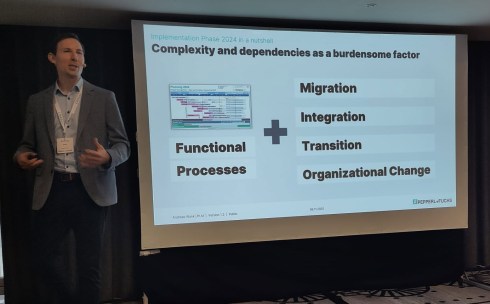

As Andreas also explained, it is not only about the functional processes. As the image shows, migration (often the elephant in the room) and integration with the other enterprise systems also need to be considered. And all of this is combined with managing the transition and the necessary organizational change.

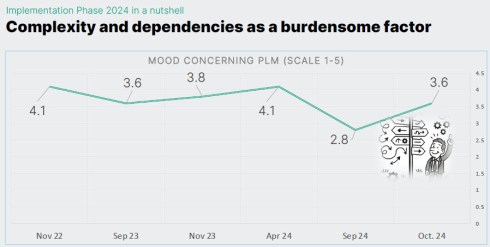

Andreas shared some best practices illustrating the focus on the transition and human aspects. They have implemented a regular survey to measure the PLM mood in the company. And when the mood dropped sharply on Sept 24, from 4.1 to 2.8 on a scale of 1 to 5, it was time to act.

They used one week at a separate location, where 30 of his colleagues worked on the reported issues in one room, leading to 70 decisions that week. And the result was measurable, as shown in the image below.

Andreas’s story was such a perfect fit for the discussions we have in the Share PLM podcast series that we asked him to tell it in more detail, also for those who have missed it. Subscribe and stay tuned for the podcast, coming soon.

Trust, Small Changes, and Transformation.

Ashwath Sooriyanarayanan and Sofia Lindgren, both active at the corporate level in the PLM domain at Assa Abloy, came with an interesting story about their PLM lessons learned.

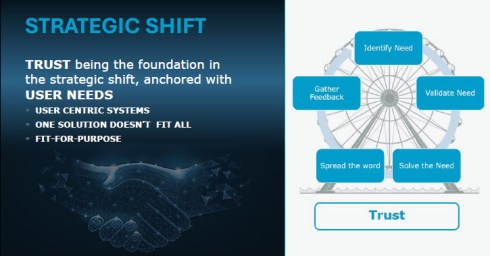

To understand their story, it is essential to comprehend Assa Abloy as a special company, as the image below explains. With over 1000 sites, 200 production facilities, and, last year, on average every two weeks, a new acquisition, it is hard to standardize the company, driven by a corporate organization.

However, this is precisely what Assa Abloy has been trying to do over the past few years. Working towards a single PLM system with generic processes for all, while spending a lot of time integrating and migrating data from the different entities, became a mission impossible.

To increase user acceptance, they fell into the trap of customizing the system ever more to meet many user demands. A dead end, as many other companies have probably experienced.

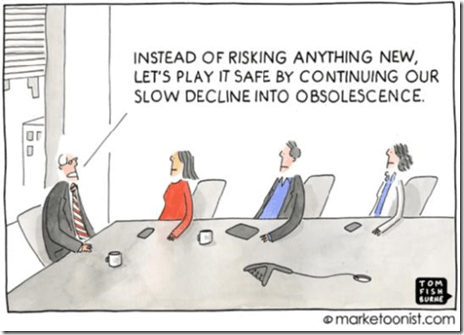

And then they came with a strategic shift. Instead of holding on to the past and the money invested in technology, they shifted to the human side.

The PLM group became a trusted organisation supporting the individual entities. Instead of telling them what to do (Top-Down), they talked with the local business and provided standardized PLM knowledge and capabilities where needed (Bottom-Up).

This “modular” approach made the PLM group the trusted partner of the individual businesses. A unique approach, making us realize that the human aspect remains part of implementing PLM.

Humans cannot be transformed

Given the length of this blog post, I will not spend too much text on my closing presentation at the conference. After a technical start on DAY 1, we gradually moved to broader, human-related topics in the latter part.

You can find my presentation here on SlideShare as usual, and perhaps the best summary from my session was given in this post from Paul Comis. Enjoy his conclusion.

Conclusion

Two and a half intensive days in Paris again at the PLM Roadmap / PDT Europe conference, where some of the crucial aspects of PLM were shared in detail. The value of the conference lies in the stories and discussions with the participants. Slides alone do not provide enough education. You need to be curious and active to discover the best perspective.

For those celebrating: Wishing you a wonderful Thanksgiving!

First, an important announcement. In the last two weeks, I have finalized preparations for the upcoming Share PLM Summit in Jerez on 27-28 May. With the Share PLM team, we have been working on a non-typical PLM agenda. Share PLM, like me, focuses on organizational change management and the HOW of PLM implementations; there will be more emphasis on the people side.

Often, PLM implementations are either IT-driven or business-driven to implement a need, and yes, the people who need to work with it come as the closing topic. Time and budget are spent on technology and process definitions, and people get trained. Often, this is limited to train-the-trainer, as there is no more budget or time to let the organization adapt, and rapid ROI is expected.

This approach neglects that PLM implementations are enablers for business transformation. Instead of doing things slightly more efficiently, significant gains can be made by doing things differently, starting with the people and their optimal new way of working, and then providing the best tools.

The conference aims to start with the people, sharing human-related experiences and enabling networking between people – not only about the industry practices (there will be sessions and discussions on this topic too).

If you are curious about the details, listen to the podcast recording we published last week to understand the difference – click on the image on the left.

And if you are interested and have the opportunity, join us and meet some great thought leaders and others with this shared interest.

Why is modern PLM a dream?

If you are connected to the LinkedIn posts in my PLM feed, you might have the impression that everyone is gearing up for modern PLM. Articles often created with AI support spark vivid discussions. Before diving into them with my perspective, I want to set the scene by explaining what I mean by modern PLM and traditional PLM.

Traditional PLM

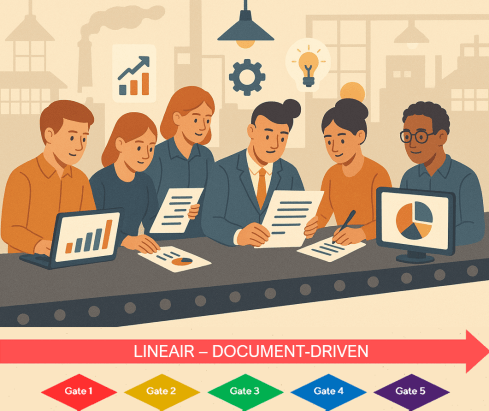

Traditional PLM is often associated with implementing a PLM system, mainly serving engineering. Downstream engineering data usage is usually pushed manually or through interfaces to other enterprise systems, like ERP, MES and service systems.

Traditional PLM is closely connected to the coordinated way of working: a linear process based on passing documents (drawings) and datasets (BOMs). Historically, CAD integrations have been the most significant characteristic of these systems.

The coordinated approach fits people working within their authoring tools and, through integrations, sharing data. The PLM system becomes a system of record, and working in a system of record is not designed to be user-friendly.

Unfortunately, most PLM implementations in the field are based on this approach and are sometimes characterized as advanced PDM.

You recognize traditional PLM thinking when people talk about the single source of truth.

Modern PLM

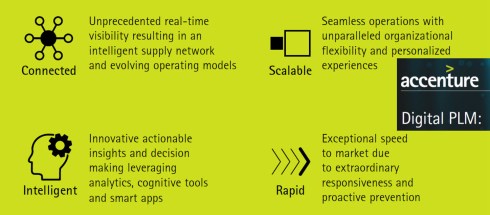

When I talk about modern PLM, it is no longer about a single system. Modern PLM starts from a business strategy implemented by a data-driven infrastructure. The strategy part remains a challenge at the board level: how do you translate PLM capabilities into business benefits – the WHY?

More on this challenge will be discussed later, as in our PLM community, most discussions are IT-driven: architectures, ontologies, and technologies – the WHAT.

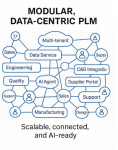

For the WHAT, there seems to be a consensus that modern PLM is based on a federated

I think this article from Oleg Shilovitsky, “Rethinking PLM: Is It Time to Move Beyond the Monolith?” AND the discussion thread in this post is a must-read. I will not quote the content here again.

After reading Oleg’s post and the comments, come back here.

The reason for this approach: It is a perfect example of the connected approach. Instead of collecting all the information inside one post (book?), the information can be accessed by following digital threads. It also illustrates that in a connected environment, you do not own the data; the data comes from accountable people.

Building such a modern infrastructure is challenging when your company depends mainly on its legacy—the people, processes and systems. Where to change, how to change and when to change are questions that should be answered at the top and require a vision and evolutionary implementation strategy.

A company should build a layer of connected data on top of the coordinated infrastructure to support users in their new business roles. Implementing a digital twin has significant business benefits if the twin is used to connect with real-time stakeholders from both the virtual and physical worlds.

But there is more than digital threads with real-time data. On top of this infrastructure, a company can run all kinds of modeling tools, automation and analytics. I noticed that in our PLM community, we might focus too much on the data and not enough on the importance of combining it with a model-based business approach. For more details, read my recent post: Model-based: the elephant in the room.

Again, there are no quotes from the article; you know how to dive deeper into the connected topic.

Despite the considerable legacy pressure, there are already companies implementing a coordinated and connected approach. An excellent description of a potential approach comes from Yousef Hooshmand’s paper: From a Monolithic PLM Landscape to a Federated Domain and Data Mesh.

You might recognize modern PLM thinking when people talk about the nearest source of truth and the single source of change.

Is Intelligent PLM the next step?

So far in this article, I have not mentioned AI as the solution to all our challenges. I see an analogy here with the introduction of the smartphone. 2008 was the moment that platforms were introduced, mainly for consumers. Airbnb, Uber, Amazon, Spotify, and Netflix have appeared and disrupted the traditional ways of selling products and services.

The advantage of these platforms is that they are all created data-driven, not suffering from legacy issues.

In our PLM domain, it took more than 10 years for platforms to become a topic of discussion for businesses. The 2015 PLM Roadmap/PDT conference was the first step in discussing the Product Innovation Platform – see my The Weekend after PDT 2015 post.

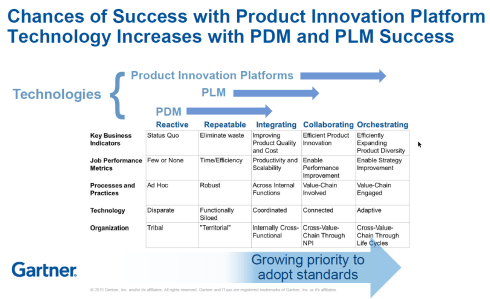

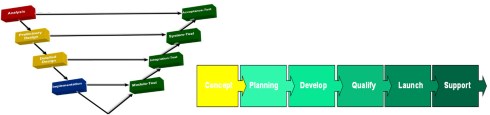

At that time, Peter Bilello shared the CIMdata perspective, Marc Halpern (Gartner) showed my favorite positioning slide (below), and Martin Eigner presented, according to my notes, this digital trend in PLM in his session: “What becomes different for PLM/SysLM?”

2015 Marc Halpern – the Product Innovation Platform (PIP)

While concepts started to become clearer, businesses mainly remained the same. The coordinated approach is the most convenient, as you do not need to reshape your organization. And then came the LLMs that changed everything.

Suddenly, it became possible for organizations to unlock knowledge hidden in their company and make it accessible to people.

Without drastically changing the organization, companies could now improve people’s performance and output (theoretically); therefore, it became a topic of interest for management. One big challenge for reaping the benefits is the quality of the data and information accessed.

I will not dive deeper into this topic today, as Benedict Smith, in his article Intelligent PLM – CFO’s 2025 Vision, did all the work, and I am very much aligned with his statements. It is a long read (7000 words) and a great starting point for discovering the aspects of Intelligent PLM and the connection to the CFO.

You might recognize intelligent PLM thinking when people and AI agents talk about the most likely truth.

Conclusion

Are you interested in these topics and their meaning for your business and career? Join me at the Share PLM conference, where I will discuss “The dilemma: Humans cannot transform—help them!” Time to work on your dreams!

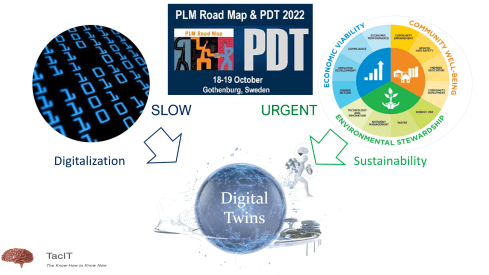

Four years ago, I wrote a series of posts with the common theme: The road to model-based and connected PLM. I discussed the various aspects of model-based and the transition from considering PLM as a system towards considering PLM as a strategy to implement a connected infrastructure.

Since then, a lot has happened. The terminology of Digital Twin and Digital Thread has become better understood. The difference between Coordinated and Connected ways of working has become more apparent. Spoiler: You need both ways. And at this moment, Artificial Intelligence (AI) has become a new hype.

Many current discussions in the PLM domain are about structures and data connectivity, Bills of Materials (BOM), or Bills of Information (BOI) combined with the new term Digital Thread as a Service (DTaaS) introduced by Oleg Shilovitsky and Rob Ferrone. Here, we envision a digitally connected enterprise, based on connected services.

A lot can be explored in this direction. Also relevant are Lionel Grealou’s article in Engineering.com, RIP SaaS, long live AI-as-a-service, and the follow-up discussions related to this topic. I chimed in with Data, Processes and AI.

However, we also need to focus on the term model-based or model-driven. When we talk about models currently, Large Language Models (LLM) are the hype, and when you are working in the design space, 3D CAD models might be your first association.

There is still confusion in the PLM domain: what do we mean by model-based, and where are we progressing with working model-based?

A topic I want to explore in this post.

It is not only Model-Based Definition (MBD)

Before I started The Road to Model-Based series, there was already the misunderstanding that model-based means 3D CAD model-based. See my post from that time: Model-Based – the confusion.

Model-Based Definition (MBD) is an excellent first step in understanding information continuity, in this case primarily between engineering and manufacturing, where the annotated model is used as the source for manufacturing.

In this way, there is no need for separate 2D drawings with manufacturing details, reducing the extra need to keep the engineering and manufacturing information in sync and, in addition, reducing the chance of misinterpretations.

MBD is a common practice in aerospace and particularly in the automotive industry. Other industries are struggling to introduce MBD, either because the OEM is not ready or willing to share information in a different format than 3D + 2D drawings, or because their suppliers consider MBD too complex compared to their current document-driven approach.

In its current practice, we must remember that MBD is part of a coordinated approach.

Companies exchange technical data packages based on potential MBD standards (ASME Y14.47 / ISO 16792, but also JT and 3D PDF). It is not yet part of the connected enterprise, but it connects engineering and manufacturing using the 3D Model as the core information carrier.

As I wrote, learning to work with MBD is a stepping stone in understanding a modern model-based and data-driven enterprise. See my 2022 post: Why Model-based Definition is important for us all.

To conclude on MBD, Model-based definition is a crucial practice to improve collaboration between engineering, manufacturing, and suppliers, and it might be parallel to collaborative BOM structures.

And it is transformational as the following benefits are reported through ChatGPT:

- Up to 30% faster in product development cycles due to reduced need for 2D drawings and fewer design iterations. Boeing reported a 50% reduction in engineering change requests by using MBD.

- Companies using MBD see a 20–50% reduction in manufacturing errors caused by misinterpretations of 2D drawings. Caterpillar reported a 30% improvement in first-pass yield due to better communication between design and manufacturing teams.

- MBD can reduce product launch time by 20–50% by eliminating bottlenecks related to traditional drawings and manual data entry.

- 20–30% reduction in documentation costs by eliminating or reducing 2D drawings. Up to 60% savings on rework and scrap costs by reducing errors and inconsistencies.

Over five years, Lockheed Martin achieved a $300 million cost savings by implementing MBD across parts of its supply chain.

MBSE is not a silo.

For many people, Model-Based Systems Engineering (MBSE) seems to be something not relevant to their business. Or they see it as a discipline for a small group of specialists who conduct systems engineering practices, not in the traditional document-driven V-shape approach, but in an iterative process following the V-shape while using models to predict and verify assumptions.

And what is its value when connected to a PLM environment?

A quick heads up – what is a model

A model is a simplified representation of a system, process, or concept used to understand, predict, or optimize real-world phenomena. Models can be mathematical, computational, or conceptual.

We need models to:

- Simplify Complexity – Break down intricate systems into manageable components and focus on the main components.

- Make Predictions – Forecast outcomes in science, engineering, and economics by simulating behavior – Large Language Models, Machine Learning.

- Optimize Decisions – Improve efficiency in various fields like AI, finance, and logistics by running simulations and finding the best virtual solution to apply.

- Test Hypotheses – Evaluate scenarios without real-world risks or costs, for example, a virtual crash test.

It is important to realize that models are only as accurate as the data elements they are running on – every modeling practice has a certain need for base data, be it measurements, formulas, or statistics.
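To make the dependency between model accuracy and base data concrete, here is a minimal Python sketch. It assumes a deliberately simple, hypothetical model (a spring obeying force = k × extension) and invented measurements; it only shows how a single flawed measurement shifts the fitted parameter and therefore every prediction made with the model.

```python
def fit_stiffness(extensions, forces):
    """Least-squares fit of k in force = k * extension (line through the origin)."""
    numerator = sum(x * f for x, f in zip(extensions, forces))
    denominator = sum(x * x for x in extensions)
    return numerator / denominator


# Invented measurement data for illustration only.
extensions = [1.0, 2.0, 3.0, 4.0]
clean_forces = [2.0, 4.0, 6.0, 8.0]    # consistent with k = 2
flawed_forces = [2.0, 4.0, 6.0, 12.0]  # last measurement is wrong

k_clean = fit_stiffness(extensions, clean_forces)
k_flawed = fit_stiffness(extensions, flawed_forces)

# Use both fitted models to predict the force at an extension of 10.
prediction_clean = k_clean * 10.0
prediction_flawed = k_flawed * 10.0

print(f"k from clean data:  {k_clean:.3f}, prediction: {prediction_clean:.2f}")
print(f"k from flawed data: {k_flawed:.3f}, prediction: {prediction_flawed:.2f}")
```

The model itself is identical in both cases; only the base data differs, and the error in the data carries through directly into the prediction.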

I watched and listened to the interesting podcast below, where Jonathan Scott and Pat Coulehan discuss this topic: Bridging MBSE and PLM: Overcoming Challenges in Digital Engineering. If you have time – watch it to grasp the challenges.

The challenge in an MBSE environment is that it is not a single tool with a single version of the truth; rather, it is a federated environment of shared datasets that are interpreted by modeling applications to understand and define the behavior of a product.

In addition, an interesting article from Nicolas Figay might help you understand the value for a broader audience. Read his article: MBSE: Beyond Diagrams – Unlocking Model Intelligence for Computer-Aided Engineering.

Ultimately, and this is the agreement I found at many PLM conferences, MBSE practices are the foundation for downstream processes and operations.

We need a data-driven modeling environment to implement Digital Twins, which can span multiple systems and diagrams.

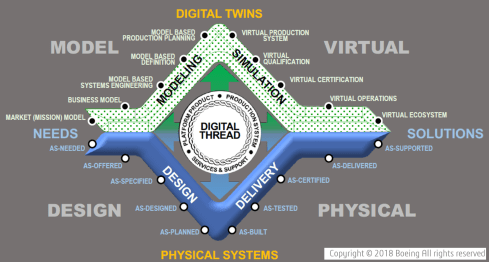

In this context, I like the Boeing diamond presented by Don Farr at the 2018 PLM Roadmap EMEA conference. It is a model view of a system, where between the virtual and the physical flow, we will have data flowing through a digital thread.

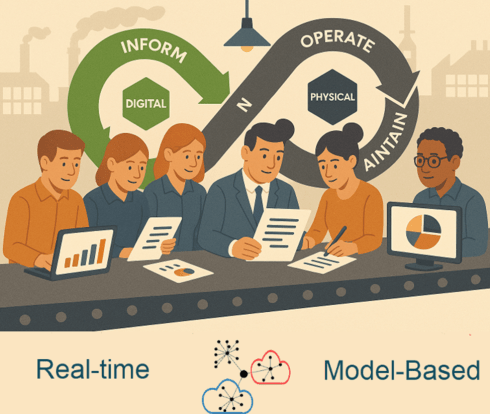

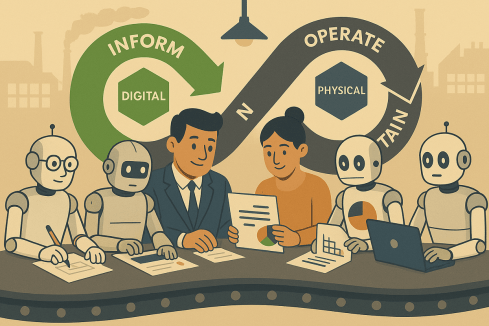

Where this image describes a model-based, data-driven infrastructure to deliver a solution, we can, in addition, apply the DevOps approach to the bigger picture for solutions in operation, as depicted by the PTC image below.

Model-based: the foundation of digital twins

To conclude on MBSE, I hope it is clear why I promote considering MBSE not only as the environment to conceptualize a solution but also as the foundation for a digital enterprise where information is connected through digital threads and AI models (**new**).

The data borders between traditional system domains will disappear. The "single source of change and nearest source of truth" paradigm and this post, The Big Blocks of Future Lifecycle Management, from Prof. Dr. Jörg Fischer, are all about data domains.

However, having accessible data, using all kinds of modern data sources and tools, is necessary to build digital twins – either to simulate and predict a physical solution or to analyze a physical solution and, based on the analysis, either adjust the solution or improve your virtual simulations.

Digital Twins at any stage of the product life cycle are crucial to developing and maintaining sustainable solutions, as I discussed in previous lectures. See the image below:

Conclusion

Data quality and architecture are the future of a modern digital enterprise – the building blocks. And there is a lot of discussion related to Artificial Intelligence. This will only work when we master the methodology and practices related to a data-driven and sustainable approach using models. MBD is not new, and MBSE is perhaps still new; both are building blocks for a model-based approach. Where are you in your lifecycle?

Again, a “The weekend after …” post related to my favorite event to which I have contributed since 2014.

Expectations were high this time from my side, in particular because we would have a serious discussion related to connected digital threads and federated PLM.

More about these topics in my post next week as all content is not yet available for sharing.

The conference was sold out this time, and during the breaks, you had to navigate through the people to find your network opportunities. Also, the participation of the main PLM players as sponsors illustrated that everyone wanted to benefit from this opportunity to meet and learn from their industry peers.

Looking back to the conference, there were two noticeable streams.

- The stream where people share their current PLM experiences. Traditionally, the A&D action groups moderated by CIMdata are part of this stream. This part I will cover in this post.

- There were forward-looking presentations related to standards, ontologies, and federated PLM—all with an AI flavor. This part I will cover in my next post(s).

The connection between all these sessions was the Digital Thread. The conference’s theme was: The Digital Thread in a Heterogeneous, Extended Enterprise Reality. Let’s start the review with the highlights from the first stream.

Digital Thread: Why Should We Care?

As usual, Peter Bilello from CIMdata kicked off the conference by setting the scene. Peter started by clarifying the two definitions of the Digital Thread.

- The first is a communication framework that allows a connected data flow and integrated view of an asset’s data (i.e., its Digital Twin) throughout its lifecycle across traditionally siloed functional perspectives.
  In my terminology, the connected digital thread.

- The second is a network of connected information sources around the product lifecycle supporting traceability and decision-making.
  In my terminology, the coordinated digital thread, which is the most straightforward digital thread to achieve.
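To make the second definition more tangible, here is a minimal sketch of traceability over a network of linked information sources. The record identifiers, the system prefixes and the `trace` function are all invented for this illustration; they do not come from Peter's presentation or from any specific tool.

```python
from collections import deque

# Hypothetical trace links between records held in different systems.
# Every "system:identifier" string below is an assumption for this sketch.
LINKS = {
    "REQ:R-12": ["PLM:PART-100"],
    "PLM:PART-100": ["ERP:MAT-100", "SIM:RUN-7"],
    "ERP:MAT-100": ["MES:ORDER-55"],
    "SIM:RUN-7": [],
    "MES:ORDER-55": [],
}


def trace(start, links):
    """Return every record reachable from start, in breadth-first order."""
    seen = [start]
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for target in links.get(current, []):
            if target not in seen:
                seen.append(target)
                queue.append(target)
    return seen


# Which downstream records are affected when requirement R-12 changes?
print(trace("REQ:R-12", LINKS))
```

The data stays in its own system; only the links are followed, which is why I consider this the more straightforward thread to achieve.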

Peter recommends starting a digital thread by connecting at the beginning of product conceptualization, creating an environment where one can analyze the performance of the product portfolio and the product features and capabilities that need to be planned or how they perform in the field.

In addition, when defining the products, connect them with regulatory requirement databases, as these contain must-have requirements. This is a topic I addressed in my session too. Besides the existing regulatory requirements, it is expected that in the upcoming years, due to environmental regulations, these requirements will increase, and it will be necessary to have them integrated with your digital thread.

Digital Threads require data governance and are the basis for the various digital twins. Peter discussed the multiple applications of the digital twin, primarily a relation between a virtual asset and a physical asset, except in the early concept phase.

The digital thread is still in the early phase of implementation at companies. A CIMdata survey showed that companies still focus primarily on implementing traditional PDM capabilities, although as the image above shows, there is a growing interest in short-term digital twin/thread implementations.

People, Process & Technology: The Pillars of Digital Transformation Success

The second keynote was from Christine McMonagle, Director of Digital Engineering Systems at Textron Systems, a services and products supplier for the Aerospace and Defense industry. Christine leads the digital evolution in Textron Systems and presented nicely how a digital transformation should start from the people. Traditionally, this industry has enough budget on the OEM level, and therefore companies will not take a revolutionary approach when it comes to digital transformation.

Having your people at all levels involved and making them understand the need for change is crucial. A change does not happen top-down. You must educate people and understand what is possible and achievable to change – in the right direction. One of her concluding slides highlights the main points.

In the Q&A after Christine’s session, there was an interesting question related to the involvement of Human Resources (HR) in this project. There was a laugh that said it all – as in most companies, HR is not focusing on organizational change; they focus more on operational issues – the Human is considered a Resource.

Between the regular sessions, there were short sessions in which the sponsors Altium, Contact Software, Dassault Systemes, ESI, inensia, Modular Management, PTC, SAP, Share PLM and Sinequa could pitch their value offering.

The Share PLM session, shortly after Christine’s presentation, was a nice continuation of the focus on people. I loved the Share PLM image to the left explaining why people do not engage with our dreams.

Learn how LEONI is achieving Digital Continuity in the Automotive Industry.

Tobias Bauer, head of Product Data Standardization at LEONI, talked about their FLOW project. FLOW is an acronym for Future Leoni Operating World. LEONI, well-known in the automotive industry, produces cable and network solutions, including cable harnesses.

Recently, it has gone through a serious financial crisis and a restructuring, which always makes a “visionary” PLM project challenging. Tobias mentioned that after disappointing engagements with consultancy firms, they decided on a bottom-up approach to analyze existing processes using BPML. They agreed on a to-be state, fixing bottlenecks and streamlining the flow of information.

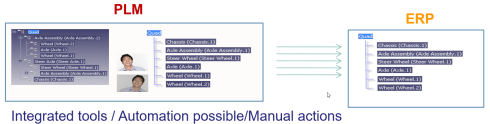

Tobias presented a smooth product data flow between their PLM system (PTC Windchill) and ERP (SAP S/4 HANA), clearly stating that the PLM system has become the controlled source of managing product changes.

Their key achievements reported so far were:

- faster BOM creation and routing (approx. 10x faster – from 2-3 days to ¼ day),

- better data consistency (fewer manual steps)

- complete traceability between the systems with PLM as the change management backbone.

The last point I would call the coordinated Digital Thread. The image below shows their current IT landscape in a simplified manner.

This solution might seem obvious for neutral PLM academics or experts, but it is an achievement to do this in an environment with SAP implemented. The eBOM-mBOM discussion is one of the most frequently held discussions – sometimes a battle.

Often, companies use their IT systems first and listen to the vendor’s experts to build integrations instead of starting from the natural business flow of information.

Aerospace & Defense Action groups outcomes

As usual, several Aerospace & Defense (A&D) action groups reported their progress during this conference. The A&D action groups are facilitated by CIMdata, and per topic, various OEMs and suppliers in the A&D industry study and analyze a particular topic, often inviting software vendors to demonstrate and discuss their capabilities with them.

Their activities and reports can be found on the A&D PLM Action page here. In the remainder of this post, I will briefly share the ones presented. For a real deep dive into the topics, I recommend finding the proceedings per topic on the A&D action page.

The Promise and Reality of the Digital Thread

James Roche from CIMdata presented insights from industry research on The Promise and Reality of the Digital Thread. A total of 90 persons completed an in-depth survey about the status and implementation of digital thread concepts in their company. It is clear that the digital thread is still in its early days in this industry, and it is mainly about the coordinated digital thread. The image below reflects the highlights of the survey.

A&D Industry Digital Twin and Digital Thread Standards

Robert Rencher from Boeing explained the progress of their Digital Twin/Digital Thread project, where they had investigated the applicable standards to support a Digital Twin/Digital Thread (Phase 4 out of 7 currently planned). The image below shows that various standards may apply depending on business perspectives.

Their current findings are:

- Digital twin standards overlap, which is most likely a function of standards bodies representing their respective standards as an ongoing development from a historical perspective.

- The limited availability of mature digital twin/thread standards requires greater attention by standards organizations.

- The concept of the digital twin continues to evolve. This dynamic will be a challenge to standards bodies.

- The digital twin and the digital thread are distinct aspects of digital transformation. The corresponding digital twin and digital thread standards will be distinctly different.

- Coordinating the development of the respective standards between the digital twin/thread is needed.

- The digital twin’s organization, definition, and enablement depend on data and information provided by the digital thread.

Roadmap for Enabling Global Collaboration

Robert Gutwein (Pratt & Whitney Canada) and Agnes Gourillon-Jandot (Safran Aircraft Engines) reported their progress on the Global Collaboration project. Collaboration is challenging because exchange methods can vary, exchanged information has to be validated, and the exchange of information has to be governed in the context of IP protection.

One of the focal points was to introduce an approach to define standardized supplier agreements that anticipate modern model-based exchanges and collaboration methods.

Robert & Agnes presented the 8-step guideline for the aerospace industry in specific terms, explicitly mentioning the ISO 44001 standard as being generic for all industries. An impression of the eight steps and sub-steps can be found below:

The 8-step approach will be supported by a 3rd-party Collaboration Management System (CMS app), which is not mandatory but recommended for use. When an interaction depends on a specific tool, it cannot become an ISO standard. The purpose of the methodology and app is to help participants ensure that the collaboration between stakeholders contains all the necessary steps and people.

Model-based OEM/Supplier Collaboration Needs in Aviation Industry

Hartmut Hintze, working at Airbus Operations, presented the latest findings of the MBSE Data Interoperability working group and presented the model-based OEM/Supplier collaboration requirements and standards that need to be supported by the PLM/MBSE solution providers in the future. This collaboration goes beyond sharing CAD models, as you can see from the supplier engagement framework below:

As there are no standards-based tools, their first focus was looking into methodologies for model and behavior exchanges based on use cases. The use cases are then used to verify the state-of-the-art abilities of the various tools. At this moment, there is a focus on SysML V2 as a potential game-changer due to its new API support. As a relative novice on SysML, I cannot explain this topic in simpler words. I recommend that experts visit their presentations on the AD PAG publications page here.

Conclusions

The theme of the conference was related to the Digital Thread – and as you will discover, it is valid for everyone. Learn to see the difference between the coordinated Digital Thread and the connected Digital Thread. This time, there was a lot of information about the Aerospace and Defense Action Groups (AD PAG), which are a fundamental part of this conference. The A&D industry has always been leading in advanced PLM concepts. However, more advanced concepts will come in my next post when touching the connected Digital Thread in the context of federated PLM and, let’s not forget, AI.

During May and June, I wrote a guest chapter for the next edition of John Stark’s book Product Lifecycle Management (Volume 2): The Devil is in the Details.

The book is considered a standard in the academic world when studying aspects of PLM.

The table of contents, available through the above link, shows how broad PLM is in its full scope. I wrote about it recently: PLM is Complex (and we have to accept it?), and Roger Tempest and others are still fighting to get the job of PLM Professional recognized: Associate Yourself With Professional PLM.

To make the scope broader, John invited me to write a chapter about PLM and Sustainability, which is a topical subject in many organizations. As sustainability is my dedicated topic in the PLM Global Green Alliance (PGGA) core team, I was happy to accept this challenge.

This activity is challenging because writing a chapter on a current topic might make it outdated soon. For the same reason, I never wanted to write a PLM book as I wrote in my 2014 post: Did you notice PLM is changing?

The book, with the additional chapter, will be available later this year. I want to share with you in this post the topics I addressed in this chapter. Perhaps relevant for your organization or personal interests. Also, I am looking forward to learning if I missed any topics.

Introduction

The chapter starts with defining the context. PLM is considered a strategy supported by a connected IT infrastructure, and for the definition of sustainability, I refer to the relevant SDGs as described on our PGGA theme page: PLM and Sustainability

Next, I discuss two major concepts inextricably connected with sustainability.

The Circular Economy

On a planet with limited resources and still a growing consumption of raw materials, we need to follow the concepts of the circular economy in our businesses and lives. The circular economy section addresses mainly the hardware side of the butterfly as, here, PLM practices have the most significant impact.

The circular economy requires collaboration among various stakeholders, including businesses, governments and consumers. It involves rethinking production processes and establishing new consumption patterns. Policies and regulations will push for circular economy patterns, as seen in the following paragraphs.

Systems Thinking

A significant change in bringing products to the market will be the need to change how we look at our development processes. Historically, many of these processes were linear and only focused on time to market, cost and quality. Now, we have to look into other dimensions, like environmental impact, usage and impact on the planet. As I wrote in the past: Systems Thinking – a must-have skill in the 21st century?

Systems Thinking is a cognitive approach that emphasizes understanding complex problems by considering interconnections, feedback loops, and emergent properties. It provides a holistic perspective and explores multiple viewpoints.

Systems Thinking guides problem-solving and decision-making and requires you to treat a solution with a mindset of a system interacting with other systems.

Regulations

More sustainable products and services will be driven primarily by existing and upcoming regulations. In this section, I refer to the success of the CFC (chlorofluorocarbon) emission reduction, which is slowly fixing the hole in the ozone layer. Current regulations like WEEE, RoHS and REACH are already relevant for many companies, and compliance with these regulations is a good exercise for more stringent regulations related to carbon emissions and upcoming regulations related to the Digital Product Passport.

Making regulatory compliance a part of the concept phase ensures no late changes are needed to become compliant, saving time and costs. In addition, handling regulatory compliance as much as possible through a data-driven approach reduces the overhead required to prove regulatory compliance. Both topics are part of a PLM strategy.

In this context, see Lionel Grealou’s article 5 Brand Value Benefits at the Intersection of Sustainability and Product Compliance. The article has also been shared in our PGGA LinkedIn group.

Business

On the business side, the Greenhouse Gas Protocol is explained: how companies will have to report their Scope 1 and Scope 2 emissions and, ultimately, Scope 3 – see the image below for the details.

GHG reporting will help companies, investors and consumers decide where to prioritize and put their money.

Ultimately, companies have to be profitable to survive in their business. The ESG framework is relevant in this context as it will allow investors to invest not only based on short-term gains (as expected) but also on Environmental or Social parameters. There are a lot of discussions related to the ESG framework, as you might have read in Vincent de la Mar’s monthly newsletter, Sustainability & ESG Insights, which is also published in our PGGA group – a link below.

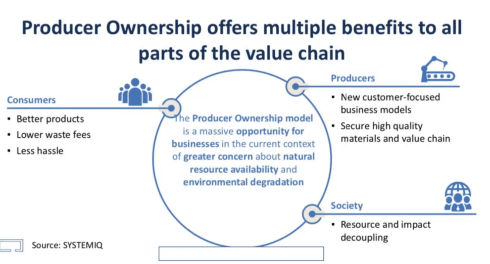

Besides ESG guidelines, there is also the drive by governments and consumers to push for a Product as a Service economy. Instead of owning products, consumers would pay for the usage of these products.

The concept is not new when considering lease cars, EV scooters, or streaming services like Spotify and Netflix. In the CIMdata PLM Roadmap/PDT Fall 2021 conference, we heard Kenn Webster explaining: In the future, you will own nothing & you will be happy.

Changing the business to a Product as a Service is not something done overnight. It requires repairable, upgradeable products. And on the business side, it requires a connected ecosystem of all stakeholders – the manufacturer, the finance company, and the operating entities.

Digital Transformation

All the subjects discussed before require real-time reporting and analysis combined with data access to compliance-related databases. More on this in the section related to Life Cycle Assessment. As I discussed last year at several conferences, a sustainability initiative starts with data-driven and model-based approaches during the concept phase and continues when manufacturing and operating (connected) products in the field. You can read the entire story here: Sustainability and Data-Driven PLM – the Perfect Storm.

Life Cycle Analysis

Special attention is given in this chapter to Life Cycle Analysis, which seems to be a popular topic among PLM vendors. Here, they can provide tools to make a lifecycle assessment, and you can read an impression of these tools in a guest blog from Roger L. Franz titled PLM Tools to Design for Sustainability – PLM Green Global Alliance.

However, Life Cycle Analysis is not that simple. The ISO 14040 framework describes how, with the right goals and scope in mind, you can perform an LCA in which the Product Category Rules (PCRs) enable companies to compare their products with others.

PCRs include the description of the product category, the goal of the LCA, functional units, system boundaries, cut-off criteria, allocation rules, impact categories, information on the use phase, units, calculation procedures, requirements for data quality, and other information on the lifecycle Inventory Phase.

So be aware there is more to do than installing a tool.

Digital Twin

This section describes the importance of implementing a digital twin for the design phase, allowing companies to develop, test and analyze their products and services first virtually. Trade-off studies on virtual products are much cheaper, and when they are done in a data-driven, model-based environment, it will be the most efficient environment. In my terminology, setting up such a collaboration environment might be considered a System of Engagement.

The second crucial digital twin mentioned is the digital twin from a product in operation where performance can be monitored and usage can be optimized for a minimal environmental impact. Suppose a company is able to create a feedback loop between its products in the field and its product innovation platform. In that case, it can benchmark its design models and update the product behavior for better performance.

The manufacturing digital twin is also discussed in the context of environmental impact, as choosing the right processes and resources can significantly affect scope 3 emissions.

The chapter finishes with the story of a fictitious company, WePack, where we can follow the impact and implementations of the topics described in this chapter.

Conclusion

As I described in the introduction, the topic of PLM and Sustainability is relatively new and constantly evolving. What do you think? Did I miss any dimensions?

Feel free to contribute to our PLM Global Green Alliance LinkedIn group.

I am writing this post because one of my PLM peers recently asked me this question: “Is the BOM losing its position?” He was in discussion with another colleague who told him:

“If you own the BOM, you own the Product Lifecycle”.

This statement made me think of a recent post from Jan Bosch: Product Development fallacy #8: the bill of materials has the highest priority.

Software is increasingly becoming an essential part of the final product, and Jan wrote this article from his expertise in software development. I recommend reading the full post (4 min read) and then browsing through the comments.

If you cannot afford these 10 minutes, here is my favorite quote from the article:

An excessive focus on the bill of materials leads to significant challenges for companies that are undergoing a digital transformation and adopting continuous value delivery. The lack of headroom, high coupling and versioning hell may easily cause an explosion of R&D expenditure over time.

Where did the BOM focus come from? A historical overview related to the rise (and fall) of the BOM.

In the beginning, there was the drawing.

Before the era of computers, there was “THE drawing”, describing assemblies, subassemblies or parts. And on the drawing, you can find the parts list if relevant. This parts list was the first Bill of Material, describing the parts/materials shown on the drawing.

Next came MRP/ERP

The introduction of the MRP system (Material Requirements Planning) was the first step where, by using computers, people could collect the material requirements in one system as data and process them.

Entering new materials/parts described on drawings was still a manual process, as well as referring to existing parts on the drawing. Reuse of parts was a manual process based on individual knowledge.

In the nineties, MRP evolved into ERP (Enterprise Resource Planning), which included the MRP part and added resource and manufacturing planning and financial reporting.

The ERP system became the most significant IT system, the execution system of the company. As it was the first enterprise system implemented, it was the first moment we learned about implementation challenges – people change and budget overruns. However, as the ERP system brought visibility to the company’s execution, it became a “must-have” system for management.

The introduction of mainstream 2D CAD did not affect the company’s culture so much. Drawings became electronic drawings, and the methodology of the parts list on the drawing remained.

Sometimes the interaction with the MRP/ERP system was enhanced by an interface – sending the drawing BOM to ERP. The advantage of the interface: no manual transfer of data reducing typos and BOM errors. The disadvantages at that time: relatively expensive (connectivity between systems was a challenge) and mostly one direction.

And then there was PDM.

In parallel with the introduction of ERP systems, mainstream 3D CAD systems became affordable, particularly SolidWorks, Solid Edge and Inventor. These 3D CAD systems allow sharing of parts and assemblies in different products, and the PDM database was the first aid to support part reuse, versioning and standardization.

By extracting the parts from the assemblies and subassemblies, it was possible to generate a BOM structure in the PDM system to be transferred or typed into the ERP system. We did not talk about EBOM or MBOM then, as there was only one BOM in the ERP system, and the PDM system was a tool to feed the ERP system.
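As an illustration of this extraction step, below is a minimal sketch that walks an assembly structure and rolls it up into a parts list with total quantities, one simple form of a BOM. The assembly data, the part names and the `flatten` function are assumptions made up for this example; a real PDM system would read the structure from the CAD assemblies.

```python
from collections import Counter

# Hypothetical assembly structure: each assembly maps to (child, quantity) pairs.
# Anything that is not a key in this dict is treated as a leaf part.
ASSEMBLIES = {
    "BIKE": [("FRAME", 1), ("WHEEL", 2)],
    "WHEEL": [("RIM", 1), ("SPOKE", 32), ("TIRE", 1)],
}


def flatten(item, assemblies, multiplier=1, totals=None):
    """Roll up the leaf parts below item, multiplying quantities per level."""
    if totals is None:
        totals = Counter()
    for child, qty in assemblies.get(item, []):
        if child in assemblies:
            # Subassembly: descend and carry the accumulated quantity along.
            flatten(child, assemblies, multiplier * qty, totals)
        else:
            totals[child] += multiplier * qty
    return totals


print(dict(flatten("BIKE", ASSEMBLIES)))
```

The sketch ignores versions, effectivity and non-modeled items, which is where the real work in a PDM-to-ERP transfer usually sits.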

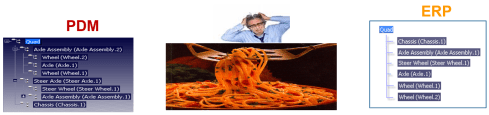

Many companies still base their processes on this approach. ERP (read SAP nowadays) is the central execution system, and PDM is an external system. You might remember the story and image from my previous post about people, processes and tools. The bad practice example: Asking the ERP system to provide a part number when starting to design a part.

And then products started to change.

In the early 2000s, I worked with SmarTeam to define the E&E (Electronics and Electrical) template. One of the new concepts was to synchronize all design data coming from different disciplines to a single BOM structure.

It was the time we started to talk about the EBOM: a type of BOM, based on parts, that served as the structure to consolidate all the design data.

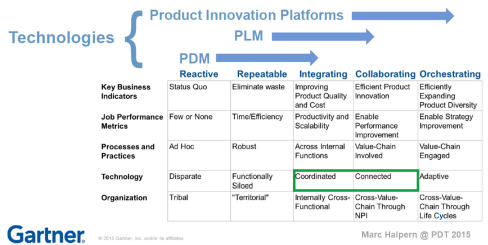

Most of the time, the EBOM reflects the design intent in logical groups. Sending the relevant parts in the correct order to the ERP system was a favorite, and expensive, customization for service providers. How do you transfer an engineering BOM view to an ERP system that only understands the manufacturing view?

Note: not all ERP systems have the data model to differentiate between engineering parts and manufacturing parts.

The image below illustrates the challenge and the customer’s perception.

The automated link between the design side (EBOM) and manufacturing side (MBOM) was a mission impossible – too many exceptions for the (spaghetti) code.

And then came the MBOM.

The identified issues connecting PDM and ERP led to the concept of implementing the MBOM in the PLM system. The MBOM in PLM is one of the characteristics of a PLM implementation compared to a PDM implementation. In a traditional PLM system, there is an interaction and connection between the EBOM and MBOM. EBOM parts should end up as MBOM parts. This interaction can be supported by automation; however, as both structures live in the same system, manual changes remain possible.
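The rule that EBOM parts should end up as MBOM parts can be supported by a simple reconciliation check. The sketch below is only an illustration under my own assumptions: the `EBOM` and `MBOM` dictionaries, the station names and the `reconcile` function are hypothetical, and a real PLM system will use a richer data model.

```python
def reconcile(ebom, mbom):
    """Return the parts whose EBOM quantity differs from the MBOM total.

    ebom: dict of part -> quantity (design view)
    mbom: dict of manufacturing step -> dict of part -> quantity
    """
    # Sum the consumed quantity per part over all manufacturing steps.
    mbom_totals = {}
    for step_parts in mbom.values():
        for part, qty in step_parts.items():
            mbom_totals[part] = mbom_totals.get(part, 0) + qty

    issues = {}
    for part in sorted(set(ebom) | set(mbom_totals)):
        designed = ebom.get(part, 0)
        planned = mbom_totals.get(part, 0)
        if designed != planned:
            issues[part] = (designed, planned)
    return issues


# Hypothetical example data.
EBOM = {"frame": 1, "rim": 2, "spoke": 64, "tire": 2}
MBOM = {
    "wheel-assembly": {"rim": 2, "spoke": 64, "tire": 2},
    "final-assembly": {"frame": 1, "grease": 1},
}

issues = reconcile(EBOM, MBOM)
print(issues)
```

In this example, every EBOM part is consumed in the MBOM, and the only difference reported is the manufacturing-only item (grease), which a manufacturing engineer can then accept or correct manually.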

The MBOM structure in PLM could then be the information structure to transfer to the ERP system. However, there is more, as Jörg W. Fischer wrote in his provocative post Die MBOM muss weg (The MBOM must go). He rightly points out (in German) that the MBOM is not a structure on its own but a combination of different views based on Assembly Drawings, Process Planning and Material Requirements.

His conclusion:

Calling these structures MBOM is trying to squeeze all three structures into one. That usually doesn’t work and then leads to much more emotional discussions in the project. It also costs a lot of money. It is, therefore, better not to use the term MBOM at all.

And indeed, just having an MBOM in your PLM system might help you to prepare some of the manufacturing steps, the needed resources and parts. The MBOM result still has to be localized at the local plant where the manufacturing takes place. And here, the systems used are the ERP system and the MES system.

The main advantage of having the MBOM in the PLM system is the direct relation between specification and manufacturing intent, allowing manufacturing engineering to work collaboratively with engineering in the same environment.

- The first benefit is fewer iterations and a shorter time to production, thanks to early interaction and manufacturing involvement in the engineering process.

- The second benefit is: product knowledge is centralized in a single system. Consolidating your Product Knowledge in ERP does not make sense due to global localization and the missing capabilities to manage the iterative engineering processes on non-existing parts.

And then came the SBOM, the xBOM

Traditional PLM vendors and implementations kept using xBOM structures as placeholders for related specification data (mechanical designs, electrical, software deliverables, serialized products). Most of the time, related files.

And with this approach, talking about digital thread, PLM systems also touch on the concepts of Configuration Management.

I will not go into the details here but look at the two images by clicking on them and see a similar mindset.

It is about the traceability of information in structures and systems. These structures work well in a relatively static and linear product development and delivery environment, as illustrated below:

Engineering change and release processes are based on managing the changes in different structures from the left to the right.

And then came software!

Modern connected products are no longer mechanical products. The product’s functionality no longer depends on the mechanical properties but mainly on the embedded electronics and software used. For example, look at the mechanical design of a telecom transmission tower: its behavior mainly comes from non-mechanical components, and they can change over time. Still, the Bill of Material contains a lot of concrete and steel parts.

The ultimate example is comparing a Tesla (software on wheels) with a traditional car. For modern connected products, electronics and software need to be part of the solution. Software and electronics allow the product to be upgraded over time. Managing these products in the same manner as mechanical products is impossible or inefficient, and therefore threatens your company’s future business.

I requote Jan Bosch:

An excessive focus on the bill of materials leads to significant challenges for companies that are undergoing a digital transformation and adopting continuous value delivery. The lack of headroom, high coupling and versioning hell may easily cause an explosion of R&D expenditure over time.

The model-based, connected enterprise

I will not solve the puzzle of the future in this post. You can read my observations in my series: The road to model-based and connected PLM. We need a new infrastructure with at least two modes. One that still serves as a System of Record, storing information in a traditional manner, like a Bill of Materials for the static parts, as not everyone and everything can be connected.

In addition, we need various Systems of Engagement that enable close to real-time interaction between products (systems) and the relevant stakeholders for the engagement scope (multidisciplinary / consumers).

Digital twins are examples of such environments. Currently, these Systems of Engagement often work disconnected from the System of Record due to the lack of understanding of how to connect. (standard connectors? / OSLC?)

Our mission is to explore, as I wrote in my post Time to split PLM and drop our mechanical mindset.

And while I was finalizing this post, I again read a motivating post from Jan Bosch, meant for all of you working on understanding and pushing the digital transformation in your ecosystem.

The title: Be the protagonist of your life: 15 rules. A starting point for more to come.

Conclusion

The BOM is no longer the master of the product lifecycle when it comes to managing connected products, where functionality mainly depends on software. BOM structures with related documents are just one of the extracted baselines from a data-driven, connected enterprise. This traditional PLM infrastructure requires other, non-BOM-driven structures to represent the actual status of a virtual or physical product.

The BOM is not dead, but there is more ………

Your thoughts?

This year started for me with a discussion related to federated PLM. A topic that I highlighted as one of the imminent trends of 2022. A topic relevant for PLM consultants and implementers. If you are working in a company struggling with PLM, this topic might be hard to introduce in your company.

Before going into the discussion’s topics and arguments, let’s first describe the historical context.

The traditional PLM frame.

Historically, PLM was first framed as a system for engineering to manage its product data. So you could call it PDM first. After that, PLM systems were introduced and used to provide access to product data, upstream and downstream. The most common usage was the relation with manufacturing, leading to EBOM and MBOM discussions.

IT landscape simplification often led to an infrastructure of siloed solutions – PLM, ERP, CRM and later, MES. IT was driving the standardization of systems and defining interfaces between systems. System capabilities were leading, not the flow of information.

As many companies are still in this stage, I would call it PLM 1.0.

PLM 1.0 systems serve mainly as a System of Record for the organization, where disciplines consolidate their data in a given context, the Bills of Information. The Bill of Information then is again the place to connect specification documents, i.e., CAD models, drawings and other documents, providing a Digital Thread.

The actual engineering work is done with specialized tools, MCAD/ECAD, CAE, Simulation, Planning tools and more. Therefore, each person could work in their discipline-specific environment and synchronize their data to the PLM system in a coordinated manner.

However, this interaction is not easy for some of the end-users. For example, the usability of CAD integrations with the PLM system is constantly debated.

Many of my implementation discussions with customers were in this context. For example, if your products are relatively simple or your company is relatively small, the opinion is that the System of Record approach is overkill.

That’s why many small and medium enterprises do not see the value of a PLM backbone.

This could have been true until recently. However, the threats to this approach are digitization and regulations.

Customers, partners, and regulators all expect more accurate and fast responses on specific issues, preferably instantly. In addition, sustainability regulations might push your company to implement a System of Record.

PLM as a business strategy

For the past fifteen years, we have discussed PLM more as a business strategy implemented with business systems and an infrastructure designed for sharing. Therefore, I choose these words carefully to avoid overhanging the expression: PLM as a business strategy.

The reason for this prudence is that, in reality, I have seen many PLM implementations fail due to the ambiguity of PLM as a system or strategy. Many enterprises have previously selected a preferred PLM Vendor solution as a starting point for their “PLM strategy”.

One of the most neglected best practices.

In reality, this means there was no strategy but a hope that with this impressive set of product demos, the company would find a way to support its business needs. Instead of starting with people, then process and then tools to implement the strategy, most of the time companies started with the tools, trying to implement the processes and transform the people. That is not really the definition of business transformation.

In my opinion, this is happening because, at the management level, decisions are made based on financials.

Developing a PLM-related business strategy requires management understanding and involvement at all levels of the organization.

This is often not the case; middle management has to make the connection between the strategy and the execution. By design, however, middle management will not restructure the organization. By design, they will collect the input from the end users.

And it is clear what end users want – no disruption in their comfortable way of working.

Halfway conclusion:

Rebranding PLM as a business strategy has not really changed the way companies work. PLM systems remain a System of Record mainly for governance and traceability.

To understand the situation in your company, look at who is responsible for PLM.