Recently, I attended several events related to the various aspects of product lifecycle management; most of them were tool-centric, explaining the benefits and values of their products.

In parallel, I am working with several companies, assisting their PLM teams to make their plans understood by the upper management, which has always been my mission in the past.

However, nowadays, people working in the business feel more and more challenged and pained by not being able to respond adequately to upcoming business demands.

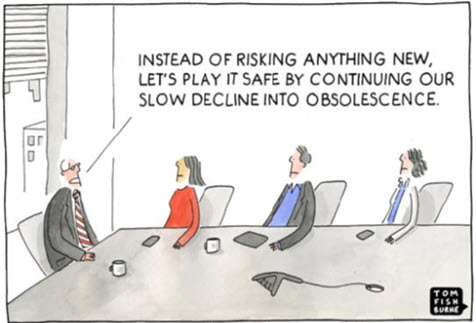

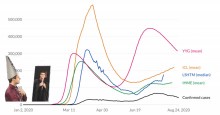

The image below has been shown so many times, and every time, the context becomes more relevant.

Too often, an evolutionary mindset with small steps is considered instead of looking toward the future and reasoning back for what needs to be done.

Let me share some experiences and potential solutions.

Don’t use the P** word!

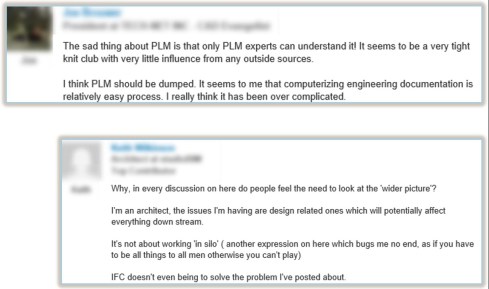

The title of this post is one of the most essential points to consider. By using the term PLM, the discussion is most of the time framed in a debate related to the purchase or installation of a system, the PLM system, which is an engineering tool.

PLM vendors, like Dassault Systèmes and Siemens, have recognized this, and the word PLM is no longer on their home pages.

They are now delivering experiences or digital industries software.

Other companies, such as PTC and Aras, broadened the discussion by naming other domains, such as manufacturing and services, all connected through a digital thread.

The challenge for all these software vendors is why a company would consider buying their products. A growing issue for them is also why a company would want to replace its existing PLM system with another one, as there is so much legacy.

For all of these vendors, success can come if champions inside the targeted company understand the technology and can translate its needs into their daily work.

Here, we meet the internal PLM team, which is motivated by the technology and wants to spread the message to the organization. Often this has no or limited success, as the value and the context they are considering are not understood or felt as urgent.

Lesson 1:

Don’t use the word PLM in your management messaging.

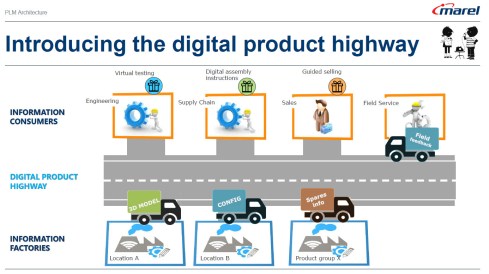

In some of the current projects I have seen, people talk about the digital highway or a digital infrastructure to clear this hurdle. For example, listen to the SharePLM podcast with Roger Kabo from Marel, who talks about their vision and digital product highway.

As soon as you use the word PLM, most people think about a (costly) system, as this is how PLM is framed. Engineering, like IT, is often considered a cost center, as money is made by manufacturing and selling products.

According to experts (CIMdata/Gartner), Product Lifecycle Management is considered a strategic approach. However, the majority of people talk about a PLM system. Of course, vendors and system integrators will speak about their PLM offerings.

To avoid this framing, first of all, try to explain what you want to establish for the business. The terms Digital Product Highway or Digital Infrastructure, for example, avoid thinking in systems.

Lesson 2:

Don’t tell your management why they need to reward your project – they should tell you what they need.

This might seem like a bit of strange advice; however, you have to realize that most of the time, people do not talk about the details at the management level. At the management level, there are strategies and business objectives, and you will only get attention when your proposal addresses the business needs. At the management level, there should be an understanding of the business need and its potential value for the organization. Next, analyzing the business changes and required tools will lead to an understanding of what value the PLM team can bring.

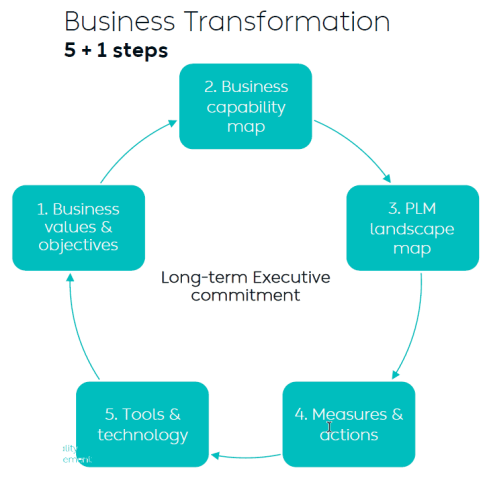

Yousef Hooshmand’s 5 + 1 approach illustrates this perfectly. It is crucial to note that long-term executive commitment is needed to have a serious project, and therefore, the connection to their business objective is vital.

Therefore, if you can connect your project to the business objectives of someone in management, you have the opportunity to get executive sponsorship. This is a crucial piece of advice you hear all the time when discussing successful PLM projects.

Lesson 3:

Alignment must come from within the organization.

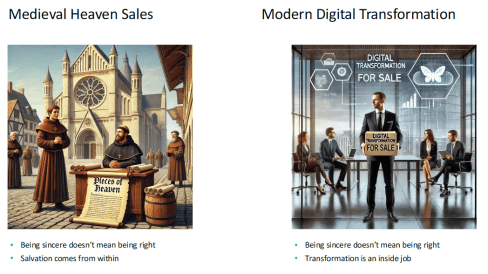

Last week, at the 20th anniversary of the Dutch PLM platform, Yousef Hooshmand gave the keynote speech starting with the images below:

On the left side, we see the medieval Catholic church sincerely selling salvation through indulgences, where, according to the legend, Luther bought hell, demonstrating that salvation comes from inside, not from external activities – read the legend here.

On the right side, we see the Digital Transformation expert sincerely selling digital transformation to companies. According to LinkedIn, there are about 1,170,000 people with the term Digital Transformation in their profile.

As Yousef mentioned, the intentions of these people can be sincere, but also, here, the transformation must come from inside (the company).

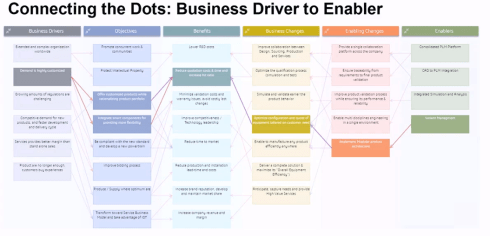

When I work with companies, I use the Benefits Dependency Network (BDN) methodology to create a storyboard for the company. The BDN then serves as a base for creating storylines that help people in the organization have a connected view starting from their perspective.

Companies might hire strategic consultancy firms to help them formulate their long-term strategy. This can be very helpful where, in the best case, the consultancy firm educates the company, but the company should decide on the direction.

In an older blog post, I wrote about this methodology, presented by Johannes Storvik at the Technia Innovation forum, and how it defines a value-driven implementation.

Dassault Systèmes and its partners use this methodology in their Value Engagement process, which is tuned to their solution portfolio.

You can also watch the webinar Federated PLM Webinar 5 – The Business Case for the Federated PLM, in which I explained the methodology used.

Lesson 4:

PLM is a business need, not an IT service

This lesson is essential for those who believe that PLM is still a system or an IT service. In some companies, I have seen that the (understaffed) PLM team is part of a larger IT organization. In this type of organization, the PLM team, as part of IT, is purely considered a cost center that is available to support the demand from the business.

The business usually focuses on incremental and economic profitability, less on transformational ways of working.

In this context, it is relevant to read Chris Seiler’s post How to escape the vicious circle in times of transformation?, in which he reflects on his 2002 MBA study, which is still valid for many big corporate organizations.

It is a long read, but it is gratifying if you are interested. It shows that PLM concepts should be discussed and executed at the business level. Of course, I read the article with my PLM-twisted brain.

The image above from Chris’s post could be a starting point for a Benefits Dependency Network diagram, expanded with Objectives, Business Changes and Benefits to fight this vicious downturn.

As PLM is no longer a system but a business strategy, the PLM team should be integrated into the business, potentially overseen by the CIO or CDO, as a CEO is usually not able to give this long-term executive commitment.

Lesson 5:

Educate yourselves and your management

The last lesson is crucial: due to improving technologies like AI and, earlier, the concept of the digital twin, traditional ways of coordinated working will become inefficient and redundant.

However, before jumping on these new technologies, everyone, at every level in the organization, should be aware of:

- WHY will this be relevant for our business? Is it to cut costs – being more efficient as fewer humans are in the process? Is it to be able to comply with new upcoming (sustainability) regulations? Is it because the aging workforce leaves a knowledge gap?

- WHAT will our business need in the next 5 to 10 years? Are there new ways of working that we want to introduce, but we lack the technology and the tools? Do we have skills in-house? Remember, digital transformation must come from the inside.

- HOW are we going to adapt our business? Can we do it in a learning mode, as the end target is not clear yet (the MVP, or Minimum Viable Product, approach)? Are we moving from selling products to providing a Product Service System?

My lesson: Get inspired by the software vendors who will show you what might be possible. Get educated on the topic and understand what it would mean for your organization. Start from the people and the business needs before jumping on the tools.

At the upcoming PLM Roadmap/PDT Europe conference on 23-24 October, we will be meeting again with a group of P** experts to discuss our experiences and progress in this domain. I will give a lecture there about what it takes to move to a sustainable economy based on a Product-as-a-service concept.

If you want to learn more – join us – here is the link to the agenda.

Conclusion

I hope you enjoyed reading a blog post not generated by ChatGPT, although I am using bullet points. With the overflow of information, it remains crucial to keep a holistic overview. I hope that with this post, I have helped the P** teams in their mission, and I look forward to learning from your experiences in this domain.

In the past months, I have had several discussions related to migrating PLM data, either from one system to another or from consolidating a collection of applications into a single environment. Does this sound familiar?

Let me share some experiences and lessons learned to avoid the Migration Migraine.

It is not a technical guide but a collection of experiences and thoughts that you might have missed when considering how to realize the technical dream.

Halfway through, I realized I was too ambitious; therefore, another post will follow this introduction. Here, I will focus on the business side and the digital transformation journey.

Garbage Out – Garbage In

The Garbage Out-In statement is somehow the paradigm we are used to in our day-to-day lives. When you buy a new computer, you use backup and restore. Even easier, nowadays, the majority of the data is already in the cloud.

This simple scenario leads to the assumption that all professional systems should be just as easily upgradeable. Meanwhile, we become unaware of the amount of data we store and its relevance.

This phenomenon already has a name: “Dark Data.” Dark Data consumes storage energy in the cloud and is no longer visible. Please read all about it here: Dark Data.

TIP 1: Every migration is a moment to clean up your data. By dragging everything with you, the burden of migrating becomes bigger. Even in easy migrations, do a clean-up; it prevents future, more extensive issues.

Never follow the Garbage Out – Garbage In principle, even if it is easy!

Migrations in the PLM domain are different – setting the scene.

Before discussing the various scenarios, let’s examine what companies are doing. For early PLM adopters in the Automotive, Aerospace, and Defense Industries, migrations from mainframes to modern infrastructures have become impossible. The real problem is not only the changing hardware but also the changing data and data models.

For these companies, the solution is often to build an entirely new PLM infrastructure on top of the existing infrastructure, where manageable data pieces are migrated to new environments using data lakes, dashboards, and custom apps to support modern users.

Migration in this case is a journey as long as the data lives – and we can learn from them!

Follow the money

From a business perspective, migrations are considered a negative distraction. Talking about them raises awareness of their complexity, which might jeopardize enthusiasm.

For the initiator, the PLM software vendor or implementer, it might endanger the sales deal.

Traditional IT organizations strive for simplification—one CAD, one PLM or one ERP system to manage. Although this argument makes sense, an analysis should always be done comparing the benefits and the (migration) costs and risks to reach the ideal situation.

In those discussions, migrations are often downplayed.

Without naming companies, I have observed the downplaying several times, even at some prominent enterprises. So, if you recognize your company in this process, you are not alone.

TIP 2: Migrations are never simple. Make migration a serious topic of your PLM project – as important as the software. This approach means analyzing the potential migration risks and planning their mitigation.

Please read about the Xylem story in my recent post: The week after the PDSFORUM 2024

The Big Bang has the highest risk and might again lead to garbage out—garbage in.

You are responsible for your garbage.

It may sound disparaging, but it is not. Most companies are aware that people, tools and policies have changed over the years. Due to the coordinated approach to working, disciplines did not need to care about downstream or upstream usage of their initially created data – Excel and PDFs are the bridges between disciplines.

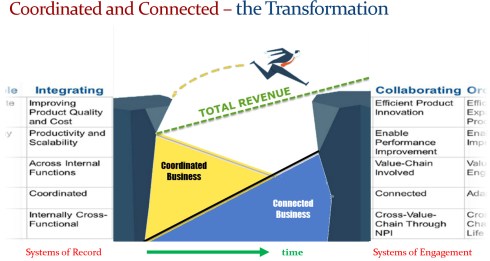

All the actual knowledge and context are stored in the heads of experienced employees who have gotten used to dealing with inconsistencies. And they will retire, so there is an urgent need for actual data quality and governance. Read more about the journey from Coordinated to Connected in these articles.

Even if you are not yet thinking about migrations, the digital transformation in the PLM domain is coming, and we should learn to work in a connected mode.

TIP 3: Create a team in your organization that assesses the current data quality and defines the potential future enterprise (data) architecture. Then, start improving the quality of the currently generated data. Just as the ISO 900x standards exist for quality management, the ISO 8000 standard already exists for data quality.

The future is data-driven; prepare yourself for the future.
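A first data quality assessment, as suggested in TIP 3, does not need special tooling. The sketch below is only an illustration and not an implementation of ISO 8000: it counts how many part records have all required attributes filled in. The field names in `REQUIRED_FIELDS` and the record layout are assumptions; replace them with whatever your own part definition requires.

```python
from collections import Counter

# Hypothetical set of attributes every part record should carry.
REQUIRED_FIELDS = ("part_number", "description", "unit_of_measure", "lifecycle_state")


def completeness_report(records):
    """Count complete records and how often each required field is missing."""
    missing = Counter()
    complete = 0
    for record in records:
        # A field counts as missing when it is absent, None, or only whitespace.
        gaps = [f for f in REQUIRED_FIELDS if not str(record.get(f) or "").strip()]
        missing.update(gaps)
        if not gaps:
            complete += 1
    total = len(records)
    return {
        "total": total,
        "complete": complete,
        "share_complete": complete / total if total else 0.0,
        "missing_by_field": dict(missing),
    }


# Made-up sample data, only to show the shape of the input.
sample_records = [
    {"part_number": "100001", "description": "Bracket",
     "unit_of_measure": "EA", "lifecycle_state": "Released"},
    {"part_number": "100002", "description": "  ",
     "unit_of_measure": "EA", "lifecycle_state": None},
    {"part_number": "100003", "description": "Shaft",
     "lifecycle_state": "Released"},
]
report = completeness_report(sample_records)
```

Tracking such a report over time gives the team a simple baseline to show whether newly created data is actually getting better.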

Migration scenarios and their best practices

Here are some migration scenarios – two in this post and more in an upcoming post.

From Relational to Object-oriented

One of my earlier projects, starting in 2010 with SmarTeam, was migrating a mainframe-based application for airplane certification to a modern Microsoft infrastructure.

The goal was to create a new environment that could be used both as a replacement for the mainframe application and as the design and validation environment to implement changes to the current airplanes during a maintenance or upgrade activity.

The need was high because detailed documentation about the logic of the current application did not exist, and only one person who understood the logic was partly available.

So, internally, the relational database was a black box. The tables in the database contained a mix of item data, document data, change status and versions. The documents were stored in directories with meaningful file names but disconnected from the application.

The initial estimate was that the project would take two to three months, so a fixed price for two months was agreed upon. However, it became almost a two-year project, and in the end, the result seemed to be reliable (there was never mathematical proof).

The disadvantage was that SmarTeam ended up being so highly customized that automatic upgrades would not work for this version anymore—a new legacy was created with modern technology.

The same story, combined with the example of Ericsson’s migration attempt, is described in the 2016 post, The PLM Migration Dilemma. For me, the lesson learned from these examples leads to the following recommendation.

TIP 4: When there is a paradigm change in the data model, don’t migrate but establish a new (data-driven) infrastructure and connect to your legacy as much as possible in read-only mode.
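
What "read-only mode" means technically depends on the legacy system. As a minimal sketch, assume the legacy data has been exported to an SQLite file (an assumption for illustration; a mainframe or other database will need its own mechanism, such as a read-only account). The table `legacy_items` and its columns are hypothetical. The new environment can then query the legacy store while any write attempt fails:

```python
import sqlite3
import tempfile
from pathlib import Path


def open_legacy_read_only(db_path: Path) -> sqlite3.Connection:
    """Open an SQLite file so that any write attempt is rejected."""
    # mode=ro is part of SQLite's URI filename syntax; uri=True enables it.
    return sqlite3.connect(f"file:{db_path.as_posix()}?mode=ro", uri=True)


def build_demo_legacy(db_path: Path) -> None:
    """Create a tiny stand-in for a legacy store (hypothetical schema)."""
    con = sqlite3.connect(db_path)
    con.execute("CREATE TABLE legacy_items (item_id TEXT, description TEXT)")
    con.execute("INSERT INTO legacy_items VALUES ('A-100', 'Bracket')")
    con.commit()
    con.close()


legacy_db = Path(tempfile.mkdtemp()) / "legacy.db"
build_demo_legacy(legacy_db)

legacy = open_legacy_read_only(legacy_db)
rows = legacy.execute("SELECT item_id, description FROM legacy_items").fetchall()

try:
    legacy.execute("INSERT INTO legacy_items VALUES ('B-200', 'Rogue write')")
    write_blocked = False
except sqlite3.OperationalError:
    # SQLite refuses to write to a database opened with mode=ro.
    write_blocked = True
```

The point of the design is that the legacy stays a trusted reference: new data is created only in the new infrastructure, and nobody can accidentally "fix" the old records.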

The automotive and aerospace industries’ story is one of paradigm change.

Listen to the SharePLM podcast Revolutionizing PLM: Insights from Yousef Hooshmand, where Yousef also discusses how to address this transition process.

CAD/PDM to PLM

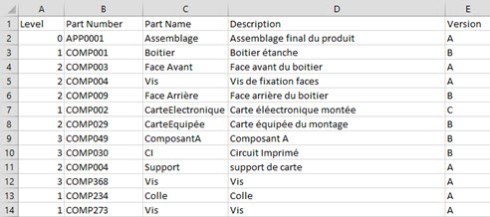

Another migration step happens when companies decide to implement a traditional PLM infrastructure as a System of Record, merging PDM data (mainly CAD) and ERP data (the BOM).

Some of these companies have been working file-based and have stored their final CAD files in folders; others might have a local PDM system native to the 3D CAD. The EBOM usually existed digitally in ERP, and most of the time, it is not a “pure” EBOM but more of a hybrid EBOM/MBOM.

The image above shows that this type of migration can be very challenging as, in the source systems, there is not necessarily a consistent 3D CAD definition matching the BOM items. As the systems have been disconnected in the past, people have potentially added missing information or fixed information on the BOM side. In most companies, the manufacturing definition is based on drawings, and the consistency with the 3D CAD definition is not guaranteed.

To address this challenge, companies need to assess the usability of the CAD and BOM data. Is it possible to populate the CAD files with properties that are necessary for an import? For example, does the file path contain helpful information?
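
To make the file path question concrete, here is a small sketch of how properties could be derived from a folder convention. Everything about the convention is an assumption for illustration: the layout `<project>/<assembly>/<status>/<file>` and the `_rev<letter>` suffix are hypothetical, and a real file share will have its own (and often several inconsistent) conventions.

```python
import re
from pathlib import PurePosixPath

# Hypothetical naming convention: <name>_rev<letter>, e.g. PH-001_revB
REV_SUFFIX = re.compile(r"^(?P<name>.+)_rev(?P<rev>[A-Z])$")


def properties_from_path(path: str) -> dict:
    """Derive import properties from an assumed folder and file naming layout."""
    p = PurePosixPath(path)
    props = {"file_type": p.suffix.lstrip(".").lower()}
    parts = p.parts
    # Assumed layout: .../<project>/<assembly>/<status>/<file>
    if len(parts) >= 4:
        props["project"] = parts[-4]
        props["assembly"] = parts[-3]
        props["status"] = parts[-2]
    match = REV_SUFFIX.match(p.stem)
    if match:
        props["name"] = match["name"]
        props["revision"] = match["rev"]
    else:
        # No recognizable revision suffix: keep the stem and leave revision open.
        props["name"] = p.stem
    return props


example = properties_from_path(
    "projects/P1234/pump-housing/released/PH-001_revB.sldprt"
)
```

Running such a script over the whole file share first, and counting how many files do not fit the convention, is a cheap way to judge whether a CAD import is realistic at all.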

I have experienced a situation where a company has poorly defined 3D parts and no properties, as all the focus was on using the 3D to generate the 2D drawing.

The relevant details for manufacturing were then added to the drawing and no longer to the parts or models – traceability was almost impossible.

In this situation, importing the 3D CAD structures into the new PLM system has limited value. An alternative is to describe and test procedures for handling legacy data when it is needed, either to implement a design change or a new order. Leave the legacy accessible, but do not migrate.

The BOM side is, in theory, stable for manufactured products, as the data should have gone through a release process. However, the company needs to revisit its part definition process for new designs and products.

Some points to consider:

- Meaningful identifiers are not desired in a PLM system as they create a legacy. Therefore, the import of parts with smart identifiers should map to relevant part properties besides the ID. Splitting the ID into properties will create a broader usage in the future. Read more in Smart Part Numbers – do we need them?

- In addition, companies should try to avoid having logistic information, such as supplier-specific part numbers, come from the CAD system. Supplier parts in your CAD environment create inefficiencies when a supplier part becomes obsolete. Concepts such as EBOM and MBOM and potentially the SBOM should be well understood during this migration.

- Concepts of EBOM and MBOM should also be introduced when moving from an ETO to a CTO approach or when modularity is a future business strategy.
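
The first point above, mapping a smart identifier to part properties during import, can be sketched as follows. The legacy format `<family>-<material>-<length in mm>-<sequence>` is purely hypothetical, as are the property names; the idea is only that the new part gets a meaningless ID while the meaning that was encoded in the old number survives as searchable properties.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical legacy convention: <family>-<material>-<length in mm>-<sequence>
SMART_ID = re.compile(
    r"^(?P<family>[A-Z]{3})-(?P<material>[A-Z]{2})-(?P<length_mm>\d{4})-(?P<seq>\d{4})$"
)


@dataclass
class ImportedPart:
    part_id: str    # new identifier without embedded meaning
    legacy_id: str  # old smart number, kept for traceability
    family: Optional[str] = None
    material: Optional[str] = None
    length_mm: Optional[int] = None


def import_part(legacy_id: str, new_id: str) -> ImportedPart:
    """Create a part with a neutral ID and properties split out of the smart ID."""
    part = ImportedPart(part_id=new_id, legacy_id=legacy_id)
    match = SMART_ID.match(legacy_id)
    if match:
        part.family = match["family"]
        part.material = match["material"]
        part.length_mm = int(match["length_mm"])
    # IDs that do not follow the convention are imported without derived properties.
    return part


bracket = import_part("BRK-ST-0150-0042", new_id="100001")
```

Keeping `legacy_id` as a plain property lets people who still think in the old numbers find their parts, without the new system depending on that numbering logic.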

Conclusion

As every company is on its PLM journey and technology is evolving, there will always be a migration discussion. Understanding and working towards the future should be the most critical driver for migration. Migrations in the PLM domain are often more than a data migration – new ways of working should be introduced in parallel. For that reason, the “big bang” is often too costly and demotivating for the future.

During my summer holiday in my “remote” office, I had the chance to digest what I recently read, heard, saw and discussed related to the future of PLM.

I noticed this year/last year that many companies are discussing or working on their future PLM. It is time to make progress after COVID, particularly in digitization.

And as most companies are avoiding the risk of a “big bang”, they are exploring how they can improve their businesses in an evolutionary mode.

PLM is no longer a system

The most significant change I noticed in my discussions is the growing awareness that PLM is no longer covered by a single system.

More and more, PLM is considered a strategy, with which I fully agree. Therefore, implementing a PLM strategy requires holistic thinking and an infrastructure of different types of systems, where possible, digitally connected.

This trend is bad news for the PLM vendors as they continuously work on an end-to-end portfolio where every aspect of the PLM lifecycle is covered by one of their systems. The company’s IT department often supports the PLM vendors, as IT does not like a diverse landscape.

The main question is: “Every PLM Vendor has a rich portfolio on PowerPoint mentioning all phases of the product lifecycle. However, are these capabilities implementable in an economical and user-friendly manner by actual companies, or should PLM players change their strategy?”

It is a question I will try to answer in this post.

The future of PLM

I have discussed several observed changes related to the effects of digitization in my recent blog posts, referencing others who have studied these topics in their organizations.

Some of the posts to refresh your memory are:

- Time to split PLM?

- People, Processes, Data and Tools?

- The rise and the fall of the BOM?

- The new side of PLM? Systems of Engagement!

To summarize, the following points have been discussed in these posts:

The As Is:

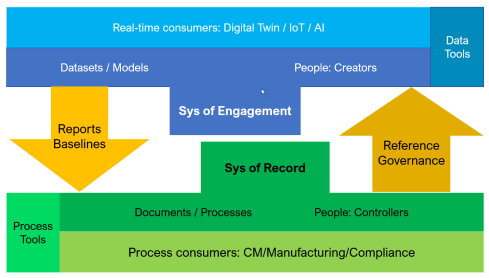

- The traditional PLM systems are examples of a System of Record, not designed to be end-user friendly but designed to have a traceable baseline for manufacturing, service and product compliance.

- The traditional PLM systems are tuned to a mechanical product introduction and release process in a coordinated manner, with a focus on BOM governance.

- The legacy information is stored in BOM structures and related specification files.

System of Record (ENOVIA image 2014)

The To Be:

- We are not talking about a PLM system anymore; a traditional System of Record will be digitally connected to different Systems of Engagement / Domains / Products, which have their own optimized environment for real-time collaboration.

- The BOM structures remain essential for the hardware part; however, overarching structures are needed to manage software and hardware releases for a product. These structures depend on connected datasets.

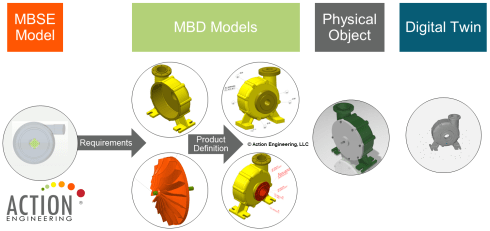

- To support digital twins at the various lifecycle stages (design, manufacturing, operations), product data needs to be based on and consumed by models.

- A future PLM infrastructure is hybrid, based on a Single Source of Change (SSoC) and an Authoritative Source of Truth (ASoT) instead of a Single Source of Truth (SSoT).

Various Systems of Engagement

Related podcasts

I relistened to two podcasts before writing this post, and I think they are a must-listen.

The Peer Check podcast from Colab episode 17 — The State of PLM in 2022 w/Oleg Shilovitsky. Adam and Oleg have a great discussion about the future of PLM.

Highlights: From System to Platform – the new normal. A Single Source of Truth doesn’t work anymore – it is about value streams. People in big companies fear making wrong PLM decisions, which is seen as a significant risk for their career.

There is no immediate need to change the current status quo.

The Share PLM Podcast – Episode 6: Revolutionizing PLM: Insights from Yousef Hooshmand. Yousef talked with Helena and me about proven ways to migrate an old PLM landscape to a modern PLM/Business landscape.

Highlights: The term Single Source of Change and the existing concepts of a hybrid PLM infrastructure based on his experiences at Daimler and now at NIO. Yousef stresses the importance of having the vision and the executive support to execute.

The time of “big bangs” is over, and Yousef provided links to relevant content, which you can find here in the comments.

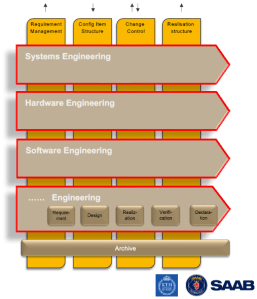

In addition, I want to point to the experiences provided by Erik Herzog in the Heliple project using OSLC interfaces as the “glue” to connect (in my terminology) the Systems of Engagement and the Systems of Record.

If you are interested in these concepts and want to learn and discuss them with your peers, more can be learned during the upcoming CIMdata PLM Roadmap / PDT Europe conference.

In particular, look at the agenda for day two if you are interested in this topic.

The future for the PLM vendors

If you look at the messaging of the current PLM Vendors, none of them is talking about this federated concept.

Their messaging is more focused on the transition from on-premise to the cloud, providing a SaaS offering with their portfolio.

I was slightly disappointed when I saw this article on Engineering.com provided by Autodesk: 5 PLM Best Practices from the Experiences of Autodesk and Its Customers.

The article is tool-centric, with statements that make sense and could be written by any PLM Vendor. However, Best Practice #1 Central Source of Truth Improves Productivity and Collaboration was the message that struck me. Collaboration comes from connecting people, not from the Single Source of Truth utopia.

I don’t believe PLM Vendors have to be afraid of rapidly losing their installed base among companies that use their PLM as a System of Record. There is so much legacy stored in these systems that might still be relevant. The existence of legacy information, often documents, makes a migration or swap to another vendor almost impossible and unaffordable.

The System of Record is incompatible with data-driven PLM capabilities

I would like to see clearer developments from the PLM Vendors toward a plug-and-play infrastructure for Systems of Engagement. Plug-and-play solutions could be based on a neutral partner collaboration hub like ShareAspace or the Systems of Engagement I discussed recently in my post and interview: The new side of PLM? Systems of Engagement!

Plug-and-play systems of engagement require interface standards, and PLM Vendors will only move in this direction if customers push for it; this is the chicken-and-egg discussion. Their initiatives are probably too fragmented at the moment to arrive at a standard. However, don’t give up; keep building MVPs to learn and share.

Some people believe AI, with the examples we have seen with ChatGPT, will be the future direction without needing interface standards.

I am curious about your thoughts and experiences in that area and am willing to learn.

Talking about learning?

Besides reading posts and listening to podcasts, I also read an excellent book this summer. Martijn Dullaart, often participating in PLM and CM discussions, had decided to write a book based on the various discussions related to part (re-)identification (numbering, revisioning).

As Martijn starts in the preface:

“I decided to write this book because, in my search for more knowledge on the topics of Part Re-Identification, Interchangeability, and Traceability, I could only find bits and pieces but not a comprehensive work that helps fundamentally understand these topics”.

I believe the book should become standard literature for engineering schools that deal with PLM and CM, for software vendors and implementers and last but not least companies that want to improve or better clarify their change processes.

Martijn writes in an easily readable style and uses step-by-step examples to discuss the various options. There are even exercises at the end to use in a classroom or for your team to digest the content.

The good news is that the book is not about the past. You might also know Martijn for our joint discussion, The Future of Configuration Management, together with Maxime Gravel and Lisa Fenwick, on the impact of a model-based and data-driven approach to CM.

I plan to come back soon with a more dedicated discussion with Martijn. Meanwhile, start reading the book. Get your free chapter if needed by following the link at the bottom of this article.

I recommend buying the book as a paperback so you can navigate easily between the diagrams and the text.

Conclusion

The trend for federated PLM is becoming more and more visible as companies start implementing these concepts. The end of monolithic PLM is a threat and an opportunity for the existing PLM Vendors. Will they work towards an open plug-and-play future, or will they keep their portfolios closed? What do you think?

Two weeks ago, I shared my post: Modern PLM is (too) complex on LinkedIn, and apparently, it was a topic that touched many readers. Almost a hundred likes, fifty comments and six shares. Not the usual thing you would expect from a PLM blog post.

In addition, the article led to offline discussions with peers, giving me an even better understanding of what people think. Here is a summary of the various talks.

What is PLM?

Since the inception of Product Lifecycle Management, software vendors in particular have battled over the various PLM definitions.

Initially, PLM was considered an engineering tool for product development, with an extensive potential set of capabilities supported by PowerPoint. Most companies actually implemented a collaborative PDM system at that time and named it PLM.

Was PLM really understood? Look at the infamous Autodesk CEO Carl Bass’s anti-PLM rap from 2007. Next, in 2012, Autodesk introduced its PLM solution called Autodesk PLM 360 as one of the first cloud solutions.

Only with growing connectivity and enterprise information sharing did the definition of PLM start to change.

PLM became a product information backbone serving downstream deployment with product data – the traditional Teamcenter, Windchill and ENOVIA implementations are typical examples of this phase.

With a digitization effort taking place in the non-PLM domain, connecting product development, design and delivery data to a company’s digital business became necessary. You could say, and this is the CIMdata definition:

PLM is a strategic business approach that applies a consistent set of business solutions that support the collaborative creation, management, dissemination, and use of product definition information. PLM supports the extended enterprise (customers, design and supply partners, etc.)

I agree with this definition; perhaps 80 % of our PLM community does. But how many times have we been trapped again in the same thinking: PLM is a system.

The most recent example is the post from Oleg Shilovitsky last week where he claims: Discover why OpenBOM reigns supreme in the world of PLM!

Nothing wrong with that, as software vendors will always tweak definitions as they need marketing to make a profit, but PLM is not a system.

My main point is that PLM is a “vague” community label with many interpretations. Software vendors have the most significant marketing budget to push their unique definitions. However, various practitioners in the field also have their own interpretations.

And maybe Martin Haket’s comment on the post says it all (partial quote):

I’m a bit late to this discussion, but in my opinion, the complexity is mainly due to the fact that the ownership of the processes and data models underlying PLM are not properly organized. ‘Everybody’ in the company is allowed to mix in the discussion and have their opinion; legacy drives departments to undesirable requirements leading to complex implementations.

My intermediate conclusion: Our legacy and lack of a single definition of PLM make it complex.

The PLM professional

On LinkedIn, there are approximately 14,000 PLM consultants in my first and second levels of connections. This number indicates that the label “PLM Consultant” has a specific recognition.

During my “PLM is complex” discussion, I noticed Roger Tempest’s Professional PLM White paper and started the dialogue with him.

Roger Tempest is one of the co-founders of the PLM Interest Group. He has been trying to create a baseline for a foundational PLM certification with several others. We discussed the challenges of getting the PLM Professional recognized as an essential business role. Can we certify the PLM professional the same way as a certified Configuration Manager or certified Project Manager?

I shared my thoughts with Roger, claiming that our discipline is too vague and diverse and that finding a common baseline is hard.

Therefore, we are curious about your opinion too. Please tell us in the comments to this post what you think about recognizing the PLM professional and what skills should be the minimum. What are the basics of a PLM professional?

In addition, I participated in some of the SharePLM podcast recordings with PLM experts from the field (follow us here). I raised the PLM professional question either during the podcast or during the preparation of the after-party. Here, too, there was no single answer.

So much is part of PLM: people (culture, skills), processes & data, tools & infrastructures (architectures, standards) combined with execution (waterfall/agile?)

My intermediate conclusion: The broadness of PLM makes it complex to have a common foundation.

More about complexity

PEOPLE: Let’s zoom in on the aspects of complexity, starting from the People, Processes, Data and Tools discussion. The first thing mentioned is “the people”; organizations usually claim: “the most important assets in our organization are the people”.

However, people are usually the last dimension considered in business changes. Companies start with the tools, try to build the optimal processes and finally push the people into that framework by training, incentives or just force.

The reason for the last approach is that dealing with people is complex. People have their beliefs, their legacy and their motivation. And if people do not feel connected to the business (change), they will become an obstacle to change – look at the example below from my 2014 PI Apparel presentation:

To support the importance of people, I am excited to work with Share PLM and the Season 2 podcast series.

In these episodes, we talk with successful PLM experts about their lessons learned during PLM implementation. You will discover it is a learning process, and connecting to people in different cultures is essential. As it is a learning process, you will find it takes time and human skills to master this complexity.

Often human skills are called “soft skills”, but actually, they are “vital skills”!

PROCESSES: Regarding the processes part, this is another challenging topic. Often we try to simplify processes to make them workable (sounds like a good idea). With many seasoned PLM practitioners coming from the mechanical product development world, it is not a surprise that many proposed PLM processes are BOM-centric – building on PDM and ERP capabilities.

In my post: The rise and fall of the BOM? I started with this quote from Jan Bosch:

An excessive focus on the bill of materials leads to significant challenges for companies that are undergoing a digital transformation and adopting continuous value delivery. The lack of headroom, high coupling and versioning hell may easily cause an explosion of R&D expenditure over time.

Today’s organization and product complexity does not allow us to keep the processes simple to remain competitive. In that context, have a look at Erik Herzog’s comment on PLM complexity:

I believe a contributing factor to making PLM complex lies in our tendency to make too many simplifications. Do we understand a simple thing such as configuration change management in incremental development? At least in my organization, there is room for improvement.

In the comment, Erik also provided a link to his conference paper, Introducing the 4-Box Development Model, which describes the potential interaction between Systems Engineering and Configuration Management. The topic may be too complex for your current company; however, it illustrates that you cannot generalize and simplify PLM overall.

In addition to Erik’s comments, I want to mention again that we can change our business processes thanks to a modern, connected, data-driven infrastructure. From coordinated to connected working with a mix of Systems of Engagement (new) and Systems of Record (traditional). There are no solid best practices yet, but the real PLM geeks are becoming visible.

TOOLS & DATA: When discussing the future: From Coordinated to Connected, there has always been a discussion about the legacy.

Should we migrate the legacy data and systems and replace them with new tools and data models? Or are there other options? The interaction of tools and data is often the domain of Enterprise Solution Architects. The Solution Architect’s role becomes increasingly important in a modern, data-driven company, and several are pretty active in PLM, if you know how to find them, because they are not in the mainstream of PLM.

This week we made a SharePLM podcast recording with Yousef Hooshmand. Last year, I wrote about his paper “From a Monolithic PLM Landscape to a Federated Domain and Data Mesh”, in which Yousef describes the complex process, from his time at Daimler, of slowly replacing old legacy infrastructure with a modern, user/role-centric, data-driven infrastructure.

Watch out for this recording to be published soon as Yousef shares various provoking experiences. Not to provoke our community but to create the awareness that a transformation is possible when you have the right long-term vision, strategy and C-level support.

Fighting complexity

Note: We have CM people involved in many of the PLM discussions. I think they are fighting complexity similar to that of others in the PLM domain. However, they have the benefit that their role, Configuration Manager, is recognized and supported by a commercial certification organization (the Institute of Process Excellence – IpX).

While completing this post, I read this article from Oleg Shilovitsky: PLM User Groups and Communities. At first glance, you might think that PLM User Groups and Communities might be the solution to address the complexity.

And I think they do; most PLM vendors have orchestrated User Groups and Communities. Depending on your tool vendor, you will find like-minded people supported by vendor experts. Are they reducing the complexity? Probably not, as they are at the end of the People, Processes, Data and Tools discussion. You are already working within a specific boundary.

Based on my experience as a core PLM Global Green Alliance member, I think PLM-neutral communities are not viable. There is very little interaction in this community, which currently has 686 members, although the topics are highly relevant today. Yes, people want to consume and learn, but making time available to share is, unfortunately, impossible when not financially motivated. Sharing opinions, yes, but working on topics: we are too busy.

Conclusion

The term PLM seems adequate to identify a group with a common interest (and skills?). Due to its broad scope and many aspects, it is impossible to create a standard job description for the PLM professional, and we must learn to live with that; see my arguments.

What do you think?

I was happy to present and participate at the 3DEXPERIENCE User Conference held this year in Paris on 14-15 March. The conference was an evolution of the previous ENOVIA User conferences; this time, it was a joint event by both the ENOVIA and the NETVIBES brands.

The conference was, for me, like a reunion. Having worked for over 25 years in the SmarTeam, ENOVIA and 3DEXPERIENCE ecosystem, I was now meeting people I had worked with and had not seen for over fifteen years.

My presentation: Sustainability Demands Virtualization – and it should happen fast was based on explaining the transformation from a coordinated (document-driven) to a connected (data-driven) enterprise.

There were 100+ attendees at the conference, mainly from Europe, and most of the presentations were coming from customers, where the breakout sessions gave the attendees a chance to dive deeper into the Dassault Systèmes portfolio.

Here are some of my impressions.

The power of ENOVIA and NETVIBES

I had a traditional view of the 3DEXPERIENCE platform based on my knowledge of ENOVIA, CATIA and SIMULIA, as many of my engagements were in the domain of MBSE or a model-based approach.

However, at this conference, I discovered the data intelligence side that Dassault Systèmes is bringing with its NETVIBES brand.

Whereas I would classify the ENOVIA part of the 3DEXPERIENCE platform as a traditional System of Record infrastructure (see Time to Split PLM?), I discovered that by adding NETVIBES on top of the 3DEXPERIENCE platform and other data sources, the potential scope had changed significantly. See the image below:

As we can see, the ontologies and knowledge graph layer make it possible to make sense of all the indexed data below, including the data from the 3DEXPERIENCE Platform, which provides a modern data-driven layer for its consumers and apps.

The applications on top of this layer, standard or developed, can be considered Systems of Engagement.

My curiosity now: will Dassault Systèmes keep supporting the “old” system of record approach – often based on BOM structures (see also my post: The Rise and Fall of the BOM) combined with the new data-driven environment? In that case, you would have both approaches within one platform.

The Virtual Twin versus the Digital Twin

It is interesting to notice that Dassault Systèmes consistently differentiates between the definition of the Virtual Twin and the Digital Twin.

According to the 3DS.com website:

Digital Twins are simply a digital form of an object, a virtual version.

Unlike a digital twin prototype that focuses on one specific object, Virtual Twin Experiences let you visualize, model and simulate the entire environment of a sophisticated experience. As a result, they facilitate sustainable business innovation across the whole product lifecycle.

Understandably, Dassault Systèmes makes this differentiation. With the implementation of the Unified Product Structure, they can connect CAD geometry as datasets to other non-CAD datasets, like eBOM and mBOM data.

The Unified Product Structure was not the topic of this event, but it is worth noting.

REE Automotive

The presentation from Steve Atherton from REE Automotive was interesting because here we saw an example of an automotive startup that decided to go purely for the cloud.

REE Automotive is an Israeli technology company that designs, develops, and produces electric vehicle platforms. Their mission is to provide a modular and scalable electric vehicle platform that can be used by a wide range of industries, including delivery and logistics, passenger cars, and autonomous vehicles.

Steve Atherton is the PLM 3DExperience lead for REE at the Engineering Centre in Coventry in the UK, where most of their designers are based. REE also has an R&D center in Tel Aviv with offshore support from India and satellite offices in the US.

REE decided from the start to implement its PLM backbone in the cloud, a logical choice for such a globally distributed company.

The cloud was also one of the conference’s central themes, and it was interesting to see that a startup company like REE is pushing for an end-to-end solution based on the cloud. So often, you see startups choosing traditional systems as the senior members of the startup take their (legacy) PLM knowledge to their next company.

The current challenge for REE is implementing the manufacturing processes (EBOM-MBOM) and complying as much as possible with the out-of-the-box best practices to make their cloud implementation future-proof.

Groupe Renault

Olivier Mougin, Head of PLM at Groupe RENAULT, talked about their Renaulution Virtual Twin (RVT) program. Renault has always been a strategic partner of Dassault Systèmes.

I remember them as one of the first references for the ENOVIA V6 backbone.

The Renaulution Virtual Twin ambition, from engineering to enterprise platform, is enormous, as you can see below:

Each of the three pillars has transformational aspects beyond traditional ways of working. For each pillar, Olivier explained the business drivers, expected benefits, and why a new approach is needed. I will not go into the details in this post.

However, you can see the transformation from an engineering backbone to an enterprise collaboration platform – The Renaulution!

Ahmed Lguaouzi, head of marketing at NETVIBES, reinforced the extended power of data intelligence on top of an engineering landscape as the target architecture.

Renault’s ambition is enormous – the ultimate dream of digital transformation for a company with a great legacy. The mission will challenge Renault and Dassault Systèmes to implement this vision, which can become a lighthouse for others.

3DS PLM Journey at MIELE

An exciting session close to my heart was the digital transformation story from MIELE, explained by André Lietz, head of the IT Products PLM @ Miele. As an old MIELE dishwasher owner, I was curious to learn about their future.

Miele has been a family-owned business since 1899, making high-end domestic and commercial equipment. They are a typical example of the power of German mid-market companies. Moreover, family-owned gives them stability and the opportunity to develop a multi-year transformation roadmap without being distracted by investor demands every few years.

André, with his team, is responsible for developing the value chain inside the product development process (PDP), the operation of nearly 90 IT applications, and the strategic transformation of the overarching PLM Mission 2027+.

As the slide below illustrates, the team is working on four typical transformation drivers:

- Providing customers with connected, advanced products (increasing R&D complexity)

- Providing employees with a modern, digital environment (the war for digital talent)

- Providing sustainable solutions (addressing the whole product lifecycle)

- Improving internal end-to-end collaboration and information visibility (PLM digital transformation)

André talked about their DELMIA pilot plant/project and its benefits to connect the EBOM and MBOM in the 3DEXPERIENCE platform. From my experience, this is a challenging topic, particularly in German companies, where SAP dominated the BOM for over twenty years.

I am curious to learn more about the progress in the upcoming years. The vision is there; the transformation is significant, but they have the time to succeed! This can be another digital transformation example.

And more …

Besides some educational sessions by Dassault Systèmes (Laurent Bertaud – NETVIBES data science), there were also other interesting customer testimonies from Fernando Petre (IAR80 – Fly Again project), Christian Barlach (ISC Sustainable Construction) and Thelma Bonello (Methode Electronics – end-to-end BOM infrastructure). All sessions helped to get a better understanding of what is possible and what is done in the domain of PLM.

Conclusion

I learned a lot during these days, particularly about the virtual twin strategy and the related capabilities of data intelligence. As the event was also a reunion for me with many people from my network, I discovered that we all aim at a digital transformation. We have a mission and a vision. The upcoming years will be crucial for implementing the mission and realizing the vision. It will be the early adopters like Renault pushing Dassault Systèmes to deliver. I hope to stay tuned. You too?

NOTE: Dassault Systèmes covered some of the expenses associated with my participation in this event but did not in any way influence the content of this post.

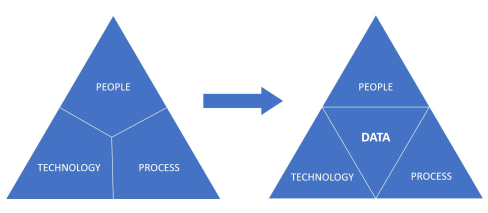

Those who have read my blog posts over the years will have seen the image to the left.

The people, processes and tools slogan points to the best practice of implementing (PLM and CM) systems.

Theoretically, a PLM implementation will move smoothly if the company first agrees on the desired processes and people involved before a system implementation using the right tools.

Too often, companies start from their historical landscape (the tools – starting with a vendor selection) and then try to figure out the optimal usage of their systems. The best example of this approach is the interaction between PDM (PLM) and ERP.

PDM and ERP

Historically, ERP was the first enterprise system that most companies implemented. For product development, there was the PDM system, an engineering tool, and for execution, there was the ERP system. Since ERP focuses on the company’s execution, the system became the management’s favorite.

The ERP system and its information were needed to run and control the company. Unfortunately, this approach has introduced the idea that the ERP system should also be the source of the part information, as it was often the first enterprise system for a company. The PDM system was often considered an engineering tool only. And when we talk about a PLM system, who really implements PLM as an enterprise system, or is it still an engineering tool?

This is an example of Tools, Processes, and People – A BAD PRACTICE.

Imagine an engineer who wants to introduce a new part needed for a product to deliver. In many companies at the beginning of this century, even before starting the exercise, the engineer had to request a part number from the ERP system. This is implementation complexity #1.

Next, the engineer starts developing versions of the part based on the requirements. Ultimately the engineer might come to the conclusion this part will never be implemented. The reserved part number in ERP has been wasted – what to do?

It sounds weird, but this was a reality in discussions on this topic until ten years ago.

Next, as the ERP system could only deal with 7 digits, what about part number reuse? The conclusion was that reusing part numbers carries a considerable risk of errors. With the introduction of PLM systems, there was the opportunity to bridge the gap between engineering and manufacturing. Now it is clear for most companies that the engineer should create the initial part number.

Only when the conceptual part is approved for use in the realization of the product is an exchange with the ERP system needed. Whether the same part number is used or not does not matter, as long as we can map both identifiers between these environments and have traceability.
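To make the idea of mapping identifiers with traceability a bit more concrete, here is a minimal sketch in Python. It is only an illustration under my own assumptions: the class `PartIdMap`, its methods, and the example identifiers are hypothetical and do not represent any PLM or ERP vendor's API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional


@dataclass
class PartIdMap:
    """Hypothetical cross-reference between PLM and ERP part identifiers."""

    plm_to_erp: Dict[str, str] = field(default_factory=dict)
    erp_to_plm: Dict[str, str] = field(default_factory=dict)
    # Append-only log, so every hand-over to ERP remains traceable.
    history: List[dict] = field(default_factory=list)

    def release_to_erp(self, plm_id: str, erp_id: str) -> None:
        """Record the mapping once an approved part is handed over to ERP."""
        if plm_id in self.plm_to_erp:
            raise ValueError(f"{plm_id} is already mapped to {self.plm_to_erp[plm_id]}")
        if erp_id in self.erp_to_plm:
            # Refuse silent reuse of an ERP number for a different part.
            raise ValueError(f"{erp_id} is already used by {self.erp_to_plm[erp_id]}")
        self.plm_to_erp[plm_id] = erp_id
        self.erp_to_plm[erp_id] = plm_id
        self.history.append(
            {"plm_id": plm_id, "erp_id": erp_id, "at": datetime.now(timezone.utc)}
        )

    def erp_id_for(self, plm_id: str) -> Optional[str]:
        """Return the ERP number for a PLM part, or None if not yet released."""
        return self.plm_to_erp.get(plm_id)

    def plm_id_for(self, erp_id: str) -> Optional[str]:
        """Return the PLM part behind an ERP number, or None if unknown."""
        return self.erp_to_plm.get(erp_id)


# Example with made-up identifiers: the engineer owns the PLM number,
# and the ERP number is assigned only at release.
id_map = PartIdMap()
id_map.release_to_erp("ENG-000123", "1000457")
```

In this sketch a conceptual part that is never approved simply never receives an ERP number, so nothing is wasted, and a second attempt to use the same ERP number for another part is rejected instead of silently reusing it.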

It took almost 10 years from PDM to PLM until companies agreed on this approach, and I am curious about your company’s status.

Meanwhile, in the PLM world, we have evolved on this topic. The part and the BOM are no longer simple entities. Instead, we often differentiate between EBOM and MBOM, and the parts in those BOMs are not necessarily the same.

In this context, I like Prof. Dr. Jörg W. Fischer’s framing:

EBOM is the specification, and MBOM is the realization.

(Unfortunately, he writes a lot in German.)

An interesting discussion initiated by Jörg last week was again about the interaction between PLM and ERP. The article is an excellent example of how potentially mainstream enterprises are thinking. PLM = Siemens, ERP = SAP – an illustration of the “tools first” mindset before the ideal process is defined.

There was nothing wrong with that in the early days, as connectivity between different systems was difficult and expensive. Therefore, people with 20 years of experience might still rely on their systems infrastructure instead of on data flows.

But enough about the bad practice – let’s go to people, processes, (data), and tools.

People, Processes, Data and Tools?

I got inspired by this topic, seeing this post two weeks ago from Juha Korpela, claiming:

Okay, so maybe a hot take, maybe not, but: the old “People, Process, Technology” trinity is one of the most harmful thinking patterns you can have. It leaves out a key element: Data.

His full post was quite focused on data, and I liked the “wrapping post” from Dr. Nicolas Figay here, putting things more in perspective from his point of view. The reply made me think about how this discussion fits into the PLM digital transformation discussion. How would it work in the two major themes I use to explain the digital transformation in the PLM landscape?

For incidental readers of my blog, these are the two major themes I am using:

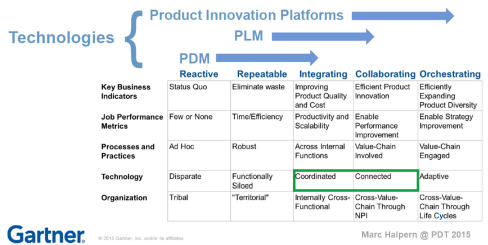

- From Coordinated to Connected, based on the famous diagram from Marc Halpern (image below). The coordinated approach based on documents (files) requires a particular timing (processes) and context (Bills of Information) – it is the traditional and current PLM approach for most companies. On the other hand, the Connected approach is based on connected datasets (here, we talk about data – not files). These connected datasets are available in different contexts, in real-time, to be used by all kinds of applications, particularly modeling applications. Read about it in the series: The road to model-based and connected PLM.

- The need to split PLM, thinking in System(s) of Record and Systems of Engagement (example below). The idea behind this split is driven by the observation that companies need various Systems of Record for configuration management, change management, compliance and realization. These activities sound like traditional PLM targets and could still be done in these systems. New in the discussion is the System of Engagement, which focuses on a specific value stream in a digitally connected manner. Here data is essential. I discussed the coexistence of these two approaches in my post Time to Split PLM. A post on LinkedIn with many discussions and reshares illustrates that the topic is hot. And I am happy to discuss “split PLM architectures” with all of you.

These two concepts discuss the processes and the tools, but what about the people? Here I came to a conclusion: to complete the story, we have to imagine three kinds of people. And this will not be new. We have the creators of data, the controllers of data and the consumers of data. Let’s zoom in on their specifics.

A new representation?

I am looking for a new simplification of the people, processes, and tools trinity combined with data; I got inspired by the work Don Farr did at Boeing, where he worked on a new visual representation for the model-based enterprise. You might have seen the image on the left before – click on it to see it in detail.

I first wrote about this new representation in my post: The weekend after CIMdata Roadmap / PDT Europe 2018

Related to Configuration Management, Martijn Dullaart and Martin Haket have also worked on a diagram with their peers to depict the scope of CM and Impact Analysis. The image leads to the post with my favorite quote: Communication is merely an exchange of information, but connections tell the story.

Below I share my first attempt to combine the people, process and tools trinity with the concepts of document and data, system(s) of record and system(s) of engagement, trying to build the story. See whether you recognize the aspects of the discussion above, and feel free to develop enhancements.

I look forward to your suggestions. As with the understanding that we have to split PLM thinking, a new representation impacts how we look at implementations.

Conclusion

Digital transformation in the PLM domain is forcing us to think differently. There will still be processes based on people collecting, interpreting and combining information. However, there will also be a new domain of connected data interpreted by models and algorithms, not necessarily depending on processes.

Therefore we need to work on new representations that can be used to tell this combined story. What do you think? How can we improve?

In my last post, I zoomed in on a preferred technical architecture for the future digital enterprise. I drew the conclusion that aiming for a single connected environment is a mission impossible. Instead, information will be stored in different platforms, both domain-oriented (PLM, ERP, CRM, MES, IoT) and value-chain-oriented (OEM, Supplier, Marketplace, Supply Chain hub).

In part 3, I posted seven statements that I will be discussing in this series. In this post, I will zoom in on point 2:

Data-driven does not mean we do not need any documents anymore. Read electronic files for documents. Likely, document sets will still be the interface to non-connected entities, suppliers, and regulatory bodies. These document sets can be considered a configuration baseline.

System of Record and System of Engagement

In the image below, a slide from 2016, I show a simplified view when discussing the difference between the current, coordinated approach and the future, connected approach. This picture might create the wrong impression that there are two different worlds – either you are document-driven, or you are data-driven.

In the follow-up to this presentation, I explained that companies need both environments in the future. The most efficient way of working for operations will be the infrastructure on the right side, the platform-based approach using connected information.

For traceability and disconnected information exchanges, the left side will be there for many years to come. Systems of Record are needed for data exchange with disconnected suppliers and disconnected regulatory bodies, and they are probably crucial for configuration management.

The System of Record will probably remain as a capability in every platform or cross-section of platform information. The Systems of Engagement will be the configured real-time environment for anyone involved in active company processes, not only ERP or MES, but all execution.

Introducing SysML and SML

This summer, I received a copy of Martin Eigner’s System Lifecycle Management book, which I am currently reading in my spare moments. I always enjoyed Martin’s presentations. In many ways, we share similar ideas. Martin, given his profession, has spent more time on the academic aspects of product and system lifecycle than I have. But, on the other hand, I have always been in the field observing and trying to make sense of what I see and learn in a coherent approach. I am halfway through the book now, and for sure, I will come back to the book when I have finished.

A first impression: A great and interesting book for all. Martin and I share the same history of data management. Read all about this in his second chapter: Forty Years of Product Data Management

From PDM via PLM to SysLM is a chapter that everyone should read if they haven’t lived it themselves. It helps you to understand the past (learning from the past to understand the future). When I finish this series about the model-based and connected approach for products and systems, Martin’s book will be highly complementary given the content he describes.

There is one point on which I am looking forward to feedback from the readers of this blog.

Should we, in our everyday language, better differentiate between Product Lifecycle Management (PLM) and System Lifecycle Management (SysLM)?

In some customer situations, I talk on purpose about System Lifecycle Management to create the awareness that the company’s offering is more than an electro/mechanical product. Or ultimately, in a more circular economy, would we use the term Solution Lifecycle Management as not only hardware and software might be part of the value proposition?

Martin consistently uses the abbreviation SysLM, where I would prefer the TLA SLM. The problem we both have is that neither abbreviation is unique or explicit enough. SysLM creates confusion with SysML (for dyslexic people or fast readers). SLM already has so many less valuable meanings: Simulation Lifecycle Management, Service Lifecycle Management or Software Lifecycle Management.

For the moment, I will use the abbreviation SLM, leaving open whether it stands for System Lifecycle Management or Solution Lifecycle Management.