In recent months, I’ve noticed a decline in momentum around sustainability discussions, both in my professional network and personal life. With current global crises—like the Middle East conflict and the erosion of democratic institutions—dominating our attention, long-term topics like sustainability seem to have taken a back seat.

But don’t stop reading yet—there is good news, though we’ll start with the bad.

The Convenient Truth

Human behavior is primarily emotional, a lesson that is valuable in the PLM domain and was discussed during the Share PLM summit. As Share PLM notes in its change management approach, we rely on our “gator brain”, our limbic system; you could also frame it as System 1 versus System 2, as described in Thinking, Fast and Slow. Faced with uncomfortable truths, we often seek out comforting alternatives.

The film Don’t Look Up humorously captures this tendency. It mirrors real-life responses to climate change: “CO₂ levels were high before, so it’s nothing new.” Yet the data tells a different story. For 800,000 years, CO₂ ranged between 170 and 300 ppm. Today’s level is about 420 ppm, an unprecedented spike in just 150 years, as illustrated below.
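A quick back-of-the-envelope check of the CO₂ figures quoted above. It uses only the numbers in this post (an 800,000-year maximum of 300 ppm, about 420 ppm today, roughly 150 years). The linear average is a simplification and a lower bound, because the rise may well have started below the historic maximum.

```python
# Figures as quoted in the text; not an independent dataset.
HISTORIC_MAX_PPM = 300   # upper end of the 800,000-year range
TODAY_PPM = 420          # approximate current level
YEARS_OF_RISE = 150      # approximate duration of the rise


def percent_above(value: float, reference: float) -> float:
    """Return how far `value` lies above `reference`, in percent."""
    return (value - reference) / reference * 100


excess_pct = percent_above(TODAY_PPM, HISTORIC_MAX_PPM)

# Lower bound: assumes the rise started at the historic maximum and
# averages it linearly, which smooths over any variation in pace.
min_avg_rate = (TODAY_PPM - HISTORIC_MAX_PPM) / YEARS_OF_RISE

print(f"About {excess_pct:.0f}% above the 800,000-year maximum")
print(f"An average rise of at least {min_avg_rate:.1f} ppm per year")
```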

Frustratingly, some of this scientific data is no longer prominently published. The narrative has become inconvenient, particularly for the fossil fuel industry.

Persistent Myths

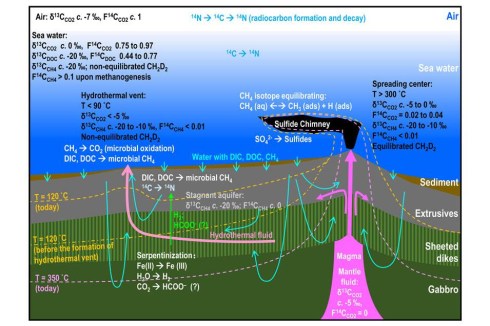

Then there is the pseudo-scientific claim that fossil fuels are infinite because the Earth’s core continually generates them. The Abiogenic Petroleum Origin theory is a fringe theory, sometimes revived from old Soviet science, and lacks credible evidence. See the image below.

Oil remains a finite, biologically sourced resource. Yet such myths persist, often supported by overly complex jargon designed to impress rather than inform.

The Dissonance of Daily Life

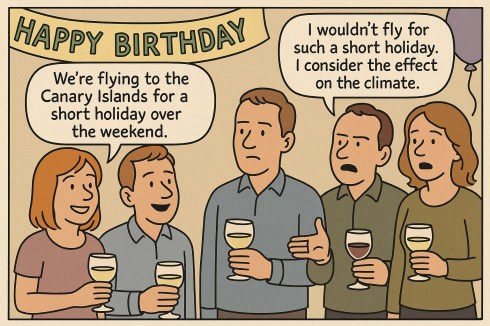

At a recent birthday party, a young couple casually mentioned flying to the Canary Islands for a weekend. When someone objected on climate grounds, they simply replied, “But the climate is so nice there!”

“Great climate on the Canary Islands”

This reflects a common divide among young people—some are deeply concerned about the climate, while many prioritize enjoying life now. And that’s understandable. The sustainability transition is hard because it challenges our comfort, habits, and current economic models.

The Cost of Transition

Companies now face regulatory pressure such as CSRD (Corporate Sustainability Reporting Directive), DPP (Digital Product Passport), ESG, and more, especially when selling in or to the European market. These shifts aren’t usually driven by passion but by obligation. Transitioning to sustainable business models comes at a cost—learning curves and overheads that don’t align with most corporations’ short-term, profit-driven strategies.

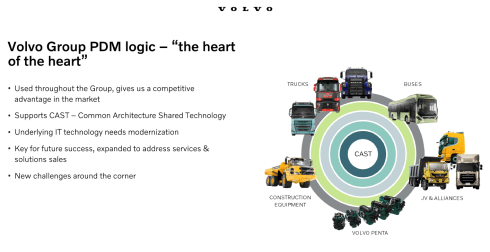

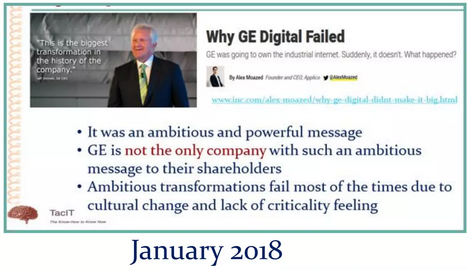

However, we have also seen how long-term visions can be crushed by shareholder demands:

- Xerox (1970s–1980s) pioneered the GUI, the mouse, and Ethernet, but failed to commercialize them. Apple and Microsoft reaped the benefits instead.

- General Electric under Jeff Immelt tried to pivot to renewables and tech-driven industries. Shareholders, frustrated by slow returns, dismantled many initiatives.

- Despite ambitious sustainability goals, Siemens faced similar investor pressure, leading to spin-offs like Siemens Energy, which includes the wind business Siemens Gamesa.

The lesson?

Transforming a business sustainably requires vision, compelling leadership, and patience, qualities often at odds with quarterly profit expectations. I explored these tensions again in my presentation at the PLM Roadmap/PDT Europe 2024 conference; read more here: Model-Based: The Digital Twin.

In smaller, closed-company sessions, I noticed discomfort; some attendees said, “We’re far from that vision.”

My response: “That’s okay. Sustainability is a generational journey, but it must start now.”

Signs of Hope

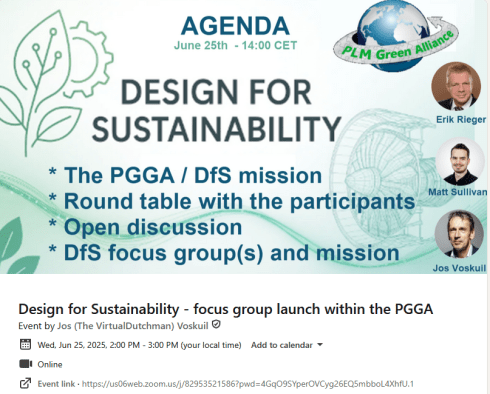

Now for the good news. In our recent PGGA (PLM Green Global Alliance) meeting, we asked: “Are we tired?” Surprisingly, the mood was optimistic.

Yes, some companies are downscaling their green initiatives or engaging in superficial greenwashing. But other developments give hope:

- China is now the global leader in clean energy investments, responsible for ~37% of the world’s total. In 2023 alone, it installed over 216 GW of solar PV—more than the rest of the world combined—and leads in wind power too. With over 1,400 GW of renewable capacity, China demonstrates that a centralized strategy can overcome investor hesitation.

- Long-term-focused companies like Iberdrola (Spain), Ørsted (Denmark), Tesla (US), BYD, and CATL (China) continue to invest heavily in renewables, EVs, and batteries, all critical to our shared future.

A Call to Engineers: Design for Sustainability

We may be small at the PLM Green Global Alliance, but we’re committed to educating and supporting the Product Lifecycle Management (PLM) community on sustainability.

That’s why I’m excited to announce the launch of our Design for Sustainability initiative on June 25th.

Led by Eric Rieger and Matthew Sullivan, this initiative will bring together engineers to collaborate and explore sustainable design practices. Whether or not you can attend live, we encourage everyone to engage with the recording afterward.

Conclusion

Sustainability might not dominate headlines today. In fact, there’s a rising tide of misinformation, offering people a “convenient truth” that avoids hard choices. But our work remains urgent. Building a livable planet for future generations requires long-term vision and commitment, even when it is difficult or unpopular.

So, are you tired—or ready to shape the future?

Due to other activities, I could not immediately share the second part of the review related to the PLM Roadmap / PDT Europe conference, held on 23-24 October in Gothenburg. You can read my first post, mainly about Day 1, here: The weekend after PLM Roadmap/PDT Europe 2024.

There were several interesting sessions that I will not mention here, as I want to focus on forward-looking topics, a mix of (federated) data-driven PLM environments and the applicability of AI, while staying around 1500 words.

R-evolutionizing PLM and ERP and Heliple

Cristina Paniagua from the Luleå University of Technology closed the first day of the conference, giving us food for thought to discuss over dinner. Her session, describing the Arrowhead fPVN project, fit nicely with the concepts of the Federated PLM Heliple project, presented by Erik Herzog on Day 2.

They are both research projects related to the future state of a digital enterprise. Therefore, it makes sense to treat them together.

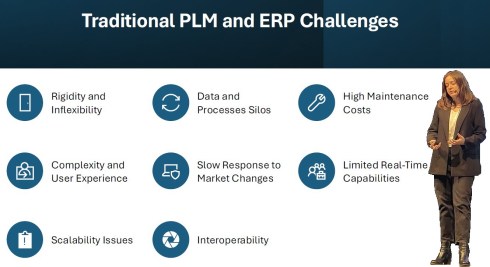

Cristina’s session started by sharing the challenges of traditional PLM and ERP systems:

These statements align with the drivers of the Heliple project. The PLM and ERP systems—Systems of Record—provide baselines and traceability. However, Systems of Record have not historically been designed to support real-time collaboration or to create an attractive user experience.

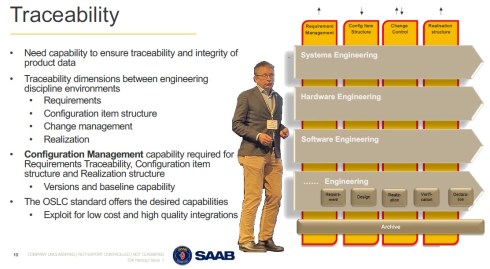

The Heliple project focuses on connecting various modules—the horizontal bars—for systems engineering, hardware engineering, etc., as real-time collaboration environments that can be highly customized and replaceable if needed. The Heliple project explored the usage of OSLC to connect these modules, the Systems of Engagement, with the Systems of Record.

By using Lynxwork as a low-code wrapper to develop the OSLC connections and map them to the needed business scenarios, the team concluded that this approach is affordable for businesses.
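OSLC builds on linked-data principles: each artifact is a resource identified by a URI, and a link is a typed relation between two URIs, so a System of Engagement can point to an item in a System of Record without copying it. The sketch below illustrates only that principle in plain Python, storing links as subject, predicate, target triples. The URIs and the `implementedBy` link name are illustrative assumptions; this is neither the Heliple or Lynxwork implementation nor an OSLC client API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Link:
    """A typed link between two resources, each identified by its URI."""
    subject: str    # URI of the source resource, e.g. a requirement
    predicate: str  # link type (illustrative name, not a vetted OSLC term)
    target: str     # URI of the target resource, e.g. a part


@dataclass
class LinkStore:
    links: set = field(default_factory=set)

    def add(self, subject: str, predicate: str, target: str) -> None:
        self.links.add(Link(subject, predicate, target))

    def targets(self, subject: str, predicate: str) -> list:
        """Follow links forward: what does this resource point to?"""
        return sorted(link.target for link in self.links
                      if link.subject == subject and link.predicate == predicate)

    def sources(self, target: str, predicate: str) -> list:
        """Follow links backward, e.g. for impact analysis of a change."""
        return sorted(link.subject for link in self.links
                      if link.target == target and link.predicate == predicate)


# Hypothetical URIs: a requirement in a systems-engineering tool (System of
# Engagement) linked to a part in PLM (System of Record). Neither is copied.
REQ = "https://se-tool.example.com/requirements/42"
PART = "https://plm.example.com/parts/1001"

store = LinkStore()
store.add(REQ, "implementedBy", PART)
print(store.targets(REQ, "implementedBy"))   # ['https://plm.example.com/parts/1001']
print(store.sources(PART, "implementedBy"))  # ['https://se-tool.example.com/requirements/42']
```

Following links backward from the part is the basis for impact analysis: before changing part 1001 in PLM, you can see which requirements in other tools depend on it.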

Now, the Heliple team aims to expand its research with industry-scale validation through the Demoiple project (validating that the Heliple-2 technology can be implemented and accredited in Saab Aeronautics’ operational IT), combined with the Nextiple project, which will investigate the role of heterogeneous information models/ontologies for heterogeneous analysis.

If you are interested in participating in Nextiple, don’t hesitate to contact Erik Herzog.

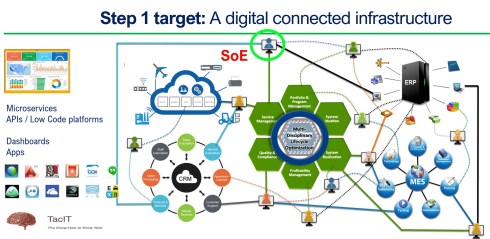

Cristina’s Arrowhead flexible Production Value Network (fPVN) project aims to provide autonomous and evolvable information interoperability through machine-interpretable content for fPVN stakeholders. In less academic words: building a digital, data-driven infrastructure.

The resulting technology is projected to impact manufacturing productivity and flexibility substantially.

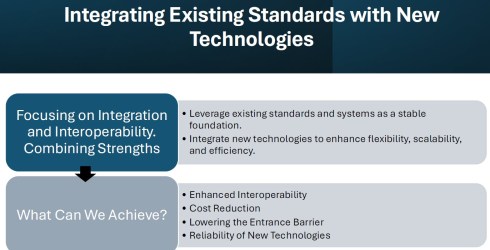

The exciting starting point of the Arrowhead project is that it wants to use existing standards and systems as a foundation and, on top of that, create a business- and user-oriented layer. This layer uses modern technologies such as micro-services to support real-time processing, and semantic technologies, ontologies, system modeling, and AI for data translation and learning. It is a much broader and more ambitious scope than the Heliple project.
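The idea behind machine-interpretable content can be illustrated with a much simpler mechanism than the ontologies Arrowhead works with: each system maps its local terms once to a shared vocabulary, and translation between any two systems goes through that shared vocabulary. A minimal sketch with plain dictionaries; the vocabularies and the `ex:` terms are hypothetical.

```python
# Hypothetical local vocabularies, each mapped once to shared ontology terms.
PLM_TERMS = {"PartNo": "ex:identifier", "Wt_kg": "ex:massKg", "Lifecycle": "ex:status"}
ERP_TERMS = {"MaterialId": "ex:identifier", "GrossWeight": "ex:massKg", "State": "ex:status"}


def translate(record: dict, source_terms: dict, target_terms: dict):
    """Translate a record between two vocabularies via the shared terms.

    Returns the translated record and the source keys that could not be
    mapped, so gaps are reported instead of silently dropped.
    """
    shared_to_target = {shared: local for local, shared in target_terms.items()}
    translated, unmapped = {}, []
    for key, value in record.items():
        shared = source_terms.get(key)
        if shared in shared_to_target:
            translated[shared_to_target[shared]] = value
        else:
            unmapped.append(key)
    return translated, unmapped


plm_record = {"PartNo": "A-1001", "Wt_kg": 2.5, "Colour": "red"}
print(translate(plm_record, PLM_TERMS, ERP_TERMS))
# ({'MaterialId': 'A-1001', 'GrossWeight': 2.5}, ['Colour'])
```

With N systems, this needs N mappings to the shared vocabulary instead of up to N(N-1) pairwise translators, and unmapped attributes are reported rather than silently lost.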

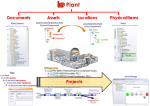

I believe that in our PLM domain, this resonates with current discussions you will find on LinkedIn, too. Oleg Shilovitsky, Dr. Yousef Hooshmand, Prof. Dr. Jörg W. Fischer, and Martin Eigner are a few of the people steering these discussions. I consider it a perfect match for one of the images I shared about the future of the digital enterprise.

Potentially, there are five platforms with their own internal ways of working, a mix of systems of record and systems of engagement, supported by an overlay of several Systems of Engagement environments.

I previously described these dedicated environments, e.g., OpenBOM, Colab, Partful, and Authentise. These solutions could also be dedicated apps supporting a specific ecosystem role.

See below my artist’s impression of how a Service Engineer would work in their app, connected to CRM, PLM, and ERP platform datasets:

The exciting part of the Arrowhead fPVN project is that it wants to explore the interactions between systems and user roles based on existing mature standards instead of leaving the connections to software developers.

Cristina mentioned some of these standards, shown below:

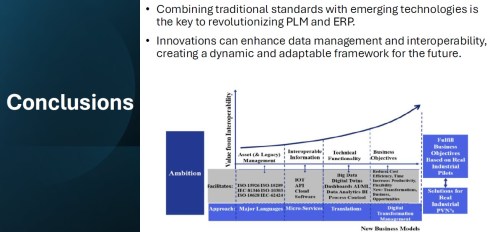

I greatly support this approach because, historically, much knowledge and effort have been put into developing standards that support interoperability. Maybe not in real time, but the knowledge embedded in these standards will speed up their broader use. Therefore, I concur with the concluding slide:

A final comment: Industrial users must push for these standards if they want to avoid future vendor lock-in. Vendors will do what the majority of their customers ask for, but they will also keep their customers’ data in proprietary formats to prevent them from switching to another system.

Accelerated Product Development Enabled by Digitalization

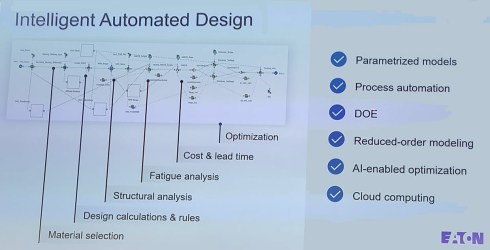

The keynote session on Day 2, delivered by Uyiosa Abusomwan, Ph.D., Senior Global Technology Manager – Digital Engineering at Eaton, was a visionary story about the future of engineering.

With its broad range of products, Eaton is exploring new, innovative ways to accelerate product design by modeling the design process and applying AI to narrow design decisions and customer-specific engineering work. The picture below shows the areas of attention needed to model the design processes. Uyiosa mentioned that significant benefits have already been achieved.

Together with generative design, Eaton works towards modern digital engineering processes built on models and knowledge. His session complemented the Heliple and Arrowhead story: such a contemporary design engineering environment must be data-driven and built upon open PLM and software components to make full use of AI and automation.

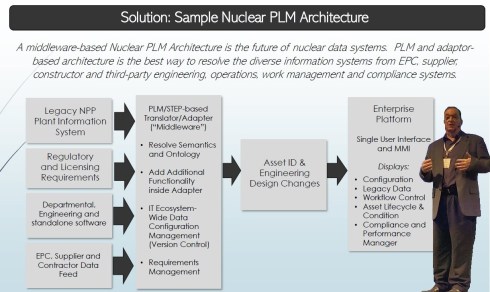

“Next Gen” Life Cycle Management in Next-Gen Nuclear Power and LTO Legacy Plants

Kent Freeland’s presentation was a trip down memory lane for me when he discussed the issues with the Long Term Operation (LTO) of legacy nuclear plants.

I spent several years at Ringhals (Sweden) discussing and piloting the setup of a PLM front-end next to the MRO (Maintenance Repair Overhaul) system. Nuclear plants developed in the sixties needed a longer lifecycle than anticipated, which required access to the right design and operational data; maintenance and upgrade changes in the plant needed to be planned and controlled. However, the design data is often lacking; it resides at the EPC contractor or has been stored in a document management system with limited retrieval capabilities.

See also my 2019 post: How PLM, ALM, and BIM converge thanks to the digital twin.

Kent described the same challenges I experienced (we must have worked in parallel universes) and concluded that, for the future, we need a digitally connected infrastructure for both plant design and maintenance artifacts, as envisioned below:

The solution reminded me of a lecture I saw at the PI PLMx 2019 conference, where the Swedish ESS facility demonstrated its Asset Lifecycle Data Management solution based on the 3DEXPERIENCE platform.

You can still find the presentation here: Henrik Lindblad Ola Nanzell ESS – Enabling Predictive Maintenance Through PLM & IIOT.

Kent also focused on the relevant standards to support a “Single Source of Truth” concept. After all the federated PLM discussions, I would go for:

“The nearest source of truth and a single source of Change”

as I believe this makes more sense in a digitally connected enterprise.
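One way to read “the nearest source of truth and a single source of change” is sketched below under hypothetical names: every attribute has exactly one owning system that may change it, while other systems read from a local copy that is updated when the owner changes the value. In a real federated environment, the propagation would run through APIs or events between systems; here it is a simple in-process loop.

```python
class FederatedItem:
    """An item whose attributes are spread over several systems (hypothetical).

    Each attribute has exactly one owning system, the single source of change.
    Other systems read from their own local copy, the nearest source of truth,
    which is updated whenever the owner changes a value.
    """

    def __init__(self, owners: dict):
        self.owners = owners    # attribute name -> owning system
        self.values = {}        # latest values, as set by the owners
        self.copies = {}        # system name -> local read copy

    def connect(self, system: str) -> dict:
        """Give a system its local copy, initialised with current values."""
        self.copies[system] = dict(self.values)
        return self.copies[system]

    def change(self, system: str, attribute: str, value) -> None:
        owner = self.owners[attribute]
        if system != owner:
            raise PermissionError(f"{attribute!r} can only be changed in {owner}")
        self.values[attribute] = value
        for copy in self.copies.values():  # propagate instead of re-keying
            copy[attribute] = value


item = FederatedItem({"mass_kg": "PLM", "unit_cost": "ERP"})
erp_view = item.connect("ERP")
item.change("PLM", "mass_kg", 2.5)
print(erp_view["mass_kg"])  # 2.5, read locally in ERP
try:
    item.change("ERP", "mass_kg", 3.0)
except PermissionError as err:
    print(err)              # 'mass_kg' can only be changed in PLM
```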

Why do you need to be SMART when contracting for information?

Rob Bodington’s presentation was complementary to Kent Freeland’s presentation. Rob, a technical fellow at Eurostep, described the challenge of information acquisition for large assets that require access to the correct data once they are in operation. The large asset could be a nuclear plant or an aircraft carrier.

In the ideal world, the asset owner wants to have a digital twin of the asset fed by different data sources through a digital thread. Of course, this environment will only be reliable when accurate data is used and presented.

Getting accurate data starts with the information acquisition process, and Rob explained that this needed to be done SMARTly – see the image below:

Rob zoomed in on the SMART keywords and on the challenges of using the various standards to make the information SMARTly accessible, such as the ISO 10303-239 (PLCS) standard, the CFIHOS exchange standard, and more. And then there is the ISO 8000 standard for data quality.

Rob believes that AI might be the silver bullet, as it could help to understand the quality, ontology, and context of the data and even improve contracting by generating data clauses for contracts.

And there was a lot of AI ….

There was a dazzling presentation from Gary Langridge, engineering manager at Ocado, explaining their Ocado Smart Platform (OSP), which leverages AI, robotics, and automation to tackle the challenges of online grocery and allow their clients to excel in performance and customer responsiveness.

There was a significant AI component in his presentation, and if you are tired of reading, watch this video.

But there was more AI: of the 25 sessions at this conference, 19 mentioned the potential or usage of AI somewhere in their talk, which is 76%!

There was a dedicated closing panel discussion related to the real business value of Artificial Intelligence in the PLM domain, moderated by Peter Bilello and answered by selected speakers from the conference, Sandeep Natu (CIMdata), Lars Fossum (SAP), Diana Goenage (Dassault Systemes) and Uyiosa Abusomwan (Eaton).

The discussion was realistic and helpful for the audience. It is clear that to reap the benefits, companies must explore the technology and use it to create valuable business scenarios. One could argue that many AI tools are already available, but the challenge remains that they have to run on reliable data. The data foundation is crucial for a successful outcome.

An interesting point in the discussion was the statement from Diane Goenage, who repeatedly warned that using LLM-based solutions has an environmental impact due to the amount of energy they consume.

We have a similar debate in the Netherlands: do we want the wind energy consumed by data centers (the big tech companies with a minimal workforce in the Netherlands), or should Dutch citizens benefit from renewable energy resources?

Conclusion

There were even more interesting presentations during these two days, and you might have noticed that I did not promote my own content. This is because I have already reached 1600 words, but also because I want to spend more time on that content separately.

My presentation was about PLM and sustainability, a topic often covered at this conference. Unfortunately, only 25% of the presentations touched on sustainability, and the AI hype overshadowed the topic.

Hopefully, it is not a sign of the times.

Two weeks ago, this post from Ilan Madjar drew my attention. He pointed to a demo video explaining how to support Smart Part Numbering on the 3DEXPERIENCE platform. You can watch the recording here.

I was surprised that Smart Part Numbering is still used, and if you read through the comments on the post, you see the various arguments that exist.

- “Many mid-market customers are still using it”

me: I think it is not only the mid-market; however, the argument is no reason to keep it alive.

- “The problem remains in the customer’s desire (or need or capability) for change.”

me: This is the path of least resistance.

- “User resistance to change. Training and management sponsorship has proven to be not enough.”

me: Probably because discussions are feature-oriented, not starting from the business benefits.

- “Cost and effort: rolling this change through downstream systems. The cost and effort of changing PN in PLM, ERP, MES, etc., are high. Trying to phase it out across systems is a recipe for a disaster.”

me: The hidden costs of maintaining Smart Numbers inside an organization are high and invisible, reducing the company’s competitiveness.

- “Existing users often complain that it takes seconds to minutes more for unintelligent PN vs. using intelligent PN.”

me: If we talk about a disconnected user without access to information, it could be true if the number of Smart Numbers to comprehend is low.

There were many other arguments for why you should not change. It reminded me of the image below:

Smart Numbers related to the Coordinated approach

Smart Part Numbers are characteristic of best practices from the past, when people worked in different systems and moved information from one system to another manually.

For example, re-entering the Bill of Materials from the PDM system into the ERP system, or attaching drawings to materials/parts in the ERP system. In the latter case, the filename often reflects the material or part number.

The problems with the coordinated, smart numbering approach are:

- New people in the organization need to learn the meaning of the numbering scheme. This learning process reduces the flexibility of an organization and increases the risk of making errors.

- Typos go unnoticed when numbers are transferred from one system to another and only get noticed late, when fixing the error might cost 10 to 100 times more.

- The argument that people will understand the meaning of a part is partly valid. A person can make a good guess about the part based on the smart part number; however, the details can differ unless you work every day with the same small range of parts.

- Smart Numbers create a legacy. After mergers and acquisitions, there will be multiple part number schemes. Do you want to renumber old parts, meaning non-value-added, risky activities? Or do you want to continue with various numbering schemes, meaning people need to learn more than one numbering scheme, with a higher entry barrier and risk of errors?

There were and still are many advanced smart numbering systems.

In one of my first PDM implementations in the Netherlands, I learned about the 12NC code system from Philips, introduced at Philips in 1963 and used to identify complete products, documentation, and bare components, down to the finest detail. Today, many companies in the Philips family (suppliers or offspring) still use this numbering system, illustrating that it is not only small and medium enterprises that are reluctant to change their numbering system.

The costs of working with Smart Part Numbers are often unnoticed as they are considered a given.

From Coordinated to Connected

Digital transformation in the PLM domain means moving from coordinated practices toward practices that benefit from connected technology. In many of my blog posts, you can read why organizations need to learn to work in a connected manner. They need it both for their business sustainability and to deal with sustainability-related regulations in the short term.

GHG reporting, ESG reporting, material compliance, and the DPP are all examples of the outside world pushing companies to work in a connected manner. Besides the regulations, if you are in a competitive business, you must be more efficient, innovative, and faster than your competitors.

In a connected environment, relations between artifacts (datasets) are maintained in an IT infrastructure without requiring manual data transformations and people to process the data. In a connected enterprise, this non-value-added work will be reduced.

How to move away from Smart Numbering systems?

Several comments related to the Smart Numbering discussion mentioned that changing the numbering system is too costly and risky to implement and that no business case exists to support it. This statement only makes sense if you want your business to become obsolete slowly. Modern best practices based on digitization should be introduced as fast as possible, allowing companies to learn and adapt. There is no need for a big bang.

Start by mapping and prioritizing the value streams in your company. Where do we see the most significant business benefits related to handling cost, speed, and quality?

Note: It is not necessary to start with engineering, even if they are the creators of the data. Start, for example, with the xBOM flow, where the xBOM can be a concept BOM, the engineering BOM, the manufacturing BOM, and more. Building this connected data flow is an investment for every department; do not start from the systems.
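Because every BOM view references the same part objects instead of retyped numbers, checks across views become simple arithmetic on IDs and quantities. A minimal sketch, assuming flattened BOMs (total quantity per part) and hypothetical part IDs; it does not describe how any particular PLM or ERP system reconciles BOMs.

```python
from collections import Counter

# Hypothetical part IDs. Both views reference the same part objects, so no
# number is retyped between engineering and manufacturing. BOMs are
# flattened to total quantities per part to keep the sketch short.
ebom = Counter({"P-100": 1, "P-200": 4, "P-300": 2})
mbom = Counter({"P-100": 1, "P-200": 4, "GLUE-01": 1})  # adds a process material


def reconcile(ebom: Counter, mbom: Counter):
    """Compare an engineering BOM with a manufacturing BOM.

    Returns the engineering quantities not (fully) consumed in manufacturing
    and the items that exist only in the manufacturing view.
    """
    not_consumed = ebom - mbom  # Counter subtraction keeps positive counts only
    mfg_only = Counter({part: qty for part, qty in mbom.items() if part not in ebom})
    return not_consumed, mfg_only


print(reconcile(ebom, mbom))
# (Counter({'P-300': 2}), Counter({'GLUE-01': 1}))
```

Here P-300 is designed but never consumed in manufacturing, and GLUE-01 exists only in the manufacturing view; such discrepancies easily go unnoticed when BOMs are re-entered manually.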

- Next point: Do not rename or rework legacy data. These activities do not add value; they can only create problems. Instead, build new process definitions that do not depend on the smartness of the number.

Make sure these objects have, besides the part number, the right properties, the right status, and the right connections. In other words, create a connected digital thread, first internally in your company and next with your ecosystem (OEMs, suppliers, vendors).

- Next point: Give newly created artifacts a guaranteed unique ID independent of others. Each artifact has its status, properties and context. In this step, it is time to break any 1 : 1 relation between a physical part and a CAD-part or drawing. If a document gets revised, it gets a new version, but the version change should not always lead to a part number change. You can find many discussions on why to decouple parts and documents and the flexibility it provides.

- Next point: New IDs are not necessarily generated in a single system. The idea of a single source of truth is outdated. Build your infrastructure upon existing standards if possible. For example, the UID of the Digital Product Passport will be based on the ISO/IEC 15459 standard, similar to the unique identifiers for retail products managed by GS1. Or, probably closer to home, look into your computer’s registry, and you will discover many software components with a unique ID that specific programs or applications can use in a shared manner.
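The last steps can be sketched as follows, assuming random UUIDs as the identifier scheme. UUIDs are an illustrative choice, not the ISO/IEC 15459 identifiers the DPP will use, and the class and field names are hypothetical. Parts and documents each get their own ID generated without a central counter, a document revision changes only the document version, one drawing can describe several parts, and the legacy smart number survives as a plain property rather than being renumbered.

```python
import uuid
from dataclasses import dataclass, field


def new_id() -> str:
    # Random (version 4) UUIDs can be generated in any system without a
    # central counter; collisions are practically impossible.
    return str(uuid.uuid4())


@dataclass
class Document:
    title: str
    version: int = 1
    id: str = field(default_factory=new_id)

    def revise(self) -> None:
        self.version += 1  # new version, same document identity


@dataclass
class Part:
    name: str
    status: str = "In Work"
    properties: dict = field(default_factory=dict)
    documents: list = field(default_factory=list)  # many-to-many, not 1:1
    id: str = field(default_factory=new_id)


# One drawing documents two part variants; the legacy smart number is kept
# as a plain property instead of being renumbered (hypothetical values).
drawing = Document("Bracket family drawing")
left = Part("Bracket, left", properties={"legacy_number": "12-0042-L"})
right = Part("Bracket, right", properties={"legacy_number": "12-0042-R"})
for part in (left, right):
    part.documents.append(drawing)

left_id = left.id
drawing.revise()
print(drawing.version, left.id == left_id)  # 2 True
```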

When will it happen?

In January 2016, I wrote about “the impact of non-intelligent part numbers”, and surprisingly, almost 8 years later, we are still in the same situation.

I just read Oleg Shilovitsky’s post The Data Dilemma: Why Engineers and Manufacturing Companies Struggle to Find Time for Data Management where he mentions Legacy Systems and Processes, Overwhelming Workloads, Lack of (Data) Expertise, Short-Term Focus and Resource Constraints as inhibitors.

You probably all know the above cartoon. How can companies break out of this armor of habits? Will they be forced by competition or by regulations? What do you think?

Conclusion

Despite proven business benefits and insights, it remains challenging for companies to move toward modern, data-driven practices where Smart Number generators are no longer needed. When I talk one-on-one with individuals, they are convinced a change is necessary, yet they point to the “others”.

I wish you all a prosperous 2024 and the power to involve the “others”.

Again, a “The weekend after …” post related to my favorite event to which I have contributed since 2014.

My expectations were high this time, in particular because we would have serious discussions about connected digital threads and federated PLM.

More about these topics in my post next week, as not all content is available for sharing yet.

The conference was sold out this time, and during the breaks, you had to navigate through the crowd to find your networking opportunities. Also, the participation of the main PLM players as sponsors illustrated that everyone wanted to benefit from this opportunity to meet and learn from their industry peers.

Looking back to the conference, there were two noticeable streams.

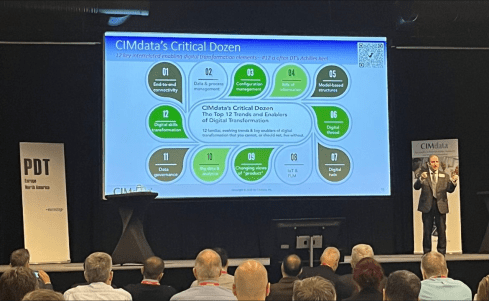

- One stream where people shared their current PLM experiences; the A&D action groups, moderated by CIMdata, are traditionally part of this stream. I will cover this part in this post.

- Another stream of forward-looking presentations related to standards, ontologies, and federated PLM, all with an AI flavor. I will cover this part in my next post(s).

The connection between all these sessions was the Digital Thread. The conference’s theme was: The Digital Thread in a Heterogeneous, Extended Enterprise Reality. Let’s start the review with the highlights from the first stream.

Digital Thread: Why Should We Care?

As usual, Peter Bilello from CIMdata kicked off the conference by setting the scene. Peter started by clarifying the two definitions of the Digital Thread.

- The first is a communication framework that allows a connected data flow and integrated view of an asset’s data (i.e., its Digital Twin) throughout its lifecycle across traditionally siloed functional perspectives. In my terminology, this is the connected digital thread.

- The second is a network of connected information sources around the product lifecycle supporting traceability and decision-making. In my terminology, this is the coordinated digital thread, the most straightforward digital thread to achieve.
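To make the coordinated digital thread more tangible, here is a minimal Python sketch, under my own assumptions: the thread is modeled as traceability links between records that stay in their own systems, and a query simply follows those links. The system names and record IDs are hypothetical. Nothing in it synchronizes data between systems; in a connected digital thread, the data itself would flow between the applications.

```python
from collections import defaultdict, deque

# A node is (system, record_id); the records themselves remain in their own systems.
links = defaultdict(list)


def link(a, b):
    """Store a bidirectional traceability link between two records."""
    links[a].append(b)
    links[b].append(a)


def trace(start):
    """Breadth-first walk returning every record reachable from `start`."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in links[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


# Hypothetical records in four systems.
requirement = ("ReqTool", "REQ-12")
part = ("PLM", "P-1001")
material = ("ERP", "MAT-1001")
field_case = ("Service", "CASE-77")

link(requirement, part)
link(part, material)
link(field_case, part)

# Starting from a field issue, find the related requirement and ERP material.
print(sorted(trace(field_case)))
# [('ERP', 'MAT-1001'), ('PLM', 'P-1001'), ('ReqTool', 'REQ-12'), ('Service', 'CASE-77')]
```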

Peter recommends starting the digital thread at the beginning of product conceptualization, creating an environment where one can analyze the performance of the product portfolio and of the product features and capabilities that need to be planned, or how they perform in the field.

In addition, when defining products, connect them with regulatory requirement databases, as these contain must-have requirements. I addressed this topic in my session too: besides the existing regulatory requirements, environmental regulations are expected to add requirements in the upcoming years, and it will be necessary to integrate them into your digital thread.

Digital Threads require data governance and are the basis for the various digital twins. Peter discussed the multiple applications of the digital twin, primarily a relation between a virtual asset and a physical asset, except in the early concept phase.

The digital thread is still in the early phase of implementation at companies. A CIMdata survey showed that companies still focus primarily on implementing traditional PDM capabilities, although as the image above shows, there is a growing interest in short-term digital twin/thread implementations.

People, Process & Technology: The Pillars of Digital Transformation Success

The second keynote was from Christine McMonagle, Director of Digital Engineering Systems at Textron Systems, a services and products supplier for the Aerospace and Defense industry. Christine leads the digital evolution at Textron Systems and nicely presented how a digital transformation should start from the people. Traditionally, this industry has enough budget at the OEM level, and therefore companies will not take a revolutionary approach when it comes to digital transformation.

Involving your people at all levels and making them understand the need for change is crucial. Change does not happen top-down. You must educate people and understand what is possible and achievable to change, in the right direction. One of her concluding slides highlights the main points.

In the Q&A after Christine’s session, there was an interesting question about the involvement of Human Resources (HR) in this project. The laughter said it all: as in most companies, HR does not focus on organizational change but on operational issues; the Human is considered a Resource.

Between the regular sessions, there were short sponsor sessions where Altium, Contact Software, Dassault Systemes, ESI, inensia, Modular Management, PTC, SAP, Share PLM and Sinequa could pitch their value offerings.

The Share PLM session, shortly after Christine’s presentation, was a nice continuation of the focus on people. I loved the Share PLM image to the left explaining why people do not engage with our dreams.

Learn how LEONI is achieving Digital Continuity in the Automotive Industry.

Tobias Bauer, head of Product Data Standardization at LEONI, talked about their FLOW project. FLOW is an acronym for Future Leoni Operating World. LEONI, well known in the automotive industry, produces cable and network solutions, including cable harnesses.

LEONI has recently gone through a serious financial crisis and needed restructuring, which always makes a “visionary” PLM project challenging. Tobias mentioned that after disappointing engagements with consultancy firms, they decided on a bottom-up approach to analyze existing processes using BPML. They agreed on a to-be state, fixing bottlenecks and streamlining the flow of information.

Tobias presented a smooth product data flow between their PLM system (PTC Windchill) and ERP (SAP S/4 HANA), clearly stating that the PLM system has become the controlled source of managing product changes.

Their key achievements reported so far were:

- faster BOM creation and routing (approx. 10x faster, from 2-3 days to ¼ day)

- better data consistency (fewer manual steps)

- complete traceability between the systems with PLM as the change management backbone.
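A quick check of the reported factor: going from 2-3 days to a quarter of a day is an 8x to 12x speed-up, so "approx. 10x" is a fair summary. The snippet below only does this arithmetic with the figures quoted above.

```python
from fractions import Fraction

before_days = (2, 3)         # reported duration range before the FLOW project
after_days = Fraction(1, 4)  # reported duration afterwards: a quarter of a day

speedups = [Fraction(d) / after_days for d in before_days]
print([int(s) for s in speedups])  # [8, 12]

midpoint = sum(speedups) / len(speedups)
print(int(midpoint))  # 10
```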

The last point I would call the coordinated Digital Thread. The image below shows their current IT landscape in a simplified manner.

This solution might seem obvious to neutral PLM academics or experts, but it is an achievement in an environment where SAP is implemented. The eBOM-mBOM discussion is one of the most frequently held discussions, sometimes a battle.

Often, companies start from their IT systems and let the vendors’ experts build integrations, instead of starting from the natural business flow of information.

Aerospace & Defense Action Group Outcomes

As usual, several Aerospace & Defense (A&D) action groups reported their progress during this conference. The A&D action groups are facilitated by CIMdata, and per topic, various OEMs and suppliers in the A&D industry study and analyze a particular topic, often inviting software vendors to demonstrate and discuss their capabilities with them.

Their activities and reports can be found on the A&D PLM Action page here. In the remainder of this post, I will briefly share the ones presented. For a real deep dive into the topics, I recommend finding the proceedings per topic on the A&D action page.

The Promise and Reality of the Digital Thread

James Roche from CIMdata presented insights from industry research on The Promise and Reality of the Digital Thread. A total of 90 respondents completed an in-depth survey about the status and implementation of digital thread concepts in their companies. It is clear that the digital thread is still in its early days in this industry, and it is mainly about the coordinated digital thread. The image below reflects the highlights of the survey.

A&D Industry Digital Twin and Digital Thread Standards

Robert Rencher from Boeing explained the progress of their Digital Twin/Digital Thread project, where they had investigated the applicable standards to support a Digital Twin/Digital Thread (Phase 4 out of 7 currently planned). The image below shows that various standards may apply depending on business perspectives.

Their current findings are:

- Digital twin standards overlap, which is most likely a function of standards bodies representing their respective standards as an ongoing development from a historical perspective.

- The limited availability of mature digital twin/thread standards requires greater attention by standards organizations.

- The concept of the digital twin continues to evolve. This dynamic will be a challenge to standards bodies.

- The digital twin and the digital thread are distinct aspects of digital transformation. The corresponding digital twin and digital thread standards will be distinctly different.

- Coordinating the development of the respective standards between the digital twin/thread is needed.

- The digital twin’s organization, definition, and enablement depend on data and information provided by the digital thread.

Roadmap for Enabling Global Collaboration

Robert Gutwein (Pratt & Whitney Canada) and Agnes Gourillon-Jandot (Safran Aircraft Engines) reported their progress on the Global Collaboration project. Collaboration is challenged as exchange methods can vary, as well as dealing with the validation of exchanged information and governing the exchange of information in the context of IP protection.

One of the focal points was to introduce an approach to define standardized supplier agreements that anticipate modern model-based exchanges and collaboration methods.

Robert & Agnes presented the 8-step guideline in terms specific to the aerospace industry, explicitly mentioning that the ISO 44001 standard is generic for all industries. An impression of the eight steps and sub-steps can be found below:

The 8-step approach will be supported by a 3rd-party Collaboration Management System (CMS app), which is recommended but not mandatory. When an interaction depends on a specific tool, it cannot become an ISO standard. The purpose of the methodology and app is to help participants ensure that the collaboration between stakeholders includes all the necessary steps and people.

Model-based OEM/Supplier Collaboration Needs in Aviation Industry

Hartmut Hintze, working at Airbus Operations, presented the latest findings of the MBSE Data Interoperability working group and the model-based OEM/Supplier collaboration requirements and standards that PLM/MBSE solution providers will need to support in the future. This collaboration goes beyond sharing CAD models, as you can see from the supplier engagement framework below:

As there are no standards-based tools, their first focus was on methodologies for model and behavior exchanges based on use cases. The use cases are then used to verify the state-of-the-art capabilities of the various tools. At this moment, there is a focus on SysML v2 as a potential game-changer due to its new API support. As a relative novice in SysML, I cannot explain this topic in simpler words. I recommend that experts visit their presentations on the AD PAG publications page here.

Conclusions

The theme of the conference was the Digital Thread, and as you will discover, it is relevant for everyone. Learn to see the difference between the coordinated Digital Thread and the connected Digital Thread. This time, the post contained a lot of information about the Aerospace and Defense Action Groups (AD PAG), which are a fundamental part of this conference. The A&D industry has always led in advanced PLM concepts. However, more advanced concepts will come in my next post, touching on the connected Digital Thread in the context of federated PLM, and let’s not forget AI.

During May and June, I wrote a guest chapter for the next edition of John Stark’s book Product Lifecycle Management (Volume 2): The Devil is in the Details.

The book is considered a standard in the academic world when studying aspects of PLM.

The table of contents (see the link above) shows how broad PLM is in its full scope. I wrote about it recently: PLM is Complex (and we have to accept it?), and Roger Tempest and others are still fighting to get the role of PLM Professional recognized: Associate Yourself With Professional PLM.

To broaden the scope, John invited me to write a chapter about PLM and Sustainability, a current topic in many organizations. As sustainability is my dedicated topic in the PLM Global Green Alliance (PGGA) core team, I was happy to accept this challenge.

This activity is challenging because a chapter on a current topic might soon be outdated. For the same reason, I never wanted to write a PLM book, as I wrote in my 2014 post: Did you notice PLM is changing?

The book, with the additional chapter, will be available later this year. In this post, I want to share the topics I addressed in this chapter, as they may be relevant for your organization or personal interests. I also look forward to learning whether I missed any topics.

Introduction

The chapter starts by defining the context. PLM is considered a strategy supported by a connected IT infrastructure, and for the definition of sustainability, I refer to the relevant SDGs as described on our PGGA theme page: PLM and Sustainability.

Next, I discuss two major concepts inseparably connected with sustainability.

The Circular Economy

On a planet with limited resources and a still-growing consumption of raw materials, we need to follow the concepts of the circular economy in our businesses and lives. The circular economy section mainly addresses the hardware side of the butterfly diagram, as PLM practices have the most significant impact there.

The circular economy requires collaboration among various stakeholders, including businesses, governments and consumers. It involves rethinking production processes and establishing new consumption patterns. Policies and regulations will push for circular economy patterns, as seen in the following paragraphs.

Systems Thinking

A significant change in bringing products to the market will be the need to change how we look at our development processes. Historically, many of these processes were linear and focused only on time to market, cost and quality. Now, we also have to look into other dimensions, like environmental impact, usage and impact on the planet. As I wrote in the past: Systems Thinking – a must-have skill in the 21st century?

Systems Thinking is a cognitive approach that emphasizes understanding complex problems by considering interconnections, feedback loops, and emergent properties. It provides a holistic perspective and explores multiple viewpoints.

Systems Thinking guides problem-solving and decision-making and requires you to treat a solution with a mindset of a system interacting with other systems.

Regulations

More sustainable products and services will be driven primarily by existing and upcoming regulations. In this section, I refer to the success of the CFC (chlorofluorocarbon) emission reduction, which is slowly closing the hole in the ozone layer. Current regulations like WEEE, RoHS and REACH are already relevant for many companies, and complying with them is a good exercise for more stringent regulations related to carbon emissions and the upcoming Digital Product Passport.

Making regulatory compliance part of the concept phase ensures no late changes are needed to become compliant, saving time and costs. In addition, handling regulatory compliance as much as possible in a data-driven manner reduces the overhead required to prove it. Both topics are part of a PLM strategy.
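As an illustration of data-driven compliance, here is a minimal Python sketch of a RoHS screening on declared material data. The limits are the EU RoHS maximum concentration values by weight in homogeneous materials (0.01% for cadmium, 0.1% for the other nine restricted substances). The material declarations are hypothetical, and exemptions, supplier declaration formats and other regulations such as REACH are deliberately left out.

```python
# EU RoHS maximum concentration values by weight in homogeneous material,
# expressed as fractions. Exemptions are ignored in this sketch.
ROHS_LIMITS = {
    "lead": 0.001,
    "mercury": 0.001,
    "cadmium": 0.0001,
    "hexavalent chromium": 0.001,
    "PBB": 0.001,
    "PBDE": 0.001,
    "DEHP": 0.001,
    "BBP": 0.001,
    "DBP": 0.001,
    "DIBP": 0.001,
}


def rohs_violations(materials):
    """Return (material, substance, fraction) for every exceeded limit.

    `materials` maps a homogeneous material name to {substance: mass in grams},
    plus a "total" entry holding the material's total mass in grams.
    """
    findings = []
    for name, composition in materials.items():
        total = composition["total"]
        for substance, mass in composition.items():
            if substance == "total":
                continue
            limit = ROHS_LIMITS.get(substance)
            fraction = mass / total
            if limit is not None and fraction > limit:
                findings.append((name, substance, fraction))
    return findings


# Hypothetical material declarations for one part.
part_materials = {
    "solder joint": {"total": 2.0, "lead": 0.0015},        # 0.075% lead: below 0.1%
    "housing plastic": {"total": 150.0, "cadmium": 0.03},  # 0.02% cadmium: above 0.01%
}
findings = rohs_violations(part_materials)
print(findings)
```

Because the check runs on structured data instead of documents, it can be repeated automatically at every change, which is where the reduced compliance overhead comes from.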

In this context, see Lionel Grealou’s article 5 Brand Value Benefits at the Intersection of Sustainability and Product Compliance. The article has also been shared in our PGGA LinkedIn group.

Business

On the business side, the chapter explains the Greenhouse Gas Protocol: how companies will have to report their Scope 1 and Scope 2 emissions and, ultimately, their Scope 3 emissions. See the image below for the details.

GHG reporting will support companies, investors and consumers to decide where to prioritize and put their money.
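A minimal Python sketch of how emission records could be rolled up per scope. The scope split follows the GHG Protocol (Scope 1: direct emissions from owned or controlled sources; Scope 2: indirect emissions from purchased energy; Scope 3: all other indirect emissions in the value chain). The `EmissionRecord` structure and all figures are invented for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class EmissionRecord:
    source: str
    scope: int     # 1, 2 or 3, following the GHG Protocol split
    tco2e: float   # tonnes of CO2-equivalent


def totals_by_scope(records):
    """Sum emissions per scope; reject anything outside Scope 1-3."""
    totals = Counter()
    for record in records:
        if record.scope not in (1, 2, 3):
            raise ValueError(f"unknown scope {record.scope} for {record.source}")
        totals[record.scope] += record.tco2e
    return dict(sorted(totals.items()))


# Hypothetical inventory for one reporting year.
inventory = [
    EmissionRecord("gas-fired boilers", 1, 120.0),
    EmissionRecord("purchased electricity", 2, 300.0),
    EmissionRecord("purchased steel", 3, 2500.0),
    EmissionRecord("product use phase", 3, 4100.0),
]
print(totals_by_scope(inventory))  # {1: 120.0, 2: 300.0, 3: 6600.0}
```

In this invented inventory, Scope 3 dominates; purchased materials and the product use phase are exactly the areas where product design decisions made in PLM have influence.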

Ultimately, companies have to be profitable to survive in their business. The ESG framework is relevant in this context as it will allow investors to put their money not only based on short-term gains (as expected) but also on Environmental or Social parameters. There are a lot of discussions related to the ESG framework, as you might have read in Vincent de la Mar’s monthly newsletter, Sustainability & ESG Insights, which is also published in our PGGA group (link below).

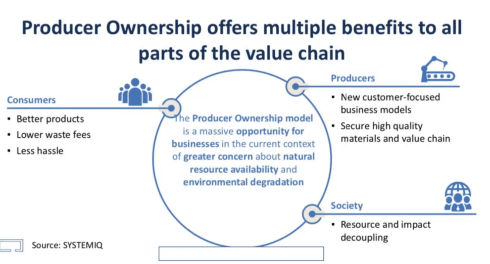

Besides ESG guidelines, there is also the drive by governments and consumers to push for a Product as a Service economy. Instead of owning products, consumers would pay for the usage of these products.

The concept is not new when considering lease cars, EV scooters, or streaming services like Spotify and Netflix. In the CIMdata PLM Roadmap/PDT Fall 2021 conference, we heard Kenn Webster explaining: In the future, you will own nothing & you will be happy.

Changing the business to a Product as a Service model is not done overnight. It requires repairable, upgradeable products. On the business side, it requires a connected ecosystem of all stakeholders: the manufacturer, the finance company, and the operating entities.

Digital Transformation

All the subjects discussed before require real-time reporting and analysis combined with access to compliance-related databases. More on this in the section related to Life Cycle Assessment. As I discussed last year at several conferences, a sustainability initiative relies on data-driven and model-based approaches, not only during the concept phase but also when manufacturing and operating (connected) products in the field. You can read the entire story here: Sustainability and Data-Driven PLM – the Perfect Storm.

Life Cycle Analysis

Special attention is given in this chapter to Life Cycle Analysis, which seems to be a popular topic among PLM vendors. Here, they can provide tools to make a lifecycle assessment, and you can read an impression of these tools in a guest blog from Roger L. Franz titled PLM Tools to Design for Sustainability – PLM Green Global Alliance.

However, Lifecycle Analysis is not that simple. The ISO 14040 framework describes how, with the right goal and scope in mind, you can perform an LCA, where Product Category Rules (PCRs) enable companies to compare their products with others.

PCRs include the description of the product category, the goal of the LCA, functional units, system boundaries, cut-off criteria, allocation rules, impact categories, information on the use phase, units, calculation procedures, requirements for data quality, and other information on the Life Cycle Inventory phase.
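To illustrate why PCR elements such as the functional unit and the cut-off criteria matter, here is a deliberately simplified Python sketch; it is not an ISO 14040/14044 implementation. It expresses a single impact category (climate change, in kg CO2e) per functional unit and drops inventory flows below a mass-based cut-off. The flows, emission factors, the 1% cut-off and the functional unit (one year of service) are assumptions for illustration; a real PCR defines these rules per product category.

```python
from dataclasses import dataclass


@dataclass
class Flow:
    name: str
    mass_kg: float         # mass of this input per product
    kg_co2e_per_kg: float  # assumed emission factor for the input


def impact_per_functional_unit(flows, functional_units, cutoff_share):
    """Climate-change impact in kg CO2e per functional unit.

    Flows whose mass share is below `cutoff_share` are left out, mimicking a
    mass-based cut-off criterion. `functional_units` is how many functional
    units one product delivers (here: years of service).
    """
    total_mass = sum(f.mass_kg for f in flows)
    included = [f for f in flows if f.mass_kg / total_mass >= cutoff_share]
    impact = sum(f.mass_kg * f.kg_co2e_per_kg for f in included)
    return impact / functional_units, [f.name for f in included]


# Two hypothetical designs, compared with the same functional unit and rules.
design_a = [Flow("steel", 10.0, 2.0), Flow("plastic", 2.0, 3.0), Flow("adhesive", 0.05, 5.0)]
design_b = [Flow("steel", 12.0, 2.0), Flow("plastic", 1.0, 3.0)]

a_per_year, a_included = impact_per_functional_unit(design_a, functional_units=10, cutoff_share=0.01)
b_per_year, b_included = impact_per_functional_unit(design_b, functional_units=15, cutoff_share=0.01)
print(a_per_year, a_included)  # 2.6 ['steel', 'plastic']
print(b_per_year, b_included)  # 1.8 ['steel', 'plastic']
```

Note that the adhesive is dropped by the cut-off even though it has the highest emission factor, and that design B only looks better because it delivers more years of service. Both effects depend entirely on rules the PCR has to fix in advance.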

So be aware there is more to do than installing a tool.

Digital Twin

This section describes the importance of implementing a digital twin for the design phase, allowing companies to develop, test and analyze their products and services virtually first. Trade-off studies on virtual products are much cheaper, and they are most efficient when done in a data-driven, model-based environment. In my terminology, such a collaboration environment might be considered a System of Engagement.

The second crucial digital twin mentioned is the digital twin of a product in operation, where performance can be monitored and usage can be optimized for minimal environmental impact. If a company can create a feedback loop between its products in the field and its product innovation platform, it can benchmark its design models and update the product behavior for better performance.

The manufacturing digital twin is also discussed in the context of environmental impact, as choosing the right processes and resources can significantly affect scope 3 emissions.

The chapter finishes with the story of a fictitious company, WePack, where we can follow the impact and implementation of the topics described in this chapter.

Conclusion

As I described in the introduction, the topic of PLM and Sustainability is relatively new and constantly evolving. What do you think? Did I miss any dimensions?

Feel free to contribute to our PLM Global Green Alliance LinkedIn group.

During my summer holiday in my “remote” office, I had the chance to digest what I recently read, heard, saw and discussed related to the future of PLM.

This year and last year, I noticed that many companies are discussing or working on their future PLM. It is time to make progress after COVID, particularly in digitization.

And as most companies are avoiding the risk of a “big bang”, they are exploring how they can improve their businesses in an evolutionary mode.

PLM is no longer a system

The most significant change I noticed in my discussions is the growing awareness that PLM is no longer covered by a single system.

More and more, PLM is considered a strategy, with which I fully agree. Therefore, implementing a PLM strategy requires holistic thinking and an infrastructure of different types of systems, where possible, digitally connected.

This trend is bad news for the PLM vendors as they continuously work on an end-to-end portfolio where every aspect of the PLM lifecycle is covered by one of their systems. The company’s IT department often supports the PLM vendors, as IT does not like a diverse landscape.

The main question is: “Every PLM Vendor has a rich portfolio on PowerPoint mentioning all phases of the product lifecycle.

However, are these capabilities implementable in an economical and user-friendly manner by actual companies, or should PLM players change their strategy?”

A question I will try to answer in this post.

The future of PLM

I have discussed several observed changes related to the effects of digitization in my recent blog posts, referencing others who have studied these topics in their organizations.

Some of the posts to refresh your memory are:

- Time to split PLM?

- People, Processes, Data and Tools?

- The rise and the fall of the BOM?

- The new side of PLM? Systems of Engagement!

These posts discussed the following points:

The As Is:

- The traditional PLM systems are examples of a System of Record, not designed to be end-user friendly but designed to have a traceable baseline for manufacturing, service and product compliance.

- The traditional PLM systems are tuned to a mechanical product introduction and release process in a coordinated manner, with a focus on BOM governance.

- The legacy information is stored in BOM structures and related specification files.

System of Record (ENOVIA image 2014)

The To Be:

- We are not talking about a PLM system anymore; a traditional System of Record will be digitally connected to different Systems of Engagement / Domains / Products, which have their own optimized environment for real-time collaboration.

- The BOM structures remain essential for the hardware part; however, overarching structures are needed to manage software and hardware releases for a product. These structures depend on connected datasets.

- To support digital twins at the various lifecycle stages (design, manufacturing, operations), product data needs to be based on and consumed by models.

- A future PLM infrastructure is hybrid, based on a Single Source of Change (SSoC) and an Authoritative Source of Truth (ASoT) instead of a Single Source of Truth (SSoT).
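A minimal Python sketch of the Single Source of Change idea, under simplifying assumptions of my own: every attribute has exactly one authoritative system where it may change, and all other systems may read it but not modify it. System and attribute names are hypothetical; this illustrates the principle, not a reference implementation of any vendor's federated PLM.

```python
class FederatedLandscape:
    """Each attribute has one authoritative system; every other system only reads."""

    def __init__(self, ownership):
        # ownership: attribute name -> the only system allowed to change it
        self.ownership = ownership
        self.values = {}

    def change(self, system, attribute, value):
        owner = self.ownership[attribute]
        if system != owner:
            raise PermissionError(
                f"{system} may not change '{attribute}'; its source of change is {owner}"
            )
        self.values[attribute] = value

    def read(self, attribute):
        # Any system may read; the answer names the authoritative source.
        return self.ownership[attribute], self.values.get(attribute)


landscape = FederatedLandscape({
    "mass_kg": "CAD",               # hypothetical: geometry-derived mass
    "requirement_text": "ReqTool",
    "unit_cost": "ERP",
})
landscape.change("CAD", "mass_kg", 1.2)
print(landscape.read("mass_kg"))  # ('CAD', 1.2)

try:
    landscape.change("ERP", "mass_kg", 1.5)
except PermissionError as err:
    print(err)  # ERP may not change 'mass_kg'; its source of change is CAD
```

Instead of one system holding all the truth, each piece of data has one place where it may change, which is the shift from a Single Source of Truth to a Single Source of Change.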

Various Systems of Engagement

Related podcasts

I listened again to two podcasts before writing this post, and I think they are a must-listen.

The Peer Check podcast from Colab episode 17 — The State of PLM in 2022 w/Oleg Shilovitsky. Adam and Oleg have a great discussion about the future of PLM.

Highlights: From System to Platform, the new normal. A Single Source of Truth doesn’t work anymore; it is about value streams. People in big companies fear making wrong PLM decisions, as a wrong decision is seen as a significant risk to their career.

There is no immediate need to change the current status quo.

The Share PLM Podcast – Episode 6: Revolutionizing PLM: Insights from Yousef Hooshmand. Yousef talked with Helena and me about proven ways to migrate an old PLM landscape to a modern PLM/Business landscape.

Highlights: The term Single Source of Change and the existing concepts of a hybrid PLM infrastructure based on his experiences at Daimler and now at NIO. Yousef stresses the importance of having the vision and the executive support to execute.

The time of “big bangs” is over, and Yousef provided links to relevant content, which you can find here in the comments.

In addition, I want to point to the experiences provided by Erik Herzog in the Heliple project using OSLC interfaces as the “glue” to connect (in my terminology) the Systems of Engagement and the Systems of Record.

If you are interested in these concepts and want to learn and discuss them with your peers, more can be learned during the upcoming CIMdata PLM Roadmap / PDT Europe conference.

In particular, look at the agenda for day two if you are interested in this topic.

The future for the PLM vendors

If you look at the messaging of the current PLM Vendors, none of them is talking about this federated concept.

Their messaging focuses more on the transition from on-premise to the cloud, providing their portfolio as a SaaS offering.

I was slightly disappointed when I saw this article on Engineering.com provided by Autodesk: 5 PLM Best Practices from the Experiences of Autodesk and Its Customers.

The article is tool-centric, with statements that make sense and could be written by any PLM Vendor. However, Best Practice #1 Central Source of Truth Improves Productivity and Collaboration was the message that struck me. Collaboration comes from connecting people, not from the Single Source of Truth utopia.

I don’t believe PLM Vendors have to be afraid of losing their installed base rapidly with companies using their PLM as a System of Record. There is so much legacy stored in these systems that might still be relevant. The existence of legacy information, often documents, makes a migration or swap to another vendor almost impossible and unaffordable.

The System of Record is incompatible with data-driven PLM capabilities

I would like to see clearer developments from the PLM Vendors toward a plug-and-play infrastructure for Systems of Engagement. Plug-and-play solutions could be based on a neutral partner collaboration hub like ShareAspace or the Systems of Engagement I discussed recently in my post and interview: The new side of PLM? Systems of Engagement!

Plug-and-play systems of engagement require interface standards, and PLM Vendors will only move in this direction if customers push for it; this is the chicken-and-egg discussion. And the current initiatives are probably too fragmented at the moment to arrive at a standard. However, don’t give up; keep building MVPs to learn and share.

Some people believe AI, with the examples we have seen with ChatGPT, will be the future direction without needing interface standards.

I am curious about your thoughts and experiences in that area and am willing to learn.

Talking about learning?

Besides reading posts and listening to podcasts, I also read an excellent book this summer. Martijn Dullaart, who often participates in PLM and CM discussions, decided to write a book based on the various discussions related to part (re-)identification (numbering, revisioning).

As Martijn writes at the start of the preface:

“I decided to write this book because, in my search for more knowledge on the topics of Part Re-Identification, Interchangeability, and Traceability, I could only find bits and pieces but not a comprehensive work that helps fundamentally understand these topics”.

I believe the book should become standard literature for engineering schools that deal with PLM and CM, for software vendors and implementers, and, last but not least, for companies that want to improve or clarify their change processes.

Martijn writes in an easily readable style and uses step-by-step examples to discuss the various options. There are even exercises at the end to use in a classroom or for your team to digest the content.

The good news is that the book is not about the past. You might also know Martijn for our joint discussion, The Future of Configuration Management, together with Maxime Gravel and Lisa Fenwick, on the impact of a model-based and data-driven approach to CM.

I plan to come back soon with a more dedicated discussion with Martijn. Meanwhile, start reading the book. If needed, get your free chapter by following the link at the bottom of this article.

I recommend buying the book as a paperback so you can navigate easily between the diagrams and the text.

Conclusion

The trend toward federated PLM is becoming increasingly visible as companies start implementing these concepts. The end of monolithic PLM is both a threat and an opportunity for the existing PLM Vendors. Will they work towards an open plug-and-play future, or will they keep their portfolios closed? What do you think?

In the past two weeks, I had several discussions with peers in the PLM domain about their experiences.

Some of them I met face-to-face again, after a long time, at the LiveWorx 2023 event. See my review of the event here: The Weekend after LiveWorx 2023.

And there were several interactions on LinkedIn, leading to an extended discussion thread (an example of a digital thread?) or a Zoom discussion (a so-called 2D conversation).

To complete the story, I also participated in two PLM podcasts from Share PLM, where we interviewed Johan Mikkelä (currently working at FLSmidth) and, in the second episode, Issam Darraj (presently working at ABB) about their PLM experiences. Less a discussion, more a dialogue, trying to grasp the non-documented aspects of PLM. We are looking for your feedback on these podcasts too.

All these discussions reconfirmed that, as a PLM practitioner, you need a broad skillset to address the business needs, translate them into people and process activities relevant to the industry and, ultimately, implement the proper collection of tools.

As a sneak preview of the podcast sessions, we asked both Johan and Issam about the importance of the tools. I will not disclose their answers here; you have to listen.

Let’s look at some of the discussions.

NOTE: Just before pushing the Publish button, Oleg Shilovitsky published this blog article PLM Project Failures and Unstoppable PLM Playbook. I will comment on his points at the end of this post. It is all part of the extensive discussion.

PLM, LinkedIn and complexity

The most popular discussions on LinkedIn are often related to the various types of Bills of Materials (eBOM, mBOM, sBOM), part numbering schemes (intelligent or not), version and revision management, and the famous FFF (Form, Fit, Function) discussions.

This post: PLM and Configuration Management Best Practices: Working with Revisions, from Andreas Lindenthal, was a recent example that triggered others to react.

I had some offline discussions on this topic last week, and I noticed that Frédéric Zeller had written a post titled PLM, LinkedIn and complexity, which starts with (quote):

I am stunned by the average level of posts on the PLM on LinkedIn.

I’m sorry, but in 2023 :

- Part Number management (significant, non-significant) should no longer be a problem.

- Revision management should no longer be a question.

- Configuration management theory should no longer be a question.

- Notions of EBOMs, MBOMs … should no longer be a question.

So why are there still problems on these topics?

You can see from the 40+ comments that this statement triggered many reactions, including mine. Apparently, these topics touch many people worldwide, and there is no simple, single answer to any of them. And there are so many other topics relevant to PLM.

Later, in a one-hour Zoom session with Frédéric, we discussed the importance of the right PLM data model.

I also wrote a series about the (traditional) PLM data model: The importance of a (PLM) data model.

Frédéric is more of a PLM architect; we even discussed the wording related to the EBOM and the MBOM, a topic I feel comfortable discussing after many years of seeing the attempts that failed and the dreams people had. And this was only one aspect of PLM.

You also find the discussion related to a PLM certification in the same thread. How would you certify a person as a PLM expert?

There are so many dimensions to PLM. Even more importantly, the PLM of 10-15 years ago (mostly a system discussion) is not the PLM of today (a strategy and an infrastructure).

This is a crucial difference. Learning to use a PLM tool and implement it is not the same as building a PLM strategy for your company. It is Tools, Process, People versus Process, People, Tools and Data.

Time for Methodology workshops?

I recently discussed with several peers what we could do to help people looking for best-practice discussions and lessons learned. There is a need, but how do we organize these sessions, given that we cannot expect this to be voluntary work?

In the past, I suggested that MarketKey, the organizer of the PI DX events, extend its theme workshops. For example, instead of a 45-minute focus group with a short introduction to a theme (e.g., eBOM-mBOM, PLM-ERP interfaces), these sessions could last at least half a day and be independent of the PLM vendors.

Apparently, it did not fit into the PI DX program; half a day would potentially stretch the duration of the conference, and more and more, we see two days of meetings as the maximum. Anything longer becomes difficult to justify, even if the content might have high value for the participants.

I observed a similar situation last year in combination with the PLM Roadmap/PDT Europe conference in Gothenburg. There, we had a half-day workshop before the conference, led by Erik Herzog (SAAB Aeronautics) and Judith Crockford (Eurostep), to discuss concepts related to federated PLM. Read more in this post: The week after PLM Roadmap/PDT Europe 2022.

It reminded me of an MDM workshop before the 2015 event, led by Marc Halpern from Gartner. Unfortunately, the federated PLM discussion remained a largely Swedish initiative, and the follow-up did not reach a wider audience.

And then there are the Aerospace and Defense PLM action groups, with discussions moderated by CIMdata. It is great that they published their findings (look here), although the best lessons are learned during the workshops themselves.

However, I also believe the A&D industry cannot be compared to a mid-market machinery manufacturing company. Therefore, these findings are helpful for a smaller audience only.

And here, I inserted a paragraph dedicated to Oleg’s recent post, PLM Project Failures and Unstoppable PLM Playbook – starting with a quote:

How to learn to implement PLM? I wrote about it in my earlier article – PLM playbook: how to learn about PLM? While I’m still happy to share my knowledge and experience, I think there is a bigger need in helping manufacturing companies and, especially PLM professionals, with the methodology of how to achieve the right goal when implementing PLM. Which made me think about the Unstoppable PLM playbook ©.

I found a similar passion for helping companies to adopt PLM while talking to Helena Gutierrez. Over many conversations during the last few months, we talked about how to help manufacturing companies with PLM adoption. The unstoppable PLM playbook is still a work in progress, but we want to start talking about it to get your feedback and start the conversation.

It is an excellent confirmation that there is a need for education and that the PLM education available on the Internet is not good enough.

As a former physics teacher, I do not believe in the Unstoppable PLM Playbook, even if it is a branded name. Many books are written by specific authors, giving their perspectives based on their (academic) knowledge.

Are they useful? I believe so, but only in the context of a classroom discussion where their applicability can be discussed.

Therefore, my question to vendor-neutral global players like CIMdata, Eurostep, Prostep, SharePLM, TCS and others: are you willing to pick up this request? Or are there other entities that I missed? Please leave your thoughts in the comments. I will be happy to assist in organizing these sessions.

There are many more future topics to discuss and document too.

- What about the potential split of a PLM infrastructure between Systems of Record & Systems of Engagement?

- What about the Digital Thread, an increasingly accepted theme in discussions? What is its standard definition?

- Is it traceability, as some vendors promote it, or is it the continuity of data, directly usable in various contexts (the DevOps approach)?

Who likes to discuss methodology?

When asking myself this question, I see the analogy with standards. So let’s look at the various players in the PLM domain – sorry for the immense generalization.

Strategic consultants: standards are essential, but spare me the details.

Vendors: standards limit the unique capabilities of my products.

Implementers: two types. Those who understand and use standards because they see the long-term benefits, and those who avoid standards because they introduce complexity.

Companies: they love standards if they can be implemented seamlessly.

Universities: they love to explore standards and help set them, even if they are not scalable.

Just replace standards with methodology, and you see the analogy.

We like to discuss the methodology.