Another year passed, and as usual, I took the time to look back. I always feel that things are going so much slower than expected. But that’s reality – there is always friction, and in particular, in the PLM domain, there is so much legacy we cannot leave behind.

It is better to plan what we can do in 2024 to be prepared for the next steps or, if lucky, even implement the next steps in progress.

In this post, I will discuss four significant areas of attention (AI – DATA – PEOPLE – SUSTAINABILITY) in alphabetical order, not prioritized.

Here are some initial thoughts. In the upcoming weeks I will elaborate further on them and look forward to your input.

AI (Artificial Intelligence)

Where would I be without talking about AI?

When you look at the image below, the Gartner Hype Cycle for AI in 2023, you see the potential coming on the left, with Generative AI at the peak.

Part of the hype comes from the availability of generative AI tools in the public domain, allowing everyone to play with them or use them. Some barriers are gone, but what does it mean? Many AI tools can make our lives easier, and there is for sure no threat if our job does not depend on standard practices.

AI and People

When I was teaching physics in high school, it was during the introduction of the pocket calculator, which replaced the slide rule. You needed to be skilled to use the slide rule; now there was a device that gave immediate answers. Was this bad for the pupils?

If you do not know the slide rule: its replacement by the pocket calculator was an example of new technology replacing old tools, providing more time for other details. Click on the image or read more about the slide rule here on Wiki.

Or would you today ask ChatGPT the question about the slide rule? Does generative AI mean the end of Wikipedia? Or does generative AI need the common knowledge of sites like Wikipedia?

AI can empower people in legacy environments when working with disconnected systems. AI will be a threat to people and companies that rely on people and processes to bring information together without adding value. These activities will disappear soon, and you must consider using this innovative approach.

During the recent holiday period, there was an interesting discussion about why companies are reluctant to change and implement better solution concepts. Initially launched by Alex Bruskin here on LinkedIn, the debate spilled over into the topic of TECHNICAL DEBT, well addressed here by Lionel Grealou.

Both articles and the related discussion in the comments are recommended to follow and learn.

AI and Sustainability

Similar to the introduction of Bitcoin using blockchain technology, some people are warning about the vast energy consumption required for training and interacting with Large Language Models (LLMs), as Sasha Luccioni explains in her interesting TED talk when addressing sustainability.

She proposes that tech companies be more transparent on this topic; the size and the type of the LLM matter, as the indicative picture below illustrates.

Carbon Emissions of LLMs compared

In addition, I found an interesting article discussing the pros and cons of AI related to Sustainability. The image below from the article Risks and Benefits of Large Language Models for the Environment illustrates nicely that we must start discussing and balancing these topics.

To conclude, in discussing AI related to sustainability, I see the significant advantage of using generative AI for ESG reporting.

ESG reporting is currently a very fragmented activity for organizations, based on (marketing) people’s goodwill, and these reports are not always evidence-based.

Data

The transformation from a coordinated, document-driven enterprise towards a hybrid coordinated/connected enterprise using a data-driven approach became increasingly visible in 2023. I expect this transformation to grow faster in 2024 – the momentum is here.

We saw last year that the discussions related to Federated PLM nicely converged at the PLM Roadmap / PDT Europe conference in Paris. I shared most of the topics in this post: The week after PLM Roadmap / PDT Europe 2023. In addition, there is now the Heliple Federated PLM LinkedIn group with regular discussions planned.

In addition, if you read here Jan Bosch’s reflection on 2023, he mentions (quote):

… 2023 was the year where many of the companies in the center became serious about the use of data. Whether it is historical analysis, high-frequency data collection during R&D, A/B testing or data pipelines, I notice a remarkable shift from a focus on software to a focus on data. The notion of data as a product, for now predominantly for internal use, is increasingly strong in the companies we work with

I am a big fan of Jan’s posting; coming from the software world, he describes the same issues that we have in the PLM world, except he does not carry the hardware legacy that much and, therefore, acts faster than us in the PLM world.

An interesting illustration of the slow pace toward a data-driven environment is the revival of the PLM and ERP integration discussion. Prof. Jörg Fischer and Martin Eigner contributed to the broader debate of a modern enterprise infrastructure, not based on systems (PLM, ERP, MES, …) but more on the flow of data through the lifecycle and an organization.

It is a great restart of the debate, showing we should care more about data semantics and the flow of information.

The articles (The Future of PLM & ERP: Bridging the Gap. An Epic Battle of Opinions! and Is part master in PLM and ERP equal or not), combined with the comments on these posts, are a must-read to follow this change towards a more connected flow of information.

While writing this post, Andreas Lindenthal expanded the discussion with his post: PLM and Configuration Management Best Practices: Part Traceability and Revisions. Again, thanks to data-driven approaches, there is expanding support for the entire product lifecycle. Product Lifecycle Management, Configuration Management and AIM (Asset Information Management) have come together.

PLM and CM are more and more overlapping, as I discussed some time ago with Martijn Dullaart, Maxime Gravel and Lisa Fenwick in The future of Configuration Management. This topic will be “hot” in 2024.

People

From the people’s perspective towards AI, DATA and SUSTAINABILITY, there is a noticeable divide between generations. Of course, for the sake of the article, I am generalizing, assuming most people do not like to change their habits or want to reprogram themselves.

Unfortunately, we have to adapt our skills as our environment is changing. Most of my generation was brought up with the single source of truth idea, documented and supported by scientific papers.

In my terminology, information processing takes place in our head by combining all the information we learned or collected through documents/books/newspapers – the coordinated approach.

For people living in this mindset, AI can become a significant threat, as their brain is no longer needed to make a judgment, and they are not used to differentiating between facts and fake news as they were never trained to do so.

The same is valid for practices like the model-based approach, working data-centric, or considering sustainability. It is not in the DNA of the older generations and, therefore, hard to change.

The older generation is mostly part of an organization’s higher management, so we are returning to the technical debt discussion.

Later generations that grew up as digital natives are used to almost real-time interaction, and when applied consistently in a digital enterprise, people will benefit from the information available to them in a rich context – in my terminology – the connected approach.

AI is a blessing for people living in this mindset as they do not need to use old-fashioned methods to acquire information.

“Let ChatGPT write my essay.”

However, their challenge could be what I would call “processing time”. Just because data is available does not necessarily mean it is the correct information. For that reason, it remains important to spend time digesting the impact of the information you are reading – don’t click “Like” based on the title; read the full article and then decide.

Experience is what you get, when you don’t get what you expect.

Meaning: you only become experienced if you learn from failures.

Sustainability

Unfortunately, sustainability is not only the last topic in alphabetical order: when you look at the image below, you see that discussions related to sustainability are in a slight decline at C-level at the moment.

I share this observation in my engagements when discussing sustainability with the companies I interact with.

The PLM software and services providers are all on a trajectory of providing tools and an infrastructure to support a transition to a more circular economy and better traceability of materials and carbon emissions.

In the PLM Global Green Alliance, we talked with Aras, Autodesk, Dassault Systèmes, PTC, SAP, Sustaira, and TTPSC (Green PLM), with more to come in 2024. The solution offerings in the PLM domain are available to start; now it is up to the people and processes.

For sure, AI tools will help companies get a better understanding of their sustainability efforts. As mentioned before, AI could help companies understand their environmental impact and build more accurate ESG reports.

Next, being DATA-driven will be crucial. As discussed during the latest PLM Roadmap/PDT Europe conference: The Need for a Governance Digital Thread.

And regarding PEOPLE, the good news is that younger generations want to take care of their future. They are in a position to choose the company to work for or influence companies by their consumer behavior. Unfortunately, climate disasters will remind us continuously in the upcoming decades that we are in a critical phase.

With the PLM Global Green Alliance, we strive to bring people together with a PLM mindset, sharing news and information on how to move forward to a sustainable future.

Mark Reisig (CIMdata – moderator for Sustainability & Energy) and Patrice Quencez (CIMPA – moderator for the Circular Economy) joined the PGGA last year and you will experience their inputs this year.

Conclusion

As you can see from this long post, there is so much to learn. The topics described are all current, and each topic requires education and experience (successes and failures), combined with an understanding of the technology concepts. Make sure you consider all of them, as focusing on a single topic will not make you move forward faster – they are all related. Please share your experiences this year. Happy New Year of Learning.

Two weeks ago, this post from Ilan Madjar drew my attention. He pointed to a demo movie, explaining how to support Smart Part Numbering on the 3DEXPERIENCE platform. You can watch the recording here.

I was surprised that Smart Part Numbering is still used, and if you read through the comments on the post, you see the various arguments that exist.

- “Many mid-market customers are still using it”

me: I think it is not only the mid-market – however, the argument is no reason to keep it alive.

- “The problem remains in the customer’s desire (or need or capability) for change.”

me: This is part of the lowest resistance.

- “User resistance to change. Training and management sponsorship has proven to be not enough.”

me: probably because discussions are feature-oriented, not starting from the business benefits.

- “Cost and effort- rolling this change through downstream systems. The cost and effort of changing PN in PLM,ERP,MES, etc., are high. Trying to phase it out across systems is a recipe for a disaster.”

me: The hidden costs of maintaining Smart Numbers inside an organization are high and invisible, reducing the company’s competitiveness.

- “Existing users often complain that it takes seconds to minutes more for unintelligent PN vs. using intelligent PN.”

me: If we talk about a disconnected user without access to information, it could be true if the number of Smart Numbers to comprehend is low.

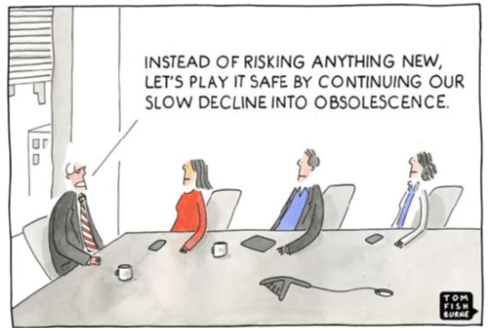

There were many other arguments for why you should not change. It reminded me of the image below:

Smart Numbers related to the Coordinated approach

Smart Part Numbers are a characteristic of best practices from the past. When people worked in different systems, information was moved from one system to another manually.

For example, by re-entering the Bill of Materials from the PDM system into the ERP system or by attaching drawings to materials/parts in the ERP system. In the latter case, the filename often reflects the material or part number.

The problems with the coordinated, smart numbering approach are:

- New people in the organization need to learn the meaning of the numbering scheme. This learning process reduces the flexibility of an organization and increases the risk of making errors.

- Typos go unnoticed when transferring numbers from one system to another and only get noticed late, when the cost of fixing the error might be 10- to 100-fold.

- The argument that people will understand the meaning of a part is partly valid. A person can have a good guess of the part based on the smart part number; however, the details can be different unless you work every day with the same and small range of parts.

- Smart Numbers created a legacy. After Mergers and Acquisitions, there will be multiple part number schemes. Do you want to renumber old parts, meaning non-value-added, risky activities? Do you want to continue with various numbering schemes, meaning people need to learn more than one numbering schema – a higher entry barrier and risk of errors?
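To make the problems listed above concrete, here is a minimal Python sketch. The numbering scheme (`<family>-<voltage>-<sequence>`), the pattern `SCHEME_A`, and the sample part numbers are all invented for illustration and are not taken from any real company. The sketch shows that a mistyped digit still decodes to a plausible-looking part, and that a number from an acquired company with another scheme cannot be decoded at all.

```python
import re
from typing import Optional

# Hypothetical scheme, invented for illustration only:
# <family>-<voltage>-<sequence>, for example "MOT-230-0042".
SCHEME_A = re.compile(r"^(?P<family>[A-Z]{3})-(?P<voltage>\d{3})-(?P<seq>\d{4})$")


def decode_smart_number(part_number: str) -> Optional[dict]:
    """Return the meaning encoded in the number, or None if the scheme does not apply."""
    match = SCHEME_A.match(part_number)
    return match.groupdict() if match else None


# The intended part number decodes as expected.
correct = decode_smart_number("MOT-230-0042")

# Two swapped digits: still a valid-looking number, so the typo goes unnoticed.
typo = decode_smart_number("MOT-320-0042")

# A part from an acquired company that uses a different (also hypothetical) scheme.
acquired = decode_smart_number("10-4455-A")

print(correct)
print(typo)
print(acquired)
```

Every system and every person that interprets the number has to carry this decoding knowledge, which is where the hidden costs come from.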

There were and still are many advanced smart numbering systems.

In one of my first PDM implementations in the Netherlands, I learned about the 12NC code system from Philips – introduced at Philips in 1963 and used to identify complete products, documentation, and bare components, up to the finest detail. At this moment, many companies in the Philips family (suppliers or offspring) still use this numbering system, illustrating that it is not only the small & medium enterprises that are reluctant to change their numbering system.

The costs of working with Smart Part Numbers are often unnoticed as they are considered a given.

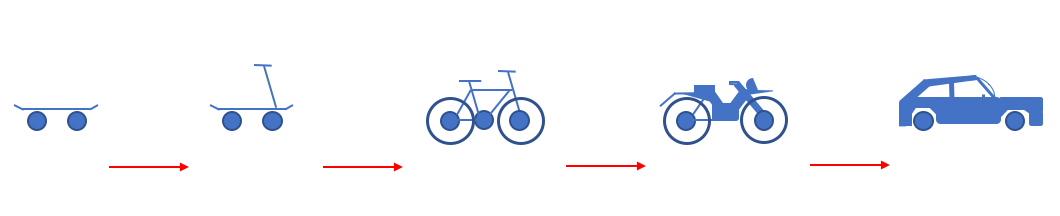

From Coordinated to Connected

Digital transformation in the PLM domain means moving from coordinated practices toward practices that benefit from connected technology. In many of my blog posts, you can read why organizations need to learn to work in a connected manner. It is both for their business sustainability and also for being able to deal with regulations related to sustainability in the short term.

GHG reporting, ESG reporting, material compliance, and the DPP (Digital Product Passport) are all examples of the outside world pushing companies to work connected. Besides the regulations, if you are in a competitive business, you must be more efficient, innovative and faster than your competitors.

In a connected environment, relations between artifacts (datasets) are maintained in an IT infrastructure without requiring manual data transformations and people to process the data. In a connected enterprise, this non-value-added work will be reduced.

How to move away from Smart Numbering systems?

Several comments related to the Smart Numbering discussion mentioned that changing the numbering system is too costly and risky to implement and that no business case exists to support it. This statement only makes sense if you want your business to become obsolete slowly. Modern best practices based on digitization should be introduced as fast as possible, allowing companies to learn and adapt. There is no need for a big bang.

Start with prioritizing and mapping value streams in your company. Where do we see the most significant business benefits related to cost of handling, speed, and quality?

Note: It is not necessary to start with engineering as they might be creators of data – start, for example, with the xBOM flow, where the xBOM can be a concept BOM, the engineering BOM, the Manufacturing BOM, and more. Building this connected data flow is an investment for every department; do not start from the systems.

- Next point: Do not rename or rework legacy data. These activities do not add value; they can only create problems. Instead, build new process definitions that do not depend on the smartness of the number.

Make sure these objects have, besides the part number, the right properties, the right status, and the right connections. In other words, create a connected digital thread – first internally in your company and next with your ecosystem (OEMs, suppliers, vendors)

- Next point: Give newly created artifacts a guaranteed unique ID independent of others. Each artifact has its status, properties and context. In this step, it is time to break any 1 : 1 relation between a physical part and a CAD-part or drawing. If a document gets revised, it gets a new version, but the version change should not always lead to a part number change. You can find many discussions on why to decouple parts and documents and the flexibility it provides.

- Next point: Newly generated IDs are not necessarily generated in a single system. The idea of a single source of truth is outdated. Build your infrastructure upon existing standards if possible. For example, the UID of the Digital Product Passport will be based on the ISO/IEC 15459 standard, similar to the UID for retail products managed by the GS1 standard. Or, probably closer to home, look into your computer’s registry, and you will discover a lot of software components with a unique ID that specific programs or applications can use in a shared manner.
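The points above can be sketched in a few lines of Python. This is an illustration under assumptions, not a reference implementation: the `Artifact` class, its fields, and the `link` helper are hypothetical names, and a random UUID stands in for whatever identifier standard (for example, ISO/IEC 15459 or GS1) your infrastructure would actually use. The legacy smart number is kept as an ordinary property and is not renamed; meaning lives in properties, status, and connections.

```python
import uuid
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Artifact:
    """Hypothetical connected object: a part, a document, or another dataset."""
    kind: str
    properties: dict
    status: str = "In Work"
    legacy_number: Optional[str] = None  # old smart number, kept as-is
    # Meaningless, guaranteed-unique ID; uuid4 is an assumption for this sketch.
    uid: str = field(default_factory=lambda: str(uuid.uuid4()))
    links: set = field(default_factory=set)


def link(a: Artifact, b: Artifact) -> None:
    """Record a two-way connection between two artifacts."""
    a.links.add(b.uid)
    b.links.add(a.uid)


part = Artifact(
    kind="part",
    properties={"description": "Motor", "voltage_v": 230},
    legacy_number="MOT-230-0042",
)
drawing_v1 = Artifact(kind="document", properties={"title": "Motor drawing", "version": 1})
link(part, drawing_v1)

part_uid_before = part.uid

# The document is revised: a new version is linked, the part ID does not change.
drawing_v2 = Artifact(kind="document", properties={"title": "Motor drawing", "version": 2})
link(part, drawing_v2)

print(part.uid == part_uid_before)
print(len(part.links))
```

Because the ID carries no meaning, it can be generated in any system, and a document revision never forces a part number change.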

When will it happen?

In January 2016, I wrote about “the impact of non-intelligent part numbers” and, surprisingly, almost 8 years later we are still in the same situation.

I just read Oleg Shilovitsky’s post The Data Dilemma: Why Engineers and Manufacturing Companies Struggle to Find Time for Data Management where he mentions Legacy Systems and Processes, Overwhelming Workloads, Lack of (Data) Expertise, Short-Term Focus and Resource Constraints as inhibitors.

You probably all know the above cartoon. How can companies get out of this armor of habits? Will they be forced by the competition or by regulations? What do you think?

Conclusion

Despite proven business benefits and insights, it remains challenging for companies to move toward modern, data-driven practices where Smart Number generators are no longer needed. When talking one-on-one to individuals, they are convinced a change is necessary, and they are pointing to the “others”.

I wish you all a prosperous 2024 and the power to involve the “others”.

Last week, I shared my first impressions from my favorite conference, in the post: The weekend after PLM Roadmap/PDT Europe 2023, where most impressions could be classified as traditional PLM and model-based.

There is nothing wrong with conventional PLM, as there is still much to do within this scope. A model-based approach for MBSE (Model-Based Systems Engineering) and MBD (Model-Based Definition) and efficient supplier collaboration are not topics you solve by implementing a new system.

Ultimately, to have a business-sustainable PLM infrastructure, you need to structure your company internally and connect to the outside world with a focus on standards to avoid a vendor lock-in or a dead end.

In short, this is what I described so far in The weekend after ….part 1.

Now, let’s look at the relatively new topics for this audience.

Enabling the Marketing, Engineering & Manufacturing Digital Thread

Cyril Bouillard, the PLM & CAD Tools Referent at the Mersen Electrical Protection (EP) business unit, shared his experience implementing an end-to-end digital backbone from marketing through engineering and manufacturing.

Cyril showed the benefits of a modern PLM infrastructure that is neither CAD-centric nor focused on engineering only. The advantage of this approach is a seamlessly integrated flow of PLM and PIM (Product Information Management).

I wrote about this topic in 2019: PLM and PIM – the complementary value in a digital enterprise. Combining the concepts of PLM and PIM in an integrated, connected environment could also provide a serious benefit when collaborating with external parties.

Another benefit Cyril demonstrated was the integration of RoHS compliance with the BOM in an integrated environment. In my session, I also addressed integrated RoHS compliance as a stepping stone to efficiency in future compliance needs.

Read more later or in this post: Material Compliance – as a stepping-stone towards Life Cycle Assessment (LCA)

Cyril concluded with some lessons learned.

Data quality is essential in such an environment, and there are significant time savings when implementing the connected Digital Thread.

Meeting the Challenges of Sustainability in Critical Transport Infrastructures

Etienne Pansart, head of digital engineering for construction at SYSTRA, explained how they address digital continuity with PLM throughout the built assets’ lifecycle.

Etienne’s story was related to the complexity of managing a railway infrastructure, which is a linear and vertical distribution at multiple scales; it needs to be predictable and under constant monitoring; it is a typical system of systems network, and on top of that, maintenance and operational conditions need to be continuously up to date.

Regarding railway assets – a railway needs renewal every two years, bridges are designed to last a hundred years, and train stations should support everyday use.

When complaining about disturbances, you might have a little more respect now (depending on your country). However, on top of these challenges, Etienne also talked about the additional difficulties expected due to climate change: floods, fire, earth movements, and droughts, all of which will influence the availability of the rail infrastructure.

In that context, Etienne talked about the MINERVE project – see image below:

As you can see from the main challenges, there is both an effort to digitalize the assets and a need to provide digital continuity over the entire asset lifecycle. This is not typically done in an environment with many different partners and suppliers, each delivering a part of the information.

Etienne explained in more detail how they aim to establish digital twins and MBSE practices to build and maintain a data-driven, model-based environment.

Having digital twins allows much more granular monitoring and making accurate design decisions, mainly related to sustainability, without the need to study the physical world.

His presentation was again a proof point that through digitalization and digital twins, the traditional worlds of Product Lifecycle Management and Asset Information Management become part of the same infrastructure.

And it may be clear that in such a collaboration environment, standards are crucial to connect the various stakeholders’ data sources – Etienne mentioned ISO 16739 (IFC), IFC Rail, and ISO 19650 (BIM) as obvious standards but also ISO 10303 (PLCS) to support the digital thread leveraged by OSLC.

I am curious to learn more about the progress of such a challenging project – having worked with the high-speed railway project in the Netherlands in 1995 – no standards at that time (BIM did not exist) – mainly a location reference structure with documents. Nothing digital.

The connected Digital Thread

The theme of the conference was The Digital Thread in a Heterogeneous, Extended Enterprise Reality, and in the next section, I will zoom in on some of the inspiring sessions for the future, where collaboration or information sharing is all based on a connected Digital Thread – a term I will explain in more depth in my next blog post.

Transforming the PLM Landscape: The Gateway to Business Transformation

Yousef Hooshmand’s presentation was the highlight of this conference for me.

Yousef is the PLM Architect and Lead for the Modernization of the PLM Landscape at NIO, and he has been active before in the IT-landscape transformation at Daimler, on which he published the paper: From a monolithic PLM landscape to a federated domain and data mesh.

If you read my blog or follow Share PLM, you might have seen the reference to Yousef’s work before, or you can hear the full story in the recent Share PLM Podcast: Episode 6: Revolutionizing PLM: Insights.

It was the first time I met Yousef in 3D after several virtual meetings, and his passion for the topic made it hard to fit in the assigned 30 minutes.

There is so much to share on this topic, and part of it we already did before the conference in a half-day workshop related to Federated PLM (more on this in the following review).

First, Yousef started with the five steps of the business transformation at NIO, where long-term executive commitment is a must.

His statement: “If you don’t report directly to the board, your project is not important”, caused some discomfort in the audience.

As the image shows, a business transformation should start with a systematic description and analysis of which business values and objectives should be targeted, where they fit in the business and IT landscape, what the measures are and how they can be tracked or assessed, and ultimately, what we need as tools and technology.

In his paper From a Monolithic PLM Landscape to a Federated Domain and Data Mesh, Yousef described the targeted federated landscape in the image below.

And now some vendors might say, we have all these domains in our product portfolio (or we have slides for that) – so buy our software, and you are good.

And here Yousef added his essential message, illustrated by the image below.

Start by delivering the best user-centric solutions (in an MVP manner – days/weeks – not months/years). Next, be data-centric in all your choices and ultimately build an environment ready for change. As Yousef mentioned: “Make sure you own the data – people and tools can leave!”

And to conclude reporting about his passionate plea for Federated PLM:

“Stop talking about the Single Source of Truth, start Thinking of the Nearest Source of Truth based on the Single Source of Change”.

Heliple-2 PLM Federation:

A Call for Action & Contributions

A great follow-up on Yousef’s session was Erik Herzog‘s presentation about the final findings of the Heliple 2 project, where SAAB Aeronautics, together with Volvo, Eurostep, KTH, IBM and Lynxwork, are investigating a new way of federated PLM, by using an OSLC-based, heterogeneous linked product lifecycle environment.

Heliple stands for HEterogeneous LInked Product Lifecycle Environment
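To make the idea of a linked, heterogeneous environment a bit more tangible, here is a minimal sketch in Python. It is not the Heliple implementation and does not use any real OSLC library; the tool names, URIs, the `satisfiedBy` link type and the helper functions are all made up for illustration. The only point it tries to show is that each tool keeps its own data, and that relations are stored as links to resources identified by a URI instead of copying the data into one system.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Resource:
    uri: str    # globally unique identifier of the resource
    title: str
    # link type -> list of target URIs (targets may live in another tool)
    links: dict = field(default_factory=dict)


# Each tool owns its own data. All URIs below are hypothetical examples.
requirements_tool = {
    "https://req.example.com/r/42": Resource(
        "https://req.example.com/r/42",
        "Max take-off weight",
        {"satisfiedBy": ["https://plm.example.com/part/7"]},
    ),
}
plm_tool = {
    "https://plm.example.com/part/7": Resource(
        "https://plm.example.com/part/7", "Wing assembly"
    ),
}

TOOLS = [requirements_tool, plm_tool]


def resolve(uri: str) -> Optional[Resource]:
    """Find the resource in whichever tool owns it."""
    for tool in TOOLS:
        if uri in tool:
            return tool[uri]
    return None


def follow(uri: str, link_type: str) -> list:
    """Return the titles of all resources linked from `uri` via `link_type`."""
    source = resolve(uri)
    if source is None:
        return []
    targets = (resolve(t) for t in source.links.get(link_type, []))
    return [t.title for t in targets if t is not None]


print(follow("https://req.example.com/r/42", "satisfiedBy"))
```

In a real federation, `resolve` would be a call to the owning tool's adapter and not a dictionary lookup; that adapter is the part the project set out to make affordable.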

The image below, which I shared several times before, illustrates the mindset of the project.

Last year, during the previous conference in Gothenburg, Erik introduced the concept of federated PLM – read more in my post: The week after PLM Roadmap / PDT Europe 2022. He mentioned two open issues to be investigated: Operational feasibility (is it maintainable over time) and Realisation effectivity (is it affordable and maintainable at a reasonable cost).

As you can see from the slide below, the results were positive and encouraged SAAB to continue on this path.

One of the points to mention was that during this project, Lynxwork was used to speed up the development of the OSLC adapter, reducing costs, time and needed skills.

After this successful effort, Erik and several others who joined us at the pre-conference workshop agreed that this initiative is valid to be tested, discussed and exposed outside Sweden.

Therefore, the Federated PLM Interest Group was launched to bring together people worldwide who want to contribute to this concept with their experiences and tools.

A first webinar from the group is already scheduled for December 12th at 4 PM CET – you can join and register here.

More to come

Given the length of this blog post, I want to stop here.

Topics to share in the next post are related to my contribution at the conference, The Need for a Governance Digital Thread, where I addressed the need for federated PLM capabilities with the upcoming regulations and practices related to sustainability, which require a connected Digital Thread.

I want to combine this post with the findings that Mattias Johansson, CEO of Eurostep, shared in his session: Why a Digital Thread makes a lot of sense, goes beyond manufacturing, and should be standards-based.

There are some interesting findings in these two presentations.

And there was the introduction of AI at the conference, with some experts’ talks and thoughts. Perhaps at this stage, it is too high on Gartner’s hype cycle to go into details. It will surely be THE topic of discussion, as you must have noticed.

The recent turmoil at OpenAI is an example of that. More to come for sure in the future.

Conclusion

The PLM Roadmap/PDT Europe conference was significant for me because I discovered that companies are working on concepts for a data-driven infrastructure for PLM and are (working on) implementing them. The end of monolithic PLM is visible, and companies need to learn to master data using ontologies, standards and connected digital threads.

Again, a “The weekend after …” post related to my favorite event to which I have contributed since 2014.

Expectations were high this time from my side, in particular because we would have a serious discussion related to connected digital threads and federated PLM.

More about these topics in my post next week, as not all content is available for sharing yet.

The conference was sold out this time, and during the breaks, you had to navigate through the people to find your network opportunities. Also, the participation of the main PLM players as sponsors illustrated that everyone wanted to benefit from this opportunity to meet and learn from their industry peers.

Looking back to the conference, there were two noticeable streams.

- The stream where people share their current PLM experiences. Traditionally, the A&D action groups moderated by CIMdata are part of this stream. This part I will cover in this post.

- There were forward-looking presentations related to standards, ontologies, and federated PLM—all with an AI flavor. This part I will cover in my next post(s).

The connection between all these sessions was the Digital Thread. The conference’s theme was: The Digital Thread in a Heterogeneous, Extended Enterprise Reality. Let’s start the review with the highlights from the first stream.

Digital Thread: Why Should We Care?

As usual, Peter Bilello from CIMdata kicked off the conference by setting the scene. Peter started by clarifying the two definitions of the Digital Thread.

- The first is a communication framework that allows a connected data flow and integrated view of an asset’s data (i.e., its Digital Twin) throughout its lifecycle across traditionally siloed functional perspectives.
In my terminology, the connected digital thread.

- The second is a network of connected information sources around the product lifecycle supporting traceability and decision-making.
In my terminology, the coordinated digital thread, which is the most straightforward digital thread to achieve.

Peter recommends starting a digital thread by connecting at the beginning of product conceptualization, creating an environment where one can analyze the performance of the product portfolio and the product features and capabilities that need to be planned or how they perform in the field.

In addition, when defining the products, connect them with regulatory requirement databases, as they contain must-have requirements. This is a topic I addressed in my session too: besides the existing regulatory requirements, it is expected that in the upcoming years these requirements will increase due to environmental regulations, and it will be necessary to have them integrated with your digital thread.

Digital Threads require data governance and are the basis for the various digital twins. Peter discussed the multiple applications of the digital twin, primarily a relation between a virtual asset and a physical asset, except in the early concept phase.

The digital thread is still in the early phase of implementation at companies. A CIMdata survey showed that companies still focus primarily on implementing traditional PDM capabilities, although as the image above shows, there is a growing interest in short-term digital twin/thread implementations.

People, Process & Technology:

The Pillars of Digital Transformation Success

The second keynote was from Christine McMonagle, Director of Digital Engineering Systems at Textron Systems, a services and products supplier for the Aerospace and Defense industry. Christine leads the digital evolution in Textron Systems and presented nicely how a digital transformation should start from the people. Traditionally, this industry has enough budget on the OEM level, and therefore companies will not take a revolutionary approach when it comes to digital transformation.

Having your people at all levels involved and making them understand the need for change is crucial. A change does not happen top-down. You must educate people and understand what is possible and achievable to change – in the right direction. One of her concluding slides highlights the main points.

In the Q&A after Christine’s session, there was an interesting question related to the involvement of Human Resources (HR) in this project. There was a laugh that said it all – as in most companies, HR is not focusing on organizational change; they focus more on operational issues – the Human is considered a Resource.

Between the regular sessions, there were short sponsor sessions in which Altium, Contact Software, Dassault Systemes, ESI, inensia, Modular Management, PTC, SAP, Share PLM and Sinequa could pitch their value offering.

The Share PLM session, shortly after Christine’s presentation, was a nice continuation of the focus on people. I loved the Share PLM image to the left explaining why people do not engage with our dreams.

Learn how LEONI is achieving Digital Continuity in the Automotive Industry.

Tobias Bauer, head of Product Data Standardization at LEONI, talked about their FLOW project. FLOW is an acronym for Future Leoni Operating World. LEONI, well-known in the automotive industry, produces cable and network solutions, including cable harnesses.

Recently, the company has gone through a serious financial crisis and the need for restructuring, which always makes a “visionary” PLM project challenging. Tobias mentioned that after disappointing engagements with consultancy firms, they decided on a bottom-up approach to analyze existing processes using BPML. They agreed on a to-be state, fixing bottlenecks and streamlining the flow of information.

Tobias presented a smooth product data flow between their PLM system (PTC Windchill) and ERP (SAP S/4 HANA), clearly stating that the PLM system has become the controlled source of managing product changes.

Their key achievements reported so far were:

- faster BOM creation and routing (approx. 10x faster – from 2-3 days to ¼ day),

- better data consistency (fewer manual steps)

- complete traceability between the systems with PLM as the change management backbone.
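As a quick sanity check on the “approx. 10x” figure in the first point, the range can be worked out from the numbers quoted above. The small sketch below assumes nothing more than those numbers (2-3 days before, ¼ day after); the function name is only illustrative.

```python
def speedup(before_days: float, after_days: float) -> float:
    """Return how many times faster the new process is."""
    return before_days / after_days


low = speedup(2, 0.25)   # lower bound of the reported range
high = speedup(3, 0.25)  # upper bound of the reported range
print(f"Speed-up between {low:.0f}x and {high:.0f}x")
```

So “approx. 10x” sits in the middle of an 8x to 12x range.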

The last point I would call the coordinated Digital Thread. The image below shows their current IT landscape in a simplified manner.

This solution might seem obvious for neutral PLM academics or experts, but it is an achievement to do this in an environment with SAP implemented. The eBOM-mBOM discussion is one of the most frequently held discussions – sometimes a battle.

Often, companies use their IT systems first and listen to the vendor’s experts to build integrations instead of starting from the natural business flow of information.

Aerospace & Defense Action groups outcomes

As usual, several Aerospace & Defense (A&D) action groups reported their progress during this conference. The A&D action groups are facilitated by CIMdata, and per topic, various OEMs and suppliers in the A&D industry study and analyze a particular topic, often inviting software vendors to demonstrate and discuss their capabilities with them.

Their activities and reports can be found on the A&D PLM Action page here. In the remainder of this post, I will briefly share the ones presented. For a real deep dive into the topics, I recommend finding the proceedings per topic on the A&D action page.

The Promise and Reality of the Digital Thread

James Roche from CIMdata presented insights from industry research on The Promise and Reality of the Digital Thread. A total of 90 persons completed an in-depth survey about the status and implementation of digital thread concepts in their company. It is clear that the digital thread is still in its early days in this industry, and it is mainly about the coordinated digital thread. The image below reflects the highlights of the survey.

A&D Industry Digital Twin and Digital Thread Standards

Robert Rencher from Boeing explained the progress of their Digital Twin/Digital Thread project, where they had investigated the applicable standards to support a Digital Twin/Digital Thread (Phase 4 out of 7 currently planned). The image below shows that various standards may apply depending on business perspectives.

Their current findings are:

- Digital twin standards overlap, which is most likely a function of standards bodies representing their respective standards as an ongoing development from a historical perspective.

- The limited availability of mature digital twin/thread standards requires greater attention by standards organizations.

- The concept of the digital twin continues to evolve. This dynamic will be a challenge to standards bodies.

- The digital twin and the digital thread are distinct aspects of digital transformation. The corresponding digital twin and digital thread standards will be distinctly different.

- Coordinating the development of the respective standards between the digital twin/thread is needed.

- The digital twin’s organization, definition, and enablement depend on data and information provided by the digital thread.

Roadmap for Enabling Global Collaboration

Robert Gutwein (Pratt & Whitney Canada) and Agnes Gourillon-Jandot (Safran Aircraft Engines) reported their progress on the Global Collaboration project. Collaboration is challenging because exchange methods can vary, exchanged information must be validated, and the exchange of information must be governed in the context of IP protection.

One of the focal points was to introduce an approach to define standardized supplier agreements that anticipate modern model-based exchanges and collaboration methods.

Robert & Agnes presented the 8-step guideline for the aerospace industry in specific terms, explicitly mentioning the ISO44001 standard as being generic for all industries. An impression of the eight steps and sub-steps can be found below:

The 8-step approach will be supported by a 3rd-party Collaboration Management System (CMS app), which is not mandatory but recommended for use. When an interaction depends on a specific tool, it cannot become an ISO standard. The purpose of the methodology and app is to help participants ensure that the collaboration between stakeholders contains all the necessary steps and people.

Model-based OEM/Supplier Collaboration Needs in Aviation Industry

Hartmut Hintze, working at Airbus Operations, presented the latest findings of the MBSE Data Interoperability working group and presented the model-based OEM/Supplier collaboration requirements and standards that need to be supported by the PLM/MBSE solution providers in the future. This collaboration goes beyond sharing CAD models, as you can see from the supplier engagement framework below:

As there are no standards-based tools, their first focus was looking into methodologies for model and behavior exchanges based on use cases. The use cases are then used to verify the state-of-the-art abilities of the various tools. At this moment, there is a focus on SysML V2 as a potential game-changer due to its new API support. As a relative novice on SysML, I cannot explain this topic in simpler words. I recommend that experts visit their presentations on the AD PAG publications page here.

Conclusions

The theme of the conference was related to the Digital Thread – and as you will discover, it is valid for everyone. Learn to see the difference between the coordinated Digital Thread and the connected Digital Thread. This time, I shared a lot of information about the Aerospace and Defense Action Groups (AD PAG), which are a fundamental part of this conference. The A&D industry has always been leading in advanced PLM concepts. However, more advanced concepts will come in my next post when touching the connected Digital Thread in the context of federated PLM, and let’s not forget AI.

Last week I had the opportunity to discuss the topic of Systems of Engagement in the context of the more extensive PLM landscape.

I spoke with Andre Wegner from Authentise and their product Threads, MJ Smith from CoLab and Oleg Shilovitsky from OpenBOM.

I invited all three of them to discuss their background, their target customers, the significance of real-time collaboration outside discipline siloes, how they connect to existing PLM systems (Systems of Record), and finally, whether a company culture plays a role.

Listen to this almost 45 min discussion here (save the m4a file first) or watch the discussion below on YouTube.

What I learned from this conversation

- Systems of Engagement are bringing value to small enterprises but also as complementary systems to traditional PLM environments in larger companies.

- Thanks to their SaaS approach, they are easy to install and use to fulfill a need that would take weeks/months to implement in a traditional PLM environment. They can be implemented at a department level or by connecting a value chain of people.

- Due to their real-time collaboration capabilities, these systems provide fast and significant benefits.

- Systems of Engagement represent the trend that companies want to move away from monolithic systems and focus on working with the correct data connected to the users. A topic I will explore in a future blog post.

I am curious to learn what you pick up from this conversation – are we missing other trends? Use the comments to this post.

Related to the company:

Visit Authentise.com

Related to the product:

Learn more about Collaborative Threads

Related to the reported benefits:

– Surgical robotics R&D team tracks 100% of their decisions and saves 150 hours in the first two weeks… doubling the effective size of their team:

Related to the company:

Visit Colabsoftware.com

Related to the product

Raise the bar for your design conversations

Related to the reported benefits

– How Mainspring used CoLab to achieve a 50% cost reduction redesign in half the time

– How Ford Pro Accelerated Time to Market by 30%

Related to the company:

Visit openbom.com

Related to the product:

Global Collaborative SaaS Platform For Industrial Companies

Related to reported benefits:

– OpenBOM makes the OKOS team 20% more efficient by helping to reduce inventory errors, costs, and streamlining supplier process

– VarTech Systems Optimizes Efficiency by Saving Two Hours of Engineering Time Daily with OpenBOM

Conclusion

I believe that Systems of Engagement are important for the digital transformation of a company.

They allow companies to learn what it means to work in a SaaS environment, potentially outside traditional company borders but with a focus on a specific value stream.

Thanks to their rapid deployment times, they help the company to grow its revenue even when the existing business is under threat due to newcomers.

The diagram below says it all. What are your favorite Systems of Engagement?

Hot from the press

Don’t miss the latest episode from the Share PLM podcast with Yousef Hooshmand – the episode is very much connected to this discussion.

In the past few weeks, together with Share PLM, we recorded and prepared a few podcasts to be published soon. As you might have noticed, for Season 2, our target is to discuss the human side of PLM and PLM best practices and less the technology side. Meaning:

- How to align and motivate people around a PLM initiative?

- What are the best practices when running a PLM initiative?

- What are the crucial skills you need to have as a PLM lead?

And as there are always many success stories to learn from on the internet, we also challenged our guests to share the moments where they gained experience.

As the famous quote says:

Experience is what you get when you don’t get what you expect!

We recently published our podcast with Antonio Casaschi from Assa Abloy, a Swedish company you might have never noticed, although their products and services are a part of your daily life.

It was a discussion close to my heart. We discussed the various aspects of PLM. What makes a person a PLM professional? And if you have no time to listen to these 35 minutes, read and scan the recording transcript on the transcription tab.

At 0:24:00, Antonio mentioned the concept of Proof of Concept as he had good experiences with them in the past. The remark triggered me to share some observations that a Proof of Concept (POC) is an old-fashioned way to drive change within organizations. Not discussed in this podcast but based on my experience, companies have been using Proofs of Concept to gain time, as they were afraid to make a decision.

A POC to gain time?

Company A

When working with a well-known company in 2014, I learned they were planning approximately ten POCs per year to explore new ways of working or new technologies. As each POC was based on an annual time scheme, the evaluation at the end of the year was often very discouraging.

Most of the time, the conclusion was: “Interesting, we should explore this further” /“What are the next POCs for the upcoming year?”

There was no commitment to follow-up; it was more of a learning exercise not connected to any follow-up.

Company B

During one of the PDT events, a company presented their two-year POC with the three leading PLM vendors, exploring supplier collaboration. I understood the PLM vendors had invested much time and resources to support this POC, expecting a big deal. However, the team mentioned it was an interesting exercise, and they learned a lot about supplier collaboration.

And nothing happened afterward ………

In 2019

At the 2019 Product Innovation Conference in London, when discussing Digital Transformation within the PLM domain, I shared in my conclusion that the POC was mainly a waste of time as it does not push you to transform; it is an option to win time but is uncommitted.

My main reason for not pushing a POC is that it is more of a limited feasibility study.

- First, a POC is often used to push people and processes into the technical capabilities of the systems used. A focus starting from technology is the opposite of what I have been pushing for longer: First, focus on the value stream – people and processes – and then study which tools and technologies support these demands.

- Second, the POC approach often blocks innovation as the incumbent system providers will claim the desired capabilities will come (soon) within their systems—a safe bet.

The Minimum Viable Product approach (MVP)

With the awareness that we need to work differently and benefit from digital capabilities also came the term Minimum Viable Product or MVP.

The abbreviation MVP is not to be confused with Minimum Valuable Product or Most Valuable Player.

There are two significant differences with the POC approach:

- You admit the solution does not exist anywhere – so it cannot be purchased or copied.

- You commit to the fact that this new approach will be the right direction to take and agree that a perfect fit solution is not blocking you from starting for real.

These two differences highlight the main challenges of digital transformation in the PLM domain. Digital Transformation is a learning process – it takes time for organizations to acquire and master the needed skills. And secondly, it cannot be a big bang, and I have often referred to the 2017 article from McKinsey: Toward an integrated technology operating model. Image below.

We will soon hear more about digital transformation within the PLM domain during the next episode of our SharePLM podcast. We spoke with Yousef Hooshmand, currently working for NIO, a Chinese multinational automobile manufacturer specializing in designing and developing electric vehicles, as their PLM data lead.

You might have discovered Yousef earlier when he published his paper: “From a Monolithic PLM Landscape to a Federated Domain and Data Mesh”. It is highly recommended to read the paper if you are interested in a potential PLM future infrastructure. I wrote about this whitepaper in 2022: A new PLM paradigm discussing the upcoming Systems of Engagement on top of a Systems of Record infrastructure.

To align our terminology with Yousef’s wording, his domains align with the Systems of Engagement definition.

As we discovered and discussed with Yousef, technology is not the blocking issue to start. You must understand the target infrastructure well and where each domain’s activities fit. Yousef mentions that there is enough literature about this topic, and I can refer to the SAAB conference paper: Genesis – an Architectural Pattern for Federated PLM.

For a less academic impression, read my blog post, The week after PLM Roadmap / PDT Europe 2022, where I share the highlights of Erik Herzog’s presentation: Heterogeneous and Federated PLM – is it feasible?

There is much to learn and discover which standards will be relevant, as both Yousef and Erik mention the importance of standards.

The podcast with Yousef (soon to be found HERE) was not so much about organizational change management and people.

However, Yousef mentioned the most crucial success factor for the transformation project he supported at Daimler. It was C-level support, trust and understanding of the approach, knowing it will take many years, an unavoidable journey if you want to remain competitive.

And with the journey aspect comes the importance of the Minimal Viable Product. You are starting a journey with an end goal in mind (top-of-the-mountain), and step by step (from base camp to base camp), people will be better covered in their day-to-day activities thanks to digitization.

A POC would not help you make the journey; perhaps a small POC would help you understand what it takes to cross a barrier.

Conclusion

The concept of POCs is outdated in a fast-changing environment where technology is not necessarily the blocking issue. Developing practices, new architectures and using the best-fit standards is the future. Embrace the Minimal Viable Product approach. Are you?

Two weeks ago, I shared my post: Modern PLM is (too) complex on LinkedIn, and apparently, it was a topic that touched many readers. Almost a hundred likes, fifty comments and six shares. Not the usual thing you would expect from a PLM blog post.

In addition, the article led to offline discussions with peers, giving me an even better understanding of what people think. Here is a summary of the various talks.

What is PLM?

In particular, since the inception of Product Lifecycle Management, software vendors have battled with the various PLM definitions.

Initially, PLM was considered an engineering tool for product development, with an extensive potential set of capabilities supported by PowerPoint. Most companies actually implemented a collaborative PDM system at that time and named it PLM.

Was PLM really understood? Look at the infamous Autodesk CEO Carl Bass’s anti-PLM rap from 2007. Next, in 2012, Autodesk introduced its PLM solution called Autodesk PLM 360 as one of the first cloud solutions.

Only with growing connectivity and enterprise information sharing did the definition of PLM start to change.

PLM became a product information backbone serving downstream deployment with product data – the traditional Teamcenter, Windchill and ENOVIA implementations are typical examples of this phase.

With a digitization effort taking place in the non-PLM domain, connecting product development, design and delivery data to a company’s digital business became necessary. You could say, and this is the CIMdata definition:

PLM is a strategic business approach that applies a consistent set of business solutions that support the collaborative creation, management, dissemination, and use of product definition information. PLM supports the extended enterprise (customers, design and supply partners, etc.)

I agree with this definition; perhaps 80 % of our PLM community does. But how many times have we been trapped again in the same thinking: PLM is a system.

The most recent example is the post from Oleg Shilovitsky last week where he claims: Discover why OpenBOM reigns supreme in the world of PLM!

Nothing wrong with that, as software vendors will always tweak definitions as they need marketing to make a profit, but PLM is not a system.

My main point is that PLM is a “vague” community label with many interpretations. Software vendors have the most significant marketing budget to push their unique definitions. However, various practitioners in the field also have their own interpretations.

And maybe Martin Haket’s comment to the post says it all (partial quote):

I’m a bit late to this discussion, but in my opinion, the complexity is mainly due to the fact that the ownership of the processes and data models underlying PLM are not properly organized. ‘Everybody’ in the company is allowed to mix in the discussion and have their opinion; legacy drives departments to undesirable requirements leading to complex implementations.

My intermediate conclusion: Our legacy and lack of a single definition of PLM make it complex.

The PLM professional

On LinkedIn, there are approximately 14.000 PLM consultants in my first and second levels of connections. This number indicates that the label “PLM Consultant” has a specific recognition.

During my “PLM is complex” discussion, I noticed Roger Tempest’s Professional PLM White paper and started the dialogue with him.

Roger Tempest is one of the co-founders of the PLM Interest Group. He has been trying to create a baseline for a foundational PLM certification with several others. We discussed the challenges of getting the PLM Professional recognized as an essential business role. Can we certify the PLM professional the same way as a certified Configuration Manager or certified Project Manager?

I shared my thoughts with Roger, claiming that our discipline is too vague and diverse and that finding a common baseline is hard.

Therefore, we are curious about your opinion too. Please tell us in the comments to this post what you think about recognizing the PLM professional and what skills should be the minimum. What are the basics of a PLM professional?

In addition, I participated in some of the SharePLM podcast recordings with PLM experts from the field (follow us here). I raised the PLM professional question either during the podcast or during the preparation of the after-party. Here, too, there was no single answer.

So much is part of PLM: people (culture, skills), processes & data, tools & infrastructures (architectures, standards) combined with execution (waterfall/agile?)

My intermediate conclusion: The broadness of PLM makes it complex to have a common foundation.

More about complexity

PEOPLE: Let’s zoom in on the aspects of complexity, starting from the People, Processes, Data and Tools discussion. The first thing mentioned is “the people”; organizations usually claim: “the most important assets in our organization are the people”.

However, people are usually the last dimension considered in business changes. Companies start with the tools, try to build the optimal processes and finally push the people into that framework by training, incentives or just force.

The reason for the last approach is that dealing with people is complex. People have their beliefs, their legacy and their motivation. And if people do not feel connected to the business (change), they will become an obstacle to change – look at the example below from my 2014 PI Apparel presentation:

To support the importance of people, I am excited to work with Share PLM and the Season 2 podcast series.

In these episodes, we talk with successful PLM experts about their lessons learned during PLM implementation. You will discover it is a learning process, and connecting to people in different cultures is essential. As it is a learning process, you will find it takes time and human skills to master this complexity.

Often human skills are called “soft skills”, but actually, they are “vital skills”!

PROCESSES: Regarding the processes part, this is another challenging topic. Often we try to simplify processes to make them workable (sounds like a good idea). With many seasoned PLM practitioners coming from the mechanical product development world, it is not a surprise that many proposed PLM processes are BOM-centric – building on PDM and ERP capabilities.

In my post: The rise and fall of the BOM? I started with this quote from Jan Bosch:

An excessive focus on the bill of materials leads to significant challenges for companies that are undergoing a digital transformation and adopting continuous value delivery. The lack of headroom, high coupling and versioning hell may easily cause an explosion of R&D expenditure over time.

Today’s organizational and product complexity does not allow us to keep processes simple if we want to remain competitive. In that context, have a look at Erik Herzog’s comment on PLM complexity:

I believe a contributing factor to making PLM complex lies in our tendency to make too many simplifications. Do we understand a simple thing such as configuration change management in incremental development? At least in my organization, there is room for improvement.

In the comment, Erik also provided a link to his conference paper, Introducing the 4-Box Development Model, which describes the potential interaction between Systems Engineering and Configuration Management. This topic may be too complex for your current company; however, it illustrates that you cannot generalize and simplify PLM overall.

In addition to Erik’s comments, I want to mention again that we can change our business processes thanks to a modern, connected, data-driven infrastructure. From coordinated to connected working with a mix of Systems of Engagement (new) and Systems of Record (traditional). There are no solid best practices yet, but the real PLM geeks are becoming visible.

TOOLS & DATA: When discussing the future: From Coordinated to Connected, there has always been a discussion about the legacy.

Should we migrate the legacy data and systems and replace them with new tools and data models? Or are there other options? The interaction of tools and data is often the domain of Enterprise Solution Architects. The Solution Architect’s role becomes increasingly important in a modern, data-driven company, and several are pretty active in PLM, if you know how to find them, because they are not in the mainstream of PLM.

This week we made a SharePLM podcast recording with Yousef Hooshmand. Last year, I wrote about his paper “From a Monolithic PLM Landscape to a Federated Domain and Data Mesh”, in which Yousef describes the complex process, from his time working at Daimler, of slowly replacing old legacy infrastructure with a new, modern, user/role-centric, data-driven infrastructure.

Watch out for this recording to be published soon as Yousef shares various provoking experiences. Not to provoke our community but to create the awareness that a transformation is possible when you have the right long-term vision, strategy and C-level support.

Fighting complexity

Note: We have CM people involved in many of the PLM discussions. I think they are fighting complexity similar to that of others in the PLM domain. However, they have the benefit that their role, Configuration Manager, is recognized and supported by a commercial certification organization (the Institute for Process Excellence – IpX).

While completing this post, I read this article from Oleg Shilovitsky: PLM User Groups and Communities. At first glance, you might think that PLM User Groups and Communities might be the solution to address the complexity.

And I think they do; most PLM vendors have orchestrated User Groups and Communities. Depending on your tool vendor, you will find like-minded people supported by vendor experts. Are they reducing the complexity? Probably not, as they are at the end of the People, Processes, Data and Tools discussion. You are already working within a specific boundary.
