In recent months, I’ve noticed a decline in momentum around sustainability discussions, both in my professional network and personal life. With current global crises—like the Middle East conflict and the erosion of democratic institutions—dominating our attention, long-term topics like sustainability seem to have taken a back seat.

But don’t stop reading yet—there is good news, though we’ll start with the bad.

The Convenient Truth

Human behavior is primarily emotional, a lesson that is valuable in the PLM domain and was discussed during the Share PLM summit. As Share PLM notes in their change management approach, we rely on our “gator brain” (our limbic system); call it System 1 versus System 2, or Thinking Fast and Slow. Faced with uncomfortable truths, we often seek out comforting alternatives.

The film Don’t Look Up humorously captures this tendency. It mirrors real-life responses to climate change: “CO₂ levels were high before, so it’s nothing new.” Yet the data tells a different story. For 800,000 years, CO₂ ranged between 170–300 ppm. Today’s level is ~420 ppm—an unprecedented spike in just 150 years as illustrated below.
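As a quick sanity check on the figures quoted above, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the constants are the approximate numbers from the text, the function names are made up for this example, and taking the historical maximum as the starting point of a linear rise is a simplifying assumption.

```python
# Approximate figures quoted in the text above.
HISTORICAL_MAX_PPM = 300   # upper end of the 800,000-year range
CURRENT_PPM = 420          # today's approximate level
RISE_PERIOD_YEARS = 150    # period over which the spike occurred


def percent_above(baseline: float, current: float) -> float:
    """Return how far `current` lies above `baseline`, in percent."""
    return 100 * (current - baseline) / baseline


def average_rise_per_year(baseline: float, current: float, years: float) -> float:
    """Return the average yearly increase in ppm, assuming a linear rise."""
    return (current - baseline) / years


if __name__ == "__main__":
    excess = percent_above(HISTORICAL_MAX_PPM, CURRENT_PPM)
    rate = average_rise_per_year(HISTORICAL_MAX_PPM, CURRENT_PPM, RISE_PERIOD_YEARS)
    print(f"Above the historical maximum: {excess:.0f}%")
    print(f"Average rise: {rate:.1f} ppm per year")
```

With these inputs, today's level sits roughly 40% above the highest value in the long-term range.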

Frustratingly, some of this scientific data is no longer prominently published. The narrative has become inconvenient, particularly for the fossil fuel industry.

Persistent Myths

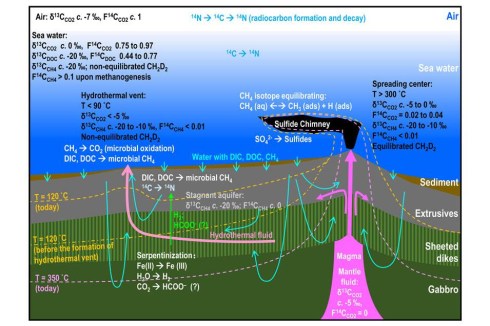

Then there is the pseudo-scientific claim that fossil fuels are infinite because the Earth’s core continually generates them. The Abiogenic Petroleum Origin theory is a fringe theory, sometimes revived from old Soviet science, and lacks credible evidence. See the image below.

Oil remains a finite, biologically sourced resource. Yet such myths persist, often supported by overly complex jargon designed to impress rather than inform.

The Dissonance of Daily Life

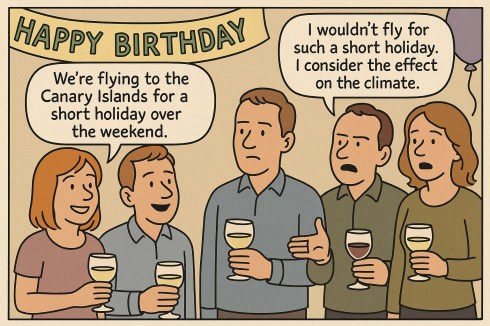

A young couple casually mentioned flying to the Canary Islands for a weekend at a recent birthday party. When someone objected on climate grounds, they simply replied, “But the climate is so nice there!”

“Great climate on the Canary Islands”

This reflects a common divide among young people—some are deeply concerned about the climate, while many prioritize enjoying life now. And that’s understandable. The sustainability transition is hard because it challenges our comfort, habits, and current economic models.

The Cost of Transition

Companies now face regulatory pressure such as CSRD (Corporate Sustainability Reporting Directive), DPP (Digital Product Passport), ESG, and more, especially when selling in or to the European market. These shifts aren’t usually driven by passion but by obligation. Transitioning to sustainable business models comes at a cost—learning curves and overheads that don’t align with most corporations’ short-term, profit-driven strategies.

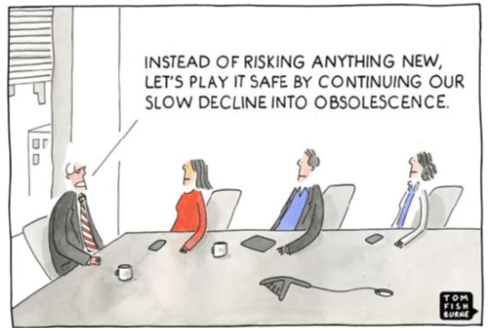

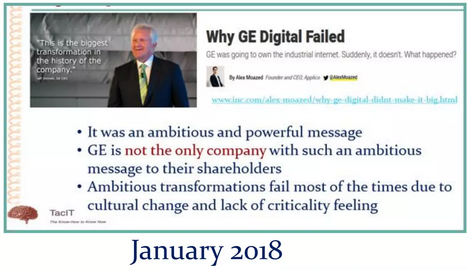

However, we have also seen how long-term visions can be crushed by shareholder demands:

- Xerox (1970s–1980s) pioneered GUI, the mouse, and Ethernet, but failed to commercialize them. Apple and Microsoft reaped the benefits instead.

- General Electric under Jeff Immelt tried to pivot to renewables and tech-driven industries. Shareholders, frustrated by slow returns, dismantled many initiatives.

- Despite ambitious sustainability goals, Siemens faced similar investor pressure, leading to spin-offs like Siemens Energy and Gamesa.

The lesson?

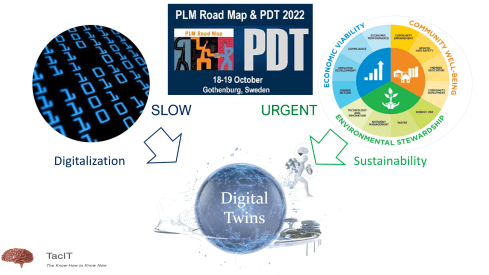

Transforming a business sustainably requires vision, compelling leadership, and patience, qualities often at odds with quarterly profit expectations. I explored these tensions again in my presentation at the PLM Roadmap/PDT Europe 2024 conference; read more here: Model-Based: The Digital Twin.

In smaller, closed-company sessions, I noticed discomfort. Some attendees said, “We’re far from that vision.”

My response: “That’s okay. Sustainability is a generational journey, but it must start now”.

Signs of Hope

Now for the good news. In our recent PGGA (PLM Green Global Alliance) meeting, we asked: “Are we tired?” Surprisingly, the mood was optimistic.

Yes, some companies are downscaling their green initiatives or engaging in superficial greenwashing. But other developments give hope:

- China is now the global leader in clean energy investments, responsible for ~37% of the world’s total. In 2023 alone, it installed over 216 GW of solar PV—more than the rest of the world combined—and leads in wind power too. With over 1,400 GW of renewable capacity, China demonstrates that a centralized strategy can overcome investor hesitation.

- Long-term-focused companies like Iberdrola (Spain), Ørsted (Denmark), Tesla (US), BYD, and CATL (China) continue to invest heavily in EVs and batteries—critical to our shared future.

A Call to Engineers: Design for Sustainability

We may be small at the PLM Green Global Alliance, but we’re committed to educating and supporting the Product Lifecycle Management (PLM) community on sustainability.

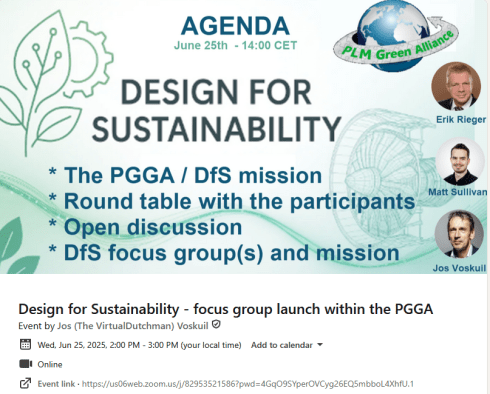

That’s why I’m excited to announce the launch of our Design for Sustainability initiative on June 25th.

Led by Eric Rieger and Matthew Sullivan, this initiative will bring together engineers to collaborate and explore sustainable design practices. Whether or not you can attend live, we encourage everyone to engage with the recording afterward.

Conclusion

Sustainability might not dominate headlines today. In fact, there’s a rising tide of misinformation, offering people a “convenient truth” that avoids hard choices. But our work remains urgent. Building a livable planet for future generations requires long-term vision and commitment, even when it is difficult or unpopular.

So, are you tired—or ready to shape the future?

In the last two weeks, I have had mixed discussions related to PLM, in which I realized there are two different ways people can look at PLM. Is implementing PLM capabilities driven by a cost-benefit analysis and a business case? Or is implementing PLM capabilities driven by a strategy that provides business value for a company?

Most companies I am working with focus on the first option – there needs to be a business case.

This observation is a pleasant segue into a broader discussion started recently by Rob Ferrone with his article Money for nothing and PLM for free. He explains the PDM cost of doing business, which goes beyond the software’s cost. Often, companies consider the other expenses inescapable.

At the same time, Benedict Smith wrote some visionary posts about the potential power of an AI-driven PLM strategy, the most recent article being PLM augmentation – Panning for Gold.

It is a visionary article about what is possible in the PLM space (if there were no legacy ☹), based on Robust Reasoning and how you could even start with LLM Augmentation for PLM “Micro-Tasks.”

Interestingly, the articles from both Rob and Benedict were supported by AI-generated images – I believe this is the future: Creating an AI image of the message you have in mind.

When you have digested their articles, it is time to dive deeper into the different perspectives of value and costs for PLM.

From a system to a strategy

The biggest obstacle I have discovered is that people relate PLM to a system or, even worse, to an engineering tool. This 20-year-old misunderstanding probably comes from the fact that in the past, implementing PLM was more an IT activity – providing the best support for engineers and their data – than a business-driven set of capabilities needed to support the product lifecycle.

The System approach

Traditional organizations are siloed, and initially, PLM always had the challenge of supporting product information shared throughout the whole lifecycle, where there was no conventional focus per discipline to invest in sharing – every discipline has its P&L – and sharing comes with a cost.

At the management level, the financial data coming from the ERP system drives the business. ERP systems are transactional and can provide real-time data about the company’s performance. C-level management wants to be sure they can see what is happening, so there is a massive focus on implementing the best ERP system.

In some cases, I noticed that the investment in ERP was twenty times more than the PLM investment.

Why would you invest in PLM? Although the ERP engine will slow down without proper PLM, the complexity of PLM compared to ERP is a reason for management to look at the costs, as the PLM benefits are hard to grasp and depend on so much more than just execution.

See also my old 2015 article: How do you measure collaboration?

As I mentioned, the Cost of Non-Quality (too many iterations, time lost searching, material scrap, manufacturing delays, or customer complaints) is often considered an inescapable part of doing business, as at every other company: it happens all the time.

The strategy approach

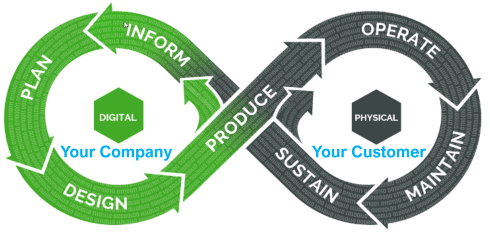

It is clear that when we accept the modern definition of PLM, we should be considering product lifecycle management as the management of the product lifecycle (as Patrick Hillberg says eloquently in our Share PLM podcast – see the image at the bottom of this post, too).

When you implement a strategy, it is evident that there should be a long(er) term vision behind it, which can be challenging for companies. Also, please read my previous article: The importance of a (PLM) vision.

I cannot believe that, although perhaps not fully understood, the importance of a data-driven approach will be discussed at many strategic board meetings. A data-driven approach is needed to implement a digital thread as the foundation for enhanced business models based on digital twins and to ensure data quality and governance supporting AI initiatives.

It is a process I have been preaching: From Coordinated to Coordinated and Connected.

We can be sure that at the board level, strategy discussions should be about value creation, not about reducing costs or avoiding risks as the future strategy.

Understanding the (PLM) value

The biggest challenge for companies is to understand how to modernize their PLM infrastructure to bring value.

* Step 1 is obvious. Stop considering PLM as a system with capabilities, but investigate how you transform your infrastructure from a collection of systems and (document) interfaces towards a federated infrastructure of connected tools.

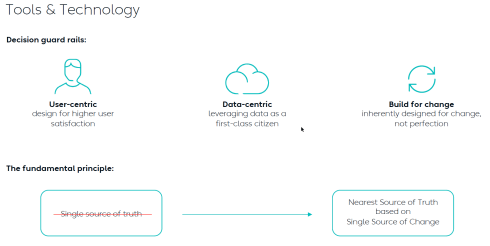

Note: the paradigm shift from a Single Source of Truth (in my system) towards a Nearest Source of Truth and a Single Source of Change.

* Step 2 is education. A data-driven approach creates new opportunities and impacts how companies should run their business. Different skills are needed, and other organizational structures are required, from disciplines working in siloes to hybrid organizations where people can work in domain-driven environments (the Systems of Record) and product-centric teams (the System of Engagement). AI tools and capabilities will likely create an effortless flow of information within the enterprise.

* Step 3 is building a compelling story to implement the vision. Implementing new ways of working based on new technical capabilities also requires organizational change. If your organization keeps working the same way, you might gain only a few percent in efficiency improvements.

The real benefits come from doing things differently, and technology allows you to do it differently. However, this requires people to work differently, too, and this is the most common mistake in transformational projects.

Companies understand the WHY and WHAT but leave the HOW to the middle management.

People are squeezed into an ideal performance without taking them on the journey. For that reason, it is essential to build a compelling story that motivates individuals to join the transformation. Assisting companies in building compelling story lines is one of the areas where I specialize.

Feel free to contact me to explore the opportunity for your business.

It is not the technology!

With the upcoming availability of AI tools, implementing a PLM strategy will no longer depend on how IT understands the technology, the systems and the interfaces needed.

As Yousef Hooshmand’s image above describes, a federated infrastructure of connected (SaaS) solutions will enable companies to focus on accurate data (priority #1) and people creating and using accurate data (priority #1). As you can see, people and data in modern PLM are the highest priority.

Therefore, I look forward to participating in the upcoming Share PLM Summit on 27-28 May in Jerez.

It will be a breakthrough: where traditional PLM conferences focus on technology and best practices, this conference will focus on how we can involve and motivate people. Regardless of which industry you are active in, it is a universal topic for any company that wants to transform.

Conclusion

Returning to this article’s introduction, modern PLM is an opportunity to transform the business and make it future-proof. It needs to be done for sure now or in the near future. Therefore PLM initiatives should be considered from the value point first instead of focusing on the costs. How well are you connected to your management’s vision to make PLM a value discussion?

Enjoy the podcast – several of the topics discussed relate to this post.

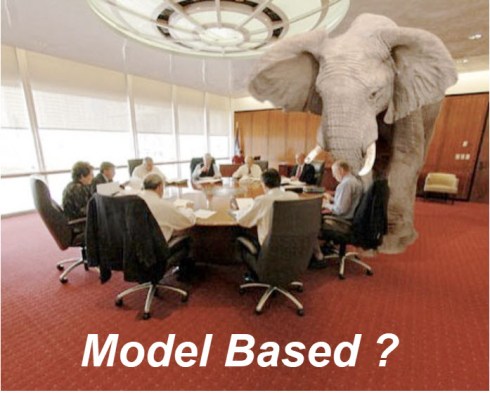

Four years ago, I wrote a series of posts with the common theme: The road to model-based and connected PLM. I discussed the various aspects of model-based and the transition from considering PLM as a system towards considering PLM as a strategy to implement a connected infrastructure.

Since then, a lot has happened. The terminology of Digital Twin and Digital Thread has become better understood. The difference between Coordinated and Connected ways of working has become more apparent. Spoiler: You need both ways. And at this moment, Artificial Intelligence (AI) has become a new hype.

Many current discussions in the PLM domain are about structures and data connectivity, Bills of Materials (BOM), or Bills of Information (BOI), combined with the new term Digital Thread as a Service (DTaaS) introduced by Oleg Shilovitsky and Rob Ferrone. Here, we envision a digitally connected enterprise based on connected services.

A lot can be explored in this direction. Also relevant is Lionel Grealou’s article on Engineering.com, RIP SaaS, long live AI-as-a-service, and the follow-up discussions related to this topic. I chimed in with Data, Processes and AI.

However, we also need to focus on the term model-based or model-driven. When we talk about models currently, Large Language Models (LLM) are the hype, and when you are working in the design space, 3D CAD models might be your first association.

There is still confusion in the PLM domain: what do we mean by model-based, and where are we progressing with working model-based?

A topic I want to explore in this post.

It is not only Model-Based Definition (MBD)

Before I started The Road to Model-Based series, there was already the misunderstanding that model-based means 3D CAD model-based. See my post from that time: Model-Based – the confusion.

Model-Based Definition (MBD) is an excellent first step in understanding information continuity, in this case primarily between engineering and manufacturing, where the annotated model is used as the source for manufacturing.

In this way, there is no need for separate 2D drawings with manufacturing details, reducing the extra need to keep the engineering and manufacturing information in sync and, in addition, reducing the chance of misinterpretations.

MBD is a common practice in aerospace and particularly in the automotive industry. Other industries are struggling to introduce MBD, either because the OEM is not ready or willing to share information in a format other than 3D + 2D drawings, or because their suppliers consider MBD too complex compared to their current document-driven approach.

In its current practice, we must remember that MBD is part of a coordinated approach.

Companies exchange technical data packages based on potential MBD standards (ASME Y14.47 / ISO 16792, but also JT and 3D PDF). It is not yet part of the connected enterprise, but it connects engineering and manufacturing using the 3D model as the core information carrier.

As I wrote, learning to work with MBD is a stepping stone in understanding a modern model-based and data-driven enterprise. See my 2022 post: Why Model-based Definition is important for us all.

To conclude on MBD, Model-based definition is a crucial practice to improve collaboration between engineering, manufacturing, and suppliers, and it might be parallel to collaborative BOM structures.

And it is transformational as the following benefits are reported through ChatGPT:

- Up to 30% faster in product development cycles due to reduced need for 2D drawings and fewer design iterations. Boeing reported a 50% reduction in engineering change requests by using MBD.

- Companies using MBD see a 20–50% reduction in manufacturing errors caused by misinterpretations of 2D drawings. Caterpillar reported a 30% improvement in first-pass yield due to better communication between design and manufacturing teams.

- MBD can reduce product launch time by 20–50% by eliminating bottlenecks related to traditional drawings and manual data entry.

- 20–30% reduction in documentation costs by eliminating or reducing 2D drawings. Up to 60% savings on rework and scrap costs by reducing errors and inconsistencies.

Over five years, Lockheed Martin achieved a $300 million cost savings by implementing MBD across parts of its supply chain.

MBSE is not a silo.

For many people, Model-Based Systems Engineering (MBSE) seems irrelevant to their business, or it is seen as a discipline for a small group of specialists who practice systems engineering not in the traditional document-driven V-shape approach but in an iterative process that follows the V-shape while using models to predict and verify assumptions.

And what is the value connected in a PLM environment?

A quick heads up – what is a model

A model is a simplified representation of a system, process, or concept used to understand, predict, or optimize real-world phenomena. Models can be mathematical, computational, or conceptual.

We need models to:

- Simplify Complexity – Break down intricate systems into manageable components and focus on the main components.

- Make Predictions – Forecast outcomes in science, engineering, and economics by simulating behavior – Large Language Models, Machine Learning.

- Optimize Decisions – Improve efficiency in various fields like AI, finance, and logistics by running simulations and finding the best virtual solution to apply.

- Test Hypotheses – Evaluate scenarios without real-world risks or costs, for example, a virtual crash test.

It is important to realize that models are only as accurate as the data elements they run on – every modeling practice has a certain need for base data, be it measurements, formulas, or statistics.

I watched and listened to the interesting podcast below, where Jonathan Scott and Pat Coulehan discuss this topic: Bridging MBSE and PLM: Overcoming Challenges in Digital Engineering. If you have time – watch it to grasp the challenges.

The challenge in an MBSE environment is that it is not a single tool with a single version of the truth; it is merely a federated environment of shared datasets that are interpreted by modeling applications to understand and define the behavior of a product.

In addition, an interesting article from Nicolas Figay might help you understand the value for a broader audience. Read his article: MBSE: Beyond Diagrams – Unlocking Model Intelligence for Computer-Aided Engineering.

Ultimately, and this is the agreement I found at many PLM conferences, MBSE practices are the foundation for downstream processes and operations.

We need a data-driven modeling environment to implement Digital Twins, which can span multiple systems and diagrams.

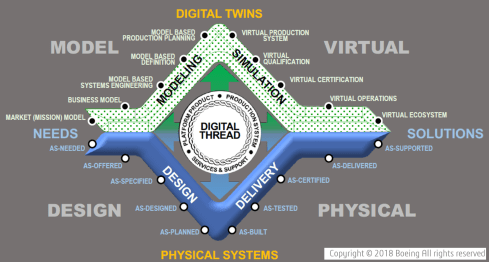

In this context, I like the Boeing diamond presented by Don Farr at the 2018 PLM Roadmap EMEA conference. It is a model view of a system, where between the virtual and the physical flow, we will have data flowing through a digital thread.

Where this image describes a model-based, data-driven infrastructure to deliver a solution, we can, in addition, apply the DevOps approach to the bigger picture for solutions in operation, as depicted by the PTC image below.

Model-based: the foundation of digital twins

To conclude on MBSE, I hope that it is clear why I am promoting considering MBSE not only as the environment to conceptualize a solution but also as the foundation for a digital enterprise where information is connected through digital threads and AI models (**new**)

The data borders between traditional system domains will disappear. The paradigm of the single source of change and the nearest source of truth, and this post, The Big Blocks of Future Lifecycle Management, from Prof. Dr. Jörg Fischer, are all about data domains.

However, having accessible data, using all kinds of modern data sources and tools, is necessary to build digital twins – either to simulate and predict a physical solution or to analyze a physical solution and, based on the analysis, either adjust the solution or improve your virtual simulations.

Digital Twins at any stage of the product life cycle are crucial to developing and maintaining sustainable solutions, as I discussed in previous lectures. See the image below:

Conclusion

Data quality and architecture are the future of a modern digital enterprise – the building blocks. And there is a lot of discussion related to Artificial Intelligence. This will only work when we master the methodology and practices related to a data-driven and sustainable approach using models. MBD is not new, and MBSE is perhaps still new; both are building blocks for a model-based approach. Where are you in your lifecycle?

It was a great pleasure to attend my favorite vendor-neutral PLM conference this year in Gothenburg—approximately 150 attendees, where most have expertise in the PLM domain.

We had the opportunity to learn new trends, discuss reality, and meet our peers.

The theme of the conference was: Value Drivers for Digitalization of the Product Lifecycle, a topic I have been discussing in my recent blog posts, as we need to help and educate companies to understand the importance of digitalization for their business.

The two-day conference covered various lectures – view the agenda here – and of course the topic of AI was part of half of the lectures, giving the attendees a touch of reality.

In this first post, I will cover the main highlights of Day 1.

Value Drivers for Digitalization of the Product Lifecycle

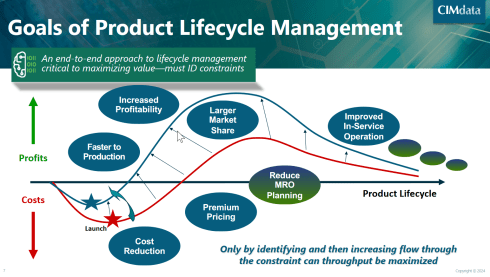

As usual, the conference started with Peter Bilello, president & CEO of CIMdata, stressing again that when implementing a PLM strategy, the maximum result comes from a holistic approach, meaning look at the big picture, don’t just focus on one topic.

It was interesting to see again the classic graph (below) explaining the benefits of the end-to-end approach – I believe it is still valid for most companies; however, as I shared in my session the next day, implementing concepts of a Product Service System will require more of a DevOps type of graph (more next week).

Next, Peter went through CIMdata’s Critical Dozen with some updates. You can look at the updated 2024 image here.

Some of the changes: Digital Thread and Digital Twin are merged – as Digital Twins do not run on documents. And instead of focusing on Artificial Intelligence only, CIMdata introduced Augmented Intelligence, as we should also consider solutions that augment human activities, not just replace them.

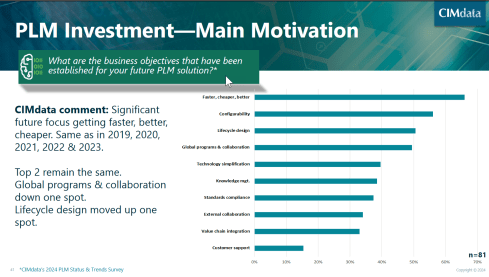

Peter also shared the results of a recent PLM survey where companies were asked about their main motivation for PLM investments. I found the result a little discouraging for several reasons:

The number one topic is still faster, cheaper and better – almost 65 % of the respondents see this as their priority. This number one topic illustrates that Sustainability has not reached the level of urgency, and perhaps the topic can be found in standards compliance.

Many of the companies with Sustainability in their mission should understand that a digital PLM infrastructure is the foundation for most initiatives, like Lifecycle Analysis (LCA). Sustainability is more than part of standards compliance, if it was mentioned anyway.

The second disappointing observation for the understanding of PLM is that customer support is mentioned by only 15 % of the companies. Again, connecting your products to your customers is the first step to a DevOps approach, and you need to be able to optimize your product offering to what the customer really wants.

Digital Transformation of the Value Chain in Pharma

The second keynote was from Anders Romare, Chief Digital and Information Officer at Novo Nordisk. Anders has been participating in the PDT conference in the past. See my 2016 PLM Roadmap/PDT Europe post, where Anders presented on behalf of Airbus: Digital Transformation through an e2e PLM backbone.

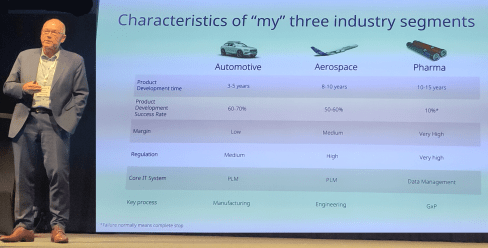

Anders started by sharing some of the main characteristics of the companies he has been working for: Volvo, Airbus and now Novo Nordisk. It is interesting to compare these characteristics as they say a lot about the industry’s focus. See below:

Anders is now responsible for digital transformation in Novo Nordisk, which is a challenge in a heavily regulated industry.

One of the focus areas for Novo Nordisk in 2024 is also Artificial Intelligence, as you can see from the image to the left (click on it for the details).

Like many others at this conference, Anders mentioned that AI is only applicable when it runs on top of accurate data.

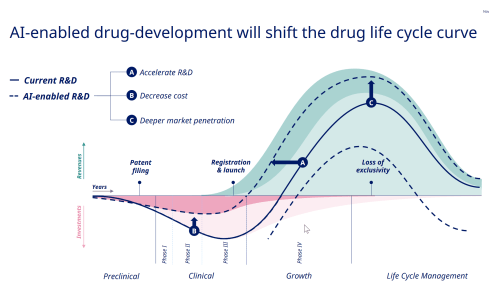

Understanding the potential of AI, they identified 59 areas where AI can create value for the business, and it is interesting to compare the traditional PLM curve Peter shared in his session with the potential AI-enabled drug-development curve as presented by Anders below:

Next, Anders shared some of the example cases of this exploration, and if you are interested in the details, visit their tech.life site.

When talking about the engineering framing of PLM, it was interesting to learn from Anders, who had a long history in PLM before Novo Nordisk, when he replied to a question from the audience that he would never talk about PLM at the management level. It’s very much aligned with my Don’t mention the P** word post.

A Strategy for the Management of Large Enterprise PLM Platforms

One of the highlights for me on Day 1 was Jorgen Dahl‘s presentation. Jorgen, a senior PLM director at GE Aerospace, shared their story towards a single PLM approach needed due to changes in businesses. And addressing the need for a digital thread also comes with an increased need for uptime.

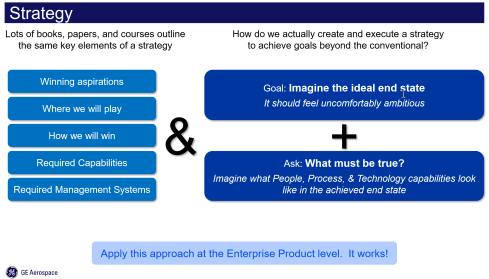

I like his strategy to execution approach, as shown in the image below, as it contains the most important topics. The business vision and understanding, the imagination of the end status and What must be True?

In my experience, the three blocks are iteratively connected. When describing the strategy, you might not be able to identify the required capabilities and management systems yet.

But then, when you start to imagine the ideal end state, you will have to consider them. And for companies, it is essential to be ambitious – or, as Jorgen stated, uncomfortable ambitious. Go for the 75 % to almost 100 % to be true. Also, asking What must be True is an excellent way to allow people to be involved and creatively explore the next steps.

Note: This approach does not provide all the details, as it will be a multiyear journey of learning and adjusting towards the future. Therefore, the strategy must be aligned with the culture to avoid continuous top-down governance of the details. In that context, Jorgen stated:

“Culture is what happens when you leave the room.”

It is a more positive statement than the famous Peter Drucker quote: “Culture eats strategy for breakfast.”

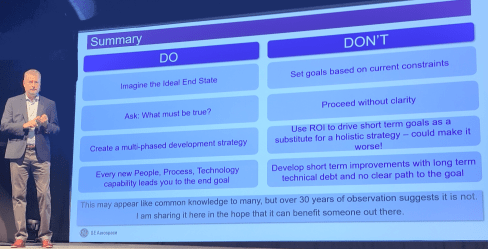

Jorgen’s concluding slide mentions potential common knowledge, although I believe the way Jorgen used the right easy-to-digest points will be helpful for all organizations to step back, look at their initiatives, and compare where they can improve.

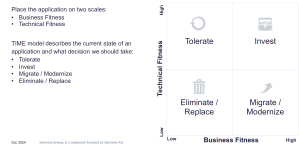

How a Business Capability Model and Application Portfolio Management Support Through Changing Times

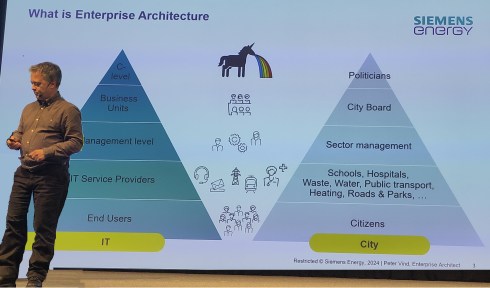

Peter Vind‘s presentation was nicely connected to the presentation from Jorgen Dahl. Peter, who is an enterprise architect at Siemens Energy, started by explaining where the enterprise architect fits in an organization and comparing it to a city.

In his entertaining session, he mentioned he has to deal with the unicorns at the C-level, who, like politicians in a city, sometimes have the most “innovative” ideas – can they be realized?

Peter explained how they used Business Capability Modeling when Siemens Energy went through various business stages. First, the carve-out from Siemens AG and later the merger with Siemens Gamesa. Their challenge is to understand which capabilities remain, which are new or overlapping, both during the carve-out and merging process.

The business capability modeling leads to a classification of the applications used at different levels of the organization, such as customer-facing, operational, or supporting business capabilities.

Next, for the lifecycle of the applications, the TIME approach was used, meaning that each application was mapped to business fitness and technical fitness. Click on the diagram to see the details.
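As an illustration only, the mapping of applications to business and technical fitness can be sketched in a few lines of Python. The sketch assumes the commonly used reading of TIME as Tolerate, Invest, Migrate, Eliminate; the application names, the 0 to 1 scoring scale and the 0.5 threshold are hypothetical and not taken from Peter’s presentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Application:
    name: str
    business_fitness: float   # hypothetical scale: 0.0 (poor) to 1.0 (excellent)
    technical_fitness: float  # hypothetical scale: 0.0 (poor) to 1.0 (excellent)


def time_category(app: Application, threshold: float = 0.5) -> str:
    """Place an application in a TIME quadrant (assumed quadrant definitions)."""
    business_ok = app.business_fitness >= threshold
    technical_ok = app.technical_fitness >= threshold
    if business_ok and technical_ok:
        return "Invest"      # fits the business and the technology is sound
    if business_ok:
        return "Migrate"     # valuable for the business, weak technology
    if technical_ok:
        return "Tolerate"    # sound technology, limited business fit
    return "Eliminate"       # weak on both axes


# Hypothetical portfolio, for illustration only.
portfolio = [
    Application("Legacy PDM", business_fitness=0.8, technical_fitness=0.2),
    Application("Enterprise PLM", business_fitness=0.9, technical_fitness=0.8),
    Application("Departmental tool", business_fitness=0.3, technical_fitness=0.7),
    Application("Old file share", business_fitness=0.1, technical_fitness=0.1),
]

for app in portfolio:
    print(f"{app.name}: {time_category(app)}")
```

In practice, the scores would come from assessments with business and IT stakeholders, and the threshold is a discussion point rather than a fixed number.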

The result could look like the mapping shown below – a comprehensive overview of where the action is.

It is a rational approach; however, Peter mentioned that we also should be aware of the HiPPOs in an organization. If there is a HiPPO (Highest Paid Person’s Opinion) in play, you might face a political battle too.

It was a great educational session illustrating the need for an Enterprise Architect, the value of business capabilities modeling and the TIME concept.

And some more …

There were several other exciting presentations during day 1; however, as not all presentations are publicly available, I cannot discuss them in detail; I just looked at my notes.

Driving Trade Compliance and Efficiency

Peter Sandeck, Director of Project Management at TE Connectivity, shared what they did to motivate engineers to endorse their Jurisdiction and Classification Assessment (JCA) process. Peter showed how, through a Minimal Viable Product (MVP) approach and listening to the end-users, they reached a higher Customer Satisfaction (CSAT) score after several iterations of the solution developed for the JCA process.

This approach is an excellent example of an agile method in which engineers are involved. My remaining question is still – are the same engineers in the short term also pushed to make lifecycle assessments? More work; however, I believe if you make it personal, the same MVP approach could work again.

Value of Model-Based Product Architecture

Jussi Sippola, Chief Expert, Product Architecture Management & Modularity at Wärtsilä, presented an excellent story related to the advantages of a more modular product architecture. Where historically, products were delivered based on customer requirements through the order fulfillment process, now there is in parallel the portfolio management process, defining the platform of modules, features and options.

Jussi mentioned that they were able to reduce the number of parts by 50 % while still maintaining the same level of customer capabilities. In addition, thanks to modularity, they were able to reduce the production lead time by 40 % – essential numbers if you want to remain competitive.

Conclusion

Day 1 was a day where we learned a lot as an audience, and in addition, the networking time and dinner in the evening were precious for me and, I assume, also for many of the participants. In my next post, we will see more about new ways of working, the AI dream and Sustainability.

In the past months, I have had several discussions related to migrating PLM data, either from one system to another or from consolidating a collection of applications into a single environment. Does this sound familiar?

Let me share some experiences and lessons learned to avoid the Migration Migraine.

It is not a technical guide but a collection of experiences and thoughts that you might have missed while focusing on the technical dream.

Halfway I realized I was too ambitious; therefore, another post will follow this introduction. Here, I will focus on the business side and the digital transformation journey.

Garbage Out – Garbage In

The Garbage Out-In statement is somehow the paradigm we are used to in our day-to-day lives. When you buy a new computer, you use backup and restore. Even easier, nowadays, the majority of the data is already in the cloud.

This simple scenario assumes that all professional systems should be easily upgrade-able. We become unaware of the amount of data we store and its relevance.

This phenomenon already has a name: “Dark Data.” Dark Data consumes storage energy in the cloud and is no longer visible. Please read all about it here: Dark Data.

TIP 1: Every migration is a moment to clean up your data. By dragging everything with you, the burden of migrating becomes bigger. In easy migrations, do a clean-up—it prevents future, more extensive issues.

Never follow the Garbage Out – Garbage In principle, even if it is easy!

Migrations in the PLM domain are different – setting the scene.

Before discussing the various scenarios, let’s examine what companies are doing. For early PLM adopters in the Automotive, Aerospace, and Defense Industries, migrations from mainframes to modern infrastructures have become impossible. The real problem is not only the changing hardware but also the changing data and data models.

For these companies, the solution is often to build an entirely new PLM infrastructure on top of the existing infrastructure, where manageable data pieces are migrated to new environments using data lakes, dashboards, and custom apps to support modern users.

Migration in this case is a journey as long as the data lives – and we can learn from them!

Follow the money

From a business perspective, migrations are considered a negative distractor. Talking about them raises awareness of their complexity, which might jeopardize enthusiasm.

For the initiator, the PLM software vendor or implementer, it might endanger the sales deal.

Traditional IT organizations strive for simplification—one CAD, one PLM or one ERP system to manage. Although this argument makes sense, an analysis should always be done comparing the benefits and the (migration) costs and risks to reach the ideal situation.

In those discussions, migrations are often downplayed.

Without naming companies, I have observed the downplaying several times, even at some prominent enterprises. So, if you recognize your company in this process, you are not alone.

TIP 2: Migrations are never simple. Make migration a serious topic of your PLM project – as important as the software. This approach means that you need to analyze the potential migration risks and their mitigation.

Please read about the Xylem story in my recent post: The week after the PDSFORUM 2024

The Big Bang has the highest risk and might again lead to garbage out—garbage in.

You are responsible for your garbage.

It may sound disparaging, but it is not. Most companies are aware that people, tools and policies have changed over the years. Due to the coordinated approach to working, disciplines did not need to care about downstream or upstream usage of their initially created data – Excel and PDFs are the bridges between disciplines.

All the actual knowledge and context are stored in the heads of experienced employees who have gotten used to dealing with inconsistencies. And they will retire, so there is an urgent need for actual data quality and governance. Read more about the journey from Coordinated to Connected in these articles.

Even if you are not yet thinking about migrations, the digital transformation in the PLM domain is coming, and we should learn to work in a connected mode.

TIP 3: Create a team in your organization that assesses the current data quality and defines the potential future enterprise (data) architecture. Then, start improving the quality of the currently generated data. Like the ISO 900x standard, the ISO 8000 standard already exists for data quality.

The future is data-driven; prepare yourself for the future.

Migration scenarios and their best practices

Here are some migration scenarios – two in this post and more in an upcoming post.

From Relational to Object-oriented

One of my earlier projects, starting in 2010 with SmarTeam, was migrating a mainframe-based application for airplane certification to a modern Microsoft infrastructure.

The goal was to create a new environment that could be used both as a replacement for the mainframe application and as the design and validation environment to implement changes to the current airplanes during a maintenance or upgrade activity.

The need was high because detailed documentation about the logic of the current application did not exist, and only one person who understood the logic was partly available.

So, internally, the relational database was a black box. The tables in the database contained a mix of item data, document data, change status and versions. The documents were stored in directories with meaningful file names but disconnected from the application.

The initial estimate was that the project would take two to three months, so a fixed price for two months was agreed upon. However, it became almost a two-year project, and in the end, the result seemed to be reliable (there was never mathematical proof).

The disadvantage was that SmarTeam ended up being so highly customized that automatic upgrades would not work for this version anymore—a new legacy was created with modern technology.

The same story, combined with the example of Ericsson’s migration attempt, is described in the 2016 post, The PLM Migration Dilemma. For me, the lesson learned from these examples leads to the following recommendation.

TIP 4: When there is a paradigm change in the data model, don’t migrate but establish a new (data-driven) infrastructure and connect to your legacy as much as possible in read-only mode.

The automotive and aerospace industries’ story is one of paradigm change.

Listen to the SharePLM podcast Revolutionizing PLM: Insights from Yousef Hooshmand, where Yousef also discusses how to address this transition process.

CAD/PDM to PLM

Another migration step happens when companies decide to implement a traditional PLM infrastructure as a System of Record, merging PDM data (mainly CAD) and ERP data (the BOM).

Some of these companies have been working file-based and have stored their final CAD files in folders; others might have a local PDM system native to the 3D CAD. The EBOM usually existed digitally in ERP, and most of the time, it is not a “pure” EBOM but more of a hybrid EBOM/MBOM.

The image above shows that this type of migration can be very challenging as, in the source systems, there is not necessarily a consistent 3D CAD definition matching the BOM items. As the systems have been disconnected in the past, people have potentially added missing information or fixed information on the BOM side. As in most companies, the manufacturing definition is based on drawings, and the consistency with the 3D CAD definition is not guaranteed.

To address this challenge, companies need to assess the usability of the CAD and BOM data. Is it possible to populate the CAD files with properties that are necessary for an import? For example, does the file path contain helpful information?
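As a minimal sketch of such an assessment, the Python snippet below tries to derive import properties from a file path. The folder convention (project/assembly/partnumber_revision.extension), the property names and the example paths are hypothetical; a real legacy folder structure will need its own rules, and files that do not match should be flagged for review rather than guessed.

```python
import re
from pathlib import PurePosixPath
from typing import Optional

# Hypothetical file-name convention: <part_number>_<revision>, e.g. "P-10023_B"
FILENAME_PATTERN = re.compile(
    r"^(?P<part_number>[A-Za-z0-9-]+)_(?P<revision>[A-Za-z0-9]+)$"
)


def properties_from_path(path: str) -> Optional[dict[str, str]]:
    """Derive import properties from a path, or return None if it does not fit."""
    p = PurePosixPath(path)
    match = FILENAME_PATTERN.match(p.stem)
    if match is None or len(p.parts) < 3:
        return None  # does not follow the assumed convention: review manually
    return {
        "project": p.parts[-3],
        "assembly": p.parts[-2],
        "part_number": match["part_number"],
        "revision": match["revision"],
        "file_type": p.suffix.lstrip(".").lower(),
    }


# Hypothetical example paths.
for candidate in ["pumps/housing/P-10023_B.SLDPRT", "misc/drawing_final_v2.dwg"]:
    print(candidate, "->", properties_from_path(candidate))
```

Running such a script over the complete file store gives a quick impression of how much of the legacy CAD data carries usable information and how much would need manual enrichment.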

I have experienced a situation where a company has poorly defined 3D parts and no properties, as all the focus was on using the 3D to generate the 2D drawing.

The relevant details for manufacturing were then added to the drawing and no longer to the parts or models – traceability was almost impossible.

In this situation, importing the 3D CAD structures into the new PLM system has limited value. An alternative is to describe and test procedures for handling legacy data when it is needed, either to implement a design change or a new order. Leave the legacy accessible, but do not migrate.

The BOM side is, in theory, stable for manufactured products, as the data should have gone through a release process. However, the company needs to revisit its part definition process for new designs and products.

Some points to consider:

- Meaningful identifiers are not desired in a PLM system as they create a legacy. Therefore, the import of parts with smart identifiers should map to relevant part properties besides the ID. Splitting the ID into properties will create a broader usage in the future. Read more in Smart Part Numbers – do we need them?

- In addition, companies should try to avoid having logistic information, such as supplier-specific part numbers, come from the CAD system. Supplier parts in your CAD environment create inefficiencies when a supplier part becomes obsolete. Concepts such as EBOM and MBOM and potentially the SBOM should be well understood during this migration.

- Concepts of EBOM and MBOM should also be introduced when moving from an ETO to a CTO approach or when modularity is a future business strategy.
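To make the first point about smart identifiers more concrete, here is a small Python sketch that splits a meaningful part number into separate properties during import while keeping the original ID. The ID format (family-material-size-sequence), the code tables and the property names are invented for illustration; every company will have its own encoding.

```python
# Hypothetical code tables for a smart ID such as "VLV-SS-050-0001"
FAMILY_CODES = {"VLV": "Valve", "PMP": "Pump"}
MATERIAL_CODES = {"SS": "Stainless steel", "AL": "Aluminium"}


def split_smart_id(part_id: str) -> dict[str, object]:
    """Map the segments of a smart ID to part properties; keep the ID itself."""
    properties: dict[str, object] = {"legacy_id": part_id}
    segments = part_id.split("-")
    if len(segments) != 4:
        return properties  # not a smart ID in the assumed format: keep as-is
    family, material, size, _sequence = segments
    if family in FAMILY_CODES:
        properties["family"] = FAMILY_CODES[family]
    if material in MATERIAL_CODES:
        properties["material"] = MATERIAL_CODES[material]
    if size.isdigit():
        properties["nominal_size_mm"] = int(size)
    return properties


print(split_smart_id("VLV-SS-050-0001"))
print(split_smart_id("12345"))
```

Once the meaning lives in properties, new parts can receive a neutral identifier, and searching or classifying no longer depends on decoding the number.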

Conclusion

As every company is on its PLM journey and technology is evolving, there will always be a migration discussion. Understanding and working towards the future should be the most critical driver for migration. Migrations in the PLM domain are often more than a data migration – new ways of working should be introduced in parallel. And for that reason the “big bang” is often too costly and demotivating for the future.

In the past few weeks, together with Share PLM, we recorded and prepared a few podcasts to be published soon. As you might have noticed, for Season 2, our target is to discuss the human side of PLM and PLM best practices and less the technology side. Meaning:

- How to align and motivate people around a PLM initiative?

- What are the best practices when running a PLM initiative?

- What are the crucial skills you need to have as a PLM lead?

And as there are always many success stories to learn from on the internet, we also challenged our guests to share the moments where they gained experience.

As the famous quote says:

Experience is what you get when you don’t get what you expect!

We recently published our podcast with Antonio Casaschi from Assa Abloy, a Swedish company you might have never noticed, although their products and services are a part of your daily life.

It was a discussion close to my heart. We discussed the various aspects of PLM. What makes a person a PLM professional? And if you have no time to listen to these 35 minutes, read and scan the recording transcript on the transcription tab.

At 0:24:00, Antonio mentioned the concept of Proof of Concept as he had good experiences with them in the past. The remark triggered me to share some observations that a Proof of Concept (POC) is an old-fashioned way to drive change within organizations. Not discussed in this podcast but based on my experience, companies have been using Proofs of Concept to win time, as they were afraid to make a decision.

A POC to gain time?

Company A

When working with a well-known company in 2014, I learned they were planning approximately ten POCs per year to explore new ways of working or new technologies. As it was a POC based on an annual time scheme, the evaluation at the end of the year was often very discouraging.

Most of the time, the conclusion was: “Interesting, we should explore this further” /“What are the next POCs for the upcoming year?”

There was no commitment to follow-up; it was more of a learning exercise not connected to any follow-up.

Company B

During one of the PDT events, a company presented their two-year POC with the three leading PLM vendors, exploring supplier collaboration. I understood the PLM vendors had invested much time and resources to support this POC, expecting a big deal. However, the team mentioned it was an interesting exercise, and they learned a lot about supplier collaboration.

And nothing happened afterward ………

In 2019

At the 2019 Product Innovation Conference in London, when discussing Digital Transformation within the PLM domain, I shared in my conclusion that the POC was mainly a waste of time as it does not push you to transform; it is an option to win time but is uncommitted.

My main reason for not pushing a POC is that it is more of a limited feasibility study.

- First, it is often used to push people and processes into the technical capabilities of the systems used. A focus starting from technology is the opposite of what I have been pushing for longer: First, focus on the value stream – people and processes – and then study which tools and technologies support these demands.

- Second, the POC approach often blocks innovation as the incumbent system providers will claim the desired capabilities will come (soon) within their systems—a safe bet.

The Minimum Viable Product approach (MVP)

With the awareness that we need to work differently and benefit from digital capabilities also came the term Minimum Viable Product or MVP.

The abbreviation MVP is not to be confused with the minimum valuable products or most valuable players.

There are two significant differences with the POC approach:

- You admit the solution does not exist anywhere – so it cannot be purchased or copied.

- You commit to the fact that this new approach will be the right direction to take and agree that a perfect fit solution is not blocking you from starting for real.

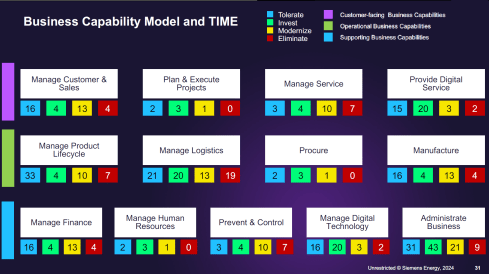

These two differences highlight the main challenges of digital transformation in the PLM domain. Digital Transformation is a learning process – it takes time for organizations to acquire and master the needed skills. And secondly, it cannot be a big bang, and I have often referred to the 2017 article from McKinsey: Toward an integrated technology operating model. Image below.

We will soon hear more about digital transformation within the PLM domain during the next episode of our SharePLM podcast. We spoke with Yousef Hooshmand, currently working for NIO, a Chinese multinational automobile manufacturer specializing in designing and developing electric vehicles, as their PLM data lead.

You might have discovered Yousef earlier when he published his paper: “From a Monolithic PLM Landscape to a Federated Domain and Data Mesh”. It is highly recommended to read the paper if you are interested in a potential future PLM infrastructure. I wrote about this whitepaper in 2022: A new PLM paradigm discussing the upcoming Systems of Engagement on top of a Systems of Record infrastructure.

To align our terminology with Yousef’s wording, his domains align with the Systems of Engagement definition.

As we discovered and discussed with Yousef, technology is not the blocking issue to start. You must understand the target infrastructure well and where each domain’s activities fit. Yousef mentions that there is enough literature about this topic, and I can refer to the SAAB conference paper: Genesis – an Architectural Pattern for Federated PLM.

For a less academic impression, read my blog post, The week after PLM Roadmap / PDT Europe 2022, where I share the highlights of Erik Herzog’s presentation: Heterogeneous and Federated PLM – is it feasible?

There is much to learn and discover which standards will be relevant, as both Yousef and Erik mention the importance of standards.

The podcast with Yousef (soon to be found HERE) was not so much about organizational change management and people.

However, Yousef mentioned the most crucial success factor for the transformation project he supported at Daimler. It was C-level support, trust and understanding of the approach, knowing it will be many years, an unavoidable journey if you want to remain competitive.

And with the journey aspect comes the importance of the Minimal Viable Product. You are starting a journey with an end goal in mind (top-of-the-mountain), and step by step (from base camp to base camp), people will be better covered in their day-to-day activities thanks to digitization.

A POC would not help you make the journey; perhaps a small POC would help you understand what it takes to cross a barrier.

Conclusion

The concept of POCs is outdated in a fast-changing environment where technology is not necessarily the blocking issue. Developing practices, new architectures and using the best-fit standards is the future. Embrace the Minimal Viable Product approach. Are you?

Last week I enjoyed visiting LiveWorx 2023 on behalf of the PLM Global Green Alliance. PTC had invited us to understand their sustainability ambitions and meet with the relevant people from PTC, partners, customers and several of my analyst friends. It felt like a reunion.

In addition, I used the opportunity to better understand their Velocity SaaS offering with OnShape and Arena. The almost four-day event, with approximately 5000 attendees, was massive and well-organized.

So many people were excited that this was again an in-person event after four years.

With PTC’s broad product portfolio, you could easily have a full agenda for the whole event, depending on your interests.

I was personally motivated that I had a relatively full schedule focusing purely on Sustainability, leaving all these other beautiful end-to-end concepts for another time.

Here are some of my observations

Jim Heppelmann’s keynote

The primary presentation of such an event is the keynote from PTC’s CEO. This session allows you to understand the company’s key focus areas.

My takeaways:

- Need for Speed: Software-driven innovation, or as Jim said, Software is eating the BOM, reminding me of my recent blog post: The Rise and Fall of the BOM. Here Jim was referring to the integration with ALM (CodeBeamer) and IoT to have full traceability of products. However, including Software also requires agile ways of working.

- Need for Speed: Agile ways of working – the OnShape and Arena offerings are examples of agile working methods. A SaaS solution is easy to extend with suppliers or other stakeholders. PTC calls this their Velocity offering, typical Systems of Engagement, and I spoke later with people working on this topic. More in the future.

- Need for Speed: Model-based digital continuity – a theme I have discussed in my blog posts too. Here Jim explained the interaction between Windchill and ServiceMax, both Systems of Record for product definition and operations.

- Environmental Sustainability: introducing Catherine Kniker, PTC’s Chief Strategy and Sustainability Officer, announcing that PTC has committed to Science Based Targets, pledging near-term emissions reductions and long-term net-zero targets – see image below and more on Sustainability in the next section.

- A further investment in a SaaS architecture, announcing CREO+ as a SaaS solution supporting dynamic multi-user collaboration (a System of Engagement).

- A further investment in the partnership with Ansys, which fits the needs of a model-based future where modeling and simulation go hand in hand.

You can watch the full session Path to the Future: Products in the Age of Transformation here.

Sustainability

The PGGA spoke with Dave Duncan and James Norman last year about PTC’s sustainability initiatives. Remember: PLM and Sustainability: talking with PTC. Therefore, Klaus Brettschneider and I were happy to meet Dave and James in person just before the event and align on understanding what’s coming at PTC.

We agreed there is no “sustainability super app”; it is more about providing an open, digital infrastructure to connect data sources at any time of the product lifecycle, supporting decision-making and analysis. It is all about reliable data.

Product Sustainability 101

On Tuesday, Dave Duncan gave a great introductory session, Product Sustainability 101, addressing Business Drivers and Technical Opportunities. Dave started by explaining the business context aiming at greenhouse gas (GHG) reduction based on science-based targets, describing the content of Scope 1, Scope 2 and Scope 3 emissions.

The image above, which came back in several presentations later that week, nicely describes the mapping of lifecycle decisions and operations in the context of the GHG protocol.
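To make the three scopes a little more tangible, here is a minimal Python sketch of a scope tally. It assumes the usual GHG Protocol reading: Scope 1 covers direct emissions, Scope 2 covers purchased energy, and Scope 3 covers the rest of the value chain. The activities, the figures and the function names are all invented for illustration; none of them come from Dave's session.

```python
from collections import defaultdict

# Hypothetical emission records: (activity, GHG Protocol scope, tonnes CO2e).
# Every activity and figure here is invented for illustration only.
RECORDS = [
    ("on-site gas boiler", 1, 120.0),
    ("company vehicles", 1, 45.5),
    ("purchased electricity", 2, 310.0),
    ("purchased parts (supplier manufacturing)", 3, 1800.0),
    ("product use by customers", 3, 2400.0),
    ("end-of-life treatment", 3, 150.0),
]


def totals_by_scope(records):
    """Sum tonnes CO2e per scope (1, 2 or 3)."""
    totals = defaultdict(float)
    for _activity, scope, tonnes in records:
        if scope not in (1, 2, 3):
            raise ValueError(f"unknown scope: {scope}")
        totals[scope] += tonnes
    return dict(totals)


def scope_shares(records):
    """Return each scope's fraction of the overall footprint."""
    totals = totals_by_scope(records)
    grand_total = sum(totals.values())
    return {scope: value / grand_total for scope, value in totals.items()}


if __name__ == "__main__":
    for scope, share in sorted(scope_shares(RECORDS).items()):
        print(f"Scope {scope}: {share:.1%}")
```

With these made-up numbers, Scope 3 dominates the total. That pattern is why product design and supplier data keep coming back in the sessions described here.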

Design for Sustainability (DfS)

On Wednesday, I started with a session moderated by James Norman titled Design for Sustainability: Harnessing Innovation for a Resilient Future. The panel consisted of Neil D’Souza (CEO Makersite), Tim Greiner (MD Pure Strategies), Francois Lamy (SVP Product Management PTC) and Asheen Phansey (Director ESG & Sustainability at PagerDuty). You can find the topics discussed below:

Some of the notes I took:

- No specific PLM modules are needed. Life cycle assessment (LCA) needs to become an additional practice for companies, and they rely on a connected infrastructure.

- Where to start? First, understand the current baseline based on data collection – what is your environmental impact? Next, decide where to start.

- The importance of Design for Service – many companies design products for easy delivery, not for service. Being able to service products better will extend their lifetime, therefore reducing their environmental impact (manufacturing/decommissioning)

- There is a value chain for carbon data. In addition, suppliers significantly impact reaching net zero, as many OEMs have an Assembly To Order process, and most of the emissions occur during part manufacturing.

DfS: an example from Cummins

Next, on Wednesday, I attended the session from David Genter from Cummins, who presented their Design for Sustainability (DfS) project.

Dave started by sharing their 2030 sustainability goals:

- On Facilities and Operations: A reduction of 50% of GHG emissions, reducing water usage by 30%, reducing waste by 25% and reducing organic compound emissions by 50%

- Reducing Scope 3 emissions for new products by 25%

- In general, reducing Scope 3 emissions by 55M metric tons.

The benefits for products were documented using a standardized scorecard (example below) to ensure the benefits are real and not based on wishful thinking.

Many motivated people wanted to participate in the project, and the ultimate result demonstrated that DfS has value both for Cummins as a business and for the environment.

The project has been very well described in this whitepaper: How Cummins Made Changes to Optimize Product Designs for the Environment – a recommended case study to read.

Tangible Strategies for Improving Product Sustainability

The session was a dialogue between Catherine Kniker and Dave Duncan, discussing the strategies to move forward with Sustainability.

They reiterated the three areas where we as a PLM community can improve: Material choice and usage, Addressing Energy Emissions and Reducing Waste. And it is worth addressing them all, as you can see below – it is not only about carbon reduction.

It was an informative dialogue going through the different aspects of where we, as an engineering/ PLM community, can contribute. You can watch their full dialog here: Tangible Strategies for Improving Product Sustainability.

Conclusion

It was encouraging to see that at an event such as LiveWorx, you could learn about Sustainability and discuss Sustainability with the audience and PTC partners. And as I mentioned before, we need to learn to measure (data-driven / reliable data), and we need to be able to work in a connected infrastructure (digital thread) to allow design, simulation, validation and feedback to go hand in hand. It requires adapting a business strategy, not just a tactical solution. With the PLM Global Green Alliance, we are looking forward to following up on these.

NOTE: PTC covered the expenses associated with my participation in this event but did not in any way influence the content of this post – I made my way through the conference fully independently and was encouraged by all the conversations I had.

This month it is exactly 15 years ago that I started my blog, a little bit nervous and insecure. Blogging had not reached mainstream yet, and how would people react to my shared experiences?

The main driver behind my blog in 2008 was to share field experiences when implementing PLM in the mid-market.

As a SmarTeam contractor working closely with Dassault and IBM PLM, I learned that implementing PLM (or PDM) is more than a technology issue.

Discussing implementations made me aware of the importance of the human side. Customers had huge expectations with such a flexible toolkit, and implementers made money by providing customization to any user request.

There was no discussion about whether it was needed, as the implementer always said: “Yes, we can (if you pay)”.

The parallel tree

And that’s where my mediation started. At a particular moment, the customer started to get annoyed by yet another customization. The concept of a “parallel tree,” a sync between the 3D CAD structure and the BOM, was often a point of discussion.

So many algorithms have been invented to convert a 3D CAD structure into a manufacturing BOM. Some companies even designed glue and paint in CAD, because that way they would appear in the BOM.
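To illustrate what such a conversion involves, here is a minimal Python sketch of a quantity rollup from a CAD-like assembly tree to a flat parts list. It is not one of the algorithms used in those projects. The class `CadNode`, the functions `rollup` and `manufacturing_bom`, and the part numbers are hypothetical, and a real manufacturing BOM also involves restructuring that this sketch ignores. The `non_modeled_items` argument shows one possible alternative to drawing glue and paint in CAD: adding them on the BOM side.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field


@dataclass
class CadNode:
    """One node in a (hypothetical) 3D CAD product structure."""
    part_number: str
    quantity: int = 1  # occurrences within the parent assembly
    children: list[CadNode] = field(default_factory=list)


def rollup(node, multiplier=1, bom=None):
    """Collect the total quantity of every leaf part below `node`."""
    if bom is None:
        bom = Counter()
    total = multiplier * node.quantity
    if not node.children:
        bom[part_key(node)] += total
    else:
        for child in node.children:
            rollup(child, total, bom)
    return bom


def part_key(node):
    """Key used in the BOM; here simply the part number."""
    return node.part_number


def manufacturing_bom(root, non_modeled_items):
    """Flat BOM: rolled-up CAD parts plus items that have no geometry."""
    bom = rollup(root)
    bom.update(non_modeled_items)  # Counter.update adds the quantities
    return dict(bom)


# Illustrative structure: a bike with two identical wheel assemblies.
wheel = CadNode("WHEEL-ASSY", quantity=2, children=[
    CadNode("RIM"),
    CadNode("SPOKE", quantity=32),
    CadNode("TIRE"),
])
bike = CadNode("BIKE", children=[CadNode("FRAME"), wheel])

if __name__ == "__main__":
    print(manufacturing_bom(bike, {"PAINT-RED": 1, "GLUE": 1}))
```

Even this toy version shows why the discussion never ended: the moment the CAD structure and the BOM differ in purpose, someone has to decide where the extra items and the restructuring logic live.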

The “exploded” data model

Among the customizations that ended up in failure were the ones with a crazy data model: too many detailed classes and too many attributes per class.

Monsters were created by well-meaning IT departments that collected all the user needs but delivered something unworkable for the end users. See my 2015 post here: The Importance of a PLM data model

The BOM concepts

While concepts and best practices have become stable for traditional PLM, where we talk more about a Product Information backbone, there is still considerable debate about this type of implementation. The leading cause for the discussion is that companies often start from their systems and newly purchased systems and then try to push the people and processes into that environment.

For example, see this recent discussion we had with Oleg Shilovitsky (PLM, ERP, MES) and others on LinkedIn.

These were the days before we entered into digital transformation in the PLM domain. Starting from 2015, you can see the mission in my blog posts: exploring what a digital enterprise would look like and what the role of PLM will be.

The Future

Some findings I can already share:

- No PLM system can do it all – where historically, companies bought a PLM system; now, they have to define a PLM strategy where the data can flow (controlled) in any direction. The PLM strategy needs to be based on value streams of connected data between relevant stakeholders supported by systems of engagement. From System to Strategy.

- Master Data Management and standardization of data models might still be a company’s internal activity (as the environment is stable). Still, to the outside world/domains, there is a need for flexible connections (standard flows / semantic web). From Rigid to Flexible.

- The meaning of the BOM will change from coordinated structures towards an extract of a data-driven PLM environment, where the BOM mainly represents the hardware connected to software releases. Configuration management practices must also change (see Martijn – and the Rise and Fall of the BOM). From Placeholders to Baselines.

- Digital Transformation in the PLM domain is not an evolution of the data. Legacy data has never been designed to be data-driven; migration is a mission impossible. Therefore there is a need to focus on a hybrid environment with two modes: enterprise backbone (System of Record) and product-centric infrastructure (Systems of Engagement). From Single Source of Truth to Authoritative Source of Truth.

Switching Gears

Next week I will reach the eligible age for my Dutch pension, allowing me to switch gears.

Instead of driving in high-performance mode, I will start practicing driving in a touristic mode, moving from points of interest to other points of interest while caring for the environment.

Here are some of the topics to mention at this moment.

Reviving the Share PLM podcast

Together with the Share PLM team, we decided to revive their podcast as Season 2. I referred to their podcast last year in my PLM Holiday thoughts 2022 post.

The Share PLM team has always been the next level of what I started alone in 2008: sharing and discussing PLM topics with an interest in the human side, and supporting organizational change through targeted e-learning deliverables based on the purpose of a PLM implementation. People (first), Processes (needed) and the Tools (how) – in this order.

In Season 2 of the podcast, we want to discuss with experienced PLM practitioners the various aspects of PLM – not only success stories you often hear at PLM conferences.

Experience is what you get when you do not get what you expect.

And PLM is a domain where experience with people, processes and tools counts.

Follow our podcast here, subscribe to it on your favorite platform and feel free to send us questions. Besides the longer interviews, we will also discuss common questions in separate recordings or as a structured part of the podcast.

Sustainability!

I noticed from my Sustainability related blog posts that they resonate less with my blogging audience. I am curious about the reason behind this.

Does it mean that, in our PLM community, Sustainability is still too vague and not addressed in the reader’s daily environment? Or is it because people do not see the relation to PLM and are more focused on carbon emissions, greenhouse gases and the energy transition – a crucial part of the sustainable future that currently gets much attention?

This week I read this post: CEO priorities from 2019 until now: What has changed? As the end result shows below, sustainability was ranked #7 in 2019, and after some ups and downs, it is still at priority level #7. This worries me, as it illustrates that at the board level, not much has changed, despite the increasing understanding of the environmental impact and the recent warnings from the climate. The warnings have not reached the boardrooms yet.