For those of you following my blog over the years, there is, every time after the PLM Roadmap PDT Europe conference, one or two blog posts, where the first starts with “The weekend after ….”

This time, November has been a hectic month for me, starting with the engaging workshop “Shape the future of PLM – together.” You can read about it in my blog post or the latest post from Arrowhead fPVN, the sponsor of the workshop.

Last week, I celebrated with the core team from the PLM Green Global Alliance our 5th anniversary, during which we discussed sustainability in action. The term sustainability is currently under the radar, but if you want to learn what is happening, read this post with a link to the webinar recording.

Last week, I was also active at the PTC/User Benelux conference, where I had many interesting discussions about PTC’s strategy and portfolio. A big and well-organized event in the town where I grew up in the world of teaching and data management.

And now it is time for the PLM roadmap / PDT conference review

The conference

The conference is my favorite technical conference 😉 for learning what is happening in the field. Over the years, we have seen reports from the Aerospace & Defense PLM Action Groups, which systematically work on various themes related to a digital enterprise. The usage of standards, MBSE, Supplier Collaboration, Digital Thread & Digital Twin are all topics discussed.

This time, the conference was sold out with 150+ attendees, just fitting in the conference space. The two-day program started with a challenging day 1 of advanced topics, and on day 2 we saw more company experiences.

Combined with the traditional dinner in the middle, it was again a great networking event to charge the brain. We still need the brain besides AI. Some of the highlights of day 1 in this post.

PLM’s Integral Role in Digital Transformation

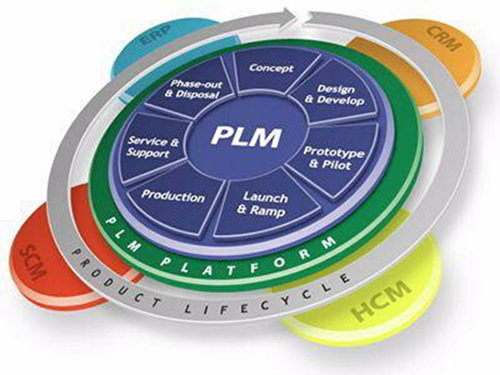

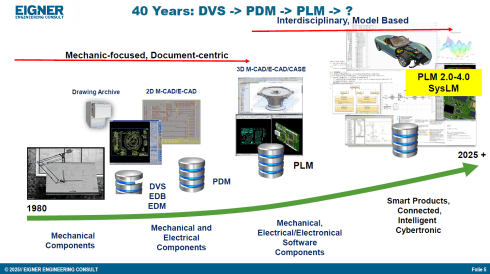

As usual, Peter Bilello, CIMdata’s President & CEO, kicked off the conference, and his message has not changed over the years. PLM should be understood as a strategic, enterprise-wide approach that manages intellectual assets and connects the entire product lifecycle.

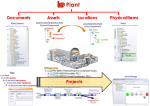

I like the image below explaining the WHY behind product lifecycle management.

It enables end-to-end digitalization, supports digital threads and twins, and provides the backbone for data governance, analytics, AI, and skills transformation.

Peter walked us briefly through CIMdata’s Critical Dozen (a YouTube recording is available here), all of which are relevant to the scope of digital transformation. Without strong PLM foundations and governance, digital transformation efforts will fail.

The Digital Thread as the Foundation of the Omniverse

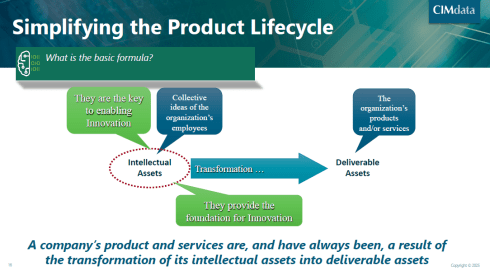

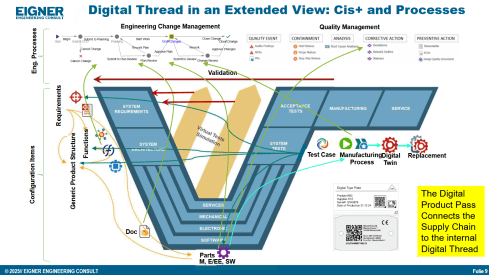

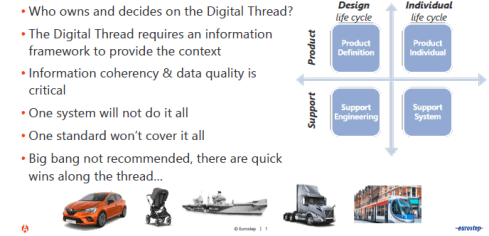

Prof. Dr.-Ing. Martin Eigner, well known for his lifetime passion and vision in product lifecycle management (PDM and PLM tools & methodology), shared insights from his 40-year journey, highlighting the growing complexity and ever-increasing fragmentation of customer solution landscapes.

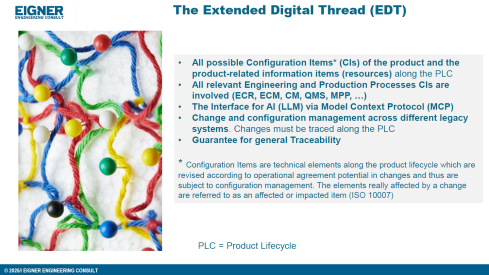

In his current eco-system, ERP (read SAP) is playing a significant role as an execution platform, complemented by PDM or ECTR capabilities. Few of his customers go for the broad PLM systems, and therefore, he stresses the importance of the so-called Extended Digital Thread.

Prof. Eigner describes the EDT more precisely as an overlaying infrastructure implemented by a graph database that serves as a performant knowledge graph of the enterprise.

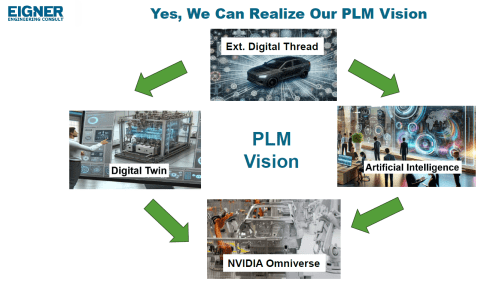

The EDT serves as the foundation for AI-driven applications, supporting impact analysis, change management, and natural-language interaction with product data. The presentation also provides a detailed view of Digital Twin concepts, ranging from component to system and process twins, and demonstrates how twins enhance predictive maintenance, sustainability, and process optimization.
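
To make the idea of impact analysis on a knowledge graph a bit more tangible, here is a minimal sketch in Python. It is my own illustration, not Prof. Eigner's implementation: the artifact IDs, the relation names, and the helpers `build_graph` and `impacted_by` are all hypothetical, and a real EDT would sit on a graph database rather than on an in-memory dictionary.

```python
from collections import defaultdict, deque

# Hypothetical lifecycle artifacts and trace links (assumed for illustration;
# none of these names come from the presentation).
LINKS = [
    ("REQ-12", "satisfied_by", "PART-7"),
    ("PART-7", "used_in", "ASSY-3"),
    ("PART-7", "made_by", "PROC-4"),
    ("ASSY-3", "verified_by", "TEST-9"),
]


def build_graph(links):
    """Index the links as an adjacency list: source -> [(relation, target)]."""
    graph = defaultdict(list)
    for source, relation, target in links:
        graph[source].append((relation, target))
    return graph


def impacted_by(graph, changed):
    """Breadth-first walk returning every artifact reachable from `changed`."""
    seen = set()
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for _relation, target in graph.get(node, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


graph = build_graph(LINKS)
impact = sorted(impacted_by(graph, "REQ-12"))
print(impact)  # ['ASSY-3', 'PART-7', 'PROC-4', 'TEST-9']
```

The point of the sketch is only that once artifacts are connected as a graph, "what is affected if this requirement changes?" becomes a traversal rather than a manual search through separate systems.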

The presentation positions NVIDIA Omniverse as the next step toward immersive, real-time collaboration and simulation, enabling virtual factories and physics-accurate visualization. The outlook emphasizes that combining EDT, Digital Twin, AI, and Omniverse moves the industry closer to the original PLM vision: a unified, consistent Single Source of Truth 😮 that boosts innovation, efficiency, and ROI.

For me, hearing and reading the term Single Source of Truth still creates discomfort with reality and humanity, so we still have something to discuss.

Semantic Digital Thread for Enhanced Systems Engineering in a Federated PLM Landscape

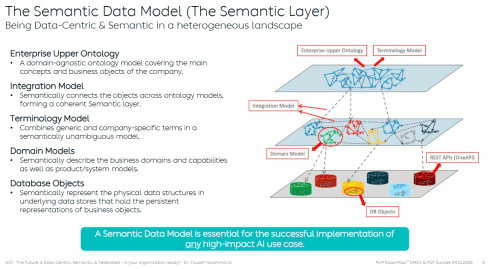

Dr. Yousef Hooshmand’s presentation was a great continuation of the Extended Digital Thread theme discussed by Dr. Martin Eigner. Where the core of Martin’s EDT is based on traceability between artifacts and processes throughout the lifecycle, Yousef introduced a (for me) totally new concept: starting with managing and structuring the data to manage the knowledge, rather than starting from the models and tools to understand the knowledge.

It is a fundamentally different approach to addressing the same problem of complexity. During our pre-conference workshop “Shape the future of PLM – together,” I already got a bit familiar with this approach, and Yousef’s recently released paper provides all the details.

All the relevant information can be found in his recent LinkedIn post here.

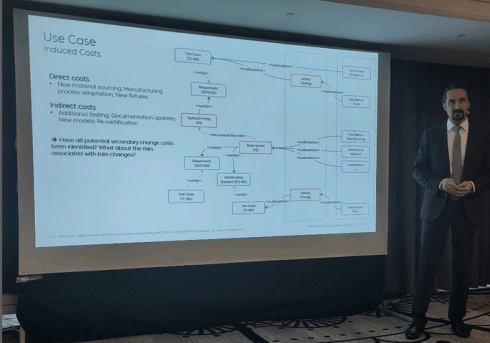

In his presentation during the conference, Yousef illustrated the value and applicability of the Semantic Digital Thread approach by presenting it in an automotive use case: Impact Analysis and Cost Estimation (image above)

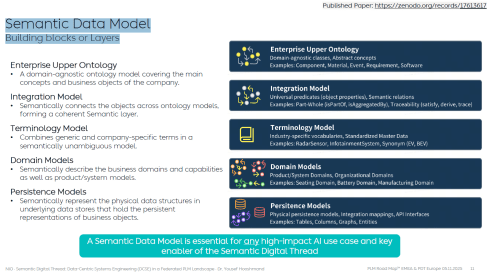

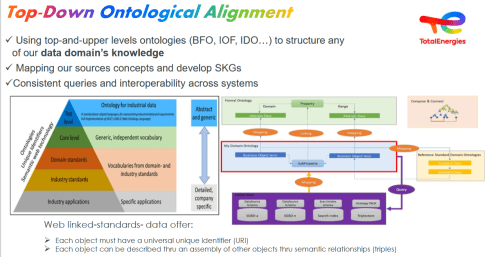

To understand the Semantic Digital Thread, it is essential to understand the Semantic Data Model and its building blocks or layers, as illustrated in the image below:

In addition, such an infrastructure is ideal for AI applications and avoids vendor- or tool lock-in, providing a significant long-term advantage.

I am sure it will take time to digest the content if you are entering the domain of a data-driven enterprise (the connected approach) instead of a document-driven enterprise (the coordinated approach).

However, as many of the other presentations on day 1 also stated: “data without context is worthless – then they become just bits and bytes.” For advanced and future scenarios, you cannot avoid working with ontologies, semantic models and graph databases.
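
What "context" means here can be shown with a toy example. The sketch below is my own simplification and not taken from any of the presentations: the concept names, the item IDs, and the helpers `ancestors` and `is_a` are hypothetical. A bare item ID says nothing, but once it is placed in a small concept hierarchy, software can answer questions that were never stored explicitly.

```python
# A toy ontology: each concept points to its parent concept.
# All concept and item names are hypothetical.
SUBCLASS_OF = {
    "Bolt": "Fastener",
    "Fastener": "MechanicalPart",
    "Sensor": "ElectronicPart",
    "MechanicalPart": "Part",
    "ElectronicPart": "Part",
}

# Each item is classified once, with its most specific concept.
INSTANCE_OF = {"item-001": "Bolt", "item-002": "Sensor"}


def ancestors(concept):
    """Return all broader concepts of `concept`, nearest first."""
    chain = []
    while concept in SUBCLASS_OF:
        concept = SUBCLASS_OF[concept]
        chain.append(concept)
    return chain


def is_a(item, concept):
    """True if the item belongs to `concept` directly or through the hierarchy."""
    direct = INSTANCE_OF[item]
    return concept == direct or concept in ancestors(direct)


print(is_a("item-001", "MechanicalPart"))  # True, inferred via Fastener
print(is_a("item-002", "MechanicalPart"))  # False
```

Real ontologies add far more than a subclass tree (properties, constraints, reasoning), but even this small hierarchy shows why the same bits and bytes become more useful once their meaning is modeled.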

Where is your company on the path to becoming more data-driven?

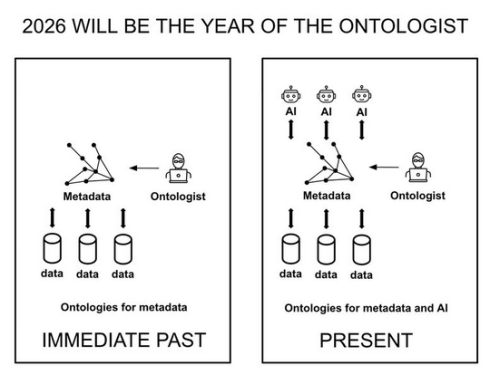

Note: I just saw this post and the image above, which emphasizes the importance of the relationship between ontologies and the application of AI agents.

Evaluation of SysML v2 for use in Collaborative MBSE between OEMs and Suppliers

It was interesting to hear Chris Watkins’ speech, which presented the findings from the AD PLM Action Group MBSE Collaboration Working Group on digital collaboration based on SysML v2.

The problem they are researching is that there are currently no common methods and standards across the industry for exchanging digital model-based requirements and architecture deliverables for the design, procurement, and acceptance of aerospace systems equipment.

The action group explored the value of SysML v2 for data-driven collaboration between OEMs and suppliers, particularly in the early concept phases.

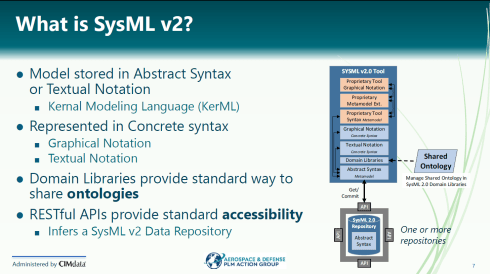

Chris started with a brief explanation of what SysML v2 is – image below:

As the image illustrates, SysML v2-ready tools allow people to work in their proprietary interfaces while sharing results in common, defined structures and ontologies.

When analyzing various collaboration scenarios, one of the main challenges remained managing changes, the required ontologies, and working in a shared IT environment.

👉You can read the full report here: AD PAG reports: Model-Based Systems Engineering.

An interesting point of discussion here is that, in the report, participants note that, despite calling out significant gaps and concerns, a substantial majority of the industry indicated that their MBSE solution provider is a good partner. At the same time, only a small minority expressed a negative view.

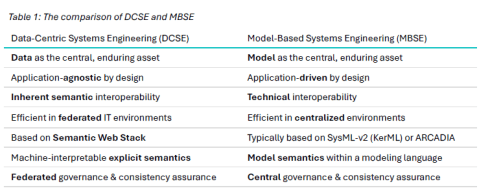

Would Data-Centric Systems Engineering change the discussion? See table 1 below from Yousef’s paper:

An illustration that there was enough food for discussion during the conference.

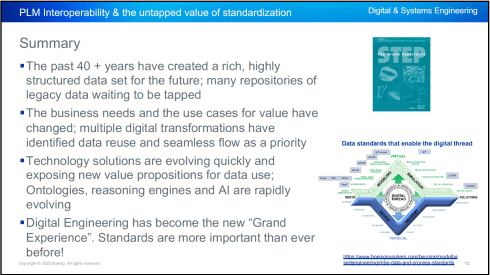

PLM Interoperability and the Untapped Value of 40 Years in Standardization

In the context of collaboration, two sessions fit together perfectly.

First, Kenny Swope from Boeing. Kenny is a longtime Boeing engineering leader and global industrial-data standards expert who oversees enterprise interoperability efforts, chairs ISO/TC 184/SC 4, and mentors youth in technology through 4-H and FIRST programs.

Kenny shared that over the past 40+ years, the understanding and value of this approach have become increasingly apparent, especially as organizations move toward a digital enterprise. In a digital enterprise, these standards are needed for efficient interoperability between various stakeholders. And the next session was an example of this.

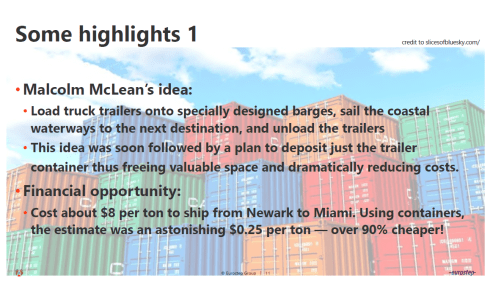

Unlocking Enterprise Knowledge

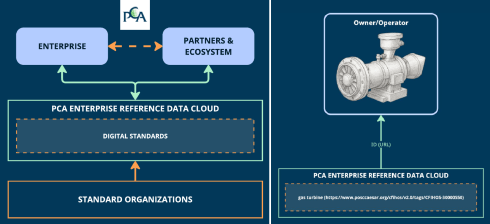

Fredrik Anthonisen, the CTO of the POSC Caesar Association (PCA), started his story about the potential value of efficient standard use.

According to a Siemens report, “The true costs of downtime,” $1.4 trillion is lost to unplanned downtime.

The root cause is that, most of the time, the information needed to support the MRO activity is inaccessible or incomplete.

Making data available using standards can provide part of the answer, but static documents and slow consensus processes can’t keep up with the pace of change.

Therefore, PCA established the PCA enterprise reference data cloud, where all stakeholders in enterprise collaboration can relate their data to digitally exposed standards, as the left side of the image shows.

Fredrik shared a use case (on the right side of the image) as an example. Also, he mentioned that the process for defining and making the digital reference data available to participants is ongoing. The reference data needs to become the trusted resource for the participants to monetize the benefits.

Summary

Day 1 had many more interesting and advanced concepts related to standards and the potential usage of AI.

Jean-Charles Leclerc, Head of Innovation & Standards at TotalEnergies, in his session, “Bringing Meaning Back To Data,” elaborated on the need to provide data in the context of the domain for which it is intended, rather than “indexed” LLM data.

This is very much aligned with Yousef’s statement that there is a need to apply semantic technologies, and especially ontologies, to turn the data into knowledge.

More details can also be found in the “Shape the future of PLM – together” post, where Jean-Charles was one of the leading voices.

The panel discussion at the end of day 1 was free of people jumping on the hype. Yes, benefits are envisioned across the product lifecycle management domain, but to be valuable, the foundation needs to be more structured than it has been in the past.

“Reliable AI comes from a foundation that supports knowledge in its domain context.”

Conclusion

For the casual user, day 1 was tough – digital transformation in the product lifecycle domain requires skills that might not yet exist in smaller organizations. Understanding the need for ontologies (generic/domain-specific) and semantic models is essential to benefit from what AI can bring – a challenging and enjoyable journey to follow!

Together with Håkan Kårdén, we had the pleasure of bringing together 32 passionate professionals on November 4th to explore the future of PLM (Product Lifecycle Management) and ALM (Asset Lifecycle Management), inspired by insights from four leading thinkers in the field. Please, click on the image for more details.

The meeting had two primary purposes.

- Firstly, we aimed to create an environment where these concepts could be discussed and presented to a broader audience, comprising academics, industrial professionals, and software developers. The group’s feedback could serve as a benchmark for them.

- The second goal was to bring people together and create a networking opportunity, either during the PLM Roadmap/PDT Europe conference, the day after, or through meetings established after this workshop.

Personally, it was a great pleasure to meet some people in person whose LinkedIn articles I had admired and read.

The meeting was sponsored by the Arrowhead fPVN project, a project I discussed in a previous blog post related to the PLM Roadmap/PDT Europe 2024 conference last year. Together with the speakers, we have begun working on a more in-depth paper that describes the similarities and the lessons learned that are relevant. This activity will take some time.

Therefore, this post only includes the abstracts from the speakers and links to their presentations. It concludes with a few observations from some attendees.

Reasoning Machines: Semantic Integration in Cyber-Physical Environments

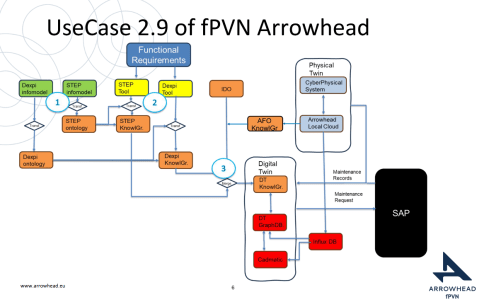

Torbjörn Holm / Jan van Deventer: The presentation discussed the transition from requirements to handover and operations, emphasizing the role of knowledge graphs in unifying standards and technologies for a flexible product value network.

The presentation outlines the phases of the product and production lifecycle, including requirements, specification, design, build-up, handover, and operations. It raises a question about unifying these phases and their associated technologies and standards, emphasizing that the most extended phase, which involves operation, maintenance, failure, and evolution until retirement, should be the primary focus.

It also discusses seamless integration, outlining a partial list of standards and technologies categorized into three sections: “Modelling & Representation Standards,” “Communication & Integration Protocols,” and “Architectural & Security Standards.” Each section contains a table listing various technology standards, their purposes, and references. Additionally, the presentation includes a “Conceptual Layer Mapping” table that details the different layers (Knowledge, Service, Communication, Security, and Data), along with examples, functions, and references.

The presentation outlines an approach for utilizing semantic technologies to ensure interoperability across heterogeneous datasets throughout a product’s lifecycle. Key strategies include using OWL 2 DL for semantic consistency, aligning domain-specific knowledge graphs with ISO 23726-3, applying W3C Alignment techniques, and leveraging Arrowhead’s microservice-based architecture and Framework Ontology for scalable and interoperable system integration.
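
As a rough illustration of what aligning knowledge graphs means in practice, the sketch below merges two tiny graphs that use different vocabularies for the same thing. It is a deliberately naive sketch under my own assumptions: the prefixes, terms, and the `align` helper are made up and are not taken from DEXPI, STEP, ISO 23726-3, or the Arrowhead Framework Ontology, and real alignments are expressed in OWL rather than in a Python dictionary.

```python
# Two tiny "knowledge graphs" as sets of (subject, predicate, object) triples.
# The prefixes and terms are invented for illustration only.
PLANT_KG = {
    ("plant:P-101", "plant:isA", "plant:Pump"),
    ("plant:P-101", "plant:ratedFlow", "120"),
}
PRODUCT_KG = {
    ("prod:Item-55", "prod:type", "prod:PumpUnit"),
    ("prod:Item-55", "prod:supplier", "ACME"),
}

# Hand-written alignment table: local term -> shared term.
ALIGNMENT = {
    "plant:isA": "shared:type",
    "prod:type": "shared:type",
    "plant:Pump": "shared:Pump",
    "prod:PumpUnit": "shared:Pump",
}


def align(triples, alignment):
    """Rewrite every term that has a shared equivalent; keep the rest as-is."""
    return {
        tuple(alignment.get(term, term) for term in triple)
        for triple in triples
    }


merged = align(PLANT_KG, ALIGNMENT) | align(PRODUCT_KG, ALIGNMENT)

# One query now spans both sources.
pumps = sorted(s for s, p, o in merged if p == "shared:type" and o == "shared:Pump")
print(pumps)  # ['plant:P-101', 'prod:Item-55']
```

Terms without a shared equivalent, such as the rated flow and the supplier, simply stay in their own vocabulary, which is why alignment can grow step by step instead of requiring one big common model up front.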

The presentation also covers the software architecture used, which has three main sections, “Functional Requirements,” “Physical Twin,” and “Digital Twin,” each containing various interconnected components. Today, the architecture includes several aligned Knowledge Graphs (KGs): a DEXPI KG, a STEP (ISO 10303) KG, and an Arrowhead Framework KG, with the CFIHOS Semantics Ontology in progress.

👉The presentation: W3C Major standard interoperability_Paris

Beyond Handover: Building Lifecycle-Ready Semantic Interoperability

Jean-Charles Leclerc argued that Industrial data standards must evolve beyond the narrow scope of handover and static interoperability. To truly support digital transformation, they must embrace lifecycle semantics or, at the very least, be designed for future extensibility.

This shift enables technical objects and models to be reused, orchestrated, and enriched across internal and external processes, unlocking value for all stakeholders and managing the temporal evolution of properties throughout the lifecycle. A key enabler is the “pattern of change”, a dynamic framework that connects data, knowledge, and processes over time. It allows semantic models to reflect how things evolve, not just how they are delivered.
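
One small aspect of this, the temporal evolution of a property, can be sketched in a few lines. This is not the “pattern of change” framework itself, only my own minimal illustration of keeping every value a property has had and asking what was valid on a given date; the `PropertyHistory` class, the property, and the values are hypothetical.

```python
from bisect import bisect_right
from datetime import date


class PropertyHistory:
    """Keeps every value a property has had, with the date it became valid."""

    def __init__(self):
        self._dates = []
        self._values = []

    def record(self, valid_from, value):
        # Insert in date order so lookups can use binary search.
        index = bisect_right(self._dates, valid_from)
        self._dates.insert(index, valid_from)
        self._values.insert(index, value)

    def as_of(self, when):
        """Return the value valid on `when`, or None if nothing was recorded yet."""
        index = bisect_right(self._dates, when)
        return self._values[index - 1] if index else None


# Hypothetical design pressure of an asset, in bar.
pressure = PropertyHistory()
pressure.record(date(2020, 1, 1), 10.0)  # as designed
pressure.record(date(2023, 6, 1), 8.5)   # after a derating during operation

print(pressure.as_of(date(2021, 3, 1)))  # 10.0
print(pressure.as_of(date(2024, 1, 1)))  # 8.5
```

A handover-only view would keep just the last value; a lifecycle-ready model keeps the history, so questions about earlier states of the asset remain answerable.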

By grounding semantic knowledge graphs (SKGs) in such rigorous logic and aligning them with W3C standards, we ensure they are both robust and adaptable. This approach supports sustainable knowledge management across domains and disciplines, bridging engineering, operations, and applications.

Ultimately, it’s not just about technology; it’s about governance.

Being Sustainab’OWL (Web Ontology Language) by Design! means building semantic ecosystems that are reliable, scalable, and lifecycle-ready by nature.

Additional Insight: From Static Models to Living Knowledge

To transition from static information to living knowledge, organizations must reassess how they model and manage technical data. Lifecycle-ready interoperability means enabling continuous alignment between evolving assets, processes, and systems. This requires not only semantic precision but also a governance framework that supports change, traceability, and reuse, turning standards into operational levers rather than compliance checkboxes.

👉The presentation: Beyond Handover – Building Lifecycle Ready Semantic Interoperability

The first two presentations had a lot in common as they both come from the Asset Lifecycle Management domain and focus on an infrastructure to support assets over a long lifetime. This is particularly visible in the usage and references to standards such as DEXPI, STEP, and CFIHOS, which are typical for this domain.

How can we achieve our vision of PLM – the Single Source of Truth?

Martin Eigner stated that Product Lifecycle Management (PLM) has long promised to serve as the Single Source of Truth for organizations striving to manage product data, processes, and knowledge across their entire value chain. Yet, realizing this vision remains a complex challenge.

Achieving a unified PLM environment requires more than just implementing advanced software systems—it demands cultural alignment, organizational commitment, and seamless integration of diverse technologies. Central to this vision is data consistency: ensuring that stakeholders across engineering, manufacturing, supply chain, and service have access to accurate, up-to-date, and contextualized information along the Product Lifecycle. This involves breaking down silos, harmonizing data models, and establishing governance frameworks that enforce standards without limiting flexibility.

Emerging technologies and methodologies, such as Extended Digital Thread, Digital Twins, cloud-based platforms, and Artificial Intelligence, offer new opportunities to enhance collaboration and integrated data management.

However, their success depends on strong change management and a shared understanding of PLM as a strategic enabler rather than a purely technical solution. By fostering cross-functional collaboration, investing in interoperability, and adopting scalable architectures, organizations can move closer to a trustworthy single source of truth. Ultimately, realizing the vision of PLM requires striking a balance between innovation and discipline—ensuring trust in data while empowering agility in product development and lifecycle management.

👉The presentation: Martin – Workshop PLM Future 04_10_25

The Future is Data-Centric, Semantic, and Federated … Is your organization ready?

Yousef Hooshmand, who is currently working at NIO as PLM & R&D Toolchain Lead Architect, discussed the must-have relations between a data-centric approach, semantic models and a federated environment as the image below illustrates:

Why This Matters for the Future?

- Engineering is under unprecedented pressure: products are becoming increasingly complex, customers are demanding personalization, and development cycles must be accelerated to meet these demands. Traditional, siloed methods can no longer keep up.

- The way forward is a data-centric, semantic, and federated approach that transforms overwhelming complexity into actionable insights, reduces weeks of impact analysis to minutes, and connects fragmented silos to create a resilient ecosystem.

- This is not just an evolution, but a fundamental shift that will define the future of systems engineering. Is your organization ready to embrace it?

👉The presentation: The Future is Data-Centric, Semantic, and Federated.

Some first impressions

👉 Bhanu Prakash Ila from Tata Consultancy Services – you can find his original comment in this LinkedIn post

Key points:

- Traditional PLM architectures struggle with the fundamental challenge of managing increasingly complex relationships between product data, process information, and enterprise systems.

- Ontology-Based Semantic Models – The Way Forward for PLM Digital Thread Integration: Ontology-based semantic models address this by providing explicit, machine-interpretable representations of domain knowledge that capture both concepts and their relationships. These lay the foundations for AI-related capabilities.

It’s clear that as AI, semantic technologies, and data intelligence mature, the way we think and talk about PLM must evolve too – from system-centric to value-driven, from managing data to enabling knowledge and decisions.

A quick & temporary conclusion

Typically, I conclude my blog posts with a summary. However, this time the conclusion is not there yet. There is work to be done to align concepts and understand for which industry they are most applicable. Using standards or avoiding standards as they move too slowly for the business is a point of ongoing discussion. The takeaway for everyone in the workshop was that data without context has no value. Ontologies, semantic models and domain-specific methodologies are mandatory for modern data-driven enterprises. You cannot avoid this learning path by just installing a graph database.

Due to other activities, I could not immediately share the second part of the review related to the PLM Roadmap / PDT Europe conference, held on 23-24 October in Gothenburg. You can read my first post, mainly about Day 1, here: The weekend after PLM Roadmap/PDT Europe 2024.

There were several interesting sessions which I will not mention here as I want to focus on forward-looking topics with a mix of (federated) data-driven PLM environments and the applicability of AI, staying around 1500 words.

R-evolutionizing PLM and ERP and Heliple

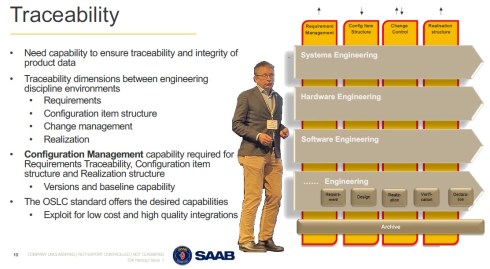

Cristina Paniagua from the Luleå University of Technology closed the first day of the conference, giving us food for thought to discuss over dinner. Her session, describing the Arrowhead fPVN project, fitted nicely with the concepts of the Federated PLM Heliple project presented by Erik Herzog on Day 2.

They are both research projects related to the future state of a digital enterprise. Therefore, it makes sense to treat them together.

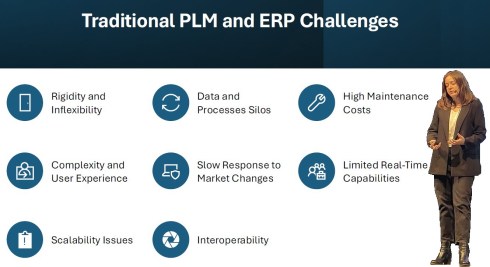

Cristina’s session started with sharing the challenges of traditional PLM and ERP systems:

These statements align with the drivers of the Heliple project. The PLM and ERP systems—Systems of Record—provide baselines and traceability. However, Systems of Record have not historically been designed to support real-time collaboration or to create an attractive user experience.

The Heliple project focuses on connecting various modules—the horizontal bars—for systems engineering, hardware engineering, etc., as real-time collaboration environments that can be highly customized and replaceable if needed. The Heliple project explored the usage of OSLC to connect these modules, the Systems of Engagement, with the Systems of Record.

By using Lynxwork as a low-code wrapper to develop the OSLC connections and map them to the needed business scenarios, the team concluded that this approach is affordable for businesses.

Now, the Heliple team is aiming to expand their research with industry scale validation through the Demoiple project (Validate that the Heliple-2 technology can be implemented and accredited in Saab Aeronautics’ operational IT) combined with the Nextiple project, where they will investigate the role of heterogeneous information models/ontologies for heterogeneous analysis.

If you are interested in participating in Nextiple, don’t hesitate to contact Erik Herzog.

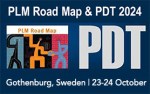

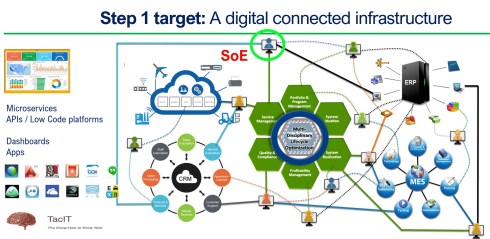

Cristina’s Arrowhead flexible Production Value Network (fPVN) project aims to provide autonomous and evolvable information interoperability through machine-interpretable content for fPVN stakeholders. In less academic words, building a digital data-driven infrastructure.

The resulting technology is projected to impact manufacturing productivity and flexibility substantially.

The exciting starting point of the Arrowhead project is that it wants to use existing standards and systems as a foundation and, on top of that, create a business and user-oriented layer, using modern technologies such as micro-services to support real-time processing and semantic technologies, ontologies, system modeling, and AI for data translations and learning—a much broader and ambitious scope than the Heliple project.

I believe that in our PLM domain, this resonates with actual discussions you will find on LinkedIn, too. @Oleg Shilovitsky, @Dr. Yousef Hooshmand, @Prof. Dr. Jörg W. Fischer and Martin Eigner are a few of them steering these discussions. I consider it a perfect match for one of the images I shared about the future of the digital enterprise.

Potentially, there are five platforms with their own internal ways of working, a mix of systems of record and systems of engagement, supported by an overlay of several Systems of Engagement environments.

I previously described these dedicated environments, e.g., OpenBOM, Colab, Partful, and Authentise. These solutions could also be dedicated apps supporting a specific ecosystem role.

See below my artist’s impression of how a Service Engineer would work in their app connected to CRM, PLM and ERP platform datasets:

The exciting part of the Arrowhead fPVN project is that it wants to explore the interactions between systems and user roles based on existing mature standards instead of leaving the connections to software developers.

Christina mentioned some of these standards below:

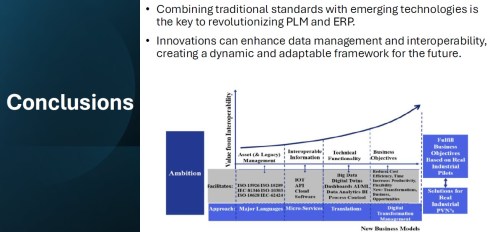

I greatly support this approach as, historically, much knowledge and effort has been put into developing standards to support interoperability. Maybe not in real-time, but the embedded knowledge in these standards will speed up the broader usage. Therefore, I concur with the concluding slide:

A final comment: Industrial users must push for these standards if they do not want a future vendor lock-in. Vendors will do what the majority of their customers ask for but will also keep their customers’ data in proprietary formats to prevent them from switching to another system.

Accelerated Product Development Enabled by Digitalization

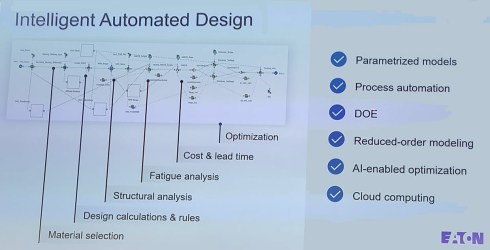

The keynote session on Day 2, delivered by Uyiosa Abusomwan, Ph.D., Senior Global Technology Manager – Digital Engineering at Eaton, was a visionary story about the future of engineering.

With its broad range of products, Eaton is exploring new, innovative ways to accelerate product design by modeling the design process and applying AI to narrow design decisions and customer-specific engineering work. The picture below shows the areas of attention needed to model the design processes. Uyiosa mentioned the significant beneficial results that have already been reached.

Together with generative design, Eaton works towards modern digital engineering processes built on models and knowledge. His session was complementary to the Heliple and Arrowhead story. To reach such a contemporary design engineering environment, it must be data-driven and built upon open PLM and software components to fully use AI and automation.

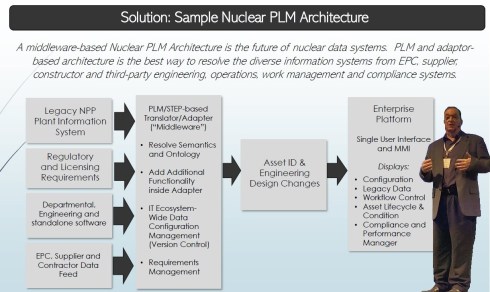

“Next Gen” Life Cycle Management in Next-Gen Nuclear Power and LTO Legacy Plants

Kent Freeland’s presentation was a trip down memory lane when he discussed the issues with Long Term Operations of legacy nuclear plants.

I spent several years in Ringhals (Sweden) discussing and piloting the setup of a PLM front-end next to the MRO (Maintenance Repair Overhaul) system. Because nuclear plants developed in the sixties required a longer lifecycle than anticipated, maintenance and upgrade changes in the plant needed to be planned and controlled with access to the right design and operational data. The design data is often lacking; it resides at the EPC or has been stored in a document management system with limited retrieval capabilities.

See also my 2019 post: How PLM, ALM, and BIM converge thanks to the digital twin.

Kent described the same challenges – we must have worked in parallel universes – and concluded that, for the future, we need a digitally connected infrastructure for both plant design and maintenance artifacts, as envisioned below:

The solution reminded me of a lecture I saw at the PI PLMx 2019 conference, where the Swedish ESS facility demonstrated its Asset Lifecycle Data Management solution based on the 3DEXPERIENCE platform.

You can still find the presentation here: Henrik Lindblad Ola Nanzell ESS – Enabling Predictive Maintenance Through PLM & IIOT.

Also, Kent focused on the relevant standards to support a “Single Source of Truth” concept, where, after all the federated PLM discussions, I would go for:

“The nearest source of truth and a single source of Change”

assuming this makes more sense in a digitally connected enterprise.

Why do you need to be SMART when contracting for information?

Rob Bodington’s presentation was complementary to Kent Freeland’s presentation. Rob, a technical fellow at Eurostep, described the challenge of information acquisition when working with large assets that require access to the correct data once the asset is in operation. The large asset could be a nuclear plant or an aircraft carrier.

In the ideal world, the asset owner wants to have a digital twin of the asset fed by different data sources through a digital thread. Of course, this environment will only be reliable when accurate data is used and presented.

Getting accurate data starts with the information acquisition process, and Rob explained that this needed to be done SMARTly – see the image below:

Rob zoomed in on the SMART keywords and the challenge the various standards provide to make the information SMARTly accessible, like the ISO 10303 / PLCS standard, the CFIHOS exchange standard and more. And then there is the ISO 8000 standard about data quality.

Click on the image to get smart.

Rob believes that AI might be the silver bullet as it might help understand the data quality, ontology and context of the data and even improve contracting, generating data clauses for contracting….

And there was a lot of AI ….

There was a dazzling presentation from Gary Langridge, engineering manager at Ocado, explaining their Ocado Smart Platform (OSP), which leverages AI, robotics, and automation to tackle the challenges of online grocery and allow their clients to excel in performance and customer responsiveness.

There was a significant AI component in his presentation, and if you are tired of reading, watch this video

But there was more AI – of the 25 sessions in this conference, 19 mentioned the potential or usage of AI somewhere in their speech – this is more than 75 %!

There was a dedicated closing panel discussion related to the real business value of Artificial Intelligence in the PLM domain, moderated by Peter Bilello and answered by selected speakers from the conference, Sandeep Natu (CIMdata), Lars Fossum (SAP), Diana Goenage (Dassault Systemes) and Uyiosa Abusomwan (Eaton).

The discussion was realistic and helpful for the audience. It is clear that to reap the benefits, companies must explore the technology and use it to create valuable business scenarios. One could argue that many AI tools are already available, but the challenge remains that they have to run on reliable data. The data foundation is crucial for a successful outcome.

An interesting point in the discussion was the statement from Diana Goenage, who repeatedly warned that using LLM-based solutions has an environmental impact due to the amount of energy they consume.

We have a similar debate in the Netherlands – do we want the wind energy consumed by data centers (the big tech companies with a minimum workforce in the Netherlands), or should the Dutch citizens benefit from renewable energy resources?

Conclusion

There were even more interesting presentations during these two days, and you might have noticed that I did not advertise my content. This is because I have already reached 1600 words, but I also want to spend more time on the content separately.

It was about PLM and Sustainability, a topic often covered in this conference. Unfortunately, only 25 % of the presentations touched on sustainability, and the AI hype overshadows the topic.

Hopefully, it is not a sign of the times?

This is the third and last post related to the PLM Roadmap / PDT Europe conference, held from 15-16 November in Paris. The first post reported more about “traditional” PLM engagements, whereas the second post focused on more data-driven and federated PLM. If you missed them, here they are:

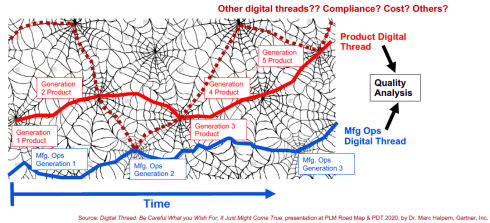

Now, I want to conclude on what I would call, in my terminology, the connected digital thread. This topic was already addressed when I reported on the federated PLM story from NIO (Yousef Hooshmand) and SAAB Aeronautics (Erik Herzog).

The Need for a Governance Digital Thread

This time, my presentation was a memory refresher related to digital transformation in the PLM domain – moving from coordinated ways of working towards connected ways of working.

A typology that is also valid for the digital thread definition.

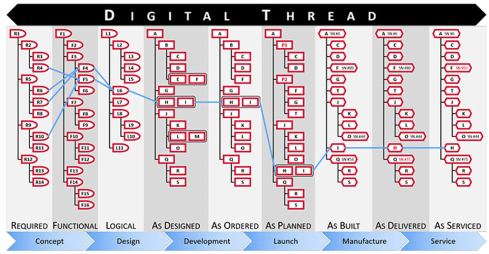

- A Coordinated Digital Thread is a digital thread that connects various artifacts in an enterprise. These relations are created and managed to support traceability and impact analysis. The coordinated digital thread requires human interpretation to process the information. The image below from Aras is a perfect example of a coordinated digital thread.

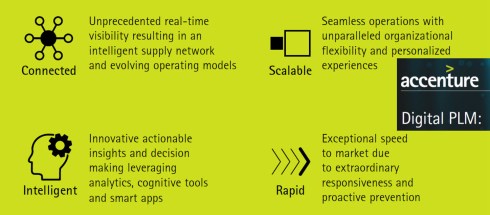

- The Connected Digital Thread is the digital thread where the artifacts are datasets stored in a federated infrastructure of databases. A connected digital thread provides real-time access to data through applications or dashboards for users. The real-time access makes the connected digital thread a solution for real-time, multidisciplinary collaboration activities.

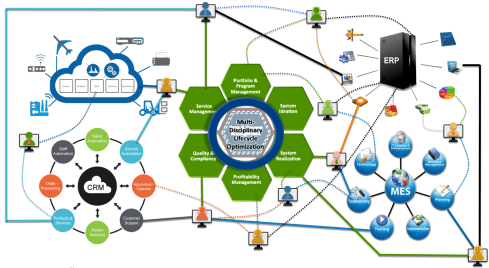

The image above illustrates the connected digital thread as an infrastructure on top of five potential business platforms, i.e., the IoT platform, the CRM platform, the ERP platform, the MES platform and ultimately, the Product Innovation Platform.

Note: These platforms are usually a collection of systems that logically work together efficiently.
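
To make the coordinated digital thread a bit more tangible, here is a minimal sketch of an impact analysis over traceability links. The artifact names and the `LINKS` structure are purely hypothetical and not taken from any vendor's data model; the point is only that managed relations between artifacts let you follow a change downstream, while a human still has to interpret the result.

```python
from collections import deque

# Hypothetical traceability links: artifact -> directly dependent artifacts.
LINKS = {
    "REQ-1": ["PART-10"],
    "PART-10": ["EBOM-A"],
    "EBOM-A": ["MBOM-A"],
    "MBOM-A": ["ROUTING-7"],
    "REQ-2": ["PART-11"],
}


def impacted(start, links):
    """Return all artifacts downstream of `start`, in breadth-first order."""
    result = []
    visited = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for dependent in links.get(node, []):
            if dependent not in visited:
                visited.add(dependent)
                result.append(dependent)
                queue.append(dependent)
    return result


# A change to REQ-1 touches the part, both BOMs and the routing.
print(impacted("REQ-1", LINKS))
```

In a connected digital thread, the same question would be answered against live datasets in the federated platforms instead of a static link table.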

The importance of the Connected Digital Thread

When looking at the benefits of the Connected Digital Thread, the most essential feature is that it allows people in an organization to have all relevant data and its context available for making changes, analysis and design choices.

Due to the rich context, people can work proactively and reduce the number of iterations and fixes later.

The above image from Accenture (2014) describing the business benefits can be divided into two categories:

- The top, Connected and Scalable, describes capabilities

- The bottom, Intelligent and Rapid, describes the business impact

The connected digital thread for governance

In my session, I gave examples of why companies must invest in the connected digital thread. If you are interested in the slides from the session you can download them here on SlideShare: The Need for a Governance Digital Thread

First of all, as more and more companies need to provide ESG reporting related to the business, either by law or demanded by their customers, this is an area where data needs to be collected from various sources in the organization.

The PLM system will be one of the sources; other sources can be fragmented in an organization. Bringing them together manually in one report is a significant human effort, time-consuming and not supporting the business.

By creating a connected digital thread between these sources, reporting becomes a push on the button, and the continuous availability of information will help companies assess and improve their products to reduce environmental and social risks.
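
As a rough illustration of the "push on the button" idea, the sketch below merges records from three sources into one report per product. The source names, field names and numbers are all invented for the example; a real setup would read from the PLM, ERP and other systems through their own interfaces.

```python
from collections import defaultdict

# Hypothetical extracts from three separate sources, keyed by product ID.
plm_data = [{"product": "P-100", "mass_kg": 2.0, "recycled_share": 0.25}]
erp_data = [{"product": "P-100", "units_sold": 1000}]
energy_data = [{"product": "P-100", "kwh_per_unit": 1.5}]


def build_report(*sources):
    """Merge rows from all sources into one record per product."""
    merged = defaultdict(dict)
    for source in sources:
        for row in source:
            attributes = {k: v for k, v in row.items() if k != "product"}
            merged[row["product"]].update(attributes)
    return dict(merged)


report = build_report(plm_data, erp_data, energy_data)

# Derived figure that needs data from two different sources.
record = report["P-100"]
total_kwh = record["units_sold"] * record["kwh_per_unit"]
print(record, total_kwh)
```

The derived figure at the end is the kind of value that is painful to compile manually, because its inputs live in different systems.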

According to a recent KPMG report, only a quarter of companies are ready for ESG Reporting Requirements.

Sustaira, a company we reported on in the PGGA, provides such an infrastructure based on Mendix, and during the conference, I shared a customer case with the audience. You can find more about Sustaira in our interview with them: PLM and Sustainability: talking with Sustaira.

The Connected Digital Thread and the Digital Product Passport

One of the areas where the connected digital thread will become important is the implementation of the Digital Product Passport (DPP), which is an obligation coming from the European Green Deal, affecting all companies that want to sell their product to the European market in 2026 and beyond.

The DPP is based on the GS1 infrastructure, originating from the retail industry. Each product will have a unique ID (UID based on ISO/IEC 15459:2015), and this UID will provide digital access to product information, containing information about the product’s used materials, its environmental impact, and recycle/reuse–ability.

It will serve both for regulatory compliance and as an information source for consumers to make informed decisions about the products they buy. The DPP aims to stimulate and enforce a more circular economy.
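
Conceptually, the UID acts as a key that resolves to a passport record. The sketch below shows that idea only; the `ProductPassport` fields, the `REGISTRY` and the `resolve` function are assumptions made for illustration and do not reflect the actual DPP data model or the GS1 services.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProductPassport:
    """Hypothetical passport record; field names are illustrative only."""
    uid: str
    materials: dict          # material name -> mass share (0..1)
    co2e_kg: float           # estimated environmental impact
    recyclable_share: float  # share of the product that can be recycled


# Hypothetical in-memory registry standing in for a resolver service.
REGISTRY = {
    "UID-0001": ProductPassport(
        uid="UID-0001",
        materials={"aluminium": 0.7, "abs_plastic": 0.3},
        co2e_kg=12.5,
        recyclable_share=0.7,
    ),
}


def resolve(uid, registry=REGISTRY):
    """Return the passport for `uid`, or None if the UID is unknown."""
    return registry.get(uid)


passport = resolve("UID-0001")
if passport is not None:
    print(passport.materials, passport.co2e_kg, passport.recyclable_share)
```

The same lookup could serve both a regulator checking compliance and a consumer scanning the product in a shop.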

Interesting to note is that the infrastructure needed for the DPP is based on the GS1 infrastructure, where GS1 is a not-for-profit organization providing data services.

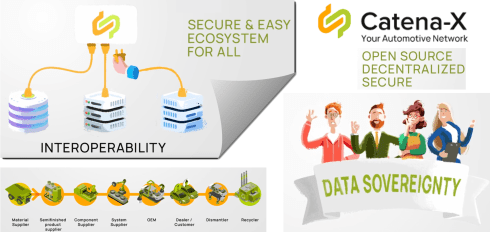

The Connected Digital Thread and Catena-X

So far, I have discussed the connected digital thread as an internal infrastructure in a company. Also, the examples of the connected digital thread at NIO and Saab Aeronautics focused on internal interaction.

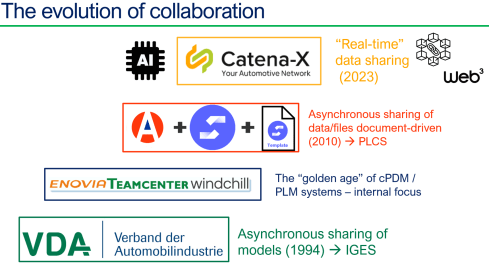

A new exciting trend is the potential rise of not-for-profit infrastructure for a particular industry. Where the GS1-based infrastructure is designed to provide visibility on sustainable targets and decisions, Catena-X is focusing on the automotive industry.

Catena-X is the establishment of a data-driven value chain for the German automotive industry and is now in the process of expanding to become a global network.

It is a significant building block in what I would call the connected or even adaptive enterprise, using a data-driven infrastructure to let information flow through the whole value chain.

It is one of the best examples of a Connected Digital Thread covering an end-to-end value chain.

Although sustainability is mentioned in their vision statement, the main business drivers are increased efficiency, improved competitiveness, and cost reduction by removing the overhead and latency of such a network.

So Sustainability and Digitization go hand in hand.

Why a Digital Thread makes a lot of sense

Following the inter-company digital thread story, Mattias Johansson’s presentation was an excellent continuation of this concept. The full title of Mattias’ session was: Why a Digital Thread makes a lot of sense, Why It Goes Beyond Manufacturing, and Why It Should Be Standards-based.

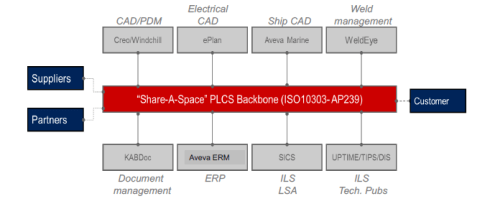

Eurostep, recently acquired by BAE Systems, is known for its collaboration hub or information backbone, ShareAspace. The interesting trend here is switching from a traditional PLM infrastructure to an asset-centric one.

This approach makes a lot of sense for complex assets with a long lifecycle, as the development phase is usually done with a consortium of companies. Still, the owner/operator wants to maintain a digital twin of the asset – for maintenance and upgrades.

A standards-based backbone makes much sense in such an environment due to the various data formats. This setup also means we are looking at a Coordinated Digital Thread at this stage, not a Connected Digital Thread.

Mattias concluded with the question of who owns and who decides on the coordinated digital thread – a discussion also valid in the construction industry when discussing Building Information Management (BIM) and a Common Data Environment (CDE).

I believe software vendors can provide the Coordinated Digital Thread option when they can demonstrate and provide a positive business case for their solution. Still, it will be seen as an overhead to connect the dots.

For a Connected Digital Thread, I think it might be provided as an infrastructure, much like the World Wide Web with its W3C organization. Here, the business case is much easier to demonstrate as it is really a digital highway.

Such an infrastructure could be provided by not-for-profit organizations like GS1 (Digital Product Passport/Retail), Catena-X (Automotive) and others (Gaia-X).

For sure, these networks will leverage blockchain concepts (affordable now) and data sovereignty concepts now developed for web3, and of course, an aspect of AI will reduce the complexity of maintaining such an environment.

AI

And then there was AI. During the conference, people spoke more about AI than Sustainability topics, illustrating that our audience is more interested in understanding the next hype instead of feeling the short-term need to address climate change and planet sustainability.

David Henstock, Chief Data Scientist at BAE Systems Digital Intelligence, talked about turning AI into an Operational Reality, sharing some lessons & challenges from Defence. David mentioned that he was not an expert in PLM but shared various viewpoints on the usage (benefits & risks) of implementing AI in an organization.

Erdal Tekin, Senior Chief Leader for Digital Transformation at Turkish Aerospace, talked about AI-powered collaboration. I am a bit skeptical on this topic as AI always comes with a flavor.

And we closed the conference with a roundtable discussion: AI, PLM and the Digital Thread: Why should we care about AI?

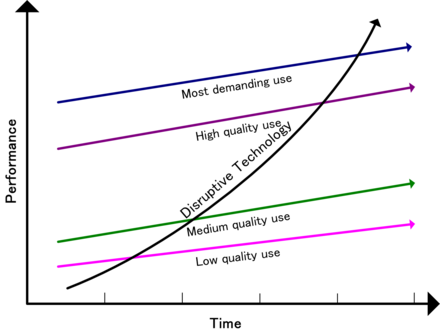

From the roundtable, I concluded that we are all convinced AI will have a significant impact in the upcoming years and are all in the early phases of the AI hype.

Will AI introduction go faster than digital transformation?

Conclusion

The conference gave me confidence that digital transformation in the PLM domain has reached the next level. Many sessions were related to collaboration concepts outside the traditional engineering domain – coordinated and connected digital threads.

The connected digital thread is the future, and as we saw it, it heralds the downfall of monolithic PLM. The change is needed for business efficiency AND compliance with more and more environmental regulations.

I am looking forward to seeing the pace of progress here next year.

Again, a “The weekend after …” post related to my favorite event to which I have contributed since 2014.

Expectations were high this time from my side, in particular because we would have a serious discussion related to connected digital threads and federated PLM.

More about these topics in my post next week, as not all content is available for sharing yet.

The conference was sold out this time, and during the breaks, you had to navigate through the people to find your networking opportunities. Also, the participation of the main PLM players as sponsors illustrated that everyone wanted to benefit from this opportunity to meet and learn from their industry peers.

Looking back to the conference, there were two noticeable streams.

- The stream where people share their current PLM experiences; traditionally, the A&D action groups moderated by CIMdata are part of this stream. This part I will cover in this post.

- There were forward-looking presentations related to standards, ontologies, and federated PLM—all with an AI flavor. This part I will cover in my next post(s).

The connection between all these sessions was the Digital Thread. The conference’s theme was: The Digital Thread in a Heterogeneous, Extended Enterprise Reality. Let’s start the review with the highlights from the first stream.

Digital Thread: Why Should We Care?

As usual, Peter Bilello from CIMdata kicked off the conference by setting the scene. Peter started by clarifying the two definitions of the Digital Thread.

- The first is a communication framework that allows a connected data flow and integrated view of an asset’s data (i.e., its Digital Twin) throughout its lifecycle across traditionally siloed functional perspectives.

In my terminology, the connected digital thread.

- The second is a network of connected information sources around the product lifecycle supporting traceability and decision-making.

In my terminology, this is the coordinated digital thread, the most straightforward digital thread to achieve.

Peter recommends starting a digital thread by connecting at the beginning of product conceptualization, creating an environment where one can analyze the performance of the product portfolio and the product features and capabilities that need to be planned or how they perform in the field.

In addition, when defining the products, connect them with regulatory requirement databases as they have must-have requirements. This is a topic I addressed in my session too: besides the existing regulatory requirements, it is expected that in the upcoming years, due to environmental regulations, these requirements will increase, and it will be necessary to have them integrated with your digital thread.

Digital Threads require data governance and are the basis for the various digital twins. Peter discussed the multiple applications of the digital twin, primarily a relation between a virtual asset and a physical asset, except in the early concept phase.

The digital thread is still in the early phase of implementation at companies. A CIMdata survey showed that companies still focus primarily on implementing traditional PDM capabilities, although as the image above shows, there is a growing interest in short-term digital twin/thread implementations.

People, Process & Technology: The Pillars of Digital Transformation Success

The second keynote was from Christine McMonagle, Director of Digital Engineering Systems at Textron Systems, a services and products supplier for the Aerospace and Defense industry. Christine leads the digital evolution in Textron Systems and presented nicely how a digital transformation should start from the people. Traditionally, this industry has enough budget on the OEM level, and therefore companies will not take a revolutionary approach when it comes to digital transformation.

Having your people at all levels involved and making them understand the need for change is crucial. A change does not happen top-down. You must educate people and understand what is possible and achievable to change – in the right direction. One of her concluding slides highlights the main points.

In the Q&A after Christine’s session, there was an interesting question related to the involvement of Human Resources (HR) in this project. There was a laugh that said it all – like in most companies, HR is not focusing on organizational change; they focus more on operational issues – the Human is considered a Resource.

Between the regular sessions, there were short sessions in which the sponsors Altium, Contact Software, Dassault Systemes, ESI, inensia, Modular Management, PTC, SAP, Share PLM and Sinequa could pitch their value offering.

The Share PLM session, shortly after Christine’s presentation, was a nice continuation of the focus on people. I loved the Share PLM image to the left explaining why people do not engage with our dreams.

Learn how LEONI is achieving Digital Continuity in the Automotive Industry.

Tobias Bauer, head of Product Data Standardization at LEONI, talked about their FLOW project. FLOW is an acronym for Future Leoni Operating World. LEONI, well-known in the automotive industry, produces cable and network solutions, including cable harnesses.

Recently, it has gone through a serious financial crisis and the need for restructuring. This always makes it challenging for a “visionary” PLM project. Tobias mentioned that after disappointing engagements with consultancy firms, they decided on a bottom-up approach to analyze existing processes using BPML. They agreed on a to-be state, fixing bottlenecks and streamlining the flow of information.

Tobias presented a smooth product data flow between their PLM system (PTC Windchill) and ERP (SAP S/4 HANA), clearly stating that the PLM system has become the controlled source of managing product changes.

Their key achievements reported so far were:

- faster BOM creation and routing (approx. 10x faster – from 2-3 days to ¼ day),

- better data consistency (fewer manual steps)

- complete traceability between the systems with PLM as the change management backbone.

The last point I would call the coordinated Digital Thread. The image below shows their current IT landscape in a simplified manner.

This solution might seem obvious for neutral PLM academics or experts, but it is an achievement to do this in an environment with SAP implemented. The eBOM-mBOM discussion is one of the most frequently held discussions – sometimes a battle.

Often, companies use their IT systems first and listen to the vendor’s experts to build integrations instead of starting from the natural business flow of information.

Aerospace & Defense Action groups outcomes

As usual, several Aerospace & Defense (A&D) action groups reported their progress during this conference. The A&D action groups are facilitated by CIMdata, and per topic, various OEMs and suppliers in the A&D industry study and analyze a particular topic, often inviting software vendors to demonstrate and discuss their capabilities with them.

Their activities and reports can be found on the A&D PLM Action page here. In the remainder of this post, I will briefly share the ones presented. For a real deep dive into the topics, I recommend finding the proceedings per topic on the A&D action page.

The Promise and Reality of the Digital Thread

James Roche from CIMdata presented insights from industry research on The Promise and Reality of the Digital Thread. A total of 90 persons completed an in-depth survey about the status and implementation of digital thread concepts in their company. It is clear that the digital thread is still in its early days in this industry, and it is mainly about the coordinated digital thread. The image below reflects the highlights of the survey.

A&D Industry Digital Twin and Digital Thread Standards

Robert Rencher from Boeing explained the progress of their Digital Twin/Digital Thread project, where they had investigated the applicable standards to support a Digital Twin/Digital Thread (Phase 4 out of 7 currently planned). The image below shows that various standards may apply depending on business perspectives.

Their current findings are:

- Digital twin standards overlap, which is most likely a function of standards bodies representing their respective standards as an ongoing development from a historical perspective.

- The limited availability of mature digital twin/thread standards requires greater attention by standards organizations.

- The concept of the digital twin continues to evolve. This dynamic will be a challenge to standards bodies.

- The digital twin and the digital thread are distinct aspects of digital transformation. The corresponding digital twin and digital thread standards will be distinctly different.

- Coordinating the development of the respective standards between the digital twin/thread is needed.

- The digital twin’s organization, definition, and enablement depend on data and information provided by the digital thread.

Roadmap for Enabling Global Collaboration

Robert Gutwein (Pratt & Whitney Canada) and Agnes Gourillon-Jandot (Safran Aircraft Engines) reported their progress on the Global Collaboration project. Collaboration is challenging because exchange methods can vary, exchanged information must be validated, and the exchange of information must be governed in the context of IP protection.

One of the focal points was to introduce an approach to define standardized supplier agreements that anticipate modern model-based exchanges and collaboration methods.

Robert & Agnes presented the 8-step guideline for the aerospace industry in specific terms, explicitly mentioning the ISO 44001 standard as being generic for all industries. An impression of the eight steps and sub-steps can be found below:

The 8-step approach will be supported by a 3rd-party Collaboration Management System (CMS app), which is not mandatory but recommended for use. When an interaction depends on a specific tool, it cannot become an ISO standard. The purpose of the methodology and app is to help participants ensure that the collaboration between stakeholders contains all the necessary steps and people.

Model-based OEM/Supplier Collaboration Needs in Aviation Industry

Hartmut Hintze, working at Airbus Operations, presented the latest findings of the MBSE Data Interoperability working group, including the model-based OEM/Supplier collaboration requirements and standards that need to be supported by the PLM/MBSE solution providers in the future. This collaboration goes beyond sharing CAD models, as you can see from the supplier engagement framework below:

As there are no standards-based tools, their first focus was looking into methodologies for model and behavior exchanges based on use cases. The use cases are then used to verify the state-of-the-art abilities of the various tools. At this moment, there is a focus on SysML V2 as a potential game-changer due to its new API support. As a relative novice on SysML, I cannot explain this topic in simpler words. I recommend that experts visit their presentations on the AD PAG publications page here.

Conclusions

The theme of the conference was related to the Digital Thread – and as you will discover it is valid for everyone. Learn to see the difference between the coordinated Digital Thread and the connected Digital Thread. This time, there was a lot of information about the Aerospace and Defense Action Groups (AD PAG), which are a fundamental part of this conference. The A&D industry has always been leading in advanced PLM concepts. However, more advanced concepts will come in my next post when touching the connected Digital Thread in the context of federated PLM and let’s not forget AI.

In the past two weeks, I had several discussions with peers in the PLM domain about their experiences.

Some of them I met face-to-face again after a long time at the LiveWorx 2023 event. See my review of the event here: The Weekend after LiveWorx 2023.

And there were several interactions on LinkedIn, leading to a more extended discussion thread (an example of a digital thread?) or a Zoom discussion (a so-called 2D conversation).

To complete the story, I also participated in two PLM podcasts from Share PLM, where we interviewed Johan Mikkelä (currently working at FLSmidth) and, in the second episode, Issam Darraj (presently working at ABB) about their PLM experiences. Less a discussion, more a dialogue, trying to grasp the non-documented aspects of PLM. We are looking for your feedback on these podcasts too.

All these discussions led to a reconfirmation that if you are a PLM practitioner, you need a broad skillset to address the business needs, translate them into people and process activities relevant to the industry and ultimately implement the proper collection of tools.

As a sneak preview for the podcast sessions, we asked both Johan and Issam about the importance of the tools. I will not disclose their answers here; you have to listen.

Let’s look at some of the discussions.

NOTE: Just before pushing the Publish button, Oleg Shilovitsky published this blog article PLM Project Failures and Unstoppable PLM Playbook. I will comment on his points at the end of this post. It is all part of the extensive discussion.

PLM, LinkedIn and complexity

The most popular discussions on LinkedIn are often related to the various types of Bills of Materials (eBOM, mBOM, sBOM), Part numbering schemes (intelligent or not), version and revision management and the famous FFF discussions.

This post: PLM and Configuration Management Best Practices: Working with Revisions, from Andreas Lindenthal, was a recent example that triggered others to react.

I had some offline discussions on this topic last week, and I noticed Frédéric Zeller wrote his post with the title PLM, LinkedIn and complexity, starting his post with (quote):

I am stunned by the average level of posts on the PLM on LinkedIn.

I’m sorry, but in 2023 :

- Part Number management (significant, non-significant) should no longer be a problem.

- Revision management should no longer be a question.

- Configuration management theory should no longer be a question.

- Notions of EBOMs, MBOMs … should no longer be a question.

So why are there still problems on these topics?

You can see from the 40+ comments that this statement created a lot of reactions, including mine. Apparently, these topics are touching many people worldwide, and there is no simple, single answer to each of these topics. And there are so many other topics relevant to PLM.

Talking later with Frederic for one hour in a Zoom session, we discussed the importance of the right PLM data model.

I also wrote a series about the (traditional) PLM data model: The importance of a (PLM) data model.

Frederic is more of a PLM architect; we even discussed the wording related to the EBOM and the MBOM. A topic that I feel comfortable discussing after many years of experience seeing the attempts that failed and the dreams people had. And this was only one aspect of PLM.

You also find the discussion related to a PLM certification in the same thread. How would you certify a person as a PLM expert?

There are so many dimensions to PLM. Even more important, the PLM from 10-15 years ago (more of a system discussion) is no longer the PLM nowadays (a strategy and an infrastructure).

This is a crucial difference. Learning to use a PLM tool and implement it is not the same as building a PLM strategy for your company. It is Tools, Process, People versus Process, People, Tools and Data.

Time for Methodology workshops?

I recently discussed with several peers what we could do to assist people looking for discussions on best practices and lessons learned. There is a need, but how do we organize such discussions, as we cannot expect this to be voluntary work?

In the past, I suggested that MarketKey, the organizer of the PI DX events, extend its theme workshops. For example, instead of a 45-min Focus group with a short introduction to a theme (e.g., eBOM-mBOM, PLM-ERP interfaces), make these sessions last at least half a day and be independent of the PLM vendors.

Apparently, it did not fit in the PI DX programming; half a day would potentially stretch the duration of the conference, and more and more, we see two days of meetings as the maximum. A longer event becomes difficult to justify, even if the content might have high value for the participants.

I observed a similar situation last year in combination with the PLM roadmap/PDT Europe conference in Gothenburg. Here we had a half-day workshop before the conference led by Erik Herzog (SAAB Aeronautics) and Judith Crockford (Eurostep) to discuss concepts related to federated PLM – read more in this post: The week after PLM Roadmap/PDT Europe 2022.

It reminded me of an MDM workshop before the 2015 Event, led by Marc Halpern from Gartner. Unfortunately, the federated PLM discussion remained a pretty Swedish initiative, and the follow-up did not reach a wider audience.

And then there are the Aerospace and Defense PLM action groups, whose discussions are moderated by CIMdata. It is great that they published their findings (look here), although the best lessons are learned during the workshops.

However, I also believe the A&D industry cannot be compared to a mid-market machinery manufacturing company. Therefore, their findings are helpful for a smaller audience only.

And here, I inserted a paragraph dedicated to Oleg’s recent post, PLM Project Failures and Unstoppable PLM Playbook – starting with a quote:

How to learn to implement PLM? I wrote about it in my earlier article – PLM playbook: how to learn about PLM? While I’m still happy to share my knowledge and experience, I think there is a bigger need in helping manufacturing companies and, especially PLM professionals, with the methodology of how to achieve the right goal when implementing PLM. Which made me think about the Unstoppable PLM playbook ©.

I found a similar passion for helping companies to adopt PLM while talking to Helena Gutierrez. Over many conversations during the last few months, we talked about how to help manufacturing companies with PLM adoption. The unstoppable PLM playbook is still a work in progress, but we want to start talking about it to get your feedback and start the conversation.

It is an excellent confirmation of the fact that there is a need for education and that the education related to PLM on the Internet is not good enough.

As a former teacher in Physics, I do not believe in the Unstoppable PLM Playbook, even if it is a branded name. Many books are written by specific authors, giving their perspectives based on their (academic) knowledge.

Are they useful? I believe only in the context of a classroom discussion where the applicability can be discussed.

Therefore, my question to vendor-neutral global players like CIMdata, Eurostep, Prostep, SharePLM, TCS and others: are you willing to pick up this request? Or are there other entities that I missed? Please leave your thoughts in the comments. I will be happy to assist in organizing them.

There are many more future topics to discuss and document too.

- What about the potential split of a PLM infrastructure between Systems of Record & Systems of Engagement?

- What about the Digital Thread, a more and more accepted theme in discussions, but what is the standard definition?

- Is it traceability as some vendors promote it, or is it the continuity of data, directly usable in various contexts – the DevOps approach?

Who likes to discuss methodology?

When asking myself this question, I see the analogy with standards. So let’s look at the various players in the PLM domain – sorry for the immense generalization.