Over the last month, it seems that people in my ecosystem have been incredibly focused on “THE BOM” combined with AI agents working around the clock. One of the reasons I have this impression, of course, is my irregular participation in the Future of PLM panel discussions, moderated and organized by Michael Finocchiaro.

Yesterday, the continuously growing Future of PLM team held another interesting discussion: “A BOMversation”. You can watch the replay and the comments during the debate here: To BOM or Not to BOM: A BOMversation

Then there is Prof. Jorg Fischer with his provocative post: 📌 2026 – The year we have to unlearn BOMs!

Sounds like a dramatic opening, but when you read his post and my post below, you will learn that there is a lot of (conceptual) alignment.

Then there are PLM vendors who announce “next-generation BOM management,” startup companies that promise AI-powered configuration engines, and consultants who explain how the BOM has become the foundation of digital transformation. (I do not think so)

And as Anup Karumanchi states, BOMs can be the reason why production keeps breaking.

I must confess that I also have a strong opinion about the various BOMs and their application in multiple industries.

My 2019 blog post: The importance of EBOM and MBOM is in the top 3 of most-read posts. BOM discussions, single BOM, multiview BOM, etc., always attract an audience.

I continuously observe a big challenge at the companies I am working with – the difference between theory and reality.

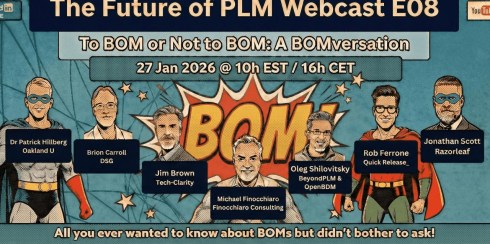

If the BOM is so important, why do so many organizations still struggle to make it work across engineering, manufacturing, supply chain, and service?

The answer is two-fold: LEGACY DATA, PROCESSES and PEOPLE, and the understanding that the BOM we are using today was designed for a different industrial reality.

Let me share my experiences, which take longer to digest than an entertaining webinar.

Some BOM history and theory

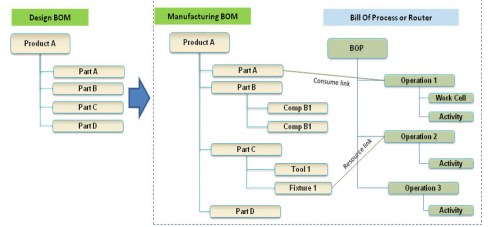

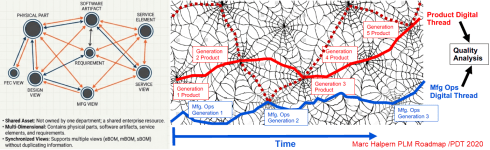

Historically, the BOM was a production artifact. It described what was needed to build something and in what quantities. When PLM systems emerged, the 3D CAD model structure became the authoritative structure representing product definition, driven mainly by the PLM vendors with dominant 3D CAD tools in their portfolio.

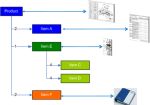

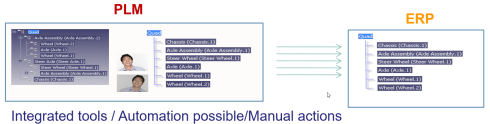

As the various disciplines in the company were not integrated at all, the BOM structure derived from the 3D CAD model was often a simplified way to prepare a BOM for ERP. The transfer to ERP was done manually (retyping the structure in ERP), in a more advanced way (using Excel export and import with some manipulation), or through an “intelligent” interface.

There are still a lot of companies working this way, probably because, due to the siloed organization, there is no one owning or driving a smooth flow of information in the company.

The need for an eBOM and mBOM

When companies become more mature and start to implement a PLM system, they will discover, depending on their core business processes, that it makes sense to split the BOM concept into a specification structure, the eBOM, and a manufacturing structure for ERP, the mBOM.

The advantage of this split is that the engineering specification can remain stable over time, as it provides a functional view of the product with its functional assemblies and part definitions.

This definition needs to be resolved and adapted for a specific plant with its local suppliers and resources. PLM systems often support the transformation from the eBOM to a proposed mBOM and, if done more completely, together with a Bill of Process.

The advantages of a split in an eBOM and an mBOM are:

- A reduced number of engineering changes when supplier parts change

- Centralized control of all product IP related to its specifications (eBOM/3DCAD)

- Efficient support for modularity, as each module has its own lifecycle and can be used in multiple products.
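
To make the split a bit more tangible, here is a minimal Python sketch of how a plant-specific mBOM proposal could be derived from a stable eBOM. All names in it (`BomLine`, the part numbers, the plants and the `SOURCING` table) are hypothetical and not taken from any PLM or ERP system. It only illustrates the first advantage above: a supplier change touches the plant mapping, not the engineering definition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BomLine:
    parent: str
    part: str
    quantity: int


# Hypothetical eBOM: functional part definitions, independent of any supplier.
EBOM = [
    BomLine("PUMP-100", "MOTOR-SPEC-01", 1),
    BomLine("PUMP-100", "SEAL-SPEC-07", 2),
]

# Hypothetical sourcing table: which supplier part fulfils a specification per plant.
SOURCING = {
    "PLANT-NL": {"MOTOR-SPEC-01": "ACME-M550", "SEAL-SPEC-07": "SEALCO-S7"},
    "PLANT-US": {"MOTOR-SPEC-01": "VOLTA-9000", "SEAL-SPEC-07": "SEALCO-S7"},
}


def propose_mbom(ebom, plant):
    """Resolve each engineering part to the part sourced by the given plant."""
    mapping = SOURCING[plant]
    # Parts without a plant-specific mapping are kept unchanged (assumed made in-house).
    return [
        BomLine(line.parent, mapping.get(line.part, line.part), line.quantity)
        for line in ebom
    ]


mbom_nl = propose_mbom(EBOM, "PLANT-NL")
mbom_us = propose_mbom(EBOM, "PLANT-US")
```

Replacing a motor supplier in one plant would only change one entry in `SOURCING`; the `EBOM` list, and therefore the engineering release, stays untouched. A real transformation would of course also restructure the hierarchy and add process information, which this sketch leaves out.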

Implementing an eBOM/mBOM concept

The theory, the methodology and implementation are clear, and you can ask ChatGPT and others to support you in this step.

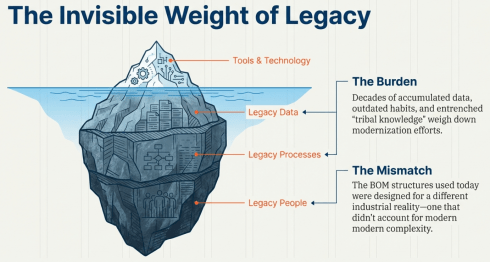

However, where ChatGPT or service providers often fail is in motivating a company to take this next step: either their legacy data and tools are incompatible (WHY CHANGE?), the future is not understood and feels risky (I DON’T LIKE IT), or a change is blocked for political or career reasons (I DON’T LIKE YOU, or the HIPPO says differently).

Extending to the sBOM

Companies that sell products in large volumes, like cars or consumer products, have discovered and organized a well-established service business, as the margins are high here.

Companies that sell almost unique solutions for customers, batch-size 1 or small series, are also discovering the service business, or are being asked by their customers to come up with service plans and related pricing.

The challenge for these companies is that there is a lot of guesswork to be done, as the service business was not planned in their legacy business. A quick and dirty solution was to use the mBOM in ERP as the source of information. However, the ERP system usually does not provide any context information, such as where the part is located and what potential other parts need to be replaced—a challenging job for service engineers.

A less quick and still a little dirty solution was to create a new structure in the PLM system, which provided the service kits and service parts for the defined product, preferably based on the eBOM, if an eBOM exists.

The ideal solution would be that service engineers are working in parallel and in the same environment as the other engineers, but this requires an organisational change.

The organization often becomes the blocker.

As long as the PLM system is considered a tool for engineering, advanced extensions to other disciplines will be hard to achieve.

A linear organization aligned with a traditional release process will have difficulties changing to work with a common PLM backbone that satisfies engineering, manufacturing engineering and service engineering at the same time.

Now, the term PLM becomes Product Lifecycle MANAGEMENT and this brings us to the core issue: the BOM is too often reduced to a parts list without understanding the broader context of the product, needed for service or operation support where artifacts can be hardware and software in a system.

What is really needed is an extended data model with at least a logical product structure that can represent multiple views of the same product: engineering intent, manufacturing reality, service configuration, software composition, and operational context. These views should not be separate silos connected by fragile integrations. They should be derived from a shared, consistent digital infrastructure. This is what I extract from Prof. Jorg Fischer’s post, although he comes with a strong SAP background and a focus on CTO+.

Most companies are still organized around linear processes with a focus on mechanical products: engineering hands over to manufacturing, manufacturing hands over to service, and feedback loops are weak or nonexistent.

Changing the BOM without changing the organization is like repainting a house with structural cracks. It may look better, but the underlying issues remain.

Listen to this snippet from the BOMversation, where Patrick Hilberg touches on this point too.

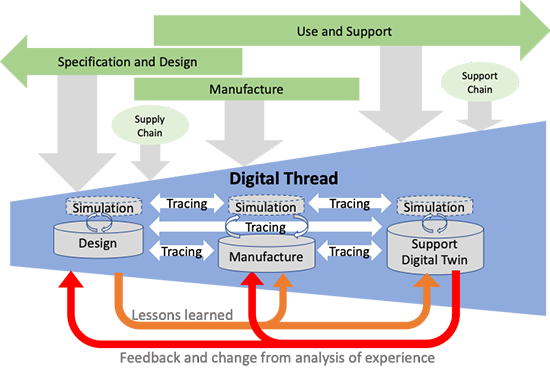

With this approach, the digital thread becomes more than a buzzword. A digital thread must provide digital continuity, which means that changes propagate across domains, that data is contextualized, and that lifecycle feedback flows back into product development. Without this continuity, digital twin concepts remain isolated models rather than living representations of real products.

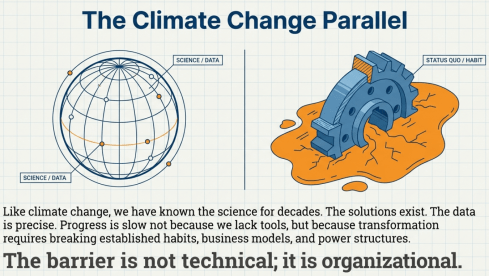

However, the most significant barrier is not technical. It is organizational. There is an interesting parallel with how we address climate change and are willing to take action against it.

For decades, we have known what needs to change. The science is precise. The solutions exist. Yet progress is slow because transformation requires breaking established habits, business models, and power structures.

Digital transformation in product lifecycle management follows a similar pattern. Everyone agrees that data silos are a problem. Everyone wants “end-to-end visibility.” Yet few organizations are willing to rethink ownership of product data and processes fundamentally.

So what does the future BOM look like?

It is not a single hierarchical tree. It is part of a maze; some will say it is a graph. It is a connected network of product-related information: physical components, software artifacts, service elements, configurations, requirements, and operational data. It supports multiple synchronized views without duplicating information. It evolves as products change when operated in the field.
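
As a rough illustration of the "graph, not tree" idea, the following Python sketch stores product information once as typed relations and derives different views by filtering on the relation type. The node names, the relation names and the `view` helper are all made up for this example; a real implementation would sit on a graph database or a PLM data model rather than a list of tuples.

```python
from collections import defaultdict

# Hypothetical product graph stored once as (source, relation, target) triples.
EDGES = [
    ("Pump", "has_part", "Motor"),
    ("Pump", "has_part", "Controller"),
    ("Controller", "runs_software", "Firmware v2.1"),
    ("Motor", "serviced_by", "Bearing kit"),
    ("Pump", "satisfies", "REQ-12 flow rate"),
]


def view(edges, relations):
    """Derive a view by filtering on relation type; the edge list stays the single source."""
    result = defaultdict(list)
    for source, relation, target in edges:
        if relation in relations:
            result[source].append(target)
    return dict(result)


engineering_view = view(EDGES, {"has_part", "runs_software"})
service_view = view(EDGES, {"serviced_by"})
```

Both views are computed from the same edges, so a change to the graph shows up in every view that uses the affected relation, without copying structures between departments.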

Most importantly, it is not owned by one department. It becomes a shared enterprise asset – with shared accountability for various datasets. But we should not abandon the BOM concept. On the contrary, the BOM remains essential and managing BOMs consistently is already a challenge.

But its role must shift from being a collection of static structures to becoming part of the digital product definition infrastructure, extended by a logical product structure and beyond – the MBSE question.

The BOM is not dead. But the traditional BOM mindset is no longer sufficient. The question is not whether the BOM will change. It already is. The real question is whether organizations are ready to change with it.

Conclusion

Inspired by various BOMversations and AI graphical support, I tried to reflect the business reality, observed for over 10++ years. Technology and the Academic truth do not create breakthroughs in organisations due to the big legacy and fear of failure. Will AI fix this gap, as many software vendors believe, or do we need a new generation with no legacy PLM experience, as some others suggest? Your thoughts?

p.s. My trick to join the BOMversation without being thrown from the balcony 🙃

In the past months, I have had several discussions related to migrating PLM data, either from one system to another or from consolidating a collection of applications into a single environment. Does this sound familiar?

Let me share some experiences and lessons learned to avoid the Migration Migraine.

It is not a technical guide but a collection of experiences and thoughts that you might have missed when focusing on realizing the technical dream.

Halfway I realized I was too ambitious; therefore, another post will follow this introduction. Here, I will focus on the business side and the digital transformation journey.

Garbage Out – Garbage In

The Garbage Out-In statement is somehow the paradigm we are used to in our day-to-day lives. When you buy a new computer, you use backup and restore. Even easier, nowadays, the majority of the data is already in the cloud.

This simple scenario makes us assume that all professional systems should be easily upgradeable. We become unaware of the amount of data we store and its relevance.

This phenomenon already has a name: “Dark Data.” Dark Data consumes storage energy in the cloud and is no longer visible. Please read all about it here: Dark Data.

TIP 1: Every migration is a moment to clean up your data. By dragging everything with you, the burden of migrating becomes bigger. In easy migrations, do a clean-up—it prevents future, more extensive issues.

Never follow the Garbage Out – Garbage in principle, even if it is easy!
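
What a clean-up rule could look like in its simplest form is sketched below in Python. The rule itself (migrate what is still used in an active product or was modified in the last ten years, leave the rest behind) is purely an assumption for illustration, as are the record layout and the identifiers. Every company has to define its own criteria together with the business.

```python
from datetime import date

# Hypothetical legacy records: (identifier, last modified, used in an active product?)
RECORDS = [
    ("DOC-001", date(2009, 5, 1), False),
    ("DOC-002", date(2011, 3, 9), True),
    ("DOC-003", date(2023, 11, 20), False),
]


def split_for_migration(records, today, max_age_years=10):
    """Return (migrate, leave_behind) identifier lists based on the assumed rule."""
    migrate, leave_behind = [], []
    for identifier, modified, in_use in records:
        age_years = (today - modified).days / 365.25
        if in_use or age_years <= max_age_years:
            migrate.append(identifier)
        else:
            leave_behind.append(identifier)
    return migrate, leave_behind


migrate, leave_behind = split_for_migration(RECORDS, today=date(2024, 6, 1))
```

The value of such a list is less in the script than in the discussion it triggers: someone has to decide, and own, what "still relevant" means.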

Migrations in the PLM domain are different – setting the scene.

Before discussing the various scenarios, let’s examine what companies are doing. For early PLM adopters in the Automotive, Aerospace, and Defense Industries, migrations from mainframes to modern infrastructures have become impossible. The real problem is not only the changing hardware but also the changing data and data models.

For these companies, the solution is often to build an entirely new PLM infrastructure on top of the existing infrastructure, where manageable data pieces are migrated to new environments using data lakes, dashboards, and custom apps to support modern users.

Migration in this case is a journey as long as the data lives – and we can learn from them!

Follow the money

From a business perspective, migrations are considered a negative distractor. Talking about them raises awareness of their complexity, which might jeopardize enthusiasm.

For the initiator, the PLM software vendor or implementer, it might endanger the sales deal.

Traditional IT organizations strive for simplification—one CAD, one PLM or one ERP system to manage. Although this argument makes sense, an analysis should always be done comparing the benefits and the (migration) costs and risks to reach the ideal situation.

In those discussions, migrations are often downplayed.

Without naming companies, I have observed the downplaying several times, even at some prominent enterprises. So, if you recognize your company in this process, you are not alone.

TIP 2: Migrations are never simple. Make migration a serious topic of your PLM project – as important as the software. This approach means that an analysis of the potential migration risks and their mitigation is needed.

Please read about the Xylem story in my recent post: The week after the PDSFORUM 2024

The Big Bang has the highest risk and might again lead to garbage out—garbage in.

You are responsible for your garbage.

It may sound disparaging, but it is not. Most companies are aware that people, tools and policies have changed over the years. Due to the coordinated approach to working, disciplines did not need to care about downstream or upstream usage of their initially created data – Excel and PDFs are the bridges between disciplines.

All the actual knowledge and context are stored in the heads of experienced employees who have gotten used to dealing with inconsistencies. And they will retire, so there is an urgent need for actual data quality and governance. Read more about the journey from Coordinated to Connected in these articles.

Even if you are not yet thinking about migrations, the digital transformation in the PLM domain is coming, and we should learn to work in a connected mode.

TIP 3: Create a team in your organization that assesses the current data quality and defines the potential future enterprise (data) architecture. Then, start improving the quality of the currently generated data. Just as the ISO 900x standards exist for quality management, the ISO 8000 standard already exists for data quality.

The future is data-driven; prepare yourself for the future.

Migration scenarios and their best practices

Here are some migration scenarios – two in this post and more in an upcoming post.

From Relational to Object-oriented

One of my earlier projects, starting in 2010 with SmarTeam, was migrating a mainframe-based application for airplane certification to a modern Microsoft infrastructure.

The goal was to create a new environment that could be used both as a replacement for the mainframe application and as the design and validation environment to implement changes to the current airplanes during a maintenance or upgrade activity.

The need was high because detailed documentation about the logic of the current application did not exist, and only one person who understood the logic was partly available.

So, internally, the relational database was a black box. The tables in the database contained a mix of item data, document data, change status and versions. The documents were stored in directories with meaningful file names but disconnected from the application.

The initial estimate was that the project would take two to three months, so a fixed price for two months was agreed upon. However, it became almost a two-year project, and in the end, the result seemed to be reliable (there was never mathematical proof).

The disadvantage was that SmarTeam ended up being so highly customized that automatic upgrades would not work for this version anymore—a new legacy was created with modern technology.

The same story, combined with the example of Ericsson’s migration attempt, is described in the 2016 post, The PLM Migration Dilemma. For me, the lesson learned from these examples leads to the following recommendation.

TIP 4: When there is a paradigm change in the data model, don’t migrate but establish a new (data-driven) infrastructure and connect to your legacy as much as possible in read-only mode.

The automotive and aerospace industries’ story is one of paradigm change.

Listen to the SharePLM podcast Revolutionizing PLM: Insights from Yousef Hooshmand, where Yousef also discusses how to address this transition process.

CAD/PDM to PLM

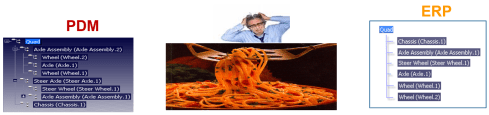

Another migration step happens when companies decide to implement a traditional PLM infrastructure as a System of Record, merging PDM data (mainly CAD) and ERP data (the BOM).

Some of these companies have been working file-based and have stored their final CAD files in folders; others might have a local PDM system native to the 3D CAD. The EBOM usually existed digitally in ERP, and most of the time, it is not a “pure” EBOM but more of a hybrid EBOM/MBOM.

The image above shows that this type of migration can be very challenging as, in the source systems, there is not necessarily a consistent 3D CAD definition matching the BOM items. As the systems have been disconnected in the past, people have potentially added missing information or fixed information on the BOM side. And as the manufacturing definition in most companies is based on drawings, consistency with the 3D CAD definition is not guaranteed.

To address this challenge, companies need to assess the usability of the CAD and BOM data. Is it possible to populate the CAD files with properties that are necessary for an import? For example, does the file path contain helpful information?
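
As an illustration of the file path question, here is a small Python sketch that tries to derive import properties from a folder and file naming convention. The convention itself (`<project>/<discipline>/<number>_<revision>.<extension>`), the example paths and the function name are assumptions made up for this example; your legacy folders will follow their own (or no) rules.

```python
from pathlib import PurePosixPath


def properties_from_path(path):
    """Extract properties from an assumed <project>/<discipline>/<number>_<rev>.<ext> layout."""
    p = PurePosixPath(path)
    if len(p.parts) < 3 or "_" not in p.stem:
        return None  # Does not follow the assumed convention; needs manual review.
    number, revision = p.stem.rsplit("_", 1)
    return {
        "project": p.parts[-3],
        "discipline": p.parts[-2],
        "number": number,
        "revision": revision,
        "format": p.suffix.lstrip(".").lower(),
    }


props = properties_from_path("archive/P1234/mechanical/4711-020_B.SLDPRT")
unmatched = properties_from_path("archive/old/bracket.sldprt")
```

Running such a check over the whole file store quickly shows which share of the files follows a usable convention and which share would need manual work, which is valuable input for the decision whether to migrate at all. Note that a pattern check this naive will also accept files that only accidentally match it, so the results still need a review.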

I have experienced a situation where a company has poorly defined 3D parts and no properties, as all the focus was on using the 3D to generate the 2D drawing.

The relevant details for manufacturing were then added to the drawing and no longer to the parts or models – traceability was almost impossible.

In this situation, importing the 3D CAD structures into the new PLM system has limited value. An alternative is to describe and test procedures for handling legacy data when it is needed, either to implement a design change or a new order. Leave the legacy accessible, but do not migrate.

The BOM side is, in theory, stable for manufactured products, as the data should have gone through a release process. However, the company needs to revisit its part definition process for new designs and products.

Some points to consider:

- Meaningful identifiers are not desired in a PLM system as they create a legacy. Therefore, the import of parts with smart identifiers should map to relevant part properties besides the ID. Splitting the ID into properties will create a broader usage in the future. Read more in Smart Part Numbers – do we need them?

- In addition, companies should try to avoid having logistic information, such as supplier-specific part numbers, come from the CAD system. Supplier parts in your CAD environment create inefficiencies when a supplier part becomes obsolete. Concepts such as EBOM and MBOM and potentially the SBOM should be well understood during this migration.

- Concepts of EBOM and MBOM should also be introduced when moving from an ETO to a CTO approach or when modularity is a future business strategy.
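
The first point above, mapping smart identifiers to part properties during import, could look like the following Python sketch. The legacy numbering scheme (`<category>-<voltage>-<sequence>`), the category codes and the new number range are all invented for this example; the principle is that the meaning moves into properties while the new identifier itself carries no meaning.

```python
import itertools

# Hypothetical legacy scheme: <category>-<voltage>-<sequence>, e.g. "MOT-230V-00417".
CATEGORY_NAMES = {"MOT": "Motor", "VLV": "Valve"}

# Assumed start of the new, non-significant number range.
_new_ids = itertools.count(100001)


def import_part(legacy_id):
    """Map a smart identifier to properties and assign a non-significant ID."""
    category, voltage, _sequence = legacy_id.split("-")
    return {
        "id": str(next(_new_ids)),
        "legacy_id": legacy_id,  # kept as a property for traceability
        "category": CATEGORY_NAMES.get(category, "Unclassified"),
        "voltage": voltage,
    }


part = import_part("MOT-230V-00417")
```

Keeping the legacy number as a searchable property lets people who have memorized the old numbers still find their parts, while new parts no longer depend on the old scheme.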

Conclusion

As every company is on its PLM journey and technology is evolving, there will always be a migration discussion. Understanding and working towards the future should be the most critical driver for migration. Migrations in the PLM domain are often more than a data migration – new ways of working should be introduced in parallel. And for that reason the “big bang” is often too costly and demotivating for the future.

In the past two weeks, I had several discussions with peers in the PLM domain about their experiences.

Some of them I met face-to-face again after a long time at the LiveWorx 2023 event. See my review of the event here: The Weekend after LiveWorx 2023.

And there were several interactions on LinkedIn, leading to a more extended discussion thread (an example of a digital thread?) or a Zoom discussion (a so-called 2D conversation).

To complete the story, I also participated in two PLM podcasts from Share PLM, where we interviewed Johan Mikkelä (currently working at FLSmidth) and, in the second episode, Issam Darraj (presently working at ABB) about their PLM experiences. Less a discussion, more a dialogue, trying to grasp the non-documented aspects of PLM. We are looking for your feedback on these podcasts too.

All these discussions led to a reconfirmation that if you are a PLM practitioner, you need a broad skillset to address the business needs, translate them into people and process activities relevant to the industry and ultimately implement the proper collection of tools.

As a sneak preview of the podcast sessions, we asked both Johan and Issam about the importance of the tools. I will not disclose their answers here; you have to listen.

Let’s look at some of the discussions.

NOTE: Just before pushing the Publish button, Oleg Shilovitsky published this blog article PLM Project Failures and Unstoppable PLM Playbook. I will comment on his points at the end of this post. It is all part of the extensive discussion.

PLM, LinkedIn and complexity

The most popular discussions on LinkedIn are often related to the various types of Bills of Materials (eBOM, mBOM, sBOM), Part numbering schemes (intelligent or not), version and revision management and the famous FFF discussions.

This post: PLM and Configuration Management Best Practices: Working with Revisions, from Andreas Lindenthal, was a recent example that triggered others to react.

I had some offline discussions on this topic last week, and I noticed Frédéric Zeller wrote his post with the title PLM, LinkedIn and complexity, starting his post with (quote):

I am stunned by the average level of posts on the PLM on LinkedIn.

I’m sorry, but in 2023 :

- Part Number management (significant, non-significant) should no longer be a problem.

- Revision management should no longer be a question.

- Configuration management theory should no longer be a question.

- Notions of EBOMs, MBOMs … should no longer be a question.

So why are there still problems on these topics?

You can see from the 40+ comments that this statement created a lot of reactions, including mine. Apparently, these topics touch many people worldwide, and there is no simple, single answer to each of them. And there are so many other topics relevant to PLM.

Talking later with Frederic for one hour in a Zoom session, we discussed the importance of the right PLM data model.

I also wrote a series about the (traditional) PLM data model: The importance of a (PLM) data model.

Frederic is more of a PLM architect; we even discussed the wording related to the EBOM and the MBOM. A topic that I feel comfortable discussing after many years of experience seeing the attempts that failed and the dreams people had. And this was only one aspect of PLM.

You also find the discussion related to a PLM certification in the same thread. How would you certify a person as a PLM expert?

There are so many dimensions to PLM. Even more important, the PLM from 10-15 years ago (more of a system discussion) is no longer the PLM of today (a strategy and an infrastructure).

This is a crucial difference. Learning to use a PLM tool and implement it is not the same as building a PLM strategy for your company. It is Tools, Process, People versus Process, People, Tools and Data.

Time for Methodology workshops?

I recently discussed with several peers what we could do to assist people looking for best-practice discussions and lessons learned. There is a need, but how do we organize them, as we cannot expect this to be voluntary work?

In the past, I suggested that MarketKey, the organizer of the PI DX events, extend its theme workshops. For example, instead of a 45-minute focus group with a short introduction to a theme (e.g., eBOM-mBOM, PLM-ERP interfaces), make these sessions last at least half a day and be independent of the PLM vendors.

Apparently, it did not fit in the PI DX programming; half a day would potentially stretch the duration of the conference and more and more, we see two days of meetings as the maximum. Longer becomes difficult to justify even if the content might have high value for the participants.

I observed a similar situation last year in combination with the PLM Roadmap/PDT Europe conference in Gothenburg. Here we had a half-day workshop before the conference, led by Erik Herzog (SAAB Aeronautics) and Judith Crockford (Eurostep), to discuss concepts related to federated PLM – read more in this post: The week after PLM Roadmap/PDT Europe 2022.

It reminded me of an MDM workshop before the 2015 Event, led by Marc Halpern from Gartner. Unfortunately, the federated PLM discussion remained a pretty Swedish initiative, and the follow-up did not reach a wider audience.

And then there are the Aerospace and Defense PLM action groups, whose discussions are moderated by CIMdata. It is great that they published their findings (look here), although the best lessons are learned during the workshops.

However, I also believe the A&D industry cannot be compared to a mid-market machinery manufacturing company. Therefore, it is helpful for a smaller audience only.

And here, I inserted a paragraph dedicated to Oleg’s recent post, PLM Project Failures and Unstoppable PLM Playbook – starting with a quote:

How to learn to implement PLM? I wrote about it in my earlier article – PLM playbook: how to learn about PLM? While I’m still happy to share my knowledge and experience, I think there is a bigger need in helping manufacturing companies and, especially PLM professionals, with the methodology of how to achieve the right goal when implementing PLM. Which made me think about the Unstoppable PLM playbook ©.

I found a similar passion for helping companies to adopt PLM while talking to Helena Gutierrez. Over many conversations during the last few months, we talked about how to help manufacturing companies with PLM adoption. The unstoppable PLM playbook is still a work in progress, but we want to start talking about it to get your feedback and start the conversation.

It is an excellent confirmation of the fact that there is a need for education and that the education related to PLM on the Internet is not good enough.

As a former teacher in Physics, I do not believe in the Unstoppable PLM Playbook, even if it is a branded name. Many books are written by specific authors, giving their perspectives based on their (academic) knowledge.

Are they useful? I believe only in the context of a classroom discussion where the applicability can be discussed.

Therefore, my question to vendor-neutral global players like CIMdata, Eurostep, Prostep, SharePLM, TCS and others: are you willing to pick up this request? Or are there other entities that I missed? Please leave your thoughts in the comments. I will be happy to assist in organizing these workshops.

There are many more future topics to discuss and document too.

- What about the potential split of a PLM infrastructure between Systems of Record & Systems of Engagement?

- What about the Digital Thread, a more and more accepted theme in discussions, but what is the standard definition?

- Is it traceability as some vendors promote it, or is it the continuity of data, directly usable in various contexts – the DevOps approach?

Who likes to discuss methodology?

When asking myself this question, I see the analogy with standards. So let’s look at the various players in the PLM domain – sorry for the immense generalization.

Strategic consultants: standards are essential, but spare me the details.

Vendors: standards are limiting the unique capabilities of my products

Implementers: two types – Those who understand and use standards as they see the long-term benefits. Those who avoid standards as it introduces complexity.

Companies: they love standards if they can be implemented seamlessly.

Universities: they love to explore standards and help to set the standards even if they are not scalable

Just replace standards with methodology, and you see the analogy.

We like to discuss the methodology.

As I mentioned in the introduction, I started to work with Share PLM on a series of podcasts where we interview PLM experts in the field that have experience with the people, the process, the tools and the data side. Through these interviews, you will realize PLM is complex and has become even more complicated when you consider PLM a strategy instead of a tool.

We hope these podcasts might be a starting point for further discussion – either through direct interactions or through contributions to the podcast. If you have PLM experts in your network who can explain the complexity of PLM from various angles and have the experience, please let us know – it is time to share.

Conclusion

By switching gears, I noticed that PLM has become complex. Too complex for a single person to master. With an aging traditional PLM workforce (like me), it is time to consolidate the best practices of the past and discuss the best practices for the future. There are no simple answers, as every industry is different. Help us to energize the PLM community – your thoughts/contributions?

I am writing this post because one of my PLM peers recently asked me this question: “Is the BOM losing its position?” He was in discussion with another colleague who told him:

“If you own the BOM, you own the Product Lifecycle”.

This statement made me think of a recent post from Jan Bosch: Product Development fallacy #8: the bill of materials has the highest priority.

Software is increasingly an essential part of the final product, and Jan wrote this article drawing on his expertise in software development. I recommend reading the full post (4 min read) and then browsing through the comments.

If you cannot afford these 10 minutes, here is my favorite quote from the article:

An excessive focus on the bill of materials leads to significant challenges for companies that are undergoing a digital transformation and adopting continuous value delivery. The lack of headroom, high coupling and versioning hell may easily cause an explosion of R&D expenditure over time.

Where did the BOM focus come from? A historical overview related to the rise (and fall) of the BOM.

In the beginning, there was the drawing.

Before the era of computers, there was “THE drawing”, describing assemblies, subassemblies or parts. And on the drawing, you can find the parts list if relevant. This parts list was the first Bill of Material, describing the parts/materials shown on the drawing.

Next came MRP/ERP

The introduction of the MRP (Material Requirements Planning) system was the first step in which, by using computers, people could collect the material requirements in one system as data and process them.

Entering new materials/parts described on drawings was still a manual process, as well as referring to existing parts on the drawing. Reuse of parts was a manual process based on individual knowledge.

In the nineties, MRP evolved into ERP (Enterprise Resource Planning), which included the MRP part and added resource and manufacturing planning and financial reporting.

The ERP system became the most significant IT system, the execution system of the company. As it was the first enterprise system implemented, it was the first moment we learned about implementation challenges – people change and budget overruns. However, as the ERP system brought visibility to the company’s execution, it became a “must-have” system for management.

The introduction of mainstream 2D CAD did not affect the company’s culture so much. Drawings became electronic drawings, and the methodology of the parts list on the drawing remained.

Sometimes the interaction with the MRP/ERP system was enhanced by an interface – sending the drawing BOM to ERP. The advantage of the interface: no manual transfer of data reducing typos and BOM errors. The disadvantages at that time: relatively expensive (connectivity between systems was a challenge) and mostly one direction.

And then there was PDM.

In parallel with the introduction of ERP systems, mainstream 3D CAD systems became affordable, particularly SolidWorks, Solid Edge and Inventor. These 3D CAD systems allow sharing of parts and assemblies in different products, and the PDM database was the first aid to support part reuse, versioning and standardization.

By extracting the parts from the assemblies and subassemblies, it was possible to generate a BOM structure in the PDM system to be transferred or typed into the ERP system. We did not talk about EBOM or MBOM then, as there was only one BOM in the ERP system, and the PDM system was a tool to feed the ERP system.
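
As a minimal sketch of what "extracting the parts from the assemblies" means in practice, the Python snippet below rolls a CAD-like assembly tree up into a flat parts list with total quantities. The structure `assembly_tree`, the part names and the function `flatten_bom` are hypothetical and only for illustration; they are not taken from any specific PDM system.

```python
from collections import Counter

# Hypothetical CAD assembly structure: each assembly maps to a list of
# (child, quantity-per-parent) pairs; an item without an entry is a part.
assembly_tree = {
    "pump": [("housing", 1), ("motor-unit", 1), ("bolt-m6", 8)],
    "motor-unit": [("motor", 1), ("bracket", 2), ("bolt-m6", 4)],
}


def flatten_bom(item, tree, multiplier=1, totals=None):
    """Walk the assembly tree and sum the quantity of every leaf part."""
    totals = Counter() if totals is None else totals
    for child, qty in tree.get(item, []):
        if child in tree:
            # Subassembly: descend, carrying the accumulated quantity.
            flatten_bom(child, tree, multiplier * qty, totals)
        else:
            totals[child] += multiplier * qty
    return totals


parts_list = flatten_bom("pump", assembly_tree)
for part, qty in sorted(parts_list.items()):
    print(part, qty)
```

A list like this is what was then transferred or typed into the ERP system; note that the same bolt used in two places ends up as one line with a summed quantity.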

Many companies still base their processes on this approach. ERP (read SAP nowadays) is the central execution system, and PDM is an external system. You might remember the story and image from my previous post about people, processes and tools. The bad practice example: asking the ERP system to provide a part number when starting to design a part.

And then products started to change.

In the early 2000s, I worked with SmarTeam to define the E&E (Electronics and Electrical) template. One of the new concepts was to synchronize all design data coming from different disciplines to a single BOM structure.

It was the time we started to talk about the EBOM: a type of BOM that, as the structure consolidating all the design data, was based on parts.

The EBOM, most of the time, reflects the design intent in logical groups. Sending the relevant parts in the correct order to the ERP system was a favorite and expensive customization for service providers. How to transfer an engineering BOM view to an ERP system that only understands the manufacturing view?

Note: not all ERP systems have the data model to differentiate between engineering parts and manufacturing parts.

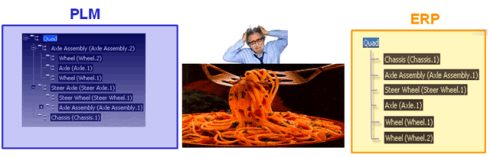

The image below illustrates the challenge and the customer’s perception.

The automated link between the design side (EBOM) and manufacturing side (MBOM) was a mission impossible – too many exceptions for the (spaghetti) code.

And then came the MBOM.

The identified issues connecting PDM and ERP led to the concept of implementing the MBOM in the PLM system. The MBOM in PLM is one of the characteristics of a PLM implementation compared to a PDM implementation. In a traditional PLM system, there is an interaction and connection between the EBOM and MBOM. EBOM parts should end up as MBOM parts. This interaction can be supported by automation; however, as both structures live in the same system, manual changes remain possible.
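
To illustrate the rule that EBOM parts should end up as MBOM parts, here is a small, simplified Python sketch of a reconciliation check. It assumes both structures are already flattened to part-number/quantity pairs, and the names `reconcile`, `ebom` and `mbom` are hypothetical. Real implementations are less straightforward, because an MBOM may legitimately substitute or regroup engineering parts.

```python
def reconcile(ebom, mbom):
    """Return EBOM parts whose total quantity differs in the MBOM.

    The result maps part number -> (EBOM quantity, MBOM quantity).
    Items that exist only in the MBOM (consumables, packaging) are ignored.
    """
    mismatches = {}
    for part, ebom_qty in ebom.items():
        mbom_qty = mbom.get(part, 0)
        if mbom_qty != ebom_qty:
            mismatches[part] = (ebom_qty, mbom_qty)
    return mismatches


# Hypothetical flattened structures for one product.
ebom = {"housing": 1, "motor": 1, "bracket": 2, "bolt-m6": 12}
mbom = {"housing": 1, "motor": 1, "bracket": 2, "bolt-m6": 8, "grease": 1}

mismatches = reconcile(ebom, mbom)
print(mismatches)
```

In this example the check would flag the bolts (12 designed, 8 planned), while the grease that only manufacturing needs is accepted. Such a check supports the manual changes mentioned above instead of replacing them.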

The MBOM structure in PLM could then be the information structure to transfer to the ERP system; however, there is more, as Jörg W. Fischer wrote in his provoking post Die MBOM muss weg (The MBOM must go). He rightly points out (in German) that the MBOM is not a structure on its own but a combination of different views based on Assembly Drawings, Process Planning and Material Requirements.

His conclusion:

Calling these structures, MBOM is trying to squeeze all three structures into one. That usually doesn’t work and then leads to much more emotional discussions in the project. It also costs a lot of money. It is, therefore, better not to use the term MBOM at all.

And indeed, just having an MBOM in your PLM system might help you to prepare some of the manufacturing steps, the needed resources and parts. The MBOM result still has to be localized at the local plant where the manufacturing takes place. And here, the systems used are the ERP system and the MES system.

The main advantage of having the MBOM in the PLM system is the direct relation between specification and manufacturing intent, allowing manufacturing engineering to work collaboratively with engineering in the same environment.

- The first benefit is fewer iterations and a shorter time to production, thanks to early interaction and manufacturing involvement in the engineering process.

- The second benefit is: product knowledge is centralized in a single system. Consolidating your Product Knowledge in ERP does not make sense due to global localization and the missing capabilities to manage the iterative engineering processes on non-existing parts.

And then came the SBOM, the xBOM

Traditional PLM vendors and implementations kept using xBOM structures as placeholders for related specification data (mechanical designs, electrical, software deliverables, serialized products). Most of the time, related files.

And with this approach, talking about digital thread, PLM systems also touch on the concepts of Configuration Management.

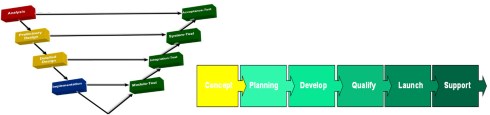

I will not go into the details here but look at the two images by clicking on them and see a similar mindset.

It is about the traceability of information in structures and systems. These structures work well in a relatively static and linear product development and delivery environment, as illustrated below:

Engineering change and release processes are based on managing the changes in different structures from the left to the right.

And then came software!

Modern connected products are no longer mechanical products. The product’s functionality no longer depends on the mechanical properties but mainly on the embedded electronics and software used. For example, look at the mechanical design of a telecom transmission tower – its behavior comes mainly from non-mechanical components, and they can change over time. Still, the Bill of Material contains a lot of concrete and steel parts.

The ultimate example is comparing a Tesla (software on wheels) with a traditional car. For modern connected products, electronics and software need to be part of the solution. Software and electronics allow the product to be upgraded over time. Managing these products in the same manner as mechanical products is impossible, inefficient and therefore threatening your company’s future business.

I requote Jan Bosch:

An excessive focus on the bill of materials leads to significant challenges for companies that are undergoing a digital transformation and adopting continuous value delivery. The lack of headroom, high coupling and versioning hell may easily cause an explosion of R&D expenditure over time.

The model-based, connected enterprise

I will not solve the puzzle of the future in this post. You can read my observations in my series: The road to model-based and connected PLM. We need a new infrastructure with at least two modes. One that still serves as a System of Record, storing information in a traditional manner, like a Bill of Materials for the static parts, as not everyone and everything can be connected.

In addition, we need various Systems of Engagement that enable close to real-time interaction between products (systems) and relevant stakeholders for the engagement scope (multidisciplinary / consumers).

Digital twins are examples of such environments. Currently, these Systems of Engagement often work disconnected from the System of Record due to the lack of understanding of how to connect. (standard connectors? / OSLC?)

Our mission is to explore, as I wrote in my post Time to split PLM and drop our mechanical mindset.

And while I was finalizing this post, I read a motivating post from Jan Bosch again for all of you working on understanding and pushing the digital transformation in your eco-system.

The title: Be the protagonist of your life: 15 rules. A starting point for more to come.

Conclusion

The BOM is no longer the master of the product lifecycle when it comes to managing connected products, where functionality mainly depends on software. BOM structures with related documents are just one of the extracted baselines from a data-driven, connected enterprise. This traditional PLM infrastructure requires other, non-BOM-driven structures to represent the actual status of a virtual or physical product.

The BOM is not dead, but there is more ………

Your thoughts?

In the last weeks, I had several discussions related to sustainability. What can companies do to become sustainable and prove it? But, unfortunately, there is so much greenwashing at this moment.

Look at this post: 10 Companies and Corporations Called Out For Greenwashing.

Therefore I thought about which practical steps a company should take to prepare for a sustainable future, as the change will not happen overnight. It reminds me of the path towards a digital, model-based enterprise (my other passion). In my post Why Model-Based definition is important for all, I mentioned that MBD (Model-Based Definition) could be considered the first stepping-stone toward a Model-Based enterprise.

The analogy for Material Compliance came after an Aras seminar I watched a month ago. The webinar How PLM Paves the Way for Sustainability with Insensia (an Aras implementer) demonstrates how material compliance is the first step toward sustainable product development.

Let’s understand why

The first steps

Companies that currently deliver solutions mostly only focus on economic gains. The projects or products they sell need to be profitable and competitive, which makes sense if you want a future.

And this would not have changed if the awareness of climate impact had not become apparent.

First, CFCs and hazardous materials led to new regulations. Next, global agreements to fight climate change – the Paris agreement and more to come – have led and will lead to regulations that will change how products will be developed. All companies will have to change their product development and delivery models when it becomes a global mandate.

A required change is likely going to happen. In Europe, the Green Deal is making stable progress. However, what will happen in the US will be a mystery as even their supreme court becomes a political entity against sustainability (money first).

Still, compliance with regulations will be required if a company wants to operate in a global market.

What is Material Compliance?

In 2002, the European Union published a directive to restrict hazardous substances in materials. The directive, known as RoHS (Restriction of Hazardous Substances), was mainly related to electronic components. In the first directive, six hazardous materials were restricted.

The most infamous are Cadmium (Cd), Lead (Pb), and Mercury (Hg). From 2006, all products on the EU market had to pass RoHS compliance, and in 2011 RoHS was connected to the CE marking of products sold in the European market.

In 2015, four additional chemical substances were added, mostly used for softening PVC but also affecting the immune system. Meanwhile, other countries have introduced similar RoHS regulations; therefore, we can see it as a global restriction. Read more here: The RoHS guide.

Consumers buying RoHS-compliant products can now be assured that none of the threshold values of the substances is reached in the product. The challenge for the manufacturer is to go through each of the components of the MBOM to understand if it contains one of the ten restricted substances and, if yes, in which quantity.

Therefore, they need to get a RoHS declaration with that information from each relevant supplier.

Besides RoHS, additional regulations protect the environment and the consumer. For example, REACH (Registration, Evaluation, Authorization and Restriction of Chemicals) compliance deals with the regulations created to improve the environment and protect human health. In addition, REACH addresses the risks associated with chemicals and promotes alternative methods for the hazard assessment of substances.

The compliance process in four steps

Material compliance is most of all the job of engineers. Therefore around 2005, some of my customers started to add RoHS support to their PLM environment.

Step 1

The image below shows the simple implementation – the PDF form from the supplier was linked to the (M)BOM part.

An employee had to manually add the substances into a table and ensure the threshold values were not reached. But, of course, there was already a selection of preferred manufacturer parts during the engineering phase. Therefore RoHS compliance was almost guaranteed when releasing the EBOM.

But this process could be done more cleverly.

Step 2

So the next step was that manufacturers started to extend their PLM data model with additional attributes for RoHS compliance. Again, this could be done cleverly or extremely generically, by adding the attributes to all parts.

So now, when receiving the material declaration, a person just has to add the substance values to the part attributes. Then, through either standard functionality or customization, a compliance report could be generated for the (M)BOM. So this already saves some work.
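
A minimal sketch of such a compliance report is shown below, assuming the substance values are stored per part as a percentage by weight. The limits are the RoHS maximum concentration values (0.1 % for all substances except cadmium at 0.01 %). The part numbers, the data layout and the function `compliance_report` are hypothetical. Note the simplification: RoHS limits formally apply per homogeneous material, not per part.

```python
# RoHS maximum concentration values in percent by weight.
ROHS_LIMITS = {
    "Pb": 0.1, "Hg": 0.1, "Cd": 0.01, "Cr6+": 0.1, "PBB": 0.1,
    "PBDE": 0.1, "DEHP": 0.1, "BBP": 0.1, "DBP": 0.1, "DIBP": 0.1,
}


def compliance_report(bom_parts):
    """Return a status per part: compliant, exceeding, or not declared."""
    report = {}
    for part_number, substances in bom_parts.items():
        if substances is None:
            # No supplier declaration has been entered for this part yet.
            report[part_number] = "missing declaration"
            continue
        exceeded = sorted(
            name for name, pct in substances.items()
            if pct > ROHS_LIMITS.get(name, float("inf"))
        )
        if exceeded:
            report[part_number] = "exceeds: " + ", ".join(exceeded)
        else:
            report[part_number] = "compliant"
    return report


# Hypothetical (M)BOM parts with substance attributes from declarations.
mbom_parts = {
    "PCB-100": {"Pb": 0.02, "Cd": 0.001},
    "CABLE-7": {"DEHP": 0.5},
    "RES-33": None,
}

report = compliance_report(mbom_parts)
for part_number, status in report.items():
    print(part_number, status)
```

The report immediately shows which parts block a release and for which parts a declaration is still missing, which is the work a person previously did by reading PDFs.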

Step 3

The next step was to provide direct access to these attributes to the supplier and push the supplier to do the work.

Now the overhead for the manufacturer has been reduced again. This is because only the supplier needs to do the job for his customer.

Step 4

In step 4, we see a real connected environment, where information is stored only once, referenced by manufacturers, and kept up to date by the part suppliers.

Who will host the RoHS databank? From some of my customer projects, I recall IHS as a data provider – it seems they are into this business when you look at their website HERE.

Where is your company at this moment?

Having seen the four stepping-stones leading towards efficient RoHS compliance, you see the challenge of moving from a document-driven approach to a data-driven approach.

Now let’s look into the future. Concepts like Life Cycle Assessment (LCA) or a Digital Product Passport (DPP) will require a fully connected approach.

Where is your company at this moment – have you reached RoHS compliance step 3 or 4? A first step to learn and work connected and data-driven.

Life Cycle Assessment – the ultimate target

A lifecycle assessment, or lifecycle analysis (two times LCA again), is a methodology to assess the environmental impact of a product (or solution) through its whole lifecycle: from materials sourcing, manufacturing, transportation, usage and service to decommissioning. And by assessing, we mean doing so in a clear, verifiable, and shareable manner, not just guessing.

Traditional engineering education does not teach these skills, although LCA is not new, as this 10-year-old YouTube movie from Autodesk illustrates:

What is new is the global understanding that we are reaching the limits of what our planet can endure; we must act now. Upcoming international regulations will enforce life cycle analysis reporting for manufacturers or service providers. This will happen gradually.

Meanwhile, we all should work on a circular economy, the major framework for a sustainable planet- click on the image on the left.

I wrote about these combined topics in my post SYSTEMS THINKING – a must-have skill in the 21st century.

Life Cycle Analysis – Digital Twin – Digitization

The big elephant in the room is that when we talk about introducing LCA in your company, it has a lot to do with the digitization of your company. Assessment data in a document can require too much human effort to maintain the data at the right quality. The costs are not affordable if your competitor is more efficient.

When it comes to the Analysis part, a model-based, data-driven infrastructure is the most efficient way to run virtual analyses, using digital twin concepts at each stage of the product lifecycle.

Virtual models for design, manufacturing, and operations allow your company to perform trade-off studies at low cost before committing to the physical world. 80% of the environmental impact of a product comes from decisions in the virtual world.
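To make the idea of a low-cost virtual trade-off study a bit more tangible, here is a minimal sketch that rolls up a footprint over a product structure and compares two design variants. Everything in it is an assumption for illustration: the `Part` class, the `footprint` function, the part names, and the kg CO2e numbers are made up and do not come from any real LCA database or tool.

```python
from dataclasses import dataclass, field


@dataclass
class Part:
    """One node in a hypothetical product structure."""
    name: str
    quantity: int = 1          # quantity used in the parent
    kg_co2e: float = 0.0       # assumed footprint of the part itself (made-up value)
    children: list["Part"] = field(default_factory=list)


def footprint(part: Part) -> float:
    """Roll up the footprint of a part and everything below it."""
    own = part.kg_co2e + sum(footprint(child) for child in part.children)
    return part.quantity * own


# Two design variants of the same (invented) product.
variant_a = Part("pump", children=[
    Part("housing, cast aluminium", 1, 12.0),
    Part("impeller", 1, 3.5),
    Part("bolt", 8, 0.05),
])
variant_b = Part("pump", children=[
    Part("housing, recycled polymer", 1, 4.0),
    Part("impeller", 1, 3.5),
    Part("clip", 4, 0.02),
])

for label, variant in (("A", variant_a), ("B", variant_b)):
    print(f"variant {label}: {footprint(variant):.2f} kg CO2e")
```

The point of the sketch is not the arithmetic but where the numbers live: once the footprint is an attribute of connected data instead of a figure in a document, comparing variants becomes a query rather than a manual exercise.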

Once you have your digital twins for each phase of the product lifecycle, you can benchmark your models against data reported from the physical world. All these interactions can be found in the beautiful Boeing diamond below, which I discussed before – Read A digital twin for everybody.

Conclusion

Efficient and sustainable life cycle assessment and analysis will come from connected information sources. The old document-driven paradigm is too costly and too slow to maintain, in particular when the scope, as with LCA, is not just a subset of your product but your full product and its full lifecycle. Another stepping stone towards the near future. Where are you?

Stepping-stone 1: From Model-Based Definition to an efficient Model-Based, Data-driven Enterprise

Stepping-stone 2: From RoHS compliance to an efficient and sustainable Model-Based, data-driven enterprise.

In March 2018, I started a series of blog posts related to model-based approaches. The first post was: Model-Based – an introduction. The reactions to this series of posts can be summarized in two bullets:

- Readers believed that the term model-based was focusing on the 3D CAD model. A logical association as PLM is often associated with 3D CAD-model data management (actually PDM), and in many companies, the 3D CAD model is not (yet) a major information carrier.

- Readers were telling me that a model-based approach is too far from their day-to-day life. I have to agree here. I was active in some advanced projects where the product’s behavior depends on a combination of hardware and software. However, most companies still work in a document-driven manner, siloed per discipline, merging all deliverables in a BOM.

More than 3 years later, I feel that model-based approaches have become more and more visible for companies. One of the primary reasons is that companies are starting to collaborate in the cloud and realize the differences between working in a coordinated and in a connected manner.

Initiatives such as Industry 4.0 or concepts like the Digital Twin demand a model-based approach. This post is a follow-up to my recent post, The Future of PLM.

History has shown that it is difficult for companies to change engineering concepts. So let’s first look back at how concepts slowly changed.

The age of paper drawings

In the sixties of the previous century, the drawing board was the primary “tool” to specify a mechanical product. The drawing on its own was often a masterpiece drawn on special paper, with perspectives, details, and cross-sections.

All these details were needed to transfer the part or assembly information to manufacturing. The drawing set had to contain all information as there were no computers.

Depending on the complexity of the product, the interpretation of the drawings, and the manufacturability of the product, making a prototype was not always that easy. After a first release, further modifications to the product definition were often marked on the manufacturing drawings using a red pencil. Terms like blueprint and redlining come from the age of paper drawings.

There are still people talking nostalgically about these days as creating and interpreting drawings was an important skill. However, the inefficiencies with this approach were significant.

- First, updating drawings because there was redlining in manufacturing was often not done – too much work.

- Second, drawing reuse was almost impossible; you had to start from scratch.

- Third, and most importantly, you needed to be very skilled in interpreting a drawing set, in particular when dealing with suppliers that might not have the same skillset or know which drawing version was the current one.

However, paper was and still is the cheapest neutral format to distribute designs. The last time I saw companies still working with paper drawings was at the end of the previous century.

Curious to learn if they are now extinct?

The age of electronic drawings (CAD)

With the introduction of AutoCAD and personal computers around 1982, more companies started to look into drafting with the computer. There was already the IBM drafting system in 1965, but it was Autodesk that pushed the 2D drafting business with their slogan:

“80 percent of the functionality for 20 percent of the price (Autodesk 1982)”

A little later, I started to work for an Autodesk distributor/reseller. People would come to the showroom to see how a computer drawing could be plotted in the finest quality at the end. But, of course, the original draftsman did not like the computer as the screen was too small.

However, the enormous value came from making changes, the easy way of sharing drawings and the ease of reuse. The picture on the left is me in 1989, demonstrating AutoCAD with a custom-defined tablet and PS/2 computer.

The introduction of electronic drawings was not a disruption; it was more an optimization of the previous ways of working.

The exchange with suppliers and manufacturing could still be based on plotted drawings – the most neutral format. And thanks to the filename, there was better control of versions between all stakeholders.

Aren’t we all happy?

The introduction of mainstream 3D CAD

In 1995, 3D CAD became available for the mid-market, thanks to SolidWorks, Solid Edge and a little later Inventor. Before that, working with 3D CAD was only possible for companies that could afford expensive graphics workstations, provided by IBM, Silicon Graphics, DEC and SUN. Where are they nowadays? The PC is an example of disruptive innovation, purely based on technology. See Clayton Christensen’s famous book: The Innovator’s Dilemma.

The introduction of 3D CAD on PCs in the mid-market did not lead directly to new ways of working. Designing a product in 3D was much more efficient if you mastered the skills. 3D brought a better understanding of the product dimensions and shape, reducing the number of interpretation errors.

Still, (electronic) drawings were the contractual deliverable when interacting with suppliers and manufacturing. As students were more and more trained with the 3D CAD tools, the traditional art of the draftsman disappeared.

3D CAD introduced some new topics to solve.

- First of all, a 3D CAD Assembly in the system was a collection of separate files, subassemblies, parts, and drawings that relate to each other with a specific version. So how to ensure the final assembly drawings were based on the correct part revisions? Companies were solving this by either using intelligent filenames (with revisions) or by using a PDM system where the database of the PDM system managed all the relations and their status.

- The second point was that the 3D CAD assembly also introduced a new feature, the product structure, or the “Bill of Materials”. This logical structure of the assembly closely resembled the Bill of Material of the product. You could even browse deeper levels, which was not the case in the traditional Bill of Material on a drawing.

Note: The concept of EBOM and MBOM was not known in most companies. People were talking about the BOM as a one-level definition of parts or subassemblies in the assembly. See my Where is the MBOM? post from July 2008 when this topic was still under discussion.

- The third point that would have a more significant impact later is that parts and assemblies could be reused in other products. This introduced the complexity of configuration management. For example, a 3D CAD part or assembly file could contain several configurations where only one configuration would be valid for the given product. Managing this in the 3D CAD system led to higher productivity for the designer; however, downstream, when it came to data management with PDM systems, it became a nightmare.
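The first two points above (relations between specific revisions, and a browsable multi-level structure) can be sketched in a few lines. This is only an illustration under stated assumptions: the `status` and `uses` tables, the item numbers, and both helper functions are hypothetical and do not represent how any particular PDM system stores its data.

```python
# Hypothetical PDM-style records: a status per (item, revision) ...
status = {
    ("ASSY-100", "B"): "released",
    ("SUB-200", "A"): "released",
    ("PART-300", "C"): "in work",
    ("PART-301", "A"): "released",
}

# ... and parent-child relations that name the exact child revision used.
uses = {
    ("ASSY-100", "B"): [("SUB-200", "A", 1), ("PART-301", "A", 4)],
    ("SUB-200", "A"): [("PART-300", "C", 2)],
}


def unreleased_below(item, rev):
    """Return every (item, revision) under the given assembly that is not released."""
    found = []
    for child, child_rev, _qty in uses.get((item, rev), []):
        if status.get((child, child_rev)) != "released":
            found.append((child, child_rev))
        found.extend(unreleased_below(child, child_rev))
    return found


def flatten(item, rev, multiplier=1, totals=None):
    """Browse all levels and total the quantity of each leaf part."""
    totals = {} if totals is None else totals
    children = uses.get((item, rev), [])
    if not children:
        totals[item] = totals.get(item, 0) + multiplier
    for child, child_rev, qty in children:
        flatten(child, child_rev, multiplier * qty, totals)
    return totals


print(unreleased_below("ASSY-100", "B"))
print(flatten("ASSY-100", "B"))
```

With intelligent filenames, the same questions ("is everything under this assembly released?", "what do I need in total?") require opening and parsing files; with relations in a database, they become simple traversals.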

I experienced these issues a lot when discussing with companies and implementers, mainly around the implementation of SmarTeam combined with SolidWorks and Inventor. Where to manage the configuration constraints? In the PDM system or inside the 3D CAD system?

These environments were not friends (image above), and even if they came from the same vendor, it felt like discussing with tribes.

The third point also covered another topic. So far, CAD had been the first step for the detailed design of a product. However, companies now had an existing Bill of Material in the system thanks to the PDM systems. It could be a Bill of Material of a sub-assembly that is used in many other products.

Configuring a product no longer started from CAD; it started from a Product or Bill of Material structure. Sales and Engineers identified the changes needed on the BoM, keeping as much released information as possible untouched. This led to a new best practice.

The item-centric approach

Around 2005, five years after the introduction of the term Product Lifecycle Management, a new approach slowly became the standard. Product Lifecycle Management was initially introduced to connect engineering and manufacturing, driven by the automotive and aerospace industries.

It was with PLM that concepts such as EBOM and MBOM became visible.

In particular, the EBOM was closely linked to engineering practices, i.e., modularity and reuse. The EBOM and its related information represented the product as it was specified. It is essential to realize that the parts in the EBOM could be generically specified purchase parts, to be resolved when producing the product, or Make-parts specified by drawings.

At that time, the EBOM was often used as the foundation for the ERP system – see image above. The BOM was restructured and organized according to the manufacturing process specifying materials and resources needed in the ERP system. Therefore, although it was an item-like structure, this BOM (the MBOM) always had a close relation to the Bill of Process.

For companies with a single manufacturing site, the distinction between EBOM and MBOM was not that important, as the ERP system would be the source of the MBOM. However, the complexity came when companies had several manufacturing sites. That was when a generic MBOM in the PLM system made more sense to centralize all product information in a single system.
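As a rough illustration of what "restructuring the EBOM for manufacturing" can mean with more than one site, the sketch below resolves generic purchase parts per site and groups the resulting lines by operation. All names in it are assumptions made up for this example: the `ebom` lines, the `site_sourcing` and `operation_for` tables, and the `derive_mbom` function do not reflect any specific PLM or ERP data model.

```python
from collections import defaultdict

# Hypothetical EBOM lines: (item, quantity, kind).
# "generic" lines are purchase parts specified by requirements only;
# "make" lines are specified by drawings.
ebom = [
    ("FRAME-10", 1, "make"),
    ("MOTOR-GEN-5kW", 1, "generic"),
    ("BOLT-M8-GEN", 12, "generic"),
]

# Assumed site-specific sourcing: which supplier part resolves each generic part.
site_sourcing = {
    "plant_nl": {"MOTOR-GEN-5kW": "MOTOR-ACME-5000", "BOLT-M8-GEN": "BOLT-M8-SUP1"},
    "plant_us": {"MOTOR-GEN-5kW": "MOTOR-ZED-5K", "BOLT-M8-GEN": "BOLT-M8-SUP7"},
}

# Assumed link to the Bill of Process: the operation that consumes each EBOM item.
operation_for = {
    "FRAME-10": "10-weld",
    "MOTOR-GEN-5kW": "20-assemble",
    "BOLT-M8-GEN": "20-assemble",
}


def derive_mbom(ebom_lines, site):
    """Resolve generic parts for one site and group the lines by operation."""
    mbom = defaultdict(list)
    for item, qty, kind in ebom_lines:
        resolved = site_sourcing[site][item] if kind == "generic" else item
        mbom[operation_for[item]].append((resolved, qty))
    return dict(mbom)


for site in site_sourcing:
    print(site, derive_mbom(ebom, site))
```

The EBOM stays the same for both plants; only the resolution differs. That is the argument for keeping a generic MBOM centrally and deriving the site-specific ones from it.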

The EBOM-MBOM approach has become more and more a standard practice since 2010. As a result, even small and medium-sized enterprises realized a need to manage the EBOM and the MBOM.

There were two disadvantages introduced with this EBOM-MBOM approach.

- First, the EBOM and the MBOM as information structures require a lot of administrative maintenance if information needs to be always correct (and that is the CM target). Some try to simplify this by keeping the EBOM part the same as the MBOM part, meaning the EBOM specification already targets a single supplier or manufacturer.