Last week, I wrote about the first day of the crowded PLM Roadmap/PDT Europe conference.

You can still read my post here in case you missed it: A very long week after PLM Roadmap / PDT Europe 2025

My conclusion from that post was that day 1 was a challenging day if you are a newbie in the domain of PLM and data-driven practices. We discussed and learned about relevant standards that support a digital enterprise, as well as the need for ontologies and semantic models to give data meaning and serve as a foundation for potential AI tools and use cases.

This post will focus on the other aspects of product lifecycle management – the evolving methodologies and the human side.

Note: I try to avoid the abbreviation PLM, as many of us in the field associate PLM with a system. For me, the system is more of an IT solution, whereas the strategy and practices are best named product lifecycle management.

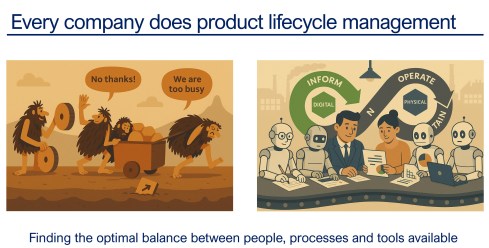

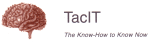

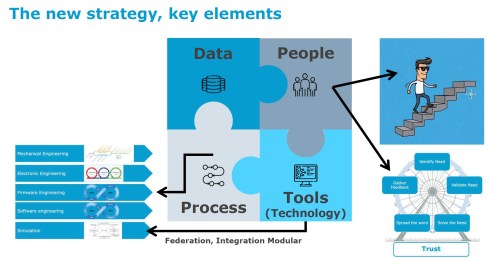

And as a reminder, I used the image above in other conversations. Every company does product lifecycle management; only the number of people, their processes, or their tools might differ. As Peter Bilello mentioned in his opening speech, the products are why the company exists.

Unlocking Efficiency with Model-Based Definition

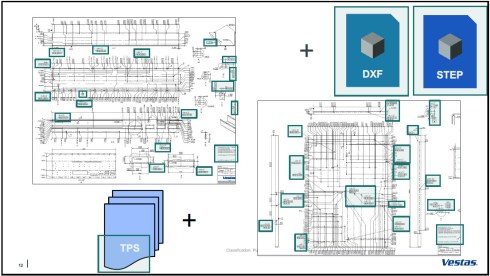

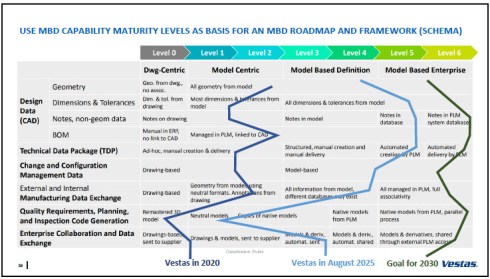

Day 2 started energetically with Dennys Gomes’ keynote, which introduced model-based definition (MBD) at Vestas, a world-leading OEM for wind turbines.

Personally, I consider MBD one of the stepping stones to learning and mastering a model-based enterprise, although do not be confused by the term “model”. In MBD, we use the 3D CAD model as the source to manage and support a data-driven connection among engineering, manufacturing, and suppliers. The business benefits are clear, as reported by companies that follow this approach.

However, it also involves changes in technology, methodology, skills, and even contractual relations.

Dennys started by sharing the analysis they conducted on the amount of information in current manufacturing drawings. The image below shows that only the information marked in green was used, so much of the time and effort spent creating the drawings was wasted.

It was an opportunity to explore model-based definition, and the team ran several pilots to learn how to handle MBD, improve their skills, methodologies, and tool usage. As mentioned before, it is a profound change to move from coordinated to connected ways of working; it does not happen by simply installing a new tool.

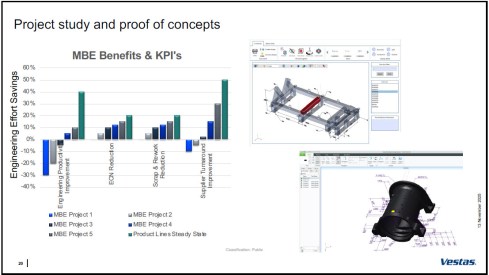

The image above shows the learning phases and the ultimate benefits accomplished. Besides moving to a model-based definition of the information, Dennys mentioned they used the opportunity to simplify and automate the generation of the information.

Vestas is on a clear path, and it is interesting to see their ambition in the MBD roadmap below.

An inspirational story, hopefully motivating other companies to make this first step to a model-based enterprise. Perhaps difficult at the beginning from the people’s perspective, but as a business, it is a profitable and required direction.

Bridging The Gap Between IT and Business

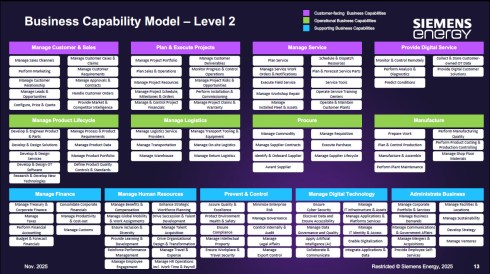

It was a great pleasure to listen again to Peter Vind from Siemens Energy, who first explained to the audience how to position the role of an enterprise architect in a company compared to society. He mentioned he has to deal with the unicorns at the C-level, who, like politicians in a city, sometimes have the most “innovative” ideas – can they be realized?

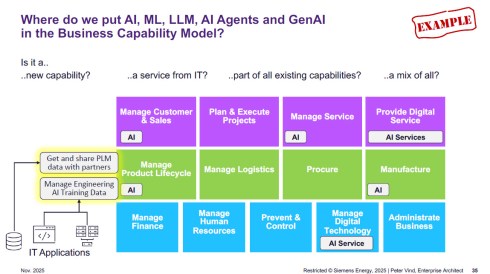

To answer these questions, Peter refers to the Business Capability Model (BCM) he uses as an Enterprise Architect.

Business Capabilities define ‘what’ a company needs to do to execute its strategy, are structured into logical clusters, and should be the foundation for the enterprise, on which both IT and business can come to a common approach.

The detailed image above is worth studying if you are interested in the levels and the mappings of the capabilities. The BCM approach was beneficial when the company became disconnected from Siemens AG, enabling it to rationalize its application portfolio.

Next, Peter zoomed in on some of the examples of how a BCM and structured application portfolio management can help to rationalize the AI hype/demand – where is it applicable, where does AI have impact – and as he illustrated, it is not that simple. With the BCM, you have a base for further analysis.

Other future-relevant topics he shared included how to address the introduction of the digital product passport and how the BCM methodology supports the shift in business models toward a modern “Power-as-a-Service” model.

He concludes that having a Business Capability Model gives you a stable foundation for managing your enterprise architecture now and into the future. The BCM complements other methodologies that connect business strategy to (IT) execution. See also my 2024 post: Don’t use the P** word! – 5 lessons learned.

Holistic PLM in Action.

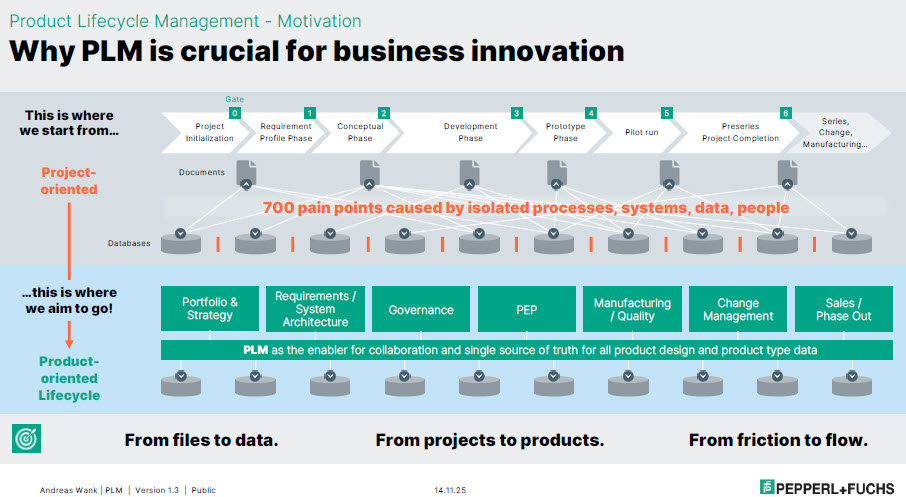

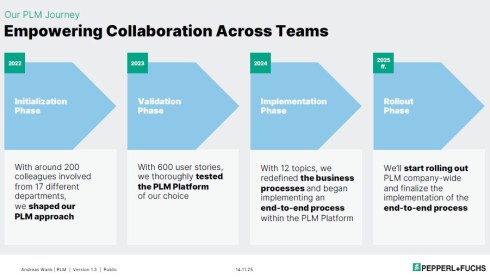

For companies struggling with their digital transformation in the PLM domain, Andreas Wank, Head of Smart Innovation at Pepperl+Fuchs SE, shared his journey so far. All the essential aspects of such a transformation were mentioned. Pepperl+Fuchs has a portfolio of approximately 15,000 products that combine hardware and software.

It started with the WHY. With such a massive portfolio, business innovation is under pressure without a PLM infrastructure. Too many changes, fragmented data, no single source of truth, and siloed ways of working lead to much rework, errors, and iterations that keep the company busy while missing the global value drivers.

Next, the journey!

The above image is an excellent way to communicate the why, what, and how to a broader audience. All the main messages are in the image, which helps people align with them.

The first phase of the project, creating digital continuity, is also an excellent example of digital transformation in traditional document-driven enterprises. From files to data aligns with the From Coordinated to Connected theme.

Next, the focus was to describe these new ways of working with all stakeholders involved before starting the selection and implementation of PLM tools. This approach is so crucial, as one of my big lessons learned from the past is: “Never start a PLM implementation in R&D.”

If you start in R&D, the priority shifts away from the easy flow of data between all stakeholders; it becomes an R&D System that others will have to live with.

You never get a second first impression!

Pepperl+Fuchs spent a long time validating its PLM selection – something you might only see in privately owned companies that are not driven by shareholder demands but take the time to prepare and understand their next move.

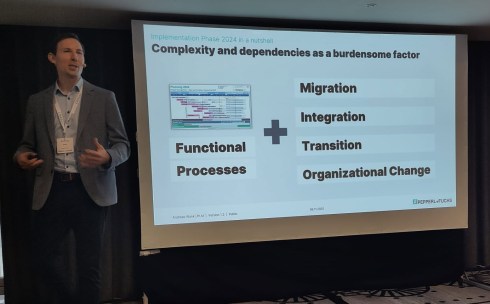

As Andreas also explained, it is not only about the functional processes. As the image shows, migration (often the elephant in the room) and integration with the other enterprise systems also need to be considered. And all of this is combined with managing the transition and the necessary organizational change.

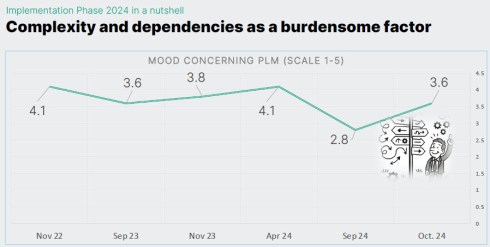

Andreas shared some best practices illustrating the focus on the transition and human aspects. They have implemented a regular survey to measure the PLM mood in the company. And when the mood dropped sharply on Sept 24, from 4.1 to 2.8 on a scale of 1 to 5, it was time to act.

They used one week at a separate location, where 30 of his colleagues worked on the reported issues in one room, leading to 70 decisions that week. And the result was measurable, as shown in the image below.

Andreas’s story was such a perfect fit for the discussions we have in the Share PLM podcast series that we asked him to tell it in more detail, also for those who have missed it. Subscribe and stay tuned for the podcast, coming soon.

Trust, Small Changes, and Transformation.

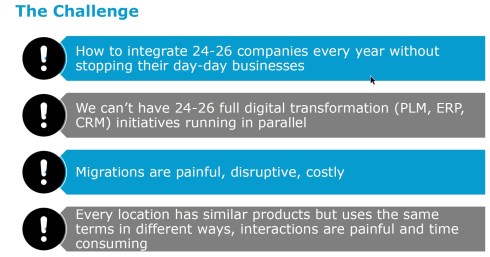

Ashwath Sooriyanarayanan and Sofia Lindgren, both active at the corporate level in the PLM domain at Assa Abloy, came with an interesting story about their PLM lessons learned.

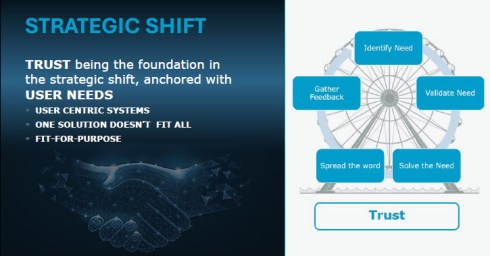

To understand their story, it is essential to see Assa Abloy as a special company, as the image below explains. With over 1000 sites, 200 production facilities, and, last year, a new acquisition every two weeks on average, it is hard for a corporate organization to standardize the company.

However, this is precisely what Assa Abloy has been trying to do over the past few years. Working towards a single PLM system with generic processes for all, while spending a lot of time integrating and migrating data from the different entities, became a mission impossible.

To increase user acceptance, they fell into the trap of customizing the system ever more to meet many user demands. A dead end, as many other companies have probably experienced similarly.

And then they came up with a strategic shift. Instead of holding on to the past and the money invested in technology, they shifted to the human side.

The PLM group became a trusted organisation supporting the individual entities. Instead of telling them what to do (Top-Down), they talked with the local business and provided standardized PLM knowledge and capabilities where needed (Bottom-Up).

This “modular” approach made the PLM group the trusted partner of the individual business. A unique approach, making us realize that the human aspect remains part of implementing PLM.

Humans cannot be transformed

Given the length of this blog post, I will not spend too much text on my closing presentation at the conference. After a technical start on DAY 1, we gradually moved to broader, human-related topics in the latter part.

You can find my presentation here on SlideShare as usual, and perhaps the best summary from my session was given in this post from Paul Comis. Enjoy his conclusion.

Conclusion

Two and a half intensive days in Paris again at the PLM Roadmap / PDT Europe conference, where some of the crucial aspects of PLM were shared in detail. The value of the conference lies in the stories and discussions with the participants. Slides alone do not provide enough education. You need to be curious and active to discover the best perspective.

For those celebrating: Wishing you a wonderful Thanksgiving!

This week is busy for me as I am finalizing several essential activities related to my favorite hobby, product lifecycle management or is it PLM😉?

And most of these activities will result in lengthy blog posts, starting with:

“The week(end) after <<fill in the event>>”.

Here are the upcoming actions:

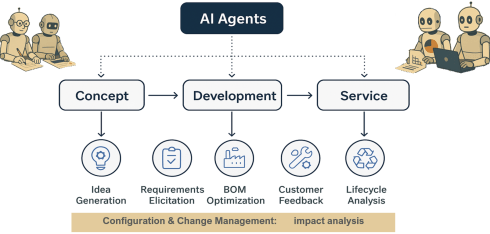

Click on each image if you want to see the details:

In this Future of PLM Podcast series, moderated by Michael Finocchiaro, we will continue the debate on how to position PLM (as a system or a strategy) and move away from an engineering framing. Personally, I never saw PLM as a system and started talking more and more about product lifecycle management (the strategy) versus PLM/PDM (the systems).

Note: the intention is to be interactive with the audience, so feel free to post questions/remarks in the comments, either upfront or during the event.

You might have seen in the past two weeks some posts and discussions I had with the Share PLM team about a unique offering we are preparing: the PLM Awareness program. From our field experience, PLM is too often treated as a technical issue, handled by a (too) small team.

We believe every PLM program should start by fostering awareness of what people can expect nowadays, given the technology, experiences, and possibilities available. If you want to work with motivated people, you have to involve them and give them all the proper understanding to start with.

Join us for the online event to understand the value and ask your questions. We are looking forward to your participation.

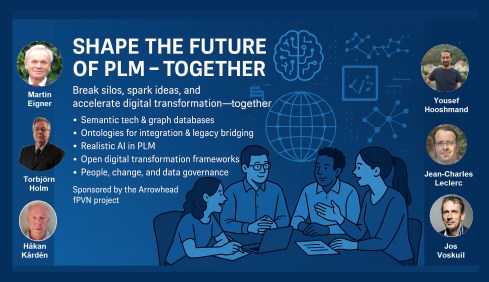

This is another event related to the future of PLM; however, this time it is an in-person workshop, where, inspired by four PLM thought leaders, we will discuss and work on a common understanding of what is required for a modern PLM framework. The workshop, sponsored by the Arrowhead fPVN project, will be held in Paris on November 4th, preceding the PLM Roadmap/PDT Europe conference.

We will not discuss the term PLM; we will discuss business drivers, supporting technologies and more. My role as a moderator of this event is to assist with the workshop, and I will share its findings with a broader audience that wasn’t able to attend.

Be ready to learn more in the near future!

If you have followed my blog posts for the past 10 years, you know this conference is always a place to get inspired, whether by leading companies across industries or by innovative and engaging new developments. This conference has always inspired me and helped me gain a better understanding of digital transformation in the PLM domain and how larger enterprises are addressing their challenges.

This time, I will conclude the conference with a lecture focusing on the challenging side of digital transformation and AI: we humans cannot transform ourselves, so we need help.

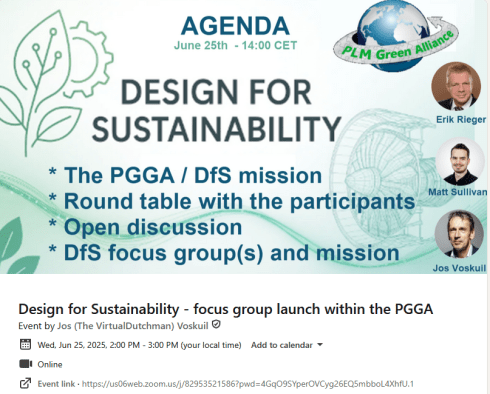

At the end of this year, we will “celebrate” our fifth anniversary of the PLM Green Global Alliance. When we started the PGGA in 2020, there was an initial focus on the impact of carbon emissions on the climate, and in the years that followed, climate disasters around the world caused serious damage to countries and people.

How could we, as a PLM community, support each other in developing and sharing best practices for innovative, lower-carbon products and processes?

In parallel, driven by regulations, there was also a need to improve current PLM practices to efficiently support ESG reporting, lifecycle analysis, and, soon, the Digital Product Passport. These regulations push for a modern data-driven infrastructure, and we discussed this with the major PLM vendors and related software or solution partners. See our YouTube channel @PLM_Green_Global_Alliance.

In this online Zoom event, we invite you to join us to discuss the topics mentioned in the announcement. Join us in this event and help us celebrate!

I am closing that week at the PTC/User Benelux event in Eindhoven, the Netherlands, with a keynote speech about digital transformation in the PLM domain. Eindhoven is the city where I grew up, completed my amateur soccer career, ran my first and only marathon, and started my career in PLM with SmarTeam. The city and location feel like home. I am looking forward to discussing and meeting with the PTC user community to learn how they experience product lifecycle management, or is it PLM😉?

With all these upcoming events, I did not have the time to focus on a new blog post; however, luckily, in the 10x PLM discussion started by Oleg Shilovitsky, there was an interesting comment from Rob Ferrone that triggered my mind. Quote:

The big breakthrough will come from 1. advances in human-machine interface and 2. less % of work executed by human in the loop. Copy/paste, typing, voice recognition are all significant limits right now. It’s like trying to empty a bucket of water through a drinking straw. When tech becomes more intelligent and proactive then we will see at least 10x.

This remark reminded me of one of my first blog posts in 2008, when I was trying to predict what PLM would look like in 2050. I thought it was a nice moment to read it (again). Enjoy!

PLM in 2050

As the year ends, I decided to take my crystal ball to see what would happen with PLM in the future. It felt like a virtual experience, and this is what I saw:

- Data is no longer replicated – every piece of information will have a Universal Unique ID, also known as a UUID. In 2020, this initiative became mature, thanks to the merger of some big PLM and ERP vendors, who brought this initiative to reality. This initiative dramatically reduced exchange costs in supply chains and led to bankruptcy for many companies that provided translation and exchange software.

- Companies store their data in ‘the cloud’ based on the concept outlined above. Only some old-fashioned companies still handle their own data storage and exchange, as they fear someone will access their data. Analysts compare this behavior with the situation in the year 1950, when people kept their money under a mattress, not trusting banks (and they were not always wrong).

- After 3D, a complete virtual world based on holography became the next step in product development and understanding. Thanks to the revolutionary quantum-3D technology, this concept could even be applied to life sciences. Before ordering a product, customers could first experience and describe their needs in a virtual environment.

- Finally, the cumbersome keyboard and mouse were replaced by voice and eye recognition. Initially, voice recognition and eye tracking were cumbersome. Information was captured by talking to the system and by recording eye movements during hologram analysis. This made the life of engineers so much easier, as while researching and talking, their knowledge was stored and tagged for reuse. No need for designers to send old-fashioned emails or type their design decisions for future reuse.

- Due to the hologram technology, the world became greener. People did not need to travel around the world, and the standard became virtual meetings with global teams (airlines discontinued business class). Even holidays can be experienced in the virtual world thanks to a Dutch initiative inspired by coffee. The whole IT infrastructure was powered by efficient solar energy, drastically reducing the amount of carbon dioxide.

- Then, with a shock, I noticed PLM no longer existed. Companies were focusing on their core business processes. Systems/terms like PLM, ERP, and CRM no longer existed. Some older people still remembered the battle between those systems over data ownership and the political discomfort this caused within companies.

- As people were working so efficiently, there was no need to work all week. There were community time slots when everyone was active, but 50 per cent of the time, people had time to recreate (to re-create or recreate was the question). Some older French and German designers remembered the days when they had only 10 weeks holiday per year, unimaginable nowadays.

As we still have more than 40 years to reach this future, I wish you all a successful and excellent 2009.

I am looking forward to being part of the green future next year.

In recent months, I’ve noticed a decline in momentum around sustainability discussions, both in my professional network and personal life. With current global crises—like the Middle East conflict and the erosion of democratic institutions—dominating our attention, long-term topics like sustainability seem to have taken a back seat.

But don’t stop reading yet—there is good news, though we’ll start with the bad.

The Convenient Truth

Human behavior is primarily emotional, a valuable lesson in the PLM domain that was discussed during the Share PLM summit. As Share PLM notes in their change management approach, we rely on our “gator brain”, our limbic system – call it System 1 and System 2 or Thinking Fast and Slow. Faced with uncomfortable truths, we often seek out comforting alternatives.

The film Don’t Look Up humorously captures this tendency. It mirrors real-life responses to climate change: “CO₂ levels were high before, so it’s nothing new.” Yet the data tells a different story. For 800,000 years, CO₂ ranged between 170–300 ppm. Today’s level is ~420 ppm—an unprecedented spike in just 150 years as illustrated below.

Frustratingly, some of this scientific data is no longer prominently published. The narrative has become inconvenient, particularly for the fossil fuel industry.

Persistent Myths

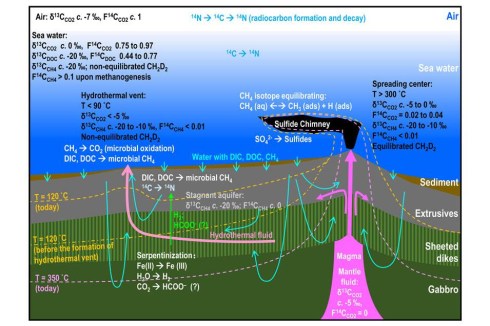

Then there is the pseudo-scientific claim that fossil fuels are infinite because the Earth’s core continually generates them. The Abiogenic Petroleum Origin theory is a fringe theory, sometimes revived from old Soviet science, and lacks credible evidence. See image below

Oil remains a finite, biologically sourced resource. Yet such myths persist, often supported by overly complex jargon designed to impress rather than inform.

The Dissonance of Daily Life

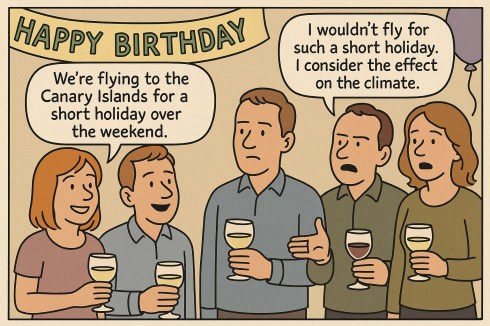

At a recent birthday party, a young couple casually mentioned flying to the Canary Islands for a weekend. When someone objected on climate grounds, they simply replied, “But the climate is so nice there!”

“Great climate on the Canary Islands”

This reflects a common divide among young people—some are deeply concerned about the climate, while many prioritize enjoying life now. And that’s understandable. The sustainability transition is hard because it challenges our comfort, habits, and current economic models.

The Cost of Transition

Companies now face regulatory pressure such as CSRD (Corporate Sustainability Reporting Directive), DPP (Digital Product Passport), ESG, and more, especially when selling in or to the European market. These shifts aren’t usually driven by passion but by obligation. Transitioning to sustainable business models comes at a cost—learning curves and overheads that don’t align with most corporations’ short-term, profit-driven strategies.

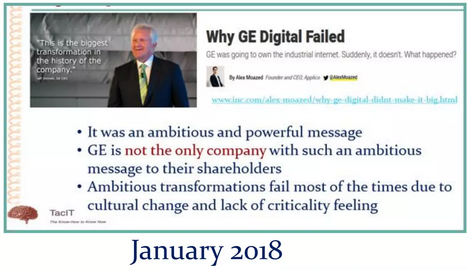

However, we have also seen how long-term visions can be crushed by shareholder demands:

- Xerox (1970s–1980s) pioneered GUI, the mouse, and Ethernet, but failed to commercialize them. Apple and Microsoft reaped the benefits instead.

- General Electric under Jeff Immelt tried to pivot to renewables and tech-driven industries. Shareholders, frustrated by slow returns, dismantled many initiatives.

- Despite ambitious sustainability goals, Siemens faced similar investor pressure, leading to spin-offs like Siemens Energy and Gamesa.

The lesson?

Transforming a business sustainably requires vision, compelling leadership, and patience—qualities often at odds with quarterly profit expectations. I explored these tensions again in my presentation at the PLM Roadmap/PDT Europe 2024 conference, read more here: Model-Based: The Digital Twin.

I noticed discomfort in smaller, closed-company sessions, where some attendees said, “We’re far from that vision.”

My response: “That’s okay. Sustainability is a generational journey, but it must start now”.

Signs of Hope

Now for the good news. In our recent PGGA (PLM Green Global Alliance) meeting, we asked: “Are we tired?” Surprisingly, the mood was optimistic.

Yes, some companies are downscaling their green initiatives or engaging in superficial greenwashing. But other developments give hope:

- China is now the global leader in clean energy investments, responsible for ~37% of the world’s total. In 2023 alone, it installed over 216 GW of solar PV—more than the rest of the world combined—and leads in wind power too. With over 1,400 GW of renewable capacity, China demonstrates that a centralized strategy can overcome investor hesitation.

- Long-term-focused companies like Iberdrola (Spain), Ørsted (Denmark), Tesla (US), BYD, and CATL (China) continue to invest heavily in renewables, EVs, and batteries, all critical to our shared future.

A Call to Engineers: Design for Sustainability

We may be small at the PLM Green Global Alliance, but we’re committed to educating and supporting the Product Lifecycle Management (PLM) community on sustainability.

That’s why I’m excited to announce the launch of our Design for Sustainability initiative on June 25th.

Led by Eric Rieger and Matthew Sullivan, this initiative will bring together engineers to collaborate and explore sustainable design practices. Whether or not you can attend live, we encourage everyone to engage with the recording afterward.

Conclusion

Sustainability might not dominate headlines today. In fact, there’s a rising tide of misinformation, offering people a “convenient truth” that avoids hard choices. But our work remains urgent. Building a livable planet for future generations requires long-term vision and commitment, even when it is difficult or unpopular.

So, are you tired—or ready to shape the future?

Wow, what a tremendous amount of impressions to digest when traveling back from Jerez de la Frontera, where Share PLM held its first PLM conference. You might have seen the energy from the messages on LinkedIn, as this conference had a new and unique daring starting point: Starting from human-led transformations.

Look what Jens Chemnitz, Linda Kangastie, Martin Eigner, Jakob Äsell or Oleg Shilovitsky had to say.

For over twenty years, I have attended all kinds of PLM events, either vendor-neutral or from specific vendors. None of these conferences created so many connections between the attendees and the human side of PLM implementation.

We can present perfect PLM concepts, architectures and methodologies, but the crucial success factor is the people—they can make or break a transformative project.

Here are some of the first highlights for those who missed the event and feel sorry they missed the vibe. I might follow up in a second post with more details. And sorry for the reduced quality—I am still enjoying Spain and refuse to use AI to generate this human-centric content.

The scenery

Approximately 75 people attended the event in a historic bodega, Bodegas Fundador, in the historic center of Jerez. It is not a typical place for PLM experts, but an excellent place for humans with an Andalusian atmosphere. It was great to see companies like Razorleaf, Technia, Aras, XPLM and QCM sponsor the event, confirming their commitment. You cannot start a conference from scratch alone.

The next great differentiator was the diversity of the audience. Almost 50 % of the attendees were women, all working on the human side of PLM.

Another brilliant idea was to have the summit breakfast in the back of the stage area, so before the conference days started, you could mingle and mix with the people instead of having a lonely breakfast in your hotel.

Now, let’s go into some of the highlights; there were more.

A warm welcome from Share PLM

Beatriz Gonzalez, CEO and co-founder of Share PLM, kicked off the conference, explaining the importance of human-led transformations and organizational change management and sharing some of their best practices that have led to success for their customers.

You might have seen this famous image in the past, explaining why you must address people’s emotions.

Working with Design Sprints?

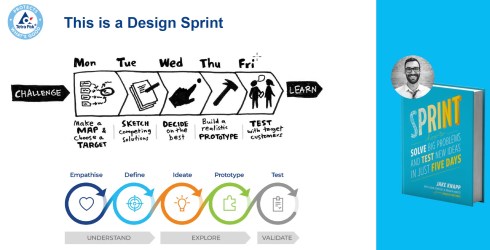

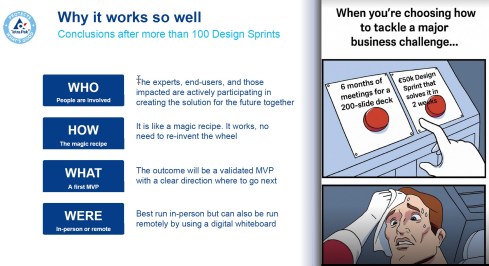

Have you ever heard of design sprints as a methodology for problem-solving within your company? If not, you should read the book by Jake Knapp, creator of the Design Sprint.

Andrea Järvén, program manager at Tetra Pak and closely working with the PLM team, recommended this to us. She explained how Tetra Pak successfully used design sprints to implement changes. You would use design sprints when development cycles run too long, teams lose enthusiasm and focus, work is fragmented, and the challenges are too complex.

Instead of a big waterfall project, you run many small design sprints with the relevant stakeholders per sprint, coming step by step closer to the desired outcome.

The sprints are short – five days of the full commitment of a team targeting a business challenge, where every day has a dedicated goal, as you can see from the image above.

It was an eye-opener, and I am eager to learn where this methodology can be used in the PLM projects I contribute to.

Unlocking Success: Building a Resilient Team for Your PLM Journey

Johan Mikkelä from FLSmidth shared a great story about the skills, capacities, and mindset needed for a PLM transformational project.

Johan brought up several topics to consider when implementing a PLM project based on his experiences.

One statement that resonated well with the audience of this conference was:

The more diversified your team is, the faster you can adapt to changes.

He mentioned that PLM projects feel like a marathon, and I believe it is true when you talk about a single project.

However, instead of a marathon, we should approach PLM activities as a never-ending but pleasant journey that is not about reaching a finish but about enjoying each step, observing, and changing direction a little when needed.

Strategic Shift of Focus – a human-centric perspective

Besides great storytelling, Antonio Casaschi‘s PLM learning journey at Assa Abloy was a perfect example of why PLM theory and reality often do not match. With much energy and experience, he came to Assa Abloy to work on the PLM strategy.

He started his PLM strategies top-down, trying to rationalize the PLM infrastructure within Assa Abloy with a historically bad perception of a big Teamcenter implementation from the past. Antonio and his team were the enemies disrupting the day-to-day life of the 200+ companies under the umbrella of Assa Abloy.

A logical lesson learned here is that aiming top-down for a common PLM strategy is impossible in a company that acquires another six new companies per quarter.

His final strategy is a bottom-up strategy, where he and the team listen to and work with the end-users in the native environments. They have become trusted advisors now as they have broad PLM experience but focus on current user pains. With the proper interaction, his team of trusted advisors can help each of the individual companies move towards a more efficient and future-focused infrastructure at their own pace.

The great lessons I learned from Antonio are:

- If your plan does not work out, be open to failure. Learn from your failures and aim for the next success.

- Human relations—I trust you, understand you, and know what to do—are crucial in such a complex company landscape.

Navigating Change: Lessons from My First Year as a Program Manager

Linda Kangastie from Valmet Technologies Oy in Finland shared her experiences within the company, from being a PLM key user to now being a PLM program manager for the PAP Digi Roadmap, containing PLM, sales tools, installed base, digitalization, process harmonization and change management, business transformation—a considerable scope.

The recommendations she gave should be a checklist for most PLM projects – if you are missing one of them, ask yourself what you are missing:

- THE ROADMAP and THE BIG PICTURE – is your project supported by a vision and a related roadmap of milestones to achieve?

- Biggest Buy-in comes with money! – The importance of a proper business case describing the value of the PLM activities and working with use cases demonstrating the value.

- Identify the correct people in the organization – the people that help you win, find sparring partners in your organization and make sure you have a common language.

- Repetition – taking time to educate, learn new concepts and have informal discussions with people – is a continuous process.

As you can see, there is no discussion about technology – it is about business and people.

To conclude, other speakers mentioned this topic too; it is about being honest and increasing trust.

The Future Is Human: Leading with Soul in a World of AI

Helena Gutierrez‘s keynote on day two was the one that touched me the most, as she shared her optimistic vision of a future where AI will allow us to be so much more efficient in using our time, combined, of course, with new ways of working and behaviors.

As an example, she demonstrated how she had taken an academic paper from Martin Eigner and, using an AI tool, transformed the German paper into an English learning course, including quizzes. And all of this in half a day, compared to the 3 to 4 days it would have taken the Share PLM team.

With the time we save for non-value-added work, we should not remain addicted to passive entertainment behind a flat screen. There is the opportunity to restore human and social interactions in person in areas and places where we want to satisfy our human curiosity.

I agree with her optimism. During Corona and the introduction of Teams and Zoom sessions, I saw people become resources who popped up at designated times behind a flat screen.

The real human world was gone, with people talking in the corridors at the coffee machine. These are places where social interactions and innovation happen. Coffee stimulates our human brain; we are social beings, not resources.

Death on the Shop Floor: A PLM Murder Mystery

Rob Ferrone‘s theatre play was an original way of explaining and showing that everyone in the company does their best. The product was found dead, and Andrea Järvén alias Angie Neering, Oleg Shilovitsky alias Per Chasing, Patrick Willemsen alias Manny Facturing, Linda Kangastie alias Gannt Chartman and Antonio Casaschi alias Archie Tect were each declared guilty or not guilty by the public jury, mainly based on the audience’s prejudices.

You can watch the play here, thanks to Michael Finocchiaro:

According to Rob, what is absolutely needed to solve the problems that allow products to die is the missing discipline of product data people, who care for the flow, speed, and quality of product data. Rob gave some examples from his experience with Quick Release projects he had worked on.

My learnings from this presentation are that you can make PLM stories fun and, even more important, that instead of improving data quality by pushing each individual to be more accurate (it seems easy to push, but we know the resulting quality), you should implement a workforce with this responsibility. The ROI for these people is clear.

Note: I believe that once companies become more mature in working with data-driven tools and processes, AI will slowly take over the role of these product data people.

Conclusion

I greatly respect Helena Gutierrez and the Share PLM team. I appreciate how they demonstrated that organizing a human-centric PLM summit brings much more excitement than traditional technology- or industry-focused PLM conferences. Starting from the human side of the transformation, the audience was much more diverse and connected.

Closing the conference with a fantastic flamenco performance was perhaps another excellent demonstration of the human-centric approach. The raw performance, a combination of dance, music, and passion, went straight into the heart of the audience – this is how PLM should be (not every day).

There is so much more to share. Meanwhile, you can read more highlights through Michael Finocchiaro’s overview channel here.

Two weeks ago, I wrote a generic post related to Systems Thinking, in my opinion, a must-have skill for the 21st century (and beyond). Have a look at the post on LinkedIn; the discussion below it is particularly interesting: Systems Thinking: a must-have skill for the 21st century.

I liked Remy Fannader’s remark that thinking about complexity was not something new.

This remark is understandable from his personal context. Many people enjoy thinking – it was a respected 20th-century skill.

However, I believe, as Daniel Kahneman describes in his famous book Thinking, Fast and Slow, that our brain is trying to avoid thinking.

This is because thinking consumes energy, the energy the body wants to save in the case of an emergency.

So let’s do a simple test (coming from Daniel):

A bat and a ball together cost $1.10 – the bat costs one dollar more than the ball. So how much does the ball cost?

Look at the answer at the bottom of this post. If you have it wrong, you are a fast thinker. And this brings me to my next point. Our brain does not want to think deeply; we want fast and simple solutions. This is a challenge in a complex society as now we hear real-time information coming from all around the world. What is true and what is fake is hard to judge.

However, according to Kahneman, we do not want to waste energy on thinking. We create or adhere to simple solutions allowing our brains to feel relaxed.

This human behavior has always been exploited by populists and dictators: avoid complexity because, in this way, you lose people. Yuval Harari builds upon this with his claim that to align many people, you need a myth. I wrote about the need for myths in the PLM space a few times, e.g., PLM as a myth? and The myth perception

And this is where my second thoughts related to Systems Thinking started. Is the majority of people able and willing to digest complex problems?

My doubts grew bigger when I had several discussions about fighting climate change and sustainability.

Both Brains required

By coincidence, I bumped into this interesting article: Market-led Sustainability is a ‘Fix that Fails’…

I provided a link to the post indirectly through LinkedIn. If you are a LinkedIn PLM Global Green Alliance member, you can see below the article an interesting analysis related to market-led sustainability, system thinking and economics.

Join the PLM Global Green Alliance group to be part of the full discussion; otherwise, I recommend you visit Both Brains Required, where you can find the source article and other related content.

It is a great article with great images illustrating the need for systems thinking and sustainability. All information is there to help you realize that sustainability is not just a left-brain exercise.

The left brain is supposed to be logical and analytical. That’s systems thinking, you might say quickly. However, the other part of our brain is about our human behavior, and this side is mostly overlooked. My favorite quote from the article:

Voluntary Market-Led activities are not so much a solution to the sustainability crisis as a symptom of more profoundly unsustainable foundations of human behavior.

The article triggered my second thoughts related to systems thinking. Behavioral change is not part of systems thinking. It is another dimension that is harder to address, and even harder to focus on sustainability.

The LinkedIn discussion below the article Market-led Sustainability is a ‘Fix that Fails’… is a great example of the talks we would like to have in our PLM Global Green Alliance group. Nina Dar, Patrick Hillberg and Richard McFall brought in several points worth discussing. Too many to discuss them all here – let’s take two fundamental issues:

1. More than economics

An interesting viewpoint in this discussion was the relation to economics. We don’t believe that economic growth is the main point to measure. Even a statement like: “Sustainable businesses will be more profitable than traditional ones” is misleading when companies are measured by shareholder value or EBIT (Earnings Before Interest and Taxes). We briefly touched on Kate Raworth’s doughnut economics.

This HBR article mentioned in the discussion: Business Schools Must Do More to Address the Climate Crisis also shows it is not just about systems thinking.

We have seen this in the Apparel industry with the horrible collapse of a factory in Bangladesh (2013). Still, the inhumane accidents happen in southeast Asia. I like to quote Chris Calverley in his LinkedIn article: Making ethical apparel supply chains achievable on a global scale.

No one gets into business because they want to behave unethically. On the contrary, a lack of ethics is usually driven by a common desire to operate more efficiently and increase profit margins.

In my last post, I shared a similar example from an automotive tier 2 supplier. Unfortunately, suppliers are not measured or rewarded for sustainability efforts; only efficiency and costs are relevant.

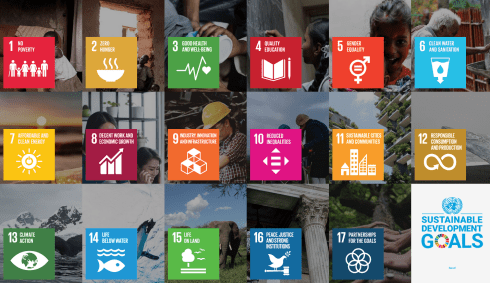

The seventeen Sustainable Development Goals (SDGs), as defined by the United Nations, are the best guidance for sustainable drivers beyond money. Supporting the SDGs enforces systems thinking when developing a part, a product, or a solution. Many other stakeholders need to be taken care of, at least if you truly support sustainability as a company.

2. The downside of social media

The LinkedIn discussion thread related to Market-led Sustainability is a ‘Fix that Fails’… shows that LinkedIn, like other social media, is not really interested in supporting in-depth discussions – try to navigate what has been said in chronological order. With Patrick, Nina and Richard, we agreed to organize a follow-up discussion in our PLM Global Green Alliance Group.

And although we are happy with social media as it allows each of us to reach a global audience, there seems to be a worrying counterproductive impact, as described in the book Stolen Focus. A quote:

All over the world, our ability to pay attention is collapsing. In the US, college students now focus on one task for only 65 seconds, and office workers, on average, manage only three minutes

This is worrying, returning to Remy Fannader’s remark that thinking about complexity was not something new. Indeed, it is not new. The main difference is that our society is changing towards thinking too fast, not rewarding systems thinking.

Even scarier, if you have time, read this article from The Atlantic: about the impact of social media on the US Society. It is about trust in science and data. Are we facing the new (Trump) Tower of Babel in our modern society? As the writers state: Babel is a metaphor for what some forms of social media have done to nearly all of the groups and institutions most important to the country’s future—and to us as a people.

Congratulations

The fact that you reached this part of the post means your attention span has been larger than 3 minutes, showing there is hope for people like you and me. As an experiment to discover how many people read the post till here, please answer with the “support” icon if you have reached this part of the post.

I am curious to learn how many of us who saw the post came here.

Conclusion

Systems Thinking is a must-have skill for the 21st century. Many of us working in the PLM domain focus on providing support for systems thinking, particularly Life Cycle Assessment capabilities. However, the discussion with Patrick Hillberg, Nina Dar and Richard McFall made me realize there is more: economics and human behavior. For example, can we change our economic models, measuring companies not only by the financial profit they deliver? What do?

Answering this type of question will be the extended mission for PLM consultants of the future – are you ready?

The answer to the question with the ball and the bat:

A fast answer would say the price of the ball is 10 cents. However, this would make the price of the bat $1.10, giving a total cost of $1.20. So the right answer should be 5 cents. To be honest, I got tricked the first time too. Never too late to confirm you make mistakes, as only people who do not do anything make no mistakes.
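For readers who want to check the arithmetic, here is a short worked version. The symbol $b$ is just a label introduced here for the price of the ball in dollars; it does not come from Kahneman's book.

```latex
\begin{align*}
b + (b + 1.00) &= 1.10 && \text{ball plus bat, where the bat costs one dollar more} \\
2b &= 0.10 \\
b &= 0.05
\end{align*}
```

So the ball costs 5 cents and the bat $1.05. The fast answer fails the same check: with a 10-cent ball, the total would be 0.10 + 1.10 = 1.20 dollars instead of 1.10.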

After two quiet weeks of spending time with my family in slow motion, it is time to start the year.

First of all, I wish you all a happy, healthy, and positive outcome for 2022, as we need energy and positivism together. Then, of course, a good start is always cleaning up your desk and only leaving the relevant things for work on the desk.

Still, I have some books at arm’s length, either physical or on my e-reader, that I want to share with you – first, the non-obvious ones:

The Innovator’s Dilemma

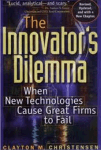

A must-read book by Clayton Christensen, explaining how new technologies can overthrow established big companies within a very short period. The term Disruptive Innovation comes up here. Companies need to remain aware of what is happening outside and be ready to adapt their business. There are many examples, even recently, where big established brands are gone or diminished in a short period.

In his book, he wrote about DEC (Digital Equipment Corporation), the market leader in minicomputers, not having seen the threat of the PC. Or later Blockbuster (from video rental to streaming), Kodak (from analog photography to digital imaging) or, as a double example, NOKIA (from paper to market leader in mobile phones, killed by the smartphone).

The book always inspired me to be alert for new technologies, however simple they might look, as simplicity is the answer in the end. I wrote about it in 2012: The Innovator’s Dilemma and PLM, where I believed cloud, search-based applications and Facebook-like environments could disrupt the PLM world. None of this happened as a disruption; these technologies are now, most of the time, integrated by the major vendors whose businesses are not really disrupted. Newcomers still have a hard time conquering market space.

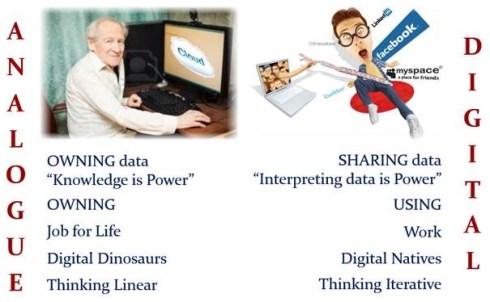

In 2015, I wrote again about this book: The Innovator’s dilemma and Generation change – image above. At that time, I understood that disruption would not happen in the PLM domain. Instead, I predicted there would be a more evolutionary process, which I would later call: From Coordinated to Connected.

The future ways of working address the new skills needed for the future. You need to become a digital native, as COVID-19 pushed many organizations to do so. But digital native alone does not bring success. We need new ways of working which are more difficult to implement.

Sapiens

The book Sapiens by Yuval Harari made me realize the importance of storytelling in the domain of PLM and business transformation. In short, Yuval Harari explains why the human race became so dominant because we were able to align large groups around an abstract theme. The abstract theme can be related to religion, the power of a race or nation, the value of money, or even a brand’s image.

The myth (read: simplified and abstract story) hides complexity and inconsistencies. It allows everyone to get motivated to work towards one common goal. As Yuval says: “Fiction is far more powerful because reality is too complex”.

Too often, I have seen well-analyzed PLM projects that were “killed” by management because they were considered too complex. I wrote about this in 2019 in PLM – measurable or a myth?, claiming that the real benefits of PLM are hard to predict and that we should not look at PLM in isolation.

My 2020 follow-up post, The PLM ROI Myth, alludes to that topic. However, even if you have a sound business case at the management level, the myth might still be decisive in justifying the investment.

That’s why PLM vendors are always working on their myths: the most cost-effective solution, the most visionary solution, the solution most used by your peers and many other messages to influence your emotions, not your factual thinking. So just read the myths on their websites.

If you have no time to read the book, look at the above 2015 TED talk to grasp the concept and use it with a PLM-twisted mind.

Re-use your CAD

In 2015, I read this book during a summer holiday (meanwhile, there is a second edition). Although it was not a PLM book, it helped me to understand the transition effort from a classical document-driven enterprise towards a model-based enterprise.

Jennifer Herron‘s book helps companies to understand how to break down the (information) wall between engineering and manufacturing.

At that time, I contacted Jennifer to see if others like her and Action Engineering could explain Model-Based Definition comprehensively, for example, in Europe, with no success.

As the Model-Based Enterprise becomes more and more the apparent future for companies that want to be competitive or benefit from the various Digital Twin concepts, I contacted Jennifer again last year for my post: PLM and Model-Based Definition.

As you can read, the world has improved, there is a new version of the book, and there is more and more information to share about the benefits of a model-based approach.

I am still referencing Action Engineering and their OSCAR learning environment for my customers. Unfortunately, many small and medium enterprises do not have the resources and skills to implement a model-based environment.

Instead, these companies stay on their customers’ lowest common denominator: the 2D Drawing. For me, a model-based definition is one of the first steps to master if your company wants to provide digital continuity of design and engineering information towards manufacturing and operations. Digital twins do not run on documents; they require model-based environments.

The book is still on my desk, and all the time, I am working on finding the best PLM practices related to a Model-Based enterprise.

It is a learning journey to deal with a data-driven, model-based environment, not only for PLM but also for CM experts, as you might have seen from my recent dialogue with CM experts: The future of Configuration Management.

Products2019

This book was an interesting novelty published by John Stark in 2020. John is known for his academic and educational books related to PLM. However, during the early days of the COVID pandemic, John decided to write a novel. The novel describes the learning journey of Jane from Somerset, who, as part of her MBA studies, is performing a research project for the Josef Mayer Maschinenfabrik. Her mission is to report to the newly appointed CEO what happens with the company’s products all along the lifecycle.

Although it is not directly a PLM book, the book illustrates the complexity of PLM. It is about people and culture, and about many different processes that are often disconnected. Everyone puts their own discipline at the center of importance. If you believe PLM is all about the best technology only, read this book and learn how many other aspects are also relevant.

If you want to read more details, I wrote about the book in 2020: Products2019 – a must-read if you are new to PLM. An important point to pick up from this book is that it is not about PLM but about doing business.

PLM is not a magical product. Instead, it is a strategy to support and improve your business.

System Lifecycle Management

Another book, published a little later and motivated by the extra time we all got during the COVID-19 pandemic, was Martin Eigner‘s book System Lifecycle Management.

A 281-page journey from the early days of data management towards what Martin calls System Lifecycle Management (SysLM). He was one of the first to talk about System Lifecycle Management instead of PLM.

I always enjoyed Martin’s presentations at various PLM conferences where we met. In many ways, we share similar ideas. However, during his time as a professor at the University of Kaiserslautern (2003-2017), he explored new concepts with his students.

I briefly mentioned the book in my series The road to model-based and connected PLM (Part 5) when discussing SLM or SysLM. His academic research and analysis make this book very valuable. It takes you in a very structured way through the time when mechatronics became important and then the time when systems (hardware and software) became important.

We discussed in 2015 the applicability of the bimodal approach for PLM. However, as many enterprises are locked in their highly customized PDM/PLM environments, their legacy blocks the introduction of modern model-based and connected approaches.

Where John Stark’s book might miss the PLM details, Martin’s book brings you everything in detail and with all its references.

It is an interesting book if you want to catch up with what has happened in the past 20 years.

More Books …..

More books on my desk have helped me understand the past or shape the future. As this is a blog post and I am reaching my 1500 words, I will not discuss more books this time.

Still books worthwhile to read – click on their images to learn more:

I discussed this book twice last year: an introduction in PLM and Modularity, and a discussion with the authors and some readers of the book in The Modular Way – a follow-up discussion.

A book I read this summer contributed to a better understanding of sustainability. I mentioned this book in my presentation for the Swedish CATIA Forum in October last year – slide 29 of

System Thinking becomes crucial for a sustainable future, as I addressed in my post PLM and Sustainability.

Sustainability is my area of interest at the PLM Green Global Alliance, an international community of professionals working with Product Lifecycle Management (PLM) enabling technologies and collaborating for a more sustainable decarbonized circular economy.

Conclusion

There is a lot to learn. Tell us something about your PLM bookshelf – which books would you recommend? In the upcoming posts, I will further focus on PLM education. So stay tuned and keep on learning.

In my last post in this series, The road to model-based and connected PLM, I mentioned that perhaps it is time to talk about SLM instead of PLM when discussing popular TLA’s for our domain of expertise. There were not so many encouraging statements for SLM so far.

SLM could mean for me, Solution Lifecycle Management, considering that the company’s offering more and more is a mix of products and services. Or SLM could mean System Lifecycle Management, in that case pushing the idea that more and more products are interacting with the outside world and therefore could be considered systems. Products are (almost) dead.

In addition, I mentioned that the typical product lifecycle and related configuration management concepts need to change, as in the SLM domain there is hardware and software with different lifecycles and change processes.

It is a topic I want to explore further. I am curious to learn more from Martijn Dullaart, who will be lecturing at the PLM Roadmap and PDT 2021 fall conference in November. I hope my expectations are not too high, knowing it is a topic of interest for Martijn. Feel free to join this discussion.

In this post, it is time to follow up on my third statement related to what data-driven implies:

Data-driven means that we need to manage data in a much more granular manner. We have to look differently at data ownership. It becomes more about data accountability per role, as the data can be used and consumed throughout the product lifecycle.

On this topic, I have a list of points to consider; let’s go through them.

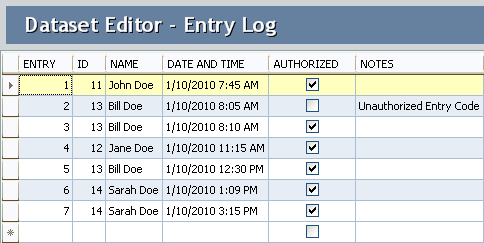

The dataset

In this post, I will often use the term dataset (I understand that writing it as data set is also allowed).

A dataset means a predefined number of attributes and values that belong logically to each other. Datasets should be defined based on the purpose and, if possible, designated for a single goal. In this way, they can be stored in a database.

Combined with other datasets, a combination can result in relevant business information. Note a dataset is not only transactional data; a dataset could also describe geometry.

Identify the dataset

In the document-based world, a lot of information could be stored in a single file. In a data-driven world, we should define a dataset that contains a specific piece of information, logically belonging together. If we are more precise, a part would have various related datasets that make up the definition of a part. These definitions could be:

- Core identification attributes like ID, Name, Type and Status

- The Type could define a set of linked information. For example, a valve would have different characteristics than a resistor. Through classification, we can link datasets to the core definition of a part.

- The part can have engineering-specific data (CAD and metadata), manufacturing-specific data, supplier-specific data, and service-specific data. Each of these datasets needs to be defined as a unique element in a data-driven environment.

- CAD is a particular case, as most current CAD systems don’t treat geometry as a single dataset. In a file-based world, many other datasets are stored in the file (e.g., engineering or manufacturing details). In a data-driven environment, we want the CAD definition to be treated like a dataset. Dassault Systèmes with their CATIA V6 and 3DEXPERIENCE platform or PTC with OnShape are examples of this approach. Having CAD as separate datasets makes sharing and collaboration so much easier, as we can see from these solutions. The concept of CAD stored in a database is not new, and this approach has been used in various disciplines. Mechanical CAD was always a challenge.
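To make the list above a bit more tangible, here is a minimal Python sketch of a part with core identification attributes and separately managed datasets. All names (`Dataset`, `Part`, `EXPECTED_BY_TYPE`, the purposes and attribute values) are invented for illustration and do not come from any PLM system or industry standard.

```python
from dataclasses import dataclass, field


@dataclass
class Dataset:
    """A small set of attributes that logically belong together."""
    purpose: str                      # hypothetical label, e.g. "engineering"
    attributes: dict = field(default_factory=dict)


@dataclass
class Part:
    """Core identification attributes plus separately managed datasets."""
    part_id: str
    name: str
    part_type: str
    status: str
    datasets: dict = field(default_factory=dict)   # purpose -> Dataset

    def attach(self, dataset: Dataset) -> None:
        # One dataset per purpose keeps each dataset designated for a single goal.
        if dataset.purpose in self.datasets:
            raise ValueError(f"dataset for {dataset.purpose!r} already attached")
        self.datasets[dataset.purpose] = dataset


# Classification: the part type decides which datasets are expected.
# These mappings are assumptions, not taken from a real classification.
EXPECTED_BY_TYPE = {
    "valve": {"engineering", "flow_characteristics"},
    "resistor": {"engineering", "electrical_characteristics"},
}


def missing_datasets(part: Part) -> set:
    """Return the purposes the part's classification expects but lacks."""
    return EXPECTED_BY_TYPE.get(part.part_type, set()) - set(part.datasets)


valve = Part("P-1001", "Ball valve", "valve", "In Work")
valve.attach(Dataset("engineering", {"material": "brass", "mass_kg": 0.4}))
print(missing_datasets(valve))
```

In this sketch the valve still lacks its type-specific dataset, which is the kind of completeness check that classification makes possible.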

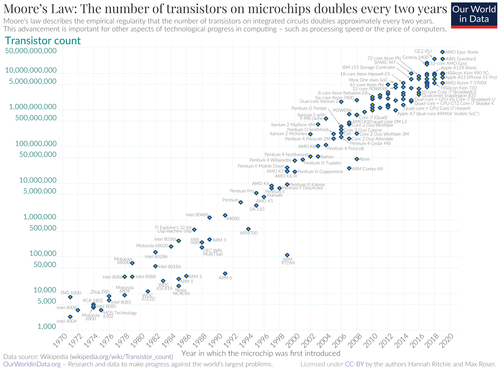

Thanks to Moore’s Law (approximately every two years, processor power doubles – click on the image for the details) and higher network connection speeds, it starts to make sense to have mechanical CAD also stored in a database instead of a file.

An important point to consider is a kind of standardization of datasets. In theory, there should be a kind of minimum agreed collection of datasets. Industry standards provide these collections in their dictionary. Whenever you optimize your data model for a connected enterprise, make sure you look first into the standards that apply to your industry.

They might not be perfect or complete, but inventing your own new standard is a guarantee for legacy issues in the future. This remark is also valid for the software vendors in this domain. A proprietary data model might give you a competitive advantage.

Still, in the long term, there is always the need to connect with outside stakeholders.

Identify the RACI

To ensure a dataset is complete and well maintained, the concept of RACI could be used. RACI is the abbreviation for Responsible, Accountable, Consulted, and Informed, and it is a simplification of the RASCI model; see also a responsibility assignment matrix.

In a data-driven environment, there is no data ownership anymore like you have for documents. The main reason that data ownership can no longer be used is that datasets can be consumed by anyone in the ecosystem. No longer only your department or the manufacturing or service department.

Datasets in a data-driven environment bring value when connected with other datasets in applications or dashboards.

A dataset describing the specification attributes of a part could be used in a spare part app and a service app. Of course, the dataset will be used in a different context – still, we need to ensure we can trust the data.

Therefore, each identified dataset should be governed by a kind of RACI concept. The RACI concept is a way to break the silos in an organization.
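As an illustration only, a RACI assignment per dataset could be sketched as below. The role names and the check itself are assumptions: the rule of exactly one accountable role per dataset is a common RACI convention, not something prescribed in this post.

```python
from dataclasses import dataclass, field


@dataclass
class Raci:
    """Roles per RACI letter for one dataset (role names are hypothetical)."""
    responsible: set = field(default_factory=set)
    accountable: set = field(default_factory=set)
    consulted: set = field(default_factory=set)
    informed: set = field(default_factory=set)


def raci_issues(dataset_name: str, raci: Raci) -> list:
    """List governance gaps for a dataset, assuming one accountable role."""
    issues = []
    if len(raci.accountable) != 1:
        issues.append(f"{dataset_name}: needs exactly one accountable role")
    if not raci.responsible:
        issues.append(f"{dataset_name}: needs at least one responsible role")
    return issues


raci_by_dataset = {
    "part_specification": Raci(
        responsible={"design engineer"},
        accountable={"engineering lead"},
        consulted={"service engineer"},
        informed={"spare part app", "service app"},
    ),
    # Deliberately incomplete to show what the check reports.
    "supplier_data": Raci(responsible={"buyer"}),
}

for name, raci in raci_by_dataset.items():
    for issue in raci_issues(name, raci):
        print(issue)
```

The point of the sketch is that accountability is recorded per dataset and per role, not per department or per document.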

Identify Inside / outside

There is a lot of fear that a connected, data-driven environment will expose Intellectual Property (IP). It came up in recent discussions. If you like storytelling and technology, read my old SmarTeam colleague Alex Bruskin’s post: The Bilbo Baggins Threat to PLM Assets. Alex has written some “poetry” with a deep technical message behind it.

It is true that if your dataset is too big, you have the challenge of exposing IP when connecting this dataset with others. Therefore, when building a data model, you should make it possible to have datasets purely for internal usage and datasets for sharing.

When you use the concept of RACI, the difference should be defined by the I (Informed) – is it PLM data or PIM data, for example?
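A minimal sketch of this idea, assuming each dataset carries an internal-only flag and a list of informed consumers. The class, field names, and example values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GovernedDataset:
    name: str
    internal_only: bool      # True: never leaves the company (IP-sensitive)
    informed: frozenset      # consumers listed under the "I" of RACI


def shareable_with(datasets, consumer):
    """Names of datasets a consumer may receive: not internal and informed."""
    return [
        d.name
        for d in datasets
        if not d.internal_only and consumer in d.informed
    ]


datasets = [
    GovernedDataset("design_rationale", True, frozenset({"engineering"})),
    GovernedDataset("part_specification", False, frozenset({"service app", "web shop"})),
    GovernedDataset("marketing_description", False, frozenset({"web shop"})),
]

print(shareable_with(datasets, "web shop"))
print(shareable_with(datasets, "service app"))
```

Keeping the IP-sensitive attributes in their own small dataset is what makes such a filter possible; with one large dataset, the only choice would be to share everything or nothing.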

Tracking relations

Suppose we follow up on the concept of datasets. In that case, it becomes clear that relations between the datasets are as crucial as the datasets themselves. In traditional PLM applications, these relations are often predefined as part of the core data model.

For example, the EBOM parts have relationships between themselves and specification data – see image.

The MBOM parts have links with the supplier data or the manufacturing process.

The prepared relations in a PLM system allow people to implement the system relatively quickly to map their approaches to this taxonomy.

However, traditional PLM systems are based on a document-based (or file-based) taxonomy combined with related metadata. In a model-based and connected environment, we have to get rid of the document-based type of data.

Therefore, the datasets will be more granular, and there is a need to manage exponentially more relations between datasets.

This is why you see the graph database coming up as a needed infrastructure for modern connected applications. If you haven’t heard of a graph database yet, you are probably far from technology hypes. To understand the principles of a graph database you can read this article from neo4j: Graph Databases for Beginners: Why graph technology is the future
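To give a feeling for why a graph fits, here is a small sketch in plain Python (not a graph database and not the neo4j API) that stores typed relations between datasets and walks them. The node names and relation types such as `REALIZED_BY` are invented for illustration.

```python
from collections import defaultdict, deque


class DatasetGraph:
    """Directed graph with typed relations between dataset identifiers."""

    def __init__(self):
        self._edges = defaultdict(list)   # source -> [(relation, target)]

    def relate(self, source, relation, target):
        self._edges[source].append((relation, target))

    def reachable(self, start):
        """Breadth-first walk returning (source, relation, target) triples."""
        found = []
        visited = {start}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for relation, target in self._edges.get(node, []):
                if target not in visited:
                    visited.add(target)
                    found.append((node, relation, target))
                    queue.append(target)
        return found


graph = DatasetGraph()
graph.relate("EBOM:pump", "HAS_CHILD", "EBOM:valve")
graph.relate("EBOM:valve", "SPECIFIED_BY", "spec:valve")
graph.relate("EBOM:valve", "REALIZED_BY", "MBOM:valve")
graph.relate("MBOM:valve", "SUPPLIED_BY", "supplier:acme")

for source, relation, target in graph.reachable("EBOM:pump"):
    print(f"{source} -[{relation}]-> {target}")
```

New relation types can be added without changing a predefined data model, which is the flexibility a graph database offers at scale.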

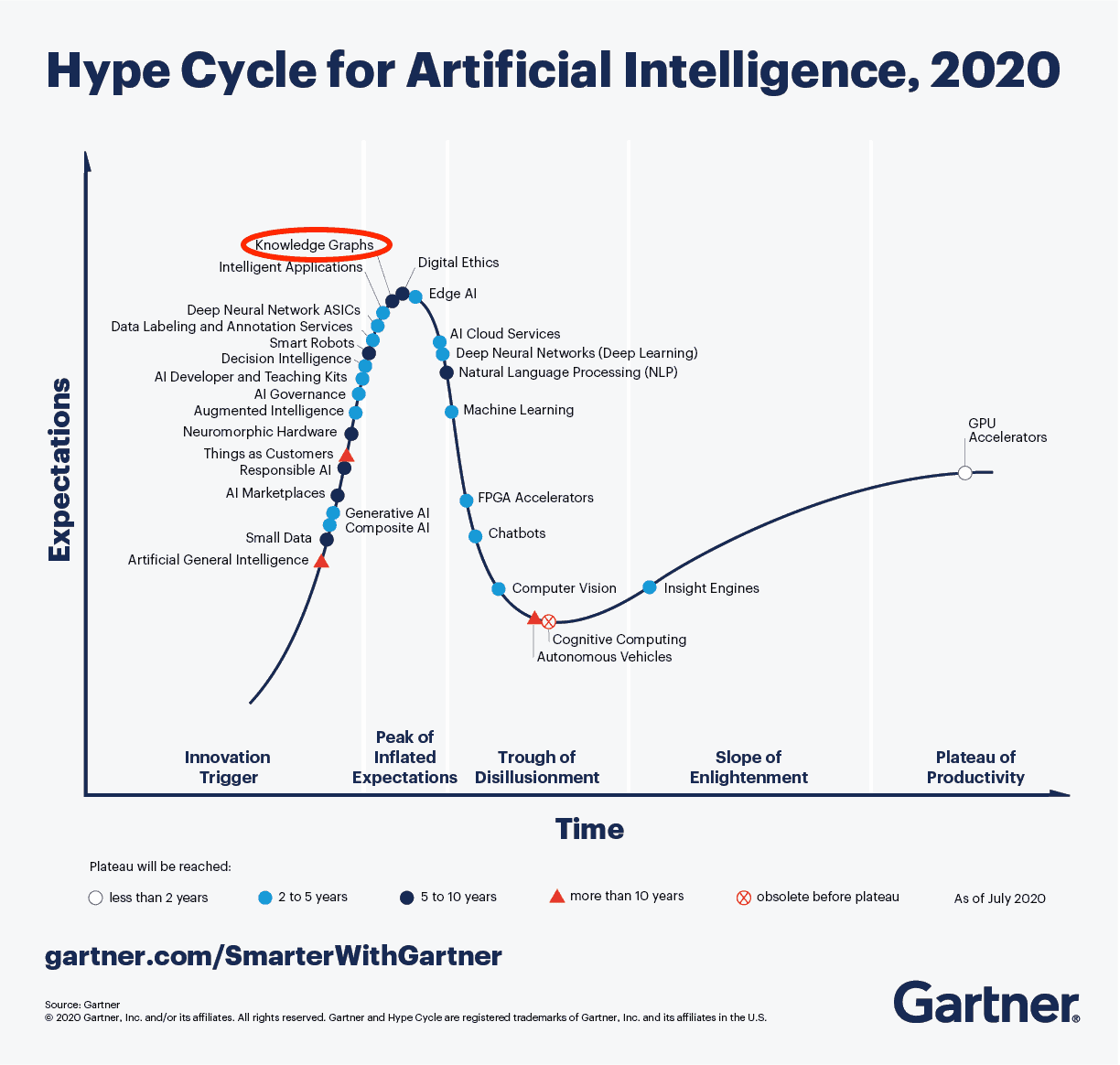

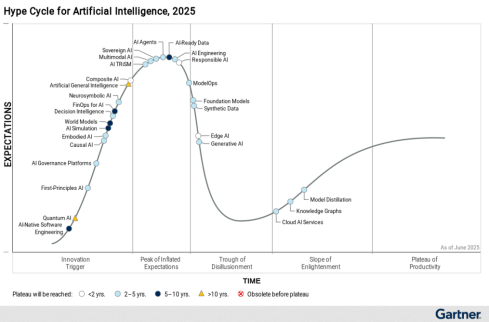

As you can see from the 2020 Gartner Hype Cycle for Artificial Intelligence, this technology is at the top of the hype and is conceptually the way to manage a connected enterprise. The discussion in this post also demonstrates that, besides technology, a lot of additional conceptual thinking is needed before it can be implemented.

Although software vendors might handle the relations and datasets within their platform, the ultimate challenge will be sharing datasets with other platforms to get a connected ecosystem.

For example, the digital web picture shown above and introduced by Marc Halpern at the 2018 PDT conference shows this concept. Recently CIMdata discussed this topic in a similar manner: The Digital Thread is Really a Web, with the Engineering Bill of Materials at Its Center

(Note I am not sure if CIMdata has published a recording of this webinar – if so I will update the link)

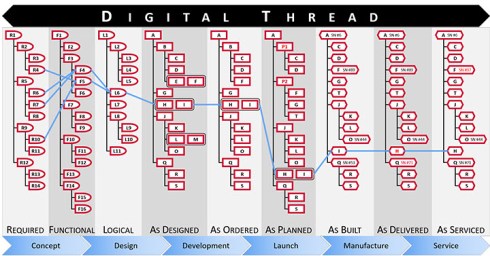

Anyway, these are signs that we have started to find the right visuals to imagine new concepts. The traditional digital thread pictures, like the one below, are, for me, impressions of the past, as they are too rigid and focus on some particular value streams.

From a distance, it looks like a connected enterprise should work like our brain. We store information on different abstraction levels. We keep incredibly many relations between information elements. As the brain is a biological organ, connections degrade or get lost. Or the opposite: other relationships become so strong that we cannot change them anymore. (“I know I am always right”)

Interestingly, the brain does not use the “single source of truth”-concept – there can be various “truths” inside a brain. This makes us human beings with all the good and the harmful effects of that.

As long as we realize there is no single source of truth.

In business and our technological world, we sometimes need the undisputed truth. Blockchain could be the basis for securing the right connections between datasets to guarantee the result is valid. I am curious if blockchain can scale to complex connected situations, although Moore’s Law might ultimately help us here too (if still valid).

The topic is not new – in 2014 I wrote a post with the title PLM is doomed unless …., where I introduced the topic of owning and sharing in the context of the human brain. In the post, I refer to the book On Intelligence by Jeff Hawkins, who tries to analyze what human-based intelligence is and how we could apply it to our technology concepts. Still a fascinating book worth reading if you have the time and opportunity.

Conclusion