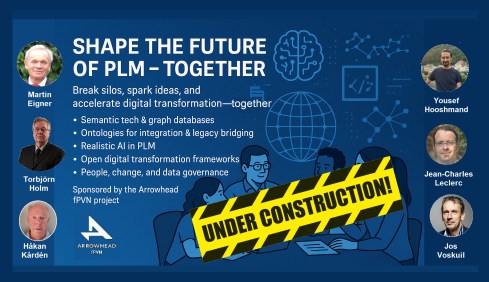

First, an important announcement. In the last two weeks, I have finalized preparations for the upcoming Share PLM Summit in Jerez on 27-28 May. With the Share PLM team, we have been working on a non-typical PLM agenda. Share PLM, like me, focuses on organizational change management and the HOW of PLM implementations, so there will be more emphasis on the people side.

Often, PLM implementations are either IT-driven or business-driven to address a specific need, and, yes, the people who need to work with the system come as the closing topic. Time and budget are spent on technology and process definitions, and people get trained. Often, only the trainers are trained, as there is no budget or time left to let the organization adapt, and a rapid ROI is expected.

This approach neglects that PLM implementations are enablers for business transformation. Instead of doing things slightly more efficiently, significant gains can be made by doing things differently, starting with the people and their optimal new way of working, and then providing the best tools.

The conference aims to start with the people, sharing human-related experiences and enabling networking, not only about industry practices (although there will be sessions and discussions on this topic too).

If you are curious about the details, listen to the podcast recording we published last week to understand the difference – click on the image on the left.

And if you are interested and have the opportunity, join us and meet some great thought leaders and others who share this interest.

Why is modern PLM a dream?

If you follow the LinkedIn posts in my PLM feed, you might have the impression that everyone is gearing up for modern PLM. Articles, often created with AI support, spark lively discussions. Before diving into them with my perspective, I want to set the scene by explaining what I mean by modern PLM and traditional PLM.

Traditional PLM

Traditional PLM is often associated with implementing a PLM system that mainly serves engineering. Downstream, engineering data is usually pushed manually or through interfaces to other enterprise systems, like ERP, MES and service systems.

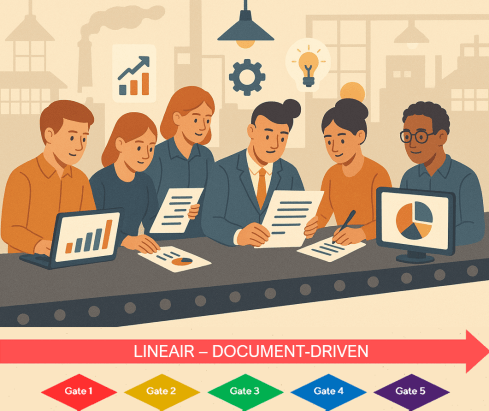

Traditional PLM is closely connected to the coordinated way of working: a linear process based on passing documents (drawings) and datasets (BOMs). Historically, CAD integrations have been the most significant characteristic of these systems.

The coordinated approach suits people who work within their authoring tools and share data through integrations. The PLM system becomes a system of record, and a system of record is not designed to be user-friendly.

Unfortunately, most PLM implementations in the field are based on this approach and are sometimes characterized as advanced PDM.

You recognize traditional PLM thinking when people talk about the single source of truth.

Modern PLM

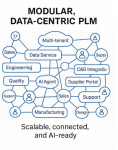

When I talk about modern PLM, it is no longer about a single system. Modern PLM starts from a business strategy implemented by a data-driven infrastructure. The strategy part remains a challenge at the board level: how do you translate PLM capabilities into business benefits – the WHY?

I will come back to this challenge later, as in our PLM community, most discussions are IT-driven: architectures, ontologies, and technologies – the WHAT.

For the WHAT, there seems to be a consensus that modern PLM is based on a federated

I think this article from Oleg Shilovitsky, “Rethinking PLM: Is It Time to Move Beyond the Monolith?”, AND the discussion thread in this post are a must-read. I will not quote the content here again.

After reading Oleg’s post and the comments, come back here.

The reason for this approach: it is a perfect example of the connected approach. Instead of collecting all the information inside one post (or book?), the information can be accessed by following digital threads. It also illustrates that in a connected environment, you do not own the data; the data comes from accountable people.

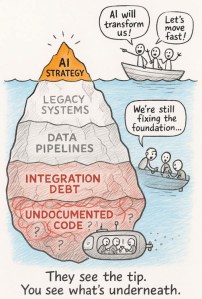

Building such a modern infrastructure is challenging when your company depends mainly on its legacy: the people, processes and systems. Where to change, how to change and when to change are questions that should be answered at the top and require a vision and an evolutionary implementation strategy.

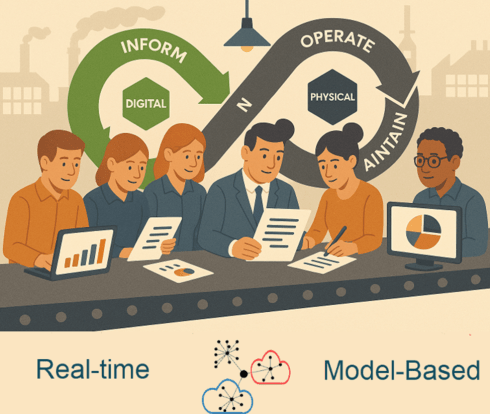

A company should build a layer of connected data on top of the coordinated infrastructure to support users in their new business roles. Implementing a digital twin has significant business benefits if the twin is used to connect with real-time stakeholders from both the virtual and physical worlds.

But there is more than digital threads with real-time data. On top of this infrastructure, a company can run all kinds of modeling tools, automation and analytics. I noticed that in our PLM community, we might focus too much on the data and not enough on the importance of combining it with a model-based business approach. For more details, read my recent post: Model-based: the elephant in the room.

Again, there are no quotes from the article; you know how to dive deeper into the connected topic.

Despite the considerable legacy pressure, there are already companies implementing a coordinated and connected approach. An excellent description of a potential approach comes from Yousef Hooshmand’s paper: From a Monolithic PLM Landscape to a Federated Domain and Data Mesh.

You might recognize modern PLM thinking when people talk about the nearest source of truth and the single source of change.
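To make these two phrases a bit more concrete, here is a minimal Python sketch, assuming a hypothetical federation in which every attribute has exactly one accountable domain system (the single source of change) and readers go straight to that domain instead of to a central copy (the nearest source of truth). `DomainSystem`, `Federation` and the attribute names are illustrative only; they do not come from any product or from Hooshmand’s paper.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DomainSystem:
    """A hypothetical domain system (e.g. PLM, ERP, MES) holding the data it is accountable for."""
    name: str
    records: dict[tuple[str, str], object] = field(default_factory=dict)


class Federation:
    """Routes every attribute to the one domain that is allowed to change it."""

    def __init__(self) -> None:
        self.systems: dict[str, DomainSystem] = {}
        self.authority: dict[str, str] = {}  # attribute -> accountable domain name

    def register(self, system: DomainSystem, attributes: list[str]) -> None:
        self.systems[system.name] = system
        for attr in attributes:
            if attr in self.authority:
                raise ValueError(f"{attr!r} already changes in {self.authority[attr]}")
            self.authority[attr] = system.name

    def change(self, system_name: str, item_id: str, attr: str, value: object) -> None:
        # Single source of change: only the accountable domain may write this attribute.
        if self.authority.get(attr) != system_name:
            raise PermissionError(f"{system_name} is not the source of change for {attr!r}")
        self.systems[system_name].records[(item_id, attr)] = value

    def read(self, item_id: str, attr: str) -> object:
        # Nearest source of truth: read from the accountable domain itself, no central copy.
        owner = self.systems[self.authority[attr]]
        return owner.records[(item_id, attr)]


if __name__ == "__main__":
    fed = Federation()
    fed.register(DomainSystem("PLM"), ["mass", "material"])
    fed.register(DomainSystem("ERP"), ["unit_cost"])
    fed.change("PLM", "P-100", "mass", 1.2)
    fed.change("ERP", "P-100", "unit_cost", 4.5)
    print(fed.read("P-100", "mass"), fed.read("P-100", "unit_cost"))  # 1.2 4.5
```

The design choice that matters is the rejected write from a non-accountable domain; a real federated landscape would implement this with services and APIs rather than in-memory dictionaries.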

Is Intelligent PLM the next step?

So far in this article, I have not mentioned AI as the solution to all our challenges. I see an analogy here with the introduction of the smartphone. 2008 was the moment platforms were introduced, mainly for consumers. Airbnb, Uber, Amazon, Spotify, and Netflix appeared and disrupted the traditional ways of selling products and services.

The advantage of these platforms is that they were all built data-driven from the start, without suffering from legacy issues.

In our PLM domain, it took more than 10 years for platforms to become a topic of discussion for businesses. The 2015 PLM Roadmap/PDT conference was the first step in discussing the Product Innovation Platform – see my The Weekend after PDT 2015 post.

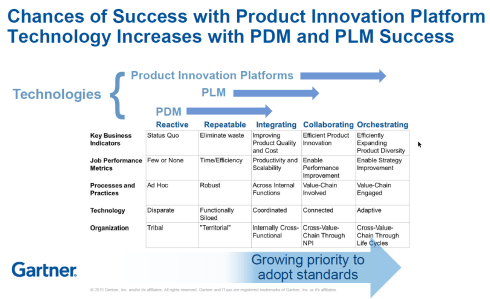

At that time, Peter Bilello shared the CIMdata perspective, Marc Halpern (Gartner) showed my favorite positioning slide (below), and Martin Eigner presented, according to my notes, this digital trend in PLM in his session “What becomes different for PLM/SysLM?”

2015 Marc Halpern – the Product Innovation Platform (PIP)

While concepts started to become clearer, businesses mainly remained the same. The coordinated approach is the most convenient, as you do not need to reshape your organization. And then came the LLMs that changed everything.

Suddenly, it became possible for organizations to unlock knowledge hidden in their company and make it accessible to people.

Without drastically changing the organization, companies could now improve people’s performance and output (theoretically); therefore, it became a topic of interest for management. One big challenge for reaping the benefits is the quality of the data and information accessed.

I will not dive deeper into this topic today, as Benedict Smith, in his article Intelligent PLM – CFO’s 2025 Vision, did all the work, and I am very much aligned with his statements. It is a long read (7000 words) and a great starting point for discovering the aspects of Intelligent PLM and the connection to the CFO.

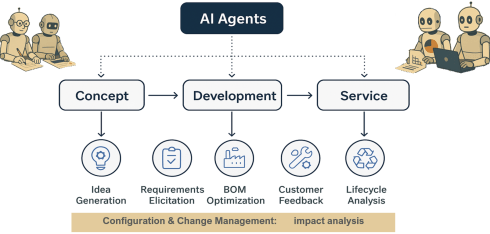

You might recognize intelligent PLM thinking when people and AI agents talk about the most likely truth.

Conclusion

Are you interested in these topics and their meaning for your business and career? Join me at the Share PLM conference, where I will discuss “The dilemma: Humans cannot transform—help them!” Time to work on your dreams!

Happy New Year to all of you, and may this year be a year of progress in understanding and addressing the challenges ahead of us.

To help us focus, I selected three major domains I will explore further this year. These domains are connected, of course, as nothing is isolated in a world of Systems Thinking. I have also written about these domains in the past, as usually nothing happens out of the blue.

Meanwhile, there are a lot of discussions related to Artificial Intelligence (AI), in particular ChatGPT (OpenAI). But can AI provide the answers? I believe not, as AI is mainly about explicit knowledge, the knowledge you can capture in (learning) algorithms.

Expert knowledge, often called Tacit knowledge, is the knowledge of the expert, combining information from different domains into innovative solutions.

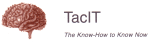

I started my company, TacIT, in 1999 because I thought (and still think) that Tacit knowledge is the holy grail for companies.

Let’s see how far we get with OpenAI…

Digitization of the PLM domain

The PLM domain is suffering from its legacy data (documents), legacy processes (linear – mechanical focus) and legacy people (siloed). The statement is a generalization.

More details can be found in my blog series: The road to model-based and connected PLM.

So why should companies move to a model-based and connected approach for their PLM infrastructure?

There are several reasons why companies may want to move to a model-based and connected approach for their Product Lifecycle Management (PLM) infrastructure:

- Increased efficiency: A model-based approach allows for creating a digital twin of the product, which can be used to simulate and test various design scenarios, reducing the need for physical prototypes and testing. This can lead to faster and more efficient product development.

- Improved collaboration: A connected PLM infrastructure allows for better collaboration between different teams and departments, as all product-related information is stored in a central location and can be accessed by authorized personnel. This can improve communication and decision-making within the organization.

- Enhanced visibility: A model-based PLM system provides a single source of truth for all product-related data, giving management a clear and comprehensive view of the product development process. This can help identify bottlenecks and areas for improvement.

- Reduced risk: By keeping all product-related information in a centralized location, the risk of data loss or inconsistencies is reduced. This can help ensure that the product is developed in accordance with regulatory requirements and company standards.

- Increased competitiveness: A model-based and connected PLM infrastructure can help companies bring new products to market faster and with fewer errors, giving them a competitive advantage in their industry.

The text in italics was created by ChatGPT. After three learning cycles, this was the best answer I got. What we are missing in this answer is the innovative and transformative part that modern PLM can bring. Where is the concept of different ways of working, and new business models, both drivers for digitalization in many businesses?

Expert knowledge related to Federated PLM (or Killing the PLM Monolith) is something you will not find through AI. This is, for me, the most interesting part to explore.

We see the need but lack a common understanding of the HOW.

Algorithms will not innovate; for that, you need Tacit intelligence & Curiosity instead of Artificial Intelligence. More exploration of Federated PLM this year.

PLM and Sustainability

Last year, as part of the PLM Global Green Alliance, we spoke with six PLM solution providers to understand their sustainability goals, targets, and planned support for Sustainability. All of them confirmed that Sustainability had become an important issue for their customers in 2022. Sustainability is on everyone’s agenda.

Why is PLM important for Sustainability?

PLM is important for Sustainability because a PLM helps organizations manage the entire lifecycle of a product, from its conception and design to its manufacture, distribution, use, and disposal. PLM can be important for Sustainability because it can help organizations make more informed decisions about the environmental impacts of their products and take steps to minimize those impacts throughout the product’s lifecycle.

For example, using PLM, an organization can consider the environmental impacts of the materials that are used in a product, the energy consumption of the manufacturing process, the product’s end-of-life disposal, and other factors that may affect its overall Sustainability. By considering these factors early in the design process, organizations can make more sustainable choices that reduce the environmental impact of their products over their lifecycle.

In addition, PLM can help organizations track and measure the Sustainability of their products over time, allowing them to continuously improve and optimize their products for Sustainability. This can be particularly important for organizations that are looking to meet regulatory requirements or consumer demand for more sustainable products.

Again, not a wrong answer, but there is no mention of supporting the concepts of the circular economy, of the potential of using digital twins at the various lifecycle stages of the products to perform trade-off studies in the virtual world, or of analyzing the product behavior from the physical world in the virtual world for optimization.

This is (again), for me, the most interesting part to explore.

We see the need but lack the sense of urgency on the NOW.

More on Sustainability this year.

PLM Education at all levels

Historically, PLM was framed as an “engineering thing” or a system needed for R&D/Engineering. Modern PLM is much broader and, unfortunately, harder to grasp. I support the CIMdata definition:

CIMdata defines PLM as:

- A strategic business approach that applies a consistent set of business solutions that support the collaborative creation, management, dissemination, and use of product definition information

- Supporting the extended enterprise (customers, design and supply partners, etc.)

- Spanning from concept to end of life of a product or plant

- Integrating people, processes, business systems, and information

And even here, I would say this definition is challenging as the scope is extensive and could touch a lot of activities inside an enterprise. As I wrote last year in my series of posts related to PLM and Education, there is:

- Strategy education (what are the trends/potential of digital transformation in the business domain related to the company’s products)

- Methodology/academic education (independent of tools: what are the best practices, where do they reside, potential lessons learned and pitfalls to avoid)

- Organisational change management (the education and support needed to enable a business transformation – modern PLM is a transformation enabler)

By asking ChatGPT for its opinion, we learn the following:

Product Lifecycle Management (PLM) education refers to training and development programs that focus on teaching individuals how to effectively manage the lifecycle of a product within an organization. This can include a wide range of topics, such as product design, development, and engineering; supply chain management; quality control and assurance; and product retirement and disposal.

Some specific types of PLM education that a company may need to provide to its employees include:

- Introduction to PLM: This type of training is designed to provide a general overview of PLM concepts and practices and may be suitable for employees who are new to the field or who have limited experience with PLM.

- PLM software training: Many companies use specialized PLM software to manage their product lifecycle processes. Employees who will be using this software will need training on how to use it effectively.

- Industry-specific PLM training: Depending on the industry in which a company operates, there may be specific PLM practices and regulations that employees need to be familiar with. For example, a company in the aerospace industry may need to provide training on PLM practices that are specific to the aerospace industry.

- Leadership and management training: For employees who are responsible for leading and managing PLM projects or teams, additional training in leadership and project management may be necessary.

Overall, the types of PLM education that a company needs will depend on the specific needs and goals of the organization, as well as the roles and responsibilities of its employees.

In general, we see from the answer that strategy and organizational change management beyond the tool are not considered.

This is precisely the area where a PLM Expert can help.

We see the need for Education, but we lack the willingness to invest in it.

Conclusion

It was an exciting exercise to combine my blogging thoughts with the answers from OpenAI. I am impressed by the answers, knowing that these PLM topics are not obvious. On the other hand, I am not worried that AI will take over the job of the PLM consultant. As I mentioned before, the difference between Explicit Knowledge and Tacit Knowledge is clear, and business transformations will largely depend on the use of Tacit knowledge.

I am curious about your experiences and will follow the topics mentioned in this post and write about them with great interest.

In my last post in this series, The road to model-based and connected PLM, I mentioned that perhaps it is time to talk about SLM instead of PLM when discussing popular TLAs for our domain of expertise. So far, there have not been many encouraging reactions to SLM.

For me, SLM could mean Solution Lifecycle Management, considering that a company’s offering is increasingly a mix of products and services. Or SLM could mean System Lifecycle Management, in which case it pushes the idea that more and more products interact with the outside world and could therefore be considered systems. Products are (almost) dead.

In addition, I mentioned that the typical product lifecycle and related configuration management concepts need to change, as in the SLM domain there is hardware and software with different lifecycles and change processes.
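A minimal Python sketch of what different lifecycles can mean for configuration management, assuming a hypothetical controller whose hardware changes by revision letter while its firmware changes by version number. A baseline is treated as valid only if the pair appears in an invented compatibility table; the names and ranges are illustrative, not a real product’s rules.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class HardwareItem:
    part_id: str
    revision: str  # hardware evolves through revisions, e.g. "A", "B"


@dataclass(frozen=True)
class SoftwareItem:
    name: str
    version: tuple[int, int, int]  # software evolves through (major, minor, patch) releases


@dataclass(frozen=True)
class Baseline:
    """One configuration of the system: a hardware revision combined with a software version."""
    hardware: HardwareItem
    software: SoftwareItem


# Hypothetical compatibility table: hardware revision -> inclusive range of software versions.
COMPATIBILITY: dict[tuple[str, str], tuple[tuple[int, int, int], tuple[int, int, int]]] = {
    ("CTRL-1", "A"): ((1, 0, 0), (1, 9, 9)),
    ("CTRL-1", "B"): ((1, 5, 0), (2, 9, 9)),
}


def is_valid(baseline: Baseline) -> bool:
    """Check whether this hardware/software combination is an approved configuration."""
    key = (baseline.hardware.part_id, baseline.hardware.revision)
    if key not in COMPATIBILITY:
        return False
    low, high = COMPATIBILITY[key]
    return low <= baseline.software.version <= high  # tuples compare element by element


if __name__ == "__main__":
    rev_a = HardwareItem("CTRL-1", "A")
    print(is_valid(Baseline(rev_a, SoftwareItem("firmware", (1, 4, 2)))))  # True
    print(is_valid(Baseline(rev_a, SoftwareItem("firmware", (2, 0, 0)))))  # False
```

A firmware update in the field creates a new baseline without any hardware change, which is where a product-only revision scheme starts to struggle.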

It is a topic I want to explore further. I am curious to learn more from Martijn Dullaart, who will be lecturing at the PLM Roadmap and PDT 2021 fall conference in November. I hope my expectations are not too high, knowing it is a topic of interest for Martijn. Feel free to join this discussion.

In this post, it is time to follow up on my third statement related to what data-driven implies:

Data-driven means that we need to manage data in a much more granular manner. We have to look differently at data ownership. It becomes more about data accountability per role, as the data can be used and consumed throughout the product lifecycle.

On this topic, I have a list of points to consider; let’s go through them.

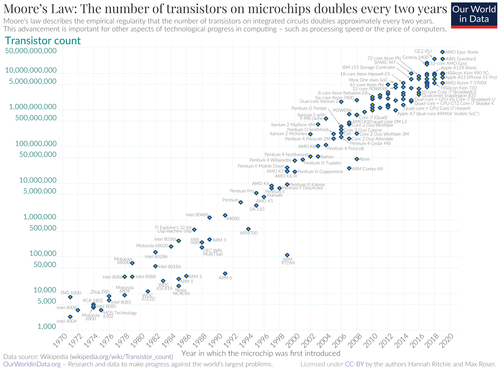

The dataset

In this post, I will often use the term dataset (as I understand it, you may also write data set).

A dataset means a predefined number of attributes and values that belong logically to each other. Datasets should be defined based on the purpose and, if possible, designated for a single goal. In this way, they can be stored in a database.

Combining datasets can result in relevant business information. Note that a dataset is not only transactional data; a dataset could also describe geometry.
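As an illustration of these two ideas, a predefined dataset and the combination of datasets, here is a small Python sketch. The dataset names and attributes are hypothetical; the point is that each dataset has a single purpose and a fixed set of attributes, and that a business view is assembled by joining datasets about the same item.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetDefinition:
    """A purpose-specific, predefined set of attributes that belong together."""
    name: str
    purpose: str
    attributes: frozenset[str]

    def create(self, **values: object) -> dict[str, object]:
        # An instance must provide exactly the predefined attributes, no more and no less.
        given = set(values)
        missing, unknown = self.attributes - given, given - self.attributes
        if missing or unknown:
            raise ValueError(f"{self.name}: missing={sorted(missing)} unknown={sorted(unknown)}")
        return dict(values)


def combine(*datasets: dict[str, object]) -> dict[str, object]:
    """Join datasets about the same item into one view; shared attributes must agree."""
    view: dict[str, object] = {}
    for dataset in datasets:
        for key, value in dataset.items():
            if key in view and view[key] != value:
                raise ValueError(f"conflicting values for {key!r}")
            view[key] = value
    return view


if __name__ == "__main__":
    core = DatasetDefinition("part_core", "identification",
                             frozenset({"id", "name", "type", "status"}))
    sourcing = DatasetDefinition("part_sourcing", "supplier selection",
                                 frozenset({"id", "supplier", "lead_time_days"}))
    view = combine(core.create(id="P-100", name="Ball valve", type="valve", status="released"),
                   sourcing.create(id="P-100", supplier="ACME", lead_time_days=14))
    print(view["supplier"], view["status"])  # ACME released
```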

Identify the dataset

In the document-based world, a lot of information could be stored in a single file. In a data-driven world, we should define a dataset that contains a specific piece of information, logically belonging together. If we are more precise, a part would have various related datasets that make up the definition of a part. These definitions could be:

- Core identification attributes like ID, Name, Type and Status

- The Type could define a set of linked information. For example, a valve would have different characteristics than a resistor. Through classification, we can link datasets to the core definition of a part.

- The part can have engineering-specific data (CAD and metadata), manufacturing-specific data, supplier-specific data, and service-specific data. Each of these datasets needs to be defined as a unique element in a data-driven environment.

- CAD is a particular case, as most current CAD systems don’t treat geometry as a single dataset. In a file-based world, many other datasets are stored in the file (e.g., engineering or manufacturing details). In a data-driven environment, we want the CAD definition to be treated like a dataset. Dassault Systèmes with their CATIA V6 and 3DEXPERIENCE platform or PTC with Onshape are examples of this approach. Having CAD as separate datasets makes sharing and collaboration so much easier, as we can see from these solutions. The concept of CAD stored in a database is not new, and this approach has been used in various disciplines. Mechanical CAD was always a challenge.
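The list above can be sketched as a data structure. This Python example is only an illustration under assumptions: the classification table and attribute names are invented, and a real landscape would keep each domain dataset in the system accountable for it rather than in one object.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical classification: each part type links its own set of characteristics.
CLASSIFICATION: dict[str, frozenset[str]] = {
    "valve": frozenset({"nominal_diameter_mm", "pressure_rating_bar"}),
    "resistor": frozenset({"resistance_ohm", "tolerance_pct"}),
}


@dataclass
class Part:
    # Core identification attributes
    id: str
    name: str
    type: str
    status: str
    # Type-specific characteristics, checked against the classification
    characteristics: dict[str, object] = field(default_factory=dict)
    # Engineering, manufacturing, supplier and service data kept as separate datasets
    domain_datasets: dict[str, dict[str, object]] = field(default_factory=dict)

    def __post_init__(self) -> None:
        expected = CLASSIFICATION.get(self.type)
        if expected is None:
            raise ValueError(f"unknown part type {self.type!r}")
        if set(self.characteristics) != expected:
            raise ValueError(f"a {self.type} needs exactly {sorted(expected)}")

    def attach(self, domain: str, dataset: dict[str, object]) -> None:
        """Store one domain dataset on its own, so it can be governed and shared separately."""
        self.domain_datasets[domain] = dict(dataset)


if __name__ == "__main__":
    valve = Part("P-100", "Ball valve", "valve", "in_work",
                 {"nominal_diameter_mm": 25, "pressure_rating_bar": 16})
    valve.attach("supplier", {"supplier": "ACME", "lead_time_days": 14})
    valve.attach("service", {"maintenance_interval_months": 12})
    print(sorted(valve.domain_datasets))  # ['service', 'supplier']
```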

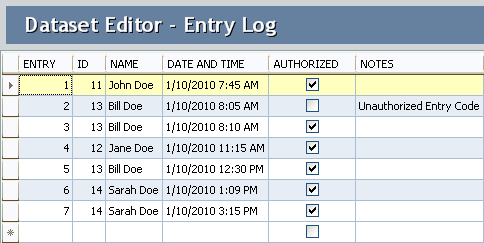

Thanks to Moore’s Law (approximately every two years, the number of transistors on a chip, and with it processor power, doubled – see the image for the details) and higher network connection speeds, it starts to make sense to store mechanical CAD in a database instead of a file as well.
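A rough worked example of what that doubling implies, assuming an idealized doubling every two years (real growth has been less regular), with $P_0$ the starting capacity and $t$ the elapsed time in years:

```latex
P(t) = P_0 \cdot 2^{t/2}
\qquad\Rightarrow\qquad
\frac{P(20)}{P_0} = 2^{20/2} = 2^{10} = 1024
```

Under this simplification, two decades give roughly a thousandfold increase in capacity.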

An important point to consider is a kind of standardization of datasets. In theory, there should be a kind of minimum agreed collection of datasets. Industry standards provide these collections in their dictionary. Whenever you optimize your data model for a connected enterprise, make sure you look first into the standards that apply to your industry.

They might not be perfect or complete, but inventing your own new standard is a guarantee for legacy issues in the future. This remark is also valid for the software vendors in this domain. A proprietary data model might give you a competitive advantage.

Still, in the long term, there is always the need to connect with outside stakeholders.
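A small Python sketch of checking your own data model against an industry dictionary, assuming a hypothetical dictionary excerpt (the attribute names and units below are invented, not taken from any real standard). It flags which attributes follow the dictionary, which deviate in unit, and which are proprietary additions.

```python
from __future__ import annotations

# Hypothetical excerpt of an industry dictionary: standard attribute name -> unit (None = unitless).
# A real project would load this from the standard that applies to its industry.
STANDARD_DICTIONARY: dict[str, str | None] = {
    "part_number": None,
    "mass": "kg",
    "material": None,
    "nominal_diameter": "mm",
}


def review_data_model(local_model: dict[str, str | None]) -> dict[str, list[str]]:
    """Split a company's attributes into standard, unit-mismatched and proprietary ones."""
    report: dict[str, list[str]] = {"standard": [], "unit_mismatch": [], "proprietary": []}
    for name, unit in sorted(local_model.items()):
        if name not in STANDARD_DICTIONARY:
            report["proprietary"].append(name)  # candidate future legacy issue
        elif STANDARD_DICTIONARY[name] != unit:
            report["unit_mismatch"].append(name)
        else:
            report["standard"].append(name)
    return report


if __name__ == "__main__":
    print(review_data_model({"part_number": None, "mass": "g", "acme_weight_class": None}))
    # {'standard': ['part_number'], 'unit_mismatch': ['mass'], 'proprietary': ['acme_weight_class']}
```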

Identify the RACI

To ensure a dataset is complete and well maintained, the concept of RACI could be used. RACI stands for Responsible, Accountable, Consulted and Informed and is a simplification of the RASCI model; see also responsibility assignment matrix.

In a data-driven environment, there is no longer data ownership as you have for documents. The main reason is that datasets can be consumed by anyone in the ecosystem, no longer only by your department or by the manufacturing or service department.

Datasets in a data-driven environment bring value when connected with other datasets in applications or dashboards.

A dataset describing the specification attributes of a part could be used in a spare part app and a service app. Of course, the dataset will be used in a different context; still, we need to ensure we can trust the data.

Therefore, each identified dataset should be governed by a kind of RACI concept. The RACI concept is a way to break the silos in an organization.
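To show what governing a dataset by RACI could look like, here is a Python sketch with hypothetical dataset and role names. It applies common RACI rules of thumb, a single Accountable role and at least one Responsible role per dataset, and lists the consuming apps as Informed.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Raci:
    """RACI assignment for one dataset; role names are illustrative."""
    responsible: frozenset[str]
    accountable: str | None  # a single role; None means nobody is accountable yet
    consulted: frozenset[str] = frozenset()
    informed: frozenset[str] = frozenset()


def governance_issues(dataset: str, raci: Raci) -> list[str]:
    """Return the gaps in the assignment; an empty list means it looks complete."""
    issues = []
    if not raci.accountable:
        issues.append(f"{dataset}: no accountable role")
    if not raci.responsible:
        issues.append(f"{dataset}: no responsible role")
    return issues


# Hypothetical governance table: one RACI assignment per identified dataset.
GOVERNANCE = {
    "part_specification": Raci(
        responsible=frozenset({"design_engineer"}),
        accountable="chief_engineer",
        consulted=frozenset({"manufacturing_engineer"}),
        informed=frozenset({"spare_part_app", "service_app"}),
    ),
    "part_service_data": Raci(responsible=frozenset(), accountable=None),
}

if __name__ == "__main__":
    for name, raci in GOVERNANCE.items():
        print(name, governance_issues(name, raci))
```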

Identify Inside / outside

There is a lot of fear that a connected, data-driven environment will expose Intellectual Property (IP). It came up in recent discussions. If you like storytelling and technology, read my old SmarTeam colleague Alex Bruskin’s post: The Bilbo Baggins Threat to PLM Assets. Alex has written some “poetry” with a deep technical message behind it.

It is true that if your dataset is too big, you have the challenge of exposing IP when connecting this dataset with others. Therefore, when building a data model, you should make it possible to have datasets purely for internal use and datasets for sharing.

When you use the concept of RACI, the difference should be defined by the I (Informed): is it PLM data or PIM data, for example?
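
One way to sketch this, assuming the Informed assignment alone decides who may consume a dataset: a dataset counts as shared as soon as one of its Informed parties sits outside the company. All dataset and party names are hypothetical, and a real implementation would enforce this in the platform's access control rather than in a dictionary.

```python
# Hypothetical datasets mapped to the parties that are Informed (may consume them).
INFORMED = {
    "part-geometry-internal": {"engineering", "manufacturing"},
    "part-spec-shared": {"engineering", "manufacturing", "supplier-portal"},
    "marketing-attributes": {"pim", "webshop"},
}
# Hypothetical parties outside the company boundary.
EXTERNAL_PARTIES = {"supplier-portal", "webshop"}


def is_shared(dataset: str) -> bool:
    """A dataset is 'for sharing' if any of its Informed parties is external."""
    return bool(INFORMED[dataset] & EXTERNAL_PARTIES)


def visible_to(party: str) -> list[str]:
    """Datasets a party may consume, based only on the Informed assignment."""
    return sorted(name for name, parties in INFORMED.items() if party in parties)


print(is_shared("part-geometry-internal"))  # False
print(visible_to("supplier-portal"))  # ['part-spec-shared']
```

Keeping the IP-sensitive geometry in a separate internal dataset means connecting the shared specification dataset never drags it along.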

Tracking relations

If we follow up on the concept of datasets, it becomes clear that the relations between datasets are as crucial as the datasets themselves. In traditional PLM applications, these relations are often predefined as part of the core data model.

For example, the EBOM parts have relationships between themselves and specification data – see image.

The MBOM parts have links with the supplier data or the manufacturing process.
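
To illustrate what predefined relations in a core data model can mean, here is a minimal sketch in which the allowed relation types and their endpoint types are fixed up front, and every new link is checked against them. The relation and type names are hypothetical and not taken from any particular PLM system.

```python
# Hypothetical core data model: relation type -> (source type, target type).
ALLOWED_RELATIONS = {
    "EBOM_CHILD": ("EBOMPart", "EBOMPart"),
    "SPECIFIED_BY": ("EBOMPart", "Specification"),
    "SUPPLIED_BY": ("MBOMPart", "Supplier"),
    "MADE_BY_PROCESS": ("MBOMPart", "ManufacturingProcess"),
}


def check_relation(rel_type: str, source_type: str, target_type: str) -> bool:
    """Accept a link only if the data model defines it for these two object types."""
    return ALLOWED_RELATIONS.get(rel_type) == (source_type, target_type)


print(check_relation("SPECIFIED_BY", "EBOMPart", "Specification"))  # True
print(check_relation("SUPPLIED_BY", "EBOMPart", "Supplier"))  # False: suppliers link to MBOM parts
```

The rigidity cuts both ways: mapping onto a fixed taxonomy is quick, but any relation the model did not foresee is rejected.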

The prepared relations in a PLM system allow companies to implement the system relatively quickly by mapping their approaches to this taxonomy.

However, traditional PLM systems are based on a document-based (or file-based) taxonomy combined with related metadata. In a model-based and connected environment, we have to get rid of the document-based type of data.

Therefore, the datasets will be more granular, and there is a need to manage exponentially more relations between datasets.

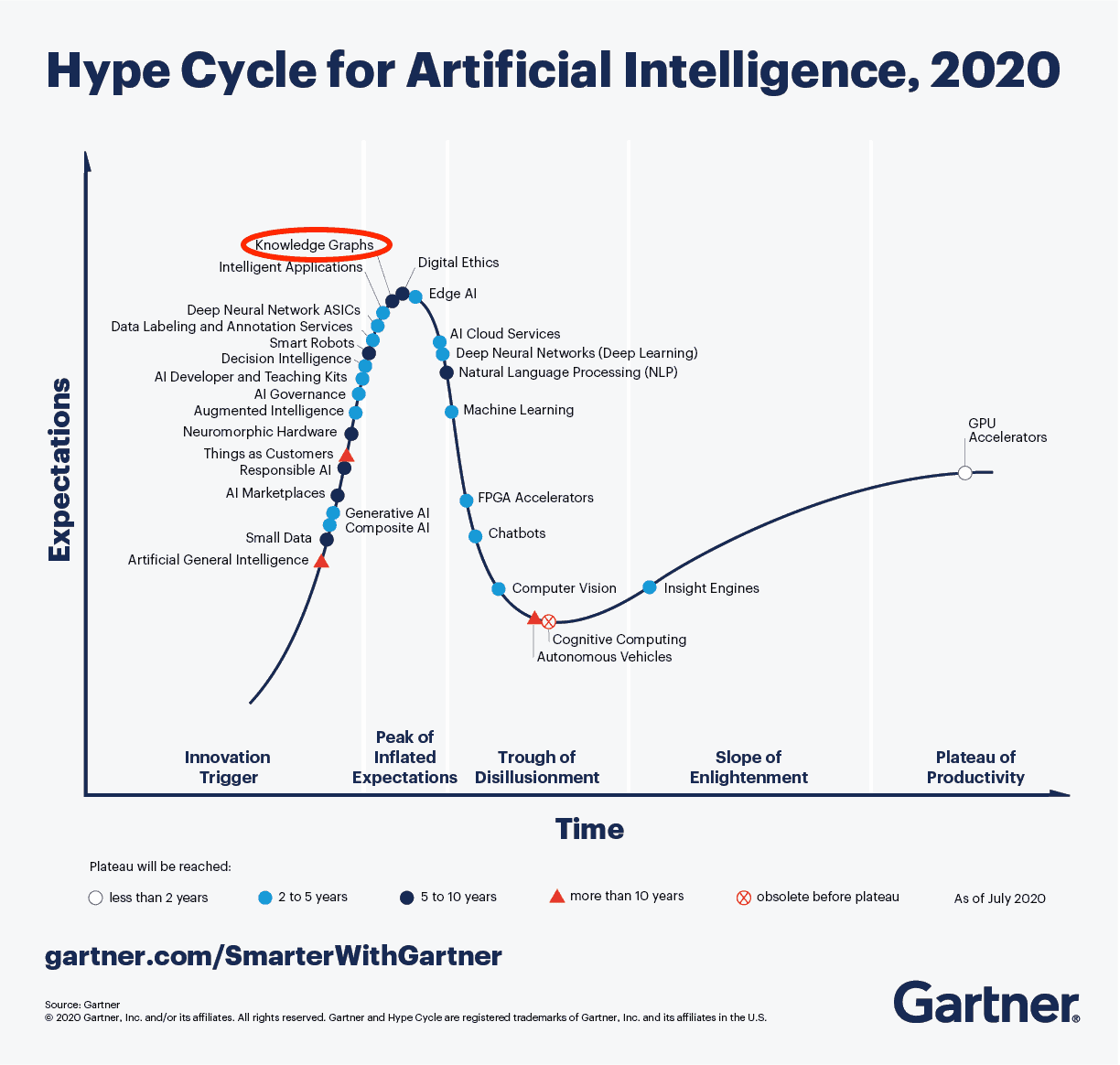

This is why you see the graph database emerging as necessary infrastructure for modern connected applications. If you haven’t heard of a graph database yet, you are probably far from technology hypes. To understand the principles of a graph database, you can read this article from neo4j: Graph Databases for Beginners: Why graph technology is the future
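
The core idea of a graph database can be sketched without one: granular datasets are nodes, typed relations are edges, and a query follows a path of relation types, similar in spirit to a path pattern in a graph query language. This is plain Python with hypothetical identifiers, not the neo4j API.

```python
from collections import defaultdict

# Hypothetical granular datasets linked by typed, directed relations.
edges: dict[str, list[tuple[str, str]]] = defaultdict(list)


def relate(source: str, rel_type: str, target: str) -> None:
    """Store a directed, typed relation between two datasets."""
    edges[source].append((rel_type, target))


def follow(start: str, path: list[str]) -> set[str]:
    """Return the datasets reached by following the relation types in order."""
    frontier = {start}
    for rel_type in path:
        frontier = {t for node in frontier for (r, t) in edges[node] if r == rel_type}
    return frontier


relate("EBOM:P-100", "EBOM_CHILD", "EBOM:P-110")
relate("EBOM:P-100", "EBOM_CHILD", "EBOM:P-120")
relate("EBOM:P-110", "SPECIFIED_BY", "SPEC:S-7")
relate("EBOM:P-120", "SPECIFIED_BY", "SPEC:S-9")
relate("EBOM:P-110", "REALIZED_AS", "MBOM:M-110")

print(sorted(follow("EBOM:P-100", ["EBOM_CHILD", "SPECIFIED_BY"])))
# ['SPEC:S-7', 'SPEC:S-9']
```

Relations are first-class data here, so adding a new relation type needs no change to a predefined schema; that flexibility is what granular, connected datasets require.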

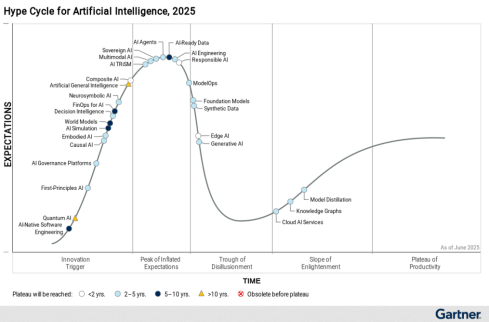

As you can see from the 2020 Gartner Hype Cycle for Artificial Intelligence, this technology is at the peak of the hype and is, conceptually, the way to manage a connected enterprise. The discussion in this post also demonstrates that, besides technology, a lot of additional conceptual thinking is needed before it can be implemented.

Although software vendors might handle the relations and datasets within their platform, the ultimate challenge will be sharing datasets with other platforms to get a connected ecosystem.

For example, the digital web picture shown above, introduced by Marc Halpern at the 2018 PDT conference, illustrates this concept. Recently, CIMdata discussed this topic in a similar manner: The Digital Thread is Really a Web, with the Engineering Bill of Materials at Its Center

(Note I am not sure if CIMdata has published a recording of this webinar – if so I will update the link)

Anyway, these are signs that we have started to find the right visuals to imagine new concepts. The traditional digital thread pictures, like the one below, are, for me, impressions of the past, as they are too rigid and focus on a few specific value streams.

From a distance, it looks like a connected enterprise should work like our brain. We store information at different abstraction levels. We keep incredibly many relations between information elements. As the brain is a biological organ, connections degrade or get lost. Or the opposite: other relationships become so strong that we cannot change them anymore. (“I know I am always right”)

Interestingly, the brain does not use the “single source of truth”-concept – there can be various “truths” inside a brain. This makes us human beings with all the good and the harmful effects of that.

As long as we realize there is no single source of truth.

In business and in our technological world, we sometimes need the undisputed truth. Blockchain could be the basis for securing the right connections between datasets to guarantee the result is valid. I am curious whether blockchain can scale to complex connected situations, although Moore’s Law might ultimately help us here too (if it is still valid).
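
The property that makes blockchain attractive here is tamper evidence: each record's hash includes the previous record's hash, so changing any earlier link breaks every hash after it. The sketch below shows only that hash-chaining idea using Python's standard hashlib; it has none of the distributed replication and consensus of a real blockchain. The link records are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # fixed starting value for the first record


def record_hash(record: dict, previous_hash: str) -> str:
    """Hash a record together with the hash of the record before it."""
    payload = json.dumps(record, sort_keys=True) + previous_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records: list[dict]) -> list[str]:
    """Return one hash per record, each depending on all records before it."""
    hashes, previous = [], GENESIS
    for record in records:
        previous = record_hash(record, previous)
        hashes.append(previous)
    return hashes


def verify_chain(records: list[dict], hashes: list[str]) -> bool:
    """Recompute the chain; any edited, added, or removed record changes the result."""
    return build_chain(records) == hashes


links = [
    {"from": "EBOM:P-110", "rel": "SPECIFIED_BY", "to": "SPEC:S-7"},
    {"from": "EBOM:P-110", "rel": "REALIZED_AS", "to": "MBOM:M-110"},
]
chain = build_chain(links)
print(verify_chain(links, chain))  # True
links[0]["to"] = "SPEC:S-8"  # tamper with an earlier link
print(verify_chain(links, chain))  # False
```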

The topic is not new: in 2014, I wrote a post titled PLM is doomed unless …, in which I introduced the topic of owning and sharing in the context of the human brain. In the post, I refer to the book On Intelligence by Jeff Hawkins, who tries to analyze what human intelligence is and how we could apply it to our technology concepts. It is still a fascinating book, worth reading if you have the time and opportunity.

Conclusion

A data-driven approach requires a more granular definition of information, leading to the concepts of datasets and of managing relations between datasets. This is a fundamental difference from the past, when we operated systems that held information. Now we are heading towards connected platforms that provide a filtered set of real-time data to act upon.

I am curious to learn more about how people have solved the connected challenges and in what kind of granularity. Let us know!

This is the time of year when, at least in my region, most people take some time off to disconnect from their day-to-day business. For me, it is never a full disconnect, as PLM has become my passion, and you should never switch off your passion.

On August 1st, 1999, I started my company TacIT, the same year the acronym PLM was born. I wanted to focus on knowledge management, hence the name TacIT. Drawn into the SmarTeam world, in a unique position interfacing between R&D, implementers, and customers, I found my sweet spot, which helped me see all aspects of PLM: the vendor position, the implementer’s view, and the customer’s end-user and management views.

It has been, and still is, 20 years of learning, and I have shared most of it in the past ten years through my blog. What I have learned is that the more you know, the more you understand that situations are not black and white. See one of my favorite blog pictures below.

So there is enough to think over during the holidays. Some of my upcoming points:

From coordinated to connected

Instead of using the over-hyped term Digital Transformation, I believe companies should learn to work in a connected mode, which has become the standard in our daily life. Connected means that information needs to be stored in databases somewhere, combined with openness and standards to make the data accessible. For more transactional environments, like CRM, MES, and ERP, the connected mode is not new.

In the domain of product development and selling, we still have a long learning path ahead, as the majority of organizations rely on documents, be it Excel files, drawings (PDF), or reports. The fact that they are stored in electronic file formats does not mean that they are accessible. Manpower is still needed to create these artifacts or to extract the required information from them.

The challenge for modern PLM is to establish new best practices around a model-based approach for systems engineering (MBSE), for engineering to manufacturing (MBD/MBE), and for operations (Digital Twins). Ultimately, all these best practices should be generic and connected. I wrote about these topics in the past; have a look at:

PLM vendors are showing pieces of the puzzle, but it is up to the implementers to assemble the puzzle without knowing in detail what the end result will be. This is the same journey Columbus made. He had a boat and a course towards the unknown. He discovered a country with a small population, nowadays a country full of immigrants who call themselves natives.

However, the result was an impressive transformation.

Reading about transformation

Last year, I read several books to get more insight into what motivates us and how we can motivate people to change. In one way, it is disappointing to learn that we civilized human beings, most of the time, do not make rational decisions but act based on our prehistoric brain.

Thinking, Fast and Slow by Daniel Kahneman was one of the first books in that direction and is a must-read to understand our personal thinking and decision processes.

I read Idiot Brain: What Your Head Is Really Up To by Dean Burnett, in which he explains how our brain appears to be sabotaging our life, and what on earth it is really up to. Interesting to read, but it could be a little more comprehensive.

I got more excited by Dan Ariely’s book Predictably Irrational: The Hidden Forces That Shape Our Decisions, as it is structured around topics where we behave completely irrationally but predictably. And this predictability is used by people (sales/politicians/management) to drive your actions. It is useful to know, so that you recognize the situation.

These three books also illustrate the flaws of our modern time: we communicate fast (preferably through tweets), and we decide fast based on our gut feelings, so you realize what kind of world we are heading towards. Going through a transformation should be considered a slow learning process. Like reading a book, it takes time to digest.

Once you are aiming at a business transformation for your company or supporting a company in its transformation, the following books were insightful:

Leading Digital: Turning Technology into Business Transformation by George Westerman, Didier Bonnet, and Andrew McAfee is maybe not the most inspiring book; however, as it stays close to what we experience in our day-to-day life, it is certainly a book to read to get a foundational understanding of business transformation.

The book I liked most recently was Leading Transformation: How to Take Charge of Your Company’s Future by Nathan Furr, Kyle Nel, and Thomas Zoega Ramsoy, as it gives examples of transformation that address parts of the irrational brain to build a transformation story. I believe in storytelling instead of business cases for transformation. I wrote about it in my blog post PLM Measurable or a myth, referring to Yuval Harari’s book Homo Sapiens.

Note: I am starting my holidays now with a small basket of e-books. If you have any recommendations for books that I must read, please write them in the comments of this blog.

Discussing transformation

After the summer holidays, I plan to have fruitful discussions around topics close to PLM. I am working on a post and starting a conversation related to PLM, PIM, and Master Data Management. The borders between these domains are perhaps becoming more blurred in a digital enterprise.

Further, I am looking forward to a discussion around the value of PLM assisting companies in developing sustainable products. A sustainable and probably circular economy is required to keep this earth a place to live for everybody. The whole discussion around climate change, however, is worrying as we should be Thinking – not fast and slow – but balanced.

A circular economy has been a topic several times during the joint CIMdata PLM Roadmap and PDT conferences, which brings me to the final point.

On 13th and 14th November this year, I will again participate in the upcoming PLM Roadmap and PDT conference, this time in La Défense, Paris, France. I will share my experiences from working with companies trying to understand and implement pieces of a digital transformation related to PLM.

There will be inspiring presentations from other speakers, all working on aspects of moving towards a connected enterprise. It is not a marketing event; it is run by professionals, serving professionals. Therefore, if you are passionate about the new aspects of PLM, no matter how you label them, I hope you will come and join, discuss, and, most of all, learn.

Conclusion

Modern life is about continuous learning – make it a habit. Even a holiday is again a way to learn to disconnect.

How disconnected I was you will see after the holidays.

This is the moment of the year to switch off from the details. No more talking and writing about digital transformation or model-based approaches. It is time to sit back and relax. Two years ago, I shared the PLM Songbook; now it is time to see one or more movies. Here are my favorite top five PLM movies:

Bruce Almighty

Bruce Nolan, an engineer in Buffalo, N.Y., is discontented with almost everything in the company despite his popularity and the love of his draftswoman Grace. At the end of the worst day of his life, Bruce angrily ridicules and rages against PLM, and PLM responds. PLM appears in human form and, endowing Bruce with divine powers of collaboration, challenges Bruce to take on the big job to see if he can do it any better.

A movie that makes you humble and makes you realize there is more than your small ecosystem.

The good, the bad and the ugly

Blondie (The Good PLM consultant) is a professional who is out trying to earn a few dollars. Angel Eyes (The Bad PLM Vendor) is a PLM salesman who always commits to a task and sees it through, as long as he is paid to do so. And Tuco (The Ugly PLM Implementer) is a wanted outlaw trying to take care of his own hide. Tuco and Blondie share a partnership together making money off Tuco’s bounty, but when Blondie unties the partnership, Tuco tries to hunt down Blondie. When Blondie and Tuco come across a PLM implementation loaded with dead bodies, they soon learn from the only survivor (Bill Carson – the PLM admin) that he and a few other men have buried a stash of value on a file server. Unfortunately, Carson dies, and Tuco only finds out the name of the file server, while Blondie finds out the name on the hard disk. Now the two must keep each other alive in order to find the value. Angel Eyes (who had been looking for Bill Carson) discovers that Tuco and Blondie met with Carson and knows they know the location of the value. All he needs is for the two to ..

A movie that makes you realize that it is a challenging journey to find the value out of PLM. It is not only about execution – but it is also about all the politics of people involved – and there are good, bad and ugly people on a PLM journey.

The Grump

The Grump is a draftsman in Finland from the past. A man who knows that everything used to be so much better in the old days. Pretty much everything that’s been done after 1953 has always managed to ruin The Grump’s day. Our story unfolds when The Grump opens a 3D model on his computer, hurting his brain. He has to spend a weekend in Helsinki to attend model-based therapy. Then the drama unfolds …

A movie that makes you realize that progress and innovation do not come from grumps. In every environment where you want to change the status quo, grumps will appear. With its exciting Finnish atmosphere, a perfect film for Christmas.

Deliverance

The Cahulawassee River Valley company in Northern Georgia is one of the last analog companies in the state, which will soon change with the imminent implementation of a PLM system in the company, breaking down silos everywhere. As such, four Atlanta city slickers, alpha male Lewis Medlock, generally even-keeled Ed Gentry, slightly condescending Bobby Trippe, and wide-eyed Drew Ballinger decide to implement PLM in one trip, with only Lewis and Ed having experience in CAD. They know going in that the area is ethnoculturally homogeneous and isolated, but don’t understand the full extent of such until they arrive and see what they believe is the result of generations of inbreeding. Their relatively peaceful trip takes a turn for the worse when halfway through they encounter a couple of hillbilly moonshiners. That encounter not only makes the four battle their way out of the PLM project intact and alive but threatens the relationships of the four as they do.

This movie, from 1972, makes you realize that, in the early days of PLM, starting a big-bang implementation journey into an area that is not ready for it can be deadly for your career and friendships. Not suitable for small children!

Diamonds Are Forever or Tron (legacy)

James Bond’s mission is to find out who has been drawing diamonds, which are appearing on blogs. He adopts another identity in the form of Don Farr. He joins up with CIMdata and acts as if he is developing diamonds, but everyone is hungry for these diamonds. He also has to avoid Mr. Brouwer and Mr. Kidd, the dangerous couple who do not leave anyone in their way when it comes to model-based. And Ernst Stavro Blofeld isn’t out of the question. He may have changed his looks, but is he linked with the V-shape? And if he is, can Bond finally defeat his ultimate enemy?

Sam Flynn, the tech-savvy 27-year-old son of Kevin Flynn, looks into his father’s disappearance and finds himself pulled into the same world of virtual twins and augmented reality where his father has been living for 20 years. Along with Kevin’s loyal confidant Quorra, father and son embark on a life-and-death journey across a visually stunning cyber universe that has become far more advanced and exceedingly dangerous. Meanwhile, the malevolent program IoT, which dominates the digital world, plans to invade the real world and will stop at nothing to prevent their escape.

I could not decide about number five. The future is bright with Boeing’s new representation of Systems Engineering, see my post on CIMdata’s PLM Europe roadmap event where Don Farr presented his diamond(s). However, the future is also becoming a mix of real with virtual and here Tron (legacy) will help my readers to understand the beauty of a mixed virtual and real world. You can decide – or send me your favorite PLM movies.

Note: All movie reviews are based on IMDb.com storylines, and I thank the authors of these storylines for their contribution and hope they agree with the PLM-related twist. Click on the image to find the full details and original review.

Conclusion

2018 has been an exciting year, with a lot of buzzwords combined with the reality that the current PLM approach is incompatible with the future. Let us see how we can address this issue further in 2019, first at PI PLMx 2019 in London (be there: it is the last chance to meet people from the UK while they are still Europeans, and to share and discuss plans for the upcoming year).

Wishing you all the best during the break and a happy and prosperous 2019.

Ontology example: description of the business entities and their relationships

In my recent posts, I have talked a lot about the model-based enterprise, and already after my first post, Model-Based – an introduction, I received a lot of feedback in which most of the audience automatically associated the words Model-Based with a 3D CAD model.

My attempt to clarify this in my post Why Model-Based – the 3D CAD Model stirred up the discussion even more, leading to Model-Based: The confusion.

A Digital Twin of the Organization

At that time, I briefly touched on business models and business processes, which also need to be reshaped and built for a digital enterprise. Business modeling is necessary if you want to understand and streamline large enterprises, where nobody can oversee the whole company. This approach is like systems engineering, where we try to understand and simulate complex systems.

With this post, I want to close the Model-Based series and focus on the aspects of the business model. I was struck by this catchy article: How would you like a digital twin of your organization?, which provides a nice introduction to this theme. Also, I met with Steve Dunnico, creator and co-founder of Clearvision, a Swedish startup company focusing on modern ways of business modeling.

Introduction

Jos (VirtualDutchman): Steve, can you give us an introduction to your company and which parts of the model-based enterprise you are addressing with Clearvision?

Steve (Clearvision): Clearvision started as a concept over two decades ago – modeling complex situations across multiple domains needed a simplistic approach to create a copy of the complete ecosystem. Along the way, technology advancements have opened up big-data to everyone, and now we have Clearvision as a modeling tool/SaaS that creates a digital business ecosystem that enables better visibility to deliver transformation.

As we all know, change is constant, so we must transition from the old silo projects and programs to a business world of continuous monitoring and transformation.

Clearvision enables this by connecting the disparate parts of an organization into a model linking people, competence, technology services, data flow, organization, and processes.

Complex inter-dependencies can be visualized, showing impact and opportunity to deliver corporate transformation goals in measured minimum viable transformation – many small changes, with measurable benefit, delivered frequently. This is what Clearvision enables!

Jos: What is your definition of business modeling?

Steve: Historically, business modeling has long been the domain of financial experts – taking the “business model” of the company (such as production, sales, support), looking at cost, profit, and margins for opportunity, and remodeling to suit. Now, with the availability of increased digital data about many dimensions of a business, it is possible to model more than the financials.

This is the business modeling that we (Clearvision) work with – connecting all the entities that define a business so that a change is connected to process, people, data, technology and other dimensions such as cost, time, quality. So if we change a part, all of the connected parts are checked for impact and benefit.

Jos: What are the benefits of business modeling?

Steve: Connecting the disparate entities of a business opens up limitless opportunities to analyze “what is affected if I change this?”. This can be applied to simple static “as-is” gap analyses, as well as to the more advanced studies needed to forecast the future and move into predictive rather than reactive planning.
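
The “what is affected if I change this?” question maps naturally onto a traversal of a dependency graph. The sketch below is a plain breadth-first search over a hypothetical model of technology, data, process, and people entities; it is not Clearvision's implementation, whose internals are not described here, and a real model would also carry dimensions such as cost, time, and quality on each entity.

```python
from collections import deque

# Hypothetical business-ecosystem model: entity -> entities that depend on it.
DEPENDENTS = {
    "tech:PLM-system": ["data:EBOM", "process:change-management"],
    "data:EBOM": ["process:MBOM-creation", "people:manufacturing-engineers"],
    "process:change-management": ["people:change-board"],
    "process:MBOM-creation": ["data:MBOM"],
}


def impacted_by(change: str) -> dict[str, int]:
    """Breadth-first search: every entity reachable from the change, with its distance in hops."""
    distance = {change: 0}
    queue = deque([change])
    while queue:
        current = queue.popleft()
        for dependent in DEPENDENTS.get(current, []):
            if dependent not in distance:  # visit each entity once, even if the model has cycles
                distance[dependent] = distance[current] + 1
                queue.append(dependent)
    del distance[change]  # the changed entity itself is not an "impact"
    return distance


print(impacted_by("data:EBOM"))
```

The hop count gives a crude first ordering of where to look: direct dependents first, indirect ones later.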

The benefits of using a digital model of the business ecosystem are applicable to the whole organization. The “C-suite” team not only gets to see heat maps for technology-project deliveries but can also use workforce-culture maps to assess the company’s understanding and adoption of new ways of working and its achievement of strategic goals. At an operational level, meanwhile, teams can collaborate more effectively, knowing which parts of the ecosystem help or hinder their deliveries and vice versa.

Jos: Is business modeling applicable for any type or size of the company?

The complexity of business has driven us to silo our way of working, simplifying tasks to achieve our own goals, and it is larger organizations that can benefit from modeling their business ecosystems. On that basis, it is unlikely that a standalone small business would engage in its own digital ecosystem model. However, when a small business is a supplier to a larger organization, it can be beneficial for that larger organization to model its smaller suppliers to ensure a holistic view of its ecosystem.

The complexity of business has driven us to silo our way of working, to simplify tasks to achieve our own goals, and it is larger organizations which can benefit from modeling their business ecosystems. On that basis, it is unlikely that a standalone small business would engage in its own digital ecosystem model. However, as a supplier to a larger organization, it can be beneficial for the larger organizations to model their smaller suppliers to ensure a holistic view of their ecosystem.

The core digital business ecosystem model delivers integrated views of dependencies, clashes and hot-spots to support transformation

Jos: How is business modeling related to digital transformation?

Digital transformation is an often-heard topic in large corporations. By implication, we should take advantage of the digital data we generate and collect in our businesses and connect it, so that we benefit from the whole rather than working in silos. Therefore, a digital model of a business ecosystem helps identify the areas of connectivity and collaboration that can deliver the best benefit, through Minimum Viable Transformation rather than a multi-year program with a big-bang output (which sometimes misses its goals…).

Today’s digital technology brings new capabilities to businesses and is driving competence changes in organizations and their partner companies. So another use of business modeling is to map the competences of internal and external resources to the capabilities needed for digital transformation. Mapping competences rather than roles gives a better fit of resources to support transformation. Understanding which competences we have and where the gaps are is a prerequisite to planning and delivering transformation.
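In its simplest form, mapping competences rather than roles comes down to comparing sets. The sketch below assumes hypothetical competence names and resource labels (`NEEDED`, `AVAILABLE`); it lists which resources, internal or external, cover each needed capability and which capabilities remain a gap.

```python
# Hypothetical competence data; names and resource labels are illustrative only.
NEEDED: set[str] = {"systems engineering", "data analytics", "cloud integration", "change management"}

AVAILABLE: dict[str, set[str]] = {
    "internal:engineering": {"systems engineering", "cad modeling"},
    "internal:it": {"cloud integration"},
    "partner:consultancy": {"change management"},
}


def coverage(needed: set[str], available: dict[str, set[str]]) -> dict[str, list[str]]:
    """Map each needed competence to the resources (internal or external) that hold it."""
    return {
        comp: sorted(res for res, comps in available.items() if comp in comps)
        for comp in sorted(needed)
    }


def gaps(needed: set[str], available: dict[str, set[str]]) -> set[str]:
    """Needed competences that no resource currently covers."""
    covered = set().union(*available.values())
    return needed - covered


if __name__ == "__main__":
    for comp, holders in coverage(NEEDED, AVAILABLE).items():
        print(f"{comp}: {holders or 'GAP'}")
    print("gaps:", gaps(NEEDED, AVAILABLE))  # gaps: {'data analytics'}
```

Because resources are keyed by what they can do rather than by job title, a partner company's competence counts just as much as an internal one when closing a gap.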

Jos: Then perhaps close with your Clearvision mission: where do you fit (uniquely)?

Having worked on early digital business ecosystem models in the late 1990s, we cut our teeth on slow processing times, data relationships that were difficult to change and poor access to data, combined with a very siloed work mentality. Clearvision is now positioned to help organizations realize that the value of their business as a whole is greater than the sum of its parts (silos), by enabling a holistic view of their business ecosystem that can be used to deliver measured transformation on a continual basis.

Jos: Thanks, Steve, for your contribution, which completes this series of posts on the model-based enterprise and its various facets. I am aware this post presents the opinion of one company on the importance of a model-based business in general. There is no commercial relationship between the two of us, and I recommend you explore this topic further if it is relevant to your situation.

Conclusion

Companies and their products are becoming more and more complex; much of this is happening now, and a lot more will happen in the near future. To understand and manage this complexity, models are needed to virtually define and analyze the real world without the high costs of building prototypes or making changes in the real world. This applies to organizations, to systems, to engineering and manufacturing coordination and, finally, to systems operating in the field. They can all be described by connected models. This is the future of a model-based enterprise.

Coming up next time: CIMdata PLM Roadmap Europe and PDT Europe. You can still register and meet a large group of people who care about the detailed aspects of a digital enterprise.

What I want to discuss this time is the challenging transformation related to product data that needs to take place.

The top image of this post illustrates the current PLM world on the left and, on the right, the potential future positioning of PLM in a digital enterprise. How the right side will behave is still vague – it can be a collection of platforms or a vast collection of small services, all contributing to the performance of the company. Some vendors might dream that all these capabilities are defined in one system of systems, like the human body, where all functions are available and connected.

Coordinated or connected?

This is THE big question for a future digital enterprise. In the current PLM approach, there are governance structures that allow people to share data along the product lifecycle in a structured way.

These governance structures can be project breakdown structures, where a phase-gate approach guides the full delivery. Deliverables related to tasks and gates make sure information is stored and available for every stakeholder. For example, a well-known process in the automotive industry, the Advanced Product Quality Planning (APQP) process, is a standardized approach to make sure parts or products are introduced with the right quality for the customer.

Deliverables at any stage in the process can be reviewed or consumed by another stakeholder. The result is usually a collection of approved documents (Office-type, design and test files) stored centrally. This is what I would call a coordinated data approach.

In complex environments, besides the project governance, there will be product structures and Bills of Materials, where each object in such a structure is the placeholder for related information. In the case of a product structure, this can be the specifications per component; in the case of a Bill of Materials, it can be the design specification (usually in CAD models) and, for an MBOM, the manufacturing specifications.

Although these structures themselves contain information about the product composition, it is the related information that makes the content understandable and realizable.

Again, it is a coordinated approach, and most PLM systems and implementations focus on providing these structures.

Sometimes this is done with the vendor's own system only, and you need to follow the vendor portfolio to get the full benefit; sometimes the system is positioned as an overlay to existing systems in the company and is therefore less invasive.

Providing a single version of the truth is often associated with this approach. The question is: Is the green bin on the left the single version of the truth?

The Coordinated – Single Version of the Truth – problem

The challenge of a coordinated approach is that there is no thorough, consistent check of whether the delivered data represents the real truth. Through serious review procedures, we do our best to make sure every deliverable has the required content and quality. As the information inside these deliverables is not connected to the outside world, there will be discrepancies between reality and what has been stored. Still, as an organization we feel comfortable enough to pretend we know where the risks are. Until the costly impossible happens!

The connected enterprise

The ultimate dream of a digital enterprise is that everything relevant is connected in context. This means no more documents or files but a very granular information model for linking data and keeping it in context. We can apply algorithms and automation to connected data and use Artificial Intelligence to make sense of massive amounts of data.

Connected data allows us to share combined sets of information that are relevant to a particular role. Real-time dashboarding is one of the benefits of such an infrastructure. There are still a lot of challenges with this approach. How do we know which information is valid in the context of other information? What are the rules that describe a valid product or project baseline at a particular time?

As all data is stored as unique information objects in a network of information, we cannot always apply the old mechanisms of a coordinated approach. Still, reports generated from a connected environment can serve as baselines or records related to a specific state; for example, when the design is approved for manufacturing, we can generate approved Product Baseline structures or Bill of Materials structures.

However, this linear passing of information through the enterprise along the lifecycle will no longer exist. There might be various design alternatives, and the delivery process might already be part of the design phase. Through integrated virtual simulation and testing, we reach a state where the product satisfies the market at that moment, and the delivery process is known at the same time.

Almost immediately and based on first experiences in the field, new features can be added virtually, tested and validated for the next stage. We need to design new PLM infrastructures that can support this granularity and, therefore, complexity.
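To make the idea of a generated baseline more concrete, here is a minimal sketch of one possible rule for “a valid product baseline at a particular time”: for each information object, take the latest revision approved on or before a given date. The `Version` record, the state names and the rule itself are assumptions for illustration; real PLM systems define baselines with much richer effectivity and configuration rules.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Version:
    """Hypothetical revision of a connected information object."""
    object_id: str
    revision: str
    state: str                         # illustrative states: "in_work", "approved"
    approved_on: Optional[date] = None


def baseline(versions: list[Version], as_of: date) -> dict[str, str]:
    """For each object, return the latest revision approved on or before `as_of`."""
    latest: dict[str, Version] = {}
    for v in versions:
        # Skip work in progress and anything approved after the baseline date.
        if v.state != "approved" or v.approved_on is None or v.approved_on > as_of:
            continue
        current = latest.get(v.object_id)
        if current is None or v.approved_on > current.approved_on:
            latest[v.object_id] = v
    return {oid: v.revision for oid, v in sorted(latest.items())}


VERSIONS = [
    Version("part:housing", "A", "approved", date(2018, 3, 1)),
    Version("part:housing", "B", "approved", date(2018, 9, 15)),
    Version("part:cover", "A", "approved", date(2018, 5, 1)),
    Version("part:cover", "B", "in_work"),
    Version("part:seal", "A", "approved", date(2018, 10, 1)),
]

if __name__ == "__main__":
    print(baseline(VERSIONS, date(2018, 6, 30)))   # {'part:cover': 'A', 'part:housing': 'A'}
    print(baseline(VERSIONS, date(2018, 12, 31)))  # {'part:cover': 'A', 'part:housing': 'B', 'part:seal': 'A'}
```

In this sketch the baseline is derived on demand from the connected versions rather than stored as a separate deliverable, so any date can be queried; the hard part the post points to, agreeing on the rules themselves, is exactly what the single `if` condition glosses over.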

The connected – Single Version of the Truth – problem

The concepts I described for the connected enterprise made me realize that this is analogous to how the brain works. Our brain is a giant network of connected information, dynamically maintaining associations, having different abstraction levels and always pretending there is one truth.

If you want to understand a potential model of the brain, please read On Intelligence by Jeff Hawkins. With the possible arrival of the quantum computer, we might be able to create performant brain models.

In my earlier post, “Are we blocking our future”, I referred to the book The Idiot Brain: What Your Head Is Really Up To by Dean Burnett, where Dean states that, due to the complexity of stored information, our brain continuously adapts “non-compliant” information to make sure the owner of the brain feels comfortable.

What we think is the truth might just be a creation of the brain, combining the positive parts into a compelling story and suppressing or deleting information that does not fit. Although it sounds absurd, I believe that if we manage to create a connected digital enterprise, we will face the same symptoms. Due to the complexity of connected information, we will look for the most suitable version, and as everything has become so complex, ordinary human beings will no longer be able to tell the difference.

Conclusion:

As part of the preparation for the upcoming PDT Europe 2018, I was investigating the topics of coordinated and connected enterprises to discover potential transformation steps. We all need to explore the future with an open mind, and the challenge is: WHERE and HOW FAST can we transform from coordinated to connected? I am curious if you have experiences or thoughts on this topic.

December is the last month when daylight is getting shorter in the Netherlands, and with the end of the year approaching, this is the time to reflect on 2025.

It was already clear that AI-generated content was going to drown the blogging space. The result: original content became less and less visible, and a self-reinforcing stream of generic messages further reduced the excitement. Therefore, if you are still interested in content that has not been generated with AI, I recommend subscribing to my blog and interacting directly with me through the comments, either on LinkedIn or via a direct message.