Over the last month, I have been actively engaged in the field; however, unfortunately, I have not been able to respond to all the interesting and sometimes humorous posts in my LinkedIn stream.

The fun started with a post from Oleg referring to a so-called BOM battle presented at Autodesk University by Gus Quade.

The image seems fake; however, the muscle power behind the BOM players looks real.

Prof. Dr. Jörg Fischer, also pictured, is advocating for rethinking PLM and BOM structures, and I share his discomfort.

Prof. Fischer wrote recently: “Forget everything you know about EBOM and MBOM. CTO+ is rewriting the rules of PLM.”

I am not a CTO expert, but I can grasp the underlying concepts and understand why it is closely associated with SAP. It aligns with the ultimate goal of maintaining a continuous flow of information throughout the company, with ERP (SAP?) at its core.

My question is, how far are we from that option?

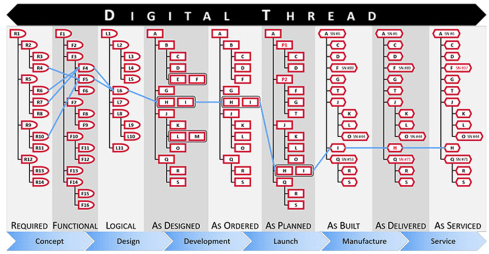

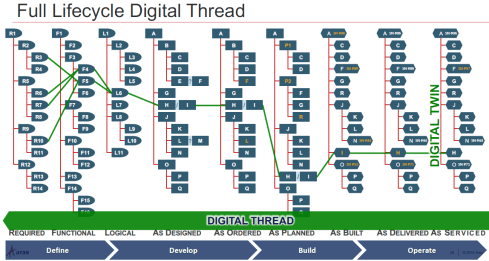

Current PLM implementations often focus on a linear process and data collection from left to right, as illustrated in the old Aras image below. I call this the coordinated approach.

During the recent Dutch PLM platform meeting, we also discussed the potential need for an eBOM, mBOM, and potentially the sBOM. A topic many mid-sized manufacturing companies have not mastered or implemented yet – illustrating the friction in current businesses.

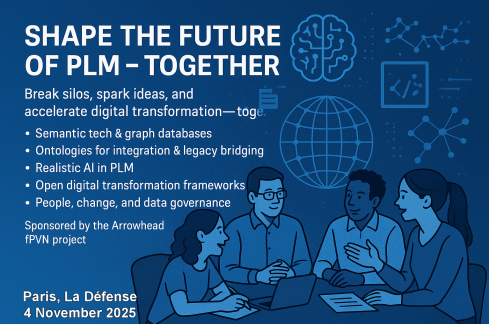

Meanwhile, we discuss agentic AI, the need for data quality, ontologies and graph databases. Take a look at the upcoming workshop on the Future of PLM, scheduled for November 4th in Paris, which serves as a precursor to the PLM Roadmap/PDT Europe 2025 conference on November 5th and 6th.

The reality in the field and future capabilities seem to be so far apart, which made me think about what the next step is after BOM management to move towards the future.

The evolution of the BOM

For those active in PLM, this brief theory ensures we share a common understanding of BOMs.

Level 0: In the beginning, there was THE BOM.

Initially, the Bill of Materials (BOM) existed only in ERP systems to support manufacturing. Together with the Bill of Process (BOP), it formed the heart of production execution. Without a BOM in ERP, product delivery would fail.

Level 1: Then came a new BOM from CAD.

With the rise of PDM systems and 3D CAD, another BOM emerged — reflecting the product’s design structure, including assemblies and parts. Often referred to as the CAD or engineering BOM, it frequently contained manufacturing details, such as supplier parts or consumables like paint and glue.

This hybrid BOM bridged engineering and manufacturing, linking CAD/PDM with ERP. Many machine manufacturers adopted this model, as each project was customer-specific and often involved reusing data by copying similar projects.

Many industrial manufacturers still use this linear approach to deliver solutions to their customers.

Level 2: The real eBOM and mBOM arrived.

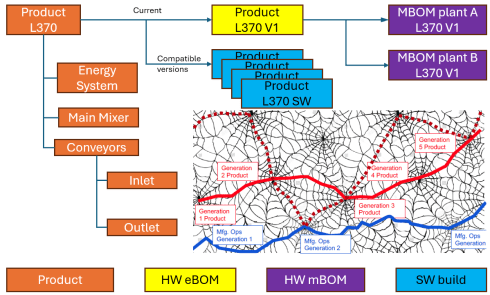

Later, companies began distinguishing between the engineering BOM (eBOM) and manufacturing BOM (mBOM), especially as engineering became centralized and manufacturing decentralized.

The eBOM represented the stable engineering definition, while the mBOM was derived locally, adapting parts to specific suppliers or production needs.
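
The derivation of a local mBOM from a central eBOM can be pictured with a minimal Python sketch. All part numbers, site names, and the `derive_mbom` helper are hypothetical and only serve the illustration; a real implementation would live in PLM/ERP and handle restructuring, effectivity, and change processes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BomLine:
    part_number: str
    description: str
    quantity: float


# Engineering BOM: the stable, site-independent definition (hypothetical data).
EBOM = [
    BomLine("E-100", "Frame, welded", 1),
    BomLine("E-200", "Motor, 400 V", 2),
    BomLine("E-300", "Cover plate", 4),
]

# Assumed per-site substitution rules: engineering part -> locally sourced part.
SITE_SUBSTITUTIONS = {
    "plant_nl": {"E-200": "SUP-A-7731"},
    "plant_us": {"E-200": "SUP-B-0042", "E-300": "SUP-B-0913"},
}

# Assumed per-site additions that engineering does not model (consumables).
SITE_CONSUMABLES = {
    "plant_nl": [BomLine("C-PAINT-01", "Paint, RAL 7035 (litre)", 0.5)],
    "plant_us": [],
}


def derive_mbom(ebom, site):
    """Return a site-specific mBOM as (mbom_line, source_ebom_part) pairs."""
    substitutions = SITE_SUBSTITUTIONS.get(site, {})
    mbom = []
    for line in ebom:
        # Use the local supplier part when the site defines one.
        local_part = substitutions.get(line.part_number, line.part_number)
        mbom.append(
            (BomLine(local_part, line.description, line.quantity), line.part_number)
        )
    for consumable in SITE_CONSUMABLES.get(site, []):
        mbom.append((consumable, None))  # consumables have no eBOM origin
    return mbom


for mbom_line, source in derive_mbom(EBOM, "plant_nl"):
    print(mbom_line.part_number, "<-", source)
```

Keeping the source eBOM part next to each mBOM line is a deliberate choice in this sketch: it is what allows an engineering change to be traced to every site that consumes the part.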

At the same time, many organizations aimed to evolve toward a Configure-to-Order (CTO) business model — a long-term aspiration in aligning engineering and manufacturing flexibility, as noted by Prof. Jörg Fischer in his CTO+ concept.

A side step: The impact of modularity

Shifting from Engineer-to-Order (ETO) to Configure-to-Order (CTO) relies on adopting a modular product architecture. Modularity enables specific modules to remain stable while others evolve in response to ongoing innovation.

It’s not just about creating a 200% eBOM or 150% mBOM but about defining modules with their own lifecycles that may span multiple product platforms. Many companies still struggle to apply these principles, as seen in discussions within the North European Modularity (NEM) network.

See one of my reports: The week after the North European Modularity network meeting.

We remain here primarily in the xBOM mindset: the eBOM defines engineering specifications, while the mBOM defines the physical realization—specific to suppliers or production sites.

Level 3: Extending to the sBOM?

To support service operations, the service BOM (sBOM) is introduced, managing serviceable parts and kits linked to the product. Managing service information in a connected manner adds complexity but also significant value, as the best margins often come from after-sales service.

Click on the image above to understand the relations between the eBOM, mBOM(s) and sBOM.

However, is the sBOM the real solution or only a theme pushed by BOM/PLM vendors to keep everything within their system? So far, this represents a linear hardware delivery model, with BOM structures tied to local ERP systems.

For most hardware manufacturers, the story ends here—but when software and product updates become part of the service, the lifecycle story continues.

The next levels: Software and Product Services require more than a BOM

As I mentioned earlier, during the Dutch PLM platform discussion, we had an interesting debate that began with the question of how to manage and service a product during operation. Here, we reach a new level of PLM – not only delivering products as efficiently as possible, but also maintaining them in the field – often for many years.

There were two themes we discussed:

- The product gets physical updates and upgrades. How can we manage this with the sBOM, given the challenges with BOM versions or revisions (a legacy approach)?

- The product functions based on software-driven behavior, and the software can be updated on demand. How can we manage this with the sBOM (a different lifecycle)?

The conclusion and answer to these two questions were:

We cannot use the sBOM anymore for this; in both cases, you need an additional (infra)structure to keep track of changes over time. I call it the logical product structure or product architecture.

The Logical Product Structure

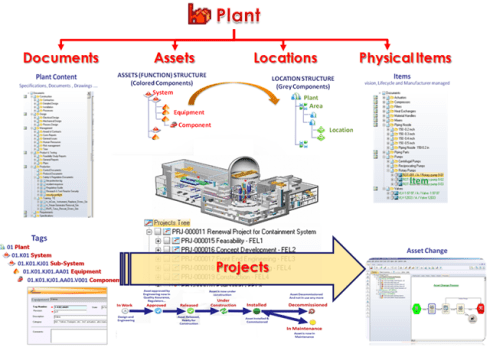

Since 2008, I have been involved in Asset Lifecycle Management projects, explaining the complementary value of PLM methodology and concepts related to an MRO environment, particularly for managing significant assets, such as nuclear plants.

Historically, the configuration management of a plant was a human effort undertaken by individuals with extensive intrinsic knowledge.

A nuclear plant is an asset with a very long lifecycle that requires regular upgrades and services, and where safety is the top priority. However, driven by digitization and an aging workforce, there was also a need to embed these practices within a digital infrastructure.

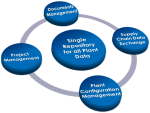

What I learned is that the logical product structure, also known as the plant breakdown structure (PBS), became an essential structure for combining the as-designed and as-operated structures of the plant.

In the SmarTeam image below, the plant breakdown structure was represented by the tag structure.

Coming back to our industrial products in service, it is conceptually a similar approach, albeit that the safety drivers and business margins might make it less urgent. For a product, there can also be a logical product structure that represents the logical components and their connections.

The logical structure of a product remains stable over time; however, specific modules or capabilities may be required, while the physical implementation (mBOM) and engineering definition (eBOM) may evolve over time.

Additionally, all relevant service activities, including issues and operational and maintenance data, can be linked to the logical structure. The logical structure is also the structure used for a digital twin representation.
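
To make the idea more tangible, here is a minimal Python sketch of a logical component that stays stable while its realizations change over time. The class names, the tag `P-101`, the part numbers, and the software releases are all hypothetical; in practice, this would be a web of connected datasets across PLM, ALM, and service systems rather than in-memory objects.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Realization:
    """One physical implementation of a logical component, valid from a date."""
    valid_from: date
    ebom_item: str      # engineering definition (part number + revision)
    mbom_item: str      # physical part actually installed
    software: str = ""  # software release managed in ALM, if any


@dataclass
class LogicalComponent:
    """A stable position in the product, comparable to a tag in a plant."""
    tag: str
    function: str
    realizations: list = field(default_factory=list)
    service_events: list = field(default_factory=list)

    def as_of(self, when):
        """Return the realization in effect on the given date, or None."""
        candidates = [r for r in self.realizations if r.valid_from <= when]
        return max(candidates, key=lambda r: r.valid_from, default=None)


# The logical component does not change; its realizations do (hypothetical data).
pump = LogicalComponent(tag="P-101", function="Coolant circulation")
pump.realizations.append(
    Realization(date(2020, 3, 1), "E-200 rev A", "SUP-A-7731", "ctrl 1.4")
)
pump.realizations.append(
    Realization(date(2023, 9, 15), "E-200 rev C", "SUP-B-0042", "ctrl 2.0")
)
pump.service_events.append((date(2022, 6, 2), "Bearing replaced"))

current = pump.as_of(date(2024, 1, 1))
print(pump.tag, current.mbom_item, current.software)
```

Because service events and realizations hang off the same tag, a question such as "what was installed when this issue occurred?" becomes a lookup by date instead of a comparison of BOM revisions.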

The logical product structure and software

The logical product structure is also where hardware and software meet. The software can be managed in an ALM environment and provides traceability to the product in service through the product structure.

Note: this is a very simplified version. As you can imagine, in reality it looks more like a web of connected datasets. The top level shows the traceability between the various artifacts, both HW and SW.

Where is the product structure defined?

The product structure originates from a system architect, and where it is defined depends on the tools they use: historically in a document, later in an Excel file (the coordinated approach).

In a modern data-driven environment, you can find the product structure in an MBSE environment and then connect to a PLM system – the federated and connected approach.

There are also PLM vendors that have the main MBSE data elements in their core data model, reducing the need for building connectivity between the main PLM and MBSE elements. In my experience, the “all-in-one” solutions still underperform in usability and completeness.

Conclusion

I wrote this post to raise awareness that a narrow focus on BOM structures can create a potential risk for the future. Changing business models, for example, the product-service system, require a data-driven infrastructure where both hardware and software artifacts need to be managed in context. Probably not in a single system but supported by a federated infrastructure with a mix of technologies. And I feel sorry that I could not write about a model-based enterprise at this time!

I am looking forward to discussing the future of PLM with a select group of thought leaders on November 4th in Paris, as a precursor to the upcoming PLM Roadmap/PDT Europe conference. For the workshop on November 4th, we have almost reached the maximum size we can accommodate, but for the conference, there is still the option to join us.

Please review the agenda and join us for engaging and educational discussions if you can.

And if you are not tired of discussing PLM as a term, a system or a strategy – watch the recording of this unique collection of PLM voices moderated by Michael Finocchiaro.

In my first discussion with Rob Ferrone, the original Product Data PLuMber, we discussed the necessary foundation for implementing a Digital Thread or leveraging AI capabilities beyond the hype. This is important because all these concepts require data quality and data governance as essential elements.

If you missed part 1, here is the link: Data Quality and Data Governance – A hype?

Rob, did you receive any feedback related to part 1? I spoke with a company that emphasized the importance of data quality; however, they were more interested in applying plasters, as they consider a broader approach too disruptive to their current business. Do you see similar situations?

Honestly, not much feedback. Data Governance isn’t as sexy or exciting as discussions on Designing, Engineering, Manufacturing, or PLM Technology. HOWEVER, as the saying goes, all roads lead to Rome, and all Digital Engineering discussions ultimately lead to data.

Cristina Jimenez Pavo’s comment illustrates that the question is in the air:

Everyone knows that it should be better; high-performing businesses have good data governance, but most people don’t know how to systematically and sustainably improve their data quality. It’s hard and not glamorous (for most), so people tend to focus on buying new systems, which they believe will magically resolve their underlying issues.

Data governance as a strategy

Thanks for the clarification. I imagine it is similar to Configuration Management, i.e., with different needs per industry. I have seen ISO 8000 in the aerospace industry, but it has not spread further to other businesses. What about data governance as a strategy, similar to CM?

That’s a great idea. Do you mind if I steal it?

If you ask any PLM or ERP vendor, they’ll claim to have a master product data governance template for every industry. While the core principles—ownership, control, quality, traceability, and change management, as in Configuration Management—are consistent, their application must vary based on the industry context, data types, and business priorities.

Designing effective data governance involves tailoring foundational elements, including data stewardship, standards, lineage, metadata, glossaries, and quality rules. These elements must reflect the realities of operations, striking a balance between trade-offs such as speed versus rigor or openness versus control.

The challenge is that both configuration management (CM) and data governance often suffer from a perception problem, being viewed as abstract or compliance-heavy. In truth, they must be practical, embedded in daily workflows, and treated as dynamic systems central to business operations, rather than static documents.

Think of it like the difference between stepping on a scale versus using a smartwatch that tracks your weight, heart rate, and activity, schedules workouts, suggests meals, and aligns with your goals.

Governance should function the same way: responsive, integrated, and outcome-driven.

Who is responsible for data quality?

I have seen companies simplifying data quality as an enhancement step for everyone in the organization, like a “You have to be more accurate” message, similar perhaps to configuration management. Here we touch people and organizational change. How do you make improving data quality happen beyond the wish?

In most companies, managing product data is a responsibility shared among all employees. But increasingly complex systems and processes are not designed around people, making the work challenging, unpleasant, and often poorly executed.

I like to quote Larry English – The Father of Information Quality:

“Information producers will create information only to the quality level for which they are trained, measured and held accountable.”

A common reaction is to add data “police” or transactional administrators, who unintentionally create more noise or burden those generating the data.

The real solution lies in embedding capable, proactive individuals throughout the product lifecycle who care about data quality as much as others care about the product itself. This was the topic I discussed at the 2025 Share PLM summit in Jerez (Rob Ferrone – Bill O-Materials), also presented in part 1 of our discussion.

These data professionals collaborate closely with designers, engineers, procurement, manufacturing, supply chain, maintenance, and repair teams. They take ownership of data quality in systems, without relieving engineers of their responsibility for the accuracy of source data.

Some data, like component weight, is best owned by engineers, while others—such as BoM structure—may be better managed by system specialists. The emphasis should be on giving data professionals precise requirements and the authority to deliver.

They not only understand what good data looks like in their domain but also appreciate the needs of adjacent teams. This results in improved data quality across the business, not just within silos. They also work with IT and process teams to manage system changes and lead continuous improvement efforts.

The real challenge is finding leaders with the vision and drive to implement this approach.

The costs or benefits associated with good or poor data quality

At the peak of interest in being data-driven, large consulting firms published numerous studies and analyses, proving that data-driven companies achieve better results than their data-averse competitors. Have you seen situations where the business case for improving “product data” quality has led to noticeable business benefits, and if so, in what range? Double digit, single digit?

Improving data quality in isolation delivers limited value. Data quality is a means to an end. To realise real benefits, you must not only know how to improve it, but also how to utilise high-quality data in conjunction with other levers to drive improved business outcomes.

I built a company whose premise was that good-quality product data flowing efficiently throughout the business delivered dividends due to improved business performance. We grew because we delivered results that outweighed our fees.

Last year’s turnover was €35M, so even with a conservatively estimated average in-year ROI of 3:1, the company delivered over €100M of cost savings or additional revenue per year to clients, with the majority of these benefits being sustainable.

There is also the potential to unlock new value and business models through data-driven innovation.

For example, connecting disparate product data sources into a unified view and taking steps to sustainably improve data quality enables faster, more accurate, and easier collaboration between OEMs, fleet operators, spare parts providers, workshops, and product users, which leads to a new value proposition around minimizing painful operational downtime.

AI and Data Quality

Currently, we are seeing numerous concepts emerge where AI, particularly AI agents, can be highly valuable for PLM. However, we also know that in legacy environments, the overall quality of data is poor. How do you envision AI supporting PLM processes, and where should you start? Or has it already started?

It’s like mining for rare elements—sifting through massive amounts of legacy data to find the diamonds. Is it worth the effort, especially when diamonds can now be manufactured? AI certainly makes the task faster and easier. Interestingly, Elon Musk recently announced plans to use AI to rewrite legacy data and create a new, high-quality knowledge base. This suggests a potential market for trusted, validated, and industry-specific legacy training data.

Will OEMs sell it as valuable IP, or will it be made open source like Tesla’s patents?

AI also offers enormous potential for data quality and governance. From live monitoring to proactive guidance, adopting this approach will become a much easier business strategy. One can imagine AI forming the core of a company’s Digital Thread—no longer requiring rigidly hardwired systems and data flows, but instead intelligently comparing team data and flagging misalignments.

That said, data alignment remains complex, as discrepancies can be valid depending on context.

A practical starting point?

Data Quality as a Service. My former company, Quick Release, is piloting an AI-enabled service focused on EBoM to MBoM alignment. It combines a data quality platform with expert knowledge, collecting metadata from PLM, ERP, MES, and other systems to map engineering data models.

Experts define quality rules (completeness, consistency, relationship integrity), and AI enables automated anomaly detection. Initially, humans triage issues, but over time, as trust in AI grows, more of the process can be automated. Eventually, no oversight may be needed; alerts could be sent directly to those empowered to act, whether human or AI.
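
As an illustration of what such expert-defined rules could look like, here is a minimal Python sketch covering the three rule types mentioned above. It is not the Quick Release service; the data layout, attribute names such as `source_ebom_part` and `weight_kg`, and the `check_alignment` function are assumptions made for this example.

```python
def check_alignment(ebom, mbom):
    """Return a list of (rule, part, message) findings for human triage."""
    findings = []

    # Completeness: every eBOM line needs the attributes downstream teams use.
    for part, attrs in ebom.items():
        for required in ("description", "quantity", "weight_kg"):
            if attrs.get(required) in (None, ""):
                findings.append(("completeness", part, f"missing {required}"))

    # Relationship integrity: each mBOM line must point at an existing eBOM
    # part, and each eBOM part must be consumed by at least one mBOM line.
    consumed = set()
    for part, attrs in mbom.items():
        source = attrs.get("source_ebom_part")
        if source is None:
            continue  # assumed valid: consumables without an eBOM origin
        if source not in ebom:
            findings.append(("relationship", part, f"unknown eBOM source {source!r}"))
        else:
            consumed.add(source)
    for part in ebom:
        if part not in consumed:
            findings.append(("relationship", part, "not consumed by any mBOM line"))

    # Consistency: quantities per eBOM part must add up across mBOM lines.
    totals = {}
    for attrs in mbom.values():
        source = attrs.get("source_ebom_part")
        if source in ebom:
            totals[source] = totals.get(source, 0) + attrs.get("quantity", 0)
    for part, total in totals.items():
        expected = ebom[part].get("quantity")
        if total != expected:
            findings.append(
                ("consistency", part, f"eBOM qty {expected} vs mBOM qty {total}")
            )
    return findings


# Hypothetical extract from PLM (eBOM) and ERP (mBOM).
ebom = {
    "E-100": {"description": "Frame, welded", "quantity": 1, "weight_kg": 42.0},
    "E-200": {"description": "Motor, 400 V", "quantity": 2, "weight_kg": None},
    "E-300": {"description": "Cover plate", "quantity": 4, "weight_kg": 0.8},
}
mbom = {
    "M-100": {"source_ebom_part": "E-100", "quantity": 1},
    "M-200": {"source_ebom_part": "E-200", "quantity": 1},
    "M-999": {"source_ebom_part": "E-900", "quantity": 1},
}

findings = check_alignment(ebom, mbom)
for rule, part, message in findings:
    print(f"[{rule}] {part}: {message}")
```

Deterministic rules like these would only be the baseline. The anomaly detection described here would sit on top of them, and, as noted above, a flagged discrepancy can still be valid in context, which is why the findings go to triage rather than being auto-corrected.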

Summary

We hope the discussions in parts 1 and 2 helped you understand where to begin. It doesn’t need to stay theoretical or feel unachievable.

- The first step is simple: recognise product data as an asset that powers performance, not just admin. Then treat it accordingly.

- You don’t need a 5-year roadmap or a board-approved strategy before you begin. Start by identifying the product data that supports your most critical workflows, the stuff that breaks things when it’s wrong or missing. Work out what “good enough” looks like for that data at each phase of the lifecycle. Then look around your business: who owns it, who touches it, and who cares when it fails?

- From there, establish the roles, rules, and routines that help this data improve over time, even if it’s manual and messy to begin with. Add tooling where it helps.

- Use quality KPIs that reflect the business, not the system. Focus your governance efforts where there’s friction, waste, or rework.

- And where are you already getting value? Lock it in. Scale what works.

Conclusion

It’s not about perfection or policies; it’s about momentum and value. Data quality is a lever. Data governance is how you pull it.

Just start pulling, and then get serious with your AI applications!

Are you attending the PLM Roadmap/PDT Europe 2025 conference on November 5th & 6th in Paris, La Defense?

There is an opportunity to discuss the future of PLM in a workshop before the event.

More information will be shared soon; please mark November 4th in the afternoon on your agenda.

During my summer holiday in my “remote” office, I had the chance to digest what I recently read, heard, saw and discussed related to the future of PLM.

I noticed this year/last year that many companies are discussing or working on their future PLM. It is time to make progress after COVID, particularly in digitization.

And as most companies are avoiding the risk of a “big bang”, they are exploring how they can improve their businesses in an evolutionary mode.

PLM is no longer a system

The most significant change I noticed in my discussions is the growing awareness that PLM is no longer covered by a single system.

More and more, PLM is considered a strategy, with which I fully agree. Therefore, implementing a PLM strategy requires holistic thinking and an infrastructure of different types of systems, where possible, digitally connected.

This trend is bad news for the PLM vendors as they continuously work on an end-to-end portfolio where every aspect of the PLM lifecycle is covered by one of their systems. The company’s IT department often supports the PLM vendors, as IT does not like a diverse landscape.

The main question is: “Every PLM Vendor has a rich portfolio on PowerPoint mentioning all phases of the product lifecycle. However, are these capabilities implementable in an economical and user-friendly manner by actual companies, or do PLM players need to change their strategy?”

A question I will try to answer in this post.

The future of PLM

I have discussed several observed changes related to the effects of digitization in my recent blog posts, referencing others who have studied these topics in their organizations.

Some of the posts to refresh your memory are:

- Time to split PLM?

- People, Processes, Data and Tools?

- The rise and the fall of the BOM?

- The new side of PLM? Systems of Engagement!

To summarize, these posts discussed the following points:

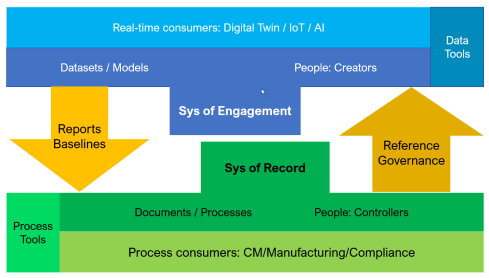

The As Is:

- The traditional PLM systems are examples of a System of Record, not designed to be end-user friendly but designed to have a traceable baseline for manufacturing, service and product compliance.

- The traditional PLM systems are tuned to a mechanical product introduction and release process in a coordinated manner, with a focus on BOM governance.

- The legacy information is stored in BOM structures and related specification files.

System of Record (ENOVIA image 2014)

The To Be:

- We are not talking about a PLM system anymore; a traditional System of Record will be digitally connected to different Systems of Engagement / Domains / Products, which have their own optimized environment for real-time collaboration.

- The BOM structures remain essential for the hardware part; however, overreaching structures are needed to manage software and hardware releases for a product. These structures depend on connected datasets.

- To support digital twins at the various lifecycle stages (design, manufacturing, operations), product data needs to be based on and consumed by models.

- A future PLM infrastructure is hybrid, based on a Single Source of Change (SSoC) and an Authoritative Source of Truth (ASoT) instead of a Single Source of Truth (SSoT).

Various Systems of Engagement

Related podcasts

I relistened to two podcasts before writing this post, and I think both are a must-listen.

The Peer Check podcast from Colab episode 17 — The State of PLM in 2022 w/Oleg Shilovitsky. Adam and Oleg have a great discussion about the future of PLM.

Highlights: From System to Platform – the new normal. A Single Source of Truth doesn’t work anymore – it is about value streams. People in big companies fear making wrong PLM decisions, which is seen as a significant risk for their careers.

There is no immediate need to change the current status quo.

The Share PLM Podcast – Episode 6: Revolutionizing PLM: Insights from Yousef Hooshmand. Yousef talked with Helena and me about proven ways to migrate an old PLM landscape to a modern PLM/Business landscape.

Highlights: The term Single Source of Change and the existing concepts of a hybrid PLM infrastructure based on his experiences at Daimler and now at NIO. Yousef stresses the importance of having the vision and the executive support to execute.

The time of “big bangs” is over, and Yousef provided links to relevant content, which you can find here in the comments.

In addition, I want to point to the experiences provided by Erik Herzog in the Heliple project using OSLC interfaces as the “glue” to connect (in my terminology) the Systems of Engagement and the Systems of Record.

If you are interested in these concepts and want to learn and discuss them with your peers, more can be learned during the upcoming CIMdata PLM Roadmap / PDT Europe conference.

In particular, look at the agenda for day two if you are interested in this topic.

The future for the PLM vendors

If you look at the messaging of the current PLM Vendors, none of them is talking about this federated concept.

Their messaging focuses more on the transition from on-premise to the cloud, providing a SaaS offering with their portfolio.

I was slightly disappointed when I saw this article on Engineering.com provided by Autodesk: 5 PLM Best Practices from the Experiences of Autodesk and Its Customers.

The article is tool-centric, with statements that make sense and could be written by any PLM Vendor. However, Best Practice #1 Central Source of Truth Improves Productivity and Collaboration was the message that struck me. Collaboration comes from connecting people, not from the Single Source of Truth utopia.

I don’t believe PLM Vendors have to be afraid of rapidly losing their installed base of companies using their PLM as a System of Record. There is so much legacy stored in these systems that might still be relevant. The existence of legacy information, often documents, makes a migration or swap to another vendor almost impossible and unaffordable.

The System of Record is incompatible with data-driven PLM capabilities

I would like to see clearer developments from the PLM Vendors, creating a plug-and-play infrastructure for Systems of Engagement. Plug-and-play solutions could be based on a neutral partner collaboration hub like ShareAspace or the Systems of Engagement I discussed recently in my post and interview: The new side of PLM? Systems of Engagement!

Plug-and-play systems of engagement require interface standards, and PLM Vendors will only move in this direction if customers are pushing for that, and this is the chicken-and-egg discussion. And probably, their initiatives are too fragmented at the moment to come to a standard. However, don’t give up; keep building MVPs to learn and share.

Some people believe AI, with the examples we have seen with ChatGPT, will be the future direction without needing interface standards.

I am curious about your thoughts and experiences in that area and am willing to learn.

Talking about learning?

Besides reading posts and listening to podcasts, I also read an excellent book this summer. Martijn Dullaart, often participating in PLM and CM discussions, had decided to write a book based on the various discussions related to part (re-)identification (numbering, revisioning).

As Martijn starts in the preface:

“I decided to write this book because, in my search for more knowledge on the topics of Part Re-Identification, Interchangeability, and Traceability, I could only find bits and pieces but not a comprehensive work that helps fundamentally understand these topics”.

I believe the book should become standard literature for engineering schools that deal with PLM and CM, for software vendors and implementers and last but not least companies that want to improve or better clarify their change processes.

Martijn writes in an easily readable style and uses step-by-step examples to discuss the various options. There are even exercises at the end to use in a classroom or for your team to digest the content.

The good news is that the book is not about the past. You might also know Martijn for our joint discussion, The Future of Configuration Management, together with Maxime Gravel and Lisa Fenwick, on the impact of a model-based and data-driven approach to CM.

I plan to come back soon with a more dedicated discussion with Martijn. Meanwhile, start reading the book. Get your free chapter if needed by following the link at the bottom of this article.

I recommend buying the book as a paperback so you can navigate easily between the diagrams and the text.

Conclusion

The trend for federated PLM is becoming more and more visible as companies start implementing these concepts. The end of monolithic PLM is a threat and an opportunity for the existing PLM Vendors. Will they work towards an open plug-and-play future, or will they keep their portfolios closed? What do you think?

Two weeks ago, I shared my post: Modern PLM is (too) complex on LinkedIn, and apparently, it was a topic that touched many readers. Almost a hundred likes, fifty comments and six shares. Not the usual thing you would expect from a PLM blog post.

In addition, the article led to offline discussions with peers, giving me an even better understanding of what people think. Here is a summary of the various talks.

What is PLM?

In particular, since the inception of Product Lifecycle Management, software vendors have battled with the various PLM definitions.

Initially, PLM was considered an engineering tool for product development, with an extensive potential set of capabilities supported by PowerPoint. Most companies actually implemented a collaborative PDM system at that time and named it PLM.

Was PLM really understood? Look at the infamous Autodesk CEO Carl Bass’s anti-PLM rap from 2007. Next, in 2012, Autodesk introduced its PLM solution called Autodesk PLM 360 as one of the first cloud solutions.

Only with growing connectivity and enterprise information sharing did the definition of PLM start to change.

PLM became a product information backbone serving downstream deployment with product data – the traditional Teamcenter, Windchill and ENOVIA implementations are typical examples of this phase.

With a digitization effort taking place in the non-PLM domain, connecting product development, design and delivery data to a company’s digital business became necessary. You could say, and this is the CIMdata definition:

PLM is a strategic business approach that applies a consistent set of business solutions that support the collaborative creation, management, dissemination, and use of product definition information. PLM supports the extended enterprise (customers, design and supply partners, etc.)

I agree with this definition; perhaps 80 % of our PLM community does. But how many times have we been trapped again in the same thinking: PLM is a system.

The most recent example is the post from Oleg Shilovitsky last week where he claims: Discover why OpenBOM reigns supreme in the world of PLM!

Nothing wrong with that, as software vendors will always tweak definitions as they need marketing to make a profit, but PLM is not a system.

My main point is that PLM is a “vague” community label with many interpretations. Software vendors have the most significant marketing budget to push their unique definitions. However, various practitioners in the field also have their own interpretations.

And maybe Martin Haket’s comment on the post says it all (partial quote):

I’m a bit late to this discussion, but in my opinion, the complexity is mainly due to the fact that the ownership of the processes and data models underlying PLM are not properly organized. ‘Everybody’ in the company is allowed to mix in the discussion and have their opinion; legacy drives departments to undesirable requirements leading to complex implementations.

My intermediate conclusion: Our legacy and lack of a single definition of PLM make it complex.

The PLM professional

On LinkedIn, there are approximately 14.000 PLM consultants in my first and second levels of connections. This number indicates that the label “PLM Consultant” has a specific recognition.

During my “PLM is complex” discussion, I noticed Roger Tempest’s Professional PLM White paper and started the dialogue with him.

Roger Tempest is one of the co-founders of the PLM Interest Group. He has been trying to create a baseline for a foundational PLM certification with several others. We discussed the challenges of getting the PLM Professional recognized as an essential business role. Can we certify the PLM professional the same way as a certified Configuration Manager or certified Project Manager?

I shared my thoughts with Roger, claiming that our discipline is too vague and diverse and that finding a common baseline is hard.

Therefore, we are curious about your opinion too. Please tell us in the comments to this post what you think about recognizing the PLM professional and what skills should be the minimum. What are the basics of a PLM professional?

In addition, I participated in some of the SharePLM podcast recordings with PLM experts from the field (follow us here). I raised the PLM professional question either during the podcast or during the preparation of the after-party. Here, too, there was no single answer.

So much is part of PLM: people (culture, skills), processes & data, tools & infrastructures (architectures, standards) combined with execution (waterfall/agile?)

My intermediate conclusion: The broadness of PLM makes it complex to have a common foundation.

More about complexity

PEOPLE: Let’s zoom in on the aspects of complexity, starting from the People, Processes, Data and Tools discussion. The first thing mentioned is “the people”; organizations usually claim: “the most important assets in our organization are the people”.

However, people are usually the last dimension considered in business changes. Companies start with the tools, try to build the optimal processes and finally push the people into that framework by training, incentives or just force.

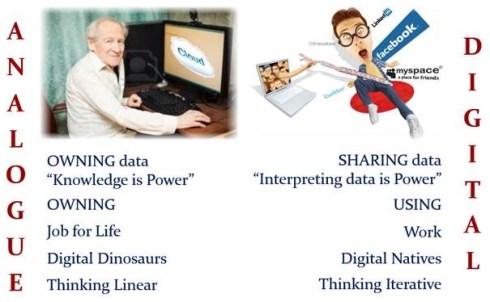

The reason for the last approach is that dealing with people is complex. People have their beliefs, their legacy and their motivation. And if people do not feel connected to the business (change), they will become an obstacle to change – look at the example below from my 2014 PI Apparel presentation:

To support the importance of people, I am excited to work with Share PLM and the Season 2 podcast series.

In these episodes, we talk with successful PLM experts about their lessons learned during PLM implementation. You will discover it is a learning process, and connecting to people in different cultures is essential. As it is a learning process, you will find it takes time and human skills to master this complexity.

Often human skills are called “soft skills”, but actually, they are “vital skills”!

PROCESSES: Regarding the processes part, this is another challenging topic. Often we try to simplify processes to make them workable (sounds like a good idea). With many seasoned PLM practitioners coming from the mechanical product development world, it is not a surprise that many proposed PLM processes are BOM-centric – building on PDM and ERP capabilities.

In my post: The rise and fall of the BOM? I started with this quote from Jan Bosch:

An excessive focus on the bill of materials leads to significant challenges for companies that are undergoing a digital transformation and adopting continuous value delivery. The lack of headroom, high coupling and versioning hell may easily cause an explosion of R&D expenditure over time.

Today’s organization and product complexity does not allow us to keep the processes simple to remain competitive. In that context, have a look at Erik Herzog’s comment on PLM complexity:

I believe a contributing factor to making PLM complex lies in our tendency to make too many simplifications. Do we understand a simple thing such as configuration change management in incremental development? At least in my organization, there is room for improvement.

In the comment, Erik also provided a link to his conference paper: Introducing the 4-Box Development Model describing the potential interaction between Systems Engineering and Configuration Management. A topic that is too complex for your current company; however, it illustrates that you cannot generalize and simplify PLM overall.

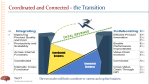

In addition to Erik’s comments, I want to mention again that we can change our business processes thanks to a modern, connected, data-driven infrastructure. From coordinated to connected working with a mix of Systems of Engagement (new) and Systems of Record (traditional). There are no solid best practices yet, but the real PLM geeks are becoming visible.

TOOLS & DATA: When discussing the future: From Coordinated to Connected, there has always been a discussion about the legacy.

Should we migrate the legacy data and systems and replace them with new tools and data models? Or are there other options? The interaction of tools and data is often the domain of Enterprise Solution Architects. The Solution Architect’s role becomes increasingly important in a modern, data-driven company, and several are pretty active in PLM, if you know how to find them, because they are not in the mainstream of PLM.

This week we made a SharePLM podcast recording with Yousef Hooshmand. Last year, I wrote about his paper “From a Monolithic PLM Landscape to a Federated Domain and Data Mesh”, in which Yousef describes the complex process, at that time while working at Daimler, of slowly replacing old legacy infrastructure with a new, modern, user/role-centric, data-driven infrastructure.

Watch out for this recording to be published soon as Yousef shares various provoking experiences. Not to provoke our community but to create the awareness that a transformation is possible when you have the right long-term vision, strategy and C-level support.

Fighting complexity

Note: We have CM people involved in many of the PLM discussions. I think they are fighting complexity similar to that of others in the PLM domain. However, they have the benefit that their role, Configuration Manager, is recognized and supported by a commercial certification organization (the Institute of Process Excellence – IpX).

While completing this post, I read this article from Oleg Shilovitsky: PLM User Groups and Communities. At first glance, you might think that PLM User Groups and Communities might be the solution to address the complexity.

And I think they do; most PLM vendors have orchestrated User Groups and Communities. Depending on your tool vendor, you will find like-minded people supported by vendor experts. Are they reducing the complexity? Probably not, as they are at the end of the People, Processes, Data and Tools discussion. You are already working within a specific boundary.

Based on my experience as a core PLM Global Green Alliance member, I think PLM-neutral communities are not viable. There is very little interaction in this community, with currently 686 members, although the topics are very relevant. Yes, people want to consume and learn, but making time available to share is, unfortunately, impossible when not financially motivated. Sharing opinions, yes, but working on topics: we are too busy.

Conclusion

The term PLM seems adequate to identify a group with a common interest (and skills?). Due to the broad scope and aspects, it is impossible to create a standard job description for the PLM professional, and we must learn to live with that – see my arguments.

What do you think?

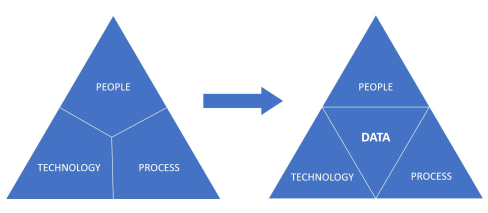

Those who have read my blog posts over the years will have seen the image to the left.

The people, processes and tools slogan points to the best practice of implementing (PLM and CM) systems.

Theoretically, a PLM implementation will move smoothly if the company first agrees on the desired processes and people involved before a system implementation using the right tools.

Too often, companies start from their historical landscape (the tools – starting with a vendor selection) and then try to figure out the optimal usage of their systems. The best example of this approach is the interaction between PDM(PLM) and ERP.

PDM and ERP

Historically ERP was the first enterprise system that most companies implemented. For product development, there was the PDM system, an engineering tool, and for execution, there was the ERP system. Since ERP focuses on the company’s execution, the system became the management’s favorite.

The ERP system and its information were needed to run and control the company. Unfortunately, this approach has introduced the idea that the ERP system should also be the source of the part information, as it was often the first enterprise system for a company. The PDM system was often considered an engineering tool only. And when we talk about a PLM system, who really implemented PLM as an enterprise system, or was it still an engineering tool?

This is an example of Tools, Processes, and People – A BAD PRACTICE.

Imagine an engineer who wants to introduce a new part needed for a product to deliver. In many companies at the beginning of this century, even before starting the exercise, the engineer had to request a part number from the ERP system. This is implementation complexity #1.

Next, the engineer starts developing versions of the part based on the requirements. Ultimately the engineer might come to the conclusion this part will never be implemented. The reserved part number in ERP has been wasted – what to do?

It sounds weird, but this was a reality in discussions on this topic until ten years ago.

Next, as the ERP system could only deal with 7 digits, what about part number reuse? Reused part numbers carry a considerable risk of errors. With the introduction of PLM systems, there was the opportunity to bridge the gap between engineering and manufacturing. It is now clear to most companies that the engineer should create the initial part number.

Only when the conceptual part is approved for use in the realization of the product will an exchange with the ERP system be needed. Whether the same part number is used or not does not matter, as long as we can map both identifiers between these environments and have traceability.
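To make the idea of mapping identifiers concrete, here is a minimal sketch in Python of a two-way cross-reference between a PLM part identifier and an ERP part number. The class name, method names and example identifiers are all hypothetical and not taken from any PLM or ERP product; a real implementation would sit in an integration layer and also deal with revisions and effectivity.

```python
class PartCrossReference:
    """Hypothetical two-way map between PLM and ERP part identifiers."""

    def __init__(self):
        self._plm_to_erp = {}
        self._erp_to_plm = {}

    def link(self, plm_id, erp_id):
        # Refuse to relink: reusing an identifier would break traceability.
        if plm_id in self._plm_to_erp or erp_id in self._erp_to_plm:
            raise ValueError("identifier is already linked")
        self._plm_to_erp[plm_id] = erp_id
        self._erp_to_plm[erp_id] = plm_id

    def erp_id_for(self, plm_id):
        # Returns None while the part is still conceptual (not released to ERP).
        return self._plm_to_erp.get(plm_id)

    def plm_id_for(self, erp_id):
        return self._erp_to_plm.get(erp_id)


xref = PartCrossReference()
# The engineer creates the part in PLM first; the ERP number is only
# linked once the part is approved for realization.
xref.link("ENG-000123", "4711001")
```

The point of the sketch is that engineering can create and discard conceptual parts without consuming ERP numbers, while every released part stays traceable in both directions.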

It took almost 10 years from PDM to PLM until companies agreed on this approach, and I am curious about your company’s status.

Meanwhile, in the PLM world, we have evolved on this topic. The part and the BOM are no longer simple entities. Instead, we often differentiate between EBOM and MBOM, and the parts in those BOMs are not necessarily the same.

In this context, I like Prof. Dr. Jörg W. Fischer‘s framing:

EBOM is the specification, and MBOM is the realization.

(Unfortunately, he writes a lot in German.)

An interesting discussion initiated by Jörg last week was again about the interaction between PLM and ERP. The article is an excellent example of how potentially mainstream enterprises are thinking. PLM = Siemens, ERP = SAP – an illustration of the “tools first” mindset before the ideal process is defined.

There was nothing wrong with that in the early days, as connectivity between different systems was difficult and expensive. Therefore, people with 20 years of experience might still rely on their systems infrastructure instead of data flow.

But enough about the bad practice – let’s go to people, processes, (data), and Tools

People, Processes, Data and Tools?

I got inspired by this topic, seeing this post two weeks ago from Juha Korpela, claiming:

Okay, so maybe a hot take, maybe not, but: the old “People, Process, Technology” trinity is one of the most harmful thinking patterns you can have. It leaves out a key element: Data.

His full post was quite focused on data, and I liked the “wrapping post” from Dr. Nicolas Figay here, putting things more in perspective from his point of view. The reply made me think about how this discussion fits into the PLM digital transformation discussion. How would it work in the two major themes I use to explain the digital transformation in the PLM landscape?

For incidental readers of my blog, these are the two major themes I am using:

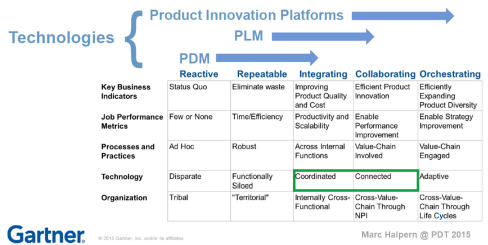

- From Coordinated to Connected, based on the famous diagram from Marc Halpern (image below). The coordinated approach based on documents (files) requires a particular timing (processes) and context (Bills of Information) – it is the traditional and current PLM approach for most companies. On the other hand, the Connected approach is based on connected datasets (here, we talk about data – not files). These connected datasets are available in different contexts, in real-time, to be used by all kinds of applications, particularly modeling applications. Read about it in the series: The road to model-based and connected PLM.

- The need to split PLM, thinking in System(s) of Record and Systems of Engagement (example below). The idea behind this split is driven by the observation that companies need various Systems of Record for configuration management, change management, compliance and realization. These activities sound like traditional PLM targets and could still be done in these systems. New in the discussion is the System of Engagement, which focuses on a specific value stream in a digitally connected manner. Here data is essential. I discussed the coexistence of these two approaches in my post Time to Split PLM, a post on LinkedIn whose many discussions and reshares illustrate that the topic is hot. And I am happy to discuss “split PLM architectures” with all of you.

These two concepts discuss the processes and the tools, but what about the people? Here I came to a conclusion: to complete the story, we have to imagine three kinds of people. And this will not be new. We have the creators of data, the controllers of data and the consumers of data. Let’s zoom in on their specifics.

A new representation?

I am looking for a new simplification of the people, processes, and tools trinity combined with data; I got inspired by the work Don Farr did at Boeing, where he worked on a new visual representation for the model-based enterprise. You might have seen the image on the left before – click on it to see it in detail.

I first wrote about this new representation in my post: The weekend after CIMdata Roadmap / PDT Europe 2018.

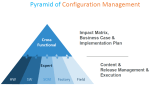

Related to Configuration Management, Martijn Dullaart and Martin Haket have also worked on a diagram with their peers to depict the scope of CM and Impact Analysis. The image leads to the post with my favorite quote: Communication is merely an exchange of information, but connections tell the story.

Below I share my first attempt to combine the people, process and tools trinity with the concepts of document and data, system(s) of record and system(s) of engagement. Trying to build the story. Look if you recognize the aspects of the discussion above, and feel free to develop enhancements.

I look forward to your suggestions. Like the understanding that we have to split PLM thinking, as it impacts how we look at implementations.

Conclusion

Digital transformation in the PLM domain is forcing us to think differently. There will still be processes based on people collecting, interpreting and combining information. However, there will also be a new domain of connected data interpreted by models and algorithms, not necessarily depending on processes.

Therefore we need to work on new representations that can be used to tell this combined story. What do you think? How can we improve?

This week there was an interesting discussion on LinkedIn initiated by Alex Bruskin from Senticore Technologies. I have known Alex for over 20 years, starting from the SmarTeam days and later through encounters in the PLM space. Alex is a real techie on the outside but also a person with a very creative mind to connect technology to business.

You can see his LinkedIn featured posts here to get an impression.

Where is PLM @ Startups?

This time Alex shared an observation from an event organized by the Pittsburgh Robotics Network, where he spoke with several startups.

His point, and I quote Alex:

Then, I spoke to a number of presenters there, explaining Senticore capabilities and listening to their situation around engineering/ manufacturing.

– many startups offered an add-on to other platforms => an autonomous module for UAV/helicopter/Vehicle. Some offered robotic components or entire robots (robot-dog).

– all startups use #solidworks , and none use #catia or #nx

– none of them have a PLM system nor an MES. I am 90% certain none of them have ERP, either. They all are apparently using #excel for all these purposes.

– only a handful of them are considering getting a PLM system in the near future.

Read the full post here and the comments below to get a broader insight into the topic.

The PLM Doctor knows it all.

The point reminded me of an episode I did together with Helena Gutierrez from Share PLM last year. She asked the same question to the PLM Doctor.

Do you think PLM is only for big corporations or can startups also benefit from it?

You can see the conversation here:

Meanwhile, the PLM Doctor is unemployed due to the lack of incoming questions.

When looking at startups, I could see two paths. One is the traditional path based on historical mechanical PLM, and a second (potential) approach which is based on understanding the future complexity of the startup offering.

There are two paths – path #1

The first evolutionary path, which you might have seen a few times before in my blog posts, is the one depicted by Marc Halpern from Gartner in 2015. At that time, we started discussing Product Innovation Platforms and the new generation of PLM. You can see Marc’s slide below, which is still valid for most situations.

In the slide above, you see the startup company on the left side.

Often the main purpose of a startup company is to be visible on the market with their concept as fast as possible. Startups are often driven by a small group of multifunctional people developing a solution. In this approach, there is no place for people and reflection on processes as they are considered overhead.

Only when you target a strongly regulated environment, e.g., medical devices or aerospace, do you need to focus on the process too.

Therefore it is logical that most startup companies focus on the tools to develop their solution. A logical path, as what could you do without tools? Next, the choice of the tools will be, most of the time, driven by the team’s experience and available skills in the market.

Again, statistics show it is not likely that advanced tools like NX or CATIA will be chosen for the design part; mid-market products like SolidWorks or Autodesk products are more likely. And for data management and reporting, the logical tools are the office tools: Excel, Word and Visio.

And don’t forget PowerPoint to sell the solution.

Investors often also play a role here, questioning investments that are not clearly understood or relevant at that time.

How a startup scales up very much depends on the choices they make for Repeatable business. This is the moment that a company starts to create its legacy. Processes and best practices need to be established, and what you often see is that seasoned people join the company. These people have proven their skills in the past, and most likely, they are willing to repeat this.

And here comes the risk – experienced people come with a much better holistic overview of the product lifecycle aspects. They know what critical steps are needed to move the company to an Integrated business. These experiences are crucial; however, they should not become the new single standard.

Implementing the past is not a guarantee for success in a digital and connected future.

Implementing their past experiences would focus too much on creating a System of Record (PLM 1.0), which is crucial for configuration management, change management and compliance. However, it would also create a productivity dip for those developing the product or solution.

This is the same dilemma that very small and medium enterprises face. They function reasonably well in a Repeatable business. How much should they invest in an Integrated or Collaborating business approach?

Following the evolution path described by Marc Halpern always brings you to the point where technology changes from Coordinated to Connected. This is a challenging and immature topic, which I have discussed in my blog posts and during conferences.

See: The Challenges of a connected ecosystem for PLM or this full series of posts: The road to model-based and connected PLM.

There are two paths – path #2

Another path that startups could follow is a more forward-looking path, understanding that you need a coordinated and connected approach in the long term. For the fastest execution, you would like to work in a multidisciplinary mode in real time, exactly the characteristic of a startup.

However, in path #2, the startup should have a longer-term vision. Instead of choosing the obvious tools, they should focus on their company’s most important value streams. They have the opportunity to select integrated domains that are based on a connected, often model-based approach. Some examples of these integrated domains:

- An MBSE environment focusing on real-time interaction related to product architecture and solution components (RFLP)

- A connected product design environment, where in real-time a virtual product can be created, analyzed, and optimized – connected software might be relevant here.

- A connected product realization environment where product engineering and suppliers work together in real time.

All three examples are typical Systems of Engagement. The big difference with individual tools is that they already focus on multidisciplinary collaboration on a data-driven, model-based approach.

In addition, having these systems in place allows the startup company to invest separately in a System of Record(s) environment when scaling up. This could be a traditional PLM system combined with a Configuration Management System or an Asset Management System.

System of Record choices, of course, depend on the industry needs and the usage of the product in the field. We should not consider one system that serves all; it is an infrastructure.

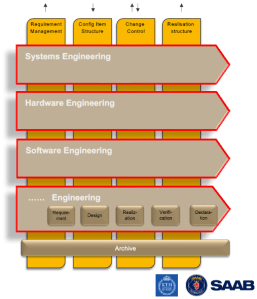

In the image below, you see the concept of this approach described by Erik Herzog from SAAB Aeronautics during the recent PLM Roadmap / PDT Europe conference. You can read more details of this approach in this post: The Week after PLM Roadmap PDT Europe.

Note: SAAB is not a startup; therefore, they must deal with their legacy. They are now working on business sustainable concepts for the future: Heterogeneous and federated PLM.

My opinion: The heterogeneous and federated approach is the ultimate target for any enterprise. I already mentioned the importance of connected environments regarding digital twins and sustainability. Material properties, process environmental impacts and product behavior coming from the field will only work efficiently if dealt with in a connected and federated manner.

Conclusion

The challenge for startups is that they often start without the knowledge and experience that multidisciplinary collaboration within a value stream is crucial for a connected future. This is a topic that I would like to explore further with startups and peers in my ecosystem. What do you think? What are your questions? Join the conversation.

Once in a while, the discussion pops up whether, given the changes in technology and business scope, we should still talk about PLM. John Stark and others have been making the point that PLM should become a profession.

In a way, I like the vagueness of the definition and the fact that the PLM profession is not written in stone. There is an ongoing change, and who wants to be certified for the past or framed to the past?

However, most people, particularly at the C-level, consider PLM as something complex, costly, and related to engineering. Partly this had to do with the early introduction of PLM, which was a little more advanced than PDM.

The focus and capabilities were meant to make engineering teams happy by giving them more access to their data. But unfortunately, that did not work, as engineers are not looking for more control.

Old (current) PLM

Therefore, I would like to suggest that when we talk about PLM, we frame it as Product Lifecycle Data Management (the definition). A PLM infrastructure or system should be considered the System of Record, ensuring product data is archived to be used for manufacturing, service, and proving compliance with regulations.

In modern terms, the digital thread results from building such an infrastructure with related artifacts. The digital thread is a rather slow-moving environment, connecting the various as-xxx structures (As-Designed, As-Planned, As-Manufactured, etc.). Looking at the different PLM vendor images, such as the Aras example above, I consider the digital thread a fancy name for traceability.

I discussed the topic of Digital Thread in 2018: Document Management or Digital Thread. One of the observations was that few people talk about the quality of the relations when providing traceability between artifacts.

The quality of traceability is relevant for traditional Configuration Management (CM). Traditional CM has been framed, like PLM, to be engineering-centric.

Both PLM and CM need to become enterprise activities – perhaps unified.

Read my blog post and see the discussion with Martijn Dullaart, Lisa Fenwick and Maxim Gravel when discussing the future of Configuration Management.

New digital PLM

In my posts, I talked about modern PLM. I described it as data-driven, often in relation to a model-based approach. As a result of the data-driven approach, a digital PLM environment could be connected to processes outside the engineering domain. I wrote a series of posts related to the potential of such a new PLM infrastructure (The road to model-based and connected PLM).

Digital PLM, if implemented correctly, could serve people along the full product lifecycle, from marketing/portfolio management until service and, if relevant, decommissioning. An even bigger challenge is connecting eco-systems to the same infrastructure, in particular suppliers & partners but also customers. This is the new platform paradigm.

Some years ago, people stated IoT is the new PLM (IoT is the new PLM – PTC 2017). Or MBSE is the foundation for a new PLM (Will MBSE be the new PLM instead of IoT? A discussion @ PLM Roadmap conference 2018).
