Last week, I shared my first impressions from my favorite conference, in the post: The weekend after PLM Roadmap/PDT Europe 2023, where most impressions could be classified as traditional PLM and model-based.

There is nothing wrong with conventional PLM, as there is still much to do within this scope. A model-based approach for MBSE (Model-Based Systems Engineering) and MBD (Model-Based Definition) and efficient supplier collaboration are not topics you solve by implementing a new system.

Ultimately, to have a business-sustainable PLM infrastructure, you need to structure your company internally and connect to the outside world with a focus on standards to avoid a vendor lock-in or a dead end.

In short, this is what I described so far in The weekend after ….part 1.

Now, let’s look at the relatively new topics for this audience.

Enabling the Marketing, Engineering & Manufacturing Digital Thread

Cyril Bouillard, the PLM & CAD Tools Referent at the Mersen Electrical Protection (EP) business unit, shared his experience implementing an end-to-end digital backbone from marketing through engineering and manufacturing.

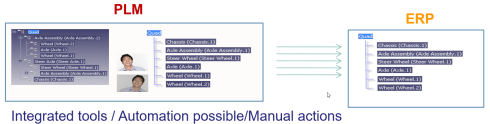

Cyril showed the benefits of a modern PLM infrastructure that is neither CAD-centric nor focused on engineering only. The advantage of this approach is a seamless, integrated flow between PLM and PIM (Product Information Management).

I wrote about this topic in 2019: PLM and PIM – the complementary value in a digital enterprise. Combining the concepts of PLM and PIM in an integrated, connected environment could also provide a serious benefit when collaborating with external parties.

Another benefit Cyril demonstrated was the integration of RoHS compliance with the BOM in one integrated environment. In my session, I also addressed integrated RoHS compliance as a stepping stone to efficiency in future compliance needs.

Read more later or in this post: Material Compliance – as a stepping-stone towards Life Cycle Assessment (LCA)
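
To make the idea of BOM-integrated compliance a bit more tangible, here is a minimal Python sketch. It is not Mersen's implementation; the `Part` class, the part numbers and the `rohs_compliant` flag are hypothetical names chosen for illustration. It only shows how a compliance status could be rolled up through a BOM, so that an assembly counts as compliant only when all of its components are.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Part:
    """A hypothetical BOM item; all field names are illustrative assumptions."""
    number: str
    # None means "not declared yet" for a purchased or raw part.
    rohs_compliant: Optional[bool] = None
    children: List["Part"] = field(default_factory=list)


def rollup_rohs(part: Part) -> Optional[bool]:
    """Return True, False or None (unknown) for a part including its children."""
    if not part.children:
        return part.rohs_compliant
    statuses = [rollup_rohs(child) for child in part.children]
    if any(status is False for status in statuses):
        return False  # one non-compliant component fails the whole assembly
    if any(status is None for status in statuses):
        return None   # missing declarations keep the status unknown
    return True


# Made-up example structure: one assembly with three components.
fuse = Part("FUSE-001", children=[
    Part("BODY-010", rohs_compliant=True),
    Part("CAP-020", rohs_compliant=True),
    Part("SOLDER-030"),  # supplier declaration still missing
])
```

In this sketch, `rollup_rohs(fuse)` stays unknown until the declaration for the last component arrives, which is one way to see why data quality matters so much in a connected environment.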

Cyril concluded with some lessons learned.

Data quality is essential in such an environment, and there are significant time savings implementing the connected Digital Thread.

Meeting the Challenges of Sustainability in Critical Transport Infrastructures

Etienne Pansart, head of digital engineering for construction at SYSTRA, explained how they address digital continuity with PLM throughout the built assets’ lifecycle.

Etienne’s story was related to the complexity of managing a railway infrastructure, which is a linear and vertical distribution at multiple scales; it needs to be predictable and under constant monitoring; it is a typical system-of-systems network, and on top of that, maintenance and operational conditions need to be kept continuously up to date.

Regarding railway assets – a railway needs renewal every two years, bridges are designed to last a hundred years, and train stations should support everyday use.

When complaining about disturbances, you might have a little more respect now (depending on your country). However, on top of these challenges, Etienne also talked about the additional difficulties expected due to climate change: floods, fire, earth movements, and droughts, all of which will influence the availability of the rail infrastructure.

In that context, Etienne talked about the MINERVE project – see image below:

As you can see from the main challenges, there is an effort to digitalize the assets and a need to provide digital continuity over the entire asset lifecycle. This is not typically done in an environment with many different partners and suppliers, each delivering a part of the information.

Etienne explained in more detail how they aim to establish digital twins and MBSE practices to build and maintain a data-driven, model-based environment.

Having digital twins allows much more granular monitoring and making accurate design decisions, mainly related to sustainability, without the need to study the physical world.

His presentation was again a proof point that through digitalization and digital twins, the traditional worlds of Product Lifecycle Management and Asset Information Management become part of the same infrastructure.

And it may be clear that in such a collaboration environment, standards are crucial to connect the various stakeholders’ data sources – Etienne mentioned ISO 16739 (IFC), IFC Rail, and ISO 19650 (BIM) as obvious standards but also ISO 10303 (PLCS) to support the digital thread leveraged by OSLC.

I am curious to learn more about the progress of such a challenging project – having worked with the high-speed railway project in the Netherlands in 1995 – no standards at that time (BIM did not exist) – mainly a location reference structure with documents. Nothing digital.

The connected Digital Thread

The theme of the conference was The Digital Thread in a Heterogeneous, Extended Enterprise Reality, and in the next section, I will zoom in on some of the inspiring sessions for the future, where collaboration or information sharing is all based on a connected Digital Thread – a term I will explain in more depth in my next blog post.

Transforming the PLM Landscape: The Gateway to Business Transformation

Yousef Hooshmand‘s presentation was the highlight of this conference for me.

Yousef is the PLM Architect and Lead for the Modernization of the PLM Landscape at NIO, and he has been active before in the IT-landscape transformation at Daimler, on which he published the paper: From a monolithic PLM landscape to a federated domain and data mesh.

If you read my blog or follow Share PLM, you might have seen the reference to Yousef’s work before, and more recently, you can hear the full story on the Share PLM Podcast: Episode 6: Revolutionizing PLM: Insights.

It was the first time I met Yousef in 3D after several virtual meetings, and his passion for the topic made it hard to fit in the assigned 30 minutes.

There is so much to share on this topic, and part of it we already did before the conference in a half-day workshop related to Federated PLM (more on this in the following review).

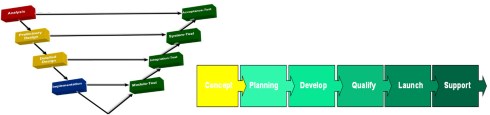

First, Yousef started with the five steps of the business transformation at NIO, where long-term executive commitment is a must.

His statement: “If you don’t report directly to the board, your project is not important”, caused some discomfort in the audience.

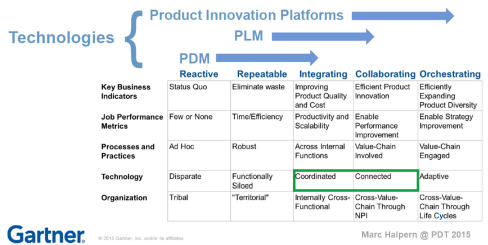

As the image shows, a business transformation should start with a systematic description and analysis of which business values and objectives should be targeted, where they fit in the business and IT landscape, what the measures are and how they can be tracked or assessed, and ultimately, what we need as tools and technology.

In his paper From a Monolithic PLM Landscape to a Federated Domain and Data Mesh, Yousef described the targeted federated landscape in the image below.

And now some vendors might say, we have all these domains in our product portfolio (or we have slides for that) – so buy our software, and you are good.

And here Yousef added his essential message, illustrated by the image below.

Start by delivering the best user-centric solutions (in an MVP manner – days/weeks – not months/years). Next, be data-centric in all your choices and ultimately build an environment ready for change. As Yousef mentioned: “Make sure you own the data – people and tools can leave!”

And to conclude reporting about his passionate plea for Federated PLM:

“Stop talking about the Single Source of Truth, start Thinking of the Nearest Source of Truth based on the Single Source of Change”.

Heliple-2 PLM Federation: A Call for Action & Contributions

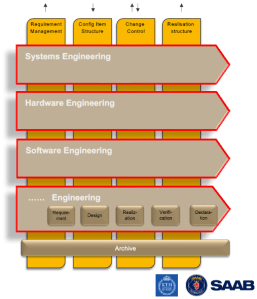

A great follow-up on Yousef’s session was Erik Herzog‘s presentation about the final findings of the Heliple 2 project, where SAAB Aeronautics, together with Volvo, Eurostep, KTH, IBM and Lynxwork, are investigating a new way of federated PLM, by using an OSLC-based, heterogeneous linked product lifecycle environment.

Heliple stands for HEterogeneous LInked Product Lifecycle Environment

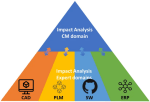

The image below, which I shared several times before, illustrates the mindset of the project.

Last year, during the previous conference in Gothenburg, Erik introduced the concept of federated PLM – read more in my post: The week after PLM Roadmap / PDT Europe 2022, mentioning two open issues to be investigated: Operational feasibility (is it maintainable over time) and Realisation effectivity (is it affordable and maintainable at a reasonable cost).

As you can see from the slide below, the results were positive and encouraged SAAB to continue on this path.

One of the points to mention was that during this project, Lynxwork was used to speed up the development of the OSLC adapter, reducing costs, time and needed skills.

After this successful effort, Erik and several others who joined us at the pre-conference workshop agreed that this initiative is valid to be tested, discussed and exposed outside Sweden.

Therefore, the Federated PLM Interest Group was launched to join people worldwide who want to contribute to this concept with their experiences and tools.

A first webinar from the group is already scheduled for December 12th at 4 PM CET – you can join and register here.

More to come

Given the length of this blog post, I want to stop here.

Topics to share in the next post are related to my contribution at the conference, The Need for a Governance Digital Thread, where I addressed the need for federated PLM capabilities with the upcoming regulations and practices related to sustainability, which require a connected Digital Thread.

I want to combine this post with the findings that Mattias Johansson, CEO of Eurostep, shared in his session: Why a Digital Thread makes a lot of sense, goes beyond manufacturing, and should be standards-based.

There are some interesting findings in these two presentations.

And there was the introduction of AI at the conference, with some experts’ talks and thoughts. Perhaps at this stage, it is too high on Gartner’s hype cycle to go into details. As you must have noticed, it is surely THE topic of discussion or interest.

The recent turmoil at OpenAI is an example of that. More to come for sure in the future.

Conclusion

The PLM Roadmap/PDT Europe conference was significant for me because I discovered that companies are working on concepts for a data-driven infrastructure for PLM and are (working on) implementing them. The end of monolithic PLM is visible, and companies need to learn to master data using ontologies, standards and connected digital threads.

It might have been silent in the series of PLM and Sustainability … interviews, where we, as PLM Green Global Alliance core team members, talk with software vendors, implementers and consultants about their relation to PLM and sustainability. The interviews are still in a stage of exploring what is happening at this moment. More details per vendor or service provider next year.

Our last interview was in April this year when we spoke with Mark Reisig, Green Energy Practice Director & Executive Consultant at CIMdata. You can find the interview here, and at that time, I mentioned the good news is that sustainability is no longer a software discussion.

As companies are planning or pushed by regulations to implement sustainable strategies, it becomes clear that education and guidance are needed beyond the tools.

This trend is also noticeable in our PLM Green Global Alliance community, which has grown significantly in the past half year. While writing this post, we have 862 members, not all as active as we hoped. Still, there is more good news related to dedicated contributors and more to come in the next PGGA update.

This time, we want to share the interview with Erik Rieger and Rafał Witkowski, both working for Transition Technologies PSC, a global IT solution integrator in the PLM world known for their PTC implementation services.

I met them during the LiveWorx conference in Boston in May – you can read more about the conference in my post: The weekend after LiveWorx 2023. There, we decided to follow up on GreenPLM.

GreenPLM

The label “GreenPLM” is always challenging as it could be considered green-washing. However, in this case, GreenPLM is an additional software offering that can be implemented on top of a PLM system, enabling people to make scientifically informed decisions for a more sustainable, greener product.

For GreenPLM, Rafał’s and Erik’s experiences are based on implementing GreenPLM on top of the PTC Windchill suite. Listen for the next 34 minutes to an educative session and learn.

You can download the slides shown in the recording here.

What I learned

- It was more of a general educative session on the relation between PLM and Sustainability, focusing on the importance of design decisions – the 80 % impact number.

- Erik considers sustainability not a disruption for designers; they already work within cost, quality and time parameters. Now, sustainability is the fourth dimension to consider.

- Erik’s opinion is also reflected in the pragmatic approach of GreenPLM as an additional extension of Windchill using PTC Navigate and OSLC standards.

- GreenPLM is more design-oriented than Mendix-based Sustaira, a sustainability platform we discussed in this series – you can find the recording here.

Want to learn more?

Here are some links related to the topics discussed in our meeting:

Conclusions

With GreenPLM, it is clear that the focus of design for sustainability is changing from a vision (led by software vendors and environmental regulations) towards implementations in the field. Pragmatic and an extension of the current PLM infrastructure. System integrators like Transition Technologies are the required bridge between vision and realization. We are looking for more examples from the field.

Two more weeks to go – don’t miss this opportunity when you are in Europe

Click on the image to see the full and interesting agenda.

Last week, I participated in the biannual NEM network meeting, this time hosted by Vestas in Ringkøbing (Denmark).

NEM (North European Modularization) is a network for industrial companies with a shared passion and drive for modular products and solutions.

NEM’s primary goal is to advance modular strategies by fostering collaboration, motivation, and mutual support among its diverse members.

During this two-day conference, there were approximately 80 attendees from around 15 companies, all with a serious interest and experience in modularity. The conference reminded me of the CIMdata Roadmap/PDT conferences, where most of the time a core group of experts meet to share their experiences and struggles.

The discussions are very different from those at a generic PLM or software vendor conference, where you only hear (marketing) success stories.

Modularity

When talking about modularity, many people will have Lego in mind, as with the Lego bricks, you can build all kinds of products without the need for special building blocks. In general, this is the concept of modularity.

With modularity, a company tries to reduce the amount of custom-made designs by dividing a product into modules with strict interfaces. Modularity aims to offer a wider variety of products to the customer – but configure these from a narrower assortment of modules to streamline manufacturing, sourcing and service. Modularity allows managing changes and new functionality within the modules without managing a new product.

From ETO (Engineering To Order) to BTO (Build To Order) or even CTO (Configure to Order) is a statement often heard when companies are investing in a new PLM system. The idea is that with the CTO model, you reduce the engineering costs and risks for new orders.

With modularity, you can address more variants and options without investing in additional engineering efforts.
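
The effect of configuring many products from a small assortment of modules can be illustrated with a minimal Python sketch. The truck-like module slots and variant names below are purely hypothetical and not taken from any of the companies mentioned; the sketch assumes that every variant in a slot respects the same interface, so any combination is a valid product.

```python
from itertools import product
from math import prod

# Hypothetical module assortment: each slot offers a few variants that
# all respect the same (strict) interface for that slot.
modules = {
    "cab": ["short", "long"],
    "engine": ["diesel", "gas", "electric"],
    "chassis": ["4x2", "6x2", "6x4"],
}


def count_products(assortment):
    """Number of distinct products that can be configured."""
    return prod(len(variants) for variants in assortment.values())


def count_module_variants(assortment):
    """Number of module variants engineering has to design and maintain."""
    return sum(len(variants) for variants in assortment.values())


def configurations(assortment):
    """Yield every configurable product as a dict of slot -> variant."""
    slots = list(assortment)
    for combo in product(*(assortment[slot] for slot in slots)):
        yield dict(zip(slots, combo))
```

With these illustrative numbers, 8 module variants yield 18 configurable products, and adding a single engine variant would add 6 more products for the engineering effort of one module.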

How the PLM system supports modularity is an often-heard question. How do you best manage options and variants? The main issue here is that modularity is often considered an R&D effort – R&D must build the modular architecture. An R&D-only focus is a common mistake in the field, similar to PLM. Both PLM and Modularity suffer from the framing that it is about R&D and their tools, whereas in reality, PLM and Modularity are strategies concerning all departments in an enterprise, from sales & marketing, engineering, and manufacturing to customer service.

PLM and Modularity

In 2021, I discussed the topic of Modularity with Björn Eriksson & Daniel Strandhammar, who wrote their easy-to-read book, The Modular Way, during the COVID-19 pandemic. In a blog post, PLM and Modularity, I discussed with Daniel the touchpoints with PLM. A little later, we had a Zoom discussion with Björn and Daniel, together with some of the readers of the book. You can still find the info here: The Modular Way – a follow-up discussion.

What was clear to me at that time is that, in particular, Sweden is a leading country when it comes to Modularity. Companies like Scania and Electrolux are known for their product modularity.

For me, it was great to learn about the Vestas modularization journey. For sure, the Scandinavian region sets the tone. And in addition, there are LEGO and IKEA, also famous Scandinavian companies, but with other modularity concepts.

The exciting part of the conference was that all the significant modularity players were present. Hosted by Vestas and with a keynote speech from Leif Östling, a former CEO of Scania, all the ingredients were there for an excellent conference.

The NEM network

The conference started with Christian Eskildsen, CEO of the NEM organization, who has a long history of leading modularity at Electrolux. The NEM is not only a facilitator for modularity. They also conduct training, certification sessions, and coaching on various levels, as shown below.

Christian mentioned that there are around 400 followers on the NEM LinkedIn group. I can recommend this LinkedIn group as the group shares their activities here.

At this moment, you can find here the results of Workstream 7 – The Cost of Complexity.

Peter Greiner, NEM member, presented the details of this result during the conference on day 2. The conclusion of the workstream team was a preliminary estimate suggesting a minimum cost reduction of 2-5% in terms of the Cost Of Goods Sold (COGS) on top of traditional modularization savings. These estimates are based on real-world cases.

Understanding that the benefits are related to the COGS with a high contribution of the actual material costs, a 2 – 5 % range is significant. There is the intention to dig deeper into this topic.
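
To get a feeling for what such a percentage range means in absolute terms, here is a small worked example in Python. The COGS figure is entirely made up for illustration and does not come from the workstream results; only the 2-5% range is taken from the text above.

```python
def complexity_savings(annual_cogs, low_pct=2.0, high_pct=5.0):
    """Return the (low, high) yearly saving for a COGS reduction range in percent."""
    return annual_cogs * low_pct / 100, annual_cogs * high_pct / 100


# Hypothetical company with 500 million in yearly Cost Of Goods Sold.
low, high = complexity_savings(500_000_000)
print(f"Estimated yearly saving: {low:,.0f} to {high:,.0f}")
```

For this fictional company, the range would translate into 10 to 25 million per year, on top of the traditional modularization savings.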

Besides these workstreams, there are also other workstreams running or finished. The ones that interest me in the sustainability context are Workstream 1 Modular & Circular and Workstream 10 Modular PLM (Digital Thread).

The NEM network has an active group of members, making it an exciting network to follow and contribute to, as modularity is part of a sustainable future. More on this statement later.

Vestas

The main part of day one was organized by our host, Vestas. Jens Demtröder, Chief Engineer at Vestas for the Modular Turbine Architecture and NEM board member, first introduced the business scope, complexity, and later the future challenges that Vestas is dealing with.

First, wind energy is the most cost-competitive source for a green energy system when taking the full environmental impact into the equation, as the image below shows.

From the outside, wind turbines all look the same; perhaps a difference between on-shore and off-shore? No way! There is a substantial evolution in the size and control of the wind turbine, and even more importantly, as the image shows, each country has its own regulations to certify a wind turbine. Vestas has to comply with 80+ different local regulations, and for that reason, modularity is vital to manage all the different demands efficiently.

A big challenge for the future will be the transport and installation of wind turbines.

The components become so big that they need to be assembled on-site, requiring new constraints on the structure to be solved.

As the image to the left shows, rotor sizes up to 250 m are expected, and what about the transport of the nacelle itself?

Click on this link to get an impression.

The audience also participated in a (windy) walk through the manufacturing site to get an impression of the processes & components – an impression below.

Processes, organization and governance

Karl Axel Petursson, Senior Specialist in Architecture and Roadmap, gave insights into the processes, organization and governance needed for the modularity approach at Vestas.

The modularization efforts are always a balance between strategy and execution, where often execution wins. The focus on execution is a claim that I recognize when discussing modularity with the companies I am coaching.

Vestas also created an organization related to the functions it provides, being a follower of Conway’s law, as the image below shows:

With modularity, you will also realize that the modular architecture must rely on stable interfaces between the modules based on clear market needs.

Besides an organizational structure, often more and more a matrix organization, there are also additional roles to set up and maintain a modular approach. As the image below indicates, to integrate all the functions, there are various roles in Vestas, some specialized and some more holistic:

These roles are crucial when implementing and maintaining modularity in your organization. It is not just the job of a clever R&D team.

Relying on just a clever R&D team is a misconception I have often encountered in the field: buying one or more tools that support modularity and then letting brilliant engineers do the work. And this is a challenge. Engineers often do not like to be restricted by modular constraints when designing a new capability or feature.

For this reason, Vestas has established an Organization Change Management initiative called Modular Minds to make engineers flourish in the organization.

Modular Minds

Madhuri Srinivasan, Systems Engineering specialist, and Hanh Le, Business Transformation leader, both at Vestas, presented their approach to the 2020 must-win battle for Modularisation, aiming with various means, like blogs, podcasts, etc., to educate the organization and create Modular Minds for all Vestas employees.

The team is applying the ADKAR model from Prosci to support this change. As you can see from the (clickable) image to the left, ADKAR is the abbreviation of Awareness, Desire, Knowledge, Ability and Reinforcement.

The ADKAR model focuses on driving change at the individual level and achieving organizational results. It is great to see such an approach applied to Modularity, and it would also be valuable in the domain of PLM, as I discussed with Share PLM in my network.

Scania

The 1 ½ hour keynote speech from Leif Östling, supported by Karl-Johan Linghede, was more of an interactive discussion with the audience than a speech. Leif took us to the origins of Scania and their early collaboration in learning the Toyota Way – customer first, respect for people and focus on quality – followed by the initial research and development together with Modular Management, resulting in the MFD methodology.

It led to the understanding that:

- The #1 cost driver is the number of parts you need to manage.

- The #2 crucial point is to have standardized interfaces and keep the flexibility inside the module.

Scania partnered early on with Ericsson to work on the connected vehicle. If you are my age, you will remember connectivity at that time was not easy. The connected vehicle was the first step of what we now would call a digital twin.

An interesting topic discussed was that Scania has approximately 25 interfaces at Change Level 1. This is a C-level/Executive discussion to approve potential interface changes. This level shows the commitment of the organization to keep modularity operational.

Another benefit mentioned was that the move to electrification of the vehicle was not such a significant change as in many automotive companies. Thanks to the modular structure and the well-defined interfaces, creating an electric truck was not a complete change of the truck design.

The session with Leif and Karl-Johan could have easily taken longer, given the interesting question-and-answer dialogue with the curious audience. It was a great learning moment.

Digitization, Sustainability & Modularization

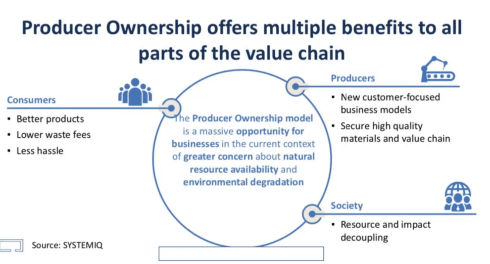

As a PLM person from the PLM Green Global Alliance, I was allowed to give a speech about the winning combination of Digitization, Sustainability and Modularization. You might have seen my PLM and Sustainability blog post recently; now, a zoom-in on the circular economy and modularity is included.

At this conference, I also focused on modularity, which, when implemented with model-based and data-driven approaches, is a crucial component of the circular economy (image below) and enables lifecycle analysis per module when the module is defined as model-based (Digital Twin).

My entire presentation on SlideShare: Digitization, Sustainability & Modularization.

Conclusion

It was the first time I attended a conference focused purely on modularity, and I realized we are all fighting the same battle. Just as PLM is a strategy and not an engineering system, modularity is a strategy and not an R&D mission. It would be great to see modularity becoming a part of PLM conferences or Circular Economy events, as there is so much to learn from each other, and we need them all.

Are you interested in the future of PLM and the meaning of Digital Threads?

Click on the image to see the agenda and join us for 2 days of discussion & learning.

During May and June, I wrote a guest chapter for the next edition of John Stark’s book Product Lifecycle Management (Volume 2): The Devil is in the Details.

The book is considered a standard in the academic world when studying aspects of PLM.

The table of contents, available through the above link, shows how broad the full scope of PLM is. I wrote about it recently in PLM is Complex (and we have to accept it?), and Roger Tempest and others are still fighting to get the job of PLM Professional recognized: Associate Yourself With Professional PLM.

To make the scope broader, John invited me to write a chapter about PLM and Sustainability, which is an actual topic in many organizations. As sustainability is my dedicated topic in the PLM Global Green Alliance (PGGA) core team, I was happy to accept this challenge.

This activity is challenging because writing a chapter on a current topic might make it outdated soon. For the same reason, I never wanted to write a PLM book as I wrote in my 2014 post: Did you notice PLM is changing?

The book, with the additional chapter, will be available later this year. I want to share with you in this post the topics I addressed in this chapter. Perhaps relevant for your organization or personal interests. Also, I am looking forward to learning if I missed any topics.

Introduction

The chapter starts with defining the context. PLM is considered a strategy supported by a connected IT infrastructure, and for the definition of sustainability, I refer to the relevant SDGs as described on our PGGA theme page: PLM and Sustainability.

Next, I discuss two major concepts inseparably connected with sustainability.

The Circular Economy

On a planet with limited resources and still a growing consumption of raw materials, we need to follow the concepts of the circular economy in our businesses and lives. The circular economy section addresses mainly the hardware side of the butterfly as, here, PLM practices have the most significant impact.

The circular economy requires collaboration among various stakeholders, including businesses, governments and consumers. It involves rethinking production processes and establishing new consumption patterns. Policies and regulations will push for circular economy patterns, as seen in the following paragraphs.

Systems Thinking

A significant change in bringing products to the market will be the need to change how we look at our development processes. Historically, many of these processes were linear and only focused on time to market, cost and quality. Now, we have to look into other dimensions, like environmental impact, usage and impact on the planet, as I wrote in the past: Systems Thinking – a must-have skill in the 21st century?

Systems Thinking is a cognitive approach that emphasizes understanding complex problems by considering interconnections, feedback loops, and emergent properties. It provides a holistic perspective and explores multiple viewpoints.

Systems Thinking guides problem-solving and decision-making and requires you to treat a solution with a mindset of a system interacting with other systems.

Regulations

More sustainable products and services will be driven primarily by existing and upcoming regulations. In this section, I refer to the success of the CFC (chlorofluorocarbon) emission reduction, which is slowly fixing the hole in the ozone layer. Current regulations like WEEE, RoHS and REACH are already relevant for many companies, and compliance with these regulations is a good exercise for more stringent regulations related to carbon emissions and the upcoming Digital Product Passport.

Making regulatory compliance a part of the concept phase ensures no late changes are needed to become compliant, saving time and costs. In addition, handling regulatory compliance as much as possible with a data-driven approach reduces the overhead required to prove compliance. Both topics are part of a PLM strategy.

In this context, see Lionel Grealou’s article 5 Brand Value Benefits at the Intersection of Sustainability and Product Compliance. The article has also been shared in our PGGA LinkedIn group.

Business

On the business side, the Greenhouse Gas Protocol is explained, including how companies will have to report their Scope 1 and Scope 2 emissions and, ultimately, Scope 3 (see the image below for the details).

GHG reporting will support companies, investors and consumers to decide where to prioritize and put their money.
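To make the reporting idea a bit more concrete, here is a minimal sketch that sums emissions per scope. Only the three scope labels come from the text above; the activity names and all figures are invented placeholders, and real GHG reporting involves far more than a simple sum.

```python
from collections import defaultdict

# Hypothetical emission records: (activity, scope, tonnes CO2-equivalent).
# All names and numbers are invented for illustration only.
RECORDS = [
    ("company vehicles", 1, 120.0),
    ("on-site boilers", 1, 80.0),
    ("purchased electricity", 2, 300.0),
    ("purchased components", 3, 950.0),
    ("product use by customers", 3, 1400.0),
]


def totals_per_scope(records):
    """Sum emissions (tCO2e) per GHG Protocol scope (1, 2 or 3)."""
    totals = defaultdict(float)
    for _activity, scope, tonnes in records:
        if scope not in (1, 2, 3):
            raise ValueError(f"unknown scope: {scope}")
        totals[scope] += tonnes
    return dict(totals)


if __name__ == "__main__":
    for scope, tonnes in sorted(totals_per_scope(RECORDS).items()):
        print(f"Scope {scope}: {tonnes:.1f} tCO2e")
```

In this made-up example, Scope 3 dominates the total, which is why the upstream and downstream data matter so much for a PLM strategy.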

Ultimately, companies have to be profitable to survive in their business. The ESG framework is relevant in this context as it will allow investors to put their money not only based on short-term gains (as expected) but also on Environmental or Social parameters. There are a lot of discussions related to the ESG framework, as you might have read in Vincent de la Mar’s monthly newsletter, Sustainability & ESG Insights, which is also published in our PGGA group (link below).

Besides ESG guidelines, there is also the drive by governments and consumers to push for a Product as a Service economy. Instead of owning products, consumers would pay for the usage of these products.

The concept is not new when considering lease cars, EV scooters, or streaming services like Spotify and Netflix. In the CIMdata PLM Roadmap/PDT Fall 2021 conference, we heard Kenn Webster explaining: In the future, you will own nothing & you will be happy.

Changing the business to a Product as a Service is not something done overnight. It requires repairable, upgradeable products. And business related, it requires a connected ecosystem of all stakeholders – the manufacturer, the finance company, and the operating entities.

Digital Transformation

All the subjects discussed before require real-time reporting and analysis combined with data access to compliance-related databases; more on this in the section related to Life Cycle Assessment. As I discussed last year at several conferences, a sustainability initiative starts with data-driven and model-based approaches during the concept phase, but also when manufacturing and operating (connected) products in the field. You can read the entire story here: Sustainability and Data-Driven PLM – the Perfect Storm.

Life Cycle Analysis

Special attention is given in this chapter to Life Cycle Analysis, which seems to be a popular topic among PLM vendors. Here, they can provide tools to make a lifecycle assessment, and you can read an impression of these tools in a guest blog from Roger L. Franz titled PLM Tools to Design for Sustainability – PLM Green Global Alliance.

However, Lifecycle Analysis is not that simple. The ISO 14040 framework describes how, with the right goal and scope in mind, you can perform an LCA in which the Product Category Rules (PCRs) enable companies to compare their products with others.

PCRs include the description of the product category, the goal of the LCA, functional units, system boundaries, cut-off criteria, allocation rules, impact categories, information on the use phase, units, calculation procedures, requirements for data quality, and other information on the lifecycle Inventory Phase.
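As a small illustration of why this is more than a tool question, the sketch below treats the PCR elements listed above as a checklist and reports which ones a study definition has not yet filled in. The element names are paraphrased from the paragraph above; the dictionary format and the function name are assumptions made for this example, not part of any standard or tool.

```python
# PCR elements, paraphrased from the list in the text above.
PCR_ELEMENTS = [
    "product category description",
    "goal of the LCA",
    "functional units",
    "system boundaries",
    "cut-off criteria",
    "allocation rules",
    "impact categories",
    "use phase information",
    "units",
    "calculation procedures",
    "data quality requirements",
]


def missing_elements(study):
    """Return the PCR elements that are absent or empty in a study definition."""
    return [element for element in PCR_ELEMENTS if not study.get(element)]


if __name__ == "__main__":
    # Hypothetical, incomplete study definition.
    draft = {"goal of the LCA": "compare two packaging designs",
             "functional units": "one shipped box"}
    for element in missing_elements(draft):
        print("still to define:", element)
```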

So be aware there is more to do than installing a tool.

Digital Twin

This section describes the importance of implementing a digital twin for the design phase, allowing companies to develop, test and analyze their products and services first virtually. Trade-off studies on virtual products are much cheaper, and they are most efficient when done in a data-driven, model-based environment. In my terminology, setting up such a collaboration environment might be considered a System of Engagement.

The second crucial digital twin mentioned is the digital twin of a product in operation, where performance can be monitored and usage can be optimized for a minimal environmental impact. If a company is able to create a feedback loop between its products in the field and its product innovation platform, it can benchmark its design models and update the product behavior for better performance.

The manufacturing digital twin is also discussed in the context of environmental impact, as choosing the right processes and resources can significantly affect scope 3 emissions.

The chapter finishes with the story of a fictitious company, WePack, where we can follow the impact and implementations of the topics described in this chapter.

Conclusion

As I described in the introduction, the topic of PLM and Sustainability is relatively new and constantly evolving. What do you think? Did I miss any dimensions?

Feel free to contribute to our PLM Global Green Alliance LinkedIn group.

During my summer holiday in my “remote” office, I had the chance to digest what I recently read, heard, saw and discussed related to the future of PLM.

I noticed this year/last year that many companies are discussing or working on their future PLM. It is time to make progress after COVID, particularly in digitization.

And as most companies are avoiding the risk of a “big bang”, they are exploring how they can improve their businesses in an evolutionary mode.

PLM is no longer a system

The most significant change I noticed in my discussions is the growing awareness that PLM is no longer covered by a single system.

More and more, PLM is considered a strategy, with which I fully agree. Therefore, implementing a PLM strategy requires holistic thinking and an infrastructure of different types of systems, where possible, digitally connected.

This trend is bad news for the PLM vendors as they continuously work on an end-to-end portfolio where every aspect of the PLM lifecycle is covered by one of their systems. The company’s IT department often supports the PLM vendors, as IT does not like a diverse landscape.

The main question is: “Every PLM Vendor has a rich portfolio on PowerPoint mentioning all phases of the product lifecycle.

However, are these capabilities implementable in an economical and user-friendly manner by actual companies, or should PLM players change their strategy?”

A question I will try to answer in this post.

The future of PLM

I have discussed several observed changes related to the effects of digitization in my recent blog posts, referencing others who have studied these topics in their organizations.

Some of the posts to refresh your memory are:

- Time to split PLM?

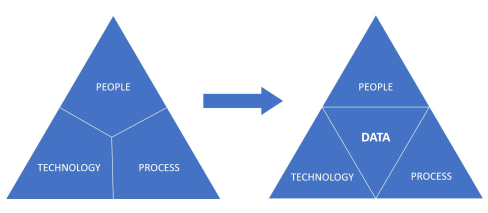

- People, Processes, Data and Tools?

- The rise and the fall of the BOM?

- The new side of PLM? Systems of Engagement!

To summarize, the following points have been discussed in these posts:

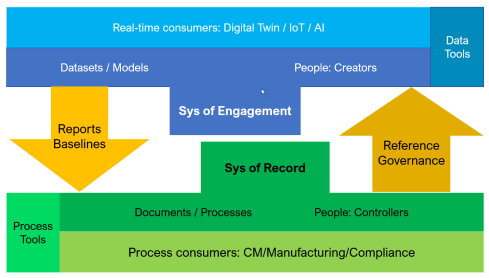

The As Is:

- The traditional PLM systems are examples of a System of Record, not designed to be end-user friendly but designed to have a traceable baseline for manufacturing, service and product compliance.

- The traditional PLM systems are tuned to a mechanical product introduction and release process in a coordinated manner, with a focus on BOM governance.

- The legacy information is stored in BOM structures and related specification files.

System of Record (ENOVIA image 2014)

The To Be:

- We are not talking about a PLM system anymore; a traditional System of Record will be digitally connected to different Systems of Engagement / Domains / Products, which have their own optimized environment for real-time collaboration.

- The BOM structures remain essential for the hardware part; however, overarching structures are needed to manage software and hardware releases for a product. These structures depend on connected datasets.

- To support digital twins at the various lifecycle stages (design, manufacturing, operations), product data needs to be based on and consumed by models.

- A future PLM infrastructure is hybrid, based on a Single Source of Change (SSoC) and an Authoritative Source of Truth (ASoT) instead of a Single Source of Truth (SSoT).
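To illustrate the idea of an overarching structure that depends on connected datasets, here is a minimal sketch. It is my own simplification, not a description of any vendor’s data model: the class names, system names and identifiers are all hypothetical. The point is that the release structure holds references to datasets that stay in their own systems instead of copying them.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass(frozen=True)
class DatasetRef:
    """Reference to a dataset that stays in its own system (hypothetical)."""
    system: str      # e.g. a System of Record or a System of Engagement
    identifier: str
    revision: str


@dataclass
class ProductRelease:
    """Overarching structure linking a hardware BOM and software releases."""
    name: str
    hardware_bom: DatasetRef
    software: List[DatasetRef] = field(default_factory=list)

    def systems_involved(self) -> Set[str]:
        """Systems that must be connected to resolve this release."""
        return {self.hardware_bom.system} | {s.system for s in self.software}


if __name__ == "__main__":
    release = ProductRelease(
        name="Product X, release 2",
        hardware_bom=DatasetRef("PLM-SoR", "EBOM-1001", "C"),
        software=[DatasetRef("ALM", "controller-fw", "4.2.0")],
    )
    print(sorted(release.systems_involved()))
```

Because only references are stored, each system remains the authoritative source for its own data, which fits the hybrid infrastructure described in the list above.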

Various Systems of Engagement

Related podcasts

I relistened to two podcasts before writing this post, and I think they are a must to listen to.

The Peer Check podcast from Colab episode 17 — The State of PLM in 2022 w/Oleg Shilovitsky. Adam and Oleg have a great discussion about the future of PLM.

Highlights: From System to Platform: the new normal. A Single Source of Truth doesn’t work anymore; it is about value streams. People in big companies fear making wrong PLM decisions, which is seen as a significant risk for your career.

There is no immediate need to change the current status quo.

The Share PLM Podcast – Episode 6: Revolutionizing PLM: Insights from Yousef Hooshmand. Yousef talked with Helena and me about proven ways to migrate an old PLM landscape to a modern PLM/Business landscape.

Highlights: The term Single Source of Change and the existing concepts of a hybrid PLM infrastructure based on his experiences at Daimler and now at NIO. Yousef stresses the importance of having the vision and the executive support to execute.

The time of “big bangs” is over, and Yousef provided links to relevant content, which you can find here in the comments.

In addition, I want to point to the experiences provided by Erik Herzog in the Heliple project using OSLC interfaces as the “glue” to connect (in my terminology) the Systems of Engagement and the Systems of Record.

If you are interested in these concepts and want to learn and discuss them with your peers, more can be learned during the upcoming CIMdata PLM Roadmap / PDT Europe conference.

In particular, look at the agenda for day two if you are interested in this topic.

The future for the PLM vendors

If you look at the messaging of the current PLM Vendors, none of them is talking about this federated concept.

They are more focused with their messaging on the transition from on-premise to the cloud, providing a SaaS offering with their portfolio.

I was slightly disappointed when I saw this article on Engineering.com provided by Autodesk: 5 PLM Best Practices from the Experiences of Autodesk and Its Customers.

The article is tool-centric, with statements that make sense and could be written by any PLM Vendor. However, Best Practice #1 Central Source of Truth Improves Productivity and Collaboration was the message that struck me. Collaboration comes from connecting people, not from the Single Source of Truth utopia.

I don’t believe PLM Vendors have to be afraid of losing their installed base rapidly with companies using their PLM as a System of Record. There is so much legacy stored in these systems that might still be relevant. The existence of legacy information, often documents, makes a migration or swap to another vendor almost impossible and unaffordable.

The System of Record is incompatible with data-driven PLM capabilities

I would like to see clearer developments from the PLM Vendors, creating a plug-and-play infrastructure for Systems of Engagement. Plug-and-play solutions could be based on a neutral partner collaboration hub like ShareAspace or the Systems of Engagement I discussed recently in my post and interview: The new side of PLM? Systems of Engagement!

Plug-and-play systems of engagement require interface standards, and PLM Vendors will only move in this direction if customers are pushing for that, and this is the chicken-and-egg discussion. And probably, their initiatives are too fragmented at the moment to come to a standard. However, don’t give up; keep building MVPs to learn and share.

Some people believe AI, with the examples we have seen with ChatGPT, will be the future direction without needing interface standards.

I am curious about your thoughts and experiences in that area and am willing to learn.

Talking about learning?

Besides reading posts and listening to podcasts, I also read an excellent book this summer. Martijn Dullaart, often participating in PLM and CM discussions, had decided to write a book based on the various discussions related to part (re-)identification (numbering, revisioning).

As Martijn starts in the preface:

“I decided to write this book because, in my search for more knowledge on the topics of Part Re-Identification, Interchangeability, and Traceability, I could only find bits and pieces but not a comprehensive work that helps fundamentally understand these topics”.

I believe the book should become standard literature for engineering schools that deal with PLM and CM, for software vendors and implementers and last but not least companies that want to improve or better clarify their change processes.

Martijn writes in an easily readable style and uses step-by-step examples to discuss the various options. There are even exercises at the end to use in a classroom or for your team to digest the content.

The good news is that the book is not about the past. You might also know Martijn for our joint discussion, The Future of Configuration Management, together with Maxime Gravel and Lisa Fenwick, on the impact of a model-based and data-driven approach to CM.

I plan to come back soon with a more dedicated discussion with Martijn. Meanwhile, start reading the book. Get your free chapter if needed by following the link at the bottom of this article.

I recommend buying the book as a paperback so you can navigate easily between the diagrams and the text.

Conclusion

The trend for federated PLM is becoming more and more visible as companies start implementing these concepts. The end of monolithic PLM is a threat and an opportunity for the existing PLM Vendors. Will they work towards an open plug-and-play future, or will they keep their portfolios closed? What do you think?

Two weeks ago, I shared my post: Modern PLM is (too) complex on LinkedIn, and apparently, it was a topic that touched many readers. Almost a hundred likes, fifty comments and six shares. Not the usual thing you would expect from a PLM blog post.

In addition, the article led to offline discussions with peers, giving me an even better understanding of what people think. Here is a summary of the various talks.

What is PLM?

In particular, since the inception of Product Lifecycle Management, software vendors have battled with the various PLM definitions.

Initially, PLM was considered an engineering tool for product development, with an extensive potential set of capabilities supported by PowerPoint. Most companies actually implemented a collaborative PDM system at that time and named it PLM.

Was PLM really understood? Look at the infamous Autodesk CEO Carl Bass’s anti-PLM rap from 2007. Next, in 2012, Autodesk introduced its PLM solution called Autodesk PLM 360 as one of the first cloud solutions.

Only with growing connectivity and enterprise information sharing did the definition of PLM start to change.

PLM became a product information backbone serving downstream deployment with product data – the traditional Teamcenter, Windchill and ENOVIA implementations are typical examples of this phase.

With a digitization effort taking place in the non-PLM domain, connecting product development, design and delivery data to a company’s digital business became necessary. You could say, and this is the CIMdata definition:

PLM is a strategic business approach that applies a consistent set of business solutions that support the collaborative creation, management, dissemination, and use of product definition information. PLM supports the extended enterprise (customers, design and supply partners, etc.)

I agree with this definition; perhaps 80% of our PLM community does. But how many times have we been trapped again in the same thinking: PLM is a system?

The most recent example is the post from Oleg Shilovitsky last week where he claims: Discover why OpenBOM reigns supreme in the world of PLM!

Nothing wrong with that, as software vendors will always tweak definitions as they need marketing to make a profit, but PLM is not a system.

My main point is that PLM is a “vague” community label with many interpretations. Software vendors have the most significant marketing budget to push their unique definitions. However, various practitioners in the field also have their own interpretations.

And maybe Martin Haket’s comment to the post says it all (partial quote):

I’m a bit late to this discussion, but in my opinion, the complexity is mainly due to the fact that the ownership of the processes and data models underlying PLM are not properly organized. ‘Everybody’ in the company is allowed to mix in the discussion and have their opinion; legacy drives departments to undesirable requirements leading to complex implementations.

My intermediate conclusion: Our legacy and lack of a single definition of PLM make it complex.

The PLM professional

On LinkedIn, there are approximately 14,000 PLM consultants in my first and second levels of connections. This number indicates that the label “PLM Consultant” has a specific recognition.

During my “PLM is complex” discussion, I noticed Roger Tempest’s Professional PLM White paper and started the dialogue with him.

Roger Tempest is one of the co-founders of the PLM Interest Group. He has been trying to create a baseline for a foundational PLM certification with several others. We discussed the challenges of getting the PLM Professional recognized as an essential business role. Can we certify the PLM professional the same way as a certified Configuration Manager or certified Project Manager?

I shared my thoughts with Roger, claiming that our discipline is too vague and diverse and that finding a common baseline is hard.

Therefore, we are curious about your opinion too. Please tell us in the comments to this post what you think about recognizing the PLM professional and what skills should be the minimum. What are the basics of a PLM professional?

In addition, I participated in some of the SharePLM podcast recordings with PLM experts from the field (follow us here). I raised the PLM professional question either during the podcast or during the preparation of the after-party. Here, too, there was no single answer.

So much is part of PLM: people (culture, skills), processes & data, tools & infrastructures (architectures, standards) combined with execution (waterfall/agile?)

My intermediate conclusion: The broadness of PLM makes it complex to have a common foundation.

More about complexity

PEOPLE: Let’s zoom in on the aspects of complexity, starting from the People, Processes, Data and Tools discussion. The first thing mentioned is “the people”; organizations usually claim: “the most important assets in our organization are the people”.

However, people are usually the last dimension considered in business changes. Companies start with the tools, try to build the optimal processes and finally push the people into that framework by training, incentives or just force.

The reason for the last approach is that dealing with people is complex. People have their beliefs, their legacy and their motivation. And if people do not feel connected to the business (change), they will become an obstacle to change – look at the example below from my 2014 PI Apparel presentation:

To support the importance of people, I am excited to work with Share PLM and the Season 2 podcast series.

In these episodes, we talk with successful PLM experts about their lessons learned during PLM implementation. You will discover it is a learning process, and connecting to people in different cultures is essential. As it is a learning process, you will find it takes time and human skills to master this complexity.

Often human skills are called “soft skills”, but actually, they are “vital skills”!

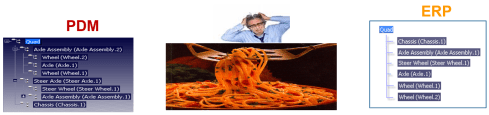

PROCESSES: Regarding the processes part, this is another challenging topic. Often we try to simplify processes to make them workable (sounds like a good idea). With many seasoned PLM practitioners coming from the mechanical product development world, it is not a surprise that many proposed PLM processes are BOM-centric – building on PDM and ERP capabilities.

In my post: The rise and fall of the BOM? I started with this quote from Jan Bosch:

An excessive focus on the bill of materials leads to significant challenges for companies that are undergoing a digital transformation and adopting continuous value delivery. The lack of headroom, high coupling and versioning hell may easily cause an explosion of R&D expenditure over time.

Today’s organization and product complexity does not allow us to keep the processes simple to remain competitive. In that context, have a look at Erik Herzog’s comment on PLM complexity:

I believe a contributing factor to making PLM complex lies in our tendency to make too many simplifications. Do we understand a simple thing such as configuration change management in incremental development? At least in my organization, there is room for improvement.

In the comment, Erik also provided a link to his conference paper, Introducing the 4-Box Development Model, describing the potential interaction between Systems Engineering and Configuration Management. The topic may be too complex for your current company; however, it illustrates that you cannot generalize and simplify PLM overall.

In addition to Erik’s comments, I want to mention again that we can change our business processes thanks to a modern, connected, data-driven infrastructure. From coordinated to connected working with a mix of Systems of Engagement (new) and Systems of Record (traditional). There are no solid best practices yet, but the real PLM geeks are becoming visible.

TOOLS & DATA: When discussing the future: From Coordinated to Connected, there has always been a discussion about the legacy.

Should we migrate the legacy data and systems and replace them with new tools and data models? Or are there other options? The interaction of tools and data is often the domain of Enterprise Solution Architects. The Solution Architect’s role becomes increasingly important in a modern, data-driven company, and several are pretty active in PLM, if you know how to find them, because they are not in the mainstream of PLM.

This week we made a SharePLM podcast recording with Yousef Hooshmand. Last year, I wrote about his paper “From a Monolithic PLM Landscape to a Federated Domain and Data Mesh”, in which Yousef describes the complex process, from his time working at Daimler, of slowly replacing old legacy infrastructure with a modern, user/role-centric, data-driven infrastructure.

Watch out for this recording to be published soon, as Yousef shares various thought-provoking experiences. Not to provoke our community but to create awareness that a transformation is possible when you have the right long-term vision, strategy and C-level support.

Fighting complexity

Note: We have CM people involved in many of the PLM discussions. I think they are fighting complexity similar to that of others in the PLM domain. However, they have the benefit that their role, Configuration Manager, is recognized and supported by a commercial certification organization (the Institute of Process Excellence – IpX).

While completing this post, I read this article from Oleg Shilovitsky: PLM User Groups and Communities. At first glance, you might think that PLM User Groups and Communities could be the solution to address the complexity.

And I think they do; most PLM vendors orchestrate their own User Groups and Communities. Depending on your tool vendor, you will find like-minded people supported by vendor experts. Are they reducing the complexity? Probably not, as they are at the end of the People, Processes, Data and Tools discussion. You are already working within a specific boundary.

Based on my experience as a core PLM Global Green Alliance member, I think PLM-neutral communities are not viable. There is very little interaction in this community, which currently has 686 members, although the topics are very relevant today. Yes, people want to consume and learn, but making time available to share is, unfortunately, impossible when not financially motivated. Sharing opinions, yes, but working on topics: we are too busy.

Conclusion

The term PLM seems adequate to identify a group with a common interest (and skills?). Due to its broad scope and many aspects, it is impossible to create a standard job description for the PLM professional, and we must learn to live with that; see my arguments.

What do you think?

Last week I enjoyed visiting LiveWorx 2023 on behalf of the PLM Global Green Alliance. PTC had invited us to understand their sustainability ambitions and meet with the relevant people from PTC, partners, customers and several of my analyst friends. It felt like a reunion.

In addition, I used the opportunity to better understand their Velocity SaaS offering with OnShape and Arena. The almost four-day event, with approximately 5000 attendees, was massive and well-organized.

So many people were excited that this was again an in-person event after four years.

With PTC’s broad product portfolio, you could easily have a full agenda for the whole event, depending on your interests.

Personally, I was motivated to keep a relatively full schedule focusing purely on Sustainability, leaving all these other beautiful end-to-end concepts for another time.

Here are some of my observations

Jim Heppelmann’s keynote

The primary presentation of such an event is the keynote from PTC’s CEO. This session allows you to understand the company’s key focus areas.

My takeaways:

- Need for Speed: Software-driven innovation, or as Jim said, Software is eating the BOM, reminding me of my recent blog post: The Rise and Fall of the BOM. Here Jim was referring to the integration with ALM (CodeBeamer) and IoT to have full traceability of products. However, including Software also requires agile ways of working.

- Need for Speed: Agile ways of working – the OnShape and Arena offerings are examples of agile working methods. A SaaS solution is easy to extend with suppliers or other stakeholders. PTC calls this their Velocity offering, typical Systems of Engagement, and I spoke later with people working on this topic. More in the future.

- Need for Speed: Model-based digital continuity – a theme I have discussed in my blog post too. Here Jim explained the interaction between Windchill and ServiceMax, both Systems of Record for product definition and operation.

- Environmental Sustainability: introducing Catherine Kniker, PTC’s Chief Strategy and Sustainability Officer, announcing that PTC has committed to Science Based Targets, pledging near-term emissions reductions and long-term net-zero targets – see image below and more on Sustainability in the next section.

- A further investment in a SaaS architecture, announcing CREO+ as a SaaS solution supporting dynamic multi-user collaboration (a System of Engagement).

- A further investment in the partnership with Ansys, which fits the needs of a model-based future where modeling and simulation go hand in hand.

You can watch the full session Path to the Future: Products in the Age of Transformation here.

Sustainability

The PGGA spoke with Dave Duncan and James Norman last year about PTC’s sustainability initiatives. Remember: PLM and Sustainability: talking with PTC. Therefore, Klaus Brettschneider and I were happy to meet Dave and James in person just before the event and align on understanding what’s coming at PTC.

We agreed there is no “sustainability super app”; it is more about providing an open, digital infrastructure to connect data sources at any time of the product lifecycle, supporting decision-making and analysis. It is all about reliable data.

Product Sustainability 101

On Tuesday, Dave Duncan gave a great introductory session, Product Sustainability 101, addressing Business Drivers and Technical Opportunities. Dave started by explaining the business context aiming at greenhouse gas (GHG) reduction based on science-based targets, describing the content of Scope 1, Scope 2 and Scope 3 emissions.

The image above, which came back in several presentations later that week, nicely describes the mapping of lifecycle decisions and operations in the context of the GHG protocol.

Design for Sustainability (DfS)

On Wednesday, I started with a session moderated by James Norman titled Design for Sustainability: Harnessing Innovation for a Resilient Future. The panel consisted of Neil D’Souza (CEO Makersite), Tim Greiner (MD Pure Strategies), Francois Lamy (SVP Product Management PTC) and Asheen Phansey (Director ESG & Sustainability at PagerDuty). You can find the topic discussed below:

Some of the notes I took:

- No specific PLM modules are needed; LCA needs to become an additional practice for companies, and they rely on a connected infrastructure.

- Where to start? First, understand the current baseline based on data collection – what is your environmental impact? Next, decide where to start.

- The importance of Design for Service – many companies design products for easy delivery, not for service. Being able to service products better will extend their lifetime, therefore reducing their environmental impact (manufacturing/decommissioning).

- There is a value chain for carbon data. In addition, suppliers significantly impact reaching net zero, as many OEMs have an Assembly To Order process, and most of the emissions are generated during part manufacturing.
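To make the "understand the current baseline" note a bit more tangible, here is a minimal Python sketch of how collected carbon data could be rolled up to find a starting point. It is only an illustration: the `EmissionRecord` structure, the part and supplier names, and all numbers are hypothetical placeholders and were not shown in the session.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class EmissionRecord:
    """One collected data point; all fields are hypothetical."""
    part: str
    supplier: str
    stage: str        # e.g. "part manufacturing" or "assembly"
    kg_co2e: float    # emissions attributed to this part and stage


def baseline_by(records, key):
    """Sum kg CO2e per value of the given attribute ('stage', 'supplier', ...)."""
    totals = defaultdict(float)
    for record in records:
        totals[getattr(record, key)] += record.kg_co2e
    return dict(totals)


def largest_contributor(totals):
    """Return the name and relative share of the biggest entry in a baseline."""
    grand_total = sum(totals.values())
    name = max(totals, key=totals.get)
    return name, totals[name] / grand_total


# Made-up placeholder numbers, for illustration only.
records = [
    EmissionRecord("housing", "Supplier A", "part manufacturing", 40.0),
    EmissionRecord("bracket", "Supplier B", "part manufacturing", 35.0),
    EmissionRecord("housing", "OEM", "assembly", 15.0),
    EmissionRecord("bracket", "OEM", "assembly", 10.0),
]

by_stage = baseline_by(records, "stage")
hotspot, share = largest_contributor(by_stage)
print(by_stage)
print(f"Start with: {hotspot} ({share:.0%} of the baseline)")
```

The same `baseline_by` call with `"supplier"` as the key would show which suppliers carry most of the footprint, which is where the carbon-data value chain comes in.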

DfS: an example from Cummins

Next, on Wednesday, I attended the session by David Genter of Cummins, who presented their Design for Sustainability (DfS) project.

Dave started by sharing their 2030 sustainability goals:

- On Facilities and Operations: reducing GHG emissions by 50%, reducing water usage by 30%, reducing waste by 25% and reducing organic compound emissions by 50%

- Reducing Scope 3 emissions for new products by 25%

- In general, reducing Scope 3 emissions by 55M metric tons.

The benefits for products were documented using a standardized scorecard (example below) to ensure the benefits are real and not based on wishful thinking.

Many motivated people wanted to participate in the project, and the ultimate result demonstrated that DfS has both business value for Cummins and the environment.

The project has been very well described in this whitepaper: How Cummins Made Changes to Optimize Product Designs for the Environment – a recommended case study to read.

Tangible Strategies for Improving Product Sustainability

The session was a dialogue between Catherine Kniker and Dave Duncan, discussing the strategies to move forward with Sustainability.

They reiterated the three areas where we as a PLM community can improve: Material choice and usage, Addressing Energy Emissions and Reducing Waste. And it is worth addressing them all, as you can see below – it is not only about carbon reduction.

It was an informative dialogue going through the different aspects of where we, as an engineering/PLM community, can contribute. You can watch their full dialogue here: Tangible Strategies for Improving Product Sustainability.

Conclusion

It was encouraging to see that at such an event as LiveWorx, you could learn about Sustainability and discuss Sustainability with the audience and PTC partners. And as I mentioned before, we need to learn to measure (data-driven / reliable data), and we need to be able to work in a connected infrastructure (digital thread) to allow design, simulation, validation and feedback to go hand in hand. It requires adapting a business strategy, not just a tactical solution. With the PLM Global Green Alliance, we are looking forward to following up on these.

NOTE: PTC covered the expenses associated with my participation in this event but did not in any way influence the content of this post – I toured the conference fully independently and was encouraged by all the conversations I had.

Imagine you are a supplier working for several customers, such as big OEMs or smaller companies. In Dec 2020, I wrote about PLM and the Supply Chain because it was an underexposed topic in many companies. Suppliers need their own PLM and IP protection, and they need to work as efficiently as possible with their customers, often the OEMs.