A week ago I attended the joint CIMdata Roadmap and PDT Europe conference in Stuttgart, as you may recall from last week’s post: The weekend after CIMdata Roadmap / PDT Europe 2018. As there was so much information to share, I had to split the report into two posts. This time the focus is on PDT Europe. In general, the PDT conferences have always focused on sharing experiences and developments related to standards, a topic you will not see at PLM vendor conferences. It is therefore your chance to learn and take part if you believe in standards.

This year’s theme: Collaboration in the Engineering and Manufacturing Supply Chain – the Extended Digital Thread and Smart Manufacturing. Industry 4.0 plays a significant role here.

Model-based X: What is it and what is the status?

I have now seen Peter Bilello present this topic several times, and every time there is a little more progress. The fact that there is still an acronym war illustrates that the various aspects of a model-based approach are not yet defined. Some critics will say that is because we do not need model-based and that it is just another vendor marketing trick. Two comments here:

- If you want to implement an end-to-end model-based approach including your customers and supply chain, you cannot avoid standards. More will become clear when you read the rest of this post. Vendors will not promote standards, as standards reduce their ability to deliver something unique. So standards must come from the market, not from marketing.

- In 2007 Carl Bass, at that time CEO of Autodesk, made his statement: “There are only three customers in the world that have a PLM problem; Dassault, PTC, and […]. There are no other companies that say I have a PLM problem”. Have a look here. By now PLM is understood, even by Autodesk. The statement illustrates that in the beginning the PLM target was not clear and people thought PLM was a system instead of a strategic approach. Model-based ways of working have to go through the same learning path, hopefully faster.

Peter’s presentation was a good walk-through, pointing out what exists, where we focus and that there is still work to be done, not by vendors but by companies. Therefore I wholeheartedly agree with Peter’s closing remarks – no time to sit back and watch if you want to benefit from model-based approaches.

Smart Manufacturing

Kenny Swope, known from his presentations related to Boeing, now spoke to us as the Chair of ISO/TC 184/SC 4, the group responsible for Industrial Data. To decode that: Kenny is heading Subcommittee 4 (SC 4), which focuses on Industrial Data. SC 4 is part of a broader theme, Automation systems and integration, covered by Technical Committee 184 (TC 184), all within the ISO framework. The scope:

Standardization of the content, meaning, structure, representation and quality management of the information required to define an engineered product and its characteristics at any required level of detail at any part of its lifecycle from conception through disposal, together with the interfaces required to deliver and collect the information necessary to support any business or technical process or service related to that engineered product during its lifecycle.

Perhaps boring to read if you think about all the demos you have seen at trade shows related to Smart Manufacturing. However, if you want these demos to become reality in a vendor-independent environment, you will need to agree on a common framework of definitions to ensure continuity beyond the demo. And here lies the business excitement: the real competitive advantage companies can gain by implementing Smart Manufacturing in a scalable, future-oriented way.

One of the often-heard statements is that standards are too slow or incomplete. Incompleteness is not a problem: when there is a need, the standard will follow. Compare it with language: we will always invent new words for new concepts.

Being slow might have been the case in the past. Kenny showed the relatively fast convergence from country-specific Smart Manufacturing standards into a joint ISO/IEC framework – all within three years. ISO and IEC have already teamed up to build Smart Manufacturing reference models.

This is already a considerable effort, as the local reference models need to be studied and mapped to a common architecture. The target is to have a first Technical Specification for a joint standard by the end of 2020 – quite fast!

Meinolf Gröpper from the German VDMA presented what they are doing to support Smart Manufacturing / Industrie 4.0. The VDMA is a well-known engineering federation with 3200 member companies, 85 % of which are Small and Medium Enterprises – the power of the German economy.

The VDMA provides networking capabilities and readiness assessments for its members, acting as an enabler for companies to transform. As Meinolf stated, Industrie 4.0 is not about technology; it is about cross-border services and international cooperation. It is a strategy that every company has to develop and, if possible, implement at its own pace. Standards will accelerate the implementation of Industrie 4.0.

The Smart Manufacturing session was concluded by Gunilla Sivard, Professor at KTH in Stockholm and Hampus Wranér, Consultant at Eurostep. They presented the work done on the DIgln project, targeting an infrastructure for Smart Manufacturing.

The presentation showed the implementation of the testbed using twittering bus communication and the ISO 10303-239 PLCS information standard as the persistent layer. The results were promising to further build capabilities on top of the infrastructure below:

The conclusion from the Smart Manufacturing session was that emerging and available standards can accelerate the deployment.

Enabling digital continuity in the Factory of the Future

Alcibiades Gonzalez-Noval from Airbus shared challenges and the strategy for Airbus’s factory of the future, based on digital continuity from the virtual world towards the physical world, connecting with PLM, ERP, and MOM. These are concepts many companies are currently working on, at various stages of maturity.

I agree with his lessons learned. We cannot think in silos anymore in a digital future – everything is connected. And please forget the PoC: to gain time, start piloting and fail or succeed fast. Companies have lost years because they kept doing PoCs and did not go into action. The last point, network segregation, is for sure an issue relevant for plant operations. I also experienced this in the past when promoting PLM concepts for (nuclear) owners/operators of plants. Network security is for sure an issue to resolve.

Cross-Discipline Lifecycle Collaboration Forum

Setting up the digital thread across engineering and the value chain.

Peter Gerber, Chairman of the CDLC Forum and Data Exchange & Integration Leader at Schaeffler, and Pierre Bodin, Senior Manager at Mews Partners, presented their findings on the challenge of making complex products (mechanical, electrical and software, developed using systems engineering methodology) work properly at affordable cost, with real-time, multidisciplinary coordination across the whole value chain. It is something you might expect to be possible when reviewing PLM vendors’ marketing materials, and something you might expect to be hard when remembering Martin Eigner’s statement that 95 % of companies have not solved mechatronics collaboration yet. (See: The weekend after CIMdata PLM Roadmap and PDT Europe)

A demonstrator was defined, and various vendors participated in building a demonstrator based on their Out-Of-The-Box capabilities. The result showed that for all participants there were still gaps to resolve for full collaboration. A new version of the demonstrator is now planned for the middle of next year – curious to learn the results at that time. Multi-disciplinary collaboration is a (conceptual) pillar for future digital business – it needs to be possible.

A Digital Thread based on the PLCS standard.

Nigel Shaw, Eurostep’s managing director in the UK, took us through the evolution of PLCS (Product Life Cycle Support), an extension of the ISO 10303 STEP standard (STEP: Standard for the Exchange of Product model data). Nigel mentioned how, over all these years, millions (and a lot of brain power) have been invested in PLCS to get it to where it is now.

PLCS has been extremely useful as an interface standard for contracting, providing product data in a neutral way. As an example, last year the Swedish Defense organization (FMV) and France’s DGA made PLCS DEXs part of their contractual conditions. It would be too costly to have all product data for all defense systems in proprietary vendor formats throughout the product lifecycle.

Those following standards in the process industry will rely on ISO 15926 / CFIHOS, as this standard’s dictionary and data model are more geared to process data, in particular the exchange of data between the various contractors and the owner/operator.

Coming back to PLCS and the Digital Twin – it is all about digital continuity of information. If we have to recreate information in every lifecycle stage of a product (design / manufacturing / operations), it will be too costly and not digitally connected. This illustrates the growing need for standards. I had nothing to add to Nigel’s conclusions:

It is interesting to note that product management has come a long way over the last 10-20 years. However, as we include more and more in PLM, there are always new concepts to be worked out. The cases we discuss today in our PLM communities were most of the time visions 10 years ago. Nowadays we want to include Model-Based Systems Engineering, 3D modeling and simulation, electronics and software, and even aftermarket and product support in true PLM. This was not the case 20 years ago. The people involved in the development of PLCS were for sure visionaries, as product data connectivity along the whole lifecycle is needed and is enabled by the standard.

Investing in Industry 4.0?

Hard Realities of the Grand Vision.

Marc Halpern from Gartner is one of the regular speakers at the PDT conference. Unfortunately he could not be with us that day. However, through a labor-intensive connection (a mobile phone close to the speaker and Nigel Shaw trying to stay in sync with the presented slides) we could hear Marc speak about what we wanted to achieve too – digital continuity.

Marc restated the massive potential of Industrie 4.0 when it comes to scalability, agility, flexibility, and efficiency.

Although technologies are evolving rapidly, it is the existing legacy that inhibits fast adoption. A topic that was also central in my presentation. It is not just a change in technology, there is much more connected.

Marc recommends a changing role for IT, in which IT focuses more on business priorities and business leadership strategies. This is as opposed to the classical role of the IT organization, where IT needed to support the business; now IT will be part of leading the business too.

To orchestrate such an IT evolution, Marc recommends a “systems of systems” planning and execution across IT and Business. One of my recent blog posts: Moving to a model-based enterprise: The business (information) model can be seen in that context.

How to deal with the incompatible future?

I was happy to conclude the sessions with the topic that concerns me the most at this time. Companies in their current business are already struggling to get aligned and coordinated between disciplines and external stakeholders. The gap to becoming connected is vast, as it requires a master data management approach, an enterprise data model and model-based ways of working. Read my posts from the past ½ year, starting here, and you get the picture.

Note: This image is based on Marc Halpern’s (Gartner) Technology/Maturity diagram from PDT 2015

I concluded by explaining that companies need to learn to work in two modes. One mode is the traditional way of working, which I call the coordinated approach; the other, with a growing focus, is operating in a connected mode. You can see my full presentation here on SlideShare: How to deal with the incompatible future.

Conclusion

The conference closed with a panel discussion where we shared our concerns about the challenges companies face in changing their traditional ways of working while entering a digital era. The positive points are there – baby steps – PLM is becoming understood, and the significance of standards is becoming clearer. The need: a long-term vision.

This concludes my review of an excellent conference – again I learned a lot, and I hope to see you next year too. Thanks again to CIMdata and Eurostep for organizing this event.

Last week I attended the long-awaited joint conference from CIMdata and Eurostep in Stuttgart. As I mentioned in earlier blog posts, I like this conference because it is relatively small, with a focused audience and a chosen theme.

Instead of parallel sessions, all attendees follow the same tracks and after two days there is a common understanding for all. This time there were about 70 people discussing the themes: Digitalizing Reality—PLM’s role in enabling the digital revolution (CIMdata) and Collaboration in the Engineering and Manufacturing Supply Chain –the Extended Digital Thread and Smart Manufacturing (EuroStep)

As you can see, it is all about Digital. Here are my comments:

The State of the PLM Industry:

The Digital Revolution

Peter Bilello kicked off by providing an overview of the PLM industry. The PLM market showed an overall growth of 7.3 % to 43.6 billion dollars. Zooming in on the details, cPDM grew by 2.9 %. The significant growth came from the PLM tools (7.7 %). The Digital Manufacturing sector grew by 6.2 %. In my opinion, these numbers show that managing collaboration in particular remains the challenging part of PLM. It is easier to buy tools than to invest in cPDM.
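To put the growth figure in perspective, here is a small back-of-the-envelope sketch. It assumes the 43.6 billion dollars is the market size after the 7.3 % growth; the function name and variables are my own and purely illustrative, not part of CIMdata's reporting.

```python
# Figures quoted in the post; units are billions of US dollars and percent.
MARKET_TOTAL = 43.6        # assumed to be the size AFTER growth
OVERALL_GROWTH_PCT = 7.3


def previous_size(current, growth_pct):
    """Size before growth, given the size after growth and the growth rate in percent."""
    return current / (1 + growth_pct / 100)


previous_total = previous_size(MARKET_TOTAL, OVERALL_GROWTH_PCT)
absolute_growth = MARKET_TOTAL - previous_total

if __name__ == "__main__":
    print(f"Implied previous total:  {previous_total:.1f} billion")
    print(f"Implied absolute growth: {absolute_growth:.1f} billion")
```

Under that assumption the market was roughly 40.6 billion dollars the year before, so the 7.3 % corresponds to about 3 billion dollars of additional spending.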

Peter mentioned that you cannot sell PLM at the board level, as the acronym is too strongly framed as an engineering tool. Also, people at the board level have been trained to interpret transactional data and build strategies on it. They might embrace Digital Transformation. However, the product innovation domain is hard to define in numbers. What is the value of collaboration? How do you measure and value innovation coming from R&D? Recently we have seen simplified approaches to getting more value from PLM. I agree with Peter: we need to avoid the PLM framing and find more consumable value statements.

Nothing to add to Peter’s closing remarks:

An Alternative View of the Systems Engineering “V”

For me, the most interesting presentation of Day 1 was Don Farr’s presentation. Don and his Boeing team worked on depicting the Systems Engineering process for a Model-Based environment. The original “V” looks like a linear process and does not reflect the multi-dimensional iterations at various stages, the concept of a virtual twin and the various business domains that need to be supported.

The result was the diamond symbol above. Don and his team have created a consistent story around the depicted diamond, which goes too far for this blog post. Currently the diamond concept is copyrighted by Boeing, but I expect we will see more of this in the future, as the classical systems engineering “V” was not designed for our model-based view of the virtual and physical products we need to design AND maintain.

Sponsor vignette sessions

The vignette sponsors of the conference, Aras, ESI Group, Granta Design, HCL, Oracle and TCS, all got a ten-minute slot to introduce themselves and the topics they believed were relevant for the audience. These slots served as a teaser to come to their booths during a break. Interesting for me was Granta Design, who are bringing a complementary data service related to materials along the product lifecycle, providing digital continuity for material information. See below.

The PLM – CLM Axis vital for Digitalization of Product Process

Mikko Jokela, Head of Engineering Applications CoE at ABB, completed the morning sessions and left me with a lot of questions. Mikko’s mission is to provide the ABB companies with an information infrastructure that provides end-to-end digital services for the future, based on apps and platform thinking.

Apparently, the digital continuity will be provided by all kinds of BOM structures, as you can see below. In my post, Coordinated or Connected, related to a model-based enterprise, I call this a coordinated approach, which is a current best practice, not an approach for the future. For the future we want a model-based enterprise instead of a BOM-centric approach to ensure a digital thread. See also Don Farr’s diamond. When I asked Mikko which data standard(s) ABB will use to implement their enterprise data model, it became clear there was no concept in place yet. Perhaps an excellent opportunity to look at PLCS for the product-related schema.

A general comment: Many companies are thinking about building their own platform. Not all will build their platform from scratch. Those starting from scratch should have a look at existing standards for their industry. And to manage the quality of data, you will need to implement Master Data Management, where for the product part the PLM system can play a significant role. See Master Data Management and PLM.

Systems of Systems Approach to Product Design

Professor Martin Eigner’s keynote presentation was about how new products and markets need a Systems of Systems approach combined with Model-Based Systems Engineering (MBSE) and Product Line Engineering (PLE), where the PLM system can be the backbone to support the MBSE artifacts in context. All these concepts require new ways of working as stated below:

And this is a challenge. A quick survey in the room (coherent with my observations from the field) showed that most companies (95 %) have not even managed to work in an integrated way for mechatronics products. You can imagine the challenge of also incorporating software, simulation, and other business disciplines. Martin’s presentations always offer an excellent conceptual framework and, for those who want to dive deeper, a starting point for discussion and learning.

Additive Manufacturing (Enabled Supply) at Moog

Moog Inc, a manufacturer of precision motion controls for various industries, has made a strategic move towards Additive Manufacturing. Peter Kerl, Moog’s Engineering Systems Manager, gave a good introduction to what is meant by Additive Manufacturing and how Moog is introducing it in their organization to create more value for their customer base and attract new customers in a less commoditized domain. As you can imagine, delivering products through Additive Manufacturing requires new skills (Design / Materials), new processes and a new organizational structure. And of course a new PLM infrastructure.

Jim van Oss, Moog’s PLM Architect and Strategist, explained how they have been involved in a technology solution for digitally enabled parts leveraging blockchain technology. Have a look at their VeriPart trademark. It was interesting to learn from Peter and Jim that they are actively working in a space that, according to Gartner’s hype curve, is in the early transform phase. Peter and Jim’s presentations were very educational for the audience.

For me, it was also interesting to learn from Jim that at Moog they are really practicing the two modes for PLM in their company. They have two PLM implementations: one with the legacy data, which is the wrong data for the future, and one with the new data model for the future. Both implementations are built on the same PLM vendor’s release. A great illustration showing that the past and the future data for PLM are not compatible.

Value Creation through Synergies between PLM & Digital Transformation

Daniel Dubreuil, Safran’s CDO for Products and Services, gave an entertaining lecture on Safran’s PLM journey and the introduction of new digital capabilities, moving from an inward-looking PLM system towards a digital infrastructure that supports internal collaboration (model-based systems engineering / multiple BOMs) and external collaboration with customers and suppliers, introducing new business capabilities. Daniel gave a very precise walk-through with examples from the real world. The concluding slide, KEY SUCCESS FACTORS, was one we have seen many times at PLM events.

Apparently, the key success factors are known. However, most of the time one or more of these points are not possible to address due to various reasons. Then the question is: How to mitigate this risk as there will be issues ahead?

Bringing all the digital trends together. What’s next?

The day ended with a virtual Fire Place session between Peter Bilello and Martin Eigner. The audience did not see a fireplace; however, my augmented twitter feed did it for me:

Some interesting observations from this dialogue:

Peter: “Having studied physics is a good base for understanding PLM as you have to model things you cannot see” – As I studied physics I can agree.

Martin: “Germany is the center of knowledge for Mechanical, the US for Electronics and now China becoming the center for Electronics and Software” Interesting observation illustrating where the innovation will come from.

Both Peter and Martin spent serious time on the importance of multidisciplinary education. We are teaching people in silos, and faculties work in silos. We all believe these silos must be broken down. It is hard to learn and experiment with skills for the future. Where to start and lead?

Conclusion:

The PLM Roadmap had some exciting presentations which, combined with CIMdata’s PLM update, made it an excellent opportunity to learn and discuss reality, in particular new methodologies and technologies beyond the hype. I want to thank CIMdata for the superb organization and for allowing me to take part. Next week I will follow up with a review of the PDT Europe conference part (Day 2).

Ontology example: description of the business entities and their relationships

In my recent posts, I have talked a lot about the model-based enterprise. Already after my first post, Model-Based – an introduction, I got a lot of feedback showing that most of the audience automatically associated the words Model-Based with a 3D CAD Model.

Trying to clarify this through my post: Why Model-Based – the 3D CAD Model stirred up the discussion even more, leading into: Model-Based: The confusion.

A Digital Twin of the Organization

At that time, I briefly touched on business models and business processes that also need to be reshaped and built for a digital enterprise. Business modeling is necessary if you want to understand and streamline large enterprises, where nobody has an overview of the whole company. This approach is like systems engineering, where we try to understand and simulate complex systems.

With this post, I want to close the Model-Based series and focus on the aspects of the business model. I was caught by this catchy article: How would you like a digital twin of your organization? which provides a nice introduction to this theme. Also, I met with Steve Dunnico, Creator and co-founder of Clearvision, a Swedish startup company focusing on modern ways of business modeling.

Introduction

Jos (VirtualDutchman): Steve, can you give us an introduction to your company and which parts of the model-based enterprise you are addressing with Clearvision?

Steve (Clearvision): Clearvision started as a concept over two decades ago – modeling complex situations across multiple domains needed a simplistic approach to create a copy of the complete ecosystem. Along the way, technology advancements have opened up big-data to everyone, and now we have Clearvision as a modeling tool/SaaS that creates a digital business ecosystem that enables better visibility to deliver transformation.

As we all know, change is constant, so we must transition from the old silo projects and programs to a business world of continuous monitoring and transformation.

Clearvision enables this by connecting the disparate parts of an organization into a model linking people, competence, technology services, data flow, organization, and processes.

Complex inter-dependencies can be visualized, showing impact and opportunity to deliver corporate transformation goals in measured minimum viable transformation – many small changes, with measurable benefit, delivered frequently. This is what Clearvision enables!

Jos: What is your definition of business modeling?

Steve: Historically, business modeling has long been the domain of financial experts – taking the “business model” of the company (such as production, sales, support) and looking at cost, profit, and margins for opportunity, then remodeling to suit. Now, with the availability of increased digital data about many dimensions of a business, it is possible to model more than the financials.

This is the business modeling that we (Clearvision) work with – connecting all the entities that define a business so that a change is connected to process, people, data, technology and other dimensions such as cost, time, quality. So if we change a part, all of the connected parts are checked for impact and benefit.
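The "if we change a part, all of the connected parts are checked" idea can be illustrated with a small graph traversal. This is only a minimal sketch under my own assumptions, not how Clearvision is implemented: the entity names and the `DEPENDENTS` mapping are hypothetical, and a real model would also carry dimensions such as cost, time and quality on each link.

```python
from collections import deque

# Hypothetical business model: each entity maps to the entities that depend on it.
DEPENDENTS = {
    "process:order-intake": ["team:sales", "service:crm"],
    "service:crm": ["data:customer-records"],
    "data:customer-records": ["process:invoicing"],
    "team:sales": [],
    "process:invoicing": [],
}


def impacted_by(changed, dependents):
    """Return every entity reachable from `changed`, in breadth-first order."""
    seen = {changed}
    queue = deque([changed])
    order = []
    while queue:
        current = queue.popleft()
        for neighbor in dependents.get(current, []):
            if neighbor not in seen:
                seen.add(neighbor)
                order.append(neighbor)
                queue.append(neighbor)
    return order


# Which entities need an impact check if the CRM service changes?
print(impacted_by("service:crm", DEPENDENTS))
```

The breadth-first order lists the closest dependencies first, which is usually the order in which you would want to review the impact.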

Jos: What are the benefits of business modeling?

Steve: Connecting the disparate entities of a business opens up limitless opportunities to analyze “what is affected if I change this?”. This can be applied to simple static “as-is” gap analyses, to the more advanced studies needed to future forecast and move into predictive planning rather than reactive.

The benefits of using a digital model of the business ecosystem are applicable to the whole organization. The “C-suite” team get to see heat-maps for not only technology-project deliveries but can use workforce-culture maps to assess the company’s understanding and adoption of new ways of working and achievement of strategic goals. While at an operational level, teams can collaborate more effectively knowing which parts of the ecosystem help or hinder their deliveries and vice-versa.

Jos: Is business modeling applicable for any type or size of the company?

Steve: The complexity of business has driven us to silo our way of working, to simplify tasks to achieve our own goals, and it is larger organizations that can benefit from modeling their business ecosystems. On that basis, it is unlikely that a standalone small business would engage in its own digital ecosystem model. However, when a small business is a supplier to a larger organization, it can be beneficial for the larger organization to model its smaller suppliers to ensure a holistic view of its ecosystem.

The core digital business ecosystem model delivers integrated views of dependencies, clashes, hot-spots to support transformation

Jos: How is business modeling related to digital transformation?

Steve: Digital transformation is an often-heard topic in large corporations; by implication, we should take advantage of the digital data we generate and collect in our businesses and connect it, so that we benefit from the whole rather than working in silos. Therefore, using a digital model of a business ecosystem will help identify the areas of connectivity and collaboration that can deliver the most benefit, but through Minimum Viable Transformation, not a multi-year program with a big-bang output (which sometimes misses its goals…).

Today’s digital technology brings new capabilities to businesses and is driving competence changes in organizations and their partner companies. So another use of business modeling is to map the competence of internal/external resources to the capabilities needed for digital transformation. Mapping competence rather than roles brings a better fit of resources to support transformation. Understanding which competencies we have and where the gaps are is a prerequisite for planning and delivering transformation.
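The competence mapping described here comes down to comparing what a capability needs with what the available resources offer. The sketch below is a simplified illustration with invented capability names, people and competencies; it ignores proficiency levels and availability, which a real model would need.

```python
def competence_gaps(required, available):
    """Map each needed capability to the competencies that no resource covers."""
    covered = set().union(*available.values())
    gaps = {}
    for capability, needs in required.items():
        missing = sorted(set(needs) - covered)
        if missing:
            gaps[capability] = missing
    return gaps


# Hypothetical capabilities needed for the transformation.
required = {
    "predictive maintenance": ["data science", "IoT integration"],
    "model-based definition": ["3D annotation", "CAD"],
}

# Hypothetical internal and external resources, mapped by competence, not by role.
available = {
    "Anna": ["CAD", "data science"],
    "Supplier X": ["IoT integration"],
}

print(competence_gaps(required, available))
```

In this example only the 3D annotation competence is missing, so the gap sits in one capability rather than in a whole role.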

Jos: Then perhaps close with your Clearvision mission and where you fit (uniquely)?

Steve: Having worked on early digital business ecosystem models in the late 90’s, we’ve cut our teeth on slow processing time, difficult-to-change data relationships and poor access to data, combined with a very silo’d work mentality. Clearvision is now positioned to help organizations realize that the value of the whole of their business is greater than the sum of their parts (silos) by enabling a holistic view of their business ecosystem that can be used to deliver measured transformation on a continual basis.

Jos: Thanks, Steve, for your contribution, which completes the series of posts related to a model-based enterprise with its various facets. I am aware this post presents the opinion of one company describing the importance of a model-based business in general. There are no commercial relations between the two of us, and I recommend you explore this topic further if it is relevant for your situation.

Conclusion

Companies and their products are becoming more and more complex, most of it happening now, with a lot more to come in the near future. In order to understand and manage this complexity, models are needed to virtually define and analyze the real world without the high costs of making prototypes or changes in the real world. This applies to organizations, to systems, to engineering and manufacturing coordination, and finally to in-field operating systems. They can all be described by – connected – models. This is the future of a model-based enterprise.

Coming up next time: CIMdata PDM Roadmap Europe and PDT Europe. You can still register and meet a large group of people who care about the detailed aspects of a digital enterprise.

In my earlier posts, I explored the incompatibility between current PLM practices and future needs for digital PLM. Digital PLM is one of the terms I am using for future concepts. Actually, in a digital enterprise, system borders become vague; it is more about connected platforms and digital services. Current PLM practices can be considered Coordinated, whereas the future for PLM is aimed at Connected information. See also Coordinated or Connected.

Moving from current PLM practices toward modern ways of working is a transformation for several reasons.

- First, the scope of current PLM implementation is most of the time focusing on engineering. Digital PLM aims to offer product information services along the product lifecycle.

- Second, because the information in current PLM implementations is mainly stored in documents – drawings still being the leading ones. In advanced PLM implementations, the BOM structures (the EBOM and MBOM) are information structures, again relying on related specification documents, either CAD or Office files.

So let’s review the transformation challenges related to moving from current PLM to Digital PLM.

Current PLM – document management

The first PLM implementations were most of the time advanced cPDM implementations, targeting sharing CAD models and drawings. Deployments started with the engineering department with the aim to centralize product design information. Integrations with mechanical CAD systems had the major priority including engineering change processes. The multidisciplinary collaboration was enabled by introducing the concept of the Engineering Bill of Materials (EBOM). Every discipline, mechanical, electrical and sometimes (embedded) software teams, linked their information to the EBOM. The product release process was driven by the EBOM. If the EBOM is released, the product is fully specified and can be manufactured.

Although people complain that implementing PLM is complex, this type of implementation is relatively simple. The only added mental effort you are demanding from the PLM user is to work in a structured way and to accept a more controlled (rigid) way of working compared to a directory structure approach. For many people, this controlled way of working is already considered a limitation of their freedom. However, companies are not profitable because their employees are all artists working in full freedom. They become successful if they can efficiently deliver products with consistent quality. In a competitive, global market there is no room anymore for inefficient ways of working, as labor costs add to the price.

The way people work in this cPDM environment is coordinated, meaning that, based on business processes, the various stakeholders agree to offer complete sets of information (read: documents) to contribute to the full product definition. Whether all contributions are consistent depends on the time and effort people spend to verify and validate them. Often this is not done thoroughly, and errors are only discovered during manufacturing or later in the field. Costly, but accepted, as it has always been the case.

Next Step PLM – coordinated document management / item-centric

When the awareness exists that data needs to flow through an organization in a consistent manner, the next step of PLM implementations comes into the picture. Here I would state we are really talking about PLM as the target is to share product data outside the engineering department.

The first logical extension for PLM is moving information from an EBOM view (engineering) toward a Manufacturing Bill of Materials (MBOM) view. The MBOM is aiming to represent the manufacturing definition of the product and becomes a placeholder to link with the ERP system and suppliers directly. Having an integrated EBOM / MBOM process with your ERP system is already a big step forward as it creates an efficient way of working to connect engineering and manufacturing.

As all the information is now related to the EBOM and MBOM, this approach is often called the item-centric approach. The Item (or Part) is the information carrier linked to its specification documents.

Managing the right version of the information in relation to a specific version of the product is called configuration management. The better your configuration management processes are in place, the more efficiently and confidently you can deliver and support your products. Configuration management is again a typical example of a coordinated approach to managing products and documents.
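To illustrate the item-centric idea, here is a minimal sketch of an Item as information carrier, linked to its specification documents and its child items. The class names, part numbers and revisions are made up for this example and do not reflect any particular PLM system; real systems add effectivity, status and change processes on top.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Document:
    number: str
    revision: str


@dataclass
class Item:
    number: str
    revision: str
    documents: list = field(default_factory=list)  # specification documents
    children: list = field(default_factory=list)   # (Item, quantity) pairs


def document_set(item):
    """Collect the document revisions that specify `item` and everything below it."""
    docs = [(doc.number, doc.revision) for doc in item.documents]
    for child, _quantity in item.children:
        docs.extend(document_set(child))
    return docs


# Hypothetical EBOM: an assembly with one bracket used twice.
bracket = Item("P-200", "B", documents=[Document("DRW-200", "B")])
assembly = Item(
    "A-100",
    "C",
    documents=[Document("SPEC-100", "A"), Document("DRW-100", "C")],
    children=[(bracket, 2)],
)

print(document_set(assembly))
```

The point of the sketch is that a specific revision of the assembly resolves to one specific set of document revisions, which is the coordinated way of answering "what exactly defines this product version?".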

Implementing this type of PLM is already more complex, as it needs different disciplines to agree on a collective process across various (enterprise) systems. ERP integrations are technically not complicated; it is the agreement on a leading process that makes it difficult, as the holistic view is often missing.

Next, next step PLM – the Digital Thread

Continuing reading might give you the impression that the next step in PLM evolution is the digital thread. And this can be the case, depending on your definition of the digital thread. Oleg Shilovitsky recently published an article, Digital Thread – A new catchy phrase to replace PLM?, based on his observations from ConX18, which illustrates that there are many viewpoints on this concept. And of course, some vendors promote their perfect fit based on their unique definition. In general, I would classify the idea of the Digital Thread into two approaches:

The Digital Thread – coordinated

In the Digital Thread – coordinated approach we are not revolutionizing the way of working in an enterprise. In the coordinated approach, the PLM environment is connected with another overlay, combining data from various disciplines into an environment where the dependencies are traceable. This can be the Aras overlay approach (here explained by Oleg Shilovitsky), the PTC Navigate approach or others, using a new extra layer to connect the various discipline data and create traceability in a more or less non-intrusive way. Similar concepts, but less intrusive can be done through Business Intelligence applications, although they are more read-only than a system approach.

The Digital Thread – connected

In the Digital Thread – connected approach the idea is that information is stored in an extremely granular way and shared among disciplines. Instead of the coordinated way, where every discipline can have its own data sources, here the target is to be data-driven (neutral/standard formats). I described this approach in the various aspects of the model-based enterprise. The challenge of a connected enterprise is the standardized data definition to make it available for all stakeholders.

Working in a connected enterprise is extremely difficult, in particular for people educated in the old-fashioned ways of working. If you have learned to work with shared documents, like Google Docs or Office documents in sharing mode, you will understand the mental change you have to go through. Continuous sharing of the information instead of waiting until you feel your part is complete.

In the software domain, companies are used to working this way and integrating data in a continuous stream. We have to learn to apply these practices also to a complete product lifecycle, where the product consists of hardware and software.

Still, the connected way of working is the vision that digital enterprises should aim for, as it dramatically reduces information conversion, overhead, and ambiguity. How we will implement it in the context of PLM / Product Innovation is a learning process, where we should not be blocked by our echo chamber, as Jan Bosch states in his latest post: Don’t Get Stuck In Your Company’s Echo Chamber.

Jan Bosch is coming from the software world, promoting the Software-Centric Systems conference SC2 as a conference to open up your mind. I recommend you to take part in upcoming PLM-related events: CIMdata’s PLM roadmap Europe combined with PDT Europe on 24/25th October in Stuttgart, or if you are living in the US there is the upcoming PI PLMx CHICAGO 2018 on Nov 5/6th.

Conclusion

Learning and understanding are crucial and take time. A digital transformation has many aspects to learn – keep in mind the difference between coordinated (relatively easy) and connected (extraordinarily challenging but promising). Unfortunately there is no populist way to become digital.

Note:

If you want to continue learning, please read this post – The True Impact of Industry 4.0 Revealed – and its internal links to reference information from Martijn Dullaart – so relevant.

What I want to discuss this time is the challenging transformation related to product data that needs to take place.

The top image of this post illustrates the current PLM world on the left, and on the right, the potential future positioning of PLM in a digital enterprise. How the right side will behave is still vague – it can be a collection of platforms or a vast collection of small services, all contributing to the performance of the company. Some vendors might dream all these capabilities are defined in one system of systems, like the human body; all functions are available and connected.

Coordinated or connected?

This is THE big question for a future digital enterprise. In the current PLM approach, there are governance structures that allow people to share data along the product lifecycle in a structured way.

These governance structures can be project breakdown structures, where the full delivery is guided by a phase-gate approach. Deliverables related to tasks and gates will make sure information is stored and available for every stakeholder. For example, a well-known process in the automotive industry, Advanced Product Quality Planning (APQP), is a standardized approach to make sure parts or products are introduced with the right quality for the customer.

Deliverables at any stage in the process can be reviewed or consumed by another stakeholder. The result is most of the time a collection of approved documents (Office-type, Design & Test files) stored centrally. This is what I would call a coordinated data approach.

In complex environments, besides the project governance, there will be product structures and Bills of Materials, where each object in such a structure is the placeholder for related information. In the case of a product structure, this can be the specifications per component; in the case of a Bill of Materials, it can be the design specification (usually in CAD models) and, for an MBOM, the manufacturing specifications.

Although these structures contain information about the product composition themselves, the related information makes the content understandable/realizable.

Again it is a coordinated approach, and most PLM systems and implementations are focused on providing these structures.

Sometimes this works with the vendor’s own system only, and you need to follow the vendor portfolio to get the full benefit; sometimes the system is positioned as an overlay to existing systems in the company and is therefore less invasive.

Providing a single version of the truth is often associated with this approach. The question is: Is the green bin on the left the single version of the truth?

The Coordinated – Single Version of the Truth – problem

The challenge of a coordinated approach is that there is no thorough consistency checking of whether the delivered data represents the real truth. Through serious review procedures, we do our best to make sure every deliverable has the required content and quality. As information inside these deliverables is not connected to the outside world, there will be discrepancies between reality and what has been stored. Still, we feel comfortable enough as an organization to pretend we know where the risks are. Until the costly impossible happens!

The connected enterprise

The ultimate dream of a digital enterprise is that everything relevant is connected in context. This means no more documents or files but a very granular information model for linking data and keeping it in context. We can apply algorithms and automation to connected data and use Artificial Intelligence to make sense of massive amounts of data.

Connected data allows us to share combined sets of information that are relevant to a particular role. Real-time dashboarding is one of the benefits of such an infrastructure. There are still a lot of challenges with this approach. How do we know which information is valid in the context of other information? What are the rules that describe a valid product or project baseline at a particular time?

Although all data is stored as unique information objects in a network of information, we cannot apply the old mechanisms for a coordinated approach all the time. Generated reports from a connected environment can still serve as baselines or records related to a specific state. For example, when the design is approved for manufacturing, we can generate approved Product Baseline structures or Bill of Materials structures.
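One way to picture a baseline generated from connected data is as a query: for every information object, pick the latest version that was approved on or before a given date. The sketch below assumes a deliberately simple rule and invented object identifiers and dates; the real question raised above, which rules describe a valid baseline, is much harder than this.

```python
from datetime import date

# Hypothetical connected data: (object id, version, approval date).
VERSIONS = [
    ("requirement:R1", 1, date(2018, 3, 1)),
    ("requirement:R1", 2, date(2018, 9, 15)),
    ("part:P100", 1, date(2018, 4, 10)),
    ("part:P100", 2, date(2018, 6, 20)),
    ("simulation:S7", 1, date(2018, 7, 5)),
]


def baseline(versions, as_of):
    """Pick, per object, the latest version approved on or before `as_of`."""
    selected = {}
    for object_id, version, approved_on in versions:
        if approved_on <= as_of and version > selected.get(object_id, 0):
            selected[object_id] = version
    return selected


# The state of the product definition as it was on 1 July 2018.
print(baseline(VERSIONS, date(2018, 7, 1)))
```

The baseline is not stored as a separate document set here; it is derived from the network of versions whenever it is needed.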

However, this linearity in the lifecycle for passing information through an enterprise will not exist anymore. There might be various design alternatives, and the delivery process is already part of the design phase. Through integrated virtual simulation and testing, we reach a state where the product satisfies the market for that moment, and the delivery process is known at the same time.

Almost immediately and based on first experiences in the field, new features can be added virtually, tested and validated for the next stage. We need to design new PLM infrastructures that can support this granularity and, therefore, complexity.

The connected – Single Version of the Truth – problem

The concepts I described related to the connected enterprise made me realize that this is analogous to how the brain works. Our brain is a giant network of connected information, dynamically maintaining associations, having different abstraction levels and always pretending there is one truth.

If you want to understand a potential model of the brain, please read On Intelligence by Jeff Hawkins. With the possible arrival of the quantum computer, we might be able to create well-performing brain models.

In my earlier post, Are we blocking our future, I referred to the book The Idiot Brain: What Your Head is Really Up To by Dean Burnett, in which he states that, due to the complexity of stored information, our brain continuously adapts “non-compliant” information to make sure the owner of the brain feels comfortable.

What we think is the truth might be just a creation of the brain, combining the positive parts into a compelling story and suppressing or deleting information that does not fit. Although it sounds absurd, I believe that if we are able to create a connected digital enterprise, we will face the same symptoms. Due to the complexity of connected information, we will be looking for the best suitable version, and as everything has become so complex, ordinary human beings will no longer be able to tell the difference.

Conclusion:

As part of the preparation for the upcoming PDT Europe 2018, I was investigating the topics coordinated and connected enterprises to discover potential transformation steps. We all need to explore the future with an open mind, and the challenge is: WHERE and HOW FAST can we transform from coordinated to connected? I am curious if you have experiences or thoughts on this topic.

This is my concluding post related to the various aspects of the model-driven enterprise. We went through Model-Based Systems Engineering (MBSE), where the focus was on using models (functional / logical / physical / simulations) to define complex products (systems). Next we discussed Model-Based Definition / Model-Based Enterprise (MBD/MBE), where the focus was on data continuity between engineering and manufacturing by using the 3D Model as a master for design, manufacturing and eventually service information.

And last time we looked at the Digital Twin from its operational side, where the Digital Twin was applied for collecting and tuning physical assets in operation, which is not a typical PLM domain in my opinion.

Now we will focus on two areas where the Digital Twin touches aspects of PLM – the most challenging and the most over-hyped areas, I believe. These two areas are:

- The Digital Twin used to virtually define and optimize a new product/system or even a system of systems. For example, defining a new production line.

- The Digital Twin used to be the virtual replica of an asset in operation. For example, a turbine or engine.

Digital Twin to define a new Product/System

There might be some conceptual overlap if you compare the MBSE approach and the Digital Twin concept to define a new product or system to deliver. For me the differentiation would be that MBSE is used to master and define a complex system from the R&D point of view – unknown solution concepts – use hardware or software? Unknown constraints to be refined and optimized in an iterative manner.

In the Digital Twin concept, it is more about defining a system that should work in the field: how to combine various systems into a working solution, where each of the systems already has a pre-defined set of behavioral / operational parameters, which could be 3D related but also performance related.

You would define and analyze the new solution virtually to discover the ideal solution for performance, costs, feasibility and maintenance. Working in the context of a virtual model might take more time than traditional ways of working; however, once the models are in place, analyzing and optimizing the solution takes hours instead of weeks, assuming the virtual model is based on a digital thread, not a sequential process of creating and passing documents/files. Virtual solutions allow a company to optimize the solution upfront instead of making costly fixes during delivery, commissioning and maintenance.

Why aren’t we doing this already? It takes more skilled engineers instead of cheaper fixers downstream. The fact that we are used to fixing it later is also an inhibitor for change. Management needs to trust and understand the economic value instead of trying to reduce the number of engineers as they are expensive and hard to plan.

In the construction industry, companies are discovering the power of BIM (Building Information Model) , introduced to enhance the efficiency and productivity of all stakeholders involved. Massive benefits can be achieved if the construction of the building and its future behavior and maintenance can be optimized virtually compared to fixing it in an expensive way in reality when issues pop up.

The same concept applies to process plants or manufacturing plants, where you could virtually run the (manufacturing) process. If the design is done with all the behavior defined (hardware-in-the-loop and software-in-the-loop simulation), the solution can be virtually tested and rapidly delivered, with no late discoveries and costly fixes.

Of course, it requires new ways of working. Working with digitally connected models is not what engineers learn during their education – we have just started this journey. Therefore, organizations should explore on a smaller scale how to create a full Digital Twin based on connected data – this is the ultimate base for the next purpose.

Digital Twin to match a product/system in the field

When you follow the topic of a Digital Twin through the materials provided by the various software vendors, you see all kinds of previews of what is possible: Augmented Reality, Virtual Reality and more. All these presentations show that when you click somewhere in a 3D Model Space, relevant information pops up. Where does this relevant information come from?

Most of the time information is re-entered in a new environment, sometimes derived from CAD but all the metadata comes from people collecting and validating data. Not the type of work we promote for a modern digital enterprise. These inefficiencies are good for learning and demos but in a final stage a company cannot afford silos where data is collected and entered again disconnected from the source.

The main problem: Legacy PLM information is stored in documents (drawings / Excel files) and not intended to be shared downstream with full quality.

Read also: Why PLM is the forgotten domain in digital transformation.

If a company has already implemented an end-to-end Digital Twin to deliver the solution as described in the previous section, we can understand that the data has been entered somewhere during the design and delivery process and that, thanks to digital continuity, it is still available.

How many companies have done this already? For sure not the companies that have been in business for a long time, as their current silos and legacy processes do not cater for digital continuity. By appointing a Chief Digital Officer, the journey might start; the biggest risk is that the Chief Digital Officer ends up running another silo in the organization.

So where does PLM support the concept of the Digital Twin operating in the field?

For me, the IoT part of the Digital Twin is not the core of PLM. Defining the right sensors, controls and software is the first area where IoT is used to define the measurable/controllable behavior of a Digital Twin. This topic has been discussed in the previous section.

The second part where PLM gets involved is twofold:

- Processing data from an individual twin

- Processing data from a collection of similar twins

Processing data from an individual twin

Data collected from an individual twin or a collection of twins can be analyzed to extract or discover potential failures. An R&D organization is interested in learning what is happening in the field with their products. These analyses lead to better and more competitive solutions.

Predictive maintenance is not necessarily a part of that. When you know that certain parts will fail between 10,000 and 20,000 operating hours, you want to optimize the moment of providing service to reduce downtime of the process, and you do not want to replace parts way too early.

The R&D part related to predictive maintenance could be that R&D develops sensors inside this serviceable part that signal the need for maintenance within a much smaller time frame – maintenance needed within 100 hours instead of a bandwidth of 10,000 hours. Or R&D could develop new parts that need less service and guarantee a longer up-time.
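
To make the difference between the two bandwidths concrete, here is a minimal Python sketch. The 10,000 to 20,000 hours range and the 100-hour warning come from the text above; the class and function names, and the example part that really fails at 17,250 hours, are illustrative assumptions only.

```python
from dataclasses import dataclass


@dataclass
class ServiceWindow:
    earliest_hours: float  # operating hours from which service may be needed
    latest_hours: float    # operating hours by which service must have happened

    @property
    def width(self) -> float:
        return self.latest_hours - self.earliest_hours


def statistical_window() -> ServiceWindow:
    # Figures from the text: the part fails somewhere between 10,000 and 20,000 hours.
    return ServiceWindow(10_000, 20_000)


def sensor_window(hours_at_signal: float, warning_hours: float = 100) -> ServiceWindow:
    # Hypothetical embedded sensor: once it signals, service is needed within warning_hours.
    return ServiceWindow(hours_at_signal, hours_at_signal + warning_hours)


def unused_life(window: ServiceWindow, actual_failure_hours: float) -> float:
    """Operating hours given up when the part is replaced at the start of the window."""
    return actual_failure_hours - window.earliest_hours


# Assumed example: a part that would really fail at 17,250 operating hours.
print(unused_life(statistical_window(), 17_250))   # 7250 hours of useful life thrown away
print(unused_life(sensor_window(17_150), 17_250))  # 100 hours thrown away
```

Replacing the part "to be safe" at the start of the statistical window wastes thousands of operating hours in this example, while the sensor-based window keeps the waste within the warning period.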

For an R&D department, the information from an individual Digital Twin might only be relevant if the Physical Twin is complex to repair and the downtime for each individual unit is too costly. Imagine a jet engine, a turbine in a power plant or similar. Here a Digital Twin will allow service and R&D to prepare maintenance and to simulate and optimize the actions before executing them in the physical world.

The five potential platforms of a digital enterprise

The second area R&D will be interested in is the behavior of similar products/systems in the field combined with their environmental conditions. In this way, R&D can discover improvement points for the whole range and deliver incremental innovation. The challenge for this R&D organization is to find a logical placeholder in their PLM environment to collect commonalities related to the individual modules or components. This is not an ERP or MES domain.
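
As a rough illustration of collecting commonalities per logical component, the Python sketch below groups field records from several twins by component and environment. The record layout, the twin identifiers and all numbers are invented for the example; a real PLM environment would provide its own data model.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical field records: (twin id, logical component, environment, hours to failure)
records = [
    ("twin-001", "pump", "desert", 11_000),
    ("twin-002", "pump", "desert", 12_000),
    ("twin-003", "pump", "coastal", 18_000),
    ("twin-004", "pump", "coastal", 19_000),
    ("twin-005", "valve", "desert", 15_000),
]


def summarize(field_records):
    """Group hours-to-failure by (logical component, environment) across all twins."""
    grouped = defaultdict(list)
    for _twin_id, component, environment, hours in field_records:
        grouped[(component, environment)].append(hours)
    return {
        key: {"twins": len(hours), "mean_hours_to_failure": mean(hours)}
        for key, hours in grouped.items()
    }


summary = summarize(records)
print(summary[("pump", "desert")])   # {'twins': 2, 'mean_hours_to_failure': 11500}
print(summary[("pump", "coastal")])  # {'twins': 2, 'mean_hours_to_failure': 18500}
```

The key of each group, the logical component rather than a serial number or BOM item, plays the role of the "logical placeholder" mentioned above: it is where the findings from many individual twins come together.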

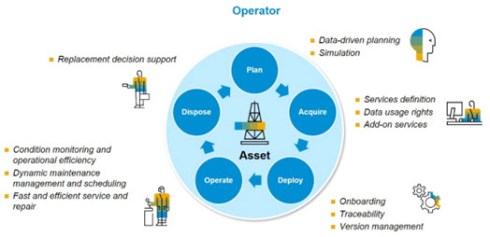

Concepts of a logical product structure are already known in the oil & gas, process or nuclear industry. In 2017 I wrote about PLM for Owners/Operators, mentioning that Bjorn Fidjeland has always been active in this domain; you can find his concepts at plmPartner or as an eLearning course at SharePLM.

To conclude:

- This post is way too long (sorry)

- PLM is not dead – it evolves into one of the crucial platforms for the future – The Product Innovation Platform

- The current BOM-centric approach within PLM is blocking progress to a full digital thread

More to come after the holidays (a European habit) with additional topics related to the digital enterprise

This is almost my last planned post related to the concepts of model-based. After having discussed Model-Based Systems Engineering (needed to develop complex products/systems including hardware and software) and Model-Based Definition (creating an efficient connection between Engineering and Manufacturing), my last post will be related to the most over-hyped topic: The Digital Twin

There are several reasons why the Digital Twin is overhyped. One of the reasons is that the Digital Twin is not necessarily considered as a PLM-related topic. Other vendors like SAP (the network of digital twins), Oracle (Digital Twins for IoT applications) and GE with their Predix platform also contributed to the hype related to the digital twin. The other reason is that the concept of Digital Twin is an excellent idea for marketers to shine above the clouds. Monica Schnitger’s recent comment says it all in her post 5 quick takeaways from Siemens Automation summit. Monica’s takeaway related to Digital Twin:

The whole digital twin concept is just starting to gain traction with automation users. In many cases, they don’t have a digital representation of the equipment on their lines; they may have some data from the equipment OEM or their automation contractors but it’s inconsistent and probably incomplete. The consensus seemed to be that this is a great idea but out of many attendees’ immediate reach. [But it is important to start down this path: model something critical, gather all the data you can, prove benefit then move on to a bigger project.]

Monica is aiming at the same point I have mentioned several times: there is no digital representation, and the existing data is inconsistent. Don’t wait: The importance of accurate data – act now !

What is a digital twin?

I think there are various definitions of the digital twin, and I do not want to go into a definition debate like we had before with the acronyms MBD/MBE (Model Based Definition/Enterprise – the confusion) or even the acronym PLM (classical PLM or digital PLM ?). Let’s agree on the following high-level statements:

- A digital twin is a virtual representation of a physical product

- The virtual part of the digital twin is defined by what you want to analyze, simulate, and predict related to the physical product

- One physical product can have multiple digital twins, but in the ideal world, there is potentially a unique digital twin for every physical product in the world

- When a product interacts with the environment, based on inputs and outputs, we normally call it a system. When I use Product, it will most of the time be a System, in particular in the context of a digital twin

Given the above statements, I will give some examples of digital twin concepts:

As a cyclist, I am active on platforms like Garmin and Strava, using a tracking device, heart monitor and a power meter. During every ride, my device plus the sensors measure my performance, and all the data is uploaded to the platform, providing me with a report of where I rode, how fast, and my heartbeat, cadence and power during the ride. On Strava, I can see the Flybys (other digital twins that crossed my path and their performances), I can see per segment how I performed compared to others, and I can filter by age, by level, etc.

This is the easiest part of a digital twin. Every individual can monitor and analyze their personal behavior and discover trends. Additionally, the platform owner has all the intelligence about all cyclists worldwide, how they perform and what would be the best performance per location. And based on their Premium offering (where you pay), they can advise you on how to improve. The Strava business model brings value to the individual while learning from the behavior of thousands. Note that in this scenario, there is no 3D involved.

Another known digital twin story is related to plants in operation. In the past 10 years, I have advocated for Plant Lifecycle Management (PLM for Owner/Operators), describing the value of a virtual plant model using PLM capabilities combined with Maintenance, Repair and Overhaul (MRO) to reduce downtime. In a nuclear environment, the usage of 3D verification, simulation and even control software in a virtual environment can bring great benefit because the physical twin is not always accessible, and downtime can cost up to several million per week.

The above examples provide two types of digital twins. I will discuss some characteristics in the following paragraphs.

Digital Twin – performance focus

Companies like GE and SAP focus a lot on the digital twin in relation to asset performance. By measuring the performance of assets and comparing it with that of other similar assets, the collector of the data can sell predictive maintenance analysis, performance optimization guidance and potentially other value offerings to their customers.