For those of you who have followed my blog over the years: every time after the PLM Roadmap / PDT Europe conference, there are one or two blog posts, the first of which starts with “The weekend after ….”

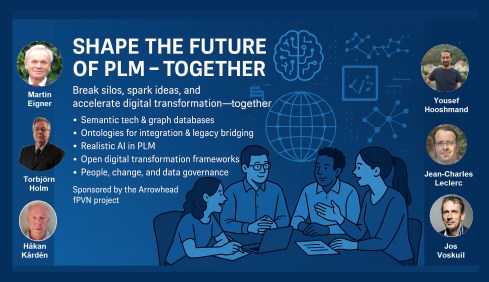

This time, November has been a hectic month for me, starting with the engaging workshop “Shape the future of PLM – together” – you can read about it in my blog post or the latest post from Arrowhead fPVN, the sponsor of the workshop.

Last week, I celebrated with the core team from the PLM Green Global Alliance our 5th anniversary, during which we discussed sustainability in action. The term sustainability is currently under the radar, but if you want to learn what is happening, read this post with a link to the webinar recording.

Last week, I was also active at the PTC/User Benelux conference, where I had many interesting discussions about PTC’s strategy and portfolio. A big and well-organized event in the town where I grew up in the world of teaching and data management.

And now it is time for the PLM Roadmap / PDT Europe conference review.

The conference

The conference is my favorite technical conference 😉 for learning what is happening in the field. Over the years, we have seen reports from the Aerospace & Defense PLM Action Groups, which systematically work on various themes related to a digital enterprise. The usage of standards, MBSE, Supplier Collaboration, Digital Thread & Digital Twin are all topics discussed.

This time, the conference was sold out with 150+ attendees, just fitting in the conference space, and the two-day program started with a challenging day 1 of advanced topics, and on day 2 we saw more company experiences.

Combined with the traditional dinner in the middle, it was again a great networking event to charge the brain. We still need the brain besides AI. This post covers some of the highlights of day 1.

PLM’s Integral Role in Digital Transformation

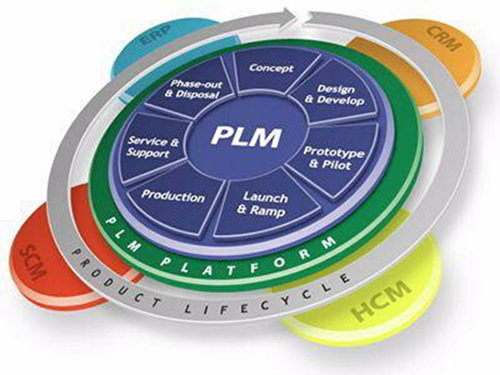

As usual, Peter Bilello, CIMdata’s President & CEO, kicked off the conference, and his message has not changed over the years. PLM should be understood as a strategic, enterprise-wide approach that manages intellectual assets and connects the entire product lifecycle.

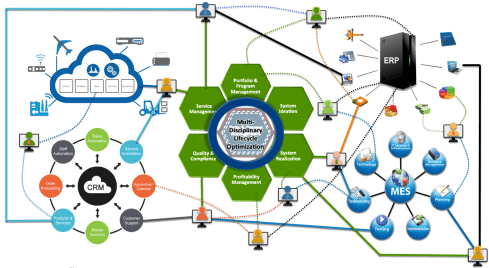

I like the image below explaining the WHY behind product lifecycle management.

It enables end-to-end digitalization, supports digital threads and twins, and provides the backbone for data governance, analytics, AI, and skills transformation.

Peter walked us briefly through CIMdata’s Critical Dozen (a YouTube recording is available here), all of which are relevant to the scope of digital transformation. Without strong PLM foundations and governance, digital transformation efforts will fail.

The Digital Thread as the Foundation of the Omniverse

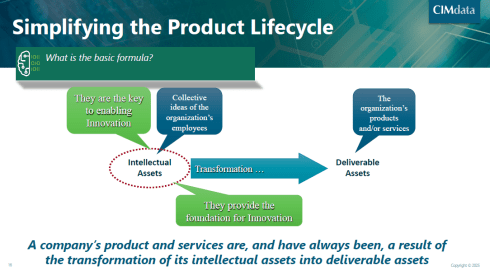

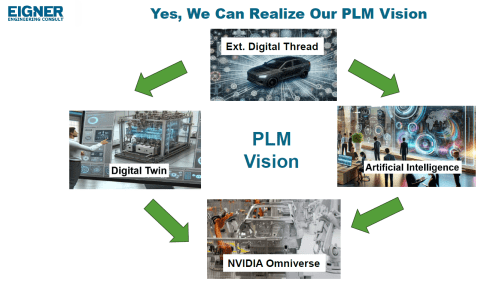

Prof. Dr.-Ing. Martin Eigner, well known for his lifetime passion and vision in product lifecycle management (PDM and PLM tools & methodology), shared insights from his 40-year journey, highlighting the growing complexity and ever-increasing fragmentation of customer solution landscapes.

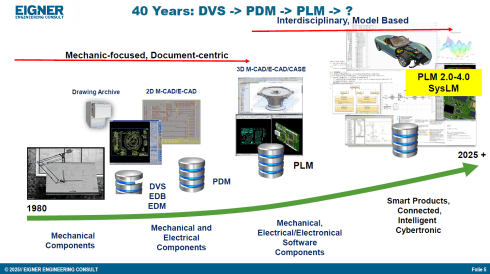

In his current ecosystem, ERP (read SAP) is playing a significant role as an execution platform, complemented by PDM or ECTR capabilities. Few of his customers go for the broad PLM systems, and therefore, he stresses the importance of the so-called Extended Digital Thread (EDT).

Prof. Eigner describes the EDT more precisely as an overlaying infrastructure implemented by a graph database that serves as a performant knowledge graph of the enterprise.

The EDT serves as the foundation for AI-driven applications, supporting impact analysis, change management, and natural-language interaction with product data. The presentation also provides a detailed view of Digital Twin concepts, ranging from component to system and process twins, and demonstrates how twins enhance predictive maintenance, sustainability, and process optimization.
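To make the idea of a graph-based digital thread a bit more tangible, here is a minimal sketch of an impact analysis on a tiny knowledge graph. It is not Prof. Eigner's implementation: the artifact names, the link types and the `impacted_by` function are all made up for illustration, and a plain Python dictionary stands in for a real graph database.

```python
from collections import deque

# Hypothetical traceability links: (source artifact, link type, target artifact).
# All names are invented for illustration only.
LINKS = [
    ("REQ-001", "satisfied_by", "PART-100"),
    ("PART-100", "used_in", "ASSY-200"),
    ("PART-100", "made_by", "PROCESS-300"),
    ("ASSY-200", "used_in", "PRODUCT-900"),
    ("REQ-002", "satisfied_by", "PART-101"),
]


def build_graph(links):
    """Index the links by source artifact for fast downstream lookups."""
    graph = {}
    for source, link_type, target in links:
        graph.setdefault(source, []).append((link_type, target))
    return graph


def impacted_by(graph, changed):
    """Return every artifact reachable downstream of `changed` (breadth-first)."""
    impacted = []
    visited = {changed}
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for _link_type, target in graph.get(current, []):
            if target not in visited:
                visited.add(target)
                impacted.append(target)
                queue.append(target)
    return impacted


graph = build_graph(LINKS)
print(impacted_by(graph, "REQ-001"))
```

Changing the hypothetical requirement `REQ-001` touches the part, its assembly, its manufacturing process and the end product, while `REQ-002` and its part stay out of scope. A real EDT would of course add link semantics, versions and access control on top of this.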

The presentation positioned NVIDIA Omniverse as the next step toward immersive, real-time collaboration and simulation, enabling virtual factories and physics-accurate visualization. The outlook emphasizes that combining EDT, Digital Twin, AI, and Omniverse moves the industry closer to the original PLM vision: a unified, consistent Single Source of Truth 😮 that boosts innovation, efficiency, and ROI.

For me, hearing and reading the term Single Source of Truth still creates discomfort with reality and humanity, so we still have something to discuss.

Semantic Digital Thread for Enhanced Systems Engineering in a Federated PLM Landscape

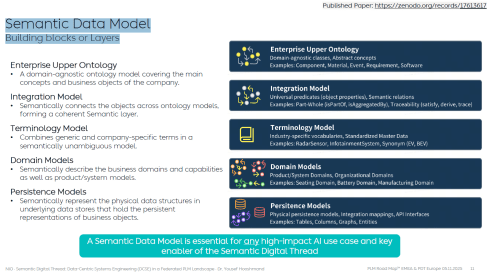

Dr. Yousef Hooshmand’s presentation was a great continuation of the Extended Digital Thread theme discussed by Dr. Martin Eigner. Where the core of Martin’s EDT is based on traceability between artifacts and processes throughout the lifecycle, Yousef introduced a (for me) totally new concept: starting with managing and structuring the data to manage the knowledge, rather than starting from the models and tools to understand the knowledge.

It is a fundamentally different approach to addressing the same problem of complexity. During our pre-conference workshop “Shape the future of PLM – together,” I already got a bit familiar with this approach, and Yousef’s recently released paper provides all the details.

All the relevant information can be found in his recent LinkedIn post here.

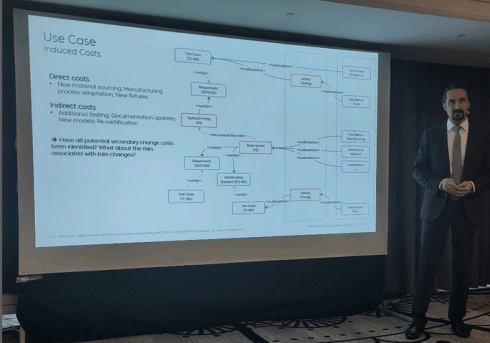

In his presentation during the conference, Yousef illustrated the value and applicability of the Semantic Digital Thread approach by presenting it in an automotive use case: Impact Analysis and Cost Estimation (image above).

To understand the Semantic Digital Thread, it is essential to understand the Semantic Data Model and its building blocks or layers, as illustrated in the image below:

In addition, such an infrastructure is ideal for AI applications and avoids vendor- or tool lock-in, providing a significant long-term advantage.

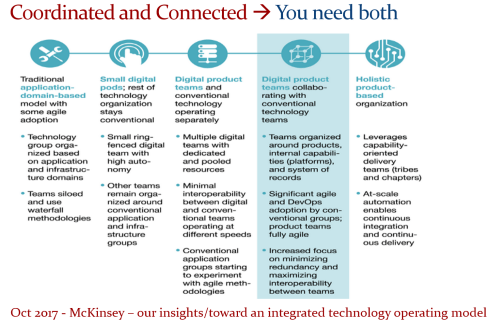

I am sure it will take time to digest the content if you are just entering the domain of a data-driven enterprise (the connected approach) instead of a document-driven enterprise (the coordinated approach).

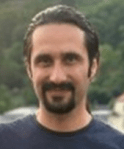

However, as many of the other presentations on day 1 also stated: “data without context is worthless – then they become just bits and bytes.” For advanced and future scenarios, you cannot avoid working with ontologies, semantic models and graph databases.
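A small sketch can illustrate the point. Below, the same number is first stored as a bare value and then as subject-predicate-object triples that carry its context. The identifiers, the predicates and the `describe` helper are hypothetical; a real semantic model would use a proper ontology and a graph or triple store rather than Python tuples.

```python
# A bare value: nobody can tell what 12.5 refers to.
bare_value = 12.5

# The same value with context, as (subject, predicate, object) triples.
# All identifiers and predicates are invented for illustration.
TRIPLES = [
    ("PART-100", "is_a", "Bracket"),
    ("PART-100", "has_mass", "MASS-1"),
    ("MASS-1", "has_value", 12.5),
    ("MASS-1", "has_unit", "kg"),
    ("MASS-1", "valid_for_revision", "B"),
]


def objects(triples, subject, predicate):
    """Return all objects stored for a given subject and predicate."""
    return [o for s, p, o in triples if s == subject and p == predicate]


def describe(triples, part):
    """Turn the triples about a part's mass into a readable statement."""
    kind = objects(triples, part, "is_a")[0]
    mass = objects(triples, part, "has_mass")[0]
    value = objects(triples, mass, "has_value")[0]
    unit = objects(triples, mass, "has_unit")[0]
    revision = objects(triples, mass, "valid_for_revision")[0]
    return f"{part} ({kind}) has a mass of {value} {unit} at revision {revision}"


print(describe(TRIPLES, "PART-100"))
```

The bare `12.5` is just bits and bytes; with the triples around it, both a person and an AI agent can tell what it measures, in which unit, and for which revision it holds.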

Where is your company on the path to becoming more data-driven?

Note: I just saw this post and the image above, which emphasizes the importance of the relationship between ontologies and the application of AI agents.

Evaluation of SysML v2 for use in Collaborative MBSE between OEMs and Suppliers

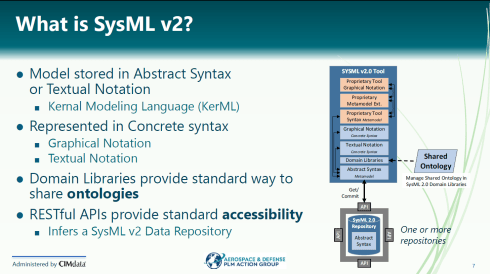

It was interesting to hear Chris Watkins’ speech, which presented the findings from the AD PLM Action Group MBSE Collaboration Working Group on digital collaboration based on SysML v2.

The working group addresses the problem that there are currently no common methods and standards across the industry for exchanging digital model-based requirements and architecture deliverables for the design, procurement, and acceptance of aerospace systems equipment.

The action group explored the value of SysML v2 for data-driven collaboration between OEMs and suppliers, particularly in the early concept phases.

Chris started with a brief explanation of what SysML v2 is – image below:

As the image illustrates, SysML v2-ready tools allow people to work in their proprietary interfaces while sharing results in common, defined structures and ontologies.

When analyzing various collaboration scenarios, one of the main challenges remained managing changes, the required ontologies, and working in a shared IT environment.

👉You can read the full report here: AD PAG reports: Model-Based Systems Engineering.

An interesting point of discussion here is that, in the report, participants note that, despite calling out significant gaps and concerns, a substantial majority of the industry indicated that their MBSE solution provider is a good partner. At the same time, only a small minority expressed a negative view.

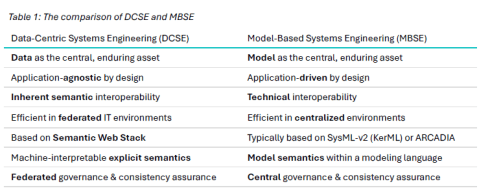

Would Data-Centric Systems Engineering change the discussion? See table 1 below from Yousef’s paper:

An illustration that there was enough food for discussion during the conference.

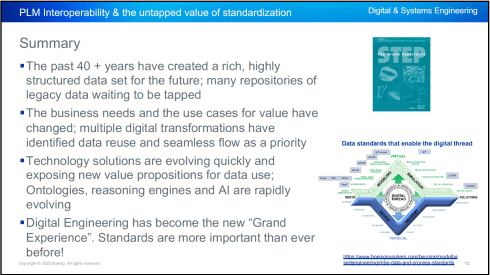

PLM Interoperability and the Untapped Value of 40 Years in Standardization

In the context of collaboration, two sessions fit together perfectly.

First, Kenny Swope from Boeing. Kenny is a longtime Boeing engineering leader and global industrial-data standards expert who oversees enterprise interoperability efforts, chairs ISO/TC 184/SC 4, and mentors youth in technology through 4-H and FIRST programs.

Kenny shared that over the past 40+ years, the understanding and value of this approach have become increasingly apparent, especially as organizations move toward a digital enterprise. In a digital enterprise, these standards are needed for efficient interoperability between various stakeholders. And the next session was an example of this.

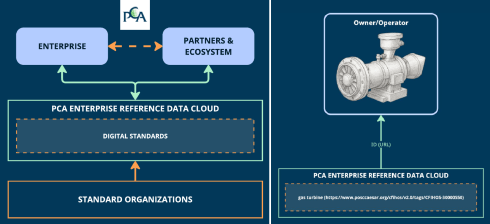

Unlocking Enterprise Knowledge

Fredrik Anthonisen, the CTO of the POSC Caesar Association (PCA), started his story about the potential value of efficient standard use.

According to a Siemens report, “The true costs of downtime,” $1.4 trillion is lost to unplanned downtime.

The root cause is that, most of the time, the information needed to support the MRO activity is inaccessible or incomplete.

Making data available using standards can provide part of the answer, but static documents and slow consensus processes can’t keep up with the pace of change.

Therefore, PCA established the PCA enterprise reference data cloud, where all stakeholders in enterprise collaboration can relate their data to digitally exposed standards, as the left side of the image shows.

Fredrik shared a use case (on the right side of the image) as an example. Also, he mentioned that the process for defining and making the digital reference data available to participants is ongoing. The reference data needs to become the trusted resource for the participants to monetize the benefits.

Summary

Day 1 had many more interesting and advanced concepts related to standards and the potential usage of AI.

Jean-Charles Leclerc, Head of Innovation & Standards at TotalEnergies, in his session, “Bringing Meaning Back To Data,” elaborated on the need to provide data in the context of the domain for which it is intended, rather than “indexed” LLM data.

This is very much aligned with Yousef’s statement that there is a need to apply semantic technologies, and especially ontologies, to turn the data into knowledge.

More details can also be found in the “Shape the future of PLM – together” post, where Jean-Charles was one of the leading voices.

The panel discussion at the end of day 1 was free of people jumping on the hype. Yes, benefits are envisioned across the product lifecycle management domain, but to be valuable, the foundation needs to be more structured than it has been in the past.

“Reliable AI comes from a foundation that supports knowledge in its domain context.”

Conclusion

For the casual user, day 1 was tough – digital transformation in the product lifecycle domain requires skills that might not yet exist in smaller organizations. Understanding the need for ontologies (generic/domain-specific) and semantic models is essential to benefit from what AI can bring – a challenging and enjoyable journey to follow!

This week is busy for me as I am finalizing several essential activities related to my favorite hobby, product lifecycle management or is it PLM😉?

And most of these activities will result in lengthy blog posts, starting with:

“The week(end) after <<fill in the event>>”.

Here are the upcoming actions:

Click on each image if you want to see the details:

In this Future of PLM Podcast series, moderated by Michael Finocchiaro, we will continue the debate on how to position PLM (as a system or a strategy) and move away from an engineering framing. Personally, I never saw PLM as a system and started talking more and more about product lifecycle management (the strategy) versus PLM/PDM (the systems).

Note: the intention is to be interactive with the audience, so feel free to post questions/remarks in the comments, either upfront or during the event.

You might have seen in the past two weeks some posts and discussions I had with the Share PLM team about a unique offering we are preparing: the PLM Awareness program. From our field experience, PLM is too often treated as a technical issue, handled by a (too) small team.

We believe every PLM program should start by fostering awareness of what people can expect nowadays, given the technology, experiences, and possibilities available. If you want to work with motivated people, you have to involve them and give them all the proper understanding to start with.

Join us for the online event to understand the value and ask your questions. We are looking forward to your participation.

This is another event related to the future of PLM; however, this time it is an in-person workshop, where, inspired by four PLM thought leaders, we will discuss and work on a common understanding of what is required for a modern PLM framework. The workshop, sponsored by the Arrowhead fPVN project, will be held in Paris on November 4th, preceding the PLM Roadmap/PDT Europe conference.

We will not discuss the term PLM; we will discuss business drivers, supporting technologies and more. My role as a moderator of this event is to assist with the workshop, and I will share its findings with a broader audience that wasn’t able to attend.

Be ready to learn more in the near future!

Suppose you have followed my blog posts for the past 10 years. In that case, you know this conference is always a place to get inspired, whether by leading companies across industries or by innovative and engaging new developments. This conference has always inspired and helped me gain a better understanding of digital transformation in the PLM domain and how larger enterprises are addressing their challenges.

This time, I will conclude the conference with a lecture focusing on the challenging side of digital transformation and AI: we humans cannot transform ourselves, so we need help.

At the end of this year, we will “celebrate” our fifth anniversary of the PLM Green Global Alliance. When we started the PGGA in 2020, there was an initial focus on the impact of carbon emissions on the climate, and in the years that followed, climate disasters around the world caused serious damage to countries and people.

How could we, as a PLM community, support each other in developing and sharing best practices for innovative, lower-carbon products and processes?

In parallel, driven by regulations, there was also a need to improve current PLM practices to efficiently support ESG reporting, lifecycle analysis, and, soon, the Digital Product Passport. Regulations that push for a modern data-driven infrastructure, and we discussed this with the major PLM vendors and related software or solution partners. See our YouTube channel @PLM_Green_Global_Alliance

In this online Zoom event, we invite you to join us to discuss the topics mentioned in the announcement. Join us in this event and help us celebrate!

I am closing that week at the PTC/User Benelux event in Eindhoven, the Netherlands, with a keynote speech about digital transformation in the PLM domain. Eindhoven is the city where I grew up, completed my amateur soccer career, ran my first and only marathon, and started my career in PLM with SmarTeam. The city and location feel like home. I am looking forward to discussing and meeting with the PTC user community to learn how they experience product lifecycle management, or is it PLM😉?

With all these upcoming events, I did not have the time to focus on a new blog post; however, luckily, in the 10x PLM discussion started by Oleg Shilovitsky, there was an interesting comment from Rob Ferrone that triggered my mind. Quote:

The big breakthrough will come from 1. advances in human-machine interface and 2. less % of work executed by human in the loop. Copy/paste, typing, voice recognition are all significant limits right now. It’s like trying to empty a bucket of water through a drinking straw. When tech becomes more intelligent and proactive then we will see at least 10x.

This remark reminded me of one of my first blog posts in 2008, when I was trying to predict what PLM would look like in 2050. I thought it was a nice moment to read it (again). Enjoy!

PLM in 2050

As the year ends, I decided to take my crystal ball to see what would happen with PLM in the future. It felt like a virtual experience, and this is what I saw:

- Data is no longer replicated – every piece of information will have a Universally Unique ID, also known as a UUID. In 2020, this initiative became mature, thanks to the merger of some big PLM and ERP vendors, who brought this initiative to reality. This initiative dramatically reduced exchange costs in supply chains and led to bankruptcy for many companies that provided translation and exchange software.

- Companies store their data in ‘the cloud’ based on the concept outlined above. Only some old-fashioned companies still handle their own data storage and exchange, as they fear someone will access their data. Analysts compare this behavior with the situation in the year 1950, when people kept their money under a mattress, not trusting banks (and they were not always wrong)

- After 3D, a complete virtual world based on holography became the next step in product development and understanding. Thanks to the revolutionary quantum-3D technology, this concept could even be applied to life sciences. Before ordering a product, customers could first experience and describe their needs in a virtual environment.

- Finally, the cumbersome keyboard and mouse were replaced by voice and eye recognition. Initially, voice recognition and eye tracking were cumbersome. Information was captured by talking to the system and by recording eye movements during hologram analysis. This made the life of engineers so much easier, as while researching and talking, their knowledge was stored and tagged for reuse. No need for designers to send old-fashioned emails or type their design decisions for future reuse.

- Due to the hologram technology, the world became greener. People did not need to travel around the world, and the standard became virtual meetings with global teams (airlines discontinued business class). Even holidays can be experienced in the virtual world thanks to a Dutch initiative inspired by coffee. The whole IT infrastructure was powered by efficient solar energy, drastically reducing the amount of carbon dioxide.

- Then, with a shock, I noticed PLM no longer existed. Companies were focusing on their core business processes. Systems/terms like PLM, ERP, and CRM no longer existed. Some older people still remembered the battle between those systems over data ownership and the political discomfort this caused within companies.

- As people were working so efficiently, there was no need to work all week. There were community time slots when everyone was active, but 50 per cent of the time, people had time to recreate (to re-create or recreate was the question). Some older French and German designers remembered the days when they had only 10 weeks holiday per year, unimaginable nowadays.

As we still have more than 40 years to reach this future, I wish you all a successful and excellent 2009.

I am looking forward to being part of the green future next year.

After a summer holiday in the south of Greece, it is time to resume my activities. The south of Crete is largely an analogue environment, far from any digital hype.

Tempted by LinkedIn posts, I noticed the summer was full of memories, with Martin Eigner sharing 40 years of PLM experience, Oleg Shilovitsky sharing 30 years of PDM Evolution, and Michael Finocchiaro publishing posts on PLM vendors, CAD kernels, and more.

So where do I stand? While digesting all these historical experiences, I reflected on what we can learn from them and what we didn’t learn from them.

It started with technology.

From 1990 to 1999, I worked with mid-market companies, where data management was the most significant challenge. The introduction of MS Windows made data management more user-friendly, evolving from drawing management systems with version and status management capabilities.

Who remembers Automanager Workflow from Cyco, before SmarTeam came on the market?

For that reason, in the early days, PDM was an IT job. As the PDM system primarily dealt with engineering data, it was relatively easy to implement as an organizational change process. We transitioned from analogue to electronic in the department.

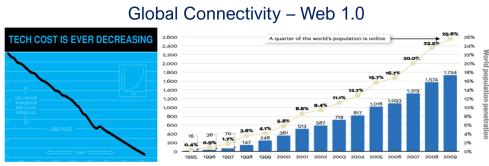

Connecting with other systems, particularly ERP, was a serious IT job and a financial challenge. The rapid decline in the cost of IT components, combined with the rapid growth of global connectivity, has created new opportunities for collaboration.

As part of the Dassault/IBM/SmarTeam organization, I explained and taught these new capabilities worldwide.

In 2008, my VirtualDutchman blog and coaching journey began, evolving from explanations of technology to modern methodologies, which led to organizational change and expectation management – skills not traditionally associated with IT.

Then came digital transformation

With growing connectivity, smartphones and Web 2.0 technology have led to more PLM-like discussions. PLM vendors expanded their scope and developed capabilities beyond mechanical engineering.

The expansion of capabilities was also the moment when the confusion about the term PLM reached its peak: a PLM strategy or a PLM system?

At the time, they were largely considered the same in discussions and advertisements.

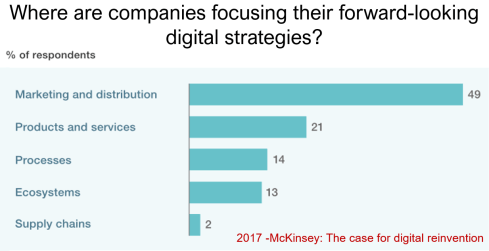

Meanwhile, digital transformation was occurring at the marketing and sales levels – companies invested in direct communication with their customers through the web.

Meanwhile, the internal ways of working for R&D, engineering, and manufacturing did not change significantly. Still, they were following linear processes, and despite the existence of 3D CAD, the 2D drawing remained the primary carrier of legal information between engineering, manufacturing, and suppliers.

Note: the option where the most benefits could be achieved – connected supply chains – had the lowest focus in 2017 – something that would change with COVID-19.

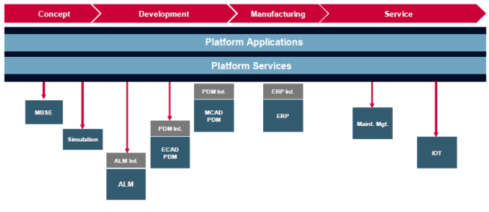

Fundamental digital transformation in the PLM domain occurred gradually. Aras came with its overlay approach (the platform), connecting various disciplines and enterprise systems. In contrast, Dassault Systèmes introduced its 3DEXPERIENCE platform, utilizing its own software brands as platform components.

Most PLM vendors rapidly countered Aras’ overlay approach with their low-code offerings based on Mendix, ThingWorx or Netvibes, to enable data flows beyond the traditional PDM scope. The Coordinated Digital Thread was born.

The good news is that PLM has now clearly become a strategy based on a federated system infrastructure. The single PLM system no longer exists, although many of us still use the term ‘PLM system’ to refer to the main component of a PLM infrastructure – the System of Record.

Moving to a federated PLM infrastructure is already a challenge for companies, not because of the available technology, but first of all because of the legacy data and, closely related to that, legacy processes and people skills.

Legacy is creating the inertia, not technology!

Next came the cloud – SaaS

With the availability of cloud solutions that support real-time interactions between stakeholders, either within an enterprise or in a value chain, a new paradigm has emerged: the connected enterprise.

A connected enterprise no longer needs interfaces to transfer data from one system to another.

Instead, with apps and dashboards, combined data from different online sources is presented in a single, user-friendly working environment – a combination of the Systems of Record with the new environments – the Systems of Engagement.

The technology used to create dashboards and apps is based on modern data-driven technologies and principles (ontologies, graph databases, and the semantic web). The Connected Digital Thread was born.
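As a rough illustration of what such an app or dashboard does, the sketch below combines what two sources know about the same item into one view, keyed by a shared identifier. The two dictionaries stand in for online sources (for example, a PLM and an ERP system of record); the field names and the `combined_view` function are assumptions made for this example, not any vendor's API.

```python
# Stand-ins for two online sources; in practice these would be live services.
# Field names and values are invented for illustration.
PLM_SOURCE = {
    "PART-100": {"description": "Bracket", "revision": "B", "status": "Released"},
    "PART-101": {"description": "Cover", "revision": "A", "status": "In Work"},
}
ERP_SOURCE = {
    "PART-100": {"stock": 240, "unit_cost": 3.2},
}


def combined_view(item_id, *sources):
    """Merge what each source knows about one item into a single record.

    Nothing is copied between the systems; the view is assembled on request.
    """
    view = {"id": item_id}
    for source in sources:
        view.update(source.get(item_id, {}))
    return view


print(combined_view("PART-100", PLM_SOURCE, ERP_SOURCE))
print(combined_view("PART-101", PLM_SOURCE, ERP_SOURCE))
```

The important design point is the shared identifier: without an agreed way to say that both sources talk about the same `PART-100`, there is nothing to connect, which is where the ontologies and semantic models come in.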

However, legacy systems play an essential role again, as some systems of engagement can be implemented in a complementary manner to the systems of record, allowing companies to work within an integrated technology model.

People will work in a particular mode, either coordinated or connected, but organizations can operate in both modes simultaneously. A story I have been sharing a lot – it is not about migrations but about an evolutionary approach towards an integrated technology model.

At this point, it becomes essential that business objectives drive the implementation of a PLM infrastructure. Of course, you hear me say we should start from the business; however, the big difference now is that a company should coordinate the technologies, systems, and tools it acquires to avoid isolated islands of information.

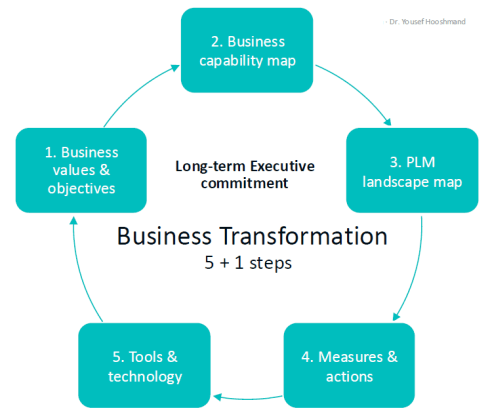

Follow Yousef Hooshmand‘s 5 + 1 business transformation steps.

An open SaaS infrastructure enables a company to let data flow almost in real-time. There is a lot of discussion related to data quality and governance, and if you have missed it, please read these three articles I created together with Rob Ferrone, the product Digital PLuMber:

- Data Quality and Data Governance – A hype? (part 1)

- Data Quality and Data Governance – the WHY and HOW (part 2)

- Building the Future: Data Quality and Governance in the Digital Age (part 3)

There are some great insights in this dialogue and the associated LinkedIn comments.

Despite the increasing availability of technology, it is the legacy of people, processes, and culture that is hindering progress.

Rob Ferrone had a shocking lightbulb moment 😲 in our discussion about the future of PLM, where the participants – see below – answered a question related to the importance of technology in our PLM domain – shocking also for me.

My thumb was up because modern technology matters! The question inspired Oleg Shilovitsky to write a whole blog post on this topic. If you’re truly shocked, read his post, where I agree with the content; the question is too simple to answer with a thumbs up/down.

As technology has become more accessible than before, you no longer need an IT department to establish a PLM infrastructure. And then indeed, the people and process side needs and deserves much more attention.

And now there is AI

If you haven’t read anything about AI recently, you must be living in an isolated location. Regardless of the business discussions you are following, it is all about the potential of AI.

Although AI is not a new concept, the fact that various AI capabilities have now reached the end-user level is what drives the hype. Currently, I believe we are at the peak of the hype.

Last week, I participated in an interesting discussion in the series The Future of PLM, moderated by Michael Finocchiaro, this time talking with the analysts. Click on the link to see Michael’s excellent summary and access the recording of the event.

It was an interesting discussion for a little more than an hour, and the majority of our discussion was about the potential impact of AI on businesses. First, the impact AI can have on the traditional work of an analyst and next, the effects on the PLM domain.

I believe we agreed that AI at this moment is mainly providing higher user efficiency and performance, very much aligned with the interesting research I have been reading in the MIT NANDA report with the title The GenAI Divide: STATE OF AI IN BUSINESS 2025.

The report’s interesting findings included high adoption of tools but low transformation. Despite significant investment in Generative AI (GenAI), most organizations are not achieving meaningful business transformation.

- 95% of organizations report zero return on GenAI investments.

- Only 5% of integrated AI pilots generate millions in value.

- 80% of organizations have explored or piloted tools like ChatGPT, but these primarily enhance individual productivity.

- 60% of organizations evaluated enterprise-grade systems, but only 20% reached the pilot stage, and just 5% reached production.

- Key barriers include brittle workflows, a lack of contextual learning, and operational misalignment.
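To get a feeling for how steep this funnel is, the small calculation below derives the stage-to-stage conversion from the 60% / 20% / 5% figures quoted above. It assumes all three percentages are shares of the same total population of organizations, which is my reading of the numbers and not something the bullet list states explicitly.

```python
# Shares of all organizations reaching each stage, as quoted above.
# Assumption: the three percentages refer to the same total population.
funnel = {"evaluated": 60, "pilot": 20, "production": 5}


def stage_conversion(funnel):
    """Conversion rate from each stage to the next one."""
    stages = list(funnel)
    return {
        f"{current} -> {following}": funnel[following] / funnel[current]
        for current, following in zip(stages, stages[1:])
    }


rates = stage_conversion(funnel)
overall = funnel["production"] / funnel["evaluated"]

for step, rate in rates.items():
    print(f"{step}: {rate:.0%}")
print(f"evaluated -> production: {overall:.1%}")
```

Under that assumption, about one in three evaluations becomes a pilot, one in four pilots reaches production, and roughly one in twelve evaluated systems ends up in production.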

Therefore, the question is – Is current AI the next bubble?

In 2014, I wrote about the lack of digital transformation in the PLM domain, and two images (below) from a report by The Economist could be used again. The report can be found here: The Onrushing Wave.

Click on the image to read the 2013 predictions.

I realized that my current job, as a recreational therapist and firefighter at the time, was not at risk, and that some of the predictions from 10 years ago had become a reality. Who is still bothered by telemarketers or retail salespersons?

However, many of the AI symptoms mentioned in the MIT NANDA report are similar to the hype surrounding digital transformation.

The only reservation I have now – will it take a decade before we understand and demonstrate the value of AI, or are we accelerating?

In this context, the upcoming PLM Roadmap/PDT Europe conference on 5 – 6 November will be interesting, as here we will discuss reality.

For those of you interested in more, there is a (free) workshop the day before the conference, where we will discuss with some thought leaders and experts from various companies what the future of PLM could look like – based on standards, AI tools and more. Click on the image below the conclusion.

Conclusion

The summertime was a nice moment to reflect, inspired by others in my network. What is clear is that there is a shift from technology towards people and change. The rapid expansion of AI tools, along with connected technologies, has created an overwhelming array of possibilities. Now it is time for business leadership to understand them and utilize them for significant business improvement, where the fear is that substantial change will always be slowed down by organizational inertia.

Just before or during the summer holidays, we were pleased to resume our interview series on PLM and Sustainability, where the PLM Green Global Alliance interviews PLM-related software vendors and service organizations, discussing their sustainability missions and offerings.

Following recent discussions in the PLM ecosystem, including PSC Transition Technologies (EcoPLM), CIMPA PLM services (LCA), and the Design for Sustainability working group (with multiple vendors & service partners), we now have the opportunity to catch up with Sustaira after almost three years.

In 2022, Sustaira was a startup company focused on building and providing data-driven, efficient support for sustainability reporting and analysis based on the Mendix platform, while engaging with their first potential customers. What has happened in those three years?

SUSTAIRA

Sustaira provides a sustainability management software platform that helps organizations track, manage, and report their environmental, social, and governance (ESG) performance through customizable applications and dashboards.

We spoke again with Vincent de la Mar, founder and CEO of Sustaira, and it was pretty clear from our conversation that they have evolved and grown in their business and value proposition for businesses. As you will discover by listening to the interview, they are not, per se, in the PLM domain.

Enjoy the 35-minute interview below.

Slides shown during the interview, combined with additional company information, can be found HERE.

What we have learned

- Sustaira is a modular, AI-driven sustainability platform. It offers approximately 150 “sustainability accelerators,” which are either complete Software as a Service (SaaS) products (such as carbon accounting, goal/KPI tracking, and disclosures) or adaptable SaaS products that allow for complete configuration of data models, logic, and user interfaces.

- Their strategy is based on three pillars:

  - Providing an end-to-end sustainability platform (Ports of Jersey)

  - Filling gaps in an enterprise architecture and business needs (Science-Based Target Initiatives)

  - Co-creating new applications with partners (BCAF with Siemens Financial Services)

- The company has a pragmatic view on AI, and thanks to its scalable, data-driven Mendix platform, it can bring integrated value compared to niche applications that might become obsolete due to changing regulations and practices (e.g., dedicated CSRD apps).

- The Sustainability Global Alliance, in partnership with Capgemini, is a strategic alliance that benefits both parties, with a focus on AI & Sustainability.

- The strong partnership with Siemens Digital Solutions.

- Their monthly Sustainability and ESG Insights newsletter, also published in our PGGA group, already has 55,000 subscribers.

Want to learn more?

The following links provide more information related to Sustaira:

- About Sustaira:

- Sustaira’s sustainability marketplace

- Siemens and Sustaira partnership

- Capgemini and Sustaira partnership

- Customer Case Stories

- The Sustainability Insights LinkedIn Newsletter

- Navigating CSRD

- Content Hub (requires registration)

Conclusion

It was great to observe how Sustaira has grown over the past three years, establishing a broad portfolio of sustainability-related solutions for various types of businesses. Their relationship with Siemens Digital Solutions enables them to bring value and add capabilities to the Siemens portfolio, as their platform can be applied to any company that needs a complementary data-driven service related to sustainability insights and reporting.

Follow the news around this event – click on the image to learn more.

Last week, I participated in the annual 3DEXPERIENCE User Conference, organized by the ENOVIA and NETVIBES brands. With approximately 250 attendees, the 2-day conference on the High-Tech Campus in Eindhoven was fully booked.

My PDM/PLM career started in 1990 in Eindhoven.

First, I spent a significant part of my school life there, and later, I became a physics teacher in Eindhoven. Then, I got infected by CAD and data management, discovering SmarTeam, and the rest is history.

As I wrote in last year’s post, the 3DEXPERIENCE conference always feels like a reunion, as I have worked most of my time in the SmarTeam, ENOVIA, and 3DEXPERIENCE ecosystem.

Innovation Drivers in the Generative Economy

Stephane Declee and Morgan Zimmerman kicked off the conference with their keynote, talking about the business theme for 2024: the Generative Economy. Where the initial focus was on the Experience Economy and emotion, the Generative Economy includes Sustainability. It is a clever move as the word Sustainability, like Digital Transformation, has become such a generic term. The Generative Economy clearly explains that the aim is to be sustainable for the planet.

Stephane and Morgan talked about the importance of the virtual twin, which is different from digital twins. A virtual twin typically refers to a broader concept that encompasses not only the physical characteristics and behavior of an object or system but also its environment, interactions, and context within a virtual or simulated world. Virtual Twins are crucial to developing sustainable solutions.

Morgan concluded the session by describing the characteristics of the data-driven 3DEXPERIENCE platform and its AI fundamentals, illustrating all the facets of the mix of a System of Record (traditional PLM) and Systems of Engagement (MODSIM).

3DEXPERIENCE for All at automation.eXpress

Daniel Schöpf, CEO and founder of automation.eXpress GmbH, gave a passionate story about why, for his business, the 3DEXPERIENCE platform is the only environment for product development, collaboration and sales.

Automation.eXpress is a young but typical Engineering To Order company building special machinery and services in dedicated projects, which means that every project, from sales to delivery, requires a lot of communication.

For that reason, Daniel insisted that all employees communicate using the 3DEXPERIENCE platform on the cloud. So, there are no separate emails, chats, or other siloed systems.

Everyone should work connected to the project and the product as they need to deliver projects as efficiently and fast as possible.

Daniel made this decision based on his 20 years of experience in traditional ways of working – the coordinated approach. Now, starting from scratch in a new company without a legacy, Daniel chose the connected approach, an ideal fit for his organization, and the cloud as a scalable solution, an essential criterion for a startup company.

My conclusion is that this example shows the unique situation of an inspired leader with 20 years of experience in this business who does not choose ways of working from the past but starts a new company in the same industry, but now based on a modern platform approach instead of individual traditional tools.

Augment Me Through Innovative Technology

Dr. Cara Antoine gave an inspiring keynote based on her own life experience and lessons learned from working in various industries, a major oil & gas company and major high-tech hardware and software brands. Currently, she is an EVP and the Chief Technology, Innovation & Portfolio Officer at Capgemini.

She explained how a life-threatening infection that caused blindness in one of her eyes inspired her to find ways to augment herself to keep on functioning.

With that, she drew a parallel with humanity, which has continuously been augmenting itself from prehistoric days until now, at an ever-increasing speed of change.

The current augmentation is the digital revolution. Digital technology is coming, and you need to be prepared to survive – it is Innovate or Abdicate.

Dr. Cara continued expressing the need to invest in innovation (me: it was not better in the past 😉 ) – and, of course, with an economic purpose; however, it should go hand in hand with social progress (gender diversity) and creating a sustainable planet (innovation is needed here).

Besides the focus on innovation drivers, Dr. Cara always connected her message to personal interaction. Her recently published book Make it Personal describes the importance of personal interaction, even if the topics can be very technical or complex.

I read the book with great pleasure, and it was one of the cornerstones of the panel discussion next.

It is all about people…

It might be strange to have a session like this in an ENOVIA/NETVIBES User Conference; however, it is another illustration that we are not just talking about technology and tools.

I was happy to introduce and moderate this panel discussion, also using the iconic Share PLM image, which is close to my heart.

The panelists, Dr. Cara Antoine, Daniel Schöpf, and Florens Wolters, each actively led transformational initiatives with their companies.

We discussed questions related to culture, personal leadership and involvement and concluded with many insights, including “Create chemistry, identify a passion, empower diversity, and make a connection, as it could make or break your relationship.”

And it is about processes.

Another trend I discovered is that cloud-based business platforms, like the 3DEXPERIENCE platform, switch the focus from discussing functions and features in tools to establishing platform-based environments, where the focus is more on data-driven and connected processes.

Some examples:

Data Driven Quality at Suzlon Energy Ltd.

Florens Wolters, who also participated in the panel discussion “It is all about people ..”, explained how he took the lead to reimagine the Suzlon Energy Quality Management System using the 3DEXPERIENCE platform and ENOVIA: from a disconnected, fragmented, document-driven Quality Management System with many findings in 2020 to a fully integrated data-driven management system with zero findings in 2023.

It is an illustration that a modern data-driven approach in a connected environment brings higher value to the organization, as all stakeholders in the addressed solution work within an integrated, real-time environment. No time is wasted searching for related information.

Of course, organizational change management is needed to convince people not to work in their favorite siloed systems, which might be dedicated to the job but are not designed for a connected future.

The image to the left was also a part of the “It is all about people” session.

Enterprise Virtual Twin at Renault Group

The presentation of Renault was also an exciting surprise. Last year, they shared the scope of the Renaulution project at the conference (see also my post: The week after the 3DEXPERIENCE conference 2023).

Here, Renault mentioned that they would start using the 3DEXPERIENCE platform as an enterprise business platform instead of a traditional engineering tool.

Their presentation today, which was related to their Engineering Virtual Twin, was an example of that. Instead of using their document-based SCR (Système de Conception Renault – the Renault Design System) with over 1000 documents describing processes connected to over a hundred KPIs, they have been modeling their whole business architecture and processes in UAF using a System of Systems approach.

The image above shows Franck Gana, Renault’s engineering – transformation chief officer, explaining the approach. We could write an entire article about the details of how, again, the 3DEXPERIENCE platform can be used to provide a real-time virtual twin of the actual business processes, ensuring everyone is working on the same referential.

Bringing Business Collaboration to the Next Level with Business Experiences

To conclude this section about the shifting focus toward people and processes instead of system features, Alizée Meissonnier Aubin and Antoine Gravot introduced a new offering from 3DS, the marketplace for Business Experiences.

According to the HBR article, workers switch between applications an average of 1200 times per day and spend 9% of their time reorienting themselves after toggling.

1200 is a high number and a plea for working in a collaboration platform (the Systems of Engagement, in my terminology – data-driven, real-time connected) instead of siloed systems. The story has been told before by Daniel Schöpf, Florens Wolters and Franck Gana, who shared the benefits of working in a connected collaboration environment.
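To put the quoted switching figures in perspective, here is a back-of-the-envelope calculation. The 8-hour working day is my assumption and does not come from the article.

```python
SWITCHES_PER_DAY = 1200      # figure quoted from the HBR article
REORIENTATION_SHARE = 0.09   # 9% of working time, quoted from the HBR article
WORKDAY_HOURS = 8            # assumption, not from the article

workday_seconds = WORKDAY_HOURS * 3600
lost_seconds = REORIENTATION_SHARE * workday_seconds
lost_minutes = lost_seconds / 60
seconds_per_switch = lost_seconds / SWITCHES_PER_DAY

print(f"Time lost per day: {lost_minutes:.1f} minutes")
print(f"Average reorientation per switch: {seconds_per_switch:.2f} seconds")
```

With these inputs, each switch costs only about two seconds, but together they add up to roughly 43 minutes per person per day.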

The announced marketplace will be a place where customers can download Business Experiences.

There was more …

There were several engaging presentations and workshops during the conference. But, as we reach 1500 words, I will mention just two of them, which I hope to come back to in a later post with more detail.

- Delivering Sustainable & Eco Design with the 3DS LCA Solution

Valentin Tofana is from Comau, an Italian multinational company in automation that is committed to more sustainable products. In that context, Valentin shared his experiences and lessons learned from starting to use the 3DS LifeCycle Assessment tools on the 3DEXPERIENCE platform.

This session gave such a clear overview that we will come back with the PLM Green Global Alliance in a separate interview.

- Beyond PLM. Productivity is the Key to Sustainable Business

Neerav MEHTA from L&T Energy Hydrocarbon demonstrated how they have implemented a virtual twin of the plant, allowing everyone to navigate, collaborate and explore all activities related to the plant. I was promoting this concept in 2013 also for Oil & Gas EPC companies (PLM for all industries); at that time, it was an immense performance and integration challenge. Now, ten years later, thanks to the capabilities of the 3DEXPERIENCE platform, it has become a workable reality. Impressive.

Conclusion

Again, I learned a lot during these days, seeing the architecture of the 3DEXPERIENCE platform growing (image below). In addition, more and more companies are shifting their focus to real-time collaboration processes in the cloud on a connected platform. Their testimonies illustrate that to be sustainable in business, you have to augment yourself with digital.

Note: Dassault Systemes did not cover any of the cost for me attending this conference. I picked the topics close to my heart and got encouraged by all the conversations I had.

During my summer holiday in my “remote” office, I had the chance to digest what I recently read, heard, saw and discussed related to the future of PLM.

I noticed this year/last year that many companies are discussing or working on their future PLM. It is time to make progress after COVID, particularly in digitization.

And as most companies are avoiding the risk of a “big bang”, they are exploring how they can improve their businesses in an evolutionary mode.

PLM is no longer a system

The most significant change I noticed in my discussions is the growing awareness that PLM is no longer covered by a single system.

More and more, PLM is considered a strategy, with which I fully agree. Therefore, implementing a PLM strategy requires holistic thinking and an infrastructure of different types of systems, where possible, digitally connected.

This trend is bad news for the PLM vendors as they continuously work on an end-to-end portfolio where every aspect of the PLM lifecycle is covered by one of their systems. The company’s IT department often supports the PLM vendors, as IT does not like a diverse landscape.

The main question is: “Every PLM Vendor has a rich portfolio on PowerPoint mentioning all phases of the product lifecycle.

However, are these capabilities implementable in an economical and user-friendly manner by actual companies, or do PLM players need to change their strategy?”

A question I will try to answer in this post.

The future of PLM

I have discussed several observed changes related to the effects of digitization in my recent blog posts, referencing others who have studied these topics in their organizations.

Some of the posts to refresh your memory are:

- Time to split PLM?

- People, Processes, Data and Tools?

- The rise and the fall of the BOM?

- The new side of PLM? Systems of Engagement!

To summarize, the following points have been discussed in these posts:

The As Is:

- The traditional PLM systems are examples of a System of Record, not designed to be end-user friendly but designed to have a traceable baseline for manufacturing, service and product compliance.

- The traditional PLM systems are tuned to a mechanical product introduction and release process in a coordinated manner, with a focus on BOM governance.

- The legacy information is stored in BOM structures and related specification files.

System of Record (ENOVIA image 2014)

The To Be:

- We are not talking about a PLM system anymore; a traditional System of Record will be digitally connected to different Systems of Engagement / Domains / Products, which have their own optimized environment for real-time collaboration.

- The BOM structures remain essential for the hardware part; however, overarching structures are needed to manage software and hardware releases for a product. These structures depend on connected datasets.

- To support digital twins at the various lifecycle stages (design, manufacturing, operations), product data needs to be based on and consumed by models.

- A future PLM infrastructure is hybrid, based on a Single Source of Change (SSoC) and an Authoritative Source of Truth (ASoT) instead of a Single Source of Truth (SSoT).
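The hybrid idea in the last point can be sketched in a few lines. The example below is only a loose illustration under my own assumptions: the class names, system names and data types are hypothetical, and a real federation would deal with APIs, security and change processes rather than in-memory dictionaries. The point it shows is that each type of data has exactly one authoritative system, and that changes are routed through one entry point.

```python
from dataclasses import dataclass, field


@dataclass
class System:
    """A participating system; `kind` is 'record' or 'engagement'."""
    name: str
    kind: str
    data: dict = field(default_factory=dict)


class Federation:
    """Routes each data type to the one system that is authoritative for it."""

    def __init__(self):
        self._authority = {}

    def register(self, data_type, system):
        # One authoritative source per data type, never two.
        if data_type in self._authority:
            raise ValueError(f"{data_type} already has an authoritative source")
        self._authority[data_type] = system

    def write(self, data_type, key, value):
        # Single entry point for changes: always lands in the authoritative system.
        self._authority[data_type].data[(data_type, key)] = value

    def read(self, data_type, key):
        return self._authority[data_type].data[(data_type, key)]

    def source_of(self, data_type):
        return self._authority[data_type].name


# Hypothetical landscape: one System of Record, one System of Engagement.
plm = System("PLM backbone", "record")
alm = System("Software ALM", "engagement")

fed = Federation()
fed.register("released_bom", plm)
fed.register("software_build", alm)

fed.write("released_bom", "BOM-A", {"revision": "B"})
fed.write("software_build", "FW-1.4", {"status": "tested"})

print(fed.source_of("software_build"), fed.read("software_build", "FW-1.4"))
```

Nothing is copied between the two systems here; a consumer asks the federation where the truth for a given data type lives and reads it there.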

Various Systems of Engagement

Related podcasts

I relistened to two podcasts before writing this post, and I think they are a must-listen.

The Peer Check podcast from Colab episode 17 — The State of PLM in 2022 w/Oleg Shilovitsky. Adam and Oleg have a great discussion about the future of PLM.

Highlights: From System to Platform – the new normal. A Single Source of Truth doesn’t work anymore – it is about value streams. People in big companies fear making wrong PLM decisions, which is seen as a significant risk for your career.

There is no immediate need to change the current status quo.

The Share PLM Podcast – Episode 6: Revolutionizing PLM: Insights from Yousef Hooshmand. Yousef talked with Helena and me about proven ways to migrate an old PLM landscape to a modern PLM/Business landscape.

Highlights: The term Single Source of Change and the existing concepts of a hybrid PLM infrastructure based on his experiences at Daimler and now at NIO. Yousef stresses the importance of having the vision and the executive support to execute.

The time of “big bangs” is over, and Yousef provided links to relevant content, which you can find here in the comments.

In addition, I want to point to the experiences provided by Erik Herzog in the Heliple project using OSLC interfaces as the “glue” to connect (in my terminology) the Systems of Engagement and the Systems of Record.

If you are interested in these concepts and want to learn and discuss them with your peers, more can be learned during the upcoming CIMdata PLM Roadmap / PDT Europe conference.

In particular, look at the agenda for day two if you are interested in this topic.

The future for the PLM vendors

If you look at the messaging of the current PLM Vendors, none of them is talking about this federated concept.

They are more focused with their messaging on the transition from on-premise to the cloud, providing a SaaS offering with their portfolio.

I was slightly disappointed when I saw this article on Engineering.com provided by Autodesk: 5 PLM Best Practices from the Experiences of Autodesk and Its Customers.

The article is tool-centric, with statements that make sense and could be written by any PLM Vendor. However, Best Practice #1 Central Source of Truth Improves Productivity and Collaboration was the message that struck me. Collaboration comes from connecting people, not from the Single Source of Truth utopia.

I don’t believe PLM Vendors have to be afraid of losing their installed base rapidly with companies using their PLM as a System of Record. There is so much legacy stored in these systems that might still be relevant. The existence of legacy information, often documents, makes a migration or swap to another vendor almost impossible and unaffordable.

The System of Record is incompatible with data-driven PLM capabilities

I would like to see clearer developments from the PLM Vendors, creating a plug-and-play infrastructure for Systems of Engagement. Plug-and-play solutions could be based on a neutral partner collaboration hub like ShareAspace or the Systems of Engagement I discussed recently in my post and interview: The new side of PLM? Systems of Engagement!

Some people believe AI, with the examples we have seen with ChatGPT, will be the future direction without needing interface standards.

I am curious about your thoughts and experiences in that area and am willing to learn.

Talking about learning?

Besides reading posts and listening to podcasts, I also read an excellent book this summer. Martijn Dullaart, often participating in PLM and CM discussions, had decided to write a book based on the various discussions related to part (re-)identification (numbering, revisioning).

As Martijn starts in the preface:

“I decided to write this book because, in my search for more knowledge on the topics of Part Re-Identification, Interchangeability, and Traceability, I could only find bits and pieces but not a comprehensive work that helps fundamentally understand these topics”.

I believe the book should become standard literature for engineering schools that deal with PLM and CM, for software vendors and implementers, and, last but not least, for companies that want to improve or better clarify their change processes.

Martijn writes in an easily readable style and uses step-by-step examples to discuss the various options. There are even exercises at the end to use in a classroom or for your team to digest the content.

The good news is that the book is not about the past. You might also know Martijn for our joint discussion, The Future of Configuration Management, together with Maxime Gravel and Lisa Fenwick, on the impact of a model-based and data-driven approach to CM.

I plan to come back soon with a more dedicated discussion with Martijn. Meanwhile, start reading the book. Get your free chapter if needed by following the link at the bottom of this article.

I recommend buying the book as a paperback so you can navigate easily between the diagrams and the text.

Conclusion

The trend for federated PLM is becoming more and more visible as companies start implementing these concepts. The end of monolithic PLM is a threat and an opportunity for the existing PLM Vendors. Will they work towards an open plug-and-play future, or will they keep their portfolios closed? What do you think?

Last week I had the opportunity to discuss the topic of Systems of Engagement in the context of the more extensive PLM landscape.

I spoke with Andre Wegner from Authentise and their product Threads, MJ Smith from CoLab and Oleg Shilovitsky from OpenBOM.

I invited all three of them to discuss their background, their target customers, the significance of real-time collaboration outside discipline siloes, how they connect to existing PLM systems (Systems of Record), and finally, whether a company culture plays a role.

Listen to this almost 45 min discussion here (save the m4a file first) or watch the discussion below on YouTube.

What I learned from this conversation

- Systems of Engagement bring value not only to small enterprises but also to larger companies, as complementary systems to traditional PLM environments.

- Thanks to their SaaS approach, they are easy to install and use to fulfill a need that would take weeks/months to implement in a traditional PLM environment. They can be implemented at a department level or by connecting a value chain of people.

- Due to their real-time collaboration capabilities, these systems provide fast and significant benefits.

- Systems of Engagement represent the trend that companies want to move away from monolithic systems and focus on working with the correct data connected to the users. A topic I will explore in a future blog post.

I am curious to learn what you pick up from this conversation – are we missing other trends? Use the comments to this post.

Related to the company:

Visit Authentise.com

Related to the product:

Learn more about Collaborative Threads

Related to the reported benefits:

– Surgical robotics R&D team tracks 100% of their decisions and saves 150 hours in the first two weeks… doubling the effective size of their team:

Related to the company:

Visit Colabsoftware.com

Related to the product:

Raise the bar for your design conversations

Related to the reported benefits:

– How Mainspring used CoLab to achieve a 50% cost reduction redesign in half the time

– How Ford Pro Accelerated Time to Market by 30%

Related to the company:

Visit openbom.com

Related to the product:

Global Collaborative SaaS Platform For Industrial Companies

Related to reported benefits:

– OpenBOM makes the OKOS team 20% more efficient by helping to reduce inventory errors, costs, and streamlining supplier process

– VarTech Systems Optimizes Efficiency by Saving Two Hours of Engineering Time Daily with OpenBOM

Conclusion

I believe that Systems of Engagement are important for the digital transformation of a company.

They allow companies to learn what it means to work in a SaaS environment, potentially outside traditional company borders but with a focus on a specific value stream.

Thanks to their rapid deployment times, they help the company to grow its revenue even when the existing business is under threat due to newcomers.

The diagram below says it all. What are your favorite Systems of Engagement?

Hot from the press

Don’t miss the latest episode from the Share PLM podcast with Yousef Hooshmand – the conversation is very much connected to this discussion.

I was happy to present and participate at the 3DEXPERIENCE User Conference held this year in Paris on 14-15 March. The conference was an evolution of the previous ENOVIA User conferences; this time, it was a joint event by both the ENOVIA and the NETVIBES brands.

The conference was, for me, like a reunion. Having worked for over 25 years in the SmarTeam, ENOVIA and 3DEXPERIENCE ecosystem, I was now meeting people I had worked with and had not seen for over fifteen years.

My presentation, Sustainability Demands Virtualization – and it should happen fast, explained the transformation from a coordinated (document-driven) to a connected (data-driven) enterprise.

There were 100+ attendees at the conference, mainly from Europe. Most of the presentations came from customers, while the breakout sessions gave the attendees a chance to dive deeper into the Dassault Systèmes portfolio.

Here are some of my impressions.

The power of ENOVIA and NETVIBES

I had a traditional view of the 3DEXPERIENCE platform based on my knowledge of ENOVIA, CATIA and SIMULIA, as many of my engagements were in the domain of MBSE or a model-based approach.

However, at this conference, I discovered the data intelligence side that Dassault Systèmes is bringing with its NETVIBES brand.

I would classify the ENOVIA part of the 3DEXPERIENCE platform as a traditional System of Record infrastructure (see Time to Split PLM?).

I discovered that by adding NETVIBES on top of the 3DEXPERIENCE platform and other data sources, the potential scope had changed significantly. See the image below:

As we can see, the ontologies and knowledge graph layer make it possible to make sense of all the indexed data below, including the data from the 3DEXPERIENCE Platform, which provides a modern data-driven layer for its consumers and apps.

The applications on top of this layer, standard or developed, can be considered Systems of Engagement.

My curiosity now: will Dassault Systèmes keep supporting the “old” system of record approach – often based on BOM structures (see also my post: The Rise and Fall of the BOM) combined with the new data-driven environment? In that case, you would have both approaches within one platform.

The Virtual Twin versus the Digital Twin

It is interesting to notice that Dassault Systèmes consistently differentiates between the definition of the Virtual Twin and the Digital Twin.

According to the 3DS.com website:

Digital Twins are simply a digital form of an object, a virtual version.

Unlike a digital twin prototype that focuses on one specific object, Virtual Twin Experiences let you visualize, model and simulate the entire environment of a sophisticated experience. As a result, they facilitate sustainable business innovation across the whole product lifecycle.

Understandably, Dassault Systèmes makes this differentiation. With the implementation of the Unified Product Structure, they can connect CAD geometry as datasets to other non-CAD datasets, like eBOM and mBOM data.

The Unified Product Structure was not the topic of this event but is worth noting.

REE Automotive

The presentation from Steve Atherton from REE Automotive was interesting because here we saw an example of an automotive startup that decided to go purely for the cloud.

REE Automotive is an Israeli technology company that designs, develops, and produces electric vehicle platforms. Their mission is to provide a modular and scalable electric vehicle platform that can be used by a wide range of industries, including delivery and logistics, passenger cars, and autonomous vehicles.

Steve Atherton is the PLM 3DExperience lead for REE at the Engineering Centre in Coventry in the UK, where most of its designers are based. REE also has an R&D center in Tel Aviv with offshore support from India and satellite offices in the US.

REE decided from the start to implement its PLM backbone in the cloud, a logical choice for such a globally distributed company.

The cloud was also one of the conference’s central themes, and it was interesting to see that a startup company like REE is pushing for an end-to-end solution based on the cloud. Too often, you see startups choosing traditional systems because their senior members take their (legacy) PLM knowledge to their next company.

The current challenge for REE is implementing the manufacturing processes (EBOM-MBOM) while complying as much as possible with the out-of-the-box best practices to make their cloud implementation future-proof.

Groupe Renault

Olivier Mougin, Head of PLM at Groupe RENAULT, talked about their Renaulution Virtual Twin (RVT) program. Renault has always been a strategic partner of Dassault Systèmes.

I remember them as one of the first references for the ENOVIA V6 backbone.

The Renaulution Virtual Twin ambition, from engineering to enterprise platform, is enormous, as you can see below:

Each of the three pillars has transformational aspects beyond traditional ways of working. For each pillar, Olivier explained the business drivers, expected benefits, and why a new approach is needed. I will not go into the details in this post.

However, you can see the transformation from an engineering backbone to an enterprise collaboration platform – The Renaulution!

Ahmed Lguaouzi, head of marketing at NETVIBES, reinforced the message of the extended power of data intelligence on top of an engineering landscape as the target architecture.

Renault’s ambition is enormous – the ultimate dream of digital transformation for a company with a great legacy. The mission will challenge Renault and Dassault Systèmes to implement this vision, which can become a lighthouse for others.