It was a great pleasure to attend my favorite vendor-neutral PLM conference this year in Gothenburg—approximately 150 attendees, where most have expertise in the PLM domain.

We had the opportunity to learn new trends, discuss reality, and meet our peers.

The theme of the conference was: Value Drivers for Digitalization of the Product Lifecycle, a topic I have been discussing in my recent blog posts, as we need to help and educate companies to understand the importance of digitalization for their business.

The two-day conference covered various lectures – view the agenda here – and of course the topic of AI was part of half of the lectures, giving the attendees a touch of reality.

In this first post, I will cover the main highlight of Day 1.

Value Drivers for Digitalization of the Product Lifecycle

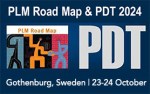

As usual, the conference started with Peter Bilello, president & CEO of CIMdata, stressing again that when implementing a PLM strategy, the maximum result comes from a holistic approach, meaning look at the big picture, don’t just focus on one topic.

It was interesting to see again the classic graph (below) explaining the benefits of the end-to-end approach – I believe it is still valid for most companies; however, as I shared in my session the next day, implementing concepts of a Product Service System will require more of a DevOps type of graph (more next week).

Next, Peter went through CIMdata’s Critical Dozen with some updates. You can look at the updated 2024 image here.

Some of the changes: Digital Thread and Digital Twin are merged, as Digital Twins do not run on documents. And instead of focusing on Artificial Intelligence only, CIMdata introduced Augmented Intelligence, as we should also consider solutions that augment human activities, not just replace them.

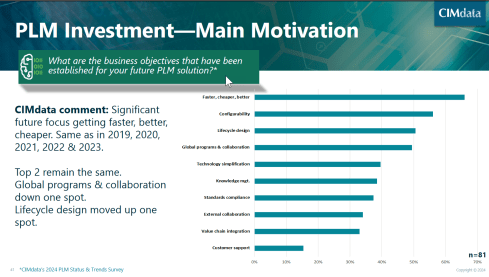

Peter also shared the results of a recent PLM survey where companies were asked about their main motivation for PLM investments. I found the result a little discouraging for several reasons:

The number one topic is still faster, cheaper and better – almost 65 % of the respondents see this as their priority. This number one topic illustrates that Sustainability has not reached the level of urgency, and perhaps the topic can be found in standards compliance.

Many of the companies with Sustainability in their mission should understand that a digital PLM infrastructure is the foundation for most initiatives, like Lifecycle Analysis (LCA). Sustainability is more than part of standards compliance, if it was mentioned at all.

The second disappointing observation for the understanding of PLM is that customer support is mentioned by only 15 % of the companies. Again, connecting your products to your customers is the first step to a DevOps approach, and you need to be able to optimize your product offering to what the customer really wants.

Digital Transformation of the Value Chain in Pharma

The second keynote was from Anders Romare, Chief Digital and Information Officer at Novo Nordisk. Anders has been participating in the PDT conference in the past. See my 2016 PLM Roadmap/PDT Europe post, where Anders presented on behalf of Airbus: Digital Transformation through an e2e PLM backbone.

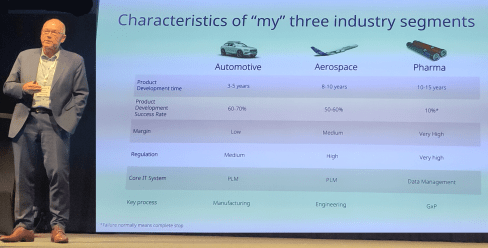

Anders started by sharing some of the main characteristics of the companies he has been working for: Volvo, Airbus and now Novo Nordisk. It is interesting to compare these characteristics as they say a lot about the industry’s focus. See below:

Anders is now responsible for digital transformation in Novo Nordisk, which is a challenge in a heavily regulated industry.

One of the focus areas for Novo Nordisk in 2024 is also Artificial Intelligence, as you can see from the image to the left (click on it for the details).

Like many others at this conference, Anders mentioned that AI is only applicable when it runs on top of accurate data.

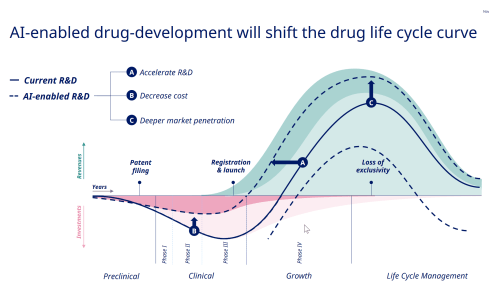

Understanding the potential of AI, they identified 59 areas where AI can create value for the business, and it is interesting to compare the traditional PLM curve Peter shared in his session with the potential AI-enabled drug-development curve as presented by Anders below:

Next, Anders shared some of the example cases of this exploration, and if you are interested in the details, visit their tech.life site.

On the engineering framing of PLM, it was interesting to hear Anders, who had a long history in PLM before Novo Nordisk, reply to a question from the audience that he would never talk about PLM at the management level. This is very much aligned with my Don’t mention the P** word post.

A Strategy for the Management of Large Enterprise PLM Platforms

One of the highlights for me on Day 1 was Jorgen Dahl’s presentation. Jorgen, a senior PLM director at GE Aerospace, shared their story towards a single PLM approach, needed due to changes in the businesses. Addressing the need for a digital thread also comes with an increased need for uptime.

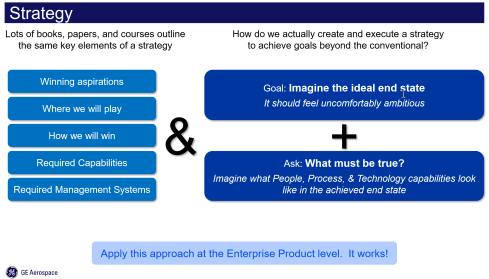

I like his strategy-to-execution approach, as shown in the image below, as it contains the most important topics: the business vision and understanding, the imagination of the end state, and the question What must be True?

In my experience, the three blocks are iteratively connected. When describing the strategy, you might not be able to identify the required capabilities and management systems yet.

But then, when you start to imagine the ideal end state, you will have to consider them. And for companies, it is essential to be ambitious – or, as Jorgen stated, uncomfortably ambitious. Go for the 75 % to almost 100 % to be true. Also, asking What must be True? is an excellent way to allow people to be involved and creatively explore the next steps.

Note: This approach does not provide all the details, as it will be a multiyear journey of learning and adjusting towards the future. Therefore, the strategy must be aligned with the culture to avoid continuous top-down governance of the details. In that context, Jorgen stated:

“Culture is what happens when you leave the room.”

It is a more positive statement than the famous quote attributed to Peter Drucker: “Culture eats strategy for breakfast.”

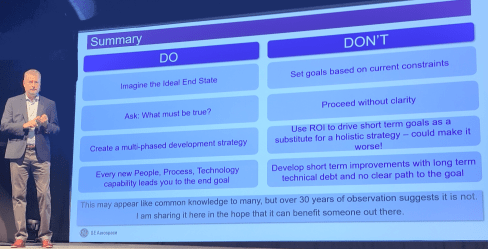

Jorgen’s concluding slide might look like common knowledge; however, I believe the easy-to-digest points Jorgen chose will help all organizations to step back, look at their initiatives, and compare where they can improve.

How a Business Capability Model and Application Portfolio Management Support Through Changing Times

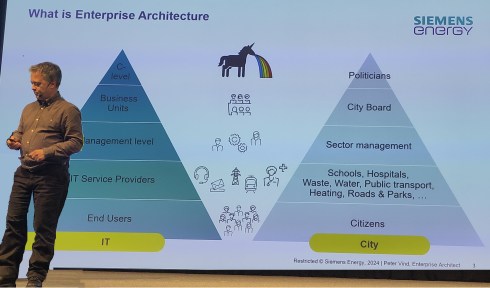

Peter Vind’s presentation was nicely connected to the presentation from Jorgen Dahl. Peter, who is an enterprise architect at Siemens Energy, started by explaining where the enterprise architect fits in an organization and comparing it to a city.

In his entertaining session, he mentioned he has to deal with the unicorns at the C-level, who, like politicians in a city, sometimes have the most “innovative” ideas – can they be realized?

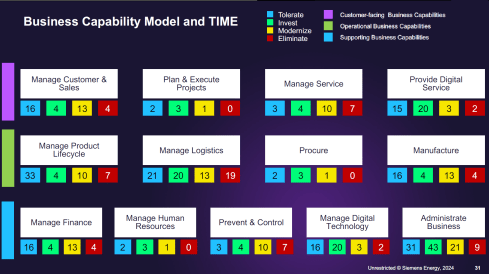

Peter explained how they used Business Capability Modeling when Siemens Energy went through various business stages: first the carve-out from Siemens AG and later the merger with Siemens Gamesa. Their challenge is to understand which capabilities remain and which are new or overlapping, both during the carve-out and during the merger.

The business capability modeling leads to a classification of the applications used at different levels of the organization, such as customer-facing, operational, or supporting business capabilities.

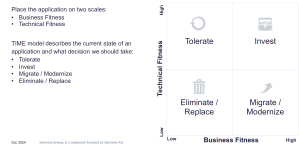

Next, for the lifecycle of the applications, the TIME approach was used, meaning that each application was mapped to business fitness and technical fitness. Click on the diagram to see the details.

The result could look like the mapping shown below – a comprehensive overview of where the action is.

It is a rational approach; however, Peter mentioned that we should also be aware of the HiPPOs in an organization. If there is a HiPPO (Highest Paid Person’s Opinion) in play, you might face a political battle too.

It was a great educational session illustrating the need for an Enterprise Architect, the value of business capabilities modeling and the TIME concept.
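To make the TIME mapping a bit more tangible, here is a minimal sketch in Python. It is not Peter's or Siemens Energy's implementation; it only assumes the commonly cited reading of TIME (Tolerate, Invest, Migrate, Eliminate) as four quadrants of business fitness versus technical fitness. The `Application` class, the 0 to 1 scoring scale, the 0.5 threshold and the example applications are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Application:
    """One entry in the application portfolio (hypothetical structure)."""
    name: str
    business_fit: float   # assumed scale: 0.0 (poor) to 1.0 (excellent)
    technical_fit: float  # assumed scale: 0.0 (poor) to 1.0 (excellent)


def time_category(app: Application, threshold: float = 0.5) -> str:
    """Map an application to a TIME quadrant.

    Assumed quadrant reading:
      high business fit, high technical fit -> Invest
      high business fit, low technical fit  -> Migrate
      low business fit,  high technical fit -> Tolerate
      low business fit,  low technical fit  -> Eliminate
    """
    high_business = app.business_fit >= threshold
    high_technical = app.technical_fit >= threshold
    if high_business and high_technical:
        return "Invest"
    if high_business:
        return "Migrate"
    if high_technical:
        return "Tolerate"
    return "Eliminate"


# Invented example portfolio, for illustration only.
portfolio = [
    Application("Core PLM backbone", business_fit=0.9, technical_fit=0.8),
    Application("Legacy PDM vault", business_fit=0.8, technical_fit=0.3),
    Application("Departmental viewer", business_fit=0.2, technical_fit=0.7),
    Application("Obsolete reporting tool", business_fit=0.1, technical_fit=0.2),
]

for app in portfolio:
    print(f"{app.name}: {time_category(app)}")
```

In practice, the scores would come from assessments with business and IT stakeholders, and, as Peter noted, a HiPPO can still overrule the outcome of such a rational mapping.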

And some more …

There were several other exciting presentations during Day 1; however, as not all presentations are publicly available, I cannot discuss them in detail and can only rely on my notes.

Driving Trade Compliance and Efficiency

Peter Sandeck, Director of Project Management at TE Connectivity, shared what they did to motivate engineers to endorse their Jurisdiction and Classification Assessment (JCA) process. Peter showed how, through a Minimum Viable Product (MVP) approach and listening to the end-users, they reached a higher Customer Satisfaction (CSAT) score after several iterations of the solution developed for the JCA process.

This approach is an excellent example of an agile method in which engineers are involved. My remaining question is still – are the same engineers in the short term also pushed to make lifecycle assessments? More work; however, I believe if you make it personal, the same MVP approach could work again.

Value of Model-Based Product Architecture

Jussi Sippola, Chief Expert, Product Architecture Management & Modularity at Wärtsilä, presented an excellent story related to the advantages of a more modular product architecture. Where historically, products were delivered based on customer requirements through the order fulfillment process, now there is in parallel the portfolio management process, defining the platform of modules, features and options.

Jussi mentioned that they were able to reduce the number of parts by 50 % while still maintaining the same level of customer capabilities. In addition, thanks to modularity, they were able to reduce the production lead time by 40 % – essential numbers if you want to remain competitive.

Conclusion

Day 1 was a day where we learned a lot as an audience, and in addition, the networking time and dinner in the evening were precious for me and, I assume, also for many of the participants. In my next post, we will see more about new ways of working, the AI dream and Sustainability.

Again, a “The weekend after …” post related to my favorite event to which I have contributed since 2014.

Expectations were high this time from my side, in particular because we would have a serious discussion related to connected digital threads and federated PLM.

More about these topics in my post next week, as not all content is available for sharing yet.

The conference was sold out this time, and during the breaks, you had to navigate through the people to find your network opportunities. Also, the participation of the main PLM players as sponsors illustrated that everyone wanted to benefit from this opportunity to meet and learn from their industry peers.

Looking back to the conference, there were two noticeable streams.

- The stream where people share their current PLM experiences; traditionally, the A&D action groups moderated by CIMdata are part of this stream. This part I will cover in this post.

- There were forward-looking presentations related to standards, ontologies, and federated PLM—all with an AI flavor. This part I will cover in my next post(s).

The connection between all these sessions was the Digital Thread. The conference’s theme was: The Digital Thread in a Heterogeneous, Extended Enterprise Reality. Let’s start the review with the highlights from the first stream.

Digital Thread: Why Should We Care?

As usual, Peter Bilello from CIMdata kicked off the conference by setting the scene. Peter started by clarifying the two definitions of the Digital Thread.

- The first is a communication framework that allows a connected data flow and integrated view of an asset’s data (i.e., its Digital Twin) throughout its lifecycle across traditionally siloed functional perspectives. In my terminology, the connected digital thread.

- The second is a network of connected information sources around the product lifecycle supporting traceability and decision-making. In my terminology, the coordinated digital thread is the most straightforward digital thread to achieve.

Peter recommends starting a digital thread by connecting at the beginning of product conceptualization, creating an environment where one can analyze the performance of the product portfolio and the product features and capabilities that need to be planned or how they perform in the field.

In addition, when defining the products, connect them with regulatory requirement databases, as these contain must-have requirements. I addressed this topic in my session too: besides the existing regulatory requirements, it is expected that in the upcoming years, due to environmental regulations, these requirements will increase, and it will be necessary to have them integrated with your digital thread.

Digital Threads require data governance and are the basis for the various digital twins. Peter discussed the multiple applications of the digital twin, primarily a relation between a virtual asset and a physical asset, except in the early concept phase.

The digital thread is still in the early phase of implementation at companies. A CIMdata survey showed that companies still focus primarily on implementing traditional PDM capabilities, although as the image above shows, there is a growing interest in short-term digital twin/thread implementations.

People, Process & Technology: The Pillars of Digital Transformation Success

The second keynote was from Christine McMonagle, Director of Digital Engineering Systems at Textron Systems, a services and products supplier for the Aerospace and Defense industry. Christine leads the digital evolution in Textron Systems and nicely presented how a digital transformation should start from the people. Traditionally, this industry has enough budget at the OEM level, and therefore companies will not take a revolutionary approach when it comes to digital transformation.

Having your people at all levels involved and making them understand the need for change is crucial. A change does not happen top-down. You must educate people and understand what is possible and achievable to change – in the right direction. One of her concluding slides highlights the main points.

In the Q&A after Christine’s session, there was an interesting question related to the involvement of Human Resources (HR) in this project. There was a laugh that said it all – as in most companies, HR is not focusing on organizational change; they focus more on operational issues – the Human is considered a Resource.

Between the regular sessions, there were short sessions in which the sponsors Altium, Contact Software, Dassault Systemes, ESI, inensia, Modular Management, PTC, SAP, Share PLM and Sinequa could pitch their value offering.

The Share PLM session, shortly after Christine’s presentation, was a nice continuation of the focus on people. I loved the Share PLM image to the left explaining why people do not engage with our dreams.

Learn how LEONI is achieving Digital Continuity in the Automotive Industry.

Tobias Bauer, head of Product Data Standardization at LEONI, talked about their FLOW project. FLOW is an acronym for Future Leoni Operating World. LEONI, well-known in the automotive industry, produces cable and network solutions, including cable harnesses.

Recently, the company has gone through a serious financial crisis and the need for restructuring, which always makes it challenging for a “visionary” PLM project. Tobias mentioned that after disappointing engagements with consultancy firms, they decided on a bottom-up approach to analyze existing processes using BPML. They agreed on a to-be state, fixing bottlenecks and streamlining the flow of information.

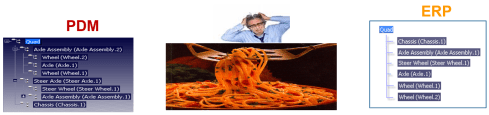

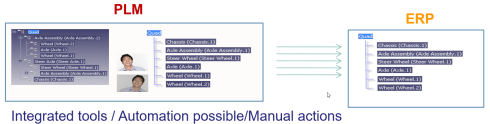

Tobias presented a smooth product data flow between their PLM system (PTC Windchill) and ERP (SAP S/4HANA), clearly stating that the PLM system has become the controlled source for managing product changes.

Their key achievements reported so far were:

- faster BOM creation and routing (approx. 10x faster – from 2-3 days to ¼ day)

- better data consistency (fewer manual steps)

- complete traceability between the systems with PLM as the change management backbone

The last point I would call the coordinated Digital Thread. The image below shows their current IT landscape in a simplified manner.

This solution might seem obvious for neutral PLM academics or experts, but it is an achievement to do this in an environment with SAP implemented. The eBOM-mBOM discussion is one of the most frequently held discussions – sometimes a battle.

Often, companies use their IT systems first and listen to the vendor’s experts to build integrations instead of starting from the natural business flow of information.

Aerospace & Defense Action groups outcomes

As usual, several Aerospace & Defense (A&D) action groups reported their progress during this conference. The A&D action groups are facilitated by CIMdata, and per topic, various OEMs and suppliers in the A&D industry study and analyze a particular topic, often inviting software vendors to demonstrate and discuss their capabilities with them.

Their activities and reports can be found on the A&D PLM Action page here. In the remainder of this post, I will briefly share the ones presented. For a real deep dive into the topics, I recommend finding the proceedings per topic on the A&D action page.

The Promise and Reality of the Digital Thread

James Roche from CIMdata presented insights from industry research on The Promise and Reality of the Digital Thread. A total of 90 people completed an in-depth survey about the status and implementation of digital thread concepts in their company. It is clear that the digital thread is still in its early days in this industry, and it is mainly about the coordinated digital thread. The image below reflects the highlights of the survey.

A&D Industry Digital Twin and Digital Thread Standards

Robert Rencher from Boeing explained the progress of their Digital Twin/Digital Thread project, where they had investigated the applicable standards to support a Digital Twin/Digital Thread (Phase 4 out of 7 currently planned). The image below shows that various standards may apply depending on business perspectives.

Their current findings are:

- Digital twin standards overlap, which is most likely a function of standards bodies representing their respective standards as an ongoing development from a historical perspective.

- The limited availability of mature digital twin/thread standards requires greater attention by standards organizations.

- The concept of the digital twin continues to evolve. This dynamic will be a challenge to standards bodies.

- The digital twin and the digital thread are distinct aspects of digital transformation. The corresponding digital twin and digital thread standards will be distinctly different.

- Coordinating the development of the respective standards between the digital twin/thread is needed.

- The digital twin’s organization, definition, and enablement depend on data and information provided by the digital thread.

Roadmap for Enabling Global Collaboration

Robert Gutwein (Pratt & Whitney Canada) and Agnes Gourillon-Jandot (Safran Aircraft Engines) reported their progress on the Global Collaboration project. Collaboration is challenged as exchange methods can vary, as well as dealing with the validation of exchanged information and governing the exchange of information in the context of IP protection.

One of the focal points was to introduce an approach to define standardized supplier agreements that anticipate modern model-based exchanges and collaboration methods.

Robert & Agnes presented the 8-step guideline for the aerospace industry in specific terms, explicitly mentioning the ISO 44001 standard as being generic for all industries. An impression of the eight steps and sub-steps can be found below:

The 8-step approach will be supported by a 3rd-party Collaboration Management System (CMS app), which is not mandatory but recommended for use. When an interaction depends on a specific tool, it cannot become an ISO standard. The purpose of the methodology and app is to assist participants in ensuring that the collaboration between stakeholders contains all the necessary steps and people.

Model-based OEM/Supplier Collaboration Needs in Aviation Industry

Hartmut Hintze, working at Airbus Operations, presented the latest findings of the MBSE Data Interoperability working group and presented the model-based OEM/Supplier collaboration requirements and standards that need to be supported by the PLM/MBSE solution providers in the future. This collaboration goes beyond sharing CAD models, as you can see from the supplier engagement framework below:

As there are no standards-based tools, their first focus was looking into methodologies for model and behavior exchanges based on use cases. The use cases are then used to verify the state-of-the-art abilities of the various tools. At this moment, there is a focus on SysML V2 as a potential game-changer due to its new API support. As a relative novice on SysML, I cannot explain this topic in simpler words. I recommend that experts visit their presentations on the AD PAG publications page here.

Conclusions

The theme of the conference was related to the Digital Thread – and as you will discover, it is valid for everyone. Learn to see the difference between the coordinated Digital Thread and the connected Digital Thread. This time, there was a lot of information about the Aerospace and Defense Action Groups (AD PAG), which are a fundamental part of this conference. The A&D industry has always been leading in advanced PLM concepts. However, more advanced concepts will come in my next post when touching the connected Digital Thread in the context of federated PLM, and let’s not forget AI.

During May and June, I wrote a guest chapter for the next edition of John Stark’s book Product Lifecycle Management (Volume 2): The Devil is in the Details.

The book is considered a standard in the academic world when studying aspects of PLM.

Looking into the table of contents through the above link shows that the full scope of PLM is broad. I wrote about it recently: PLM is Complex (and we have to accept it?), and Roger Tempest and others are still fighting to get the job of PLM Professional recognized: Associate Yourself With Professional PLM.

To make the scope broader, John invited me to write a chapter about PLM and Sustainability, which is a current topic in many organizations. As sustainability is my dedicated topic in the PLM Global Green Alliance (PGGA) core team, I was happy to accept this challenge.

This activity is challenging because a chapter on a current topic might soon be outdated. For the same reason, I never wanted to write a PLM book, as I wrote in my 2014 post: Did you notice PLM is changing?

The book, with the additional chapter, will be available later this year. I want to share with you in this post the topics I addressed in this chapter. Perhaps relevant for your organization or personal interests. Also, I am looking forward to learning if I missed any topics.

Introduction

The chapter starts with defining the context. PLM is considered a strategy supported by a connected IT infrastructure, and for the definition of sustainability, I refer to the relevant SDGs as described on our PGGA theme page: PLM and Sustainability.

Next, I discuss two major concepts inseparably connected with sustainability.

The Circular Economy

On a planet with limited resources and still a growing consumption of raw materials, we need to follow the concepts of the circular economy in our businesses and lives. The circular economy section addresses mainly the hardware side of the butterfly diagram as, here, PLM practices have the most significant impact.

The circular economy requires collaboration among various stakeholders, including businesses, governments and consumers. It involves rethinking production processes and establishing new consumption patterns. Policies and regulations will push for circular economy patterns, as seen in the following paragraphs.

Systems Thinking

A significant change in bringing products to the market will be the need to change how we look at our development processes. Historically, many of these processes were linear and only focused on time to market, cost and quality. Now, we have to look into other dimensions, like environmental impact, usage and impact on the planet. As I wrote in the past: Systems Thinking – a must-have skill in the 21st century?

Systems Thinking is a cognitive approach that emphasizes understanding complex problems by considering interconnections, feedback loops, and emergent properties. It provides a holistic perspective and explores multiple viewpoints.

Systems Thinking guides problem-solving and decision-making and requires you to treat a solution with a mindset of a system interacting with other systems.

Regulations

More sustainable products and services will be driven primarily by existing and upcoming regulations. In this section, I refer to the success of the CFC (chlorofluorocarbon) emission reduction, which is slowly fixing the hole in the ozone layer. Current regulations like WEEE, RoHS and REACH are already relevant for many companies, and compliance with these regulations is a good exercise for more stringent regulations related to carbon emissions and upcoming ones related to the Digital Product Passport.

Making regulatory compliance a part of the concept phase ensures no late changes are needed to become compliant, saving time and costs. In addition, making regulatory compliance as much as possible with a data-driven approach reduces the overhead required to prove regulatory compliance. Both topics are part of a PLM strategy.

In this context, see Lionel Grealou’s article 5 Brand Value Benefits at the Intersection of Sustainability and Product Compliance. The article has also been shared in our PGGA LinkedIn group.

Business

On the business side, the Greenhouse Gas Protocol is explained: how companies will have to report their Scope 1 and Scope 2 emissions and, ultimately, Scope 3 – see the image below for the details.

GHG reporting will support companies, investors and consumers to decide where to prioritize and put their money.
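As a rough illustration of what scope-based reporting means on the data side, here is a minimal Python sketch that groups emission entries by GHG Protocol scope and totals them. The scope descriptions follow the general GHG Protocol definitions; the entry format, the source names and all numbers are invented placeholders, not data from the chapter or from any company.

```python
from collections import defaultdict
from enum import Enum


class Scope(Enum):
    """The three GHG Protocol scopes, described in general terms."""
    SCOPE_1 = "direct emissions from owned or controlled sources"
    SCOPE_2 = "indirect emissions from purchased energy"
    SCOPE_3 = "other indirect emissions in the value chain"


def totals_by_scope(entries):
    """Sum emissions (in tonnes CO2e) per scope.

    Each entry is a (scope, source, tonnes_co2e) tuple; this tuple
    layout is a hypothetical choice made for the sketch.
    """
    totals = defaultdict(float)
    for scope, _source, tonnes_co2e in entries:
        totals[scope] += tonnes_co2e
    return dict(totals)


# Invented placeholder figures, for illustration only.
entries = [
    (Scope.SCOPE_1, "company vehicles", 120.0),
    (Scope.SCOPE_2, "purchased electricity", 300.0),
    (Scope.SCOPE_3, "purchased components", 900.0),
    (Scope.SCOPE_3, "product use in the field", 680.0),
]

totals = totals_by_scope(entries)
for scope in Scope:
    print(f"{scope.name}: {totals.get(scope, 0.0):.1f} t CO2e ({scope.value})")
```

The hard part in reality is not the summation but collecting reliable Scope 3 data from suppliers and from products in the field, which is where a connected PLM infrastructure becomes relevant.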

Ultimately, companies have to be profitable to survive in their business. The ESG framework is relevant in this context as it will allow investors to put their money not only based on short-term gains (as expected) but also on Environmental or Social parameters. There are a lot of discussions related to the ESG framework, as you might have read in Vincent de la Mar’s monthly newsletter, Sustainability & ESG Insights, which is also published in our PGGA group – a link below.

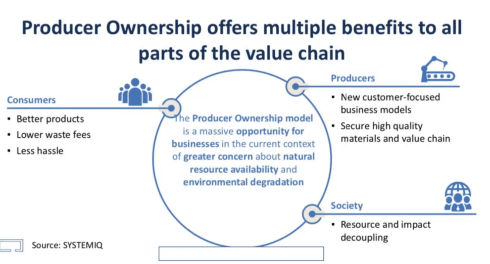

Besides ESG guidelines, there is also the drive by governments and consumers to push for a Product as a Service economy. Instead of owning products, consumers would pay for the usage of these products.

The concept is not new when considering lease cars, EV scooters, or streaming services like Spotify and Netflix. In the CIMdata PLM Roadmap/PDT Fall 2021 conference, we heard Kenn Webster explaining: In the future, you will own nothing & you will be happy.

Changing the business to a Product as a Service is not something done overnight. It requires repairable, upgradeable products. On the business side, it requires a connected ecosystem of all stakeholders – the manufacturer, the finance company, and the operating entities.

Digital Transformation

All the subjects discussed before require real-time reporting and analysis combined with data access to compliance-related databases. More in the section related to Life Cycle Assessment. As I discussed last year in several conferences, a sustainability initiative starts with data-driven and model-based approaches during the concept phase, but also when manufacturing and operating (connected) products in the field. You can read the entire story here: Sustainability and Data-Driven PLM – the Perfect Storm.

Life Cycle Analysis

Special attention is given in this chapter to Life Cycle Analysis, which seems to be a popular topic among PLM vendors. Here, they can provide tools to make a lifecycle assessment, and you can read an impression of these tools in a guest blog from Roger L. Franz titled PLM Tools to Design for Sustainability – PLM Green Global Alliance.

However, Life Cycle Analysis is not that simple. Looking at the ISO 14040 framework, having the right goals and scope in mind allows you to do an LCA where the Product Category Rules (PCRs) will enable companies to compare their products with others.

PCRs include the description of the product category, the goal of the LCA, functional units, system boundaries, cut-off criteria, allocation rules, impact categories, information on the use phase, units, calculation procedures, requirements for data quality, and other information on the lifecycle Inventory Phase.

So be aware there is more to do than installing a tool.

Digital Twin

This section describes the importance of implementing a digital twin for the design phase, allowing companies to develop, test and analyze their products and services first virtually. Trade-off studies on virtual products are much cheaper, and when they are done in a data-driven, model-based environment, it will be the most efficient environment. In my terminology, setting up such a collaboration environment might be considered a System of Engagement.

The second crucial digital twin mentioned is the digital twin from a product in operation where performance can be monitored and usage can be optimized for a minimal environmental impact. Suppose a company is able to create a feedback loop between its products in the field and its product innovation platform. In that case, it can benchmark its design models and update the product behavior for better performance.
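A minimal sketch of such a feedback loop, assuming the design model and the product in the field report the same named parameters: it compares predicted values with measured ones and flags the parameters that deviate more than a chosen tolerance. The function name, parameter names, values and the 10% tolerance are all hypothetical.

```python
def benchmark_design_model(predicted, measured, tolerance=0.10):
    """Return the relative deviation of every parameter that is off by more than `tolerance`."""
    deviations = {}
    for name, expected in predicted.items():
        # Skip parameters without field data or with a zero prediction (no relative deviation).
        if name not in measured or expected == 0:
            continue
        relative = (measured[name] - expected) / expected
        if abs(relative) > tolerance:
            deviations[name] = round(relative, 3)
    return deviations


# Illustrative values only: design-model predictions versus field measurements.
predicted = {"energy_kwh_per_cycle": 2.0, "cycle_time_s": 40.0}
measured = {"energy_kwh_per_cycle": 2.5, "cycle_time_s": 41.0}
flagged = benchmark_design_model(predicted, measured)
```

The flagged parameters are the candidates for updating the design model or the product behavior.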

The manufacturing digital twin is also discussed in the context of environmental impact, as choosing the right processes and resources can significantly affect scope 3 emissions.

The chapter finishes with the story of a fictitious company, WePack, where we can follow the impact and implementations of the topics described in this chapter.

Conclusion

As I described in the introduction, the topic of PLM and Sustainability is relatively new and constantly evolving. What do you think? Did I miss any dimensions?

Feel free to contribute to our PLM Global Green Alliance LinkedIn group.

Imagine you are a supplier working for several customers, such as big OEMs or smaller companies. In Dec 2020, I wrote about PLM and the Supply Chain because it was an underexposed topic in many companies. Suppliers need their own PLM and IP protection and need to work as efficiently as possible with their customers, often the OEMs.

Most PLM implementations start by creating the ideal internal collaboration between functions in the enterprise, historically starting with R&D and Engineering and next expanding to Manufacturing, Services and Marketing, most of the time in this logical order.

In these implementations, people are not paying much attention to the total value chain, customers and suppliers. And that was one of the interesting findings at that time, supported by surveys from Gartner and McKinsey:

- Gartner: Companies reported improvements in the accuracy of product data and product development as the main benefit of their PLM implementation. They did not see so much of a reduced time to market or reduced product development costs. After analysis, Gartner believes the real issue is related to collaboration processes and supply chain practices. Here the lead times did not change, nor did the number of changes.

- McKinsey: In their article, The Case for Digital Reinvention, digital supply chains were mentioned as the area with the potential highest ROI; however, as the image shows below, it was the area with the lowest investment at that time.

In 2020 we were in the middle of broken supply chains and wishful thinking related to digital transformation, all due to COVID-19.

Meanwhile, the further digitization in PLM (systems of engagement) and the new topic, Sustainability of the supply chain, became visible.

Therefore it is time to take stock again, also driven by discussions in the past few weeks.

The old “connected” approach (lose-lose)

A preferred way for OEMs in the past was to have the Supplier or partner directly work in their PLM environment. The OEM could keep control of the product development process and the incremental maturity of the BOM, where the Supplier could connect their part data and designs to the OEM environment.

The advantage for the OEM is clear – direct visibility of the supplier data when available. The benefit for the Supplier could also be immediate visibility of the broader context of the part they are responsible for.

However, the disadvantages for a supplier are more significant. Working in the OEM environment exposes all your IP and hinders knowledge capitalization from the Supplier. Not a big thing for perhaps a tier 3 supplier; however, the more advanced the products from the Supplier are, the higher the need to have its own PLM environment.

Therefore the old connected approach is a lose-lose relationship, in particular for the Supplier and even for the OEM (having less knowledgeable suppliers).

The modern “connected” approach (wins t.b.d.)

In this situation, the target infrastructure is a digital infrastructure, where datasets are connected in real-time, providing the various stakeholders in engagement access to a filtered set of data relevant to their roles.
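To make the idea of a filtered, role-specific view concrete, here is a minimal sketch. The roles, the attribute names and the visibility mapping are hypothetical; a real environment would take them from its own access model.

```python
# Hypothetical mapping: which attributes of a part each role is allowed to see.
VISIBLE_FIELDS = {
    "oem_engineer": {"part_number", "description", "mass_kg", "interface_spec"},
    "supplier": {"part_number", "description", "interface_spec"},
    "purchasing": {"part_number", "description", "unit_cost"},
}


def view_for(role, records):
    """Return the records reduced to the attributes visible to `role`."""
    allowed = VISIBLE_FIELDS.get(role, set())  # unknown roles see nothing
    return [{key: value for key, value in record.items() if key in allowed}
            for record in records]


# One shared dataset; every stakeholder gets a different slice of it.
parts = [{
    "part_number": "P-100",
    "description": "Bracket",
    "mass_kg": 0.4,
    "unit_cost": 3.2,
    "interface_spec": "IF-7",
}]
supplier_view = view_for("supplier", parts)
```

The point of the sketch is that there is a single connected dataset and no copies: the supplier sees the interface specification but not the cost, while purchasing sees the cost but not the engineering details.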

In my terminology, I refer to them as Systems of Engagement, as the target is that all stakeholders work in this environment.

The counterpart of Systems of Engagement is the Systems of Record, which provides a product baseline, manufacturing baseline, and configuration baseline of information consumed by other disciplines.

These baselines are often called Bills of Information, and the traditional PLM system has been designed as a System of Record. Major Bills of Information are the eBOM, the mBOM and sometimes people talk about the sBOM (service BOM).

Typical examples of Systems of Engagement I have seen in alphabetical order are:

- Arena Solutions has a long-term experience in BOM collaboration between engineering teams, suppliers and contract manufacturers.

- CATENA-X might be a strange player in this list, as CATENA-X is more a German Automotive consortium targeting digital collaboration between stakeholders, ensuring security and IP protection.

- Colab is a provider of cloud-based collaboration software allowing design teams and suppliers to work in real time together.

- OnShape – a cloud-based collaborative product design environment for dispersed engineering teams and partners.

- OpenBOM – a SaaS solution focusing on BOM collaboration connected to various CAD systems along with design teams and their connected suppliers.

These are some of the Systems of Engagement I am aware of. They focus on specific value streams that can improve the targeted time to market and product introduction efficiency. In companies with no extensive additional PLM infrastructure, they can become crucial systems of engagement.

The main challenge for these systems of engagement is how they will connect to traditional Systems of Record – the classical PLM systems that we know in the market (Aras, Dassault, PTC, Siemens).

Image on the left from a presentation done by Eric Herzog from SAAB at last year’s CIMdata/PDT conference.

You can read more about this here.

When a mix of Systems of Engagement and Systems of Record is digitally connected in your organization, we will see overall benefits. My earlier thoughts, in general, are here: Time to split PLM?

The almost Connected approach

As I mentioned, in most companies, it is already challenging to manage their internal System of Record, which is needed for current operations and the traceability of information. In addition, most of the data stored in these systems is document-driven, not designed for real-time collaboration. So how would these companies collaborate with their suppliers?

The Model-Based Enterprise

In the bigger image below, I am referring to an image published by Jennifer Herron from her book Re-use Your CAD, where she describes the various stages of interaction between engineering, manufacturing and the extended enterprise.

Her mission is to promote and educate organizations in moving to a Model-Based Definition and, in the long term, to a Model-Based Enterprise.

The ultimate target of information exchange in this diagram is that the OEM and the Supplier are separate entities. However, they can exchange Digital Product Definition Packages and TDPs over the web (electronically). In this exchange, we have a mix of systems of engagement and systems of record on the OEM and Supplier sides.

Depending on the type of industry, in my ecosystem of companies, many suppliers are still at level 2, dreaming or pushed to become level 3, illustrating there is a difficult job to do – learning new practices. And why would you move to the next level?

Every step can have significant benefits, as reported by companies that did this.

So what’s stopping your company from moving ahead? People, Processes, Skills, Work Pressure? It is one of the most common excuses: “We are too busy, no time to improve”.

A supply chain collaboration hub

On March 21, I discussed with Magnus Färneland from Eurostep their cloud-based PLM collaboration hub, ShareAspace. You can read the interview here: PLM and Supply Chain Collaboration

I believe this concept can be compelling for a connected enterprise. The OEM and the Supplier share (or connect) only the data they want to share, preferably based on the PLCS data schema (ISO 10303-239).

In a primitive approach, this can be BOM structures with related files; however, it could become a real model-based connection hub in the advanced mode.

Now you ask yourself why this solution is not booming.

In my opinion, there are several points to consider:

- Who designs, operates and maintains the collaboration hub? It is likely not the suppliers, and when the OEM takes ownership, they might believe there is no need for the extra hub; just use the existing PLM infrastructure.

- Could a third party find a niche market for this? Eurostep has already been working on this for many years, but adoption of the concept seems higher in the BIM or Asset Management domains. Here the owner/operator sees the importance of a collaboration hub.

A final remark: we are still far from a connected enterprise; concepts like Catena-X and others need to become mature to serve as a foundation. There is a lot of technology out there – now we need the skilled people and tested practices to use the right technology and tune solution concepts.

Sustainability demands a connected enterprise.

I focused on the Supplier dilemma this time because it is one of the crucial aspects of a circular economy and sustainable product development.

Only by using virtual models of the To-Be products/systems can we seriously optimize them. Virtual models and Digital Twins do not run on documents; they require accurate data from anywhere connected.

You can read more details in my post earlier this year: MBSE and Sustainability or look at the PLM and Sustainability recording on our PLM Global Green Alliance YouTube channel.

Conclusion

Due to various discussions I recently had in the field, it became clear that the topic of supplier integration in a best-connected manner is one of the most important topics to address in the near future. We can no longer focus on our company as an isolated entity – value streams implemented in a connected manner become a must.

And now I am going to enjoy Liveworx in Boston, learning, discussing and understanding more about what PTC is doing and planning in the context of digital transformation and sustainability. More about that in my next post: The week(end) after Liveworx 2023 (to come)

I am writing this post because one of my PLM peers recently asked me this question: “Is the BOM losing its position?” He was in discussion with another colleague who told him:

“If you own the BOM, you own the Product Lifecycle”.

This statement made me think of a recent post from Jan Bosch: Product Development fallacy #8: the bill of materials has the highest priority.

Software becomes increasingly an essential part of the final product, and combined with Jan’s expertise in software development, he wrote this article. I recommend reading the full post (4 min read) and next browse through the comments.

If you cannot afford these 10 minutes, here is my favorite quote from the article:

An excessive focus on the bill of materials leads to significant challenges for companies that are undergoing a digital transformation and adopting continuous value delivery. The lack of headroom, high coupling and versioning hell may easily cause an explosion of R&D expenditure over time.

Where did the BOM focus come from? A historical overview related to the rise (and fall) of the BOM.

In the beginning, there was the drawing.

Before the era of computers, there was “THE drawing”, describing assemblies, subassemblies or parts. And on the drawing, you can find the parts list if relevant. This parts list was the first Bill of Material, describing the parts/materials shown on the drawing.

Next came MRP/ERP

The introduction of the MRP system (Material Requirements Planning) was the first step in which, by using computers, people could collect the material requirements for one system as data and process them.

Entering new materials/parts described on drawings was still a manual process, as well as referring to existing parts on the drawing. Reuse of parts was a manual process based on individual knowledge.

In the nineties, MRP evolved into ERP (Enterprise Resource Planning), which included the MRP part and added resource and manufacturing planning and financial reporting.

The ERP system became the most significant IT system, the execution system of the company. As it was the first enterprise system implemented, it was the first moment we learned about implementation challenges – people change and budget overruns. However, as the ERP system brought visibility to the company’s execution, it became a “must-have” system for management.

The introduction of mainstream 2D CAD did not affect the company’s culture so much. Drawings became electronic drawings, and the methodology of the parts list on the drawing remained.

Sometimes the interaction with the MRP/ERP system was enhanced by an interface – sending the drawing BOM to ERP. The advantage of the interface: no manual transfer of data reducing typos and BOM errors. The disadvantages at that time: relatively expensive (connectivity between systems was a challenge) and mostly one direction.

And then there was PDM.

In parallel with the introduction of ERP systems, mainstream 3D CAD systems became affordable, particularly SolidWorks, Solid Edge and Inventor. These 3D CAD systems allow sharing of parts and assemblies in different products, and the PDM database was the first aid to support part reuse, versioning and standardization.

By extracting the parts from the assemblies and subassemblies, it was possible to generate a BOM structure in the PDM system to be transferred or typed into the ERP system. We did not talk about EBOM or MBOM then, as there was only one BOM in the ERP system, and the PDM system was a tool to feed the ERP system.
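The extraction step can be sketched as a walk over the assembly tree that multiplies quantities on the way down and sums them per part. This is only an illustration with an invented product structure and field names; it does not represent how any specific PDM system does it.

```python
from collections import Counter


def flatten_bom(assembly, multiplier=1, totals=None):
    """Walk a nested assembly and return the total quantity per leaf part."""
    if totals is None:
        totals = Counter()
    for child in assembly.get("children", []):
        quantity = multiplier * child.get("qty", 1)
        if child.get("children"):
            # Subassembly: descend, carrying the accumulated quantity.
            flatten_bom(child, quantity, totals)
        else:
            # Leaf part: add it to the parts list.
            totals[child["name"]] += quantity
    return totals


# Hypothetical CAD structure: a cart with a frame, four wheel assemblies and loose bolts.
product = {
    "name": "cart",
    "children": [
        {"name": "frame", "qty": 1},
        {"name": "wheel_assembly", "qty": 4, "children": [
            {"name": "wheel", "qty": 1},
            {"name": "bolt", "qty": 5},
        ]},
        {"name": "bolt", "qty": 8},
    ],
}
bom = flatten_bom(product)
```

The same bolt used in several places ends up as one line with a summed quantity, which is the kind of list that was transferred or typed into the ERP system.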

Many companies still base their processes on this approach. ERP (read SAP nowadays) is the central execution system, and PDM is an external system. You might remember the story and image from my previous post about people, processes and tools. The bad practice example: Asking the ERP system to provide a part number when starting to design a part.

And then products started to change.

In the early 2000s, I worked with SmarTeam to define the E&E (Electronics and Electrical) template. One of the new concepts was to synchronize all design data coming from different disciplines to a single BOM structure.

It was the time we started to talk about the EBOM: a type of BOM, based on parts, that serves as the structure to consolidate all the design data.

Most of the time, the EBOM reflects the design intent in logical groups. Sending the relevant parts in the correct order to the ERP system was a favorite, expensive customization for service providers: how do you transfer an engineering BOM view to an ERP system that only understands the manufacturing view?

Note: not all ERP systems have the data model to differentiate between engineering parts and manufacturing parts.

The image below illustrates the challenge and the customer’s perception.

The automated link between the design side (EBOM) and manufacturing side (MBOM) was a mission impossible – too many exceptions for the (spaghetti) code.

And then came the MBOM.

The identified issues connecting PDM and ERP led to the concept of implementing the MBOM in the PLM system. The MBOM in PLM is one of the characteristics of a PLM implementation compared to a PDM implementation. In a traditional PLM system, there is an interaction and connection between the EBOM and MBOM. EBOM parts should end up as MBOM parts. This interaction can be supported by automation; however, as both structures are in the same system, manual changes remain possible.
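The rule that EBOM parts should end up as MBOM parts can be checked with a simple reconciliation. The sketch below assumes both structures are reduced to lists of part numbers; the part numbers and the function name are made up for illustration.

```python
def reconcile(ebom_parts, mbom_parts):
    """Compare the part numbers of an EBOM and an MBOM."""
    ebom, mbom = set(ebom_parts), set(mbom_parts)
    return {
        # Engineering parts that have not been consumed in the MBOM yet.
        "missing_in_mbom": sorted(ebom - mbom),
        # Parts that exist only on the manufacturing side.
        "manufacturing_only": sorted(mbom - ebom),
    }


ebom_parts = ["P-100", "P-200", "P-300"]
mbom_parts = ["P-100", "P-300", "M-900"]  # M-900: hypothetical manufacturing-only item
result = reconcile(ebom_parts, mbom_parts)
```

A report like this can support the automation, while the decision of what to do with each difference stays a manual one.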

The MBOM structure in PLM could then be the information structure to transfer to the ERP system; however, there is more, as Jörg W. Fischer wrote in his provocative post, Die MBOM muss weg (The MBOM must go). He rightly points out (in German) that the MBOM is not a structure on its own but a combination of different views based on Assembly Drawings, Process Planning and Material Requirements.

His conclusion:

Calling these structures MBOM is trying to squeeze all three structures into one. That usually doesn’t work and then leads to much more emotional discussions in the project. It also costs a lot of money. It is, therefore, better not to use the term MBOM at all.

And indeed, just having an MBOM in your PLM system might help you to prepare some of the manufacturing steps, the needed resources and parts. The MBOM result still has to be localized at the local plant where the manufacturing takes place. And here, the systems used are the ERP system and the MES system.

The main advantage of having the MBOM in the PLM system is the direct relation between specification and manufacturing intent, allowing manufacturing engineering to work collaboratively with engineering in the same environment.

- The first benefit is fewer iterations and a shorter time to production, thanks to early interaction and manufacturing involvement in the engineering process.

- The second benefit is: product knowledge is centralized in a single system. Consolidating your Product Knowledge in ERP does not make sense due to global localization and the missing capabilities to manage the iterative engineering processes on non-existing parts.

And then came the SBOM, the xBOM

Traditional PLM vendors and implementations kept using xBOM structures as placeholders for related specification data (mechanical designs, electrical, software deliverables, serialized products). Most of the time, related files.

And with this approach, talking about digital thread, PLM systems also touch on the concepts of Configuration Management.

I will not go into the details here but look at the two images by clicking on them and see a similar mindset.

It is about the traceability of information in structures and systems. These structures work well in a relatively static and linear product development and delivery environment, as illustrated below:

Engineering change and release processes are based on managing the changes in different structures from the left to the right.

And then came software!

Modern connected products are no longer mechanical products. The product’s functionality no longer depends on the mechanical properties but mainly on embedded electronics and software used. For example, look at the mechanical design of a telecom transmission tower – its behavior mainly comes from non-mechanical components, and they can change over time. Still, the Bill of Material contains a lot of concrete and steel parts.

The ultimate example is comparing a Tesla (software on wheels) with a traditional car. For modern connected products, electronics and software need to be part of the solution. Software and electronics allow the product to be upgraded over time. Managing these products in the same manner as mechanical products is impossible, inefficient and therefore threatening your company’s future business.

I requote Jan Bosch:

An excessive focus on the bill of materials leads to significant challenges for companies that are undergoing a digital transformation and adopting continuous value delivery. The lack of headroom, high coupling and versioning hell may easily cause an explosion of R&D expenditure over time.

The model-based, connected enterprise

I will not solve the puzzle of the future in this post. You can read my observations in my series: The road to model-based and connected PLM. We need a new infrastructure with at least two modes. One that still serves as a System of Record, storing information in a traditional manner, like a Bill of Materials for the static parts, as not everyone and everything can be connected.

In addition, we need various Systems of Engagement that enable close to real-time interaction between products (systems) and relevant stakeholders for the engagement scope (multidisciplinary / consumers).

Digital twins are examples of such environments. Currently, these Systems of Engagement often work disconnected from the System of Record due to the lack of understanding of how to connect. (standard connectors? / OSLC?)

Our mission is to explore, as I wrote in my post Time to split PLM and drop our mechanical mindset.

And while I was finalizing this post, I read a motivating post from Jan Bosch again for all of you working on understanding and pushing the digital transformation in your eco-system.

The title: Be the protagonist of your life: 15 rules. A starting point for more to come.

Conclusion

The BOM is no longer the master of the product lifecycle when it comes to managing connected products, where functionality mainly depends on software. BOM structures with related documents are just one of the extracted baselines from a data-driven, connected enterprise. This traditional PLM infrastructure requires other, non-BOM-driven structures to represent the actual status of a virtual or physical product.

The BOM is not dead, but there is more ………

Your thoughts?

In this post, I want to explain why Model-Based Systems Engineering (MBSE) and Sustainability are closely connected. I would claim sustainability in our PLM domain will depend on MBSE.

Can we achieve Sustainability without MBSE? Yes, but it will be costly and slow. And as all businesses want to be efficient and agile, they should consider MBSE.

What is MBSE?

The abbreviation MBSE stands for Model-Based Systems Engineering, a specialized manner to perform Systems Engineering. Look at the Wikipedia definition in short:

MBSE is a technical approach to systems engineering that focuses on creating and exploiting domain models as the primary means of information exchange rather than on document-based information exchange.

Model-Based fits in the digital transformation scope of PLM – from a document-based approach to a data-driven, model-based one. In 2018, I focused on facets of the model-based enterprise and related to MBSE in this post: Model-Based: System Engineering (MBSE).

My conclusion in that post was:

Model-Based Systems Engineering might have been considered as a discipline for the automotive and aerospace industry only. As products become more and more complex, thanks to IoT-based applications and software, companies should consider evaluating the value of model-based systems engineering for their products/systems.

I drew this conclusion before I focused on sustainability and systems thinking. Implementing sustainability concepts, like the Circular Economy, requires more complex engineering efforts, justifying a Model-Based Systems Engineering approach. Let’s have a look.

If you want to learn more about why we need MBSE, look at this excellent keynote lecture from Zhang Xin Guo at the INCOSE 2018 conference below:

The Mission / the stakeholders

A company might deliver products to the market with the best price/quality ratio and regulatory compliance, perceived and checked by the market. This approach is purely focusing on economic parameters.

There is no need for a systems engineering approach as the complexity is manageable. The mission is more linear, a “job to do,” and a limited number of stakeholders are involved in this process.

… with sustainability

Once we start to include sustainability in our product’s mission, we need a systems engineering approach, as several factors will push for different considerations. The most obvious considerations are the choice of materials and the optimization of the production process (reducing carbon emissions).

However, the repairability/serviceability of the product should be considered with a more extended lifetime vision.

What about upgradeability and reusing components? Will the customer pay for these extra sustainable benefits?

Probably Yes, when your customer has a long-term vision, as the overall lifecycle costs of the product will be lower.

Probably No if one of your competitors delivers non-sustainable products much cheaper.

As long as regulations do not hurt traditional business models, there might be no significant change.

However, the change has already started. Higher energy prices will impact the production of specific resources and raise costs. In addition, energy-intensive manufacturing processes will lead to more expensive materials. Combined with rising carbon taxes, this will be a significant driver for companies to reconsider their product offering and manufacturing processes.

The more expensive it becomes to create new products, the more attractive repairable and upgradable products will become. And this brings us to the concept of the circular economy, which is one of the pillars of sustainability.

In short, looking at the diagram – the vertical flow of renewables and finite materials, from part to product to product in service, ultimately leads to wasted resources if there are no feedback loops. This is the traditional product delivery process that most companies are using.

The renewable loop on the left side of the diagram covers the use of renewables during production and during the use of the product. The more we use renewables instead of fossil fuels, the more sustainable this loop will be. This is the area where engineers should use simulations to find the optimal manufacturing processes and product behavior.

The right side of the diagram, related to the materials, is where we see the options for repairable, serviceable, and upgradeable products, and even further refurbishment and recycling to avoid leakage of precious materials. This is where mechanical engineers should dominate the activities, focusing on each of the loops and how to enable them in the product.

Looking at the circular economy diagram, it is clear that we are no longer talking about a linear process – it has become the implementation of a system. Systems Engineering or MBSE?

The benefits of MBSE

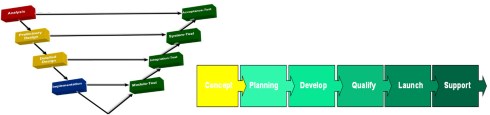

Developing products with the circular economy in mind is no longer a “job to do,” a simple linear exercise. Instead, if we walk down the systems engineering V-shape, there are a lot of modeling exercises to perform before we reach the final solution.

To illustrate the benefits of MBSE, let’s walk through the following scenario.

A well-known company sells lighting projects for stadiums and public infrastructure. Their current business model is based on reliable lighting equipment with a competitive price and range of products.

Most of the time, their contracts have clauses about performance/cost and maintenance. The company sells the products when it wins the deal and delivers spare parts when needed.

Their current product design is quite linear – without systems engineering.

Now this company has decided to change its business model towards Product as a Service, or in their terminology LaaS (Lighting as a Service). For a certain amount per month, they will provide lighting to their customers, such as a stadium, a city, or a road infrastructure.

To implement this business model, this is how they used a Model-Based Systems Engineering approach.

Modeling the Mission

Before even delivering any products, the process starts with describing and analyzing the business model needed for Lighting as a Service.

Then, starting from modeled estimates of the material costs, there are exercises to assess the resources required to maintain the service, the potential market, and the possible price range.

It is the first step of using a model to define the mission of the service. After that, the model can be updated, adjusted, and used for a better go-to-market approach when the solution becomes more mature.
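As an illustration only, such a mission model could start as a few lines of Python. This is a minimal sketch, not the company's actual model: the `ServiceModel` class, its fields, and all figures are hypothetical, and it assumes the provider carries hardware, maintenance, and energy costs for the whole contract.

```python
from dataclasses import dataclass


@dataclass
class ServiceModel:
    """Hypothetical mission-model inputs; every figure is illustrative."""
    hardware_cost: float       # upfront material and installation cost per site
    yearly_maintenance: float  # expected service cost per site per year
    yearly_energy: float       # expected energy cost per site per year
    contract_years: int        # duration of the service contract
    target_margin: float       # desired margin as a fraction of the price

    def total_cost(self) -> float:
        """Cost carried by the provider over the whole contract."""
        running = (self.yearly_maintenance + self.yearly_energy) * self.contract_years
        return self.hardware_cost + running

    def monthly_fee(self) -> float:
        """Fee per month so that (price - cost) / price equals the target margin."""
        price = self.total_cost() / (1.0 - self.target_margin)
        return price / (self.contract_years * 12)


# Assumed comparison: a serviceable design costs more upfront but less to maintain.
baseline = ServiceModel(120_000, 4_000, 9_000, 10, 0.20)
serviceable = ServiceModel(135_000, 2_000, 9_000, 10, 0.20)

for label, model in [("baseline", baseline), ("serviceable", serviceable)]:
    print(f"{label}: total cost {model.total_cost():.0f}, monthly fee {model.monthly_fee():.2f}")
```

With these assumed numbers, the serviceable variant ends up with the lower monthly fee despite its higher hardware cost. The value of keeping this as a model is that the estimates can be replaced by better data as the solution matures.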

Part of the business modeling is also the intention to deliver serviceable and upgradeable products. As the company now owns the entire lifecycle, this is the cheapest way to guarantee a continuous or improved service over time.

Modeling the Functions

Providing Lighting as a Service also means you must be in touch with your installations in real time. Power consumption needs to be measured and analyzed in real-time for (predictive) maintenance, and the light-providing service should be as cheap as possible during operation.

Therefore, LED technology is the most reliable choice, and connectivity functions need to be implemented in the solution. The functional design ensures installation, maintenance and service can be done in a connected manner (cheapest in operation – beneficial for the business).

Modeling the Logical components

As an owner of the solution, the design of the logical components of the lighting solution is also crucial. How to address various lighting demands efficiently? Modularity is one of the first topics to address. With modular components, it is possible to build customer-specific solutions with a reduced engineering effort. However, the work needs to be done by generically designing the solutions and focusing on the interfaces.

Such a design starts with a logical process and flow diagrams combined with behavior modeling. Without already having a physical definition, we can analyze the components’ behavior within an electrical scheme. Decisions on whether specific scenarios will be covered by hardware or software can be analyzed here. The company can define the lower-level requirements for the physical component by using virtual trade-offs on the logical models.

At this stage, we have used business modeling, functional modeling and logical modeling to understand our solution’s behavior.

Modeling the Physical product

The final stage of the solution design is to implement the logical components into a physical solution. The placement of components and interfaces between the components becomes essential. For the physical design, there are still a lot of sustainability requirements to verify:

- Repairability and serviceability – are the components reachable and replaceable? This reduces the lifecycle costs of the solution.

- Upgradeability – are there components that can behave differently due to software choices, or components that can be replaced by ones with improved functionality? This reduces the cost of creating entirely new solutions.

- Reusability and recyclability – are the materials used in the solution recyclable or reusable? This reduces the cost of new materials and the cost of dumping waste.

- RoHS/REACH compliance

The image below from Zhang Xin Guo’s presentation nicely demonstrates the iterative steps before reaching a physical product.

Before committing to a hardware implementation, the virtual product can be analyzed, behavior can be simulated, and its carbon impact can be calculated for the various potential variants.

The manufacturing process and energy usage during operation are also a part of the carbon impact calculation. The best performing virtual solution, including its simulation models, can be chosen for the realization to ensure the most environmentally friendly solution.
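To make the variant comparison concrete, here is a minimal sketch of such a carbon impact calculation. Everything in it is an assumption for illustration: the `Variant` fields, the grid emission factor, and the numbers are invented, and a real assessment would take its inputs from simulation results and material databases.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    """Hypothetical virtual product variant; all values are illustrative."""
    name: str
    material_kg_co2e: float       # embodied emissions of the materials
    manufacturing_kg_co2e: float  # emissions of the manufacturing process
    power_kw: float               # power draw during operation
    hours_per_year: float         # operating hours per year
    lifetime_years: float         # expected service life


GRID_KG_CO2E_PER_KWH = 0.3  # assumed emission factor of the electricity mix


def lifecycle_footprint(v: Variant, grid: float = GRID_KG_CO2E_PER_KWH) -> float:
    """Materials + manufacturing + energy used over the whole lifetime."""
    operation = v.power_kw * v.hours_per_year * v.lifetime_years * grid
    return v.material_kg_co2e + v.manufacturing_kg_co2e + operation


def footprint_per_year(v: Variant) -> float:
    """Normalize by lifetime so variants with different lifetimes are comparable."""
    return lifecycle_footprint(v) / v.lifetime_years


variants = [
    Variant("A: conventional fixture", 800, 300, 1.2, 2000, 10),
    Variant("B: efficient modular fixture", 1000, 350, 0.9, 2000, 15),
]

best = min(variants, key=footprint_per_year)
for v in variants:
    print(f"{v.name}: {footprint_per_year(v):.0f} kg CO2e per year of service")
print("Lowest yearly footprint:", best.name)
```

Normalizing per year of service is a design choice in this sketch: a longer-living, upgradeable variant may have a higher total footprint but still be the better option per year of delivered light.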

The digital twin for follow-up

Once the solution has been realized, the company still has a virtual model of the solution. By connecting the physical product’s observed and measured behavior, the virtual side’s modeling can be improved or used to identify improvement candidates – maintenance or upgrades. At this stage, the virtual twin is the actual twin of the physical solution. Without going deeper into the digital twin at this stage, I hope you also realize MBSE is a starting point for implementing digital twins serving sustainability outcomes.
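The idea of comparing observed behavior with the virtual model can be sketched in a few lines. This is a simplified illustration, not a digital twin implementation: the function name, the installation ids, the power values, and the 10% tolerance are all hypothetical.

```python
def improvement_candidates(predicted_w, measured_w, tolerance=0.10):
    """Return ids of installations whose measured power deviates from the
    model prediction by more than the given relative tolerance."""
    flagged = []
    for unit_id, predicted in predicted_w.items():
        measured = measured_w.get(unit_id)
        if measured is None:
            continue  # no measurement received for this installation
        deviation = abs(measured - predicted) / predicted
        if deviation > tolerance:
            flagged.append(unit_id)
    return sorted(flagged)


# Power per installation in watts: model prediction versus field measurement.
predicted = {"mast-01": 400.0, "mast-02": 400.0, "mast-03": 250.0}
measured = {"mast-01": 405.0, "mast-02": 468.0, "mast-03": 251.0}

print(improvement_candidates(predicted, measured))
```

In this example only `mast-02` exceeds the tolerance, which makes it a candidate for maintenance or a signal that the virtual model needs to be adjusted.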

The image below, published by Boeing, illustrates the power of the connected virtual and physical world and the various types of modeling that help to assess the optimal solution.

Conclusion

For sustainability, it all starts with the design. The design decisions for the product account for 80% of the carbon footprint of the solution. Afterward, optimization is possible within smaller margins. MBSE is the recommended approach to get a trustworthy understanding and follow-up of the product’s environmental impact.

What do you think: can we create sustainable products without MBSE?

A month ago, I wrote: It is time for BLM – PLM is not dead, which created an anticipated discussion. It is practically impossible to change a framed acronym. Like CRM and ERP, the term PLM is there to stay.

However, it was also interesting to see that people acknowledge that PLM should have a business scope and deserves a place at the board level.

The importance of PLM at business level is well illustrated by the discussion related to this LinkedIn post from Matthias Ahrens referring to the CIMdata roadmap conference CEO discussion.

My favorite quote:

“Now it’s ‘lifecycle management,’ not just EDM or PDM or whatever they call it. Lifecycle management is no longer just about coming up with new stuff. We’re seeing more excitement and passion in our customers, and I think this is why.”

But it is not that simple

This is a perfect message for PLM vendors to justify their broad portfolio. However, as they do not focus so much on new methodologies and organizational change, their messages remain at the marketing level.

In the field, there is more and more awareness that PLM has a dual role. Just when I planned to write a post on this topic, Adam Keating, CEO and founder of CoLab, wrote the post System of Record meet System of Engagement.