Due to other activities, I could not immediately share the second part of the review related to the PLM Roadmap / PDT Europe conference, held on 23-24 October in Gothenburg. You can read my first post, mainly about Day 1, here: The weekend after PLM Roadmap/PDT Europe 2024.

There were several interesting sessions which I will not mention here as I want to focus on forward-looking topics with a mix of (federated) data-driven PLM environments and the applicability of AI, staying around 1500 words.

R-evolutionizing PLM and ERP and Heliple

Cristina Paniagua from the Luleå University of Technology closed the first day of the conference, giving us food for thought to discuss over dinner. Her session, describing the Arrowhead fPVN project, fitted nicely with the concepts of the Federated PLM Heliple project presented by Erik Herzog on Day 2.

They are both research projects related to the future state of a digital enterprise. Therefore, it makes sense to treat them together.

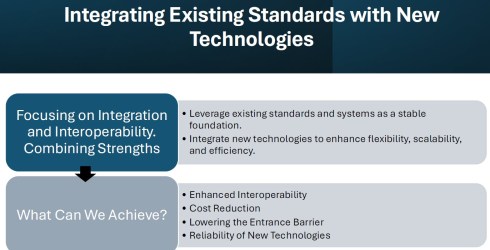

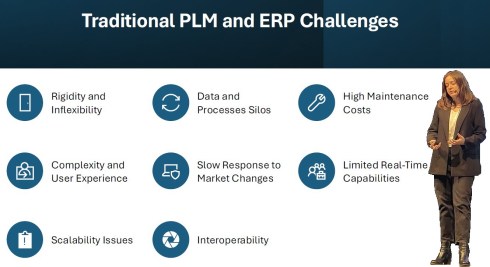

Cristina’s session started with sharing the challenges of traditional PLM and ERP systems:

These statements align with the drivers of the Heliple project. The PLM and ERP systems—Systems of Record—provide baselines and traceability. However, Systems of Record have not historically been designed to support real-time collaboration or to create an attractive user experience.

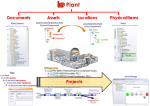

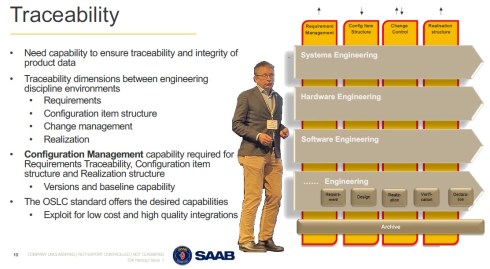

The Heliple project focuses on connecting various modules—the horizontal bars—for systems engineering, hardware engineering, etc., as real-time collaboration environments that can be highly customized and replaceable if needed. The Heliple project explored the usage of OSLC to connect these modules, the Systems of Engagement, with the Systems of Record.

By using Lynxwork as a low-code wrapper to develop the OSLC connections and map them to the needed business scenarios, the team concluded that this approach is affordable for businesses.

Now, the Heliple team is aiming to expand their research with industry-scale validation through the Demoiple project (validating that the Heliple-2 technology can be implemented and accredited in Saab Aeronautics’ operational IT), combined with the Nextiple project, where they will investigate the role of heterogeneous information models/ontologies for heterogeneous analysis.

If you are interested in participating in Nextiple, don’t hesitate to contact Erik Herzog.

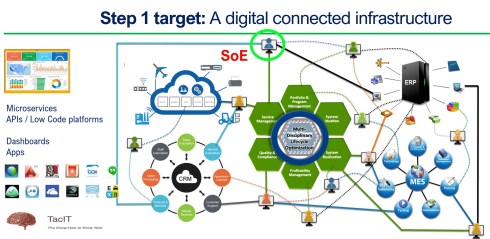

Cristina’s Arrowhead flexible Production Value Network (fPVN) project aims to provide autonomous and evolvable information interoperability through machine-interpretable content for fPVN stakeholders. In less academic words, building a digital data-driven infrastructure.

The resulting technology is projected to impact manufacturing productivity and flexibility substantially.

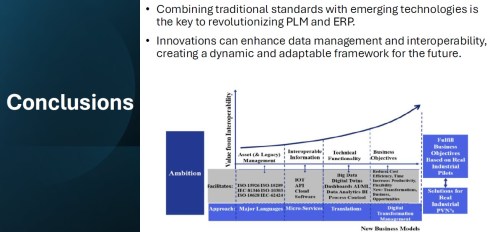

The exciting starting point of the Arrowhead project is that it wants to use existing standards and systems as a foundation and, on top of that, create a business and user-oriented layer, using modern technologies such as micro-services to support real-time processing and semantic technologies, ontologies, system modeling, and AI for data translations and learning. This is a much broader and more ambitious scope than the Heliple project.

I believe that in our PLM domain, this resonates with actual discussions you will find on LinkedIn, too. @Oleg Shilovitsky, @Dr. Yousef Hooshmand, @Prof. Dr. Jörg W. Fischer and Martin Eigner are a few of those steering these discussions. I consider it a perfect match for one of the images I shared about the future of the digital enterprise.

Potentially, there are five platforms with their own internal ways of working, a mix of systems of record and systems of engagement, supported by an overlay of several Systems of Engagement environments.

I previously described these dedicated environments, e.g., OpenBOM, Colab, Partful, and Authentise. These solutions could also be dedicated apps supporting a specific ecosystem role.

See below my artist’s impression of how a Service Engineer would work in their app connected to CRM, PLM and ERP platform datasets:

The exciting part of the Arrowhead fPVN project is that it wants to explore the interactions between systems and user roles based on existing mature standards instead of leaving the connections to software developers.

Cristina mentioned some of these standards below:

I greatly support this approach as, historically, much knowledge and effort has been put into developing standards to support interoperability. Maybe not in real-time, but the embedded knowledge in these standards will speed up the broader usage. Therefore, I concur with the concluding slide:

A final comment: Industrial users must push for these standards if they do not want a future vendor lock-in. Vendors will do what the majority of their customers ask for but will also keep their customers’ data in proprietary formats to prevent them from switching to another system.

Accelerated Product Development Enabled by Digitalization

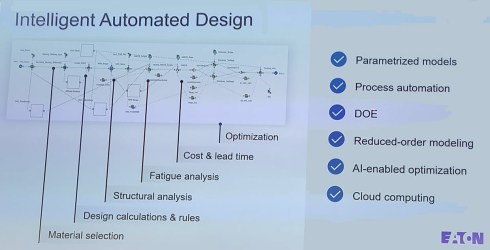

The keynote session on Day 2, delivered by Uyiosa Abusomwan, Ph.D., Senior Global Technology Manager – Digital Engineering at Eaton, was a visionary story about the future of engineering.

With its broad range of products, Eaton is exploring new, innovative ways to accelerate product design by modeling the design process and applying AI to narrow design decisions and customer-specific engineering work. The picture below shows the areas of attention needed to model the design processes. Uyiosa mentioned the significant beneficial results that have already been reached.

Together with generative design, Eaton works towards modern digital engineering processes built on models and knowledge. His session was complementary to the Heliple and Arrowhead story. To reach such a contemporary design engineering environment, it must be data-driven and built upon open PLM and software components to fully use AI and automation.

“Next Gen” Life Cycle Management in Next-Gen Nuclear Power and LTO Legacy Plants

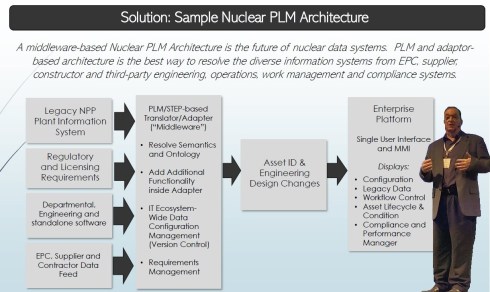

Kent Freeland’s presentation was a trip down memory lane when he discussed the issues with Long Term Operations of legacy nuclear plants.

I spent several years in Ringhals (Sweden) discussing and piloting the setup of a PLM front-end next to the MRO (Maintenance Repair Overhaul) system. As the nuclear plants developed in the sixties required a longer than anticipated lifecycle, access to the right design and operational data became essential; maintenance and upgrade changes in the plant needed to be planned and controlled. The design data is often lacking; it resides at the EPC or has been stored in a document management system with limited retrieval capabilities.

See also my 2019 post: How PLM, ALM, and BIM converge thanks to the digital twin.

Kent described the challenges he experienced (we must have worked in parallel universes) and concluded that, for the future, we need a digitally connected infrastructure for both plant design and maintenance artifacts, as envisioned below:

The solution reminded me of a lecture I saw at the PI PLMx 2019 conference, where the Swedish ESS facility demonstrated its Asset Lifecycle Data Management solution based on the 3DEXPERIENCE platform.

You can still find the presentation here: Henrik Lindblad Ola Nanzell ESS – Enabling Predictive Maintenance Through PLM & IIOT.

Kent also focused on the relevant standards to support a “Single Source of Truth” concept. After all the federated PLM discussions, I would go for:

“The nearest source of truth and a single source of Change”

assuming this makes more sense in a digitally connected enterprise.
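To make the phrase a bit more tangible, here is a minimal sketch of one possible reading of it, not something shown at the conference. The class `FederatedItem`, its fields and the system names are all hypothetical: any system may read from the copy closest to it, while only one owning system may change the data.

```python
from dataclasses import dataclass, field


@dataclass
class FederatedItem:
    """One piece of product data shared across systems (hypothetical model)."""
    item_id: str
    owner: str   # the single system that is allowed to change the item
    value: str
    copies: dict = field(default_factory=dict)  # system name -> locally held copy

    def read(self, system: str) -> str:
        # Nearest source of truth: use the local copy if the system holds one,
        # otherwise fall back to the value held by the owner.
        return self.copies.get(system, self.value)

    def change(self, system: str, new_value: str) -> None:
        # Single source of change: only the owning system may modify the item.
        if system != self.owner:
            raise PermissionError(f"{system} must request the change from {self.owner}")
        self.value = new_value
        # Push the change to every local copy so that reads stay consistent.
        for name in self.copies:
            self.copies[name] = new_value


# Illustrative data only: a part owned by PLM with copies in ERP and MRO.
part = FederatedItem("P-100", owner="PLM", value="rev A",
                     copies={"ERP": "rev A", "MRO": "rev A"})
part.change("PLM", "rev B")
print(part.read("MRO"))  # the MRO copy now reflects the change made in PLM
```

In a real federated environment the propagation step would of course be asynchronous and governed by standards and contracts; the sketch only separates the two ideas of where you read and where you change.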

Why do you need to be SMART when contracting for information?

Rob Bodington’s presentation was complementary to Kent Freeland’s presentation. Rob, a technical fellow at Eurostep, described the challenge of information acquisition when working with large assets that require access to the correct data once the asset is in operation. The large asset could be a nuclear plant or an aircraft carrier.

In the ideal world, the asset owner wants to have a digital twin of the asset fed by different data sources through a digital thread. Of course, this environment will only be reliable when accurate data is used and presented.

Getting accurate data starts with the information acquisition process, and Rob explained that this needed to be done SMARTly – see the image below:

Rob zoomed in on the SMART keywords and the challenge the various standards provide to make the information SMARTly accessible, like the ISO 10303 / PLCS standard, the CFIHOS exchange standard and more. And then there is the ISO 8000 standard about data quality.

Click on the image to get smart.

Rob believes that AI might be the silver bullet as it might help understand the data quality, ontology and context of the data and even improve contracting, generating data clauses for contracting….

And there was a lot of AI ….

There was a dazzling presentation from Gary Langridge, engineering manager at Ocado, explaining their Ocado Smart Platform (OSP), which leverages AI, robotics, and automation to tackle the challenges of online grocery and allow their clients to excel in performance and customer responsiveness.

There was a significant AI component in his presentation, and if you are tired of reading, watch this video

But there was more AI – of the 25 sessions in this conference, 19 mentioned the potential or usage of AI somewhere in their speech – this is more than 75 %!

There was a dedicated closing panel discussion related to the real business value of Artificial Intelligence in the PLM domain, moderated by Peter Bilello and answered by selected speakers from the conference, Sandeep Natu (CIMdata), Lars Fossum (SAP), Diana Goenage (Dassault Systemes) and Uyiosa Abusomwan (Eaton).

The discussion was realistic and helpful for the audience. It is clear that to reap the benefits, companies must explore the technology and use it to create valuable business scenarios. One could argue that many AI tools are already available, but the challenge remains that they have to run on reliable data. The data foundation is crucial for a successful outcome.

An interesting point in the discussion was the statement from Diana Goenage, who repeatedly warned that using LLM-based solutions has an environmental impact due to the amount of energy they consume.

We have a similar debate in the Netherlands – do we want the wind energy consumed by data centers (the big tech companies with a minimum workforce in the Netherlands), or should the Dutch citizens benefit from renewable energy resources?

Conclusion

There were even more interesting presentations during these two days, and you might have noticed that I did not advertise my content. This is because I have already reached 1600 words, but I also want to spend more time on the content separately.

It was about PLM and Sustainability, a topic often covered in this conference. Unfortunately, only 25 % of the presentations touched on sustainability, and AI over-hypes the topic.

Hopefully, it is not a sign of the times?

Last week I shared my thoughts related to my observation that the ROI of PLM is not directly visible or measurable, and I explained why. Also, I explained that the alignment of an organization requires a myth to make it happen. A majority of readers agreed with these observations. Some others either misinterpreted the headlines or twisted the story in favor of their opinion.

A few came from Oleg Shilovitsky, and as Oleg is quite open in his discussions, it allows me to follow up on his statements. Other people might share similar thoughts but they haven’t had the time or opportunity to be vocal. Feel free to share your thoughts/experiences too.

Some misinterpretations from Oleg’s post: PLM circa 2020 – How to stop selling Myths

- The title “How to stop selling Myths” is the first misinterpretation. We are not selling myths – more below.

- “Jos Voskuil’s recommendation is to create a myth. In his PLM ROI Myths article, he suggests that you should not work on a business case, value, or even technology” is the second misinterpretation. You still need a business case, you need value and you need technology.

And I got some feedback from Lionel Grealou, whose post was a catalyst for me to write the PLM ROI Myth post. I agree I took some shortcuts based on his blog post. You can read his comments here. The misinterpretation is:

- “Good luck getting your CFO approve the business change or PLM investment based on some “myth” propaganda :-)”. It is the opposite: make your plan, support your plan with a business case and then use the myth to align.

I am glad about these statements as they allow me to be more precise, avoiding misperceptions/myth-perceptions.

A Myth is bad

Some people might think that a myth is bad, as the myth is most of the time abstract. I think these people do not realize that there are a lot of myths that they are following; it is typical social human behavior to respond to myths. Some myths:

- How can you be religious without believing in myths?

- In this country/world, you can become anything if you want?

- In the past, life was better

- I make this country great again

The reason human beings need myths is that without them, it is impossible to align people around abstract themes. Try for each of the myths above to create an end-to-end logical story based on factual and concrete information. Impossible!

Read Yuval Harari’s book Sapiens about the power of myths. Read Steven Pinker’s book Enlightenment Now to understand that statistics show a lot of current myths are false. However, this does not mean a myth is bad. Human beings are driven by social influences and myths – it is our brain.

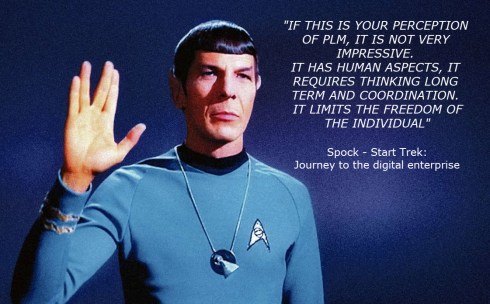

Only if you have no social interaction might you be immune to myths. Which brings me to quoting Oleg one more time:

“A long time ago when I was too naive and too technical, I thought that the best product (or technology) always wins. Well… I was wrong. “

I went through the same experience. Having studied physics and mathematics makes you think extremely logically, something I enjoyed while developing software. Later, when I started my journey as the virtualdutchman mediating in PLM implementations, I discovered that logic alone does not work in businesses. The majority of decisions are made based on “gut feelings” still presented as reasonable cases.

Unless you have an audience of Vulcans, like Mr. Spock, you need to deal with the human brain. Consider the myth as the envelope to pass the PLM-project to the management. C-level acts by myths as so far I haven’t seen C-level management spending serious time on understanding PLM. I will end with a quote from Paul Empringham:

I sometimes wish companies would spend 6 months+ to educate themselves on what it takes to deliver incremental PLM success BEFORE engaging with software providers

You don’t need a business case

Lionel is also skeptical about some “Myth-propaganda” and I agree with him. The Myth is the envelope; inside, there needs to be something valuable: the strategy, the plan, and the business case. Here I want to stress one more time that most business cases for PLM focus on tool and collaboration efficiency and project benefits from there. However, how well can we predict the future?

If you replace a process, let’s say BOM collaboration done with Excel, by BOM collaboration based on an Excel-on-the-cloud-like solution, you can measure the differences, assuming you can measure people’s efficiency. I guess this is what Oleg means when he explains OpenBOM has a real business case.

However, if you change the intent for people to work differently, for example, consult your supplier or manufacturing earlier in the design process, you touch human behavior. Why should I consult someone before I finish my job? I am measured on output, not on collaboration or proactive response. Here is the real ROI challenge.

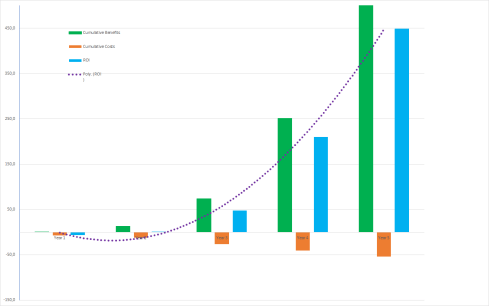

I have participated in dozens of business cases and at the end, they all look like the graph below:

The ROI is fantastic – after a little more than 2 years, we have a positive ROI, and the ROI only gets bigger. So if you trust the numbers, you would be a fool not to approve this project. Right?
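The shape of such a business-case graph takes only a few lines of arithmetic to reproduce. The sketch below is purely illustrative: the investment, cost and benefit figures are invented, and the function names are hypothetical. It computes the cumulative net result per year and the point where it turns positive.

```python
from itertools import accumulate


def cumulative_cash_flow(investment, yearly_costs, yearly_benefits):
    """Cumulative net result per year; year 0 holds the upfront investment."""
    net = [-investment] + [b - c for b, c in zip(yearly_benefits, yearly_costs)]
    return list(accumulate(net))


def break_even_year(cumulative):
    """Fractional year in which the cumulative result crosses zero.

    Uses linear interpolation within the crossing year; returns None
    if the result never turns non-negative.
    """
    for year in range(1, len(cumulative)):
        prev, cur = cumulative[year - 1], cumulative[year]
        if prev < 0 <= cur:
            return (year - 1) + (-prev) / (cur - prev)
    return None


# Invented example figures (in thousands), not taken from any real business case.
cumulative = cumulative_cash_flow(
    investment=1000,
    yearly_costs=[200, 200, 200, 200, 200],
    yearly_benefits=[500, 800, 1000, 1000, 1000],
)
print(cumulative)                   # [-1000, -700, -100, 700, 1500, 2300]
print(break_even_year(cumulative))  # 2.125
```

With these made-up numbers, the result turns positive a little after year two and keeps growing. The arithmetic is trivial; the projected yearly benefits are the part nobody can verify in advance.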

And here comes the C-level gut-feeling. If I have a positive feeling (I follow the myth), then I will approve. If I do not like it, I will say I do not trust the numbers.

Needless to say, if there is a business case without ROI, we do not need to meet the C-level. Unless, and it happens incidentally, there was already a decision at C-level that we need PLM from Vendor X because we played golf together, we are condemned together or we believe the same myths.

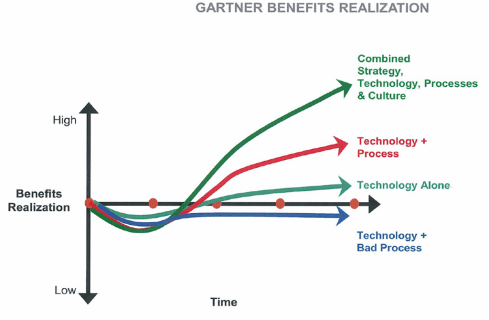

In reality, the old Gartner graph of realized benefits says it all. The impact of culture, processes, and people can make or break a plan.

You do not need an abstract story for PLM

Some people believe PLM on its own is a myth. You just need the right technology and people will start using it, spread it out and see how it has improved the business. Sometimes email is used as an example. Email is popular because you can, with limited effort, collaborate with people, no matter where they are. Now, twenty years later, companies are complaining about the lack of traceability, the lack of knowledge and understanding related to their products and processes.

PLM will always have the complexity of supporting traceability combined with real-time collaboration. If you focus only on traceability, people will complain that they are not a counter clerk. If you focus solely on collaboration, you miss the knowledge build-up and traceability.

That’s why PLM is a mix of governance, optimized processes to guarantee quality and collaboration, combined with a methodology to tune the existing processes implemented in tools that allow people to be confident and efficient. You cannot translate a business strategy into a function-feature list for a tool.

Conclusion

Myths are part of the human social alignment of large groups of people. Whether a Myth is true or false, I will not judge. You can use the Myth as an envelope to package your business case. The business case should always be a combination of new ways of working (organizational change), optimized processes and finally, the best tools. A PLM tool-only business case is in my opinion far from realistic.

Now preparing for PI PLMx London on 3-4 February – discussing Myths, Single BOMs and the PLM Green Alliance

This is the moment of the year to switch off from the details. No more talking and writing about digital transformation or model-based approaches. It is time to sit back and relax. Two years ago I shared the PLM Songbook; now it is time to see one or more movies. Here are my favorite top five PLM movies:

Bruce Almighty

Bruce Nolan, an engineer in Buffalo, N.Y., is discontented with almost everything in the company despite his popularity and the love of his draftswoman Grace. At the end of the worst day of his life, Bruce angrily ridicules and rages against PLM and PLM responds. PLM appears in human form and, endowing Bruce with divine powers of collaboration, challenges Bruce to take on the big job to see if he can do it any better.

A movie that makes you modest and makes you realize there is more than your small ecosystem.

The good, the bad and the ugly

Blondie (The Good PLM consultant) is a professional who is out trying to earn a few dollars. Angel Eyes (The Bad PLM Vendor) is a PLM salesman who always commits to a task and sees it through, as long as he is paid to do so. And Tuco (The Ugly PLM Implementer) is a wanted outlaw trying to take care of his own hide. Tuco and Blondie share a partnership together making money off Tuco’s bounty, but when Blondie unties the partnership, Tuco tries to hunt down Blondie. When Blondie and Tuco come across a PLM implementation loaded with dead bodies, they soon learn from the only survivor (Bill Carson – the PLM admin) that he and a few other men have buried a stash of value on a file server. Unfortunately, Carson dies, and Tuco only finds out the name of the file server, while Blondie finds out the name on the hard disk. Now the two must keep each other alive in order to find the value. Angel Eyes (who had been looking for Bill Carson) discovers that Tuco and Blondie met with Carson and knows they know the location of the value. All he needs is for the two to ..

A movie that makes you realize that it is a challenging journey to find the value out of PLM. It is not only about execution – but it is also about all the politics of people involved – and there are good, bad and ugly people on a PLM journey.

The Grump

The Grump is a draftsman in Finland from the past. A man who knows that everything used to be so much better in the old days. Pretty much everything that’s been done after 1953 has always managed to ruin The Grump’s day. Our story unfolds when The Grump opens a 3D Model on his computer, hurting his brain. He has to spend a weekend in Helsinki to attend a model-based therapy. Then the drama unfolds …….

A movie that makes you realize that progress and innovation do not come from grumps. In every environment where you want to change the status quo, grumps will appear. With the exciting Finnish atmosphere, a perfect film for Christmas.

Deliverance

The Cahulawassee River Valley company in Northern Georgia is one of the last analog companies in the state, which will soon change with the imminent implementation of a PLM system in the company, breaking down silos everywhere. As such, four Atlanta city slickers, alpha male Lewis Medlock, generally even-keeled Ed Gentry, slightly condescending Bobby Trippe, and wide-eyed Drew Ballinger decide to implement PLM in one trip, with only Lewis and Ed having experience in CAD. They know going in that the area is ethnoculturally homogeneous and isolated, but don’t understand the full extent of such until they arrive and see what they believe is the result of generations of inbreeding. Their relatively peaceful trip takes a turn for the worse when half way through they encounter a couple of hillbilly moonshiners. That encounter not only makes the four battle their way out of the PLM project intact and alive but threatens the relationships of the four as they do.

This movie, from 1972, makes you realize that in the early days of PLM starting a big-bang implementation journey into an area that is not ready for it, can be deadly, for your career and friendship. Not suitable for small children!

Diamonds Are Forever or Tron (legacy)

James Bond’s mission is to find out who has been drawing diamonds, which are appearing on blogs. He adopts another identity in the form of Don Farr. He joins up with CIMdata and acts as if he is developing diamonds, but everyone is hungry for these diamonds. He also has to avoid Mr. Brouwer and Mr. Kidd, the dangerous couple who do not leave anyone in their way when it comes to model-based. And Ernst Stavro Blofeld isn’t out of the question. He may have changed his looks, but is he linked with the V-shape? And if he is, can Bond finally defeat his ultimate enemy?

Sam Flynn, the tech-savvy 27-year-old son of Kevin Flynn, looks into his father’s disappearance and finds himself pulled into the same world of virtual twins and augmented reality where his father has been living for 20 years. Along with Kevin’s loyal confidant Quorra, father and son embark on a life-and-death journey across a visually stunning cyber universe that has become far more advanced and exceedingly dangerous. Meanwhile, the malevolent program IoT, who dominates the digital world, plans to invade the real world and will stop at nothing to prevent their escape.

I could not decide about number five. The future is bright with Boeing’s new representation of Systems Engineering, see my post on CIMdata’s PLM Europe roadmap event where Don Farr presented his diamond(s). However, the future is also becoming a mix of real with virtual and here Tron (legacy) will help my readers to understand the beauty of a mixed virtual and real world. You can decide – or send me your favorite PLM movies.

Note: All movie reviews are based on IMDb.com story lines, and I thank the authors of these story lines for their contribution and hope they agree with the PLM-related twist. Click on the image to find the full details and original review.

Conclusion

2018 has been an exciting year with a lot of buzzwords, combined with the reality that the current PLM approach is incompatible with the future. How can we address this issue? More on that in 2019 – first at PI PLMx 2019 in London (be there – last chance to meet people in the UK when they are still Europeans and share/discuss plans for the upcoming year).

Wishing you all the best during the break and a happy and prosperous 2019

Some weeks ago I wrote a post about non-intelligent part numbers (here) and this was (as expected) one of the topics that fired up other people to react. Thanks to Oleg Shilovitsky (here), Ed Lopategui (here), and David Taber (here) for your contributions to this debate. For me, the interesting conclusion was that nobody denies the advantage of a non-intelligent part number anymore. Five to ten years ago this discussion would have been more of a debate between defenders of the old “intelligent” methodology and non-intelligent numbers. Now it was more about how to deal with, wait for, or anticipate the future. Great progress!

Non-intelligent part number benefits

Again a short summary for those who have not read the posts referenced in the introduction. Non-intelligent part numbers provide the following advantages:

- Flexibility towards the future in case of mergers, new products, and technologies of number ranges not foreseen. Reduced risk of changes and maintenance for part numbers in the future.

- Reduced support for “brain-related connectivity” between systems (error-prone) and better support for automated connectivity (interfaces / digital scanning devices). Minimizing mistakes and learning time.

What’s next?

So when a company decides to move forward towards non-intelligent part numbers, there are still some more actions to take. As the part number becomes irrelevant for human beings, there is a need for more human-readable properties provided as metadata on screens or attributes in a report.

CLASSIFICATION: The first obvious need is to apply a part classification to your parts. Intelligent part numbers somehow were often a kind of classification based on the codes and position of numbers and characters inside the intelligent ID. The intelligent part number containing information about the type of part, perhaps the drawing format, the project, or the year it was issued the first time. You do not want to lose this information and therefore, make sure it is captured in attributes (e.g. part type/creation date) or in related information (e.g. drawing properties, model properties, customer, project). In a modern PLM system, all the intelligence of a part number needs to be at least stored as metadata and relations.

Which classification to use is hard to tell. It depends on your industry and the product you are making. Each industry has its standards, which are probably the best target when you work in that industry. Classifications like UNSPSC might be too generic. However, when you classify, do not invent a new classification yourself. People have spent thousands of hours (millions perhaps) on building the best classification for your industry – don’t try to be smarter unless you are a clever startup.

And next, do not rely on a single classification. Make sure your parts can adhere to multiple classifications as this is the best way to stay flexible for the future. Multiple classifications can offer support for a marketing view, a technology view (design and IP usage), a manufacturing view, and so on.
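To make the idea of multiple classifications concrete, here is a minimal sketch in Python. It is not taken from any PLM system: the `Part` class, the scheme names (`technology`, `marketing`, `manufacturing`), and the class codes are all hypothetical. The point is only that one part with a meaningless ID can carry several classifications side by side, each serving a different view.

```python
from dataclasses import dataclass, field


@dataclass
class Part:
    """A part with a meaningless ID; all meaning lives in metadata."""
    part_id: str        # opaque identifier, never parsed for meaning
    description: str
    # classification scheme name -> class code within that scheme (all hypothetical)
    classifications: dict[str, str] = field(default_factory=dict)


def find_by_class(parts, scheme, code):
    """Return the parts that carry the given code in the given scheme."""
    return [p for p in parts if p.classifications.get(scheme) == code]


parts = [
    Part("100234", "Ball bearing", {"technology": "bearing/ball", "marketing": "spare-part"}),
    Part("100235", "Roller bearing", {"technology": "bearing/roller", "marketing": "spare-part"}),
    Part("100236", "Housing", {"technology": "casting", "manufacturing": "make"}),
]

# The marketing view and the technology view query the same parts independently.
spares = find_by_class(parts, "marketing", "spare-part")
print([p.part_id for p in spares])  # ['100234', '100235']
```

Adding a new view later (for example a service classification) means adding one more key, without touching the part ID or the existing classifications.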

Legacy parts should be classified by using analytic tools and custom data manipulations to complete the part metadata in the future environment. There are standard tools in the market to support data discovery and quality improvement. Part similarity discovery is done by Exalead OnePart, and for more specific tools read Dick Bourke’s article on Engineering.com.

DOWNSTREAM USAGE: As Mathias Högberg commented on my post, the challenge of non-intelligent part numbers has its impact downstream on the shop floor. Production line scheduling for variants or production process steps for half-fabricates often depends on the intelligence of the part number. When moving to non-intelligent numbers, these capabilities have to be addressed too, either by additional attributes, immediately identifying product families, or by adding a more standardized description based on the initial attributes of the classification. Also David Taber in his post talked about two identifiers, one meaningless and fixed, and a second used for the outside world, which could be built by a concatenation of attributes and can change during the part lifecycle.

In the latter case, you might say that we remove intelligence from the part number and bring intelligence back in the description. This is correct. Still, human beings are better at mapping a description in their mind than a number.

Do you know Jos Voskuil (a.k.a. virtualdutchman) or

Do you know NL 13.012.789 / 56 ?
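The two-identifier approach mentioned under downstream usage could look like the sketch below. Everything in it is an assumption made for illustration: the counter-based ID generator, the attribute names, and the order in which attributes are concatenated. A real PLM system would issue its own IDs and follow its own description rules.

```python
import itertools

# Hypothetical counter-based generator; a real PLM system would issue IDs itself.
_counter = itertools.count(100001)


def new_part_id() -> str:
    """Issue the next meaningless, fixed identifier."""
    return str(next(_counter))


def display_name(attributes: dict[str, str], order: list[str]) -> str:
    """Build a human-readable description by concatenating chosen attributes."""
    return " ".join(attributes[key] for key in order if key in attributes)


part_id = new_part_id()
attrs = {"family": "BEARING", "type": "BALL", "size": "20x42x12"}
order = ["family", "type", "size"]

print(part_id, display_name(attrs, order))  # 100001 BEARING BALL 20x42x12

# The description may change during the part lifecycle; the ID does not.
attrs["size"] = "20x47x14"
print(part_id, display_name(attrs, order))  # 100001 BEARING BALL 20x47x14
```

The ID stays the stable key for systems, while the concatenated description is what people read on screens, labels, and reports.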

Quality of data

Moving from “intelligent” part numbers towards meaningless part numbers enriched with classification and a standardized description allows companies to gain significant benefits from part reuse alone. This is what current enterprises are targeting. Discovering and eliminating similar parts already justifies this process. I consider this a tactical advantage. The real strategic advantage will come in the next ten years, when we move more and more towards a digital enterprise. In a digital enterprise, algorithms will play a significant role (see Gartner), reducing the amount of human interpretation and delays. However, algorithms only work on data with certain properties and reliable quality.
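As a rough illustration of why data quality matters for algorithms, the sketch below looks for duplicate candidates by comparing classification and attribute values. It is a deliberately naive stand-in for the commercial similarity tools mentioned earlier, not a description of how they work, and the attribute names and sample records are invented. Note how a part with incomplete metadata simply drops out of the comparison.

```python
from collections import defaultdict


def normalize(value: str) -> str:
    """Trim, lowercase, and collapse whitespace so equal values compare equal."""
    return " ".join(value.lower().split())


def duplicate_candidates(parts, keys):
    """Group part IDs whose normalized values match on all given keys."""
    groups = defaultdict(list)
    for part in parts:
        if any(not part.get(k) for k in keys):
            continue  # incomplete metadata: cannot be compared reliably
        signature = tuple(normalize(part[k]) for k in keys)
        groups[signature].append(part["id"])
    return [ids for ids in groups.values() if len(ids) > 1]


# Invented sample records for illustration only.
parts = [
    {"id": "100001", "class": "Bearing", "material": "Steel", "size": "20x42x12"},
    {"id": "100002", "class": "bearing ", "material": "steel", "size": "20x42x12"},
    {"id": "100003", "class": "Bearing", "material": "", "size": "20x42x12"},
]

print(duplicate_candidates(parts, ["class", "material", "size"]))  # [['100001', '100002']]
```

Part 100003 may well be a third duplicate, but with an empty material attribute no algorithm can tell, which is exactly the quality issue described above.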

Conclusion

Introducing non-intelligent part numbers has its benefits and ROI, as it keeps you flexible for the future. However, consider it also as a strategic step for the long-term future, when information needs to flow in an integrated way through the enterprise with a minimum of human handling.

Happy New Year to all of you and I am wishing you all an understandable and digital future. This year I hope to entertain you again with a mix of future trends related to PLM combined with old PLM basics. This time, one of the topics that are popping up in almost every PLM implementation – numbering schemes – do we use numbers with a meaning, so-called intelligent numbers or can we work with insignificant numbers? And of course, the question what is the impact of changing from meaningful numbers towards unique meaningless numbers.

Why did we create “intelligent” numbers?

Intelligent part numbers were used to help engineers and people on the shop floor for two different reasons. In the early days, the majority of design work was mechanical design. Often companies had a one-to-one relation between the part and the drawing. This implied that the part number was identical to the drawing number. An intelligent part number could have the following format: A4-95-BE33K3-007.A

Of course, I invented this part number, as the format of an intelligent part number is only known to local experts. In my case, I was thinking about a part that was created in 1995 and drawn on A4. It is probably a bearing of the 33K3 standard (another intelligent code) and its index is 007 (checked in a numbering book). The version of the drawing (part) is A.
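To show what “decoding” such a number means in practice, here is a small Python sketch that splits the invented example into the fields described above. The segment layout, the field names, and the `BE` = bearing lookup are assumptions based only on this one example; any real scheme would need its own rules, which is precisely why only local experts can read these numbers.

```python
import re

# Layout of the invented example number; real schemes differ per company.
PATTERN = re.compile(
    r"^(?P<drawing_format>A\d)-(?P<year>\d{2})-"
    r"(?P<type_code>[A-Z]{2})(?P<standard>[A-Z0-9]+)-"
    r"(?P<index>\d{3})\.(?P<version>[A-Z])$"
)

TYPE_CODES = {"BE": "bearing"}  # assumed lookup table, normally tribal knowledge


def decode(part_number: str) -> dict[str, str]:
    """Split an 'intelligent' part number into explicit metadata fields."""
    match = PATTERN.match(part_number)
    if match is None:
        raise ValueError(f"not in the expected format: {part_number!r}")
    fields = match.groupdict()
    fields["year"] = "19" + fields["year"]  # assumes 20th-century numbers only
    fields["part_type"] = TYPE_CODES.get(fields.pop("type_code"), "unknown")
    return fields


print(decode("A4-95-BE33K3-007.A"))
```

Every line of this decoder encodes knowledge that otherwise lives in people’s heads, and the two-digit year shows how quickly such a scheme runs into its own limits.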

A person, who is working in production, assembling the product and reading the BOM, immediately knows which part to use by its number and drawing. Of course the word “immediately” is only valid for people who have experience with using this part. And this was in the previous century not so painful as it is now. Products were not so sophisticated as they are now and variation in products was limited.

Later, when information became digital, intelligent numbers were also used by engineering to classify their parts. The classification digits would assist the engineer to find similar parts in a drawing directory or drawing list.

And if the world had not changed, there would be still intelligent part numbers.

Why no more intelligent part numbers?

There are several reasons why you would not use intelligent part numbers anymore.

1. An intelligent number scheme works in a perfect world where nothing is changing. In real life, companies merge with other companies and then the question comes up: Do we introduce a new numbering scheme or is one of the schemes going to be the perfect scheme for the future? If this has happened a few times, a company might think: Do we have to go through this again and again? Probably topic #2 has also occurred.

2. The numbering scheme does not support current products and complexity anymore. Products change from mechanical towards systems, containing electronic components and embedded software. The original numbering system never catered for that. Is there an overarching numbering standard? It is getting complicated; perhaps we can change? And here #3 comes in.

3. As we are now able to store information in a digital manner, we are able to link to this complex part number a few descriptive attributes that help us to identify the component. Here the number is becoming less important, still serving as access to the unique metadata. Consider it as a bar code on a product. Nobody reads the bar code without a device anymore, and the device connected to an information system will provide the right information. This brings us to the last point, #4.

4. In a digital enterprise, where data is flowing between systems, we need unique identifiers to connect datasets between systems. The most obvious example is the part master data. Related to a unique ID, you will find in the PDM or PLM system the attributes relevant for overall identification (Description, Revision, Status, Classification) and further attributes relevant for engineering (weight, material, volume, dimensions).

In the ERP system, you will find a dataset with the same ID and master attributes. However, here they are extended with attributes related to logistics and finance. The unique identifier provides the guarantee that data is connected in the correct manner and that information can flow or be connected between systems without human interpretation or human-spent processing time.

And this is one of the big benefits of a digital enterprise: reducing overhead in data handling, often reducing the cost of data handling by 50 % or more (people / customizations).
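A simple way to picture the unique ID as the connector between PLM and ERP is the sketch below. The two dictionaries stand in for the systems, and all values (suppliers, costs, weights) are invented for illustration; no real PLM or ERP interface is implied. The only assumption that matters is that both sides use the same meaningless ID as the key.

```python
# Stand-ins for the two systems, keyed by the shared unique ID (invented data).
plm = {
    "100001": {"description": "Ball bearing", "revision": "A", "status": "Released",
               "weight_kg": 0.07, "material": "Steel"},
    "100002": {"description": "Housing", "revision": "B", "status": "In Work",
               "weight_kg": 1.90, "material": "Aluminium"},
}
erp = {
    "100001": {"description": "Ball bearing", "revision": "A", "status": "Released",
               "supplier": "S-17", "unit_cost": 2.40},
}

MASTER_ATTRIBUTES = ("description", "revision", "status")


def compare(plm, erp):
    """Report IDs missing in ERP and master attributes that differ."""
    missing = sorted(set(plm) - set(erp))
    mismatches = {
        pid: [a for a in MASTER_ATTRIBUTES if plm[pid][a] != erp[pid][a]]
        for pid in set(plm) & set(erp)
    }
    mismatches = {pid: attrs for pid, attrs in mismatches.items() if attrs}
    return missing, mismatches


missing, mismatches = compare(plm, erp)
print(missing)     # ['100002']
print(mismatches)  # {}
```

No part of this check needs to understand the ID itself, which is the point: the machine matches on the key, and people read the attributes.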

What to do now in your company?

There is no business justification for renumbering parts purely for future purposes. You need a business reason. Otherwise, it will only increase costs and create a potential for migration errors. Moving to meaningless part numbers is best done at the moment a change is required, for example when you implement a new PLM system or when your company merges with another company. At these moments, part numbering should be considered with the future in mind.

And the future is no longer about memorizing part classifications and numbers, even if you are from the generation that used to structure and manage everything inside your brain. Future businesses rely on digitally connected information, where a person based on machine interpretation of a unique ID will get the relevant and meaningful data. Augmented reality (picture above) is becoming more and more available. It is now about human beings that need to get ready for a modern future.

Conclusion

Intelligent part numbers are a best practice from the previous century. Start to think digital and connected, and try to reduce the dependency on understanding the part number in all your business activities. Move towards providing the relevant data for a user. This can be an evolution that smooths a future PLM implementation step.

Looking forward to discussing this topic and many other PLM related practices with you face to face during the Product Innovation conference in Munich. I will talk about the PLM identity change and lead a focus group session about PLM and ERP integration. Looking from the high-level and working in the real world. The challenge of every PLM implementation.

Human beings are a strange kind of creature. We think we make decisions based on logic, and we think we act based on logic. In reality, however, we do not like to change if it does not feel good, and we are lazy in changing our habits.

Disclaimer: It is a generalization which is valid for 99 % of the population. So if you feel offended by the previous statement, be happy as you are one of the happy few.

Our inability to change can be seen in the economy (only the happy few share). We see it in relation to global climate change. We see it in territorial fights all around the world.

Owning instead of sharing?

The cartoon below gives an interesting insight into how personal interests are perceived as more important than the general interest.

It is our brain!

More and more I realize that the success of PLM is also related to this human behavior; we like to own and find it difficult to share. PLM primarily is about sharing data through all stages of the lifecycle. A valid reason why sharing is rare is that current PLM systems and their infrastructures are still too complex to deliver shared information with ease. However, the potential benefits are clear when a company is able to transform its business into a sharing model and can therefore react to and anticipate the outside world much faster.

But sharing is not in our genes, as:

- In current business knowledge is power. Companies fight for their IP; individuals fight for their job security by keeping some specific IP to themselves.

- As a biological organism, composed of a collection of cells, we are focused on survival of our genes. Own body/family first is our biological message.

Breaking these habits is difficult, and I will give some examples that I noticed the past few weeks. Of course, it is not completely a surprise for readers of my blog, as a large number of my recent posts are related to the complexity of change. Some are related to human behavior:

August 2012: Our brain blocks PLM acceptance

April 2014: PLM and Blockers

Ed Lopategui, an interesting PLM blogger, see http://eng-eng.com, wrote a long comment to my PLM and Blockers post. The (long) quote below is exactly describing what makes PLM difficult to implement within a company full of blockers :

“I also know that I was focused on doing the right thing – even if cost me my position; and there were many blockers who plotted exactly that. I wore that determination as a sort of self-imposed diplomatic immunity and would use it to protect my team and concentrate any wrath on just myself. My partner in that venture, the chief IT architect admitted on several occasions that we wouldn’t have been successful if I had actually cared what happened to my position – since I had to throw myself and the project in front of so many trains. I owe him for believing in me.

But there was a balance. I could not allow myself to reach a point of arrogance; I would reserve enough empathy for the blockers to listen at just the right moments, and win them over. I spent more time in the trenches than most would reasonably allow. It was a ridiculously hard thing and was not without an intellectual and emotional cost.

In that crucible, I realized that finding people with such perspective (putting the ideal above their own position) within each corporation is *exceptionally* rare. People naturally don’t like to jump in front of trains. It can be career-limiting. That’s kind of a problem, don’t you think? It’s a limiting factor without a doubt, and not one that can be fulfilled with consultants alone. You often need someone with internal street cred and long-earned reputation to push through the tough parts”

Ed concludes that it is exceptionally rare to find people putting the ideal above their own position, which again refers to the opening statement that only a (happy) few are advocates for change.

Now let’s look at some facts explaining why it is exceptionally rare, so we feel less guilty.

On Intelligence

Last month I read the book On Intelligence by Jeff Hawkins, well written by Sandra Blakeslee. (Thanks to Joost Schut from KE-Works for pointing me to this book.)

Although it was not the easiest book to read during a holiday, it was well written considering the complexity of the topic discussed. Jeff describes how the information architecture of the brain could work based on the neocortex layering.

In his model, he describes how the brain processes information from our senses, first in a specific manner but then more and more in an invariant approach. You have to read the book to get the full meaning of this model. The eye-opener for me was that Jeff described the brain as a prediction engine. All the time the brain anticipates what is going to happen, based on years of learning. That’s why we need to learn and practice, to build and enrich this information model.

And the more specialized you are in a particular topic, whether it is knowledge or a motor skill, the deeper in the neocortex this pattern is anchored. This makes it hard to change (bad) practices.

The book goes much further, and I was reading it more in the context of how artificial intelligence or brain-like intelligence could support the boring PLM activities. I got nice insights from it. However, the main side observation was that it is hard to change our patterns. So if you are not aware of it, your subconscious will always find reasons to reject a change. Follow the predictions!

Thinking Fast and Slow

And this is exactly the connection with another book I have read before: Thinking Fast and Slow by Daniel Kahneman. Daniel explains that our brain runs its activities on two systems:

System 1: makes fast and automatic decisions based on stereotypes and emotions. System 1 is what we are using most of the time, running often in subconscious mode. It does not cost us much energy to run in this mode.

System 2: takes more energy and time; therefore, it is slow and pushes us to be conscious and alert. Still system 2 can be influenced by various external, subconscious factors.

Thinking Fast and Slow nicely complements On Intelligence: System 1 as described by Daniel Kahneman is similar to the system Jeff Hawkins describes as the prediction engine. It runs in a subconscious mode, with optimal energy consumption, allowing us to survive most of the time.

Fast thinking leads to boiling frogs

And this links again to the boiling frog syndrome. If you are not familiar with the term, follow the link. In general, it means that people (and businesses) do not react to (life-threatening) outside change when it happens slowly, but would react immediately if they were confronted with the end result (no more business / no more competitive situation).

Conclusion: our brain by default wants to keep business in predictive mode, so implementing a business change is challenging, as all changes are painful and against our subconscious system.

So PLM is doomed, unless we change our brain behavior?

The fact that we are not living in caves anymore illustrates that there have always been those happy few who took a risk and a next step into the future by questioning and changing comfortable habits. Daniel Kahneman (with his System 2) and Jeff Hawkins both talk about the energy it takes to change habits and to learn new predictive mechanisms. But it can be done.

I see two major trends that will force the classical PLM to change:

- The amount of connected data becomes so huge, it does not make sense anymore to store it and structure the information in a single system. The time required to structure data does not deliver enough ROI in a fast moving society. The old “single system that stores all”-concept is dying.

- The newer generations (generation Y and beyond) grew up with the notion that it is impossible to learn, capture and own specific information. They developed different skills to interpret data available from various sources, not necessarily to own and manage it all.

These two trends lead to the point where it becomes clear that thinking in systems will become obsolete. The future will be about connectivity and interpretation of connected data, used by apps, running on a platform. The openness of the platform towards other platforms is crucial and will be the weakest link.

Conclusion:

The PLM vision is not doomed, and with new generations of knowledge workers the “brain change” has started. The challenge is to implement the vision across systems and silos in an organization. For that we need to be aware that it can be done and allocate the “happy few” in your company to enable it.

What do you think?

This week I had a meeting in the Netherlands with three Dutch peers all interested and involved in Model-Based Definition – either from the coaching point of view or the “victim” point of view. We compared MBD-challenges with

We realized that human beings indeed are often the blocking reason why new ways of working cannot be introduced. Twenty-five years ago we had the discussion moving from 2D to 3D for design. Now due to the maturity of the solutions and the education of new engineers this is no longer an issue. Now we are in the next wave using the 3D Model as the base for manufacturing definition, and again a new mindset is needed.
