In the last two weeks, I have had mixed discussions related to PLM, where I realized there are two different ways people can look at PLM. Is implementing PLM capabilities driven by a cost-benefit analysis and a business case? Or is it driven by a strategy that provides business value for the company?

Most companies I am working with focus on the first option – there needs to be a business case.

This observation is a pleasant passageway into a broader discussion started by Rob Ferrone recently with his article Money for nothing and PLM for free. He explains the PDM cost of doing business, which goes beyond the software’s cost. Often, companies consider the other expenses inescapable.

At the same time, Benedict Smith wrote some visionary posts about the potential power of an AI-driven PLM strategy, the most recent article being PLM augmentation – Panning for Gold.

It is a visionary article about what is possible in the PLM space (if there was no legacy ☹), based on Robust Reasoning, and about how you could even start with LLM Augmentation for PLM “Micro-Tasks”.

Interestingly, the articles from both Rob and Benedict were supported by AI-generated images – I believe this is the future: Creating an AI image of the message you have in mind.

When you have digested their articles, it is time to dive deeper into the different perspectives of value and costs for PLM.

From a system to a strategy

The biggest obstacle I have discovered is that people relate PLM to a system or, even worse, to an engineering tool. This 20-year-old misunderstanding probably comes from the fact that in the past, implementing PLM was more an IT activity – providing the best support for engineers and their data – than a business-driven set of capabilities needed to support the product lifecycle.

The System approach

Traditional organizations are siloed, and from the start, PLM had the challenge of supporting product information shared throughout the whole lifecycle. No discipline had a natural incentive to invest in sharing: every discipline has its own P&L, and sharing comes at a cost.

At the management level, the financial data coming from the ERP system drives the business. ERP systems are transactional and can provide real-time data about the company’s performance. C-level management wants to be sure they can see what is happening, so there is a massive focus on implementing the best ERP system.

In some cases, I noticed that the investment in ERP was twenty times more than the PLM investment.

Why would you invest in PLM? Although the ERP engine will slow down without proper PLM, the complexity of PLM compared to ERP is a reason for management to look at the costs, as the PLM benefits are hard to grasp and depend on so much more than just execution.

See also my old 2015 article: How do you measure collaboration?

As mentioned, the Cost of Non-Quality, too many iterations, time lost searching, material scrap, manufacturing delays, or customer complaints are often considered inescapable parts of doing business (like everyone else): it happens all the time.

The strategy approach

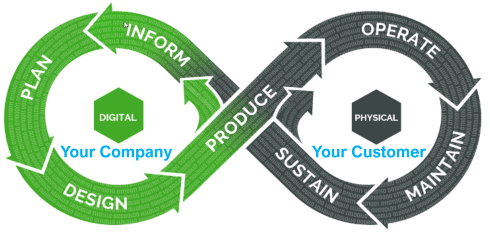

It is clear that when we accept the modern definition of PLM, we should be considering product lifecycle management as the management of the product lifecycle (as Patrick Hillberg says eloquently in our Share PLM podcast – see the image at the bottom of this post, too).

When you implement a strategy, it is evident that there should be a long(er) term vision behind it, which can be challenging for companies. Also, please read my previous article: The importance of a (PLM) vision.

I am convinced that the importance of a data-driven approach, although perhaps not fully understood, is discussed at many strategic board meetings. A data-driven approach is needed to implement a digital thread as the foundation for enhanced business models based on digital twins, and to ensure the data quality and governance that AI initiatives require.

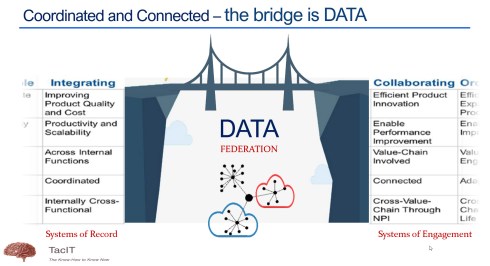

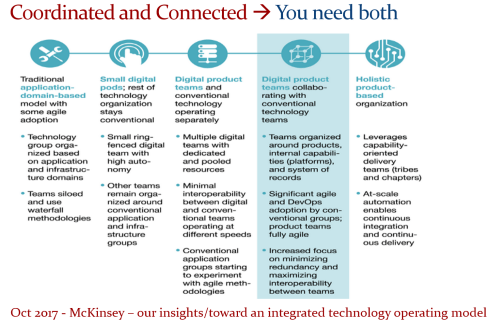

It is a process I have been preaching: From Coordinated to Coordinated and Connected.

At the board level, strategy discussions should be about value creation as the future strategy, not about reducing costs or avoiding risks.

Understanding the (PLM) value

The biggest challenge for companies is to understand how to modernize their PLM infrastructure to bring value.

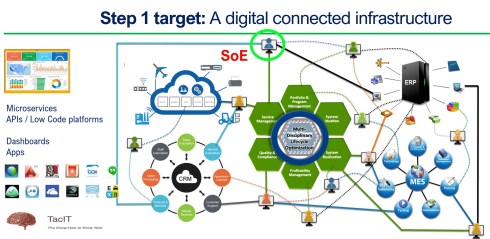

* Step 1 is obvious. Stop considering PLM as a system with capabilities; instead, investigate how to transform your infrastructure from a collection of systems and (document) interfaces into a federated infrastructure of connected tools.

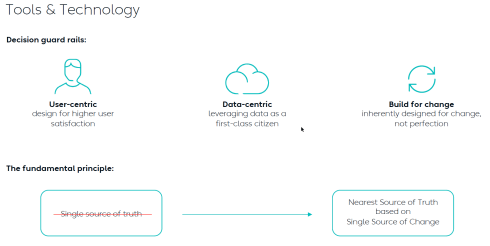

Note: the paradigm shift from a Single Source of Truth (in my system) towards a Nearest Source of Truth and a Single Source of Change.

* Step 2 is education. A data-driven approach creates new opportunities and impacts how companies should run their business. Different skills and other organizational structures are required, moving from disciplines working in silos to hybrid organizations where people work both in domain-driven environments (the Systems of Record) and in product-centric teams (the Systems of Engagement). AI tools and capabilities will likely create an effortless flow of information within the enterprise.

* Step 3 is building a compelling story to implement the vision. Implementing new ways of working based on new technical capabilities also requires organizational change. If your organization keeps working the same way, you might gain a few percent in efficiency.

The real benefits come from doing things differently, and technology allows you to do so. However, this requires people to work differently, too, and overlooking this is the most common mistake in transformational projects.

Companies understand the WHY and WHAT but leave the HOW to the middle management.

People are squeezed into an ideal performance without being taken on the journey. For that reason, it is essential to build a compelling story that motivates individuals to join the transformation. Assisting companies in building compelling storylines is one of the areas where I specialize.

Feel free to contact me to explore the opportunity for your business.

It is not the technology!

With the upcoming availability of AI tools, implementing a PLM strategy will no longer depend on how IT understands the technology, the systems and the interfaces needed.

As Yousef Hooshmand’s image above describes, a federated infrastructure of connected (SaaS) solutions will enable companies to focus on accurate data (priority #1) and on the people creating and using accurate data (priority #1). As you can see, people and data are the highest priority in modern PLM.

Therefore, I look forward to participating in the upcoming Share PLM Summit on 27-28 May in Jerez.

It will be a breakthrough: where traditional PLM conferences focus on technology and best practices, this conference will focus on how we can involve and motivate people. Regardless of the industry you are active in, this is a universal topic for any company that wants to transform.

Conclusion

Returning to this article’s introduction, modern PLM is an opportunity to transform the business and make it future-proof. It needs to happen now or in the near future. Therefore, PLM initiatives should be considered from the value perspective first instead of focusing on the costs. How well are you connected to your management’s vision to make PLM a value discussion?

Enjoy the podcast; several of the topics discussed relate to this post.

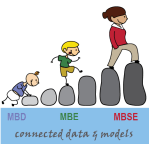

Four years ago, I wrote a series of posts with the common theme: The road to model-based and connected PLM. I discussed the various aspects of model-based and the transition from considering PLM as a system towards considering PLM as a strategy to implement a connected infrastructure.

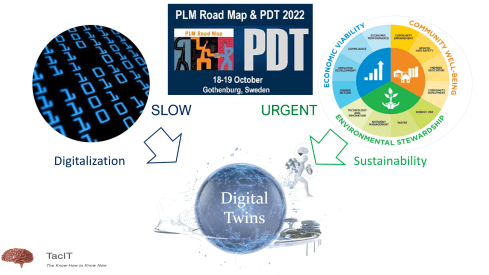

Since then, a lot has happened. The terminology of Digital Twin and Digital Thread has become better understood. The difference between Coordinated and Connected ways of working has become more apparent. Spoiler: You need both ways. And at this moment, Artificial Intelligence (AI) has become a new hype.

Many current discussions in the PLM domain are about structures and data connectivity, Bills of Materials (BOM), or Bills of Information (BOI), combined with the new term Digital Thread as a Service (DTaaS) introduced by Oleg Shilovitsky and Rob Ferrone. Here, we envision a digitally connected enterprise based on connected services.
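
To make the idea of a digital thread a bit more tangible, here is a minimal Python sketch, assuming a digital thread can be simplified to links between items owned by different domains. The item identifiers and the `THREAD` mapping are hypothetical and not taken from any DTaaS offering; the traversal answers a typical thread question: which downstream information depends on this requirement?

```python
from collections import deque

# Hypothetical digital thread: each item links to the downstream items that use it.
# Items come from different domains (systems engineering, design, manufacturing, service).
THREAD = {
    "REQ-001": ["SYS-ARCH-01"],               # requirement -> system architecture element
    "SYS-ARCH-01": ["CAD-PART-100"],          # architecture element -> 3D design
    "CAD-PART-100": ["MBOM-100", "MBD-100"],  # design -> manufacturing BOM and annotated model
    "MBOM-100": ["SERIAL-42"],                # manufacturing BOM -> as-built serial unit
    "MBD-100": [],
    "SERIAL-42": ["SERVICE-7"],               # as-built unit -> service record
    "SERVICE-7": [],
}


def downstream(item: str) -> list[str]:
    """Return every item reachable from `item`, in breadth-first order."""
    seen = {item}
    order = []
    queue = deque([item])
    while queue:
        current = queue.popleft()
        for linked in THREAD.get(current, []):
            if linked not in seen:
                seen.add(linked)
                order.append(linked)
                queue.append(linked)
    return order


if __name__ == "__main__":
    # Which information is impacted if requirement REQ-001 changes?
    print(downstream("REQ-001"))
    # ['SYS-ARCH-01', 'CAD-PART-100', 'MBOM-100', 'MBD-100', 'SERIAL-42', 'SERVICE-7']
```

A real digital thread would resolve these links through the services of each system of record rather than an in-memory dictionary; the breadth-first traversal only illustrates the connected way of finding related information.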

A lot can be explored in this direction. Also relevant are Lionel Grealou’s article in Engineering.com, RIP SaaS, long live AI-as-a-service, and the follow-up discussions related to this topic. I chimed in with Data, Processes and AI.

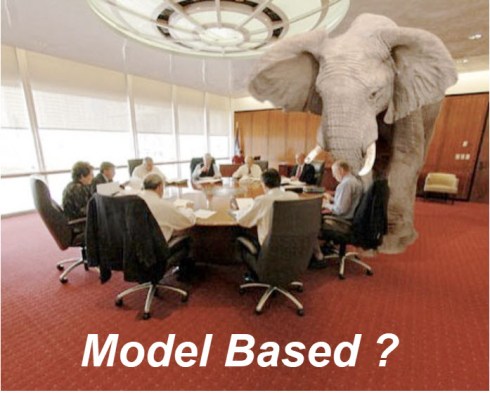

However, we also need to focus on the term model-based or model-driven. When we talk about models today, Large Language Models (LLMs) are the hype, and when you are working in the design space, 3D CAD models might be your first association.

There is still confusion in the PLM domain: what do we mean by model-based, and where are we progressing with working model-based?

A topic I want to explore in this post.

It is not only Model-Based Definition (MBD)

Before I started The Road to Model-Based series, there was already the misunderstanding that model-based means 3D CAD model-based. See my post from that time: Model-Based – the confusion.

Model-Based Definition (MBD) is an excellent first step in understanding information continuity, in this case primarily between engineering and manufacturing, where the annotated model is used as the source for manufacturing.

In this way, there is no need for separate 2D drawings with manufacturing details, which removes the extra effort of keeping engineering and manufacturing information in sync and, in addition, reduces the chance of misinterpretations.

MBD is a common practice in aerospace and particularly in the automotive industry. Other industries are struggling to introduce MBD, either because the OEM is not ready or willing to share information in a format other than 3D + 2D drawings, or because their suppliers consider MBD too complex compared to their current document-driven approach.

In its current practice, we must remember that MBD is part of a coordinated approach.

Companies exchange technical data packages based on potential MBD standards (ASME Y14.47/ISO 16792, but also JT and 3D PDF). It is not yet part of the connected enterprise, but it connects engineering and manufacturing using the 3D model as the core information carrier.

As I wrote, learning to work with MBD is a stepping stone in understanding a modern model-based and data-driven enterprise. See my 2022 post: Why Model-based Definition is important for us all.

To conclude on MBD, model-based definition is a crucial practice to improve collaboration between engineering, manufacturing, and suppliers, and it can run in parallel with collaborative BOM structures.

And it is transformational, as the following benefits reported through ChatGPT suggest:

- Up to 30% faster product development cycles due to the reduced need for 2D drawings and fewer design iterations. Boeing reported a 50% reduction in engineering change requests by using MBD.

- Companies using MBD see a 20–50% reduction in manufacturing errors caused by misinterpretations of 2D drawings. Caterpillar reported a 30% improvement in first-pass yield due to better communication between design and manufacturing teams.

- MBD can reduce product launch time by 20–50% by eliminating bottlenecks related to traditional drawings and manual data entry.

- 20–30% reduction in documentation costs by eliminating or reducing 2D drawings. Up to 60% savings on rework and scrap costs by reducing errors and inconsistencies.

Over five years, Lockheed Martin achieved a $300 million cost savings by implementing MBD across parts of its supply chain.

MBSE is not a silo

For many people, Model-Based Systems Engineering (MBSE) seems irrelevant to their business, or it is a discipline for a small group of specialists who conduct systems engineering practices, not in the traditional document-driven V-shaped approach but in an iterative process that still follows the V-shape, meanwhile using models to predict and verify assumptions.

And what is its value when connected in a PLM environment?

A quick heads up – what is a model

A model is a simplified representation of a system, process, or concept used to understand, predict, or optimize real-world phenomena. Models can be mathematical, computational, or conceptual.

We need models to:

- Simplify Complexity – Break down intricate systems into manageable components and focus on the main components.

- Make Predictions – Forecast outcomes in science, engineering, and economics by simulating behavior – Large Language Models, Machine Learning.

- Optimize Decisions – Improve efficiency in various fields like AI, finance, and logistics by running simulations and finding the best virtual solution to apply.

- Test Hypotheses – Evaluate scenarios without real-world risks or costs, for example, a virtual crash test.

It is important to realize that models are only as accurate as the data they run on; every modeling practice needs base data, be it measurements, formulas, or statistics.
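
As a minimal illustration of that point, assuming the simplest possible model, a straight line fitted by ordinary least squares, the sketch below fits the same model to two hypothetical sets of load/deflection measurements that differ in a single wrong value. The data and the function names are invented for illustration only.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def predict(model, x):
    """Evaluate the fitted line at x."""
    a, b = model
    return a + b * x


# Hypothetical measurements: load in kN vs. deflection in mm.
loads = [1, 2, 3, 4]
good_deflections = [2, 4, 6, 8]
bad_deflections = [2, 4, 6, 12]  # same data with one erroneous measurement

if __name__ == "__main__":
    print(predict(fit_line(loads, good_deflections), 10))           # 20.0
    print(round(predict(fit_line(loads, bad_deflections), 10), 1))  # 30.0
```

The model logic is identical in both runs; only one base data point differs, and the predicted deflection at 10 kN moves from 20 mm to 30 mm.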

I watched and listened to the interesting podcast below, where Jonathan Scott and Pat Coulehan discuss this topic: Bridging MBSE and PLM: Overcoming Challenges in Digital Engineering. If you have time – watch it to grasp the challenges.

The challenge in an MBSE environment is that it is not a single tool with a single version of the truth; rather, it is a federated environment of shared datasets that modeling applications interpret to understand and define the behavior of a product.

In addition, an interesting article from Nicolas Figay might help you understand the value for a broader audience. Read his article: MBSE: Beyond Diagrams – Unlocking Model Intelligence for Computer-Aided Engineering.

Ultimately, and I found this agreement at many PLM conferences, MBSE practices are the foundation for downstream processes and operations.

We need a data-driven modeling environment to implement Digital Twins, which can span multiple systems and diagrams.

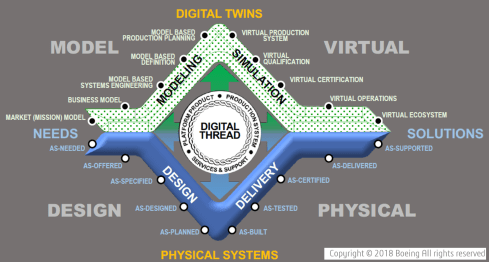

In this context, I like the Boeing diamond presented by Don Farr at the 2018 PLM Roadmap EMEA conference. It is a model view of a system, where data flows through a digital thread between the virtual and the physical side.

Where this image describes a model-based, data-driven infrastructure to deliver a solution, we can, in addition, apply the DevOps approach to the bigger picture for solutions in operation, as depicted by the PTC image below.

Model-based, the foundation of digital twins

To conclude on MBSE, I hope it is clear why I promote MBSE not only as the environment to conceptualize a solution but also as the foundation for a digital enterprise where information is connected through digital threads and AI models (**new**).

The data borders between traditional system domains will disappear. The paradigm of the single source of change and the nearest source of truth, and Prof. Dr. Jörg Fischer’s post The Big Blocks of Future Lifecycle Management, are all about data domains.
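
A minimal sketch of the single source of change and nearest source of truth idea, under the assumption that each data element has exactly one owning domain that may change it, while consuming domains read from a local replica that is kept current. `Attribute`, `Domain` and `DataRegistry` are hypothetical names; this is not how any particular PLM, ERP or DTaaS product implements it.

```python
from dataclasses import dataclass, field


@dataclass
class Attribute:
    """A product data element with exactly one owning domain (its single source of change)."""
    name: str
    owner: str
    value: object
    version: int = 1


@dataclass
class Domain:
    """A consuming domain that keeps a local replica: its nearest source of truth."""
    name: str
    replica: dict = field(default_factory=dict)  # attribute name -> (value, version)

    def read(self, attr_name: str):
        return self.replica[attr_name][0]


class DataRegistry:
    """Hypothetical registry: only the owner may change a value; changes propagate to replicas."""

    def __init__(self):
        self.attributes = {}   # attribute name -> Attribute
        self.subscribers = {}  # attribute name -> list of Domain

    def publish(self, attr: Attribute) -> None:
        self.attributes[attr.name] = attr
        self.subscribers.setdefault(attr.name, [])

    def subscribe(self, attr_name: str, domain: Domain) -> None:
        attr = self.attributes[attr_name]
        self.subscribers[attr_name].append(domain)
        domain.replica[attr_name] = (attr.value, attr.version)

    def change(self, attr_name: str, new_value, requested_by: str) -> None:
        attr = self.attributes[attr_name]
        if requested_by != attr.owner:
            raise PermissionError(f"{requested_by} is not the source of change for {attr_name}")
        attr.value = new_value
        attr.version += 1
        # Push the new value so every consumer reads a current copy close to its own context.
        for domain in self.subscribers[attr_name]:
            domain.replica[attr_name] = (attr.value, attr.version)


if __name__ == "__main__":
    registry = DataRegistry()
    registry.publish(Attribute("mass_kg", owner="engineering", value=12.5))
    manufacturing = Domain("manufacturing")
    registry.subscribe("mass_kg", manufacturing)
    registry.change("mass_kg", 12.1, requested_by="engineering")
    print(manufacturing.read("mass_kg"))  # 12.1
```

The design choice is to separate ownership (who may change a value) from access (where you read it): a non-owner attempting a change gets a `PermissionError`, while every subscriber reads a current copy.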

However, accessible data from all kinds of modern data sources and tools is necessary to build digital twins, either to simulate and predict a physical solution or to analyze a physical solution and, based on the analysis, adjust the solution or improve your virtual simulations.
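
The feedback loop described here, where a virtual model predicts, the physical product reports, and the analysis improves the model, can be sketched as follows. This is a hypothetical, deliberately simple twin: component wear is assumed to grow linearly with operating hours, the 10% tolerance is arbitrary, and the measurements are invented.

```python
from dataclasses import dataclass


@dataclass
class WearTwin:
    """Hypothetical digital twin: predicts component wear (mm) from operating hours."""
    wear_rate: float  # model parameter, mm of wear per operating hour

    def predict(self, hours: float) -> float:
        return self.wear_rate * hours

    def deviation(self, measurements) -> float:
        """Mean relative deviation between measured and predicted wear.

        Assumes hours > 0 and wear_rate > 0, so predictions are never zero.
        """
        errors = [abs(wear - self.predict(hours)) / self.predict(hours)
                  for hours, wear in measurements]
        return sum(errors) / len(errors)

    def recalibrate(self, measurements) -> None:
        """Least-squares fit of wear = rate * hours (a line through the origin)."""
        numerator = sum(hours * wear for hours, wear in measurements)
        denominator = sum(hours * hours for hours, _ in measurements)
        self.wear_rate = numerator / denominator


def twin_cycle(twin: WearTwin, measurements, tolerance: float = 0.10) -> str:
    """Compare the virtual prediction with physical data and adjust the model if needed."""
    if twin.deviation(measurements) > tolerance:
        twin.recalibrate(measurements)
        return "recalibrated"
    return "in tolerance"


if __name__ == "__main__":
    twin = WearTwin(wear_rate=0.001)
    # Hypothetical field data: (operating hours, measured wear in mm)
    measurements = [(1000, 1.5), (2000, 3.0), (3000, 4.5)]
    print(twin_cycle(twin, measurements))  # recalibrated
    print(twin.wear_rate)                  # 0.0015
    print(twin_cycle(twin, measurements))  # in tolerance
```

In practice, the analysis could just as well lead to adjusting the physical solution instead of the model; the sketch only shows the compare-and-adjust cycle.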

Digital Twins at any stage of the product life cycle are crucial to developing and maintaining sustainable solutions, as I discussed in previous lectures. See the image below:

Conclusion

Data quality and architecture are the future of a modern digital enterprise: they are its building blocks. There is a lot of discussion related to Artificial Intelligence, but AI will only work when we master the methodology and practices of a data-driven and sustainable approach using models. MBD is not new and MBSE is perhaps still new; both are building blocks for a model-based approach. Where are you in your lifecycle?

Most times in this PLM and Sustainability series, Klaus Brettschneider and Jos Voskuil from the PLM Green Global Alliance core team speak with PLM related vendors or service partners.

This year we have been speaking with Transition Technologies PSC, Configit, aPriori, Makersite and the PLM vendors PTC, Siemens and SAP.

Where the first group of companies provided complementary software offerings to support sustainability (“the fourth dimension”), the PLM vendors focused more on the solutions within their portfolio.

This time we spoke with CIMPA PLM services, a company that supports its customers with PLM and sustainability challenges, offering end-to-end support.

What makes them special is that they are also a core partner of the PLM Green Global Alliance, where they moderate the Circular Economy theme. Read their introduction here: PLM and Circular Economy.

CIMPA PLM services

We spoke with Pierre DAVID and Mahdi BESBES from CIMPA PLM services. Pierre is an environmental engineer and Mahdi is a consulting manager focusing on parts/components traceability in the context of sustainability and a circular economy. Many of the activities described by Pierre and Mahdi were related to the aerospace industry.

We had an enjoyable and in-depth discussion of sustainability, as the aerospace industry is well-advanced in traceability during the upstream design processes. Good digital traceability is an excellent foundation to extend for sustainability purposes.

CSRD, LCA, DPP, AI and more

A bunch of abbreviations you will have to learn. We went through the need for a data-driven PLM infrastructure to support sustainability initiatives, like Life Cycle Assessments and more. We zoomed in on the current Corporate Sustainability Reporting Directive (CSRD), highlighting the challenges with the CSRD guidelines and how to connect the strategy (why we do the CSRD) to its execution (providing reports and KPIs that make sense to individuals).

In addition, we discussed the importance of using the proper methodology and databases for lifecycle assessments. Looking forward, we discussed the potential of AI and the value of the Digital Product Passport for products in service.

Enjoy the 37-minute discussion, and you are always welcome to comment or start a discussion with us.

What we learned

- Sustainability initiatives are quite mature in the aerospace industry; thanks to its traceability-driven nature, this industry leads in methodology and best practices.

- The various challenges with the CSRD directive – standardization, strategy and execution.

- The importance of the right databases when performing lifecycle analysis.

- CIMPA is working on how AI can be used for assessing environmental impacts and the value of the Digital Product Passport for products in service to extend its traceability

Want to learn more?

Here are some links related to the topics discussed in our meeting:

- CIMPA’s theme page on the PLM Green website: PLM and Circular Economy

- CIMPA’s commitments towards A sustainable, human and guiding approach

- Sopra Steria, CIMPA’s parent company: INSIDE #8 magazine

Conclusion

The discussion was insightful, given the advanced environment in which CIMPA consultants operate compared to other manufacturing industries. Our dialogue offered valuable lessons from the aerospace industry that others can draw on to advance and better understand their sustainability initiatives.

Recently, I noticed I reduced my blogging activities, as many topics have already been discussed and repeatedly published without new content.

With the rise of GenAI and ChatGPT, I believe my PLM feeds are flooded with AI-generated blog posts.

The ChatGPT option

Most companies are not frontrunners in using extremely modern PLM concepts, so you can type risk-free questions and get common-sense answers.

I just tried these five questions:

- Why do we need an MBOM in PLM, and which industries benefit the most?

- What is the difference between a PLM system and a PLM strategy?

- Why do so many PLM projects fail?

- Why do so many ERP projects fail?

- What are the changes and benefits of a model-based approach to product lifecycle management?

Note: Questions 3 and 4 have very similar causes and impacts, although slightly different, which is to be expected given the scope of the domain.

All these questions provided enough information for a blog post based on the answer. This illustrates that if you are writing about current best practices in the field, you can stop writing: the knowledge is already there.

PLM in real life

Recently, I had several discussions about which skills a PLM expert should have or which topics a PLM project should address.

PLM for the individual

For the individual, there are often certifications to obtain. Roger Tempest has been fighting for PLM professional recognition through certification – a challenge due to the broad scope and possibilities. Read more about Roger’s work in this post: PLM is complex (and we have to accept it?)

PLM vendors and system integrators often certify their staff or resellers to guarantee the quality of their solution delivery. As a result, topics that do not serve the vendor’s or integrator’s business purpose may be missed.

Asking ChatGPT about the required skills for a PLM expert, these were the top 5 answers:

- Technical skills

- Domain Knowledge

- Analytical and Problem-Solving Skills

- Interpersonal and Management Skills

- Strategic Thinking

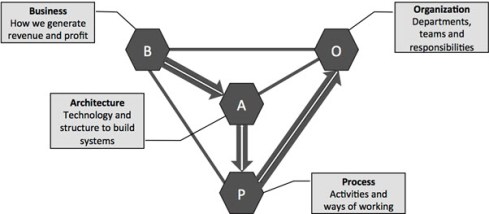

It was interesting to see the order proposed by ChatGPT: first the tools (technology), then the processes (domain knowledge / analytical thinking), and last the people and business (strategy and interpersonal and management skills). It is hard to find all these skills in a single person.

Although we want people to be that broad in their skills, job offers mainly look for an expert in one domain, be it strategy, communication, industry or technology. To get an impression of the skills, read my PLM and Education concluding blog post.

Now, let’s see what it means for an organization.

PLM for the organization

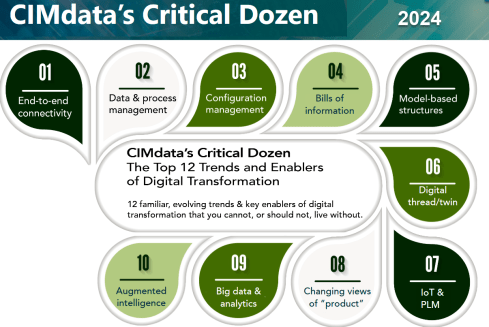

In this area, one of the most consistent frameworks I have seen over time is CIMdata’s Critical Dozen. Although it refers less to skills and more to trends and enablers, it describes what a company should invest in (educating people and building skills) to support a successful digital transformation in the PLM domain.

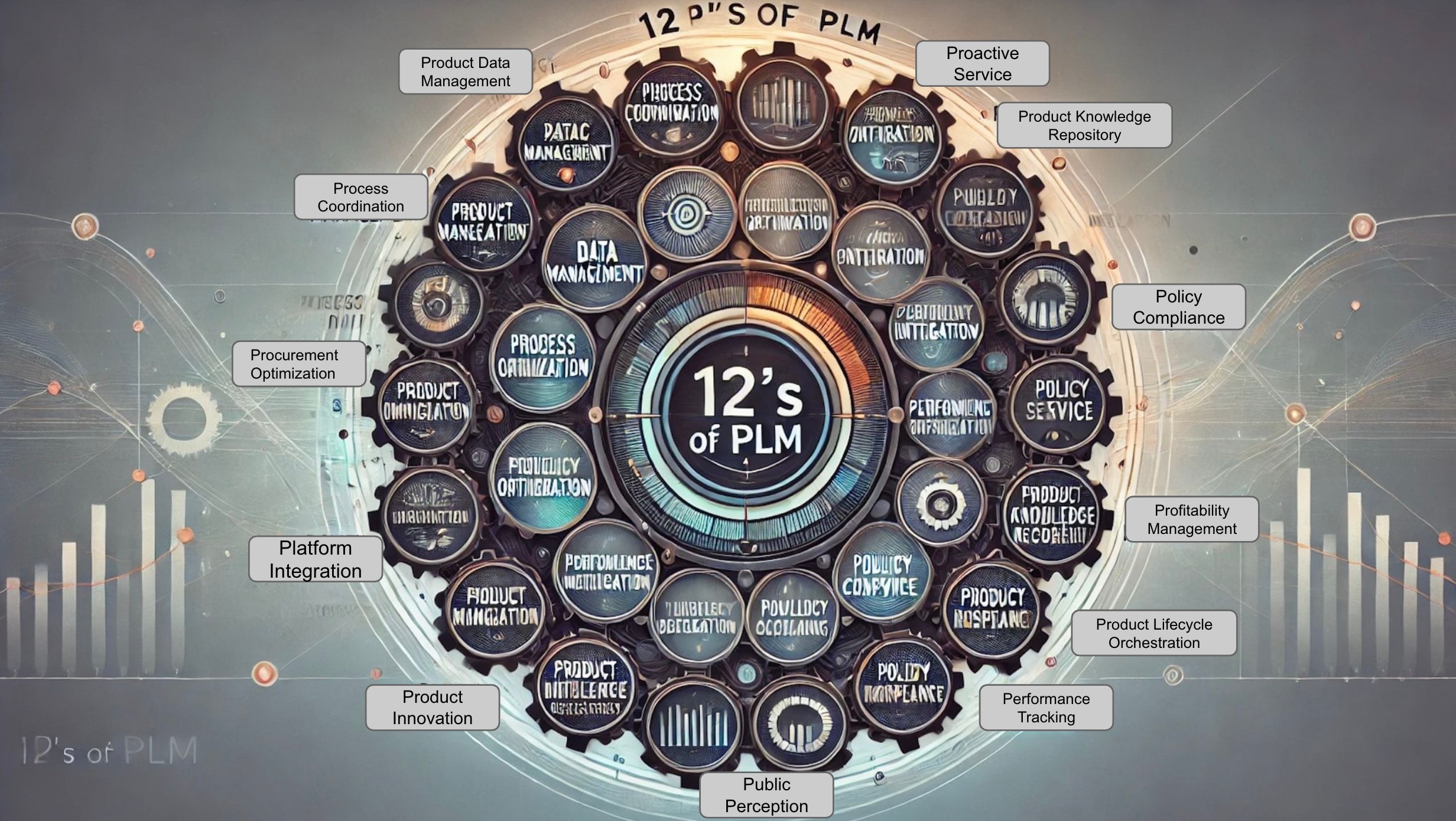

Oleg Shilovitsky’s recent blog post, The 12 “P”s of PLM Explained by Role: How to Make PLM More Than Just a Buzzword, describes in an AI manner the various aspects of the term PLM using 12 P-words, reacting to Lionel Grealou’s post Making PLM Great Again.

The challenge I see with these types of posts is: “OK, what to do now? Where to start?”

I believe where to start in the first place is commonly agreed upon.

Everything starts from having a purpose and a vision. And this vision should be supported by a motivating story about the WHY that inspires everyone.

It is teamwork to define such a strategy, communicate it through a compelling story and make it personal. An excellent book to read is Make it personal by Dr. Cara Antoine; click on the image to discover the content and find my review explaining why I believe this book is so compelling.

An important reason why we have to make transformations personal is that we are dealing, first of all, with human beings. And human beings are driven by emotions first, even before reason kicks in. We see it everywhere, and unfortunately also in politics.

The HOW from real life

This question cannot be answered by external PLM vendors, consultants or system integrators. Forget the Out-of-the-Box templates or the industry best practices (from the past), but start from your company’s culture and vision, introducing step-by-step new technologies, ways of working and business models to move towards the company’s vision target.

Building the HOW is not an easy journey, and to illustrate the variety of skills needed to be successful, I worked with Share PLM on their Series 2 podcast. You can find the complete overview here. There is one more to come to conclude this year.

Our focus was to speak only with PLM experts from the field, understanding their day-to-day challenges with a focus on HOW they did it and WHAT they learned.

And this is what we learned:

Unveiling FLSmidth’s Industrial Equipment PLM Transformation: From Projects to Products

It was our first episode of Series 2, and we spoke with Johan Mikkelä, Head of the PLM Solution Architecture at FLSmidth.

FLSmidth provides the global mining and cement industries with equipment and services. It is very much an ETO (Engineer-to-Order) business moving towards CTO (Configure-to-Order).

We discussed their Industrial Equipment PLM Transformation and the impact it has made.

Start With People: ABB’s Engineering Approach to Digital Transformation

We spoke with Issam Darraj, who shared his thoughts on human-centric digitalization. Issam talks us through ABB’s engineering perspective on driving transformation and discusses the importance of focusing on your people. Our favorite quote:

To grow, you need to focus on your people. If your people are happy, you will automatically grow. If your people are unhappy, they will leave you or work against you.

Enabling change: Exploring the human side of digital transformations

We spoke with Antonio Casaschi as he shared his thoughts on the human side of digital transformation. When discussing what makes a PLM expert, he agrees it is difficult to define. Our favorite part here:

“I see a PLM expert as someone with a lot of experience in organizational change management. Of course, maybe people with a different background can see a PLM expert as someone with a lot of knowledge of how you develop products, all the best practices around products, etc. We first need to agree on what a PLM expert is, and then we can agree on how you become an expert in such a domain.”

Revolutionizing PLM: Insights from Yousef Hooshmand

With Dr. Yousef Hooshmand, author of the paper From a Monolithic PLM Landscape to a Federated Domain and Data Mesh, who has over 15 years of experience in the PLM domain and is currently PLM Lead at NIO, we discussed the complexity of digital transformation in the PLM domain and how to deal with legacy while implementing a user-centric, data-driven future.

My favorite quote: “The end of the Single Source of Truth; now it is about the Nearest Source of Truth and the Single Source of Change.”

Steadfast Consistency: Delving into Configuration Management with Martijn Dullaart

Martijn Dullaart, the man behind the blog MDUX: The Future of CM and author of the book The Essential Guide to Part Re-Identification: Unleash the Power of Interchangeability and Traceability, has been active in both the PLM and CM domains. With Martijn, we discussed the similarities and differences between PLM and CM and why organizations need to be educated on the topic of CM.

The ROI of Digitalization: A Deep Dive into Business Value with Susanna Maëntausta

With Susanna Maëntausta, we discussed how to implement PLM in non-traditional manufacturing industries, such as the chemical and pharmaceutical industries.

Susanna teaches us to ensure PLM projects are value-driven, connecting business objectives and KPIs to the implementation and execution steps in the field. Susanna is highly skilled in connecting people at any level of the organization.

Narratives of Change: Grundfos Transformation Tales with Björn Axling

As Head of PLM and part of the Group Innovation management team at Grundfos, Björn Axling aims to drive a Group-wide, cross-functional transformation into more innovative, more efficient, and data-driven ways of working through the product lifecycle, from ideation to end-of-life.

In this episode, you will learn about the various aspects that come together when leading such a transformation in terms of culture, people, communication, and modern technology.

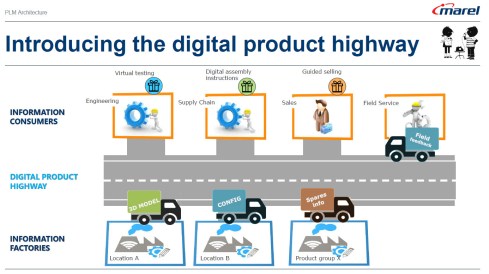

The Next Lane: Marel and the Digital Product Highway with Roger Kabo

With Roger Kabo, we discussed the steps needed to replace a legacy PLM environment and be open to a modern, federated, and data-driven future.

Step 1: Start with the end in mind. Every successful business starts with a clear and compelling vision. Your vision should be specific, inspiring, and something your team can rally behind.

Next, build on value and do it step by step.

How do you manage technology and data when you have a diverse product portfolio?

We talked with Jim van Oss, the former CIO of Moog Inc., for a deep dive into the fascinating world of technology transformations.

Key Takeaway: Evolving technology requires a clear strategy!

Jim underscores the importance of having a north star to guide your technological advancements, ensuring you remain focused and adaptable in an ever-changing landscape.

Diverse Products, Unified Systems: MBSE Insights with Max Gravel from Moog

We discussed the future of the Model-Based approaches with Max Gravel – MBD at Gulfstream and MBSE at Moog.

Max Gravel, Manager of Model-Based Engineering at Moog Inc., who is also active in modern CM, emphasizes that understanding your company’s goals with MBD is crucial.

There’s no one-size-fits-all solution: it’s about tailoring the strategy to drive real value for your business. The tools are available, but the key lies in addressing the right questions and focusing on what matters most. A great, motivating story containing all the aspects of digital transformation in the PLM domain.

Customer-First PLM: Insights on Digital Transformation and Leadership

With Helene Arlander, who has been involved in big transformation projects in the telecom industry, starting from a complex legacy environment and implementing new data-driven approaches, we discussed the importance of managing product portfolios end-to-end and the leadership strategies needed to engage the people in charge.

We also discussed the role of AI in shaping the future of PLM and the importance of vision, diverse skill sets, and teamwork in transformations.

Conclusion

I believe the time of traditional blogging is over – current PLM concepts and issues can easily be queried using ChatGPT-like solutions. A fundamental understanding of what you can do now comes from learning from and listening to people, which is not as fast as a TikTok video or Insta message. For me, a podcast is a comfortable method of holistic learning.

Let us know what you think and who should be in Season 3.

And for my friends in the United States – Happy Thanksgiving and think about the day after ……..

Due to other activities, I could not immediately share the second part of the review related to the PLM Roadmap / PDT Europe conference, held on 23-24 October in Gothenburg. You can read my first post, mainly about Day 1, here: The weekend after PLM Roadmap/PDT Europe 2024.

There were several interesting sessions that I will not mention here, as I want to focus on forward-looking topics, a mix of (federated) data-driven PLM environments and the applicability of AI, while staying at around 1500 words.

R-evolutionizing PLM and ERP and Heliple

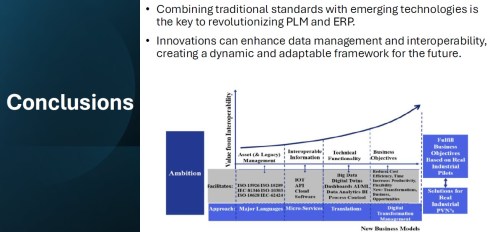

Cristina Paniagua from the Luleå University of Technology closed the first day of the conference, giving us food for thought to discuss over dinner. Her session, describing the Arrowhead fPVN project, fitted nicely with the concepts of the Federated PLM Heliple project presented by Erik Herzog also on Day 2.

They are both research projects related to the future state of a digital enterprise. Therefore, it makes sense to treat them together.

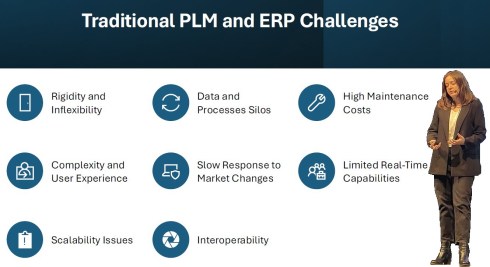

Cristina’s session started with sharing the challenges of traditional PLM and ERP systems:

These statements align with the drivers of the Heliple project. The PLM and ERP systems—Systems of Record—provide baselines and traceability. However, Systems of Record have not historically been designed to support real-time collaboration or to create an attractive user experience.

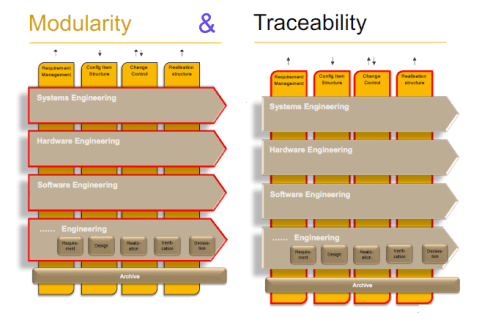

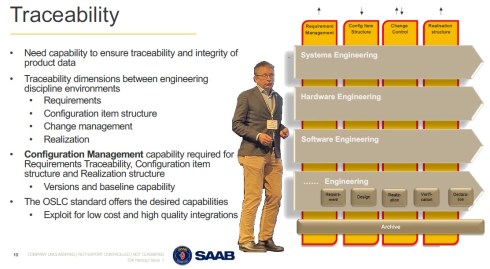

The Heliple project focuses on connecting various modules—the horizontal bars—for systems engineering, hardware engineering, etc., as real-time collaboration environments that can be highly customized and replaceable if needed. The Heliple project explored the usage of OSLC to connect these modules, the Systems of Engagement, with the Systems of Record.

By using Lynxwork as a low-code wrapper to develop the OSLC connections and map them to the needed business scenarios, the team concluded that this approach is affordable for businesses.

Now, the Heliple team aims to expand their research with industry-scale validation through the Demoiple project (validating that the Heliple-2 technology can be implemented and accredited in Saab Aeronautics’ operational IT), combined with the Nextiple project, where they will investigate the role of heterogeneous information models/ontologies for heterogeneous analysis.

If you are interested in participating in Nextiple, don’t hesitate to contact Erik Herzog.

Cristina’s Arrowhead flexible Production Value Network (fPVN) project aims to provide autonomous and evolvable information interoperability through machine-interpretable content for fPVN stakeholders. In less academic words: building a digital, data-driven infrastructure.

The resulting technology is projected to impact manufacturing productivity and flexibility substantially.

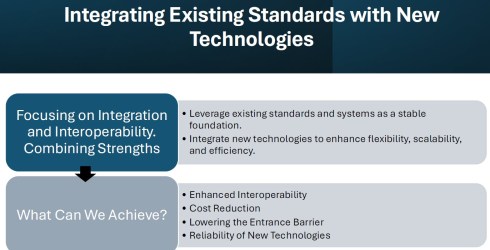

The exciting starting point of the Arrowhead project is that it wants to use existing standards and systems as a foundation and, on top of that, create a business- and user-oriented layer using modern technologies: micro-services to support real-time processing, and semantic technologies, ontologies, system modeling, and AI for data translations and learning. This is a much broader and more ambitious scope than the Heliple project.

I believe that in our PLM domain, this resonates with current discussions you will find on LinkedIn, too. @Oleg Shilovitsky, @Dr. Yousef Hooshmand, @Prof. Dr. Jörg W. Fischer and Martin Eigner are a few of the people steering these discussions. I consider it a perfect match for one of the images I shared about the future of the digital enterprise.

Potentially, there are five platforms with their own internal ways of working, a mix of systems of record and systems of engagement, supported by an overlay of several Systems of Engagement environments.

I previously described these dedicated environments, e.g., OpenBOM, Colab, Partful, and Authentise. These solutions could also be dedicated apps supporting a specific ecosystem role.

See below my artist’s impression of how a Service Engineer would work in their app, connected to CRM, PLM and ERP platform datasets:

The exciting part of the Arrowhead fPVN project is that it wants to explore the interactions between systems and user roles based on existing mature standards instead of leaving the connections to software developers.

Cristina mentioned some of these standards below:

I greatly support this approach as, historically, much knowledge and effort has been put into developing standards to support interoperability. Maybe not in real-time, but the embedded knowledge in these standards will speed up the broader usage. Therefore, I concur with the concluding slide:

A final comment: Industrial users must push for these standards if they do not want a future vendor lock-in. Vendors will do what the majority of their customers ask for but will also keep their customers’ data in proprietary formats to prevent them from switching to another system.

Accelerated Product Development Enabled by Digitalization

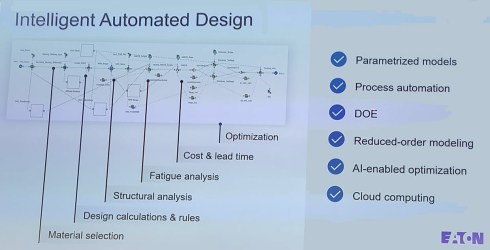

The keynote session on Day 2, delivered by Uyiosa Abusomwan, Ph.D., Senior Global Technology Manager – Digital Engineering at Eaton, was a visionary story about the future of engineering.

With its broad range of products, Eaton is exploring new, innovative ways to accelerate product design by modeling the design process and applying AI to narrow design decisions and customer-specific engineering work. The picture below shows the areas of attention needed to model the design processes. Uyiosa mentioned the significant beneficial results that have already been reached.

Together with generative design, Eaton works towards modern digital engineering processes built on models and knowledge. His session was complementary to the Heliple and Arrowhead story. To reach such a contemporary design engineering environment, it must be data-driven and built upon open PLM and software components to fully use AI and automation.

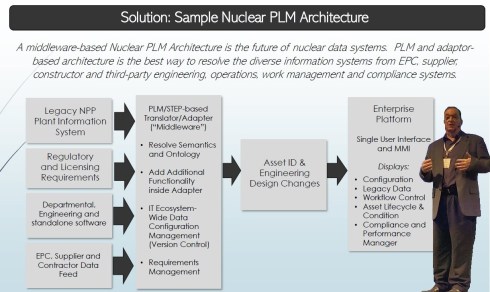

“Next Gen” Life Cycle Management in Next-Gen Nuclear Power and LTO Legacy Plants

Kent Freeland’s presentation was a trip down memory lane when he discussed the issues with Long Term Operations (LTO) of legacy nuclear plants.

I spent several years in Ringhals (Sweden) discussing and piloting the setup of a PLM front-end next to the MRO (Maintenance Repair Overhaul) system. As nuclear plants developed in the sixties required a longer lifecycle than anticipated, they needed access to the right design and operational data, and maintenance and upgrade changes in the plant had to be planned and controlled. The design data is often lacking; it resides at the EPC contractor or has been stored in a document management system with limited retrieval capabilities.

See also my 2019 post: How PLM, ALM, and BIM converge thanks to the digital twin.

Kent described the same challenges I experienced – we must have worked in parallel universes – and explained that, for the future, we need a digitally connected infrastructure for both plant design and maintenance artifacts, as envisioned below:

The solution reminded me of a lecture I saw at the PI PLMx 2019 conference, where the Swedish ESS facility demonstrated its Asset Lifecycle Data Management solution based on the 3DEXPERIENCE platform.

You can still find the presentation here: Henrik Lindblad Ola Nanzell ESS – Enabling Predictive Maintenance Through PLM & IIOT.

Kent also focused on the relevant standards to support a “Single Source of Truth” concept, where, after all the federated PLM discussions, I would go for:

“The nearest source of truth and a single source of Change”

assuming this makes more sense in a digitally connected enterprise.

Why do you need to be SMART when contracting for information?

Rob Bodington’s presentation was complementary to Kent Freeland’s presentation. Rob, a technical fellow at Eurostep, described the challenge of information acquisition when working with large assets that require access to the correct data once the asset is in operation. The large asset could be a nuclear plant or an aircraft carrier.

In the ideal world, the asset owner wants to have a digital twin of the asset fed by different data sources through a digital thread. Of course, this environment will only be reliable when accurate data is used and presented.

Getting accurate data starts with the information acquisition process, and Rob explained that this needed to be done SMARTly – see the image below:

Rob zoomed in on the SMART keywords and the challenges the various standards pose in making the information SMARTly accessible, like the ISO 10303 / PLCS standard, the CFIHOS exchange standard and more. And then there is the ISO 8000 standard about data quality.

Click on the image to get smart.

Rob believes that AI might be the silver bullet, as it might help understand the data quality, ontology and context of the data, and even improve contracting by generating data clauses for contracts….

And there was a lot of AI ….

There was a dazzling presentation from Gary Langridge, engineering manager at Ocado, explaining their Ocado Smart Platform (OSP), which leverages AI, robotics, and automation to tackle the challenges of online grocery and allow their clients to excel in performance and customer responsiveness.

There was a significant AI component in his presentation, and if you are tired of reading, watch this video.

But there was more AI – of the 25 sessions at this conference, 19 mentioned the potential or usage of AI somewhere in their talk – that is more than 75 %!

There was a dedicated closing panel discussion on the real business value of Artificial Intelligence in the PLM domain, moderated by Peter Bilello, with selected speakers from the conference as panelists: Sandeep Natu (CIMdata), Lars Fossum (SAP), Diana Goenage (Dassault Systemes) and Uyiosa Abusomwan (Eaton).

The discussion was realistic and helpful for the audience. It is clear that to reap the benefits, companies must explore the technology and use it to create valuable business scenarios. One could argue that many AI tools are already available, but the challenge remains that they have to run on reliable data. The data foundation is crucial for a successful outcome.

An interesting point in the discussion was the statement from Diane Goenage, who repeatedly warned that using LLM-based solutions has an environmental impact due to the amount of energy they consume.

We have a similar debate in the Netherlands – do we want the wind energy consumed by data centers (the big tech companies with a minimum workforce in the Netherlands), or should the Dutch citizens benefit from renewable energy resources?

Conclusion

There were even more interesting presentations during these two days, and you might have noticed that I did not promote my own session. This is partly because I have already reached 1600 words, but also because I want to spend more time on its content separately.

It was about PLM and Sustainability, a topic often covered at this conference. Unfortunately, only 25 % of the presentations touched on sustainability, and the AI hype overshadowed the topic.

Hopefully, it is not a sign of the times.

I am sharing another follow-up interview about PLM and Sustainability with a software vendor or implementer. Last year, in November 2023, Klaus Brettschneider and Jos Voskuil from the PLM Green Global Alliance core team spoke with Transition Technologies PSC about their GreenPLM offering and their first experiences in the field.

As we noticed with most first interviews, sustainability was a topic of discussion in the PLM domain, but it was still in the early discovery phases for all of us.

Last week, we spoke again with Erik Rieger and Rafał Witkowski, both working for Transition Technologies PSC, a global IT solution integrator in the PLM world known for their PTC implementation services. The exciting part of this discussion is that system integrators are usually more directly connected to their customers in the field and, therefore, can be a source of insight into what is happening.

ecoPLM and more

Erik is a long-term PLM expert, and Rafał is the PLM Practice Lead for Industrial Sustainability. In the interview below, they shared their experiences with a first implementation pilot in the field and the value of their ecoPLM offering in the context of the broader PTC portfolio. And of course, we discussed topics closely related to these points and put them into the broader context of sustainability.

Enjoy the 34-minute discussion, and you are always welcome to comment or start a discussion with us.

The slides shown in this presentation and some more can be downloaded HERE.

What I learned

- The GreenPLM offering has been renamed ecoPLM, as TT PSC customers are focusing on developing sustainable products; currently, it supports designers in understanding the carbon footprint of their products.

- They are currently in an MVP phase with a Tier 1 automotive supplier to validate and improve their solution, and more customers are adding Design for Sustainability to their objectives, besides Time to Market, Quality and Cost.

- Erik will give a keynote speech at the Green PLM conference on November 14th in Berlin. The conference targets a German-speaking audience, although the papers are in English. You can still register and find more info here.

- TT PSC is one of the partners completing the PTC sustainability offering and works closely with PTC’s product management.

- A customer quote: “Sustainability makes PLM sexy again”

Want to learn more?

Here are some links related to the topics discussed in our meeting:

- YouTube: ecoPLM: your roadmap for eco-friendly product development

- ecoPLM – a sustainable product development website

- YouTube: Win the Net-Zero Race with PLM (and PTC)

Conclusions

We are making great progress in supporting the design and delivery of more sustainable products. As Rafał Witkowski mentioned, sustainability goes beyond marketing – the journey has started. What do you see in your company?

It was a great pleasure to attend my favorite vendor-neutral PLM conference this year in Gothenburg, with approximately 150 attendees, most of whom have expertise in the PLM domain.

We had the opportunity to learn new trends, discuss reality, and meet our peers.

The theme of the conference was: Value Drivers for Digitalization of the Product Lifecycle, a topic I have been discussing in my recent blog posts, as we need to help and educate companies to understand the importance of digitalization for their business.

The two-day conference covered various lectures – view the agenda here – and of course the topic of AI was part of half of the lectures, giving the attendees a touch of reality.

In this first post, I will cover the main highlights of Day 1.

Value Drivers for Digitalization of the Product Lifecycle

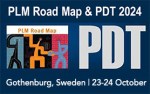

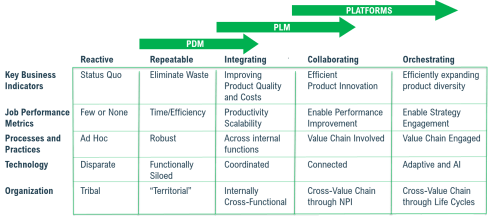

As usual, the conference started with Peter Bilello, president & CEO of CIMdata, stressing again that when implementing a PLM strategy, the maximum result comes from a holistic approach: look at the big picture and don’t just focus on one topic.

It was interesting to see the classic graph (below) explaining the benefits of the end-to-end approach again – I believe it is still valid for most companies; however, as I shared in my session the next day, implementing the concepts of a Product-Service System will require more of a DevOps type of graph (more next week).

Next, Peter went through the CIMdata’s critical dozen with some updates. You can look at the updated 2024 image here.

Some of the changes: Digital Thread and Digital Twin have been merged, as Digital Twins do not run on documents. And instead of focusing on Artificial Intelligence only, CIMdata introduced Augmented Intelligence, as we should also consider solutions that augment human activities, not just replace them.

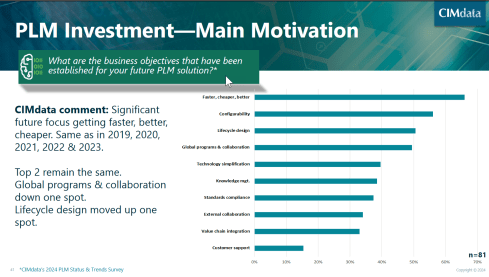

Peter also shared the results of a recent PLM survey where companies were asked about their main motivation for PLM investments. I found the result a little discouraging for several reasons:

The number one topic is still faster, cheaper and better – almost 65 % of the respondents see this as their priority. This illustrates that Sustainability has not yet reached the level of urgency; perhaps the topic is hidden under standards compliance.

Many of the companies with Sustainability in their mission should understand that a digital PLM infrastructure is the foundation for most initiatives, like Lifecycle Analysis (LCA). Sustainability is more than a part of standards compliance, assuming it was even meant there.

The second disappointing observation regarding the understanding of PLM is that only 15 % of the companies mention customer support. Again, connecting your products to your customers is the first step towards a DevOps approach, where you can optimize your product offering to what the customer really wants.

Digital Transformation of the Value Chain in Pharma

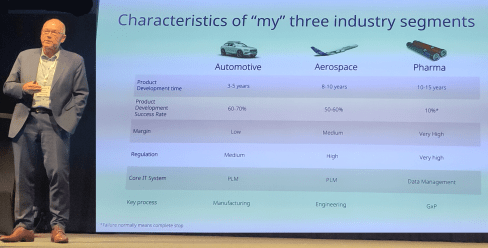

The second keynote was from Anders Romare, Chief Digital and Information Officer at Novo Nordisk. Anders has participated in the PDT conference before. See my 2016 PLM Roadmap/PDT Europe post, where Anders presented on behalf of Airbus: Digital Transformation through an e2e PLM backbone.

Anders started by sharing some of the main characteristics of the companies he has worked for: Volvo, Airbus and now Novo Nordisk. It is interesting to compare these characteristics, as they say a lot about each industry’s focus. See below:

Anders is now responsible for digital transformation in Novo Nordisk, which is a challenge in a heavily regulated industry.

One of the focus areas for Novo Nordisk in 2024 is also Artificial Intelligence, as you can see from the image to the left.

Like many others at this conference, Anders mentioned that AI can only be applied when it runs on top of accurate data.

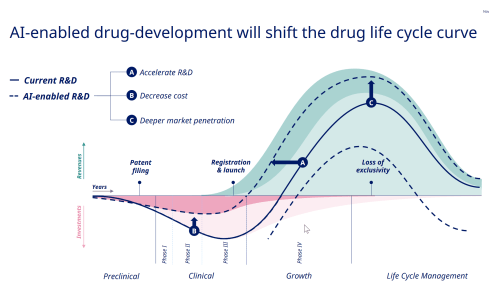

Understanding the potential of AI, they identified 59 areas where AI can create value for the business, and it is interesting to compare the traditional PLM curve Peter shared in his session with the potential AI-enabled drug-development curve as presented by Anders below:

Next, Anders shared some of the example cases of this exploration, and if you are interested in the details, visit their tech.life site.

Regarding the engineering framing of PLM, it was interesting to hear Anders, who had a long history in PLM before joining Novo Nordisk, reply to a question from the audience that he would never talk about PLM at the management level. This is very much aligned with my Don’t mention the P** word post.

A Strategy for the Management of Large Enterprise PLM Platforms

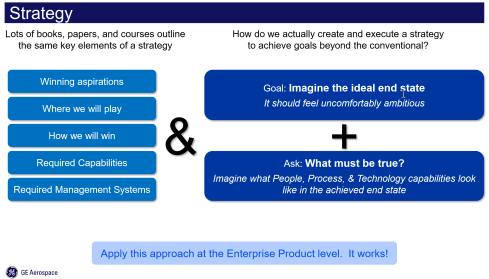

One of the highlights for me on Day 1 was Jorgen Dahl’s presentation. Jorgen, a senior PLM director at GE Aerospace, shared their journey towards a single PLM approach, needed due to changes in the business. Addressing the need for a digital thread also comes with an increased need for uptime.

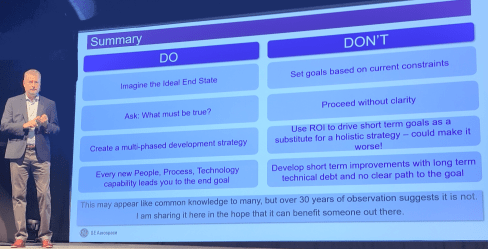

I like his strategy-to-execution approach, as shown in the image below, as it contains the most important topics: the business vision and understanding, the imagined end state, and What must be True?

In my experience, the three blocks are iteratively connected. When describing the strategy, you might not be able to identify the required capabilities and management systems yet.

But then, when you start to imagine the ideal end state, you will have to consider them. And for companies, it is essential to be ambitious or, as Jorgen stated, uncomfortably ambitious. Go for 75 % to almost 100 % to be true. Also, asking What must be True? is an excellent way to involve people and let them creatively explore the next steps.

Note: This approach does not provide all the details, as it will be a multiyear journey of learning and adjusting towards the future. Therefore, the strategy must be aligned with the culture to avoid continuous top-down governance of the details. In that context, Jorgen stated:

“Culture is what happens when you leave the room.”

It is a more positive statement than Peter Drucker’s famous quote: “Culture eats strategy for breakfast.”

Jorgen’s concluding slide may look like common knowledge; however, I believe his easy-to-digest points will help all organizations step back, look at their initiatives, and see where they can improve.

How a Business Capability Model and Application Portfolio Management Support Through Changing Times

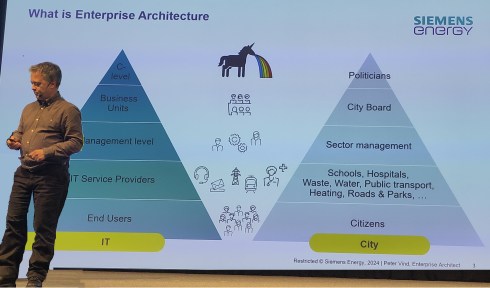

Peter Vind’s presentation connected nicely to the presentation from Jorgen Dahl. Peter, an enterprise architect at Siemens Energy, started by explaining where the enterprise architect fits in an organization, comparing it to a city.

In his entertaining session, he mentioned he has to deal with the unicorns at the C-level, who, like politicians in a city, sometimes have the most “innovative” ideas – can they be realized?

Peter explained how they used Business Capability Modeling as Siemens Energy went through various business stages: first the carve-out from Siemens AG, and later the merger with Siemens Gamesa. Their challenge was to understand which capabilities remain, which are new, and which overlap, during both the carve-out and the merger.
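To make the overlap question a little more concrete, here is a minimal Python sketch using plain set operations. The capability names are hypothetical placeholders, not Siemens Energy’s actual capability model, and a real capability model is hierarchical rather than a flat list; the sketch only shows the basic comparison of two capability inventories during a merger.

```python
# Hypothetical capability inventories of two organizations being merged.
# The names are illustrative placeholders, not an actual capability model.
company_a = {"Product Engineering", "Service Management", "Grid Solutions", "Procurement"}
company_b = {"Product Engineering", "Service Management", "Wind Turbine Design", "Procurement"}


def compare_capabilities(a: set[str], b: set[str]) -> dict[str, set[str]]:
    """Split two flat capability sets into overlapping and unique parts."""
    return {
        "overlapping": a & b,  # present in both: candidates for consolidation
        "only_in_a": a - b,    # capabilities that remain from organization A
        "only_in_b": b - a,    # capabilities that organization B brings in
    }


result = compare_capabilities(company_a, company_b)
for category, capabilities in result.items():
    print(f"{category}: {sorted(capabilities)}")
```

In practice, each overlapping capability is where the real discussion starts: which applications support it in each organization, and which of them should remain.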

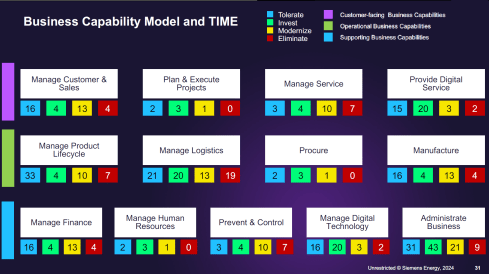

The business capability modeling leads to a classification of the applications used at different levels of the organization, such as customer-facing, operational, or supporting business capabilities.

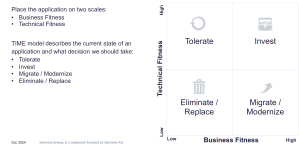

Next, for the lifecycle of the applications, the TIME approach (Tolerate, Invest, Migrate, Eliminate) was used, meaning that each application was mapped against its business fitness and technical fitness.
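The TIME mapping is essentially a two-axis classification. The Python below is a minimal sketch under stated assumptions: fitness scores on a 0 to 1 scale, a single threshold of 0.5 separating high from low, and the commonly cited quadrant reading where high business fitness with low technical fitness means Migrate and the reverse means Tolerate. Sources phrase the quadrants slightly differently, and the scoring criteria Siemens Energy used are not described here, so the application names and scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Application:
    name: str
    business_fitness: float   # assumed score from 0.0 (poor) to 1.0 (excellent)
    technical_fitness: float  # assumed score from 0.0 (poor) to 1.0 (excellent)


def time_quadrant(app: Application, threshold: float = 0.5) -> str:
    """Assign a TIME quadrant, using one common reading of the model."""
    high_business = app.business_fitness >= threshold
    high_technical = app.technical_fitness >= threshold
    if high_business and high_technical:
        return "Invest"      # valuable and technically sound
    if high_business:
        return "Migrate"     # valuable, but running on weak technology
    if high_technical:
        return "Tolerate"    # technically sound, limited business value
    return "Eliminate"       # neither valuable nor technically sound


# Hypothetical portfolio; names and scores are invented for illustration.
portfolio = [
    Application("Enterprise PLM", 0.9, 0.8),
    Application("Legacy PDM", 0.8, 0.2),
    Application("Document archive", 0.3, 0.7),
    Application("Departmental spreadsheet tool", 0.2, 0.1),
]

for app in portfolio:
    print(f"{app.name}: {time_quadrant(app)}")
```

A real assessment would derive each score from several weighted criteria; the single threshold simply mirrors the four-quadrant diagram.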

The result could look like the mapping shown below – a comprehensive overview of where the action is.

It is a rational approach; however, Peter mentioned that we should also be aware of the HiPPOs in an organization. If there is a HiPPO (Highest Paid Person’s Opinion) in play, you might face a political battle too.

It was a great educational session illustrating the need for an Enterprise Architect, the value of business capabilities modeling and the TIME concept.

And some more …

There were several other exciting presentations on Day 1; however, as not all presentations are publicly available, I cannot discuss them in detail and will only share some highlights from my notes.

Driving Trade Compliance and Efficiency

Peter Sandeck, Director of Project Management at TE Connectivity, shared what they did to motivate engineers to embrace their Jurisdiction and Classification Assessment (JCA) process. Peter showed how, through a Minimum Viable Product (MVP) approach and by listening to the end users, they reached a higher Customer Satisfaction (CSAT) score after several iterations of the solution developed for the JCA process.

This approach is an excellent example of an agile method that involves engineers. My remaining question is whether the same engineers will, in the short term, also be pushed to perform lifecycle assessments. That means more work; however, I believe that if you make it personal, the same MVP approach could work again.

Value of Model-Based Product Architecture

Jussi Sippola, Chief Expert, Product Architecture Management & Modularity at Wärtsilä, presented an excellent story about the advantages of a more modular product architecture. Historically, products were delivered based on customer requirements through the order fulfillment process; now, in parallel, there is a portfolio management process that defines the platform of modules, features and options.

Jussi mentioned that they were able to reduce the number of parts by 50 % while still maintaining the same level of customer capabilities. In addition, thanks to modularity, they were able to reduce the production lead time by 40 % – essential numbers if you want to remain competitive.

Conclusion

Day 1 was a day where we learned a lot as an audience, and in addition, the networking time and dinner in the evening were precious for me and, I assume, also for many of the participants. In my next post, we will see more about new ways of working, the AI dream and Sustainability.

Recently, I attended several events related to the various aspects of product lifecycle management; most of them were tool-centric, explaining the benefits and values of their products.

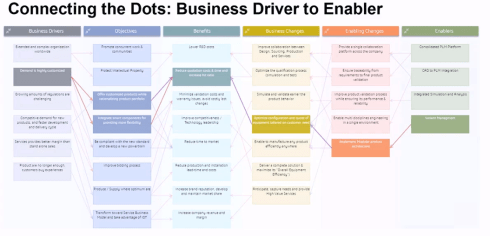

In parallel, I am working with several companies, helping their PLM teams make their plans understood by upper management, which has long been my mission.

However, nowadays, people working in the business feel increasingly challenged and pained by not being able to respond adequately to upcoming business demands.

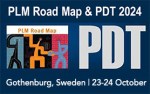

The image below has been shown many times, and each time, its context becomes more relevant.

Too often, an evolutionary mindset of small steps is chosen instead of looking toward the future and reasoning back to what needs to be done.

Let me share some experiences and potential solutions.

Don’t use the P** word!

The title of this post is one of the most essential points to consider. When you use the term PLM, the discussion is usually framed as a debate about purchasing or installing a system, the PLM system, which is seen as an engineering tool.

PLM vendors, like Dassault Systèmes and Siemens, have recognized this, and the word PLM is no longer on their home pages.

They are now delivering experiences or digital industries software.

Other companies, such as PTC and Aras, broadened the discussion by naming other domains, such as manufacturing and services, all connected through a digital thread.

The challenge for all these software vendors is to explain why a company should consider buying their products. A growing issue for them is also why a company would replace its existing PLM system with another one, as there is so much legacy.

For all of these vendors, success comes when champions inside the targeted company understand the technology and can translate it into their daily work.

Here, we meet the internal PLM team, which is motivated by the technology and wants to spread the message throughout the organization, often with no or limited success, as the value and context they have in mind are not understood or felt to be urgent.

Lesson 1:

Don’t use the word PLM in your management messaging.

In some of the current projects I have seen, people talk about a digital highway or a digital infrastructure to overcome this hurdle. For example, listen to the SharePLM podcast with Roger Kabo from Marel, who talks about their vision and digital product highway.

As soon as you use the word PLM, most people think about a (costly) system, as this is how PLM is framed. Engineering, like IT, is often considered a cost center, as money is made by manufacturing and selling products.

According to experts (CIMdata/Gartner), Product Lifecycle Management is considered a strategic approach. However, the majority of people talk about a PLM system. Of course, vendors and system integrators will speak about their PLM offerings.

To avoid this framing, first of all, try to explain what you want to establish for the business. The terms Digital Product Highway or Digital Infrastructure, for example, avoid thinking in systems.

Lesson 2:

Don’t tell your management why they need to reward your project – they should tell you what they need.

This might seem like strange advice; however, you have to realize that, most of the time, people do not talk about details at the management level. There, the conversation is about strategies and business objectives, and you will only get attention when your proposal addresses the business needs. Management should understand the business need and its potential value for the organization. Next, analyzing the business changes and required tools will lead to an understanding of what value the PLM team can bring.

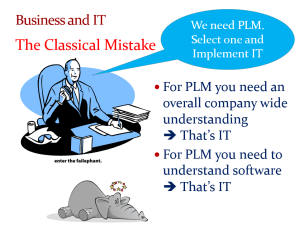

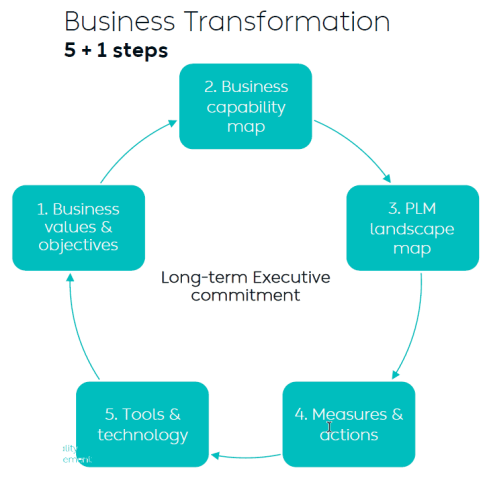

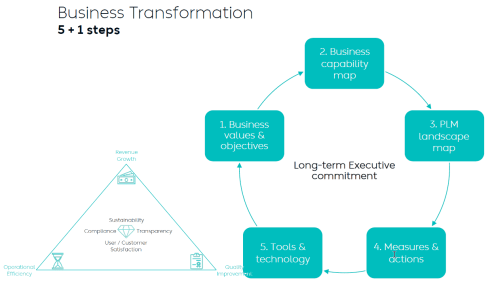

Yousef Hooshmand’s 5 + 1 approach illustrates this perfectly. It is crucial to note that long-term executive commitment is needed to have a serious project, and therefore, the connection to their business objective is vital.

Therefore, if you can connect your project to the business objectives of someone in management, you have the opportunity to get executive sponsorship. This is crucial advice you hear all the time when discussing successful PLM projects.

Lesson 3:

Alignment must come from within the organization.

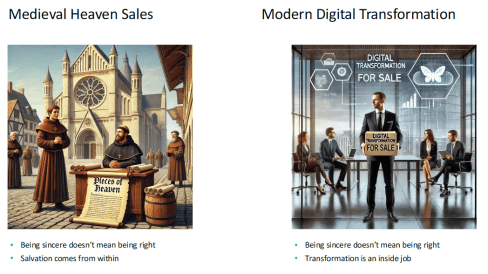

Last week, at the 20th anniversary of the Dutch PLM platform, Yousef Hooshmand gave the keynote speech starting with the images below:

On the left side, we see the medieval Catholic church sincerely selling salvation through indulgences, where, according to the legend, Luther bought hell, demonstrating that salvation comes from within, not from external activities – read the legend here.

On the right side, we see the Digital Transformation expert sincerely selling digital transformation to companies. According to LinkedIn, about 1,170,000 people have the term Digital Transformation in their profile.

As Yousef mentioned, the intentions of these people can be sincere, but here, too, the transformation must come from within the company.