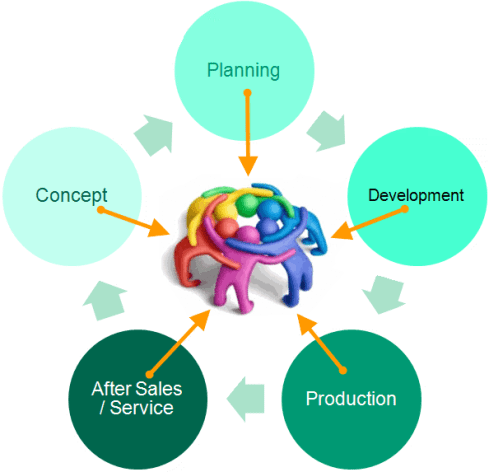

This is my concluding post related to the various aspects of the model-driven enterprise. We went through Model-Based Systems Engineering (MBSE), where the focus was on using models (functional / logical / physical / simulations) to define complex products (systems). Next we discussed Model-Based Definition / Model-Based Enterprise (MBD/MBE), where the focus was on data continuity between engineering and manufacturing by using the 3D Model as a master for design, manufacturing and eventually service information.

And last time we looked at the Digital Twin from its operational side, where the Digital Twin was applied to collect data from and tune physical assets in operation, which is not a typical PLM domain in my opinion.

Now we will focus on two areas where the Digital Twin touches aspects of PLM. I believe these are the most challenging and the most over-hyped areas. These two areas are:

- The Digital Twin used to virtually define and optimize a new product/system or even a system of systems. For example, defining a new production line.

- The Digital Twin used to be the virtual replica of an asset in operation. For example, a turbine or engine.

Digital Twin to define a new Product/System

There might be some conceptual overlap if you compare the MBSE approach and the Digital Twin concept for defining a new product or system to deliver. For me the differentiation is that MBSE is used to master and define a complex system from the R&D point of view, with unknown solution concepts (use hardware or software?) and unknown constraints to be refined and optimized in an iterative manner.

In the Digital Twin concept, it is more about defining a system that should work in the field: how to combine various systems into a working solution, where each of the systems already has a pre-defined set of behavioral / operational parameters, which could be 3D related but also performance related.

You would define and analyze the new solution virtually to discover the ideal solution for performance, costs, feasibility and maintenance. Setting up a virtual model might take more time than traditional ways of working; however, once the models are in place, analyzing and optimizing the solution takes hours instead of weeks, assuming the virtual model is based on a digital thread, not a sequential process of creating and passing documents/files. Virtual solutions allow a company to optimize the solution upfront instead of making costly fixes during delivery, commissioning and maintenance.

Why aren’t we doing this already? It takes more skilled engineers instead of cheaper fixers downstream. The fact that we are used to fixing it later is also an inhibitor for change. Management needs to trust and understand the economic value instead of trying to reduce the number of engineers as they are expensive and hard to plan.

In the construction industry, companies are discovering the power of BIM (Building Information Model), introduced to enhance the efficiency and productivity of all stakeholders involved. Massive benefits can be achieved if the construction of the building and its future behavior and maintenance can be optimized virtually, compared to fixing issues in an expensive way in reality when they pop up.

The same concept applies to process plants or manufacturing plants, where you could virtually run the (manufacturing) process. If the design is done with all the behavior defined (hardware-in-the-loop and software-in-the-loop simulation), the solution can be virtually tested and rapidly delivered without late discoveries and costly fixes.

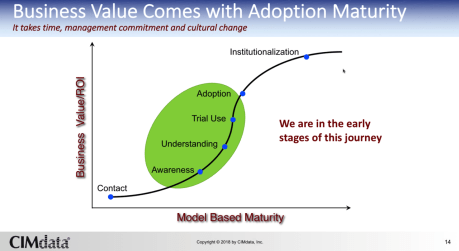

Of course it requires new ways of working. Working with digital connected models is not what engineers learn during their education; we have just started this journey. Therefore organizations should explore on a smaller scale how to create a full Digital Twin based on connected data. This is the ultimate base for the next purpose.

Digital Twin to match a product/system in the field

When you look into the topic of the Digital Twin through the materials provided by the various software vendors, you see all kinds of previews of what is possible: Augmented Reality, Virtual Reality and more. All these presentations show that when you click somewhere in a 3D Model Space, relevant information pops up. Where does this relevant information come from?

Most of the time information is re-entered in a new environment, sometimes derived from CAD but all the metadata comes from people collecting and validating data. Not the type of work we promote for a modern digital enterprise. These inefficiencies are good for learning and demos but in a final stage a company cannot afford silos where data is collected and entered again disconnected from the source.

The main problem: Legacy PLM information is stored in documents (drawings / excels) and not intended to be shared downstream with full quality.

Read also: Why PLM is the forgotten domain in digital transformation.

If a company has already implemented an end-to-end Digital Twin to deliver the solution as described in the previous section, we can understand the data has been entered somewhere during the design and delivery process and thanks to a digital continuity it is there.

How many companies have done this already? For sure not the companies that have been in business for a long time, as their current silos and legacy processes do not cater for digital continuity. By appointing a Chief Digital Officer, the journey might start; the biggest risk is that the Chief Digital Officer will be running yet another silo in the organization.

So where does PLM support the concept of the Digital Twin operating in the field?

For me, the IoT part of the Digital Twin is not the core of a PLM. Defining the right sensors, controls and software are the first areas where IoT is used to define the measurable/controllable behavior of a Digital Twin. This topic has been discussed in the previous section.

The second part where PLM gets involved is twofold:

- Processing data from an individual twin

- Processing data from a collection of similar twins

Processing data from an individual twin

Data collected from an individual twin or collection of twins can be analyzed to extract or discover failure opportunities. An R&D organization is interested in learning what is happening in the field with their products. These analyses lead to better and more competitive solutions.

Predictive maintenance is not necessarily a part of that. When you know that certain parts will fail between 10.000 and 20.000 operating hours, you want to optimize the moment of providing service to reduce downtime of the process and you do not want to replace parts way too early.

The R&D part related to predictive maintenance could be that R&D develops sensors inside this serviceable part that signal the need for maintenance in a much smaller time frame: maintenance needed within 100 hours instead of a bandwidth of 10.000 hours. Or R&D could develop new parts that need less service and guarantee a longer up-time.
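The figures above can be turned into a small calculation. This is only a minimal sketch, not a maintenance model: it assumes a part that fails somewhere between 10.000 and 20.000 operating hours and compares the worst-case part life that is thrown away when you replace at the start of that window with the worst case when an embedded sensor warns 100 hours ahead. The function name and the replace-at-first-warning policy are illustrative assumptions.

```python
from typing import Optional


def worst_case_wasted_hours(earliest_failure: float,
                            latest_failure: float,
                            warning_hours: Optional[float] = None) -> float:
    """Worst-case remaining part life discarded at replacement time.

    Without a sensor, the safe policy assumed here is to replace at the
    earliest possible failure time, so a part that would have lasted until
    `latest_failure` loses the full width of the window.
    With a sensor that warns `warning_hours` before failure (assumption),
    at most `warning_hours` of life is discarded.
    """
    if latest_failure < earliest_failure:
        raise ValueError("latest_failure must be >= earliest_failure")
    window = latest_failure - earliest_failure
    if warning_hours is None:
        return window
    return min(warning_hours, window)


# Figures from the text: a 10.000-hour window versus a 100-hour warning.
no_sensor = worst_case_wasted_hours(10_000, 20_000)
with_sensor = worst_case_wasted_hours(10_000, 20_000, warning_hours=100)
print(no_sensor, with_sensor)
```

Under these assumptions the sensor reduces the worst-case discarded part life from the full bandwidth to the warning time, which is the economic argument for R&D to invest in such sensors.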

For an R&D department the information from an individual Digital Twin might only be relevant if the Physical Twin is complex to repair and the downtime for each individual asset is too high. Imagine a jet engine, a turbine in a power plant or similar. Here a Digital Twin will allow service and R&D to prepare maintenance and to simulate and optimize the actions before performing them in the physical world.

The five potential platforms of a digital enterprise

The second part R&D will be interested in is the behavior of similar products/systems in the field, combined with their environmental conditions. In this way, R&D can discover improvement points for the whole range and deliver incremental innovation. The challenge for this R&D organization is to find a logical placeholder in their PLM environment to collect commonalities related to the individual modules or components. This is not an ERP or MES domain.
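As a rough illustration of collecting commonalities per component across similar twins, the sketch below groups field observations by logical component and environment. All names (`FieldRecord`, `failure_rate_by_component`) and the sample data are hypothetical; a real PLM environment would hold this against its own logical product structure.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldRecord:
    twin_id: str       # individual asset in the field
    component: str     # logical component shared across the product range
    environment: str   # illustrative label, e.g. "desert" or "offshore"
    failed: bool       # whether a failure was observed in this period


def failure_rate_by_component(records):
    """Return {(component, environment): failure fraction} across all twins."""
    totals = defaultdict(int)
    failures = defaultdict(int)
    for record in records:
        key = (record.component, record.environment)
        totals[key] += 1
        failures[key] += int(record.failed)
    return {key: failures[key] / totals[key] for key in totals}


# Made-up sample data: three twins sharing the same pump module.
records = [
    FieldRecord("T-001", "pump", "offshore", True),
    FieldRecord("T-002", "pump", "offshore", False),
    FieldRecord("T-003", "pump", "desert", False),
]
rates = failure_rate_by_component(records)
print(rates)
```

The key point of the design is that the grouping key is the logical component, not the individual twin or a BOM line, which is the kind of placeholder the paragraph above asks for.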

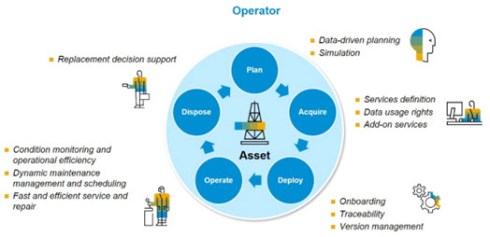

Concepts of a logical product structure are already known in the oil & gas, process and nuclear industries. In 2017 I wrote about PLM for Owners/Operators, mentioning that Bjorn Fidjeland has always been active in this domain; you can find his concepts at plmPartner here or as an eLearning course at SharePLM.

To conclude:

- This post is way too long (sorry)

- PLM is not dead – it evolves into one of the crucial platforms for the future – The Product Innovation Platform

- Current BOM-centric approach within PLM is blocking progress to a full digital thread

More to come after the holidays (a European habit) with additional topics related to the digital enterprise

There are several reasons why the Digital Twin is overhyped. One of the reasons is that the Digital Twin is not necessarily considered as a PLM-related topic. Other vendors like SAP (

As a cyclist, I am active on platforms like

Another known digital twin story is related to plants in operation. In the past 10 years, I have advocated for Plant Lifecycle Management (

Companies like GE and SAP focus a lot on the digital twin in relation to asset performance. By measuring the performance of assets and comparing it with that of other similar assets, the collector of the data can sell predictive maintenance analysis, performance optimization guidance and potentially other value offerings to their customers.

As I have already reached more than 1000 words, I will focus in my next post on the most relevant digital twin for PLM. Here, all disciplines come together: the 3D Mechanical model, the behavior models, the embedded and control software, manufacturing simulation and more. This is to create an almost perfect virtual copy of a real product or system in the physical world. There, we will see that this is not easy, as the concepts depend on accurate data and reliable models, which most companies currently do not have in their engineering environment.

This presentation matched nicely with

The presentations were followed by a (long) panel discussion. The common theme in both discussions is that companies need to educate and organize themselves to become educated for the future. New technologies, new ways of working need time and resources which small and medium enterprises often do not have. Therefore, universities, governments and interest groups are crucial.

I hope to elaborate on experiences related to this bimodal or phased approach during the conference. If you or your company wants to contribute to this conference, please let the program committee know. There is already a good set of content planned. However, one or two inspiring presentations from the field are always welcome.

This post is my two-hundredth blog post, and this week it is exactly ten years ago that I started blogging related to the topic of PLM.

In the past 5 years you will recognize a shift towards the people side of PLM (what does PLM mean for my daily life and my organization, and how does it impact them) and towards what makes sense or nonsense in the new hypes, mainly about the potential and risks related to becoming a digital enterprise. I learned and discussed these themes mostly through larger enterprises, as they usually cannot change that fast. Therefore they have to be on the lookout for threats and trends earlier.

When you need to define a complex product, that has to interact in various ways in a safe manner with the outside world, like an airplane or a car, systems engineering is the recommended approach to define the product. In 2004, when I spoke at a generic PLM conference about the possibilities to extend SmarTeam with a system engineering data model:

If you want to dive deeper into an unambiguous explanation of systems engineering, follow

Trade-off studies eliminate alternatives and create the base for the final design, which will be more and more detailed and specific over time. You need a functional and logical decomposition before jumping into the design phase for mechanical, electrical and software components. Therefore, jumping from requirements directly into building a solution is not real systems engineering. You use this approach only if you already know the product's solution concept and logical components, something perhaps possible when there is no involvement of electronics and software.

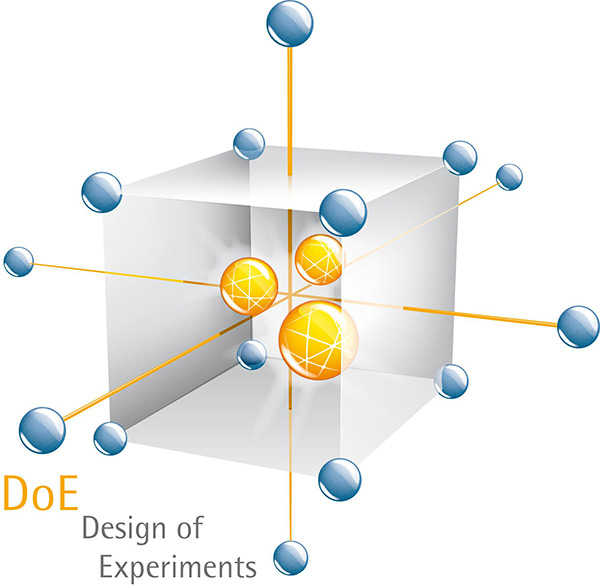

In model-based systems engineering the most efficient way of working is to use parameters for requirements, logical and physical settings. Next, to decide on lower-level requirements and constraints, the concept “Design of Experiments” is used, where the performance of a product is simulated by varying several design parameters. The results of a Design of Experiments help the engineering teams select the optimized solution, of course based on the model used.
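A minimal sketch of this idea, assuming a full-factorial Design of Experiments over two design parameters. The performance function is a made-up stand-in for a real simulation, and all parameter names and values are illustrative.

```python
from itertools import product


def simulate_performance(wall_thickness_mm: float, motor_power_kw: float) -> float:
    """Toy stand-in for a simulation run; higher is better.

    Rewards motor power and penalizes the weight of thicker walls.
    """
    weight_penalty = 0.8 * wall_thickness_mm
    return 2.0 * motor_power_kw - weight_penalty


def full_factorial(levels: dict):
    """Yield every combination of the given parameter levels as a dict."""
    names = list(levels)
    for values in product(*(levels[name] for name in names)):
        yield dict(zip(names, values))


# Parameter levels to vary (hypothetical values).
levels = {
    "wall_thickness_mm": [2.0, 3.0, 4.0],
    "motor_power_kw": [5.0, 7.5],
}

# One "simulation" per combination, then pick the best-scoring design.
runs = [(design, simulate_performance(**design)) for design in full_factorial(levels)]
best_design, best_score = max(runs, key=lambda run: run[1])
print(len(runs), best_design, best_score)
```

The selected design is only as good as `simulate_performance`, which mirrors the caveat in the text: the optimum is always relative to the model used.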

In the domain of PLM, there is a bigger challenge as here we are suffering from the fact that the word “Model” immediately gets associated with a 3D Model. In addition to the 3D CAD Model, there is still a lot of useful legacy data that does not match with the concepts of a digital enterprise. I wrote and spoke about this topic a year ago. Among others at PI 2017 Berlin and you can check this presentation on SlideShare:

My second post:

Oleg’s post unleashed several reactions of people who shared his opinion (

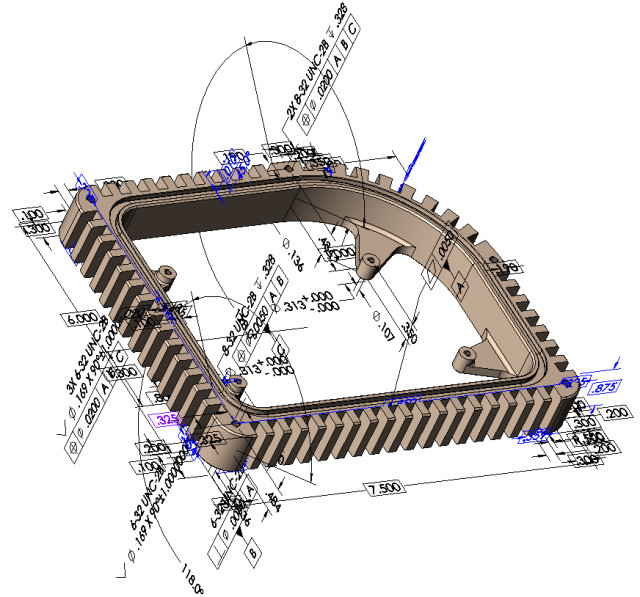

At the time 3D CAD was introduced for the mid-market, the main reason was to provide a better understanding of the designed product. Visualization and creating cross-sections of the design became easy, although the “old” generation of 2D draftsmen found it a challenge to transform their way of working. This often led to 3D CAD models set up with the mindset of generating 2D Manufacturing drawings, not taking real benefits from the 3D CAD Model. Let’s first focus on Model-Based Definition.

According to an eBook, sponsored by SolidWorks and published by Tech-Clarity: “

A parametric model, combined with business rules, can be accessed and controlled by other applications in a digital enterprise. In this way, a set of product variants can be managed without the intervention of individuals, and not only from the design point of view. Geometry and manufacturing parameters are also connected and accessible. This is one of the concepts Industry 4.0 is focusing on: intelligent and flexible manufacturing by exchanging parameters.
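To make this concrete, here is a small sketch of a parametric model whose parameters other applications can read, with one business rule deriving manufacturing parameters from geometry. The class, the rule and its thresholds are invented for illustration and do not come from any CAD or PLM system.

```python
from dataclasses import dataclass, asdict


@dataclass
class BracketModel:
    """Hypothetical parametric part described by a few parameters."""
    length_mm: float
    thickness_mm: float
    material: str


def manufacturing_parameters(model: BracketModel) -> dict:
    """Illustrative business rule: choose a cutting process from thickness."""
    process = "laser" if model.thickness_mm <= 6.0 else "waterjet"
    return {
        "cutting_process": process,
        "blank_length_mm": model.length_mm + 10.0,  # assumed trim allowance
    }


def variant_record(model: BracketModel) -> dict:
    """One record a downstream application could consume without a drawing."""
    return {**asdict(model), **manufacturing_parameters(model)}


# Two variants that differ only in thickness.
variants = [BracketModel(120.0, 4.0, "steel"), BracketModel(120.0, 8.0, "steel")]
records = [variant_record(variant) for variant in variants]
print(records)
```

Changing a single parameter produces a new, consistent set of design and manufacturing data without anyone re-entering information, which is the point of exchanging parameters instead of documents.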

This is however in big contrast with reality in the field. In February this year I moderated a focus group related to PLM and the Model-Based approach, and the main conclusion from the audience was that everyone was looking at it, and only a few had started practicing. Therefore, I promised to provide some step-by-step education related to model-based, as, like with PLM, we need to get a grip on what it means and how it impacts your company. As I am not an academic person, it will be a little bit like model-based for dummies; however, as model-based in all aspects is not yet a widespread common practice, we are all learning.

Just designing a product in 3D and then generating 2D drawings for manufacturing is not really game-changing and does not bring big benefits. 3D Models provide a better understanding of the product, mechanical simulations allow the engineer to discover clashes and/or conflicts, and this approach will contribute to a better understanding of the form & fit of a product. Old generations of designers know how to read a 2D drawing and understand the 3D Model in their mind.

A model-based enterprise has to rely on data, so the 3D Model should rely on parameters that allow other applications to read them. These parameters can contribute to simulation analysis and product optimization or they can contribute to manufacturing. In both cases the parameters provide data continuity between the various disciplines, eliminating the need to create new representations in different formats. I will come back in a future post to the requirements for the 3D CAD model in the context of the model-based enterprise, where I will zoom in on Model-Based Definition and the concepts of Industry 4.0.

The mathematical model of a product allows companies to analyze and optimize the behavior of a product. When companies design a product they often start from a conceptual model, and by running simulations they can optimize the product and define low-level requirements within a range that optimizes the product performance. The relation between design and simulation in a virtual model is crucial to be as efficient as possible. In the current ways of working, design and simulation are often not integrated and therefore the number of simulations is relatively low, as time-to-market is the key driver to introduce a new product.

There is still a debate if the Digital Twin is part of PLM or should be connected to PLM. A digital twin can be based on a set of parameters that represent the product performance in the field. There is no need to have a 3D representation, despite the fact that many marketing videos always show a virtual image to visualize the twin.

For me “Keep process and organizational silos ….. “ is exactly the current state of classical PLM, where PLM concepts are implemented to provide data continuity within a siloed organization. When you can stay close to the existing processes the implementation becomes easier. Less business change needed and mainly a focus on efficiency gains by creating access to information.

And if you know SAP, they go even further. Their mantra is that when using SAP PLM, you do not even need to integrate with ERP. You can still have long discussions with companies when it comes to PLM and ERP integrations. The main complexity is not the technical interface but the agreement on who is responsible for which data sets during the product lifecycle. This should be clarified even before you start talking about a technical implementation. SAP claims that this effort is not needed in their environment; however, they just shift the problem more towards the CAD-side. Engineers do not feel comfortable with SAP PLM when engineering is driving the success of the company. It is like the Swiss knife; every tool is there but do you want to use it for your daily work?

What is really needed for the 21st century is to break down the organizational silos, as current ways of working are becoming less and less applicable to a modern enterprise. The usage of software has a major impact on how we can work in the future. Software does not follow the linear product process. Software comes with incremental deliveries all the time, and yes, the software still requires hardware to perform. Modern enterprises try to become agile, being able to react quickly to trends and innovation options to bring higher and different value to their customers. Related to product innovation this means that the linear, sequential go-to-market process is too slow and requires too much data manipulation through non-value-added activities.

All leading companies in the industry are learning to work in a more agile mode with multidisciplinary teams that work like startups: find an incremental benefit, rapidly develop, test and interact with the market, and deliver it. These teams require real-time data coming from all stakeholders, hence the need for data continuity. But there is also the need for data quality, as there is no time to validate data all the time: too expensive, too slow.

When talking with companies in the real world, they are not driven by technology; they are driven by processes. They do not like to break down the silos as it creates discomfort and the need for business transformation. And there is no clear answer at this moment. What is clear is that leading companies invest in business change first before looking into the technology.
