
Last week, my memory was triggered by this LinkedIn post and discussion started by Oleg Shilovitsky: Rethinking the Data vs. Process Debate in the Age of Digital Transformation and AI.

me, 1989

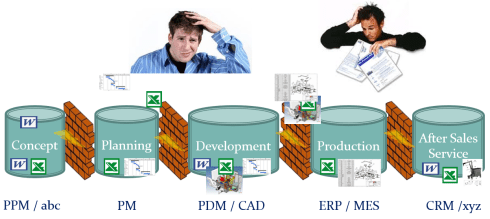

In the past twenty years, the debate in the PLM community has changed a lot. PLM started as a central file repository, combined with processes to ensure the correct status and quality of the information.

Then, digital transformation in the PLM domain became achievable and there was a focus shift towards (meta)data. Now, we are entering the era of artificial intelligence, reshaping how we look at data.

In this technology evolution, there are lessons learned that are still valid for 2025, and I want to share some of my experiences in this post.

In addition, it was great to read Martin Eigner’s great reflection on the past 40 years of PDM/PLM. Martin shared his experiences and insights, not directly focusing on the data and processes debate, but very complementary and helping to understand the future.


It started with processes (for me 2003-2014)

In the early days when I worked with SmarTeam, one of my main missions was to develop templates on top of the flexible toolkit SmarTeam.

For those who do not know SmarTeam, it was one of the first Windows PDM/PLM systems, and thanks to its open API (COM-based), companies could easily customize and adapt it. It came with standard data elements and behaviors like Projects, Documents (CAD-specific and Generic), Items and later Products.


On top of this foundation, almost every customer implemented their business logic (current practices).

And there the problems came …..

The implementations too often became highly customized environments, not necessarily well thought through, as every customer worked differently based on their (paper) history. Thanks to what I learned in the field while supporting stalled implementations, I was also assigned to develop templates (e.g. SmarTeam Design Express) and a standard methodology (the FDA toolkit), as the mid-market customers requested. The focus was on standard processes.


You can read my 2009 observations here: Can chaos become order through PLM?

The need for standardization?

When developing templates (the right data model and processes), it was also essential to provide template processes for releasing a product and controlling the status and product changes – from Engineering Change Request to Engineering Change Order. Many companies had their processes described in their ISO 900x manual, but were they followed correctly?

In 2010, I wrote ECR/ECO for Dummies, and it has been my second most-read post over the years. Only the 2019 post The importance of EBOM and MBOM in PLM (reprise) had more readers. These statistics show that many people are, and were, seeking education on general PLM processes and data model principles.


It was also the time when the PLM communities discussed out-of-the-box versus flexible processes, as Oleg referred to in his post.

You would expect companies to follow these best practices, and many small and medium enterprises that started with PLM did so. However, I discovered there was and still is the challenge with legacy (people and process), particularly in larger enterprises.

The challenge with legacy

The technology was there, the usability was not there. Many implementations of a PLM system go through a critical stage. Are companies willing to change their methodology and habits to align with common best practices, or do they still want to implement their unique ways of working (from the past)?


“The embedded process is limiting our freedom, we need to be flexible”

is an often-heard statement. When every step is micro-managed in the PLM system, you create a bureaucracy detested by the user. In general, when the processes are implemented in a way first focusing on crucial steps with the option to improve later, you will get the best results and acceptance. Nowadays, we could call it an MVP approach.

I have seen companies that created a task or issue for every single activity a person should do. Managers loved the (demo) dashboard. It never led to success, as the approach created frustration at the end-user level as their To-Do list grew and grew.


Another example of the micro-management mindset is when I worked with a company that had the opposite definition of Version and Revision in their current terminology. Initially, they insisted that the new PLM system should support this, meaning that everywhere the interface mentioned Revision it should read Version, and vice versa.


Can you imagine the cost of implementing and maintaining this legacy per upgrade?

And then came data (for me 2014 – now)

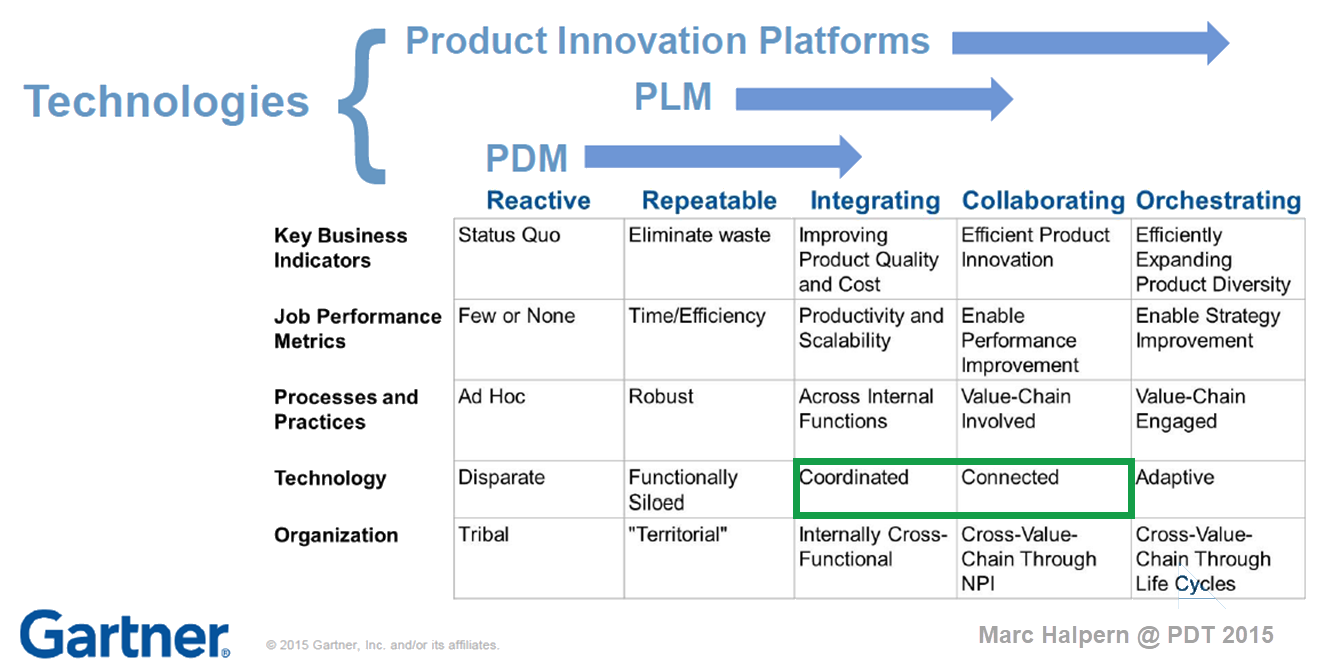

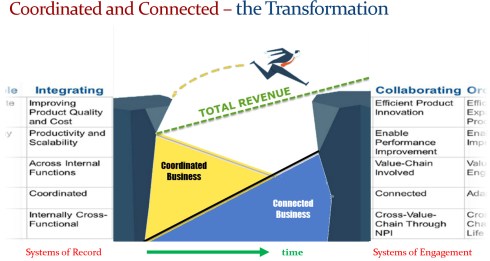

In 2015, the pivotal PLM Roadmap/PDT conference on Product Innovation Platforms brought the idea of framing digital transformation in the PLM domain in a single sentence: From Coordinated to Connected. See the original image from Marc Halpern below; those who have read my posts over the years have seen this terminology’s evolution. Now I would say (till 2024): From Coordinated to Coordinated and Connected.

A data-driven approach was not new at that time. Roughly speaking, around 2006 – close to the introduction of the smartphone – there was already a trend spurred by better global data connectivity at lower cost. Easy connectivity allowed PLM to expand into industries that were not closely connected to 3D CAD systems (CATIA, CREO or NX). Agile PLM, Aras, and SAP PLM became visible – PLM was no longer only for design management but also for go-to-market governance in the CPG and apparel industries.

However, a data-driven approach was still rare in mainstream manufacturing companies, where drawings, office documents, email and Excel were the main information carriers next to the dominant ERP system.

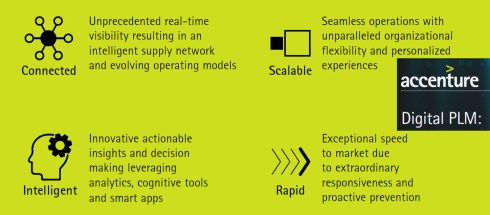

A data-driven approach was a consultant’s dream, and when looking at the impact of digital transformation in other parts of the business, why not for PLM, too? My favorite and still valid 2014 image is the one below from Accenture describing Digital PLM. Here business and PLM come together – the WHY!

Again, the challenge with legacy

At that time, I saw a few companies linking their digital transformation to implementing a new PLM system. Those were the days the PLM vendors were battling for the big enterprise deals, sometimes motivated by an IT mindset that unifying the existing PDM/PLM systems would fulfill the digital dream. It was emotion that was winning, not science. Read the PLM blame game – still relevant.


One of my key observations is that companies struggle when they approach PLM transformation with a migration mindset. Moving from Coordinated to Connected isn’t just about technology—it’s about fundamentally changing how we work. Instead of a document-driven approach, organizations must embrace a data-driven, connected way of working.

The PLM community increasingly agrees that PLM isn’t a single system; it’s a strategy that requires a federated approach—whether through SaaS or even beyond it.

Before AI became a hype, we discussed the digital thread, digital twins, graph databases, ontologies, and data meshes. Legacy – people (skills), processes (rigid) and data (not reliable) – is the elephant in the room. Yet, the biggest challenge remains: many companies see PLM transformation as just buying new tools.


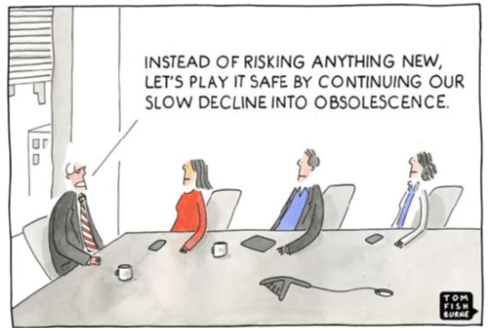

A fundamental transformation requires a hybrid approach—maintaining traditional operations while enabling multidisciplinary, data-driven teams. However, this shift demands new skills and creates the need to learn and adapt, and many organizations hesitate to take that risk.

In his Product Data Plumber Perspective on 2025, Rob Ferrone also addressed the challenge of moving forward, and I liked one of his responses in the underlying discussion that says it all – it is hard to get out of your day-to-day comfort (and data):

Rob Ferrone’s quote:

Transformations are announced, followed by training, then communication fades. Plans shift, initiatives are replaced, and improvements are delayed for the next “fix-all” solution. Meanwhile, employees feel stuck, their future dictated by a distant, ever-changing strategy team.

And then there is Artificial Intelligence (2024 ……)

In the past two years, I have been reading and digesting much news related to AI, particularly generative AI.


Initially, I was a little skeptical because of all the hallucinations and hype; however, the progress in this domain is enormous.

I believe that AI has the potential to change our digital thread and digital twin concepts dramatically where the focus was on digital continuity of data.

Now this digital continuity might not be required, reading articles like The End of SaaS (a louder and louder voice), the usage of the Fusion Strategy (the importance of AI) and, on a smaller scale, an (academic) example I learned about last year: the Swedish Arrowhead™ fPVN project.

I hope that five years from now, there will not be a paragraph with the title Pity there was again legacy.

We should have learned from the past that there is always the first wave of tools – they come with a big hype and promise – think about the Stargate Project but also DeepSeek.

Still, remember that the change comes from doing things differently, not from efficiency gains. To do things differently, you need an educated, visionary management with the power and skills to take a company in a new direction. If not, legacy will win (again).


Conclusion

In my 25 years of working in the data management domain, now known as PLM, I have seen several impressive new developments – from 2D to 3D, from documents to data, from physical prototypes to models and more. All these developments took decades to become mainstream. Whilst the technology was there, the legacy kept us back. Will this ever change? Your thoughts?

The pivotal 2015 PLM Roadmap / PDT conference

It was a great pleasure to attend my favorite vendor-neutral PLM conference this year in Gothenburg—approximately 150 attendees, where most have expertise in the PLM domain.


We had the opportunity to learn new trends, discuss reality, and meet our peers.

The theme of the conference was: Value Drivers for Digitalization of the Product Lifecycle, a topic I have been discussing in my recent blog posts, as we need to help and educate companies to understand the importance of digitalization for their business.

The two-day conference covered various lectures – view the agenda here – and of course the topic of AI was part of half of the lectures, giving the attendees a touch of reality.


In this first post, I will cover the main highlight of Day 1.

Value Drivers for Digitalization of the Product Lifecycle

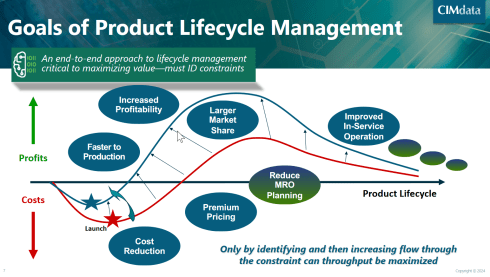

As usual, the conference started with Peter Bilello, president & CEO of CIMdata, stressing again that when implementing a PLM strategy, the maximum result comes from a holistic approach, meaning look at the big picture, don’t just focus on one topic.


It was interesting to see again the classic graph (below) explaining the benefits of the end-to-end approach – I believe it is still valid for most companies; however, as I shared in my session the next day, implementing the concepts of a Product Service System will require more of a DevOps type of graph (more next week).

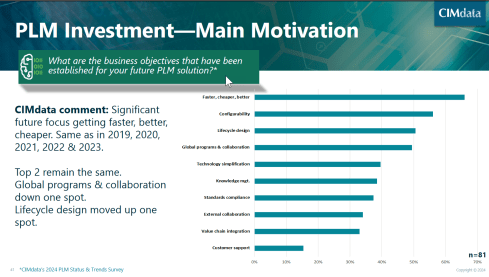

Next, Peter went through CIMdata’s Critical Dozen with some updates. You can look at the updated 2024 image here.

Some of the changes: Digital Thread and Digital Twin are merged – as Digital Twins do not run on documents. And instead of focusing on Artificial Intelligence only, CIMdata introduced Augmented Intelligence, as we should also consider solutions that augment human activities, not just replace them.


Peter also shared the results of a recent PLM survey where companies were asked about their main motivation for PLM investments. I found the result a little discouraging for several reasons:

The number one topic is still faster, cheaper and better – almost 65 % of the respondents see this as their priority. This number one topic illustrates that Sustainability has not reached the level of urgency, and perhaps the topic can be found in standards compliance.

Many of the companies with Sustainability in their mission should understand that a digital PLM infrastructure is the foundation for most initiatives, like Lifecycle Analysis (LCA). Sustainability is more than part of standards compliance, if it was mentioned anyway.


The second disappointing observation for the understanding of PLM is that customer support is mentioned by only 15 % of the companies. Again, connecting your products to your customers is the first step to a DevOps approach, and you need to be able to optimize your product offering to what the customer really wants.

Digital Transformation of the Value Chain in Pharma

The second keynote was from Anders Romare, Chief Digital and Information Officer at Novo Nordisk. Anders has been participating in the PDT conference in the past. See my 2016 PLM Roadmap/PDT Europe post, where Anders presented on behalf of Airbus: Digital Transformation through an e2e PLM backbone.

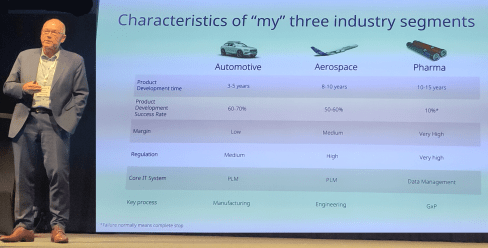

Anders started by sharing some of the main characteristics of the companies he has been working for: Volvo, Airbus and now Novo Nordisk. It is interesting to compare these characteristics as they say a lot about the industry’s focus. See below:

Anders is now responsible for digital transformation in Novo Nordisk, which is a challenge in a heavily regulated industry.

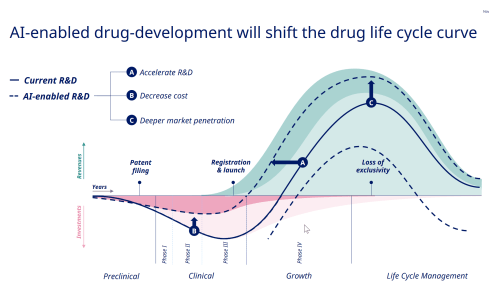

One of the focus areas for Novo Nordisk in 2024 is also Artificial Intelligence, as you can see from the image to the left (click on it for the details).


Like many others at this conference, Anders mentioned that AI can only be applied when it runs on top of accurate data.

Understanding the potential of AI, they identified 59 areas where AI can create value for the business, and it is interesting to compare the traditional PLM curve Peter shared in his session with the potential AI-enabled drug-development curve as presented by Anders below:

Next, Anders shared some of the example cases of this exploration, and if you are interested in the details, visit their tech.life site.

When talking about the engineering framing of PLM, it was interesting to learn from Anders, who had a long history in PLM before Novo Nordisk, when he replied to a question from the audience that he would never talk about PLM at the management level. It’s very much aligned with my Don’t mention the P** word post.

A Strategy for the Management of Large Enterprise PLM Platforms

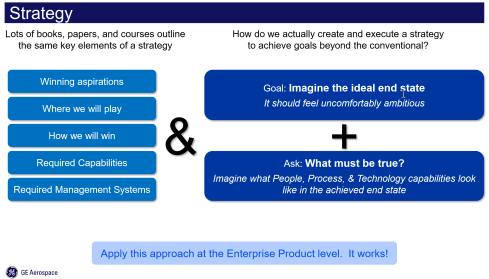

One of the highlights for me on Day 1 was Jorgen Dahl’s presentation. Jorgen, a senior PLM director at GE Aerospace, shared their story towards a single PLM approach, needed due to changes in the businesses. Addressing the need for a digital thread also comes with an increased need for uptime.

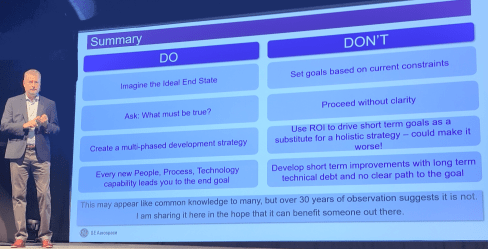

I like his strategy to execution approach, as shown in the image below, as it contains the most important topics. The business vision and understanding, the imagination of the end status and What must be True?

In my experience, the three blocks are iteratively connected. When describing the strategy, you might not be able to identify the required capabilities and management systems yet.

But then, when you start to imagine the ideal end state, you will have to consider them. And for companies, it is essential to be ambitious – or, as Jorgen stated, uncomfortable ambitious. Go for the 75 % to almost 100 % to be true. Also, asking What must be True is an excellent way to allow people to be involved and creatively explore the next steps.

Note: This approach does not provide all the details, as it will be a multiyear journey of learning and adjusting towards the future. Therefore, the strategy must be aligned with the culture to avoid continuous top-down governance of the details. In that context, Jorgen stated:

“Culture is what happens when you leave the room.”

It is a more positive statement than Peter Drucker’s famous quote: “Culture eats strategy for breakfast.”

Jorgen’s concluding slide mentions potential common knowledge, although I believe the way Jorgen used the right easy-to-digest points will be helpful for all organizations to step back, look at their initiatives, and compare where they can improve.

How a Business Capability Model and Application Portfolio Management Support Through Changing Times

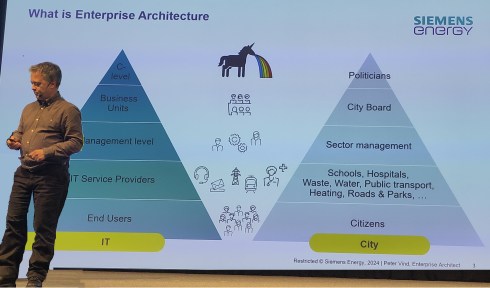

Peter Vind’s presentation was nicely connected to the presentation from Jorgen Dahl. Peter, who is an enterprise architect at Siemens Energy, started by explaining where the enterprise architect fits in an organization, comparing it to a city.

In his entertaining session, he mentioned he has to deal with the unicorns at the C-level, who, like politicians in a city, sometimes have the most “innovative” ideas – can they be realized?

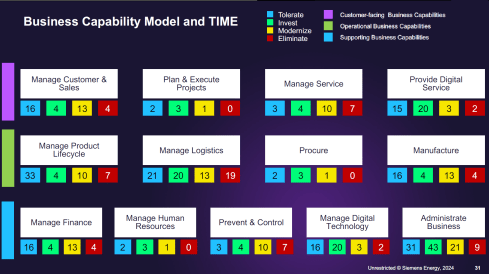

Peter explained how they used Business Capability Modeling when Siemens Energy went through various business stages. First, the carve-out from Siemens AG and later the merger with Siemens Gamesa. Their challenge is to understand which capabilities remain, which are new or overlapping, both during the carve-out and merging process.

The business capability modeling leads to a classification of the applications used at different levels of the organization, such as customer-facing, operational, or supporting business capabilities.

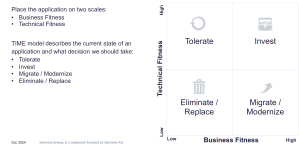

Next, for the lifecycle of the applications, the TIME approach was used, meaning that each application was mapped to business fitness and technical fitness. Click on the diagram to see the details.

The result could look like the mapping shown below – a comprehensive overview of where the action is.

It is a rational approach; however, Peter mentioned that we should also be aware of the HiPPOs in an organization. If there is a HiPPO (Highest Paid Person’s Opinion) in play, you might face a political battle too.

It was a great educational session illustrating the need for an Enterprise Architect, the value of business capabilities modeling and the TIME concept.
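To make the TIME mapping a bit more tangible, here is a minimal sketch of how applications could be sorted into the four TIME quadrants (Tolerate, Invest, Migrate, Eliminate) based on business fitness and technical fitness. This is not Siemens Energy's implementation: the 0 to 1 scores, the 0.5 threshold and the application names are assumptions for illustration, and the quadrant assignment follows the commonly cited convention for the TIME model.

```python
from dataclasses import dataclass
from enum import Enum


class Time(Enum):
    TOLERATE = "Tolerate"
    INVEST = "Invest"
    MIGRATE = "Migrate"
    ELIMINATE = "Eliminate"


@dataclass(frozen=True)
class Application:
    name: str
    business_fitness: float   # hypothetical score, 0.0 (poor) to 1.0 (excellent)
    technical_fitness: float  # hypothetical score, 0.0 (poor) to 1.0 (excellent)


def classify(app: Application, threshold: float = 0.5) -> Time:
    """Map an application to a TIME quadrant (threshold is an assumption)."""
    business_ok = app.business_fitness >= threshold
    technical_ok = app.technical_fitness >= threshold
    if business_ok and technical_ok:
        return Time.INVEST      # fits the business and is technically sound
    if technical_ok:
        return Time.TOLERATE    # technically fine, limited business fit
    if business_ok:
        return Time.MIGRATE     # valuable to the business, weak technology
    return Time.ELIMINATE       # weak on both axes


# Hypothetical portfolio, for illustration only
portfolio = [
    Application("Legacy PDM vault", 0.8, 0.2),
    Application("Modern PLM platform", 0.9, 0.8),
    Application("Departmental viewer", 0.3, 0.7),
    Application("Unused reporting tool", 0.2, 0.1),
]

result = {app.name: classify(app).value for app in portfolio}
for name, quadrant in result.items():
    print(f"{name}: {quadrant}")
```

In practice the scores would come from assessments with the business and IT owners, and this is exactly where a HiPPO can overrule the numbers.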

And some more …

There were several other exciting presentations during day 1; however, as not all presentations are publicly available, I cannot discuss them in detail; I just looked at my notes.

Driving Trade Compliance and Efficiency

Peter Sandeck, Director of Project Management at TE Connectivity, shared what they did to motivate engineers to endorse their Jurisdiction and Classification Assessment (JCA) process. Peter showed how, through a Minimum Viable Product (MVP) approach and listening to the end-users, they reached a higher Customer Satisfaction (CSAT) score after several iterations of the solution developed for the JCA process.


This approach is an excellent example of an agile method in which engineers are involved. My remaining question is still – are the same engineers in the short term also pushed to make lifecycle assessments? More work; however, I believe if you make it personal, the same MVP approach could work again.

Value of Model-Based Product Architecture

Jussi Sippola, Chief Expert, Product Architecture Management & Modularity at Wärtsilä, presented an excellent story related to the advantages of a more modular product architecture. Where historically, products were delivered based on customer requirements through the order fulfillment process, now there is in parallel the portfolio management process, defining the platform of modules, features and options.


Jussi mentioned that they were able to reduce the number of parts by 50 % while still maintaining the same level of customer capabilities. In addition, thanks to modularity, they were able to reduce the production lead time by 40 % – essential numbers if you want to remain competitive.

Conclusion

Day 1 was a day where we learned a lot as an audience, and in addition, the networking time and dinner in the evening were precious for me and, I assume, also for many of the participants. In my next post, we will see more about new ways of working, the AI dream and Sustainability.

Last week, I shared my first impressions from my favorite conference, in the post: The weekend after PLM Roadmap/PDT Europe 2023, where most impressions could be classified as traditional PLM and model-based.


There is nothing wrong with conventional PLM, as there is still much to do within this scope. A model-based approach for MBSE (Model-Based Systems Engineering) and MBD (Model-Based Definition) and efficient supplier collaboration are not topics you solve by implementing a new system.

Ultimately, to have a business-sustainable PLM infrastructure, you need to structure your company internally and connect to the outside world with a focus on standards to avoid a vendor lock-in or a dead end.


In short, this is what I described so far in The weekend after ….part 1.

Now, let’s look at the relatively new topics for this audience.

Enabling the Marketing, Engineering & Manufacturing Digital Thread

Cyril Bouillard, the PLM & CAD Tools Referent at the Mersen Electrical Protection (EP) business unit, shared his experience implementing an end-to-end digital backbone from marketing through engineering and manufacturing.


Cyril showed the benefits of a modern PLM infrastructure that is neither CAD-centric nor focused on engineering only. The advantage of this approach is a seamless, integrated flow between PLM and PIM (Product Information Management).

I wrote about this topic in 2019: PLM and PIM – the complementary value in a digital enterprise. Combining the concepts of PLM and PIM in an integrated, connected environment could also provide a serious benefit when collaborating with external parties.

Another benefit Cyril demonstrated was the integration of RoHS compliance with the BOM in one integrated environment. In my session, I also addressed integrated RoHS compliance as a stepping stone to efficiency in future compliance needs.


Read more later or in this post: Material Compliance – as a stepping-stone towards Life Cycle Assessment (LCA)
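As a small illustration of what connecting RoHS compliance to the BOM can mean, the sketch below rolls up compliance declarations from leaf parts to the top-level item. It is a simplified assumption of mine, not the Mersen implementation: the `BomItem` structure, the part numbers and the rule that an undeclared part counts as not confirmed are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class BomItem:
    part_number: str
    # Declared on leaf (purchased or manufactured) parts; None means unknown.
    rohs_compliant: Optional[bool] = None
    children: list["BomItem"] = field(default_factory=list)


def non_compliant_parts(item: BomItem) -> list[str]:
    """Return the leaf part numbers that are not confirmed RoHS compliant."""
    if not item.children:
        return [] if item.rohs_compliant is True else [item.part_number]
    found: list[str] = []
    for child in item.children:
        found.extend(non_compliant_parts(child))
    return found


def is_compliant(item: BomItem) -> bool:
    """An assembly is compliant only if every leaf part below it is."""
    return not non_compliant_parts(item)


# Hypothetical BOM, for illustration only
fuse = BomItem(
    "FUSE-001",
    children=[
        BomItem("BODY-010", True),
        BomItem("CAP-020", True),
        BomItem("SOLDER-030", False),
    ],
)

print(is_compliant(fuse))         # False
print(non_compliant_parts(fuse))  # ['SOLDER-030']
```

Once the declaration lives on the part and the BOM is connected data, the same roll-up pattern can be reused for other substance or compliance regulations.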

Cyril concluded with some lessons learned.

Data quality is essential in such an environment, and there are significant time savings implementing the connected Digital Thread.

Meeting the Challenges of Sustainability in Critical Transport Infrastructures

Etienne Pansart, head of digital engineering for construction at SYSTRA, explained how they address digital continuity with PLM throughout the built assets’ lifecycle.


Etienne’s story was related to the complexity of managing a railway infrastructure, which is a linear and vertical distribution at multiple scales; it needs to be predictable and under constant monitoring; it is a typical system-of-systems network, and on top of that, maintenance and operational conditions need to be kept continuously up to date.

Regarding railway assets – a railway needs renewal every two years, bridges are designed to last a hundred years, and train stations should support everyday use.

When complaining about disturbances, you might have a little more respect now (depending on your country). However, on top of these challenges, Etienne also talked about the additional difficulties expected due to climate change: floods, fire, earth movements, and droughts, all of which will influence the availability of the rail infrastructure.

In that context, Etienne talked about the MINERVE project – see image below:

As you can see from the main challenges, there is an effort of digitalization for both the assets and a need to provide digital continuity over the entire asset lifecycle. This is not typically done in an environment with many different partners and suppliers delivering a part of the information.

Etienne explained in more detail how they aim to establish digital twins and MBSE practices to build and maintain a data-driven, model-based environment.

Having digital twins allows much more granular monitoring and making accurate design decisions, mainly related to sustainability, without the need to study the physical world.

His presentation was again a proof point that through digitalization and digital twins, the traditional worlds of Product Lifecycle Management and Asset Information Management become part of the same infrastructure.

And it may be clear that in such a collaboration environment, standards are crucial to connect the various stakeholders’ data sources – Etienne mentioned ISO 16739 (IFC), IFC Rail, and ISO 19650 (BIM) as obvious standards but also ISO 10303 (PLCS) to support the digital thread leveraged by OSLC.


I am curious to learn more about the progress of such a challenging project – having worked with the high-speed railway project in the Netherlands in 1995 – no standards at that time (BIM did not exist) – mainly a location reference structure with documents. Nothing digital.

The connected Digital Thread

The theme of the conference was The Digital Thread in a Heterogeneous, Extended Enterprise Reality, and in the next section, I will zoom in on some of the inspiring sessions for the future, where collaboration or information sharing is all based on a connected Digital Thread – a term I will explain in more depth in my next blog post.

Transforming the PLM Landscape:

The Gateway to Business Transformation

Yousef Hooshmand‘s presentation was the highlight of this conference for me.

Yousef is the PLM Architect and Lead for the Modernization of the PLM Landscape at NIO, and he has been active before in the IT-landscape transformation at Daimler, on which he published the paper: From a monolithic PLM landscape to a federated domain and data mesh.

If you read my blog or follow Share PLM, you might have seen the reference to Yousef’s work before, or, more recently, you can hear the full story at the Share PLM Podcast: Episode 6: Revolutionizing PLM: Insights.

It was the first time I met Yousef in 3D after several virtual meetings, and his passion for the topic made it hard to fit in the assigned 30 minutes.

There is so much to share on this topic, and part of it we already did before the conference in a half-day workshop related to Federated PLM (more on this in the following review).

First, Yousef started with the five steps of the business transformation at NIO, where long-term executive commitment is a must.

His statement: “If you don’t report directly to the board, your project is not important”, caused some discomfort in the audience.

As the image shows, a business transformation should start with a systematic description and analysis of which business values and objectives should be targeted, where they fit in the business and IT landscape, what are the measures and how they can be tracked or assessed and ultimately, what we need as tools and technology.

In his paper From a Monolithic PLM Landscape to a Federated Domain and Data Mesh, Yousef described the targeted federated landscape in the image below.

And now some vendors might say, we have all these domains in our product portfolio (or we have slides for that) – so buy our software, and you are good.

And here Yousef added his essential message, illustrated by the image below.

Start by delivering the best user-centric solutions (in an MVP manner – days/weeks – not months/years). Next, be data-centric in all your choices and ultimately build an environment ready for change. As Yousef mentioned: “Make sure you own the data – people and tools can leave!”

And to conclude reporting about his passionate plea for Federated PLM:

“Stop talking about the Single Source of Truth, start Thinking of the Nearest Source of Truth based on the Single Source of Change”.
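One way to picture this statement is the minimal Python sketch below. It is my own illustration, not Yousef's architecture: the class names `ChangeSource` and `NearestSource` and the key `part-100/mass_kg` are hypothetical. Changes are accepted in exactly one place, and every consumer reads from a copy close to its own tool.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeSource:
    """The single place where changes to a data item are accepted (hypothetical)."""
    values: dict = field(default_factory=dict)
    subscribers: list = field(default_factory=list)

    def change(self, key, value):
        # Every change goes through here and is pushed to all subscribed copies.
        self.values[key] = value
        for replica in self.subscribers:
            replica.cache[key] = value


@dataclass
class NearestSource:
    """A read-only copy close to the consumer, e.g. inside a domain tool."""
    name: str
    cache: dict = field(default_factory=dict)

    def read(self, key):
        return self.cache[key]


source = ChangeSource()
engineering = NearestSource("engineering")
service = NearestSource("service")
source.subscribers.extend([engineering, service])

# The change is made once; both domains see it in their nearest source.
source.change("part-100/mass_kg", 1.25)
```

In a real landscape the propagation would be asynchronous and governed, but the separation is the same: one place to change, many places to read.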

Heliple-2 PLM Federation:

A Call for Action & Contributions

A great follow-up on Yousef’s session was Erik Herzog‘s presentation about the final findings of the Heliple 2 project, where SAAB Aeronautics, together with Volvo, Eurostep, KTH, IBM and Lynxwork, are investigating a new way of federated PLM, by using an OSLC-based, heterogeneous linked product lifecycle environment.

Heliple stands for HEterogeneous LInked Product Lifecycle Environment
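The idea of a linked environment can be sketched in a few lines of Python. This is only an illustration under my own assumptions and does not use a real OSLC library or the Heliple implementation: the URIs, the link types `satisfies` and `verifies`, and the helper functions are made up. Each artifact stays in its own tool, and only the links between them are shared.

```python
from collections import defaultdict

# Each artifact lives in its own tool and is identified by a URI (all made up).
REQ = "https://req-tool.example/requirements/42"
PART = "https://plm.example/parts/100"
TEST = "https://test-tool.example/cases/7"

# Links are (source, link_type, target) triples; no artifact data is copied.
links = [
    (PART, "satisfies", REQ),
    (TEST, "verifies", REQ),
]


def build_index(triples):
    """Index incoming links per target so a tool can ask 'who points at me?'."""
    index = defaultdict(list)
    for source, link_type, target in triples:
        index[target].append((link_type, source))
    return index


def trace(index, uri):
    """Return the (link_type, source) pairs pointing at `uri`, sorted."""
    return sorted(index.get(uri, []))


index = build_index(links)
incoming = trace(index, REQ)
```

Here `incoming` lists the part and the test case that reference the requirement, without any of the three tools sharing a database.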

The image below, which I shared several times before, illustrates the mindset of the project.

Last year, during the previous conference in Gothenburg, Erik introduced the concept of federated PLM (read more in my post: The week after PLM Roadmap / PDT Europe 2022) and mentioned two open issues to be investigated: Operational feasibility (is it maintainable over time) and Realisation effectivity (is it affordable and maintainable at a reasonable cost).

As you can see from the slide below, the results were positive and encouraged SAAB to continue on this path.

One of the points to mention was that during this project, Lynxwork was used to speed up the development of the OSLC adapter, reducing costs, time and needed skills.

After this successful effort, Erik and several others who joined us at the pre-conference workshop agreed that this initiative is valid to be tested, discussed and exposed outside Sweden.

Therefore, the Federated PLM Interest Group was launched to join people worldwide who want to contribute to this concept with their experiences and tools.

A first webinar from the group is already scheduled for December 12th at 4 PM CET – you can join and register here.

More to come

Given the length of this blog post, I want to stop here.

Topics to share in the next post are related to my contribution at the conference, The Need for a Governance Digital Thread, where I addressed the need for federated PLM capabilities with the upcoming regulations and practices related to sustainability, which require a connected Digital Thread.

I want to combine this post with the findings that Mattias Johansson, CEO of Eurostep, shared in his session: Why a Digital Thread makes a lot of sense, goes beyond manufacturing, and should be standards-based.

There are some interesting findings in these two presentations.

And there was the introduction of AI at the conference, with some experts’ talks and thoughts. Perhaps at this stage, it is too high on Gartner’s hype cycle to go into details. It will surely be THE topic of discussion, as you must have noticed.

The recent turmoil at OpenAI is an example of that. More to come for sure in the future.

Conclusion

The PLM Roadmap/PDT Europe conference was significant for me because I discovered that companies are working on concepts for a data-driven infrastructure for PLM and are (working on) implementing them. The end of monolithic PLM is visible, and companies need to learn to master data using ontologies, standards and connected digital threads.

During my summer holiday in my “remote” office, I had the chance to digest what I recently read, heard, saw and discussed related to the future of PLM.

I noticed this year/last year that many companies are discussing or working on their future PLM. It is time to make progress after COVID, particularly in digitization.

And as most companies are avoiding the risk of a “big bang”, they are exploring how they can improve their businesses in an evolutionary mode.

PLM is no longer a system

The most significant change I noticed in my discussions is the growing awareness that PLM is no longer covered by a single system.

More and more, PLM is considered a strategy, with which I fully agree. Therefore, implementing a PLM strategy requires holistic thinking and an infrastructure of different types of systems, where possible, digitally connected.

This trend is bad news for the PLM vendors as they continuously work on an end-to-end portfolio where every aspect of the PLM lifecycle is covered by one of their systems. The company’s IT department often supports the PLM vendors, as IT does not like a diverse landscape.

The main question is: “Every PLM Vendor has a rich portfolio on PowerPoint mentioning all phases of the product lifecycle.

However, are these capabilities implementable in an economical and user-friendly manner by actual companies, or do PLM players need to change their strategy?”

A question I will try to answer in this post.

The future of PLM

I have discussed several observed changes related to the effects of digitization in my recent blog posts, referencing others who have studied these topics in their organizations.

Some of the posts to refresh your memory are:

- Time to split PLM?

- People, Processes, Data and Tools?

- The rise and the fall of the BOM?

- The new side of PLM? Systems of Engagement!

To summarize, the following points have been discussed in these posts:

The As Is:

- The traditional PLM systems are examples of a System of Record, not designed to be end-user friendly but designed to have a traceable baseline for manufacturing, service and product compliance.

- The traditional PLM systems are tuned to a mechanical product introduction and release process in a coordinated manner, with a focus on BOM governance.

- The legacy information is stored in BOM structures and related specification files.

System of Record (ENOVIA image 2014)

The To Be:

- We are not talking about a PLM system anymore; a traditional System of Record will be digitally connected to different Systems of Engagement / Domains / Products, which have their own optimized environment for real-time collaboration.

- The BOM structures remain essential for the hardware part; however, overarching structures are needed to manage software and hardware releases for a product. These structures depend on connected datasets.

- To support digital twins at the various lifecycle stages (design, manufacturing, operations), product data needs to be based on and consumed by models.

- A future PLM infrastructure is hybrid, based on a Single Source of Change (SSoC) and an Authoritative Source of Truth (ASoT) instead of a Single Source of Truth (SSoT).

Various Systems of Engagement

Related podcasts

I relistened to two podcasts before writing this post, and I think they are a must to listen to.

The Peer Check podcast from Colab episode 17 — The State of PLM in 2022 w/Oleg Shilovitsky. Adam and Oleg have a great discussion about the future of PLM.

Highlights: From System to Platform – the new normal. A Single Source of Truth doesn’t work anymore – it is about value streams. People in big companies fear making wrong PLM decisions, which is seen as a significant risk for your career.

There is no immediate need to change the current status quo.

The Share PLM Podcast – Episode 6: Revolutionizing PLM: Insights from Yousef Hooshmand. Yousef talked with Helena and me about proven ways to migrate an old PLM landscape to a modern PLM/Business landscape.

Highlights: The term Single Source of Change and the existing concepts of a hybrid PLM infrastructure based on his experiences at Daimler and now at NIO. Yousef stresses the importance of having the vision and the executive support to execute.

The time of “big bangs” is over, and Yousef provided links to relevant content, which you can find here in the comments.

In addition, I want to point to the experiences provided by Erik Herzog in the Heliple project using OSLC interfaces as the “glue” to connect (in my terminology) the Systems of Engagement and the Systems of Record.

If you are interested in these concepts and want to learn and discuss them with your peers, more can be learned during the upcoming CIMdata PLM Roadmap / PDT Europe conference.

In particular, look at the agenda for day two if you are interested in this topic.

The future for the PLM vendors

If you look at the messaging of the current PLM Vendors, none of them is talking about this federated concept.

Their messaging focuses more on the transition from on-premise to the cloud, providing a SaaS offering with their portfolio.

I was slightly disappointed when I saw this article on Engineering.com provided by Autodesk: 5 PLM Best Practices from the Experiences of Autodesk and Its Customers.

The article is tool-centric, with statements that make sense and could be written by any PLM Vendor. However, Best Practice #1 Central Source of Truth Improves Productivity and Collaboration was the message that struck me. Collaboration comes from connecting people, not from the Single Source of Truth utopia.

I don’t believe PLM Vendors have to be afraid of losing their installed base rapidly with companies using their PLM as a System of Record. There is so much legacy stored in these systems that might still be relevant. The existence of legacy information, often documents, makes a migration or swap to another vendor almost impossible and unaffordable.

The System of Record is incompatible with data-driven PLM capabilities

I would like to see clearer developments from the PLM Vendors, creating a plug-and-play infrastructure for Systems of Engagement. Plug-and-play solutions could be based on a neutral partner collaboration hub like ShareAspace or the Systems of Engagement I discussed recently in my post and interview: The new side of PLM? Systems of Engagement!

Plug-and-play systems of engagement require interface standards, and PLM Vendors will only move in this direction if customers are pushing for that, and this is the chicken-and-egg discussion. And probably, their initiatives are too fragmented at the moment to come to a standard. However, don’t give up; keep building MVPs to learn and share.

Some people believe AI, with the examples we have seen with ChatGPT, will be the future direction without needing interface standards.

I am curious about your thoughts and experiences in that area and am willing to learn.

Talking about learning?

Besides reading posts and listening to podcasts, I also read an excellent book this summer. Martijn Dullaart, often participating in PLM and CM discussions, had decided to write a book based on the various discussions related to part (re-)identification (numbering, revisioning).

As Martijn starts in the preface:

“I decided to write this book because, in my search for more knowledge on the topics of Part Re-Identification, Interchangeability, and Traceability, I could only find bits and pieces but not a comprehensive work that helps fundamentally understand these topics”.

I believe the book should become standard literature for engineering schools that deal with PLM and CM, for software vendors and implementers and last but not least companies that want to improve or better clarify their change processes.

Martijn writes in an easily readable style and uses step-by-step examples to discuss the various options. There are even exercises at the end to use in a classroom or for your team to digest the content.

The good news is that the book is not about the past. You might also know Martijn for our joint discussion, The Future of Configuration Management, together with Maxime Gravel and Lisa Fenwick, on the impact of a model-based and data-driven approach to CM.

I plan to come back soon with a more dedicated discussion with Martijn. Meanwhile, start reading the book. Get your free chapter if needed by following the link at the bottom of this article.

I recommend buying the book as a paperback so you can navigate easily between the diagrams and the text.

Conclusion

The trend for federated PLM is becoming more and more visible as companies start implementing these concepts. The end of monolithic PLM is a threat and an opportunity for the existing PLM Vendors. Will they work towards an open plug-and-play future, or will they keep their portfolios closed? What do you think?

In the past few weeks, together with Share PLM, we recorded and prepared a few podcasts to be published soon. As you might have noticed, for Season 2, our target is to discuss the human side of PLM and PLM best practices and less the technology side. Meaning:

- How to align and motivate people around a PLM initiative?

- What are the best practices when running a PLM initiative?

- What are the crucial skills you need to have as a PLM lead?

And as there are always many success stories to learn from on the internet, we also challenged our guests to share the moments where they got experienced.

As the famous quote says:

Experience is what you get when you don’t get what you expect!

We recently published our episode with Antonio Casaschi from Assa Abloy, a Swedish company you might have never noticed, although their products and services are a part of your daily life.

It was a discussion close to my heart. We discussed the various aspects of PLM. What makes a person a PLM professional? And if you have no time to listen to these 35 minutes, read and scan the recording transcript on the transcription tab.

At 0:24:00, Antonio mentioned the concept of Proof of Concept as he had good experiences with them in the past. The remark triggered me to share some observations that a Proof of Concept (POC) is an old-fashioned way to drive change within organizations. Not discussed in this podcast but based on my experience, companies have been using Proofs of Concept to gain time, as they were afraid to make a decision.

A POC to gain time?

Company A

When working with a well-known company in 2014, I learned they were planning approximately ten POCs per year to explore new ways of working or new technologies. As each POC was based on an annual time scheme, the evaluation at the end of the year was often very discouraging.

Most of the time, the conclusion was: “Interesting, we should explore this further” /“What are the next POCs for the upcoming year?”

There was no commitment to follow-up; it was more of a learning exercise not connected to any follow-up.

Company B

During one of the PDT events, a company presented their two-year POC with the three leading PLM vendors, exploring supplier collaboration. I understood the PLM vendors had invested much time and resources to support this POC, expecting a big deal. However, the team mentioned it was an interesting exercise, and they learned a lot about supplier collaboration.

And nothing happened afterward ………

In 2019

At the 2019 Product Innovation Conference in London, when discussing Digital Transformation within the PLM domain, I shared in my conclusion that the POC was mainly a waste of time as it does not push you to transform; it is an option to win time but is uncommitted.

My main reason for not pushing a POC is that it is more of a limited feasibility study.

- First, it is often used to push people and processes into the technical capabilities of the systems used. A focus starting from technology is the opposite of what I have been advocating for a long time: first, focus on the value stream (people and processes), and then study which tools and technologies support these demands.

- Second, the POC approach often blocks innovation as the incumbent system providers will claim the desired capabilities will come (soon) within their systems – a safe bet.

The Minimum Viable Product approach (MVP)

With the awareness that we need to work differently and benefit from digital capabilities also came the term Minimum Viable Product or MVP.

The abbreviation MVP is not to be confused with the minimum valuable products or most valuable players.

There are two significant differences with the POC approach:

- You admit the solution does not exist anywhere – so it cannot be purchased or copied.

- You commit to the fact that this new approach will be the right direction to take and agree that the absence of a perfect-fit solution does not block you from starting for real.

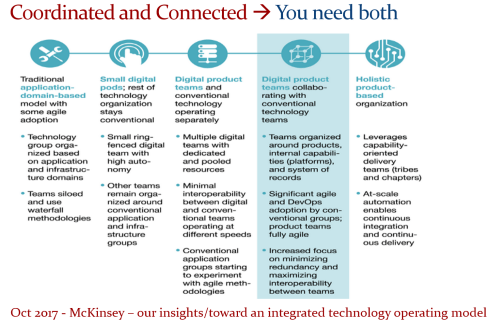

These two differences highlight the main challenges of digital transformation in the PLM domain. Digital Transformation is a learning process – it takes time for organizations to acquire and master the needed skills. And secondly, it cannot be a big bang, and I have often referred to the 2017 article from McKinsey: Toward an integrated technology operating model. Image below.

We will soon hear more about digital transformation within the PLM domain during the next episode of our SharePLM podcast. We spoke with Yousef Hooshmand, currently working for NIO, a Chinese multinational automobile manufacturer specializing in designing and developing electric vehicles, as their PLM data lead.

You might have discovered Yousef earlier when he published his paper: “From a Monolithic PLM Landscape to a Federated Domain and Data Mesh”. It is highly recommended to read the paper if you are interested in a potential future PLM infrastructure. I wrote about this whitepaper in 2022: A new PLM paradigm discussing the upcoming Systems of Engagement on top of a System of Record infrastructure.

To align our terminology with Yousef’s wording, his domains align with the Systems of Engagement definition.

As we discovered and discussed with Yousef, technology is not the blocking issue to start. You must understand the target infrastructure well and where each domain’s activities fit. Yousef mentions that there is enough literature about this topic, and I can refer to the SAAB conference paper: Genesis – an Architectural Pattern for Federated PLM.

For a less academic impression, read my blog post, The week after PLM Roadmap / PDT Europe 2022, where I share the highlights of Erik Herzog’s presentation: Heterogeneous and Federated PLM – is it feasible?

There is much to learn and discover which standards will be relevant, as both Yousef and Erik mention the importance of standards.

The podcast with Yousef (soon to be found HERE) was not so much about organizational change management and people.

However, Yousef mentioned the most crucial success factor for the transformation project he supported at Daimler. It was C-level support, trust and understanding of the approach, knowing it will be many years, an unavoidable journey if you want to remain competitive.

And with the journey aspect comes the importance of the Minimal Viable Product. You are starting a journey with an end goal in mind (top-of-the-mountain), and step by step (from base camp to base camp), people will be better covered in their day-to-day activities thanks to digitization.

A POC would not help you make the journey; perhaps a small POC would help you understand what it takes to cross a barrier.

Conclusion

The concept of POCs is outdated in a fast-changing environment where technology is not necessarily the blocking issue. Developing practices, new architectures and using the best-fit standards is the future. Embrace the Minimal Viable Product approach. Are you?
