Just before or during the summer holidays, we were pleased to resume our interview series on PLM and Sustainability, where the PLM Green Global Alliance interviews PLM-related software vendors and service organizations, discussing their sustainability missions and offerings.

Following recent discussions in the PLM ecosystem, including PSC Transition Technologies (EcoPLM), CIMPA PLM services (LCA), and the Design for Sustainability working group (with multiple vendors & service partners), we now have the opportunity to catch up with Sustaira after almost three years.

In 2022, Sustaira was a startup company focused on building and providing data-driven, efficient support for sustainability reporting and analysis based on the Mendix platform, while engaging with their first potential customers. What has happened in those three years?

SUSTAIRA

Sustaira provides a sustainability management software platform that helps organizations track, manage, and report their environmental, social, and governance (ESG) performance through customizable applications and dashboards.

We spoke again with Vincent de la Mar, founder and CEO of Sustaira, and it was clear from our conversation that Sustaira has evolved and grown, both as a business and in its value proposition. As you will discover by listening to the interview, they are not, per se, in the PLM domain.

Enjoy the 35-minute interview below.

Slides shown during the interview, combined with additional company information, can be found HERE.

What we have learned

- Sustaira is a modular, AI-driven sustainability platform. It offers approximately 150 “sustainability accelerators,” which are either complete Software as a Service (SaaS) products (such as carbon accounting, goal/KPI tracking, and disclosures) or adaptable SaaS products that allow for complete configuration of data models, logic, and user interfaces.

- Their strategy is based on three pillars:

- Providing an end-to-end sustainability platform (Ports of Jersey)

- Filling gaps in existing enterprise architectures and business needs (Science Based Targets initiative)

- Co-creating new applications with partners (BCAF with Siemens Financial Services)

- The company has a pragmatic view of AI. Thanks to its scalable, data-driven Mendix platform, it can deliver integrated value, whereas niche applications (e.g., dedicated CSRD apps) risk becoming obsolete as regulations and practices change.

- The Sustainability Global Alliance, in partnership with Capgemini, is a strategic alliance that benefits both parties, with a focus on AI & Sustainability.

- They have a strong partnership with Siemens Digital Solutions.

- Their monthly Sustainability and ESG Insights newsletter, also published in our PGGA group, already has 55,000 subscribers.

Want to learn more?

The following links provide more information related to Sustaira:

- About Sustaira:

- Sustaira’s sustainability marketplace

- Siemens and Sustaira partnership

- Capgemini and Sustaira partnership

- Customer Case Stories

- The Sustainability Insights LinkedIn Newsletter

- Navigating CSRD

- Content Hub (requires registration)

Conclusion

It was great to observe how Sustaira has grown over the past three years, establishing a broad portfolio of sustainability-related solutions for various types of businesses. Their relationship with Siemens Digital Solutions enables them to bring value and add capabilities to the Siemens portfolio, as their platform can be applied to any company that needs a complementary data-driven service related to sustainability insights and reporting.

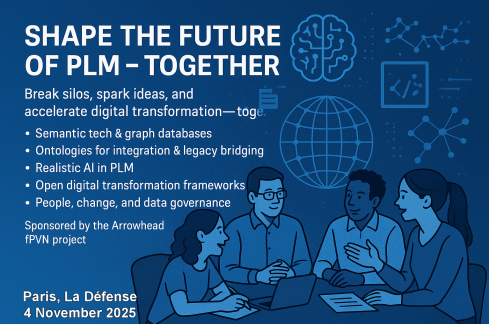

Follow the news around this event – click on the image to learn more.

In my first discussion with Rob Ferrone, the original Product Data PLuMber, we discussed the necessary foundation for implementing a Digital Thread or leveraging AI capabilities beyond the hype. This is important because all these concepts require data quality and data governance as essential elements.

If you missed part 1, here is the link: Data Quality and Data Governance – A hype?

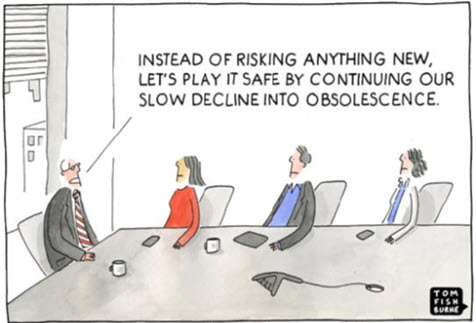

Rob, did you receive any feedback related to part 1? I spoke with a company that emphasized the importance of data quality; however, they were more interested in applying plasters, as they considered a broader approach too disruptive to their current business. Do you see similar situations?

Honestly, not much feedback. Data Governance isn’t as sexy or exciting as discussions on Designing, Engineering, Manufacturing, or PLM Technology. HOWEVER, as the saying goes, all roads lead to Rome, and all Digital Engineering discussions ultimately lead to data.

Cristina Jimenez Pavo’s comment illustrates that the question is in the air:

Everyone knows that it should be better; high-performing businesses have good data governance, but most people don’t know how to systematically and sustainably improve their data quality. It’s hard and not glamorous (for most), so people tend to focus on buying new systems, which they believe will magically resolve their underlying issues.

Data governance as a strategy

Thanks for the clarification. I imagine it is similar to Configuration Management, i.e., with different needs per industry. I have seen ISO 8000 in the aerospace industry, but it has not spread further to other businesses. What about data governance as a strategy, similar to CM?

That’s a great idea. Do you mind if I steal it?

If you ask any PLM or ERP vendor, they’ll claim to have a master product data governance template for every industry. While the core principles—ownership, control, quality, traceability, and change management, as in Configuration Management—are consistent, their application must vary based on the industry context, data types, and business priorities.

Designing effective data governance involves tailoring foundational elements, including data stewardship, standards, lineage, metadata, glossaries, and quality rules. These elements must reflect the realities of operations, striking a balance between trade-offs such as speed versus rigor or openness versus control.

The challenge is that both configuration management (CM) and data governance often suffer from a perception problem, being viewed as abstract or compliance-heavy. In truth, they must be practical, embedded in daily workflows, and treated as dynamic systems central to business operations, rather than static documents.

Think of it like the difference between stepping on a scale versus using a smartwatch that tracks your weight, heart rate, and activity, schedules workouts, suggests meals, and aligns with your goals.

Governance should function the same way: responsive, integrated, and outcome-driven.

Who is responsible for data quality?

I have seen companies reduce data quality improvement to a general call on everyone in the organization, a “You have to be more accurate” message, perhaps similar to how configuration management is often approached. Here we touch on people and organizational change. How do you make data quality improvement happen beyond wishful thinking?

In most companies, managing product data is a responsibility shared among all employees. But increasingly complex systems and processes are not designed around people, making the work challenging, unpleasant, and often poorly executed.

I like to quote Larry English – The Father of Information Quality:

“Information producers will create information only to the quality level for which they are trained, measured and held accountable.”

A common reaction is to add data “police” or transactional administrators, who unintentionally create more noise or burden those generating the data.

The real solution lies in embedding capable, proactive individuals throughout the product lifecycle who care about data quality as much as others care about the product itself. This was the topic of my presentation at the 2025 Share PLM Summit in Jerez (Rob Ferrone – Bill O-Materials), which was also mentioned in part 1 of our discussion.

These data professionals collaborate closely with designers, engineers, procurement, manufacturing, supply chain, maintenance, and repair teams. They take ownership of data quality in systems, without relieving engineers of their responsibility for the accuracy of source data.

Some data, like component weight, is best owned by engineers, while others—such as BoM structure—may be better managed by system specialists. The emphasis should be on giving data professionals precise requirements and the authority to deliver.

They not only understand what good data looks like in their domain but also appreciate the needs of adjacent teams. This results in improved data quality across the business, not just within silos. They also work with IT and process teams to manage system changes and lead continuous improvement efforts.

The real challenge is finding leaders with the vision and drive to implement this approach.

The costs or benefits associated with good or poor data quality

At the peak of interest in being data-driven, large consulting firms published numerous studies and analyses, proving that data-driven companies achieve better results than their data-averse competitors. Have you seen situations where the business case for improving “product data” quality has led to noticeable business benefits, and if so, in what range? Double digit, single digit?

Improving data quality in isolation delivers limited value. Data quality is a means to an end. To realise real benefits, you must not only know how to improve it, but also how to utilise high-quality data in conjunction with other levers to drive improved business outcomes.

I built a company whose premise was that good-quality product data flowing efficiently throughout the business delivered dividends due to improved business performance. We grew because we delivered results that outweighed our fees.

Last year’s turnover was €35M, so even with a conservatively estimated average in-year ROI of 3:1, the company delivered over €100M of cost savings or additional revenue per year to clients, with the majority of these benefits being sustainable.
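The estimate is straightforward arithmetic. A minimal sketch, assuming that turnover equals the fees clients paid and that a 3:1 ROI means three euros of benefit per euro of fees (the function name is illustrative):

```python
def client_benefit(fees_eur: float, roi_ratio: float) -> float:
    """In-year client benefit, assuming benefit = fees x ROI ratio."""
    return fees_eur * roi_ratio


turnover_eur = 35_000_000  # last year's turnover, assumed to equal client fees
roi_ratio = 3.0            # conservative average in-year ROI of 3:1

benefit = client_benefit(turnover_eur, roi_ratio)
print(f"Estimated client benefit: EUR {benefit / 1e6:.0f}M")  # EUR 105M
```

The result, about €105M, is consistent with the "over €100M" figure; at a 2:1 ROI the same turnover would correspond to €70M, which shows how much the headline number depends on the ROI assumption.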

There is also the potential to unlock new value and business models through data-driven innovation.

For example, connecting disparate product data sources into a unified view and taking steps to sustainably improve data quality enables faster, more accurate, and easier collaboration between OEMs, fleet operators, spare parts providers, workshops, and product users, which leads to a new value proposition around minimizing painful operational downtime.

AI and Data Quality

Currently, we are seeing numerous concepts emerge where AI, particularly AI agents, can be highly valuable for PLM. However, we also know that in legacy environments, the overall quality of data is poor. How do you envision AI supporting PLM processes, and where should you start? Or has it already started?

It’s like mining for rare elements—sifting through massive amounts of legacy data to find the diamonds. Is it worth the effort, especially when diamonds can now be manufactured? AI certainly makes the task faster and easier. Interestingly, Elon Musk recently announced plans to use AI to rewrite legacy data and create a new, high-quality knowledge base. This suggests a potential market for trusted, validated, and industry-specific legacy training data.

Will OEMs sell it as valuable IP, or will it be made open source like Tesla’s patents?

AI also offers enormous potential for data quality and governance. With capabilities ranging from live monitoring to proactive guidance, adopting data governance as a business strategy will become much easier. One can imagine AI forming the core of a company’s Digital Thread: no longer requiring rigidly hardwired systems and data flows, but instead intelligently comparing team data and flagging misalignments.

That said, data alignment remains complex, as discrepancies can be valid depending on context.

A practical starting point?

Data Quality as a Service. My former company, Quick Release, is piloting an AI-enabled service focused on EBoM to MBoM alignment. It combines a data quality platform with expert knowledge, collecting metadata from PLM, ERP, MES, and other systems to map engineering data models.

Experts define quality rules (completeness, consistency, relationship integrity), and AI enables automated anomaly detection. Initially, humans triage issues, but over time, as trust in AI grows, more of the process can be automated. Eventually, no oversight may be needed; alerts could be sent directly to those empowered to act, whether human or AI.
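The three rule types can be illustrated with plain rule-based checks. The sketch below is hypothetical and is not Quick Release's service: it compares flattened EBoM and MBoM quantity maps (ignoring any restructuring between the two views), applies one completeness, one consistency, and one relationship-integrity rule, skips deviations already accepted as valid in context, and routes each issue to an assumed owner for human triage. The AI-based anomaly detection described above is not modeled. All part numbers, owner roles, and function names are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Issue:
    rule: str    # quality rule that fired
    part: str    # part number concerned
    detail: str  # explanation shown in the triage queue


# Hypothetical ownership: who is empowered to act on each rule type.
RULE_OWNERS = {
    "completeness": "manufacturing engineering",
    "consistency": "product data specialist",
    "integrity": "parts master data steward",
}


def check_alignment(ebom, mbom, parts_master, accepted_deviations=frozenset()):
    """Compare flattened EBoM and MBoM quantity maps (part number -> quantity)."""
    issues = []
    for part, qty in ebom.items():
        if part in accepted_deviations:
            continue  # discrepancy already judged valid in context
        if part not in mbom:
            # Completeness: every engineered part must be planned for manufacturing.
            issues.append(Issue("completeness", part, "in EBoM but missing from MBoM"))
        elif mbom[part] != qty:
            # Consistency: quantities must agree between the two views.
            issues.append(Issue("consistency", part, f"EBoM qty {qty} vs MBoM qty {mbom[part]}"))
    for part in mbom:
        if part in accepted_deviations:
            continue
        if part not in parts_master:
            # Relationship integrity: the MBoM may only reference known parts.
            issues.append(Issue("integrity", part, "references a part not in the parts master"))
    return issues


def route(issues):
    """Group issues by the owner who should triage them."""
    queue = {}
    for issue in issues:
        queue.setdefault(RULE_OWNERS[issue.rule], []).append(issue)
    return queue


# A-050 is an engineering sub-assembly assumed to be dissolved in the MBoM.
ebom = {"P-100": 1, "P-200": 4, "P-300": 2, "A-050": 1}
mbom = {"P-100": 1, "P-200": 3, "P-900": 1, "GREASE-01": 1}
parts_master = {"P-100", "P-200", "P-300", "A-050", "GREASE-01"}

issues = check_alignment(ebom, mbom, parts_master, accepted_deviations={"A-050"})
for owner, items in route(issues).items():
    for issue in items:
        print(f"{owner}: [{issue.rule}] {issue.part} - {issue.detail}")
```

As trust grows, the `route` step is where a human triage queue could be replaced by alerts sent straight to the responsible owner.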

Summary

We hope the discussions in parts 1 and 2 helped you understand where to begin. It doesn’t need to stay theoretical or feel unachievable.

- The first step is simple: recognise product data as an asset that powers performance, not just admin. Then treat it accordingly.

- You don’t need a 5-year roadmap or a board-approved strategy before you begin. Start by identifying the product data that supports your most critical workflows, the stuff that breaks things when it’s wrong or missing. Work out what “good enough” looks like for that data at each phase of the lifecycle. Then look around your business: who owns it, who touches it, and who cares when it fails?

- From there, establish the roles, rules, and routines that help this data improve over time, even if it’s manual and messy to begin with. Add tooling where it helps.

- Use quality KPIs that reflect the business, not the system. Focus your governance efforts where there’s friction, waste, or rework.

- And where are you already getting value? Lock it in. Scale what works.

Conclusion

It’s not about perfection or policies; it’s about momentum and value. Data quality is a lever. Data governance is how you pull it.

Just start pulling, and then get serious with your AI applications!

Are you attending the PLM Roadmap/PDT Europe 2025 conference on November 5th & 6th in Paris, La Défense?

There is an opportunity to discuss the future of PLM in a workshop before the event.

More information will be shared soon; please mark November 4th in the afternoon on your agenda.

The title of this post was influenced by one of Jan Bosch’s daily reflections, #156: Hype-as-a-Service. You can read his full reflection here.

His post reminded me of a topic that I frequently mention when discussing modern PLM concepts with companies and peers in my network: data quality and data governance, sometimes in the context of the connected digital thread and, more recently, in the context of applying AI in the PLM domain.

I’ve noticed that when I emphasize the importance of data quality and data governance, there is always a lot of agreement from the audience. However, when discussing these topics with companies, the details become vague.

Yes, there is a desire to improve data quality, and yes, we push our people to improve the quality of the information they produce. Still, I was curious whether companies have an overall strategy.

And who better to talk to than Rob Ferrone, well known as “The original Product Data PLuMber”? Together, we will discuss the topic of data quality and governance in two posts. Here is part one: defining the playground.

The need for Product Data People

During the Share PLM Summit, I was inspired by Rob’s theatre play, “The Engineering Murder Mystery.” Thanks to the presence of Michael Finocchiaro, you might have seen the play already on LinkedIn – if you have 20 minutes, watch it now.

Rob’s ultimate plea was to add product data people to your company to make data reliable and keep it flowing. So, for me, he is the right person to explain what we mean by data quality and data governance in reality. Or is it still hype?

What is data?

Hi Rob, thank you for having this conversation. Before discussing quality and governance, could you share with us what you consider ‘data’ within our PLM scope? Is it all the data we can imagine?

I propose that relevant PLM data encompasses all product-related information across the lifecycle, from conception to retirement. Core data includes part or item details, usage, function, revision/version, effectivity, suppliers, attributes (e.g., cost, weight, material), specifications, lifecycle state, configuration, and serial number.

Secondary data supports lifecycle stages and includes requirements, structure, simulation results, release dates, orders, delivery tracking, validation reports, documentation, change history, inventory, and repair data.

Tertiary data, such as customer information, can provide valuable support for marketing or design insights. HR data is generally outside the scope, although it may be referenced when evaluating the impact of PLM on engineering resources.

What is data quality?

Now that we have a data scope in mind, I can imagine that there is also some nuance in the term ‘data quality’. Do we strive for 100% correct data, and is “100% correct” perhaps too ambitious? How would you define and address data quality?

You shouldn’t just want data quality for data quality’s sake. You should want it because your business processes depend on it. As for 100%, not all data needs to be accurate and available simultaneously. It’s about having the proper maturity of data at the right time.

For example, when you begin designing a component, you may not need to have a nominated supplier, and estimated costs may be sufficient. However, missing supplier nomination or estimated costs would count against data quality when it is time to order parts.

And these deliverable timings will vary across components, so 100% quality might only be achieved when the last standard part has been identified and ordered.
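The idea of the right data maturity at the right time can be expressed as phase-dependent required fields. A minimal sketch, assuming three hypothetical phases and illustrative field names: an estimated cost is enough during design, while a nominated supplier only becomes mandatory when it is time to order.

```python
# Hypothetical phase gates: fields that must be filled before a part passes each phase.
REQUIRED_BY_PHASE = {
    "concept": ["part_number", "description"],
    "design": ["part_number", "description", "material", "weight_kg", "estimated_cost"],
    "ordering": ["part_number", "description", "material", "weight_kg",
                 "estimated_cost", "supplier"],
}


def missing_fields(record: dict, phase: str) -> list:
    """Return the fields required in `phase` that are absent or empty in `record`."""
    return [f for f in REQUIRED_BY_PHASE[phase] if record.get(f) in (None, "")]


def readiness(parts: list, phase: str) -> float:
    """Percentage of parts that meet the data requirements of `phase`."""
    ready = sum(not missing_fields(p, phase) for p in parts)
    return 100.0 * ready / len(parts)


bracket = {"part_number": "P-200", "description": "Mounting bracket", "material": "AlMg3",
           "weight_kg": 0.42, "estimated_cost": 3.10, "supplier": None}
bolt = {"part_number": "P-310", "description": "Hex bolt M6x20", "material": "8.8 steel",
        "weight_kg": 0.006, "estimated_cost": 0.05, "supplier": "Supplier A"}

print(missing_fields(bracket, "design"))    # []
print(missing_fields(bracket, "ordering"))  # ['supplier']
print(readiness([bracket, bolt], "design"), readiness([bracket, bolt], "ordering"))  # 100.0 50.0
```

The same bracket record is complete for design but counts against quality at ordering; readiness for a phase only reaches 100% once the last part meets that phase's requirements.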

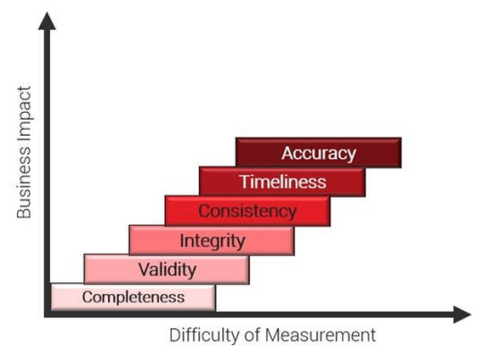

It is more important to know when you have reached the required data quality objective for the top-priority content. The image below explains the data quality dimensions:

- Completeness (Are all required fields filled in?)

KPI Example: % of product records that include all mandatory fields (e.g., part number, description, lifecycle status, unit of measure)

- Validity (Do values conform to expected formats, rules, or domains?)

KPI Example: % of customer addresses that conform to ISO 3166 country codes and contain no invalid characters

- Integrity (Do relationships between data records hold?)

KPI Example: % of BOM records where all child parts exist in the Parts Master and are not marked obsolete

- Consistency (Is data consistent across systems or domains?)

KPI Example: % of product IDs with matching descriptions and units across PLM and ERP systems

- Timeliness (Is data available and updated when needed?)

KPI Example: % of change records updated within 24 hours of approval or effective date

- Accuracy (Does the data reflect real-world truth?)

KPI Example: % of asset location records that match actual GPS coordinates from service technician visits
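Four of these KPI examples can be computed directly from system extracts. The sketch below is a minimal illustration, assuming records are plain dictionaries; the field names, the country-code subset (a real check would use the full ISO 3166-1 list), and the allowed-character pattern are assumptions. Timeliness and accuracy are left out because they need event timestamps or field observations.

```python
import re


def pct(passed: int, total: int) -> float:
    """Share of passing records in percent; an empty population counts as 100%."""
    return 100.0 if total == 0 else 100.0 * passed / total


MANDATORY = ("part_number", "description", "lifecycle_status", "unit_of_measure")
ISO3166_SUBSET = {"NL", "DE", "FR", "GB", "US"}  # illustrative subset, not the full standard
ALLOWED_STREET = re.compile(r"[\w\s.,'/#-]+")    # assumed definition of "valid characters"


def completeness(products):
    ok = sum(all(p.get(f) not in (None, "") for f in MANDATORY) for p in products)
    return pct(ok, len(products))


def validity(addresses):
    ok = sum(a.get("country") in ISO3166_SUBSET
             and ALLOWED_STREET.fullmatch(a.get("street") or "") is not None
             for a in addresses)
    return pct(ok, len(addresses))


def integrity(bom_lines, parts_master):
    """parts_master maps part number -> lifecycle status."""
    ok = sum(line["child"] in parts_master and parts_master[line["child"]] != "obsolete"
             for line in bom_lines)
    return pct(ok, len(bom_lines))


def consistency(plm, erp):
    """plm/erp map product ID -> (description, unit); IDs missing in ERP count as failures."""
    ok = sum(pid in erp and erp[pid] == plm[pid] for pid in plm)
    return pct(ok, len(plm))


products = [
    {"part_number": "P-100", "description": "Housing", "lifecycle_status": "released", "unit_of_measure": "EA"},
    {"part_number": "P-200", "description": "", "lifecycle_status": "in work", "unit_of_measure": "EA"},
]
addresses = [
    {"country": "NL", "street": "Stationsweg 12"},
    {"country": "XX", "street": "Main Street 1"},
    {"country": "DE", "street": "Hauptstr. 5\x00"},  # contains a control character
]
bom_lines = [{"child": "P-100"}, {"child": "P-050"}, {"child": "P-999"}, {"child": "P-200"}]
parts_master = {"P-100": "released", "P-050": "obsolete", "P-200": "in work"}
plm = {"P-100": ("Housing", "EA"), "P-200": ("Bracket", "EA"), "P-300": ("Cable", "M")}
erp = {"P-100": ("Housing", "EA"), "P-200": ("Bracket", "PCS")}

for name, value in [("Completeness", completeness(products)),
                    ("Validity", validity(addresses)),
                    ("Integrity", integrity(bom_lines, parts_master)),
                    ("Consistency", consistency(plm, erp))]:
    print(f"{name}: {value:.1f}%")
```

Each function returns a percentage, so the results can be tracked over time and compared against targets set per business process.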

Define data quality KPIs based on business process needs, ensuring they drive meaningful actions aligned with project goals.

While defining quality is one challenge, detecting issues is another. Data quality problems vary in severity and detection difficulty, and their importance can shift depending on the development stage. It’s vital not to prioritize one measure over others, e.g., having timely data doesn’t guarantee that it has been validated.
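The point that timely data is not necessarily validated data can be shown with the timeliness KPI from the list above. A minimal sketch, assuming change records carry approval and update timestamps plus a hypothetical `validated` flag; record IDs and dates are illustrative:

```python
from datetime import datetime, timedelta

SLA = timedelta(hours=24)  # KPI example: updated within 24 hours of approval

changes = [
    {"id": "ECN-1", "approved": datetime(2025, 6, 2, 9), "updated": datetime(2025, 6, 2, 15), "validated": True},
    {"id": "ECN-2", "approved": datetime(2025, 6, 2, 9), "updated": datetime(2025, 6, 2, 11), "validated": False},
    {"id": "ECN-3", "approved": datetime(2025, 6, 2, 9), "updated": datetime(2025, 6, 4, 9), "validated": True},
]


def is_timely(change: dict) -> bool:
    """True if the record was updated within the SLA after approval."""
    return change["updated"] - change["approved"] <= SLA


timeliness = 100.0 * sum(is_timely(c) for c in changes) / len(changes)
# A record is fit for use only when it passes both dimensions, not just one.
fit_for_use = [c["id"] for c in changes if is_timely(c) and c["validated"]]

print(f"Timeliness: {timeliness:.1f}%")  # Timeliness: 66.7%
print(f"Fit for use: {fit_for_use}")      # Fit for use: ['ECN-1']
```

ECN-2 contributes to the 66.7% timeliness score even though it has not been validated, which is why a single KPI should not be read in isolation.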

Like the VUCA framework, effective data quality management begins by understanding the nature of the issue: is it volatile, uncertain, complex, or ambiguous?

Not all “bad” data is flawed; some may be valid estimates, changes, or system-driven anomalies. Each scenario requires a tailored response; treating all issues the same can lead to wasted effort or overlooked insights.

Furthermore, data quality goes beyond the data itself—it also depends on clear definitions, ownership, monitoring, maintenance, and governance. A holistic approach ensures more accurate insights and better decision-making throughout the product lifecycle.

KPIs?

In many (smaller) companies, KPIs do not exist; they adjust their business based on experience and financial results. Are companies ready for these KPIs, or do they need to establish a data governance baseline first?

Many companies already use data to run parts of their business, often with little or no data governance. They may track program progress, but rarely systematically monitor data quality. Attention tends to focus on specific data types during certain project phases, often employing audits or spot checks without establishing baselines or implementing continuous monitoring.
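The difference between spot checks and continuous monitoring against a baseline can be sketched in a few lines. This is a minimal illustration, assuming a KPI (here completeness) is captured as weekly snapshots; the baseline window, the 5-point tolerance, and the sample values are hypothetical.

```python
from statistics import mean


def monitor(history, baseline_window=4, tolerance=5.0):
    """Flag periods whose KPI drops more than `tolerance` points below the baseline.

    history: chronological list of (period, kpi_percent) snapshots.
    The baseline is the mean of the first `baseline_window` snapshots.
    """
    if len(history) < baseline_window:
        raise ValueError("not enough snapshots to establish a baseline")
    baseline = mean(value for _, value in history[:baseline_window])
    alerts = [period for period, value in history[baseline_window:]
              if value < baseline - tolerance]
    return baseline, alerts


weekly_completeness = [("W01", 91.0), ("W02", 93.0), ("W03", 92.0), ("W04", 92.0),
                       ("W05", 90.5), ("W06", 85.0), ("W07", 86.5)]

baseline, alerts = monitor(weekly_completeness)
print(f"Baseline: {baseline:.1f}%, alerts: {alerts}")  # Baseline: 92.0%, alerts: ['W06', 'W07']
```

A one-off audit in W05 would have seen 90.5% in isolation; comparing each snapshot with the baseline is what surfaces the decline from W06 onwards, before it causes visible problems.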

This reactive approach means issues are only addressed once they cause visible problems.

When data problems emerge, trust in the system declines. Teams revert to offline analysis, build parallel reports, and generate conflicting data versions. A lack of trust worsens data quality and wastes time resolving discrepancies, making it difficult to restore confidence. Leaders begin to question whether the data can be trusted at all.

Data governance typically evolves; it’s challenging to implement from the start. Organizations must understand their operations before they can govern data effectively.

In start-ups, governance is difficult too. While they benefit from a clean slate, their fast-paced, prototype-driven environment prioritizes innovation over stable governance. Unlike established OEMs with mature processes, start-ups focus on agility, which makes structured governance hard to implement in the early stages.

Data governance is a business strategy, similar to Product Lifecycle Management.

Before they go on the journey of creating data management capabilities, companies must first understand:

- The cost of not doing it.

- The value of doing it.

- The cost of doing it.

What is the cost associated with not doing data quality and governance?

Similar to configuration management, companies might find it a bureaucratic overhead that is hard to justify. As long as things are going well (enough) and the company’s revenue or reputation is not at risk, why add this extra work?

Product data quality is either a tax or a dividend. In Part 2, I will discuss the benefits. In Part 1, this discussion, I will focus on the cost of not doing it.

Every business has stories of costly failures caused by incorrect part orders, uncommunicated changes, or outdated service catalogs. It’s a systemic disease in modern, complex organisations. It’s part of our day-to-day working lives: multiple files with slightly different file names, important data hidden in lengthy email chains, and various sources for the same information (where the value differs across sources), among other challenges.

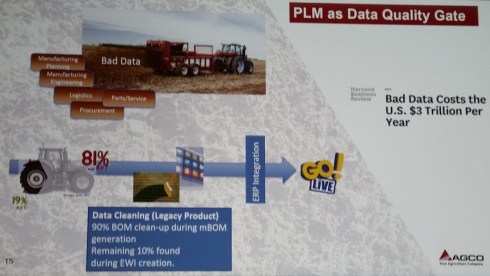

The image above is from Susan Lauda’s presentation at the PLMx 2018 conference in Hamburg, where she shared the hidden costs of poor data. Please read about it in my blog post: The weekend after PLMx Hamburg.

Poor product data can impact more than most teams realize. It wastes time—people chase missing info, duplicate work, and rerun reports. It delays builds, decisions, and delivery, hurting timelines and eroding trust. Quality drops due to incorrect specifications, resulting in rework and field issues. Financial costs manifest as scrap, excess inventory, freight, warranty claims, and lost revenue.

Worse, poor data leads to poor decisions: wrong platforms, bad supplier calls, and unrealistic timelines. It also creates compliance risks and traceability gaps that can trigger legal trouble. When supply chain visibility is lost, the consequences aren’t just internal; they become public.

For example, in Tony’s Chocolonely’s case, despite their ethical positioning, they were removed from the Slave Free Chocolate list after 1,700 child labour cases were discovered in their supplier network.

The good news is that most of these unwanted costs are preventable. There are often very early indicators that something is going to become a problem; they are just not being looked at.

Better data governance equals better decision-making power.

Visibility prevents the inevitable.

Conclusion of part 1

Thanks to Rob’s answers, I am confident that you now have a better understanding of what Data Quality and Data Governance mean in the context of your business. In addition, we discussed the cost of doing nothing. In Part 2, we will explore how to implement it in your company, and Rob will share some examples of the benefits.

Feel free to post your questions for the original Product Data PLuMber in the comments.

Four years ago, during the COVID-19 pandemic, we discussed the critical role of a data plumber.

Wow, what a tremendous amount of impressions to digest when traveling back from Jerez de la Frontera, where Share PLM held its first PLM conference. You might have seen the energy in the messages on LinkedIn, as this conference had a new, unique and daring starting point: human-led transformations.

Look what Jens Chemnitz, Linda Kangastie, Martin Eigner, Jakob Äsell or Oleg Shilovitsky had to say.

For over twenty years, I have attended all kinds of PLM events, either vendor-neutral or from specific vendors. None of these conferences created so many connections between the attendees and the human side of PLM implementation.

We can present perfect PLM concepts, architectures and methodologies, but the crucial success factor is the people—they can make or break a transformative project.

Here are some of the first highlights for those who missed the event and feel sorry they missed the vibe. I might follow up in a second post with more details. And sorry for the reduced quality—I am still enjoying Spain and refuse to use AI to generate this human-centric content.

The scenery

Approximately 75 people attended the event at Bodegas Fundador, a historic bodega in the center of Jerez. It is not a typical place for PLM experts, but an excellent place for humans, with its Andalusian atmosphere. It was great to see companies like Razorleaf, Technia, Aras, XPLM and QCM sponsor the event, confirming their commitment. You cannot start a conference from scratch alone.

The next great differentiator was the diversity of the audience. Almost 50% of the attendees were women, all working on the human side of PLM.

Another brilliant idea was to have the summit breakfast in the back of the stage area, so before the conference days started, you could mingle and mix with the people instead of having a lonely breakfast in your hotel.

Now, let’s go into some of the highlights; there were more.

A warm welcome from Share PLM

Beatriz Gonzalez, CEO and co-founder of Share PLM, kicked off the conference, explaining the importance of human-led transformations and organizational change management and sharing some of their best practices that have led to success for their customers.

You might have seen this famous image in the past, explaining why you must address people’s emotions.

Working with Design Sprints?

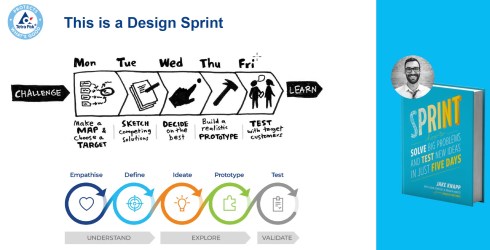

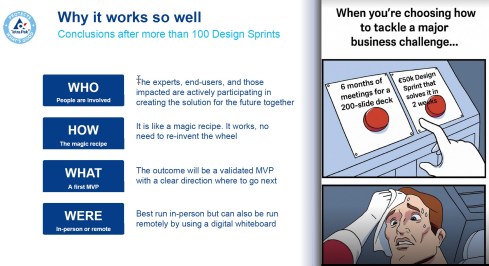

Have you ever heard of design sprints as a methodology for problem-solving within your company? If not, you should read the book by Jake Knapp, the creator of the Design Sprint.

Andrea Järvén, program manager at Tetra Pak who works closely with the PLM team, recommended this to us. She explained how Tetra Pak successfully used design sprints to implement changes. You would use design sprints when development cycles run too long, teams lose enthusiasm and focus, work is fragmented, and the challenges are too complex.

Instead of a big waterfall project, you run many small design sprints with the relevant stakeholders per sprint, coming step by step closer to the desired outcome.

The sprints are short: five days of a team’s full commitment to a business challenge, with a dedicated goal for every day, as you can see from the image above.

It was an eye-opener, and I am eager to learn where this methodology can be used in the PLM projects I contribute to.

Unlocking Success: Building a Resilient Team for Your PLM Journey

Johan Mikkelä from FLSmidth shared a great story about the skills, capacities, and mindset needed for a PLM transformational project.

Johan brought up several topics to consider when implementing a PLM project based on his experiences.

One statement that resonated well with the audience of this conference was:

The more diversified your team is, the faster you can adapt to changes.

He mentioned that PLM projects feel like a marathon, and I believe it is true when you talk about a single project.

However, instead of a marathon, we should approach PLM activities as a never-ending, pleasant journey: it is not about reaching a finish line, but about enjoying each step, observing, and slightly changing direction when needed.

Strategic Shift of Focus – a human-centric perspective

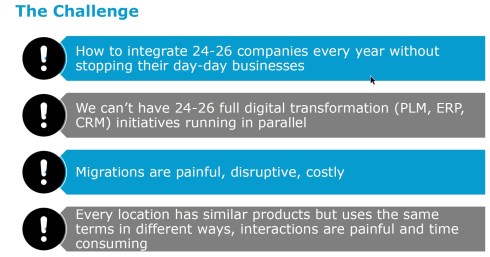

Besides great storytelling, Antonio Casaschi’s PLM learning journey at Assa Abloy was a perfect example of why PLM theory and reality often do not match. With much energy and experience, he came to Assa Abloy to work on the PLM strategy.

He started his PLM strategy top-down, trying to rationalize the PLM infrastructure within Assa Abloy, where a big Teamcenter implementation in the past had left a bad perception. Antonio and his team were seen as the enemies disrupting the day-to-day life of the 200+ companies under the Assa Abloy umbrella.

A logical lesson learned here is that aiming top-down for a common PLM strategy is impossible in a company that acquires six new companies per quarter.

His final strategy is bottom-up: he and the team listen to and work with the end-users in their native environments. They have now become trusted advisors, as they have broad PLM experience but focus on current user pains. With the proper interaction, this team of trusted advisors can help each individual company move towards a more efficient and future-focused infrastructure at its own pace.

The great lessons I learned from Antonio are:

- If your plan does not work out, be open to failure. Learn from your failures and aim for the next success.

- Human relations—I trust you, understand you, and know what to do—are crucial in such a complex company landscape.

Navigating Change: Lessons from My First Year as a Program Manager

Linda Kangastie from Valmet Technologies Oy in Finland shared her experiences within the company, from being a PLM key user to now being a PLM program manager for the PAP Digi Roadmap, containing PLM, sales tools, installed base, digitalization, process harmonization and change management, business transformation—a considerable scope.

The recommendations she gave should be a checklist for most PLM projects – if one of them is missing, ask yourself why:

- THE ROADMAP and THE BIG PICTURE – is your project supported by a vision and a related roadmap of milestones to achieve?

- Biggest Buy-in comes with money! – The importance of a proper business case describing the value of the PLM activities and working with use cases demonstrating the value.

- Identify the correct people in the organization – the people that help you win, find sparring partners in your organization and make sure you have a common language.

- Repetition – taking time to educate, learn new concepts and have informal discussions with people – is a continuous process.

As you can see, there is no discussion about technology – it is about business and people.

To conclude, other speakers mentioned this topic too; it is about being honest and increasing trust.

The Future Is Human: Leading with Soul in a World of AI

Helena Gutiérrez’s keynote on day two touched me the most, as she shared her optimistic vision of a future where AI will allow us to be much more efficient in using our time, combined, of course, with new ways of working and behaviors.

As an example, she showed how she had taken an academic paper from Martin Eigner and, using an AI tool, transformed the German paper into an English learning course, including quizzes. All of this took half a day, compared to the 3 to 4 days it would take the Share PLM team.

With the time we save on non-value-added work, we should not remain addicted to passive entertainment behind a flat screen. There is an opportunity to restore in-person human and social interactions in the areas and places where we want to satisfy our human curiosity.

I agree with her optimism. During COVID-19 and the introduction of Teams and Zoom sessions, I saw people become resources who popped up at designated times behind a flat screen.

The real human world, with people talking in the corridors and at the coffee machine, was gone. These are the places where social interactions and innovation happen. Coffee stimulates our human brain; we are social beings, not resources.

Death on the Shop Floor: A PLM Murder Mystery

Rob Ferrone’s theatre play was an original way of explaining and showing that everyone in the company does their best. The product was found dead, and Andrea Järvén alias Angie Neering, Oleg Shilovitsky alias Per Chasing, Patrick Willemsen alias Manny Facturing, Linda Kangastie alias Gannt Chartman and Antonio Casaschi alias Archie Tect were found guilty or not guilty by the audience jury, mainly based on the audience’s prejudices.

You can watch the play here, thanks to Michael Finocchiaro:

According to Rob, what is needed to solve the problems that allow products to die is the missing discipline of product data people, who care for the flow, speed, and quality of product data. Rob gave some examples from Quick Release projects he had worked on.

My learning from this presentation is that you can make PLM stories fun. Even more important: instead of improving data quality by pushing each individual to be more accurate (it seems easy to push, but we know the resulting quality), you should implement a dedicated workforce with this responsibility. The ROI for these people is clear.

Note: I believe that once companies become more mature in working with data-driven tools and processes, AI will slowly take over the role of these product data people.

Conclusion

I greatly respect Helena Gutiérrez and the Share PLM team. I appreciate how they demonstrated that organizing a human-centric PLM summit brings much more excitement than traditional technology- or industry-focused PLM conferences. Starting from the human side of the transformation, the audience was much more diverse and connected.

Closing the conference with a fantastic flamenco performance was perhaps another excellent demonstration of the human-centric approach. The raw performance, a combination of dance, music, and passion, went straight to the heart of the audience – this is how PLM should be (though not every day).

There is so much more to share. Meanwhile, you can read more highlights through Michael Finocchiaro’s overview channel here.

First, an important announcement. In the last two weeks, I have finalized preparations for the upcoming Share PLM Summit in Jerez on 27-28 May. With the Share PLM team, we have been working on a non-typical PLM agenda. Share PLM, like me, focuses on organizational change management and the HOW of PLM implementations; there will be more emphasis on the people side.

Often, PLM implementations are either IT-driven or business-driven to address a need, and the people who need to work with the result come last, as the closing topic. Time and budget are spent on technology and process definitions, and then people get trained. Often it is only train-the-trainer, as there is no budget or time left to let the organization adapt, and a rapid ROI is expected.

This approach neglects that PLM implementations are enablers for business transformation. Instead of doing things slightly more efficiently, significant gains can be made by doing things differently, starting with the people and their optimal new way of working, and then providing the best tools.

The conference aims to start with the people, sharing human-related experiences and enabling networking between people – not only about the industry practices (there will be sessions and discussions on this topic too).

If you are curious about the details, listen to the podcast recording we published last week to understand the difference – click on the image on the left.

And if you are interested and have the opportunity, join us and meet some great thought leaders and others with this shared interest.

Why is modern PLM a dream?

If you are connected to the LinkedIn posts in my PLM feed, you might have the impression that everyone is gearing up for modern PLM. Articles often created with AI support spark vivid discussions. Before diving into them with my perspective, I want to set the scene by explaining what I mean by modern PLM and traditional PLM.

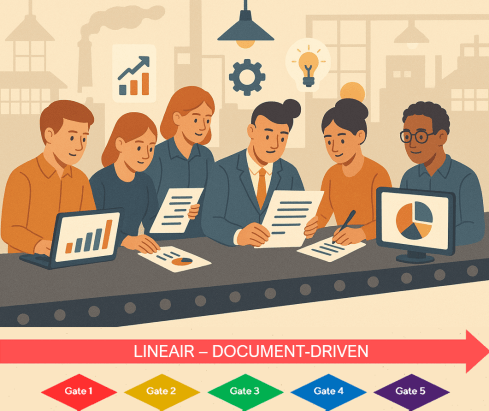

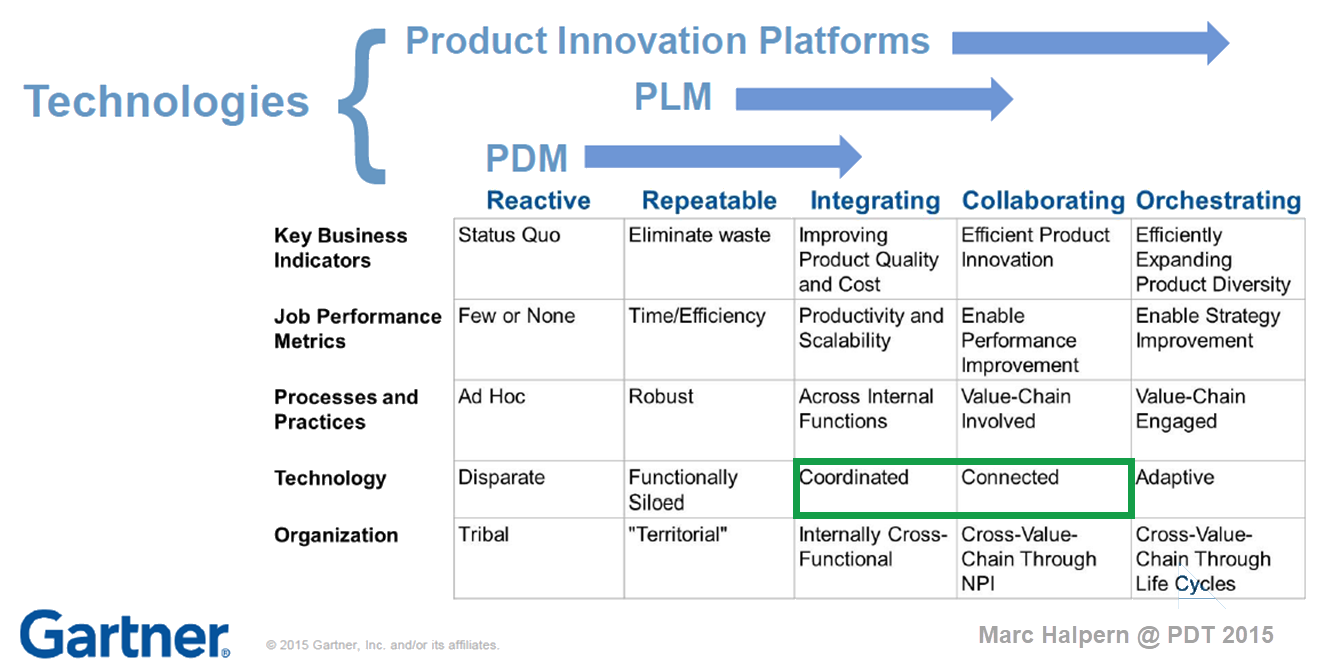

Traditional PLM

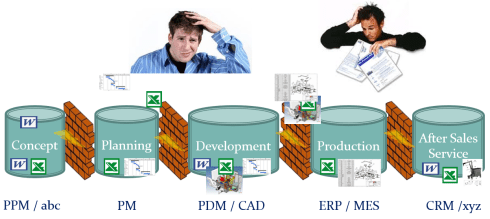

Traditional PLM is often associated with implementing a PLM system, mainly serving engineering. Downstream engineering data usage is usually pushed manually or through interfaces to other enterprise systems, like ERP, MES and service systems.

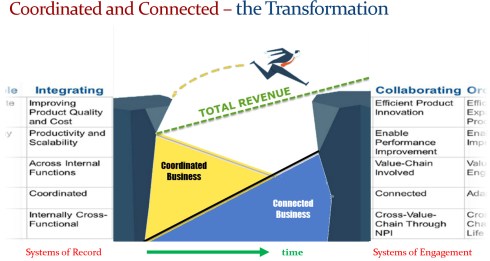

Traditional PLM is closely connected to the coordinated way of working: a linear process based on passing documents (drawings) and datasets (BOMs). Historically, CAD integrations have been the most significant characteristic of these systems.

The coordinated approach fits people working within their authoring tools and sharing data through integrations. The PLM system becomes a system of record, and a system of record is not designed to be user-friendly.

Unfortunately, most PLM implementations in the field are based on this approach and are sometimes characterized as advanced PDM.

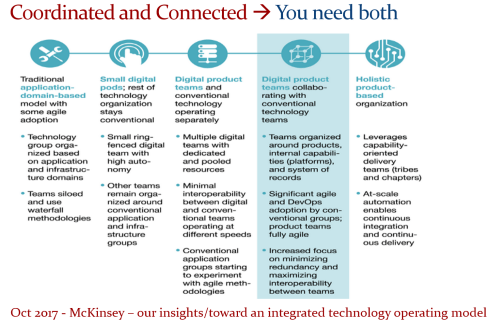

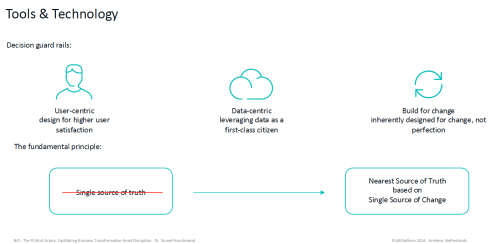

You recognize traditional PLM thinking when people talk about the single source of truth.

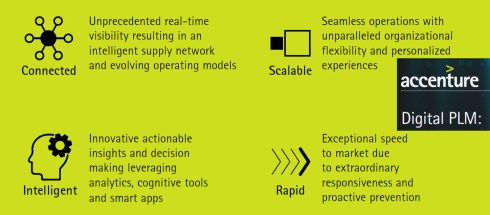

Modern PLM

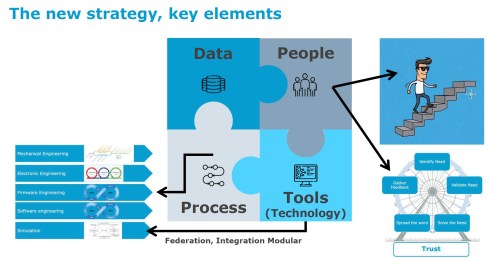

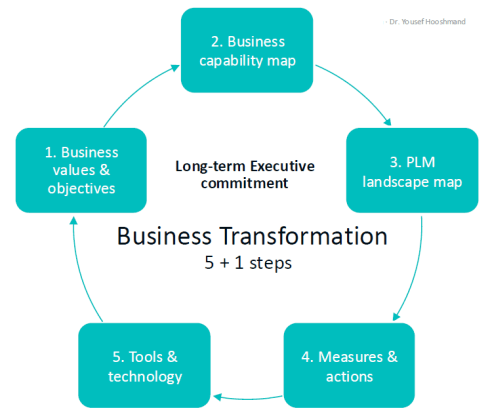

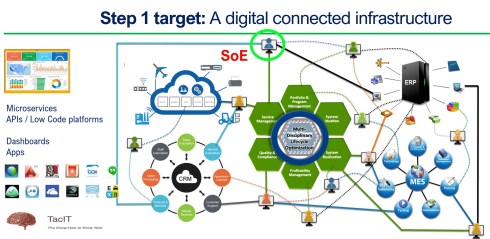

When I talk about modern PLM, it is no longer about a single system. Modern PLM starts from a business strategy implemented by a data-driven infrastructure. The strategy part remains a challenge at the board level: how do you translate PLM capabilities into business benefits – the WHY?

I will return to this challenge later, because in our PLM community, most discussions are IT-driven: architectures, ontologies, and technologies – the WHAT.

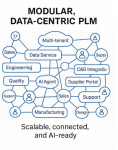

For the WHAT, there seems to be a consensus that modern PLM is based on a federated

I think this article from Oleg Shilovitsky, “Rethinking PLM: Is It Time to Move Beyond the Monolith?”, AND the discussion thread in this post are a must-read. I will not repeat the content here.

After reading Oleg’s post and the comments, come back here.

The reason for this approach: it is a perfect example of the connected approach. Instead of collecting all the information inside one post (or book?), the information can be accessed by following digital threads. It also illustrates that in a connected environment, you do not own the data; the data comes from accountable people.

Building such a modern infrastructure is challenging when your company depends mainly on its legacy—the people, processes and systems. Where to change, how to change and when to change are questions that should be answered at the top and require a vision and evolutionary implementation strategy.

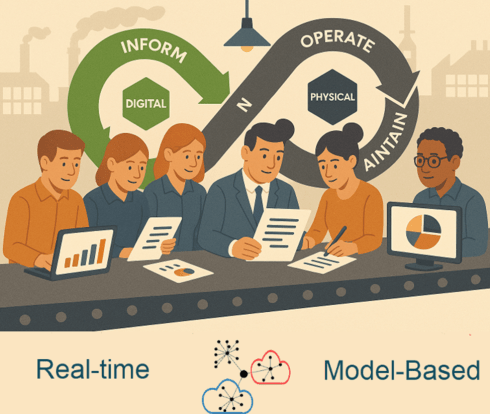

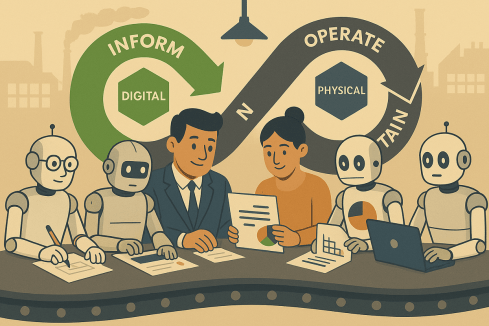

A company should build a layer of connected data on top of the coordinated infrastructure to support users in their new business roles. Implementing a digital twin brings significant business benefits if the twin is used to connect stakeholders from both the virtual and physical worlds in real time.
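To make the idea of a connected layer on top of the coordinated infrastructure more tangible, here is a minimal Python sketch. It is an illustration under stated assumptions, not a reference architecture: the class `ConnectedLayer`, the system names (PLM, ERP, MES) and the record IDs are all hypothetical. The layer stores only the links between records. Each record stays in the system that owns it and is fetched on demand, so a thread always reflects the owning system’s current data.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical reference to a record: (owning system, local record id)
Ref = Tuple[str, str]


@dataclass
class ConnectedLayer:
    """Illustrative connected layer over existing (coordinated) systems.

    It keeps only the links between records (the thread). The records
    themselves stay in their owning systems and are fetched on demand.
    """
    fetchers: Dict[str, Callable[[str], dict]] = field(default_factory=dict)
    links: Dict[Ref, List[Ref]] = field(default_factory=dict)

    def register(self, system: str, fetch: Callable[[str], dict]) -> None:
        # Each system exposes a read function; no data is copied here.
        self.fetchers[system] = fetch

    def link(self, src: Ref, dst: Ref) -> None:
        self.links.setdefault(src, []).append(dst)

    def thread(self, start: Ref) -> List[Tuple[Ref, dict]]:
        """Follow links depth-first from `start`, reading each record from its owner."""
        seen: set = set()
        result: List[Tuple[Ref, dict]] = []
        stack = [start]
        while stack:
            ref = stack.pop()
            if ref in seen:  # guards against cycles in the links
                continue
            seen.add(ref)
            system, record_id = ref
            result.append((ref, self.fetchers[system](record_id)))
            # Push in reverse so links are visited in the order they were added.
            stack.extend(reversed(self.links.get(ref, [])))
        return result


# Hypothetical stand-ins for the systems of record.
plm = {"P-100": {"name": "Bracket", "rev": "B"}}
erp = {"M-100": {"plant": "NL01", "stock": 40}}
mes = {"W-7": {"status": "released"}}

layer = ConnectedLayer()
layer.register("PLM", plm.__getitem__)
layer.register("ERP", erp.__getitem__)
layer.register("MES", mes.__getitem__)
layer.link(("PLM", "P-100"), ("ERP", "M-100"))
layer.link(("ERP", "M-100"), ("MES", "W-7"))

plm["P-100"]["rev"] = "C"  # an engineering change made in the owning system
for ref, record in layer.thread(("PLM", "P-100")):
    print(ref, record)
```

The design choice is that the layer owns links, not data. When the PLM stand-in changes revision B to C, the thread shows C without any synchronization step, which is the “you do not own the data” point in miniature.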

But there is more than digital threads with real-time data. On top of this infrastructure, a company can run all kinds of modeling tools, automation and analytics. I noticed that in our PLM community, we might focus too much on the data and not enough on the importance of combining it with a model-based business approach. For more details, read my recent post: Model-based: the elephant in the room.

Again, there are no quotes from the article; you know how to dive deeper into the connected topic.

Despite the considerable legacy pressure, there are already companies implementing a coordinated and connected approach. An excellent description of a potential approach comes from Yousef Hooshmand’s paper: From a Monolithic PLM Landscape to a Federated Domain and Data Mesh.

You might recognize modern PLM thinking when people talk about the nearest source of truth and the single source of change.
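The contrast between a single source of change and the nearest source of truth can be sketched in a few lines. This hypothetical Python illustration assumes that each attribute has exactly one owning domain and that consumers read from a local replica stamped with a version. `FederatedStore`, the domain names and the attribute key are invented for the example and do not describe any specific product.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class Attribute:
    owner: str       # the one domain allowed to change this value
    value: object
    version: int = 1


class FederatedStore:
    """Hypothetical illustration of two ideas:
    - single source of change: only the owning domain may write an attribute;
    - nearest source of truth: consumers read a local, versioned replica.
    """

    def __init__(self) -> None:
        self.master: Dict[str, Attribute] = {}
        self.replicas: Dict[str, Dict[str, Tuple[object, int]]] = {}

    def define(self, key: str, owner: str, value: object) -> None:
        self.master[key] = Attribute(owner, value)

    def change(self, key: str, domain: str, value: object) -> int:
        attr = self.master[key]
        if domain != attr.owner:
            raise PermissionError(f"{domain} may not change {key}; owner is {attr.owner}")
        attr.value = value
        attr.version += 1
        return attr.version

    def sync(self, consumer: str) -> None:
        # Copy current values and versions into the consumer's nearby replica.
        self.replicas[consumer] = {k: (a.value, a.version) for k, a in self.master.items()}

    def read(self, consumer: str, key: str) -> Tuple[object, int, bool]:
        # Return the local value, its version, and whether it is still current.
        value, version = self.replicas[consumer][key]
        return value, version, version == self.master[key].version


store = FederatedStore()
store.define("P-100.mass_kg", owner="Engineering", value=1.2)
store.sync("Service")
store.change("P-100.mass_kg", "Engineering", 1.1)
print(store.read("Service", "P-100.mass_kg"))  # stale replica, flagged as not current
store.sync("Service")
print(store.read("Service", "P-100.mass_kg"))  # refreshed replica
```

Consumers can work from their nearest copy as long as they can tell whether it is still current. Any attempt to change the value outside the owning domain is rejected instead of silently creating a second truth.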

Is Intelligent PLM the next step?

So far in this article, I have not mentioned AI as the solution to all our challenges. I see an analogy here with the introduction of the smartphone. 2008 was the moment that platforms were introduced, mainly for consumers. Airbnb, Uber, Amazon, Spotify, and Netflix appeared and disrupted the traditional ways of selling products and services.

The advantage of these platforms is that they were all created data-driven, without suffering from legacy issues.

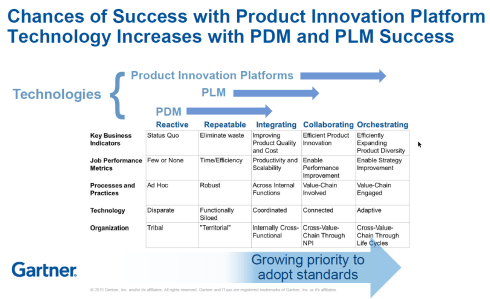

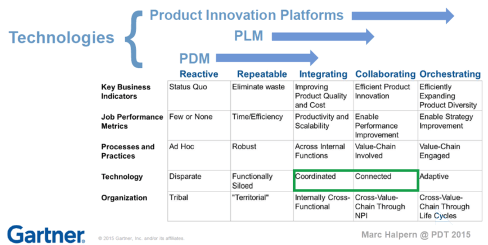

In our PLM domain, it took more than 10 years for platforms to become a topic of discussion for businesses. The 2015 PLM Roadmap/PDT conference was the first step in discussing the Product Innovation Platform – see my The Weekend after PDT 2015 post.

At that time, Peter Bilello shared the CIMdata perspective, Marc Halpern (Gartner) showed my favorite positioning slide (below), and Martin Eigner presented, according to my notes, this digital trend in PLM in his session: “What becomes different for PLM/SysLM?”

2015 Marc Halpern – the Product Innovation Platform (PIP)

While concepts started to become clearer, businesses mainly remained the same. The coordinated approach is the most convenient, as you do not need to reshape your organization. And then came the LLMs that changed everything.

Suddenly, it became possible for organizations to unlock knowledge hidden in their company and make it accessible to people.

Without drastically changing the organization, companies could now improve people’s performance and output (theoretically); therefore, it became a topic of interest for management. One big challenge for reaping the benefits is the quality of the data and information accessed.

I will not dive deeper into this topic today, as Benedict Smith, in his article Intelligent PLM – CFO’s 2025 Vision, did all the work, and I am very much aligned with his statements. It is a long read (7000 words) and a great starting point for discovering the aspects of Intelligent PLM and the connection to the CFO.

You might recognize intelligent PLM thinking when people and AI agents talk about the most likely truth.

Conclusion

Are you interested in these topics and their meaning for your business and career? Join me at the Share PLM conference, where I will discuss “The dilemma: Humans cannot transform—help them!” Time to work on your dreams!

In the last two weeks, I had some interesting observations and discussions related to the need to have a (PLM) vision. I placed the word PLM between brackets, as PLM is no longer an isolated topic in an organization. A PLM strategy should align with the business strategy and vision.

To be clear, if you or your company wants to survive in the future, you need a sustainable vision and a matching strategy as the times they are a changing, again!

I love the text: “Don’t criticize what you can’t understand” – a timeless quote.

First, there was Rob Ferrone’s article: Multi-view. Perspectives that shape PLM – a must-read to understand who to talk to about which dimension of PLM – and it is worth browsing through the comments too – there you will find the discussions, and it helps you to understand the PLM players.

Note: it is time that AI-generated images become more creative 😉

Next, there is still the discussion started by Gareth Webb, Digital Thread and the Knowledge Graph, further stirred by Oleg Shilovitsky.

Based on the likes and comments, it is clearly a topic that creates interaction – people are thinking and talking about it – the Digital Thread as a Service.

One of the remaining points in this debate is still the HOW and WHEN companies decide to implement a Digital Thread, a Knowledge Graph and other modern data concepts.

So far, my impression is that most companies implement their digital enhancements (threads/graphs) bottom-up, not driven by a management vision but more like band-aids, or in places where they fit well, without a strategy or vision.

In the same week, Beatriz González and I recorded a Share PLM podcast session with Paul Kaiser from MHP Americas as a guest. Paul is the head of the Digital Core & Technology department, where he leads management and IT consulting services focused on end-to-end business transformation.

During our discussion, Paul mentioned the challenge in engagements when the company has no (PLM) vision. These companies expect external consultants to formulate and implement the vision – a recipe for failure due to wrong expectations.

The podcast can be found HERE, and the session inspired me to write this post.

“We just want to be profitable“.

I believe it is a typical characteristic of small and medium enterprises that people are busy with their day-to-day activities. In addition, these companies rarely appoint new top management, which could shake up the company in a positive direction. These companies evolve…

You often see a stable management team with members who grew up with the company and now monitor and guide it, watching its finances and competition. They know how the current business is running.

Based on these findings, there will be classical efficiency plans, i.e., cutting costs somewhere, dropping some non-performing products, or investing in new technology that they cannot resist. Beyond minor process changes, however, fundamental organizational changes are not expected.

Most of the time, the efficiency plans provide single-digit benefits.

Everyone is happy when the company feels stable and profitable, even if the margins are under pressure. The challenge for this type of company without a vision is that they navigate in the dark when the outside world changes – like nowadays.

The world is changing drastically.

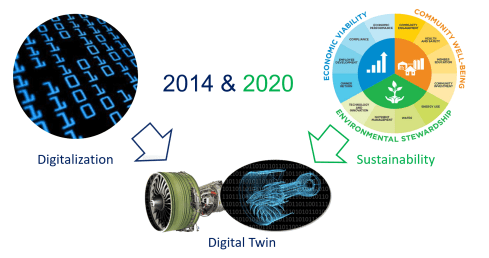

Since 2014, I have advocated for digital transformation in the PLM domain and explained it simply using the statement: From Coordinated to Connected, which already implies much complexity.

This means moving from documents/files to datasets and models, from a linear delivery model to a DevOps model, from waterfall to agile, and many other From-To statements.

Moving From-To is a transformational journey, which means you will learn and adapt to new ways of working during the journey. Still, the journey should have a target, directed by a vision.

However, not many companies have started this journey because they just wanted to be profitable.

“Why should we go in an unknown direction?”

With the emergence of sustainability regulations, e.g., GHG and ESG reporting, carbon taxes, material reporting, and the Digital Product Passport, which goes beyond RoHS and REACH and applies to many more industries, came the realization that product lifecycle processes and data must be digitized beyond documents. Manual analysis and validation are too expensive and unreliable.

At this stage, there is already a visible shift between companies that have proactively implemented a digitally connected infrastructure and companies that still see compliance with regulations as an additional burden. The first group brings products to the market faster and more sustainably than the second group because sustainability is embedded in their product lifecycle management.

And just when companies felt they could manage the transition from Coordinated to Coordinated and Connected, there was the fundamental disruption of embedded AI in everything, including the PLM domain.

- Large Language Models (LLMs) can go through all structured and unstructured data, providing real-time access to information that would take experts years of learning to acquire. Suddenly, everyone can act like an experienced professional.

- The rigidity of traditional databases can be complemented by graph databases, which visualize knowledge that can be added and discovered on the fly without IT experts. Suddenly, an enterprise is no longer a collection of interfaced systems but a digital infrastructure where data flows; some call it Digital Thread as a Service (DTaaS).

- Suddenly, people feel overwhelmed by complexity, leading to fear and inaction, a killing attitude.

I cannot predict what will happen in the next 5 to 10 years, but I am sure the current change is one we have never seen before. Be prepared and flexible to act: to be on top of the wave, you need the skills to get there.

Building the vision

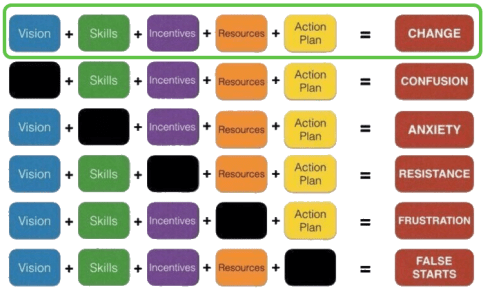

This image might not be new to you, but it illustrates how companies could manage a complex change.

I will focus only on the first two elements, Vision and Skills, as they are the two elements we as individuals can influence. The other elements are partly related to financial and business constraints.

Vision and Skills are closely related: you can have a fantastic vision, but to realize it, you need a strategy driven by the relevant skills to define and implement it. With the rise of AI, traditional knowledge-based skills will suddenly no longer guarantee future jobs.

AI brings a new dimension for everyone working in a company. To remain relevant, you must develop your unique human skills that make you different from robots or libraries. The importance of human skills might not be new, but now it has become apparent with the explosion of available AI tools.

Look at this 2013 table of predicted skills for the future. You can read the details in the paper The Future of Employment by Carl Benedikt Frey and Michael Osborne (2013).

In my 2015 PLM lectures, I joked when showing this image that my job as a PLM coach was secure, because a PLM coach is a recreational therapist and firefighter combined.

It has become a reality, and many of my coaching engagements nowadays focus on explaining and helping companies formulate and understand their possible path forward. I help them align and develop a vision for progressing in a volatile world. The technology is there; the skills and the vision often are not yet.

Combining business strategy with in-depth PLM concepts is a relatively unique approach in our domain. Many of my peers have other primary goals, as Rob Ferrone's article "Multi-view. Perspectives that shape PLM" explains.

And then there is…

The Share PLM Summit 2025

Modern times call for new ways of building and sharing information; therefore, I am eager to participate in the upcoming Share PLM Summit at the end of May in Jerez (Spain).

The theme of The Share PLM Summit 2025 is: Where People Take Center Stage to Drive Human-Centric Transformations in PLM and Lead the Future of Digital Innovation.

In my lecture, I will focus on how humans can participate in and anticipate this AI-based digital transformation. Even more, I look forward to the lectures and discussions with other peers, as more people-centric thought leaders and technology leaders will join us:

Quoting Oleg Shilovitsky:

PLM was built to manage data, but too often, it makes people work for the data instead of working the other way around. At Share PLM Summit 2025, I’ll discuss how PLM must evolve from rigid, siloed systems to intelligent, connected, and people-centric data architectures.

We need both, and I hope to see you at the end of May at this unique PLM conference.

Conclusion

We are at a decisive point in the digital transformation, as AI will challenge people's skills, knowledge, and existing ways of working. Combined with a turbulent world order, this means we need to prepare to be flexible and resilient. Therefore, instead of focusing on current best practices, we need to prepare for the future: a vision developed by skilled people. How will you or your company work on that? Join us if you have questions or ideas.

In my business ecosystem, I have seen a lot of discussions since last year about technical and architectural topics that are closely connected to artificial intelligence. We are discussing architectures and solutions that will make our business extremely effective. The discussion is mostly driven by software vendors, as they usually do not have to deal with legacy and can focus on the ultimate result.

Legacy (people, skills, processes and data) is the main inhibitor to fast progress in such situations, as I wrote in my previous post: Data, Processes and AI.

However, there are also less visible discussions about business efficiency – methodology and business models – and future sustainability.

These discussions are more challenging to follow because they require a broader, long-term vision; implementing solutions and changes takes much longer than buying tools.

This time, I want to revisit the discussion on modularity and the need for business efficiency and sustainability.

Modularity – what is it?

Modularity is a design principle that breaks a system into smaller, independent, and interchangeable components, or modules, that function together as a whole. Each module performs a specific task and can be developed, tested, and maintained separately, improving flexibility and scalability.

Modularity is a best practice in software development. Although modular thinking takes a higher initial effort, the advantages are enormous for reuse, flexibility, optimization, or adding new functionality. And as software code has no material cost or scrap, modular software solutions excel in delivery and maintenance.

In the hardware world, this is different. Often, companies have a history of delivering a specific (hardware) solution, and the product has been improved by adding features and options, with the top products remaining the company's flagships.

Modularity enables easy upgrades and replacements in hardware and engineering, reducing costs and complexity. As I work mainly with manufacturing companies in my network, I will focus on modularity in the hardware world.

Modularity – the business goal

How often have you heard that a business aims to transition from Engineer to Order (ETO) to Configure to Order (CTO), Build to Order (BTO) or Assemble to Order (ATO)? Companies often believe that implementing a PLM system is a sufficient starting point, as it will help identify commonalities in product variants and therefore lead to more modular products.

The primary targeted business benefits often include reduced R&D time and cost but also reduced risk due to component reuse and reuse of experience. However, the ultimate goal for CTO/ATO companies is to minimize R&D involvement in their sales and delivery process.

More options can be offered to potential customers without spending more time on engineering.
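To make this arithmetic concrete, here is a minimal Python sketch. It assumes a hypothetical module catalogue in which every module variant fits a standardized interface and every combination is valid; real configurators add compatibility rules that prune the list. The point it shows is that engineering effort grows with the number of module variants (a sum), while the sellable offering grows with their combinations (a product).

```python
from itertools import product

# Hypothetical catalogue: each interface slot lists pre-engineered, interchangeable variants.
# Assumption: any combination of variants is valid (no compatibility rules).
MODULES = {
    "cab": ["day", "sleeper"],
    "engine": ["diesel-13L", "electric"],
    "axle": ["4x2", "6x2", "6x4"],
}

def configurations(catalogue):
    """Yield every product variant that can be assembled from the released modules."""
    slots = list(catalogue)
    # Cartesian product: one variant chosen per slot.
    for combo in product(*(catalogue[slot] for slot in slots)):
        yield dict(zip(slots, combo))

variants = list(configurations(MODULES))
# Engineering effort is spent once per module variant, not once per product variant.
engineered = sum(len(options) for options in MODULES.values())
print(f"{engineered} engineered modules -> {len(variants)} sellable variants")
```

Running it prints `7 engineered modules -> 12 sellable variants`. Adding one more axle variant would mean one extra engineered module but four extra sellable variants, which is why CTO/ATO companies can keep R&D out of the sales and delivery process.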

Four years ago, I discussed modularity with Björn Eriksson and Daniel Strandhammar, who wrote "The Modular Way" during the COVID-19 pandemic. I liked the book because it is excellent for understanding the broader scope of modularity along with marketing, sales, and long-term strategy. Each business type has its own modularity benefits.

I had a follow-up discussion with panelists active in modularization and later with Daniel Strandhammar about the book’s content in this blog post: PLM and Modularity.

Next, I got involved with the North European Modularity Network (NEM) group, a group of Scandinavian companies that share modularization experiences and build common knowledge.

Historically, modularization has been a popular topic in North Europe, and meanwhile, the group is expanding beyond Scandinavia. Participants in the group focus on education-sharing strategies rather than tools.

The 2023 biannual meeting I attended, hosted by Vestas in Ringkobing, was an eye-opener for me.

We should work in a more integrated way, not only on Modularity and PLM but also on a third important topic: Sustainability in the context of the Circular Economy.

You can review my impression of the event and presentation in my post: “The week after North European Modularity (NEM)“

That post concludes that Modularity, like PLM, is a strategy rather than an R&D mission. Integrating modularity topics into PLM conferences or Circular Economy events would facilitate mutual learning and collaboration.

Modularity and Sustainability

The PLM Green Global Alliance, started in 2020, initially had few members. However, after significant natural disasters and the announcement of regulations related to the European Green Deal, sustainability became a management priority. Greenwashing was no longer sufficient.

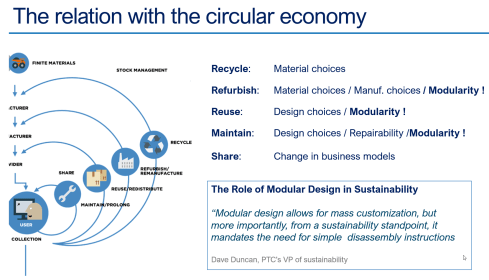

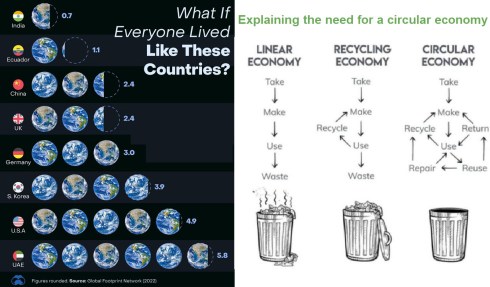

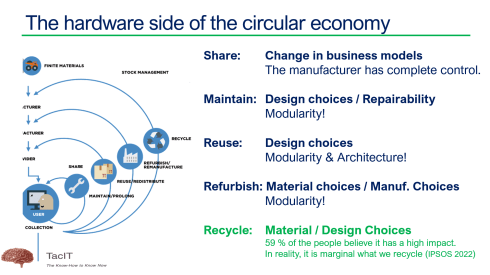

One key topic discussed in the PLM Green Global Alliance is the circular economy, a discussion moderated by CIMPA PLM services. The circular economy is crucial as our current consumption of Earth's resources is unsustainable.

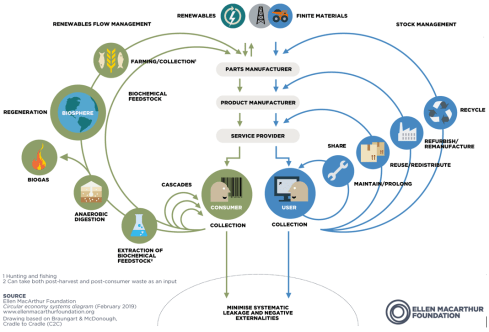

The well-known butterfly diagram from the Ellen MacArthur Foundation illustrates the higher complexity of a circular economy, both for renewables (left) and hardware (right).

In a circular economy, modularity is essential. The SHARE loop focuses on a Product Service Model, where companies provide services based on products used by different users. This approach requires a new business model, customer experience, and durable hardware. After Black Friday last year, I wrote about this transition: The Product Service System and a Circular Economy.

Modularity is vital in the MAINTAIN/PROLONG loop. Modular products can be upgraded without replacing the entire product, and modules are easier to repair. An example is Fairphone from the Netherlands, where users can repair and upgrade their smartphones, contributing to sustainability.

In the REUSE/REMANUFACTURE loop, modularity allows for reusing hardware parts when electronics or software components are upgraded. This approach reduces waste and supports sustainability.

The RECYCLE loop also benefits from modularity, though to a lesser extent. This loop helps preserve scarce materials, such as those in batteries, reducing the need for resource extraction from places like the moon, Mars, or Greenland.

A call for action

If you have reached this point of the article, I ask you to reflect on your business or company. Modularity is, for many companies, a dream (or vision) and will become, for most companies, a must to run a sustainable business.

Modularity does not depend on PLM technology, as well-known companies in my reference network, such as Scania, Electrolux and Vestas, have shown.

Where is your company and its business offerings?

IMPORTANT:

IMPORTANT: