For those of you following my blog over the years, there is, every time after the PLM Roadmap PDT Europe conference, one or two blog posts, where the first starts with “The weekend after ….”

This time, November has been a hectic month for me, starting with the engaging workshop “Shape the future of PLM – together”. You can read about it in my blog post or the latest post from Arrowhead fPVN, the sponsor of the workshop.

Last week, I celebrated with the core team from the PLM Green Global Alliance our 5th anniversary, during which we discussed sustainability in action. The term sustainability is currently under the radar, but if you want to learn what is happening, read this post with a link to the webinar recording.

Last week, I was also active at the PTC/User Benelux conference, where I had many interesting discussions about PTC’s strategy and portfolio. A big and well-organized event in the town where I grew up in the world of teaching and data management.

And now it is time for the PLM Roadmap / PDT Europe conference review.

The conference

The conference is my favorite technical conference 😉 for learning what is happening in the field. Over the years, we have seen reports from the Aerospace & Defense PLM Action Groups, which systematically work on various themes related to a digital enterprise. The usage of standards, MBSE, Supplier Collaboration, Digital Thread & Digital Twin are all topics discussed.

This time, the conference was sold out with 150+ attendees, just fitting in the conference space. The two-day program started with a challenging day 1 of advanced topics, while day 2 brought more company experiences.

Combined with the traditional dinner in the middle, it was again a great networking event to charge the brain. We still need the brain besides AI. This post covers some of the highlights of day 1.

PLM’s Integral Role in Digital Transformation

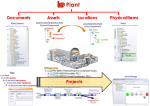

As usual, Peter Bilello, CIMdata’s President & CEO, kicked off the conference, and his message has not changed over the years. PLM should be understood as a strategic, enterprise-wide approach that manages intellectual assets and connects the entire product lifecycle.

I like the image below explaining the WHY behind product lifecycle management.

It enables end-to-end digitalization, supports digital threads and twins, and provides the backbone for data governance, analytics, AI, and skills transformation.

Peter walked us briefly through CIMdata’s Critical Dozen (a YouTube recording is available here), all of which are relevant to the scope of digital transformation. Without strong PLM foundations and governance, digital transformation efforts will fail.

The Digital Thread as the Foundation of the Omniverse

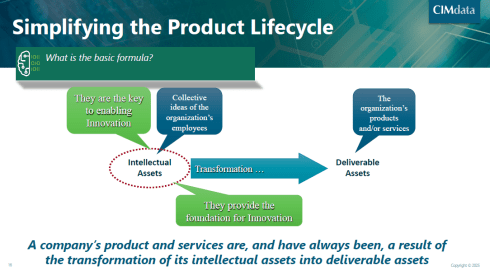

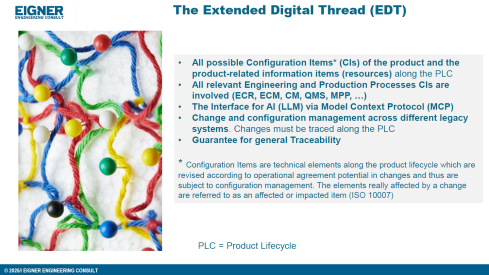

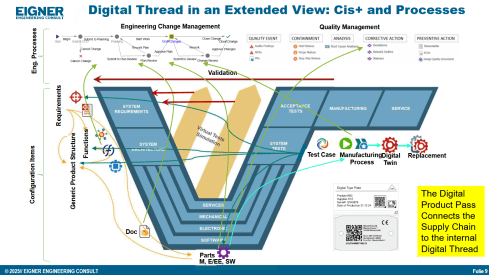

Prof. Dr.-Ing. Martin Eigner, well known for his lifetime passion and vision in product lifecycle management (PDM and PLM tools & methodology), shared insights from his 40-year journey, highlighting the growing complexity and ever-increasing fragmentation of customer solution landscapes.

In his current eco-system, ERP (read SAP) is playing a significant role as an execution platform, complemented by PDM or ECTR capabilities. Few of his customers go for the broad PLM systems, and therefore, he stresses the importance of the so-called Extended Digital Thread.

Prof. Eigner describes the EDT more precisely as an overlaying infrastructure implemented by a graph database that serves as a performant knowledge graph of the enterprise.

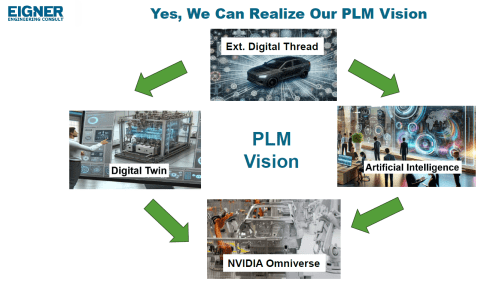

The EDT serves as the foundation for AI-driven applications, supporting impact analysis, change management, and natural-language interaction with product data. The presentation also provides a detailed view of Digital Twin concepts, ranging from component to system and process twins, and demonstrates how twins enhance predictive maintenance, sustainability, and process optimization.

This is combined with NVIDIA Omniverse as the next step toward immersive, real-time collaboration and simulation, enabling virtual factories and physics-accurate visualization. The outlook emphasizes that combining EDT, Digital Twin, AI, and Omniverse moves the industry closer to the original PLM vision: a unified, consistent Single Source of Truth 😮 that boosts innovation, efficiency, and ROI.
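As a rough illustration (not taken from the presentation), the impact analysis that a graph-based digital thread is said to support can be pictured as a simple graph traversal. The sketch below assumes a tiny, hypothetical set of artifacts and "may affect" links; the identifiers and the `impacted_items` helper are invented for this example, and a real EDT would sit on a graph database rather than a Python dictionary.

```python
from collections import deque

# Hypothetical digital-thread links: "X -> Y" means a change to X may affect Y.
# All identifiers are made up for illustration.
LINKS = {
    "REQ-001": ["PART-100"],
    "PART-100": ["EBOM-A", "CAD-100"],
    "EBOM-A": ["MBOM-A"],
    "MBOM-A": ["WORK-INSTR-7"],
    "CAD-100": [],
    "WORK-INSTR-7": [],
}


def impacted_items(links, changed):
    """Return every artifact reachable from `changed`, in breadth-first order."""
    seen = {changed}
    order = []
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for neighbour in links.get(current, []):
            if neighbour not in seen:
                seen.add(neighbour)
                order.append(neighbour)
                queue.append(neighbour)
    return order


if __name__ == "__main__":
    # Which downstream artifacts should be reviewed when PART-100 changes?
    print(impacted_items(LINKS, "PART-100"))
```

The point of the sketch is only that, once the relations are explicit, "what is affected by this change?" becomes a query instead of a manual search across systems.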

For me, hearing and reading the term Single Source of Truth still creates discomfort with reality and humanity, so we still have something to discuss.

Semantic Digital Thread for Enhanced Systems Engineering in a Federated PLM Landscape

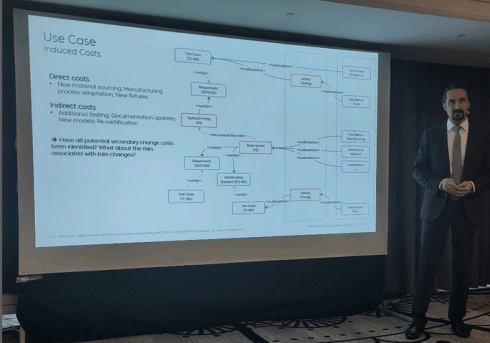

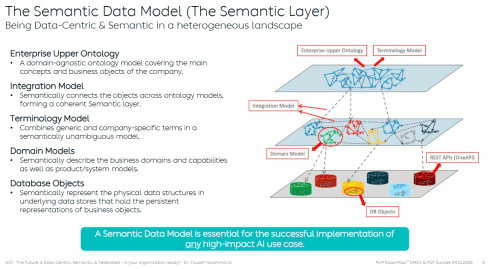

Dr. Yousef Hooshmand‘s presentation was a great continuation of the Extended Digital Thread theme discussed by Dr. Martin Eigner. Where the core of Martin’s EDT is based on traceability between artifacts and processes throughout the lifecycle, Yousef introduced a (for me) totally new concept: starting with managing and structuring the data to manage the knowledge, rather than starting from the models and tools to understand the knowledge.

It is a fundamentally different approach to addressing the same problem of complexity. During our pre-conference workshop “Shape the future of PLM – together,” I already got a bit familiar with this approach, and Yousef’s recently released paper provides all the details.

All the relevant information can be found in his recent LinkedIn post here.

In his presentation during the conference, Yousef illustrated the value and applicability of the Semantic Digital Thread approach by presenting it in an automotive use case: Impact Analysis and Cost Estimation (image above)

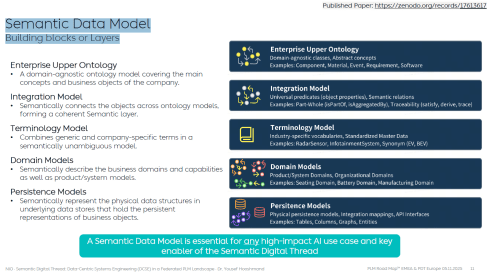

To understand the Semantic Digital Thread, it is essential to understand the Semantic Data Model and its building blocks or layers, as illustrated in the image below:

In addition, such an infrastructure is ideal for AI applications and avoids vendor- or tool lock-in, providing a significant long-term advantage.

I am sure it will take time to digest the content if you are just entering the domain of a data-driven enterprise (the connected approach) instead of a document-driven enterprise (the coordinated approach).

However, as many of the other presentations on day 1 also stated: “data without context is worthless – then they become just bits and bytes.” For advanced and future scenarios, you cannot avoid working with ontologies, semantic models and graph databases.

Where is your company on the path to becoming more data-driven?

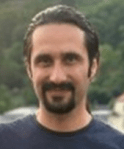

Note: I just saw this post and the image above, which emphasizes the importance of the relationship between ontologies and the application of AI agents.

Evaluation of SysML v2 for use in Collaborative MBSE between OEMs and Suppliers

It was interesting to hear Chris Watkins’ speech, which presented the findings from the AD PLM Action Group MBSE Collaboration Working Group on digital collaboration based on SysML v2.

The group addresses the problem that there are currently no common methods and standards for exchanging digital model-based requirements and architecture deliverables for the design, procurement, and acceptance of aerospace systems equipment across the industry.

The action group explored the value of SysML v2 for data-driven collaboration between OEMs and suppliers, particularly in the early concept phases.

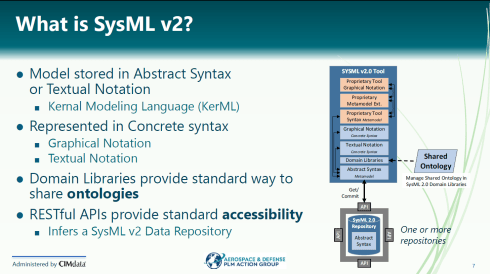

Chris started with a brief explanation of what SysML v2 is – image below:

As the image illustrates, SysML v2-ready tools allow people to work in their proprietary interfaces while sharing results in common, defined structures and ontologies.

When analyzing various collaboration scenarios, one of the main challenges remained managing changes, the required ontologies, and working in a shared IT environment.

👉You can read the full report here: AD PAG reports: Model-Based Systems Engineering.

An interesting point of discussion here is that, in the report, participants note that, despite calling out significant gaps and concerns, a substantial majority of the industry indicated that their MBSE solution provider is a good partner. At the same time, only a small minority expressed a negative view.

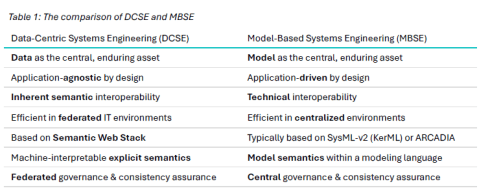

Would Data-Centric Systems Engineering change the discussion? See table 1 below from Yousef’s paper:

An illustration that there was enough food for discussion during the conference.

PLM Interoperability and the Untapped Value of 40 Years in Standardization

In the context of collaboration, two sessions fit together perfectly.

First, Kenny Swope from Boeing. Kenny is a longtime Boeing engineering leader and global industrial-data standards expert who oversees enterprise interoperability efforts, chairs ISO/TC 184/SC 4, and mentors youth in technology through 4-H and FIRST programs.

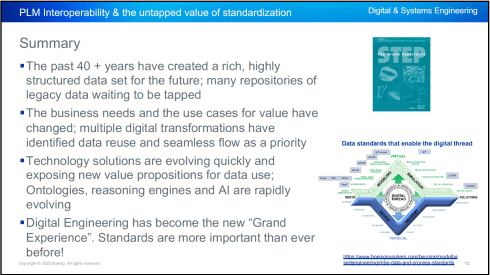

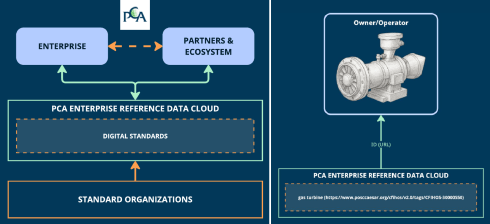

Kenny shared that over the past 40+ years, the understanding and value of standardization have become increasingly apparent, especially as organizations move toward a digital enterprise. In a digital enterprise, these standards are needed for efficient interoperability between various stakeholders. The next session was an example of this.

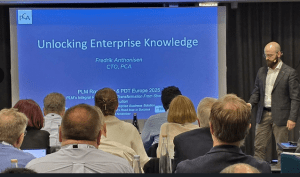

Unlocking Enterprise Knowledge

Fredrik Anthonisen, the CTO of the POSC Caesar Association (PCA), started his story about the potential value of efficient standard use.

According to the Siemens report “The true costs of downtime,” $1.4 trillion is lost to unplanned downtime.

The root cause is that, most of the time, the information needed to support the MRO activity is inaccessible or incomplete.

Making data available using standards can provide part of the answer, but static documents and slow consensus processes can’t keep up with the pace of change.

Therefore, PCA established the PCA enterprise reference data cloud, where all stakeholders in enterprise collaboration can relate their data to digitally exposed standards, as the left side of the image shows.

Fredrik shared a use case (on the right side of the image) as an example. Also, he mentioned that the process for defining and making the digital reference data available to participants is ongoing. The reference data needs to become the trusted resource for the participants to monetize the benefits.

Summary

Day 1 had many more interesting and advanced concepts related to standards and the potential usage of AI.

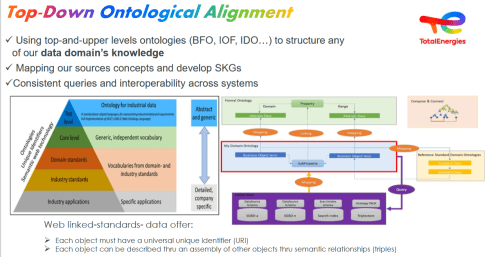

Jean-Charles Leclerc, Head of Innovation & Standards at TotalEnergies, in his session, “Bringing Meaning Back To Data,” elaborated on the need to provide data in the context of the domain for which it is intended, rather than “indexed” LLM data.

Very much aligned with Yousef’s statement that there is a need to apply semantic technologies, and especially ontologies, to turn the data into knowledge.

More details can also be found in the “Shape the future of PLM – together” post, where Jean-Charles was one of the leading voices.

The panel discussion at the end of day 1 was free of people jumping on the hype. Yes, benefits are envisioned across the product lifecycle management domain, but to be valuable, the foundation needs to be more structured than it has been in the past.

“Reliable AI comes from a foundation that supports knowledge in its domain context.”

Conclusion

For the casual user, day 1 was tough – digital transformation in the product lifecycle domain requires skills that might not yet exist in smaller organizations. Understanding the need for ontologies (generic/domain-specific) and semantic models is essential to benefit from what AI can bring – a challenging and enjoyable journey to follow!

Together with Håkan Kårdén, we had the pleasure of bringing together 32 passionate professionals on November 4th to explore the future of PLM (Product Lifecycle Management) and ALM (Asset Lifecycle Management), inspired by insights from four leading thinkers in the field. Please, click on the image for more details.

The meeting had two primary purposes.

- Firstly, we aimed to create an environment where these concepts could be discussed and presented to a broader audience, comprising academics, industrial professionals, and software developers. The group’s feedback could serve as a benchmark for them.

- The second goal was to bring people together and create a networking opportunity, either during the PLM Roadmap/PDT Europe conference, the day after, or through meetings established after this workshop.

Personally, it was a great pleasure to meet some people in person whose LinkedIn articles I had admired and read.

The meeting was sponsored by the Arrowhead fPVN project, a project I discussed in a previous blog post related to the PLM Roadmap/PDT Europe 2024 conference last year. Together with the speakers, we have begun working on a more in-depth paper that describes the similarities and the lessons learned that are relevant. This activity will take some time.

Therefore, this post only includes the abstracts from the speakers and links to their presentations. It concludes with a few observations from some attendees.

Reasoning Machines: Semantic Integration in Cyber-Physical Environments

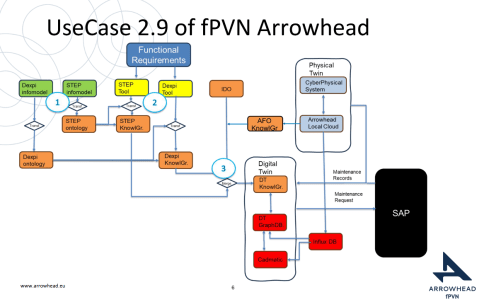

Torbjörn Holm / Jan van Deventer: The presentation discussed the transition from requirements to handover and operations, emphasizing the role of knowledge graphs in unifying standards and technologies for a flexible product value network.

The presentation outlines the phases of the product and production lifecycle, including requirements, specification, design, build-up, handover, and operations. It raises a question about unifying these phases and their associated technologies and standards, emphasizing that the most extended phase, which involves operation, maintenance, failure, and evolution until retirement, should be the primary focus.

It also discusses seamless integration, outlining a partial list of standards and technologies categorized into three sections: “Modelling & Representation Standards,” “Communication & Integration Protocols,” and “Architectural & Security Standards.” Each section contains a table listing various technology standards, their purposes, and references. Additionally, the presentation includes a “Conceptual Layer Mapping” table that details the different layers (Knowledge, Service, Communication, Security, and Data), along with examples, functions, and references.

The presentation outlines an approach for utilizing semantic technologies to ensure interoperability across heterogeneous datasets throughout a product’s lifecycle. Key strategies include using OWL 2 DL for semantic consistency, aligning domain-specific knowledge graphs with ISO 23726-3, applying W3C Alignment techniques, and leveraging Arrowhead’s microservice-based architecture and Framework Ontology for scalable and interoperable system integration.

The presentation also shows the software architecture used, which has three main sections, “Functional Requirements,” “Physical Twin,” and “Digital Twin,” each containing various interconnected components. Today, the architecture includes several aligned Knowledge Graphs (KG): a DEXPI KG, a STEP (ISO 10303) KG, and an Arrowhead Framework KG, with the CFIHOS Semantics Ontology in progress.
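To give a feeling for what "aligned" knowledge graphs mean in practice, here is a toy sketch in plain Python. It is an assumption-laden illustration, not the Arrowhead implementation and not OWL: the two graphs, the prefixes (`plant:`, `prod:`, `shared:`), the terms, and the `align` and `merge` helpers are all hypothetical. Two graphs use different vocabularies for the same thing, and an alignment table maps both onto shared terms so the facts can be queried together.

```python
# Two hypothetical knowledge graphs as (subject, predicate, object) triples.
# The prefixes and term names are illustrative, not taken from DEXPI or STEP.
PLANT_KG = [
    ("plant:P-101", "plant:isA", "plant:CentrifugalPump"),
    ("plant:P-101", "plant:designPressure", "16 bar"),
]
PRODUCT_KG = [
    ("prod:Item-55", "prod:type", "prod:Pump"),
    ("prod:Item-55", "prod:supplier", "ACME"),
]

# Alignment table: local term -> shared term. Entity equivalences go here too.
ALIGNMENT = {
    "plant:isA": "shared:type",
    "prod:type": "shared:type",
    "plant:CentrifugalPump": "shared:Pump",
    "prod:Pump": "shared:Pump",
    "prod:Item-55": "plant:P-101",  # assumed: both ids denote the same pump
}


def align(triples, alignment):
    """Rewrite every term that has an entry in the alignment table."""
    return [tuple(alignment.get(term, term) for term in triple) for triple in triples]


def merge(*graphs):
    """Union of triples, de-duplicated and sorted for stable output."""
    return sorted(set().union(*graphs))


merged = merge(align(PLANT_KG, ALIGNMENT), align(PRODUCT_KG, ALIGNMENT))

# After alignment, facts from both sources are attached to one pump.
facts_about_pump = [(p, o) for s, p, o in merged if s == "plant:P-101"]
```

In a real setting, the alignment itself is the hard part: it needs domain experts and ontology tooling, and it has to be governed over time. The sketch only shows why the effort pays off once it exists.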

👉The presentation: W3C Major standard interoperability_Paris

Beyond Handover: Building Lifecycle-Ready Semantic Interoperability

Jean-Charles Leclerc argued that industrial data standards must evolve beyond the narrow scope of handover and static interoperability. To truly support digital transformation, they must embrace lifecycle semantics or, at the very least, be designed for future extensibility.

This shift enables technical objects and models to be reused, orchestrated, and enriched across internal and external processes, unlocking value for all stakeholders and managing the temporal evolution of properties throughout the lifecycle. A key enabler is the “pattern of change”, a dynamic framework that connects data, knowledge, and processes over time. It allows semantic models to reflect how things evolve, not just how they are delivered.

By grounding semantic knowledge graphs (SKGs) in such rigorous logic and aligning them with W3C standards, we ensure they are both robust and adaptable. This approach supports sustainable knowledge management across domains and disciplines, bridging engineering, operations, and applications.

Ultimately, it’s not just about technology; it’s about governance.

Being Sustainab’OWL (Web Ontology Language) by Design! means building semantic ecosystems that are reliable, scalable, and lifecycle-ready by nature.

Additional Insight: From Static Models to Living Knowledge

To transition from static information to living knowledge, organizations must reassess how they model and manage technical data. Lifecycle-ready interoperability means enabling continuous alignment between evolving assets, processes, and systems. This requires not only semantic precision but also a governance framework that supports change, traceability, and reuse, turning standards into operational levers rather than compliance checkboxes.
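One way to picture "managing the temporal evolution of properties" is to keep the history of a property instead of overwriting its value. The following is a minimal sketch under that assumption; the `PropertyHistory` class, the dates, and the pressure values are hypothetical and are not part of the “pattern of change” framework described in the presentation.

```python
from bisect import bisect_right
from datetime import date


class PropertyHistory:
    """Keeps every value a property has had, with the date it became valid."""

    def __init__(self):
        self._dates = []   # sorted list of valid-from dates
        self._values = []  # value that became valid at the matching date

    def record(self, valid_from, value):
        # Insert while keeping both lists sorted by date.
        index = bisect_right(self._dates, valid_from)
        self._dates.insert(index, valid_from)
        self._values.insert(index, value)

    def as_of(self, when):
        """Return the value valid on `when`, or None if nothing was recorded yet."""
        index = bisect_right(self._dates, when)
        return self._values[index - 1] if index else None


# Hypothetical example: the design pressure of one pump over its lifecycle.
pump_pressure = PropertyHistory()
pump_pressure.record(date(2020, 1, 1), "16 bar")  # as designed
pump_pressure.record(date(2023, 6, 1), "12 bar")  # after an assumed derating

print(pump_pressure.as_of(date(2021, 3, 15)))
print(pump_pressure.as_of(date(2024, 1, 1)))
```

With the history kept, a question like "what was valid at handover?" and a question like "what is valid today?" can both be answered from the same model, which is the traceability the paragraph above asks for.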

👉The presentation: Beyond Handover – Building Lifecycle Ready Semantic Interoperability

The first two presentations had a lot in common as they both come from the Asset Lifecycle Management domain and focus on an infrastructure to support assets over a long lifetime. This is particularly visible in the usage and references to standards such as DEXPI, STEP, and CFIHOS, which are typical for this domain.

How can we achieve our vision of PLM – the Single Source of Truth?

Martin Eigner stated that Product Lifecycle Management (PLM) has long promised to serve as the Single Source of Truth for organizations striving to manage product data, processes, and knowledge across their entire value chain. Yet, realizing this vision remains a complex challenge.

Achieving a unified PLM environment requires more than just implementing advanced software systems—it demands cultural alignment, organizational commitment, and seamless integration of diverse technologies. Central to this vision is data consistency: ensuring that stakeholders across engineering, manufacturing, supply chain, and service have access to accurate, up-to-date, and contextualized information along the Product Lifecycle. This involves breaking down silos, harmonizing data models, and establishing governance frameworks that enforce standards without limiting flexibility.

Emerging technologies and methodologies, such as Extended Digital Thread, Digital Twins, cloud-based platforms, and Artificial Intelligence, offer new opportunities to enhance collaboration and integrated data management.

However, their success depends on strong change management and a shared understanding of PLM as a strategic enabler rather than a purely technical solution. By fostering cross-functional collaboration, investing in interoperability, and adopting scalable architectures, organizations can move closer to a trustworthy single source of truth. Ultimately, realizing the vision of PLM requires striking a balance between innovation and discipline—ensuring trust in data while empowering agility in product development and lifecycle management.

👉The presentation: Martin – Workshop PLM Future 04_10_25

The Future is Data-Centric, Semantic, and Federated … Is your organization ready?

Yousef Hooshmand, who is currently working at NIO as PLM & R&D Toolchain Lead Architect, discussed the must-have relations between a data-centric approach, semantic models and a federated environment as the image below illustrates:

Why This Matters for the Future?

- Engineering is under unprecedented pressure: products are becoming increasingly complex, customers are demanding personalization, and development cycles must be accelerated to meet these demands. Traditional, siloed methods can no longer keep up.

- The way forward is a data-centric, semantic, and federated approach that transforms overwhelming complexity into actionable insights, reduces weeks of impact analysis to minutes, and connects fragmented silos to create a resilient ecosystem.

- This is not just an evolution, but a fundamental shift that will define the future of systems engineering. Is your organization ready to embrace it?

👉The presentation: The Future is Data-Centric, Semantic, and Federated.

Some first impressions

👉 Bhanu Prakash Ila from Tata Consultancy Services (you can find his original comment in this LinkedIn post)

Key points:

- Traditional PLM architectures struggle with the fundamental challenge of managing increasingly complex relationships between product data, process information, and enterprise systems.

- Ontology-Based Semantic Models – The Way Forward for PLM Digital Thread Integration: Ontology-based semantic models address this by providing explicit, machine-interpretable representations of domain knowledge that capture both concepts and their relationships. These lay the foundations for AI-related capabilities.

It’s clear that as AI, semantic technologies, and data intelligence mature, the way we think and talk about PLM must evolve too – from system-centric to value-driven, from managing data to enabling knowledge and decisions.
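The phrase "explicit, machine-interpretable representations" in the key points above can be made concrete with a very small sketch. It assumes a hypothetical mini-ontology (a class hierarchy with no cycles) and two invented helpers, `all_classes` and `instances_of`. Real ontologies are expressed in languages such as OWL and processed by reasoners, so this only illustrates the principle.

```python
# Hypothetical mini-ontology: class -> direct superclass (assumed acyclic).
SUBCLASS_OF = {
    "CentrifugalPump": "Pump",
    "Pump": "RotatingEquipment",
    "RotatingEquipment": "Equipment",
}

# Instance data: item -> asserted class. "Valve" is deliberately not linked.
INSTANCE_OF = {"P-101": "CentrifugalPump", "V-200": "Valve"}


def all_classes(cls, subclass_of):
    """Return `cls` followed by all its ancestors, nearest first."""
    chain = [cls]
    while chain[-1] in subclass_of:
        chain.append(subclass_of[chain[-1]])
    return chain


def instances_of(target, instance_of, subclass_of):
    """Find items whose asserted class is `target` or any subclass of it."""
    return sorted(
        item
        for item, cls in instance_of.items()
        if target in all_classes(cls, subclass_of)
    )


# Nobody asserted that P-101 is rotating equipment; it follows from the model.
print(instances_of("RotatingEquipment", INSTANCE_OF, SUBCLASS_OF))
```

Note that `V-200` is not found under `Equipment`, simply because the model never states that a valve is equipment: the answers are only as good as the relationships that have been made explicit.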

A quick & temporary conclusion

Typically, I conclude my blog posts with a summary. However, this time the conclusion is not there yet. There is work to be done to align concepts and understand for which industry they are most applicable. Using standards or avoiding standards as they move too slowly for the business is a point of ongoing discussion. The takeaway for everyone in the workshop was that data without context has no value. Ontologies, semantic models and domain-specific methodologies are mandatory for modern data-driven enterprises. You cannot avoid this learning path by just installing a graph database.

Recently, we initiated the Design for Sustainability workgroup, an initiative from two of our PGGA members, Erik Rieger and Matthew Sullivan. You can find a recording of the kick-off here on our YouTube channel.

Thanks to the launch of the Design for Sustainability workgroup, we were introduced to Dr. Elvira Rakova, founder and CEO of the startup company Direktin.

Her mission is to build the Digital Ecosystem of engineering tools and simulation for Compressed Air Systems. As typical PLM professionals with a focus on product design, we were curious to learn about developments in the manufacturing space. And it was an interesting discussion, almost a lecture.

Compressed air and Direktin

Dr. Elvira Rakova has been working with compressed air in manufacturing plants for several years, during which she has observed the inefficiency of how compressed air is utilized in these facilities. It is an available resource for all kinds of machines in the plant, often overdimensioned and a significant source of wasted energy.

To address this waste of energy, linked to CO2 emissions, she started her company to help companies scale, dimension, and analyse their compressed air usage. She offers a mix of software and consultancy to make manufacturing processes that use compressed air responsible for fewer carbon emissions and, for plant owners, to save significant money on energy usage.

For us, it was an educational discussion, and we recommend that you watch or listen to the next 36 minutes.

What I learned

- The use of compressed air and its energy/environmental impact were like dark matter to me. I never noticed it when visiting customers as a significant source to become more sustainable.

- Although the topic of compressed air seems easy to understand, its usage and impact are tough to address quickly and easily, due to legacy in plants, a lack of visibility on compressed air (energy usage) and needs, and a lack of standardization among the providers of machinery.

- The need for data analysis is crucial in addressing the reporting challenges of Scope 3 emissions, and it is also increasingly important as part of the Digital Product Passport data to be provided. Companies must invest in the digitalization of their plants to better analyze and improve energy usage, such as in the case of compressed air.

- In the end, we concluded that for sustainability, it is all about digital partnerships connecting the design world and the manufacturing world, and for that reason, Elvira is personally motivated to join and support the Design for Sustainability workgroup.

Want to learn more?

- Another educational webinar: Design Review Culture and Sustainability

- Explore the Direktin website to learn more

Conclusions

The PLM Green Global Alliance is not only about designing products; we have also seen lifecycle assessments for manufacturing, as discussed with Makersite and aPriori. These companies focused more on traditional operations in a manufacturing plant. Through our lecture/discussion on the use of compressed air in manufacturing plants, we identified a new domain that requires attention.

First, an important announcement. In the last two weeks, I have finalized preparations for the upcoming Share PLM Summit in Jerez on 27-28 May. With the Share PLM team, we have been working on a non-typical PLM agenda. Share PLM, like me, focuses on organizational change management and the HOW of PLM implementations; there will be more emphasis on the people side.

Often, PLM implementations are either IT-driven or business-driven to implement a need, and yes, the people who need to work with it come as the closing topic. Time and budget are spent on technology and process definitions, and people get trained. Often, it is only train-the-trainer, as there is no more budget or time to let the organization adapt, and rapid ROI is expected.

This approach neglects that PLM implementations are enablers for business transformation. Instead of doing things slightly more efficiently, significant gains can be made by doing things differently, starting with the people and their optimal new way of working, and then providing the best tools.

The conference aims to start with the people, sharing human-related experiences and enabling networking between people – not only about the industry practices (there will be sessions and discussions on this topic too).

If you are curious about the details, listen to the podcast recording we published last week to understand the difference – click on the image on the left.

And if you are interested and have the opportunity, join us and meet some great thought leaders and others with this shared interest.

Why is modern PLM a dream?

If you are connected to the LinkedIn posts in my PLM feed, you might have the impression that everyone is gearing up for modern PLM. Articles often created with AI support spark vivid discussions. Before diving into them with my perspective, I want to set the scene by explaining what I mean by modern PLM and traditional PLM.

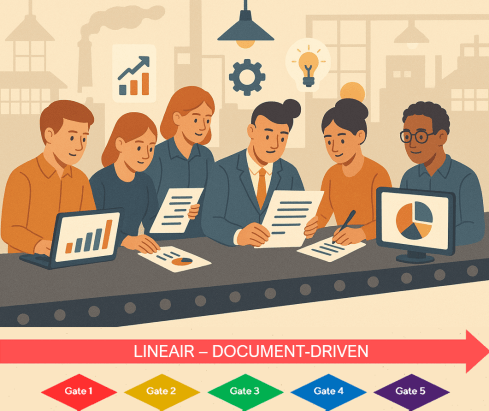

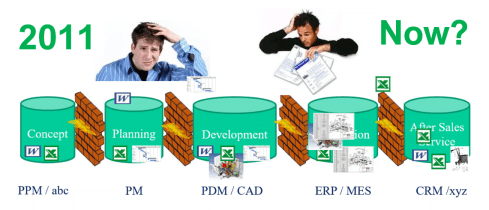

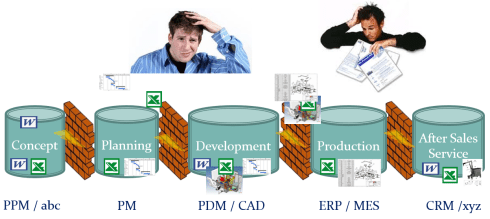

Traditional PLM

Traditional PLM is often associated with implementing a PLM system, mainly serving engineering. Downstream engineering data usage is usually pushed manually or through interfaces to other enterprise systems, like ERP, MES and service systems.

Traditional PLM is closely connected to the coordinated way of working: a linear process based on passing documents (drawings) and datasets (BOMs). Historically, CAD integrations have been the most significant characteristic of these systems.

The coordinated approach fits people working within their authoring tools and, through integrations, sharing data. The PLM system becomes a system of record, and working in a system of record is not designed to be user-friendly.

Unfortunately, most PLM implementations in the field are based on this approach and are sometimes characterized as advanced PDM.

You recognize traditional PLM thinking when people talk about the single source of truth.

Modern PLM

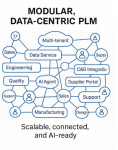

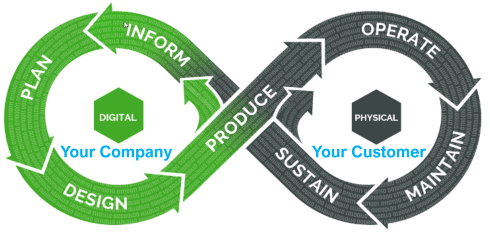

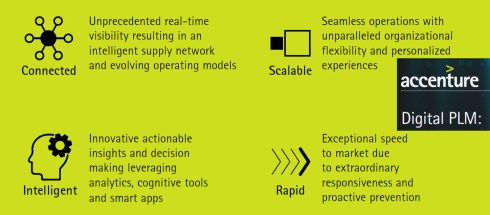

When I talk about modern PLM, it is no longer about a single system. Modern PLM starts from a business strategy implemented by a data-driven infrastructure. The strategy part remains a challenge at the board level: how do you translate PLM capabilities into business benefits – the WHY?

More on this challenge will be discussed later, as in our PLM community, most discussions are IT-driven: architectures, ontologies, and technologies – the WHAT.

For the WHAT, there seems to be a consensus that modern PLM is based on a federated

I think this article from Oleg Shilovitsky, “Rethinking PLM: Is It Time to Move Beyond the Monolith?“ AND the discussion thread in this post is a must-read. I will not quote the content here again.

After reading Oleg’s post and the comments, come back here.

The reason for this approach: it is a perfect example of the connected approach. Instead of collecting all the information inside one post (or book?), the information can be accessed by following digital threads. It also illustrates that in a connected environment, you do not own the data; the data comes from accountable people.

Building such a modern infrastructure is challenging when your company depends mainly on its legacy—the people, processes and systems. Where to change, how to change and when to change are questions that should be answered at the top and require a vision and evolutionary implementation strategy.

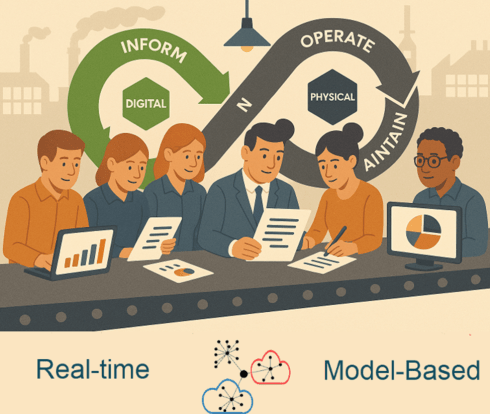

A company should build a layer of connected data on top of the coordinated infrastructure to support users in their new business roles. Implementing a digital twin has significant business benefits if the twin is used to connect with real-time stakeholders from both the virtual and physical worlds.

But there is more than digital threads with real-time data. On top of this infrastructure, a company can run all kinds of modeling tools, automation and analytics. I noticed that in our PLM community, we might focus too much on the data and not enough on the importance of combining it with a model-based business approach. For more details, read my recent post: Model-based: the elephant in the room.

Again, there are no quotes from the article; you know how to dive deeper into the connected topic.

Despite the considerable legacy pressure, there are already companies implementing a coordinated and connected approach. An excellent description of a potential approach comes from Yousef Hooshmand’s paper: From a Monolithic PLM Landscape to a Federated Domain and Data Mesh.

You might recognize modern PLM thinking when people talk about the nearest source of truth and the single source of change.

Is Intelligent PLM the next step?

So far in this article, I have not mentioned AI as the solution to all our challenges. I see an analogy here with the introduction of the smartphone. 2008 was the moment that platforms were introduced, mainly for consumers. Airbnb, Uber, Amazon, Spotify, and Netflix have appeared and disrupted the traditional ways of selling products and services.

The advantage of these platforms is that they are all created data-driven, not suffering from legacy issues.

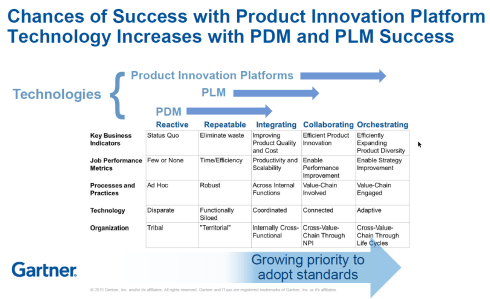

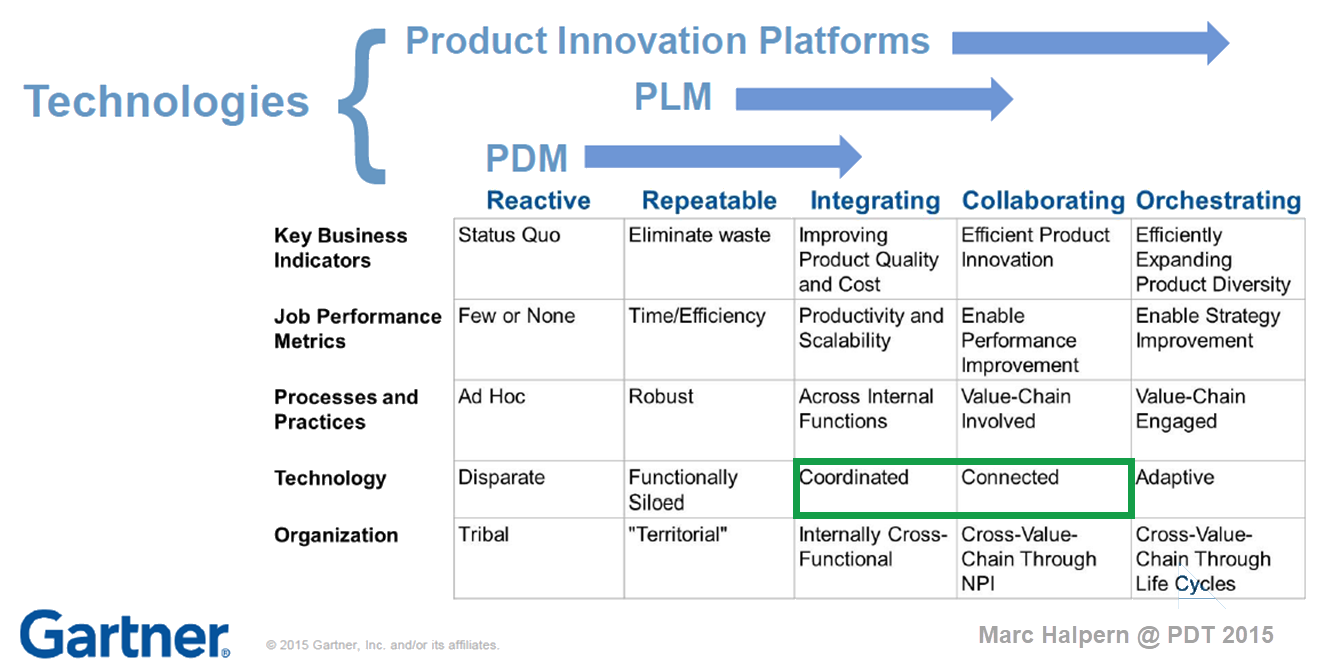

In our PLM domain, it took more than 10 years for platforms to become a topic of discussion for businesses. The 2015 PLM Roadmap/PDT conference was the first step in discussing the Product Innovation Platform – see my The Weekend after PDT 2015 post.

At that time, Peter Bilello shared the CIMdata perspective, Marc Halpern (Gartner) showed my favorite positioning slide (below), and Martin Eigner presented, according to my notes, this digital trend in PLM in his session: “What becomes different for PLM/SysLM?”

2015 Marc Halpern – the Product Innovation Platform (PIP)

While concepts started to become clearer, businesses mainly remained the same. The coordinated approach is the most convenient, as you do not need to reshape your organization. And then came the LLMs that changed everything.

Suddenly, it became possible for organizations to unlock knowledge hidden in their company and make it accessible to people.

Without drastically changing the organization, companies could now improve people’s performance and output (theoretically); therefore, it became a topic of interest for management. One big challenge for reaping the benefits is the quality of the data and information accessed.

I will not dive deeper into this topic today, as Benedict Smith, in his article Intelligent PLM – CFO’s 2025 Vision, did all the work, and I am very much aligned with his statements. It is a long read (7000 words) and a great starting point for discovering the aspects of Intelligent PLM and the connection to the CFO.

You might recognize intelligent PLM thinking when people and AI agents talk about the most likely truth.

Conclusion

Are you interested in these topics and their meaning for your business and career? Join me at the Share PLM conference, where I will discuss “The dilemma: Humans cannot transform—help them!” Time to work on your dreams!

Last week, my memory was triggered by this LinkedIn post and discussion started by Oleg Shilovitsky: Rethinking the Data vs. Process Debate in the Age of Digital Transformation and AI.

me, 1989

In the past twenty years, the debate in the PLM community has changed a lot. PLM started as a central file repository, combined with processes to ensure the correct status and quality of the information.

Then, digital transformation in the PLM domain became achievable and there was a focus shift towards (meta)data. Now, we are entering the era of artificial intelligence, reshaping how we look at data.

In this technology evolution, there are lessons learned that are still valid for 2025, and I want to share some of my experiences in this post.

In addition, it was great to read Martin Eigner’s reflection on the past 40 years of PDM/PLM. Martin shared his experiences and insights, not directly focusing on the data and processes debate, but very complementary and helpful for understanding the future.

It started with processes (for me 2003-2014)

In the early days when I worked with SmarTeam, one of my main missions was to develop templates on top of the flexible toolkit SmarTeam.

For those who do not know SmarTeam, it was one of the first Windows PDM/PLM systems, and thanks to its open API (COM-based), companies could easily customize and adapt it. It came with standard data elements and behaviors like Projects, Documents (CAD-specific and Generic), Items and later Products.

On top of this foundation, almost every customer implemented their business logic (current practices).

And there the problems came…

The implementations became highly customized environments, not necessarily well thought through, as every customer worked differently based on their (paper) history. Thanks to what I learned from discussions in the field while supporting stalled implementations, I was also assigned to develop templates (e.g. SmarTeam Design Express) and standard methodology (the FDA toolkit), as the mid-market customers requested. The focus was on standard processes.

You can read my 2009 observations here: Can chaos become order through PLM?

The need for standardization?

When developing templates (the right data model and processes), it was also essential to provide template processes for releasing a product and controlling the status and product changes – from Engineering Change Request to Engineering Change Order. Many companies had their processes described in their ISO 900x manual, but were they followed correctly?

In 2010, I wrote ECR/ECO for Dummies, and it has been my second most-read post over the years. Only the 2019 post The importance of EBOM and MBOM in PLM (reprise) had more readers. These statistics show that many people are, and were, seeking education on general PLM processes and data model principles.

It was also the time when the PLM communities discussed out-of-the-box or flexible processes, as Oleg referred to in his post.

You would expect companies to follow these best practices, and many small and medium enterprises that started with PLM did so. However, I discovered there was and still is the challenge with legacy (people and process), particularly in larger enterprises.

The challenge with legacy

The technology was there; the usability was not. Many implementations of a PLM system go through a critical stage. Are companies willing to change their methodology and habits to align with common best practices, or do they still want to implement their unique ways of working (from the past)?

“The embedded process is limiting our freedom, we need to be flexible”

is an often-heard statement. When every step is micro-managed in the PLM system, you create a bureaucracy detested by the user. In general, when the processes are implemented in a way first focusing on crucial steps with the option to improve later, you will get the best results and acceptance. Nowadays, we could call it an MVP approach.

I have seen companies that created a task or issue for every single activity a person should do. Managers loved the (demo) dashboard. It never led to success, as the approach created frustration at the end-user level as their To-Do list grew and grew.

Another example of the micro-management mindset is when I worked with a company that had the opposite definition of Version and Revision in their current terminology. Initially, they insisted that the new PLM system should support this, meaning that everywhere the interface mentioned Revision it should say Version, and vice versa.

Can you imagine the cost of implementing and maintaining this legacy per upgrade?

And then came data (for me 2014 – now)

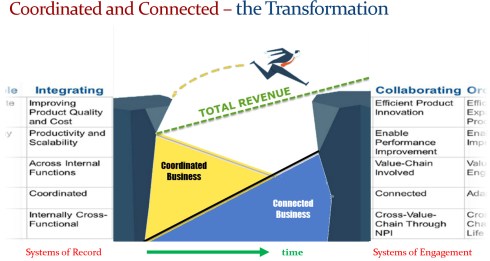

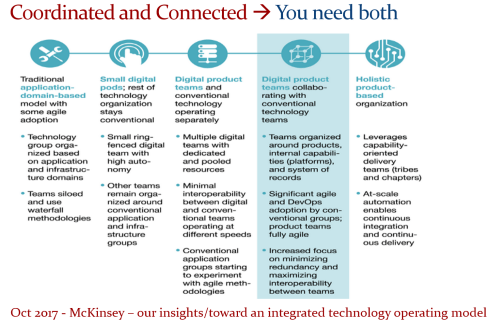

The pivotal 2015 PLM Roadmap/PDT conference on Product Innovation Platforms brought the idea of framing digital transformation in the PLM domain in a single sentence: From Coordinated to Connected. See the original image from Marc Halpern below; those who have read my posts over the years have seen this terminology’s evolution. Now I would say (till 2024): From Coordinated to Coordinated and Connected.

A data-driven approach was not new at that time. Roughly speaking, around 2006 – close to the introduction of the smartphone – there was already a trend spurred by better global data connectivity at lower cost. Easy connectivity allowed PLM to expand into industries that were not closely connected to 3D CAD systems (CATIA, CREO or NX). Agile PLM, Aras, and SAP PLM became visible – PLM was no longer only for design management but also for go-to-market governance in the CPG and apparel industries.

However, a data-driven approach was still rare in mainstream manufacturing companies, where drawings, office documents, email and Excel were the main information carriers next to the dominant ERP system.

A data-driven approach was a consultant’s dream, and when looking at the impact of digital transformation in other parts of the business, why not for PLM, too? My favorite and still valid 2014 image is the one below from Accenture describing Digital PLM. Here business and PLM come together – the WHY!

Again, the challenge with legacy

At that time, I saw a few companies linking their digital transformation to implementing a new PLM system. Those were the days the PLM vendors were battling for the big enterprise deals, sometimes motivated by an IT mindset that unifying the existing PDM/PLM systems would fulfill the digital dream. Emotion was winning, not science. Read the PLM blame game – still relevant.

One of my key observations is that companies struggle when they approach PLM transformation with a migration mindset. Moving from Coordinated to Connected isn’t just about technology—it’s about fundamentally changing how we work. Instead of a document-driven approach, organizations must embrace a data-driven, connected way of working.

The PLM community increasingly agrees that PLM isn’t a single system; it’s a strategy that requires a federated approach—whether through SaaS or even beyond it.

Before AI became a hype, we discussed the digital thread, digital twins, graph databases, ontologies, and data meshes. Legacy – people (skills), processes (rigid) and data (not reliable) – is the elephant in the room. Yet, the biggest challenge remains: many companies see PLM transformation as just buying new tools.

A fundamental transformation requires a hybrid approach—maintaining traditional operations while enabling multidisciplinary, data-driven teams. However, this shift demands new skills and creates the need to learn and adapt, and many organizations hesitate to take that risk.

In his Product Data Plumber Perspective on 2025, Rob Ferrone also addressed the challenge of moving forward, and I liked one of his responses in the underlying discussion that says it all – it is hard to get out of your day-to-day comfort (and data):

Rob Ferrone’s quote:

Transformations are announced, followed by training, then communication fades. Plans shift, initiatives are replaced, and improvements are delayed for the next “fix-all” solution. Meanwhile, employees feel stuck, their future dictated by a distant, ever-changing strategy team.

And then there is Artificial Intelligence (2024 ……)

In the past two years, I have been reading and digesting much news related to AI, particularly generative AI.

Initially, I was a little skeptical because of all the hallucinations and hype; however, the progress in this domain is enormous.

I believe that AI has the potential to dramatically change our digital thread and digital twin concepts, where the focus was on digital continuity of data.

Now this digital continuity might not be required, judging from articles like The End of SaaS (an increasingly loud voice), the Fusion Strategy (the importance of AI) and an (academic) example on a smaller scale that I learned about last year: the Swedish Arrowhead™ fPVN project.

I hope that five years from now, there will not be a paragraph with the title Pity there was again legacy.

We should have learned from the past that there is always a first wave of tools – they come with a big hype and promise – think about the Stargate Project but also DeepSeek.

Still, remember that change comes from doing things differently, not from efficiency gains. To do things differently, you need an educated, visionary management with the power and skills to take a company in a new direction. If not, legacy will win (again).

Conclusion

In my 25 years of working in the data management domain, now known as PLM, I have seen several impressive new developments – from 2D to 3D, from documents to data, from physical prototypes to models and more. All these developments took decades to become mainstream. Whilst the technology was there, the legacy kept us back. Will this ever change? Your thoughts?

The pivotal 2015 PLM Roadmap / PDT conference

Most times in this PLM and Sustainability series, Klaus Brettschneider and Jos Voskuil from the PLM Green Global Alliance core team speak with PLM-related vendors or service partners.

This year we have been speaking with Transition Technologies PSC, Configit, aPriori, Makersite and the PLM Vendors PTC, Siemens and SAP.

Where the first group of companies provided complementary software offerings to support sustainability – “the fourth dimension”– the PLM vendors focused more on the solutions within their portfolio.

This time we spoke with CIMPA PLM services, a company supporting its customers with PLM and sustainability challenges and offering end-to-end support.

What makes them special is that they are also a core partner of the PLM Green Global Alliance, where they moderate the Circular Economy theme – read their introduction here: PLM and Circular Economy.

CIMPA PLM services

We spoke with Pierre DAVID and Mahdi BESBES from CIMPA PLM services. Pierre is an environmental engineer and Mahdi is a consulting manager focusing on parts/components traceability in the context of sustainability and a circular economy. Many of the activities described by Pierre and Mahdi were related to the aerospace industry.

We had an enjoyable and in-depth discussion of sustainability, as the aerospace industry is well-advanced in traceability during the upstream design processes. Good digital traceability is an excellent foundation to extend for sustainability purposes.

CSRD, LCA, DPP, AI and more

A bunch of abbreviations you will have to learn. We went through the need for a data-driven PLM infrastructure to support sustainability initiatives, like Life Cycle Assessments and more. We zoomed in on the current Corporate Sustainability Reporting Directive (CSRD), highlighting the challenges with the CSRD guidelines and how to connect the strategy (why we do the CSRD) to its execution (providing reports and KPIs that make sense to individuals).

In addition, we discussed the importance of using the proper methodology and databases for lifecycle assessments. Looking forward, we discussed the potential of AI and the value of the Digital Product Passport for products in service.

Enjoy the 37-minute discussion, and you are always welcome to comment or start a discussion with us.

What we learned

- Sustainability initiatives are quite mature in the aerospace industry and thanks to its nature of traceability, this industry is leading in methodology and best practices.

- The various challenges with the CSRD directive – standardization, strategy and execution.

- The importance of the right databases when performing lifecycle analysis.

- CIMPA is working on how AI can be used for assessing environmental impacts and on the value of the Digital Product Passport for products in service to extend their traceability.

Want to learn more?

Here are some links related to the topics discussed in our meeting:

- CIMPA’s theme page on the PLM Green website: PLM and Circular Economy

- CIMPA’s commitments towards A sustainable, human and guiding approach

- Sopra Steria, CIMPA’s parent company: INSIDE #8 magazine

Conclusion

The discussion was insightful, given the advanced environment in which CIMPA consultants operate compared to other manufacturing industries. Our dialogue offered valuable lessons from the aerospace industry that others can draw on to advance and better understand their sustainability initiatives.

Congratulations if you have shown you can resist the psychological and emotional pressure and did not purchase anything in the context of Black Friday. However, we must not forget that another big part of the world cannot afford this behavior, as they do not have the means to do so – ultimate Black Friday might be their dream and a fast track to more enormous challenges.

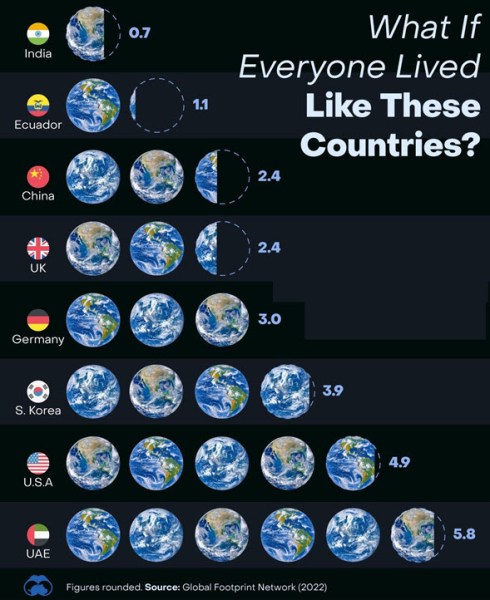

The difference between our societies, all living on the same planet, is shown in the image below, illustrating the unfairness of this situation.

What the image also shows is a warning that we all have to act, as step by step, we will reach planet boundaries for resources.

Or we need more planets, and I understand a brilliant guy is already working on it. Let’s go to Mars and enjoy life there.

For those generations staying on this planet, there is only one option: we need to change our economy of unlimited growth and reconsider how we use our natural resources.

The circular economy?

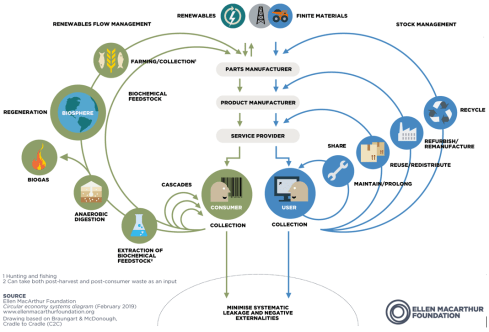

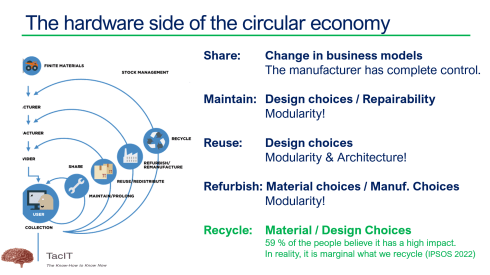

You are probably familiar with the butterfly diagram from the Ellen MacArthur Foundation, where we see the linear process: Take-Make-Use-Waste in the middle.

This approach should be replaced by more advanced regeneration loops on the left side and the five R’s on the right: Reduce, Repair, Reuse, Refurbish and Recycle. The ultimate goal is minimum leakage of Earth’s resources.

Closely related to the Circular Economy concept is the complementary Cradle-To-Cradle design approach. In this case, while designing our products, we also consider the end of life of a product as the start for other products to be created based on the materials used.

The CE butterfly diagram’s right side is where product design plays a significant role and where we, as a PLM community, should be active. Each loop has its own characteristics, and the SHARE loop is the one I focused on during the recent PLM Roadmap / PDT Europe conference in Gothenburg.

As you can see, the Maintain, Reuse, Refurbish and Recycle loops depend on product design strategies, in particular modularity, and, of course, on material choices.

It is important to note that the recycle loop is the most overestimated loop. We might contribute to recycling (glass, paper, plastic) in our daily lives; however, other materials, like composites, often with embedded electronics, have a much more significant impact.

Watch the funny meme in this post: “We did everything we could – we brought our own bags.”

The title of my presentation was: Products as a Service – The Ultimate Sustainable Economy?

You can find my presentation on SlideShare here.

Let’s focus on the remainder of the presentation’s topic: Product As A Service.

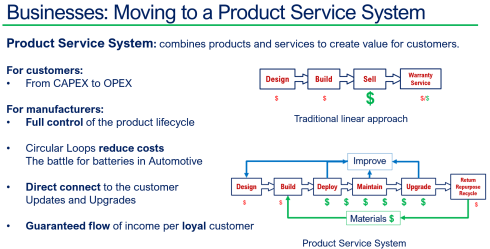

The Product Service System

Where Product As A Service might be the ultimate dream for an almost wasteless society, Ida Auken, a Danish member of parliament, gave a thought-provoking lecture in that context at the 2016 World Economic Forum. Her lecture was summarized afterward as

“In the future, you will own nothing and be happy.”

A theme also picked up by conspiracy thinkers during the COVID pandemic, claiming “they” are making us economic slaves and consumers. With Black Friday in mind, I do not think there is a conspiracy; it is the opposite.

A more realistic step than implementing Product as a Service everywhere in our economy might be moving towards Product Service Systems.

As the image shows, a product service system is a combination of providing a product with related services to create value for the customer.

In the ultimate format, the manufacturer owns the products and provides the services, keeping full control of the performance and materials during the product lifecycle. The benefit for the customer is that they do not need to invest upfront in the solution (CAPEX); they pay only when using it (OPEX).
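To make the CAPEX versus OPEX trade-off a bit more tangible, here is a minimal sketch in Python. It is only an illustration under simple assumptions: the function names and all numbers are hypothetical and not taken from any real product service offering, and it ignores financing costs, residual value and price changes.

```python
def cumulative_cost_ownership(purchase_price, yearly_maintenance, years):
    """Total cost when the customer buys the product (CAPEX) and maintains it."""
    return purchase_price + yearly_maintenance * years


def cumulative_cost_service(fee_per_hour, hours_per_year, years):
    """Total cost when the customer only pays for usage (OPEX)."""
    return fee_per_hour * hours_per_year * years


def break_even_years(purchase_price, yearly_maintenance, fee_per_hour, hours_per_year):
    """Years after which owning becomes cheaper than paying per use.

    Returns None when paying per use never costs more per year than
    maintaining an owned product, so ownership never catches up.
    """
    yearly_service_cost = fee_per_hour * hours_per_year
    yearly_difference = yearly_service_cost - yearly_maintenance
    if yearly_difference <= 0:
        return None
    return purchase_price / yearly_difference


# Hypothetical numbers: a 100,000 machine with 5,000 maintenance per year,
# versus a usage fee of 25 per hour at 1,000 hours per year.
print(break_even_years(100_000, 5_000, 25, 1_000))  # 5.0
```

With these made-up numbers, a customer who expects to use the equipment for less than five years, or for fewer hours per year, is better off with the service model, which is one reason why special equipment in B2B is an early candidate.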

A great example of this concept is Spotify or other streaming services. You do not pay for the disc/box anymore; you pay for the usage, and the model is a win-win for consumers (many titles) and producers (massive reach).

Although the Product Service System will probably reach consumers later, the most significant potential is currently in the B2B business model, e.g., transportation as a service and special equipment usage as a service. Examples are popping up in various industries.
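To make the CAPEX versus OPEX trade-off a bit more tangible for such a B2B case, here is a minimal Python sketch. It compares buying a piece of equipment with paying per hour of usage and computes the usage level at which both options cost the same. The `Offer` class, its field names and all numbers are illustrative assumptions, not data from the presentation.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    """Hypothetical price list for one piece of equipment."""
    purchase_price: float   # upfront investment when buying (CAPEX)
    yearly_upkeep: float    # maintenance paid by the owner, per year
    price_per_hour: float   # pay-per-use fee in the service model (OPEX)


def cost_of_ownership(offer: Offer, years: int) -> float:
    """Total cost for a customer who buys and maintains the product."""
    return offer.purchase_price + offer.yearly_upkeep * years


def cost_of_usage(offer: Offer, hours_per_year: float, years: int) -> float:
    """Total cost for a customer who only pays for the hours used."""
    return offer.price_per_hour * hours_per_year * years


def break_even_hours_per_year(offer: Offer, years: int) -> float:
    """Yearly usage at which buying and pay-per-use cost the same."""
    return cost_of_ownership(offer, years) / (offer.price_per_hour * years)


# Made-up numbers, purely for illustration.
offer = Offer(purchase_price=100_000, yearly_upkeep=5_000, price_per_hour=50)

print(cost_of_ownership(offer, years=5))
print(cost_of_usage(offer, hours_per_year=400, years=5))
print(break_even_hours_per_year(offer, years=5))
```

With these assumed numbers, a customer using the equipment less than the break-even number of hours per year is better off in the service model, and they avoid the upfront investment in any case. Real offers will include more factors, such as financing costs and residual value, which this sketch deliberately ignores.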

My presentation focused on three steps that manufacturing companies need to consider now and in the future when moving to a Product Service System.

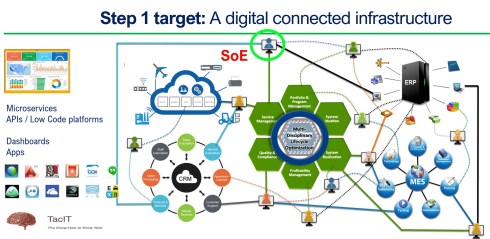

Step 1: Get (digital) connected to your Product and customer

A foundational step companies must take is to create a digital infrastructure to support all stakeholders in the product service offering. Currently, many companies have a siloed approach where each discipline (Marketing/Sales, R&D, Engineering and Manufacturing) has its own systems.

Digital Transformation in the PLM domain is needed here – where are you on this level?

But it is not only the technical silos that impede the end-to-end visibility of information. If there are no business targets to create and maintain end-to-end information sharing, you cannot expect it to happen.

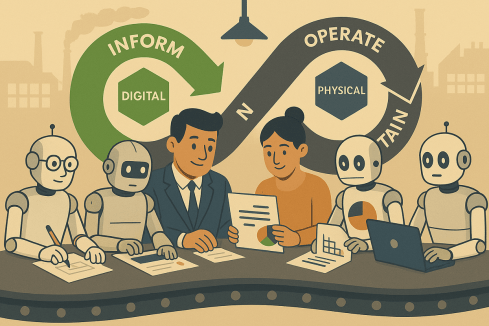

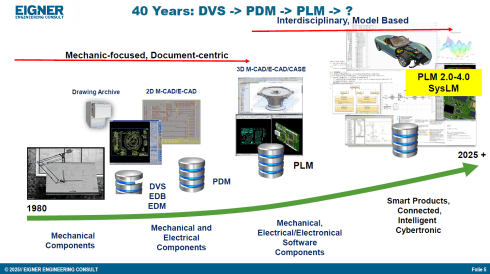

Therefore, companies should invest in the digitalization of their ways of working, implementing an end-to-end digital thread AND changing their linear New Product Development process into a customer-driven DevOps approach. The PTC image below shows a way to imagine an end-to-end connected environment.

In a Product Service System, the customer is the solution user, and the solution provider is responsible for the uptime and improvement of the solution over time.

As an upcoming bonus and a must, companies need to use AI to run their Product Service System as it will improve customer knowledge and trends. Don’t forget that AI (and Digital Twins) runs best on reliable data.

Step 2: From Product to Experience

A Product Service System is not business as usual by providing products with some additional services. Besides concepts such as Digital Thread and Digital Twins of the solution, there is also the need to change the company’s business model.

In the old way, customers buy the product; in the Product Service System, the customer becomes a user. We should align the company and business to become user-centric and keep the user inspired by the experience of the Product Service System.

In this context, there are two interesting articles to read:

- Jan Bosch: From Agile to Radical: Business Model

- Chris Seiler: How to escape the vicious circle in times of transformation?

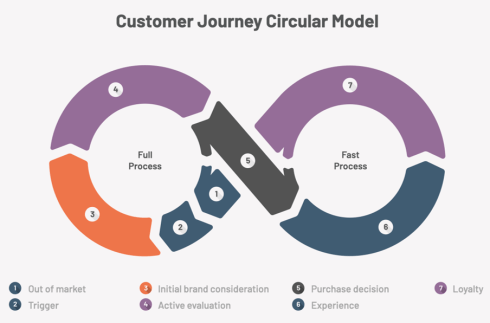

The change in business model means that companies should think about a circular customer journey.

As the company will remain the product owner, it is crucial to understand what happens when the customers stop using the service or how to ensure maintenance and upgrades.

In addition, to keep the customer satisfied, it remains vital to discover the customer KPIs and how additional services could potentially improve the relationship. Again, AI can help find relationships that are not yet digitally established.

Step 2: From product to experience can already significantly impact organizations. The traditional salesperson’s role will disappear and be replaced by excellence in marketing, services and product management.

This will not happen quickly as, besides the vision, there needs to be an evolutionary path to the new business model.

Therefore, companies must analyze their portfolio and start experimenting with a small product, converting it into a product service system. Starting simple allows companies to learn and be prepared for scaling up.

A Product Service System also influences a company’s cash flow as revenue streams will change.

When scaling up slowly, the company might be able to finance this transition itself. Another option, already happening, is for a third party to finance the Product Service System – think about car leasing, power by the hour, or some industrial equipment vendors.
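The effect on cash flow is easy to underestimate, so here is a small, hedged Python sketch of it. It compares the cumulative cash position of a provider selling products with one offering the same products as a service, where each unit is paid for by the provider upfront and earned back through a monthly fee. The function names and all numbers are assumptions for illustration only.

```python
def cumulative_cash_sale(units_per_month, price, unit_cost, months):
    """Classic sale: every unit is paid in full in the month it is delivered."""
    total, series = 0, []
    for _ in range(months):
        total += units_per_month * (price - unit_cost)
        series.append(total)
    return series


def cumulative_cash_service(units_per_month, monthly_fee, unit_cost, months):
    """Service model: the provider pays for each new unit upfront and
    collects a monthly fee for every unit already in the field."""
    total, in_field, series = 0, 0, []
    for _ in range(months):
        in_field += units_per_month
        total += in_field * monthly_fee - units_per_month * unit_cost
        series.append(total)
    return series


def first_positive_month(series):
    """Return the first month (1-based) with a positive cumulative position."""
    for month, value in enumerate(series, start=1):
        if value > 0:
            return month
    return None


# Made-up numbers: 10 new units per month over two years.
sale = cumulative_cash_sale(10, price=1200, unit_cost=800, months=24)
service = cumulative_cash_service(10, monthly_fee=100, unit_cost=800, months=24)

print(sale[-1], service[-1])          # position after 24 months
print(min(service))                   # deepest dip that needs financing
print(first_positive_month(service))  # month in which the dip is recovered
```

In this simplified model, the service variant ends up ahead after two years, but only after a period with a negative cash position. That dip is what either a slow scale-up or a financing third party has to cover.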

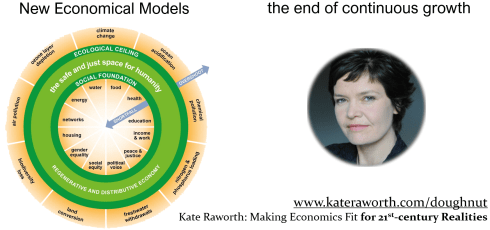

Step 3: Towards a doughnut economy?

The last step is probably a giant step or even a journey. An economic mindset shift is needed from the ever-growing linear economy towards an economy flourishing for everyone within economic, environmental and social boundaries.

Unlimited growth is the biggest misconception on a planet reaching its borders. Either we need more planets, or we need to adjust our society.

In that context, I read the book “Doughnut Economics” by Kate Raworth, a recognized thought leader who explains how a future economic model, including a circular economy, can flourish, and you will be happy.

But we must abandon the old business models and habits – there will be a lot of resistance to change before people are forced to change. This change can take generations as the outside world will not change without a reason, and the established ones will fight for their privileges.

It is a logical process where people and boundaries will learn to find a new balance. Will it be in a Doughnut Economy, or did we overlook some other bright concepts?

Conclusion

The week after Black Friday and hopefully the month after all the Christmas presents, it is time to formulate your good intentions for 2025. As humans, we should consume less; as companies, we should steer towards a sustainable future by exploring the potential of the Product Service System and beyond.

Due to other activities, I could not immediately share the second part of the review related to the PLM Roadmap / PDT Europe conference, held on 23-24 October in Gothenburg. You can read my first post, mainly about Day 1, here: The weekend after PLM Roadmap/PDT Europe 2024.

There were several interesting sessions which I will not mention here as I want to focus on forward-looking topics with a mix of (federated) data-driven PLM environments and the applicability of AI, staying around 1500 words.

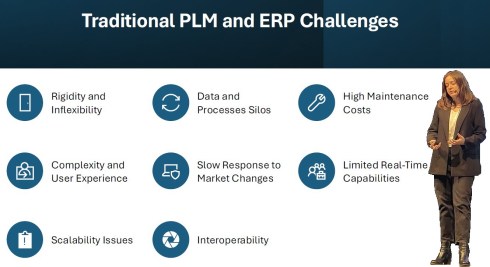

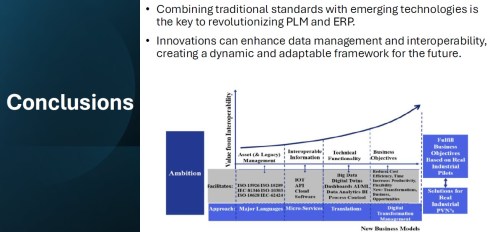

R-evolutionizing PLM and ERP and Heliple

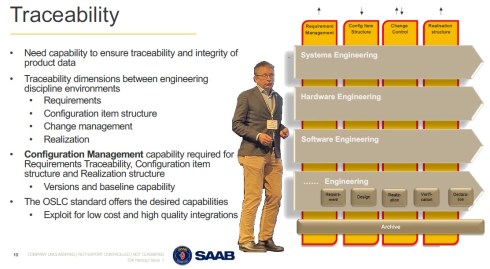

Cristina Paniagua from the Luleå University of Technology closed the first day of the conference, giving us food for thought to discuss over dinner. Her session, describing the Arrowhead fPVN project, fitted nicely with the concepts of the Federated PLM Heliple project presented by Erik Herzog on Day 2.

They are both research projects related to the future state of a digital enterprise. Therefore, it makes sense to treat them together.

Cristina’s session started with sharing the challenges of traditional PLM and ERP systems:

These statements align with the drivers of the Heliple project. The PLM and ERP systems—Systems of Record—provide baselines and traceability. However, Systems of Record have not historically been designed to support real-time collaboration or to create an attractive user experience.

The Heliple project focuses on connecting various modules—the horizontal bars—for systems engineering, hardware engineering, etc., as real-time collaboration environments that can be highly customized and replaceable if needed. The Heliple project explored the usage of OSLC to connect these modules, the Systems of Engagement, with the Systems of Record.

By using Lynxwork as a low-code wrapper to develop the OSLC connections and map them to the needed business scenarios, the team concluded that this approach is affordable for businesses.
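For readers less familiar with the idea behind OSLC: it builds on linked-data principles, where each resource keeps living in its own system, is addressed by a URI, and is related to other resources by typed links. The Python sketch below only illustrates that linking idea. It does not use any OSLC library or the Lynxwork tooling, and all URIs and link names are made up for the example.

```python
from collections import defaultdict

# Each link is (source URI, link type, target URI).
# All URIs and link types are hypothetical.
LINKS = [
    ("https://soe.example.com/requirements/REQ-12", "satisfiedBy",
     "https://soe.example.com/designs/DES-7"),
    ("https://soe.example.com/designs/DES-7", "releasedAs",
     "https://plm.example.com/parts/P-1001/rev/B"),
    ("https://plm.example.com/parts/P-1001/rev/B", "plannedIn",
     "https://erp.example.com/items/4711"),
]


def build_index(links):
    """Group the outgoing links per source URI."""
    index = defaultdict(list)
    for source, link_type, target in links:
        index[source].append((link_type, target))
    return index


def trace(index, start):
    """Follow outgoing links depth-first and return the visited URIs in order."""
    seen, stack, order = set(), [start], []
    while stack:
        uri = stack.pop()
        if uri in seen:
            continue
        seen.add(uri)
        order.append(uri)
        # Reverse so that links are followed in the order they were declared.
        for _, target in reversed(index.get(uri, [])):
            stack.append(target)
    return order


index = build_index(LINKS)
for uri in trace(index, "https://soe.example.com/requirements/REQ-12"):
    print(uri)
```

The point of the sketch is that a requirement in a System of Engagement can be traced to a released part in PLM and an item in ERP without copying data between systems. Only the links need to be maintained.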

Now, the Heliple team aims to expand its research with industry-scale validation through the Demoiple project (validating that the Heliple-2 technology can be implemented and accredited in Saab Aeronautics’ operational IT), combined with the Nextiple project, where they will investigate the role of heterogeneous information models/ontologies for heterogeneous analysis.

If you are interested in participating in Nextiple, don’t hesitate to contact Erik Herzog.

Cristina’s Arrowhead flexible Production Value Network (fPVN) project aims to provide autonomous and evolvable information interoperability through machine-interpretable content for fPVN stakeholders. In less academic words, building a digital data-driven infrastructure.

The resulting technology is projected to impact manufacturing productivity and flexibility substantially.

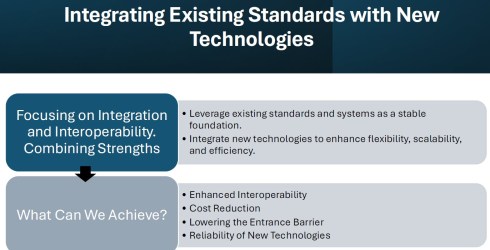

The exciting starting point of the Arrowhead project is that it wants to use existing standards and systems as a foundation and, on top of that, create a business and user-oriented layer, using modern technologies such as micro-services to support real-time processing and semantic technologies, ontologies, system modeling, and AI for data translations and learning. This is a much broader and more ambitious scope than the Heliple project.

I believe that in our PLM domain, this resonates with actual discussions you will find on LinkedIn, too. Oleg Shilovitsky, Dr. Yousef Hooshmand, Prof. Dr. Jörg W. Fischer and Martin Eigner are a few of those steering these discussions. I consider it a perfect match for one of the images I shared about the future of the digital enterprise.

Potentially, there are five platforms with their own internal ways of working, a mix of systems of record and systems of engagement, supported by an overlay of several Systems of Engagement environments.

I previously described these dedicated environments, e.g., OpenBOM, Colab, Partful, and Authentise. These solutions could also be dedicated apps supporting a specific ecosystem role.

See below my artist’s impression of how a Service Engineer would work in their app connected to CRM, PLM and ERP platform datasets:

The exciting part of the Arrowhead fPVN project is that it wants to explore the interactions between systems and user roles based on existing mature standards instead of leaving the connections to software developers.

Cristina mentioned some of these standards below:

I greatly support this approach as, historically, much knowledge and effort has been put into developing standards to support interoperability. Maybe not in real-time, but the embedded knowledge in these standards will speed up the broader usage. Therefore, I concur with the concluding slide:

A final comment: Industrial users must push for these standards if they do not want a future vendor lock-in. Vendors will do what the majority of their customers ask for but will also keep their customers’ data in proprietary formats to prevent them from switching to another system.

Accelerated Product Development Enabled by Digitalization

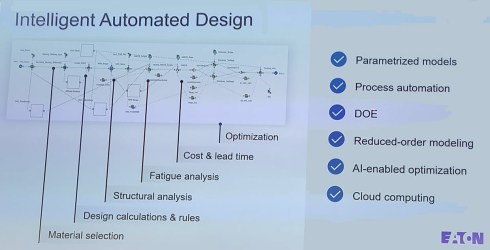

The keynote session on Day 2, delivered by Uyiosa Abusomwan, Ph.D., Senior Global Technology Manager – Digital Engineering at Eaton, was a visionary story about the future of engineering.

With its broad range of products, Eaton is exploring new, innovative ways to accelerate product design by modeling the design process and applying AI to narrow design decisions and customer-specific engineering work. The picture below shows the areas of attention needed to model the design processes. Uyiosa mentioned the significant beneficial results that have already been reached.

Together with generative design, Eaton works towards modern digital engineering processes built on models and knowledge. His session was complementary to the Heliple and Arrowhead story. To reach such a contemporary design engineering environment, it must be data-driven and built upon open PLM and software components to fully use AI and automation.

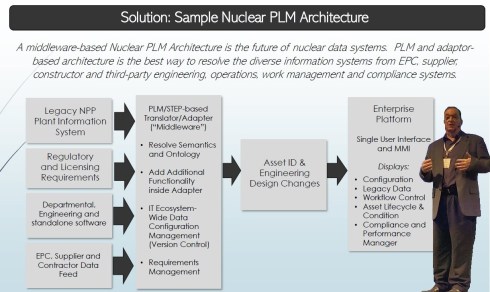

“Next Gen” Life Cycle Management in Next-Gen Nuclear Power and LTO Legacy Plants