Last week, my memory was triggered by this LinkedIn post and discussion started by Oleg Shilovitsky: Rethinking the Data vs. Process Debate in the Age of Digital Transformation and AI.

me, 1989

In the past twenty years, the debate in the PLM community has changed a lot. PLM started as a central file repository, combined with processes to ensure the correct status and quality of the information.

Then, digital transformation in the PLM domain became achievable and there was a focus shift towards (meta)data. Now, we are entering the era of artificial intelligence, reshaping how we look at data.

In this technology evolution, there are lessons learned that are still valid for 2025, and I want to share some of my experiences in this post.

In addition, it was great to read Martin Eigner’s reflection on the past 40 years of PDM/PLM. Martin shared his experiences and insights, not focusing directly on the data and processes debate, but very complementary and helpful for understanding the future.

It started with processes (for me 2003-2014)

In the early days when I worked with SmarTeam, one of my main missions was to develop templates on top of the flexible toolkit SmarTeam.

For those who do not know SmarTeam, it was one of the first Windows PDM/PLM systems, and thanks to its open API (COM-based), companies could easily customize and adapt it. It came with standard data elements and behaviors like Projects, Documents (CAD-specific and Generic), Items and later Products.

On top of this foundation, almost every customer implemented their business logic (current practices).

And then the problems came …

The implementations too often became highly customized environments, not necessarily thought through, as every customer worked differently based on their (paper) history. Thanks to what I learned from field discussions while supporting stalled implementations, I was also assigned to develop templates (e.g. SmarTeam Design Express) and a standard methodology (the FDA toolkit), as the mid-market customers requested. The focus was on standard processes.

You can read my 2009 observations here: Can chaos become order through PLM?

The need for standardization?

When developing templates (the right data model and processes), it was also essential to provide template processes for releasing a product and controlling the status and product changes – from Engineering Change Request to Engineering Change Order. Many companies had their processes described in their ISO 900x manual, but were they followed correctly?

In 2010, I wrote ECR/ECO for Dummies, and it has been my second most-read post over the years. Only the 2019 post The importance of EBOM and MBOM in PLM (reprise) had more readers. These statistics show that many people are, and were, seeking education on general PLM processes and data model principles.

It was also the time when the PLM communities discussed out-of-the-box versus flexible processes, as Oleg referred to in his post.

You would expect companies to follow these best practices, and many small and medium enterprises that started with PLM did so. However, I discovered there was and still is the challenge with legacy (people and process), particularly in larger enterprises.

The challenge with legacy

The technology was there, the usability was not there. Many implementations of a PLM system go through a critical stage. Are companies willing to change their methodology and habits to align with common best practices, or do they still want to implement their unique ways of working (from the past)?

“The embedded process is limiting our freedom, we need to be flexible”

is an often-heard statement. When every step is micro-managed in the PLM system, you create a bureaucracy detested by the user. In general, when the processes are implemented in a way first focusing on crucial steps with the option to improve later, you will get the best results and acceptance. Nowadays, we could call it an MVP approach.

I have seen companies that created a task or issue for every single activity a person should do. Managers loved the (demo) dashboard. It never led to success, as the approach created frustration at the end-user level: their To-Do list grew and grew.

Another example of the micro-management mindset is a company I worked with that had the opposite definition of Version and Revision in their current terminology. Initially, they insisted that the new PLM system should support this, meaning that everywhere in the interface where Revision was mentioned, it should read Version, and vice versa.

Can you imagine the cost of implementing and maintaining this legacy per upgrade?

And then came data (for me 2014 – now)

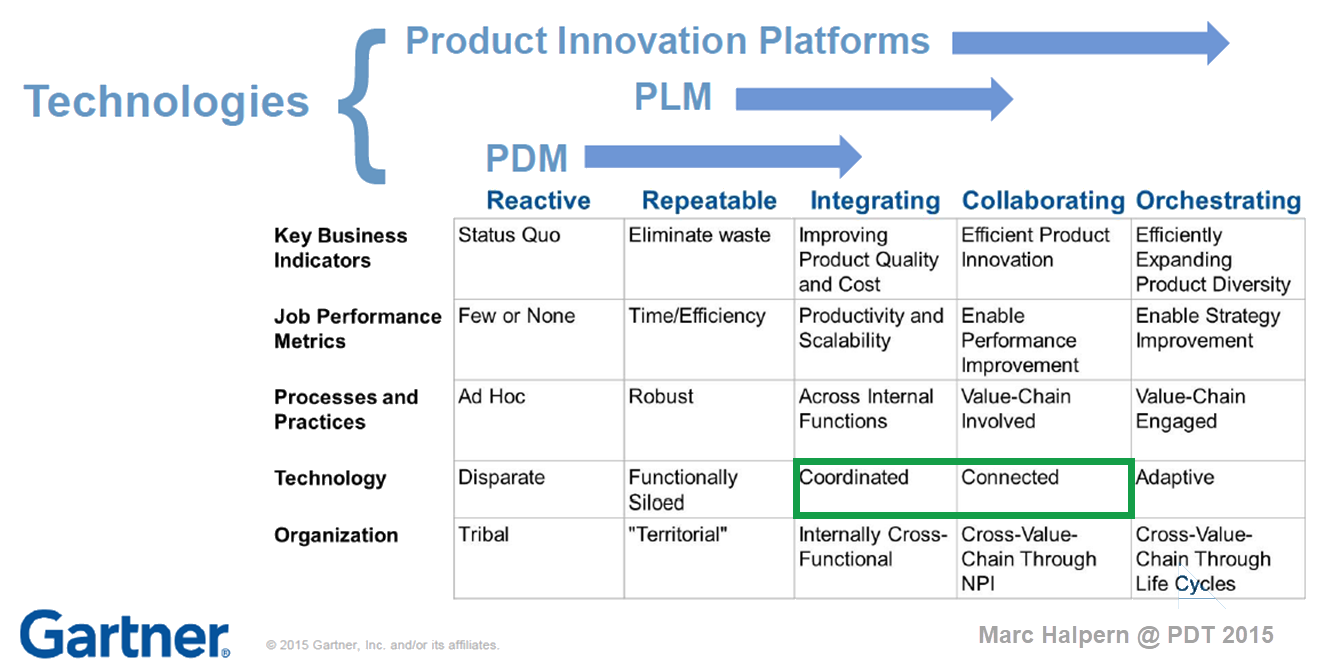

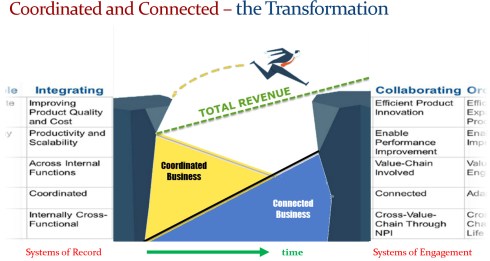

In 2015, the pivotal PLM Roadmap/PDT conference on Product Innovation Platforms brought the idea of framing digital transformation in the PLM domain in a single sentence: From Coordinated to Connected. See the original image from Marc Halpern below; those who have read my posts over the years have seen this terminology’s evolution. Now I would say (till 2024): From Coordinated to Coordinated and Connected.

A data-driven approach was not new at that time. Roughly speaking, around 2006 – close to the introduction of the smartphone – there was already a trend spurred by better global data connectivity at lower cost. Easy connectivity allowed PLM to expand into industries that were not closely connected to 3D CAD systems (CATIA, CREO or NX). Agile PLM, Aras, and SAP PLM became visible – PLM was no longer only for design management but also for go-to-market governance in the CPG and apparel industries.

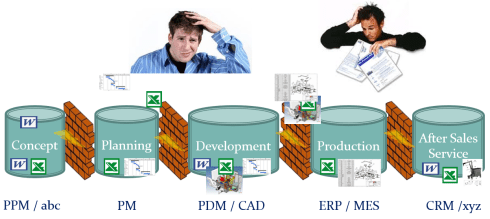

However, a data-driven approach was still rare in mainstream manufacturing companies, where drawings, office documents, email and Excel were the main information carriers next to the dominant ERP system.

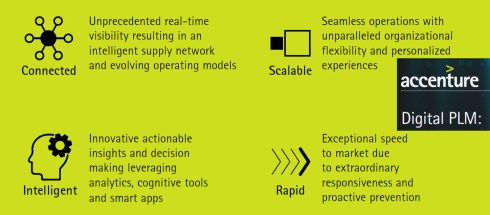

A data-driven approach was a consultant’s dream, and when looking at the impact of digital transformation in other parts of the business, why not for PLM, too? My favorite and still valid 2014 image is the one below from Accenture describing Digital PLM. Here business and PLM come together – the WHY!

Again, the challenge with legacy

At that time, I saw a few companies linking their digital transformation to implementing a new PLM system. Those were the days the PLM vendors were battling for the big enterprise deals, sometimes motivated by an IT mindset that unifying the existing PDM/PLM systems would fulfill the digital dream. Emotion, not science, was winning. Read The PLM blame game – still relevant.

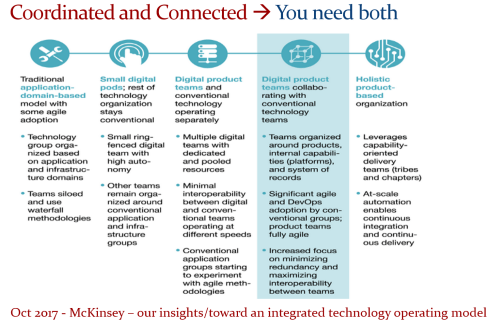

One of my key observations is that companies struggle when they approach PLM transformation with a migration mindset. Moving from Coordinated to Connected isn’t just about technology—it’s about fundamentally changing how we work. Instead of a document-driven approach, organizations must embrace a data-driven, connected way of working.

The PLM community increasingly agrees that PLM isn’t a single system; it’s a strategy that requires a federated approach—whether through SaaS or even beyond it.

Before AI became a hype, we discussed the digital thread, digital twins, graph databases, ontologies, and data meshes. Legacy – people (skills), processes (rigid) and data (not reliable) – is the elephant in the room. Yet, the biggest challenge remains: many companies see PLM transformation as just buying new tools.

A fundamental transformation requires a hybrid approach—maintaining traditional operations while enabling multidisciplinary, data-driven teams. However, this shift demands new skills and creates the need to learn and adapt, and many organizations hesitate to take that risk.

In his Product Data Plumber Perspective on 2025, Rob Ferrone also addressed the challenge of moving forward, and I liked one of his responses in the underlying discussion that says it all – it is hard to get out of your day-to-day comfort (and data):

Rob Ferrone’s quote:

Transformations are announced, followed by training, then communication fades. Plans shift, initiatives are replaced, and improvements are delayed for the next “fix-all” solution. Meanwhile, employees feel stuck, their future dictated by a distant, ever-changing strategy team.

And then there is Artificial Intelligence (2024 ……)

In the past two years, I have been reading and digesting much news related to AI, particularly generative AI.

Initially, I was a little skeptical because of all the hallucinations and hype; however, the progress in this domain is enormous.

I believe that AI has the potential to dramatically change our digital thread and digital twin concepts, where the focus has been on the digital continuity of data.

This digital continuity might no longer be required, judging by articles like The End of SaaS (an increasingly loud voice), the use of the Fusion Strategy (the importance of AI) and, on a smaller scale, an (academic) example I learned about last year: the Swedish Arrowhead™ fPVN project.

I hope that five years from now, there will not be a paragraph with the title Pity there was again legacy.

We should have learned from the past that there is always a first wave of tools – they come with big hype and promises – think about the Stargate Project but also DeepSeek.

Still, remember: change comes from doing things differently, not from efficiency gains. To do things differently, you need an educated, visionary management with the power and skills to take a company in a new direction. If not, legacy will win (again).

Conclusion

In my 25 years of working in the data management domain, now known as PLM, I have seen several impressive new developments – from 2D to 3D, from documents to data, from physical prototypes to models and more. All these developments took decades to become mainstream. Whilst the technology was there, the legacy held us back. Will this ever change? Your thoughts?

The pivotal 2015 PLM Roadmap / PDT conference

Another year passed, and as usual, I took the time to look back. I always feel that things are going so much slower than expected. But that’s reality – there is always friction, and in particular, in the PLM domain, there is so much legacy we cannot leave behind.

It is better to plan what we can do in 2024 to be prepared for the next steps or, if lucky, even implement the next steps in progress.

In this post, I will discuss four significant areas of attention (AI – DATA – PEOPLE – SUSTAINABILITY) in alphabetical order, not prioritized.

Here are some initial thoughts. In the upcoming weeks I will elaborate further on them and look forward to your input.

AI (Artificial Intelligence)

Where would I be without talking about AI?

When you look at the image below, the Gartner Hype Cycle for AI in 2023, you see the potential coming on the left, with Generative AI at the peak.

Part of the hype comes from the availability of generative AI tools in the public domain, allowing everyone to play with them or use them. Some barriers are gone, but what does it mean? Many AI tools can make our lives easier, and there is for sure no threat if our job does not depend on standard practices.

AI and People

When I was teaching physics in high school, it was during the introduction of the pocket calculator, which replaced the slide rule. You needed to be skilled to use the slide rule; now there was a device that gave immediate answers. Was this bad for the pupils?

If you do not know the slide rule: its replacement by the calculator was an example of new technology replacing old tools, providing more time for other details. Click on the image or read more about the slide rule here on Wiki.

Or would you today ask ChatGPT the question about the slide rule? Does generative AI mean the end of Wikipedia? Or does generative AI need the common knowledge of sites like Wikipedia?

AI can empower people in legacy environments when working with disconnected systems. AI will be a threat to people and companies that rely on people and processes to bring information together without adding value. These activities will disappear soon, and you must consider using this innovative approach.

During the recent holiday period, there was an interesting discussion about why companies are reluctant to change and implement better solution concepts. Initially launched by Alex Bruskin here on LinkedIn, the debate spilled over into the topic of TECHNICAL DEBT, well addressed here by Lionel Grealou.

Both articles and the related discussion in the comments are recommended to follow and learn.

AI and Sustainability

Similar to the introduction of Bitcoin using blockchain technology, some people are warning about the vast energy consumption required for training and interaction with Large Language Models (LLM), as Sasha Luccioni explains in her interesting TED talk when addressing sustainability.

She proposes that tech companies should be more transparent on this topic; the size and type of the LLM matter, as the indicative picture below illustrates.

Carbon Emissions of LLMs compared

In addition, I found an interesting article discussing the pros and cons of AI related to Sustainability. The image below from the article Risks and Benefits of Large Language Models for the Environment illustrates nicely that we must start discussing and balancing these topics.

To conclude, in discussing AI related to sustainability, I see the significant advantage of using generative AI for ESG reporting.

ESG reporting is currently a very fragmented activity for organizations, based on (marketing) people’s goodwill, and these reports are not always evidence-based.

Data

The transformation from a coordinated, document-driven enterprise towards a hybrid coordinated/connected enterprise using a data-driven approach became increasingly visible in 2023. I expect this transformation to grow faster in 2024 – the momentum is here.

We saw last year that the discussions related to Federated PLM nicely converged at the PLM Roadmap / PDT Europe conference in Paris. I shared most of the topics in this post: The week after PLM Roadmap / PDT Europe 2023. In addition, there is now the Heliple Federated PLM LinkedIn group with regular discussions planned.

In addition, if you read here Jan Bosch’s reflection on 2023, he mentions (quote):

… 2023 was the year where many of the companies in the center became serious about the use of data. Whether it is historical analysis, high-frequency data collection during R&D, A/B testing or data pipelines, I notice a remarkable shift from a focus on software to a focus on data. The notion of data as a product, for now predominantly for internal use, is increasingly strong in the companies we work with

I am a big fan of Jan’s posting; coming from the software world, he describes the same issues that we have in the PLM world, except he does not carry the hardware legacy that much and, therefore, acts faster than us in the PLM world.

An interesting illustration of the slow pace to a data-driven environment is the revival of the PLM and ERP integration discussion. Prof. Jörg Fischer and Martin Eigner contributed to the broader debate of a modern enterprise infrastructure, not based on systems (PLM, ERP, MES, ….) but more on the flow of data through the lifecycle and an organization.

It is a great restart of the debate, showing we should care more about data semantics and the flow of information.

The articles The Future of PLM & ERP: Bridging the Gap. An Epic Battle of Opinions! and Is part master in PLM and ERP equal or not, combined with the comments on these posts, are a must-read to follow this change towards a more connected flow of information.

While writing this post, Andreas Lindenthal expanded the discussion with his post: PLM and Configuration Management Best Practices: Part Traceability and Revisions. Again, thanks to data-driven approaches, there is expanding support for the entire product lifecycle. Product Lifecycle Management, Configuration Management and AIM (Asset Information Management) have come together.

PLM and CM are overlapping more and more, as I discussed some time ago with Martijn Dullaart, Maxime Gravel and Lisa Fenwick in The future of Configuration Management. This topic will be “hot” in 2024.

People

From the people’s perspective towards AI, DATA and SUSTAINABILITY, there is a noticeable divide between generations. Of course, for the sake of the article, I am generalizing, assuming most people do not like to change their habits or want to reprogram themselves.

Unfortunately, we have to adapt our skills as our environment is changing. Most of my generation was brought up with the single source of truth idea, documented and supported by scientific papers.

In my terminology, information processing takes place in our head by combining all the information we learned or collected through documents/books/newspapers – the coordinated approach.

For people living in this mindset, AI can become a significant threat, as their brain is no longer needed to make a judgment, and they are not used to differentiating between facts and fake news, as they were never trained to do so.

The same is valid for practices like the model-based approach, working data-centric, or considering sustainability. It is not in the DNA of the older generations and, therefore, hard to change.

The older generation is mostly part of an organization’s higher management, so we are returning to the technical debt discussion.

Later generations that grew up as digital natives are used to almost real-time interaction, and when applied consistently in a digital enterprise, people will benefit from the information available to them in a rich context – in my terminology – the connected approach.

AI is a blessing for people living in this mindset as they do not need to use old-fashioned methods to acquire information.

“Let ChatGPT write my essay.”

However, their challenge could be what I would call “processing time”. Just because data is available does not necessarily mean it is the correct information. For that reason, it remains important to spend time digesting the impact of the information you are reading – don’t click “Like” based on the title; read the full article and then decide.

Experience is what you get, when you don’t get what you expect.

meaning you only become experienced if you learn from failures.

Sustainability

Unfortunately, sustainability is not only the last topic in alphabetical order; when you look at the image below, you see that discussions related to sustainability are in a slight decline at C-level at the moment.

I share this observation in my engagements when discussing sustainability with the companies I interact with.

The PLM software and services providers are all on a trajectory of providing tools and an infrastructure to support a transition to a more circular economy and better traceability of materials and carbon emissions.

In the PLM Global Green Alliance, we talked with Aras, Autodesk, Dassault Systèmes, PTC, SAP, Sustaira and TTPSC (Green PLM), with more to come in 2024. The solution offerings in the PLM domain are available to start with; now it is up to the people and processes.

For sure, AI tools will help companies to get a better understanding of their sustainability efforts. As mentioned before, AI could help companies understand their environmental impact and build more accurate ESG reports.

Next, being DATA-driven will be crucial. As discussed during the latest PLM Roadmap/PDT Europe conference: The Need for a Governance Digital Thread.

And regarding PEOPLE, the good news is that younger generations want to take care of their future. They are in a position to choose the company to work for or influence companies by their consumer behavior. Unfortunately, climate disasters will remind us continuously in the upcoming decades that we are in a critical phase.

With the PLM Global Green Alliance, we strive to bring people together with a PLM mindset, sharing news and information on how to move forward to a sustainable future.

Mark Reisig (CIMdata – moderator for Sustainability & Energy) and Patrice Quencez (CIMPA – moderator for the Circular Economy) joined the PGGA last year and you will experience their inputs this year.

Conclusion

As you can see from this long post, there is so much to learn. The topics described are all relevant, and each topic requires education and experience (successes & failures) combined with an understanding of the technology concepts. Make sure you consider all of them, as focusing on a single topic will not make you move forward faster – they are all related. Please share your experiences this year – Happy New Year of Learning.

Happy New Year to all of you, and may this year be a year of progress in understanding and addressing the challenges ahead of us.

To help us focus, I selected three major domains I will explore further this year. These domains are connected – of course – as nothing is isolated in a world of System Thinking. Also, I wrote about these domains in the past, as, as usual, nothing happens out of the blue.

Meanwhile, there are a lot of discussions related to Artificial Intelligence (AI), in particular ChatGPT (OpenAI). But can AI provide the answers? I believe not, as AI is mainly about explicit knowledge, the knowledge you can define by (learning) algorithms.

Expert knowledge, often called Tacit knowledge, is the knowledge of the expert, combining information from different domains into innovative solutions.

I started my company, TacIT, in 1999 because I thought (and still think) that Tacit knowledge is the holy grail for companies.

Let’s see with OpenAI how far we get ……

Digitization of the PLM domain

The PLM domain is suffering from its legacy data (documents), legacy processes (linear – mechanical focus) and legacy people (siloed). The statement is a generalization.

More details can be found in my blog series: The road to model-based and connected PLM.

So why should companies move to a model-based and connected approach for their PLM infrastructure?

There are several reasons why companies may want to move to a model-based and connected approach for their Product Lifecycle Management (PLM) infrastructure:

- Increased efficiency: A model-based approach allows for creating a digital twin of the product, which can be used to simulate and test various design scenarios, reducing the need for physical prototypes and testing. This can lead to faster and more efficient product development.

- Improved collaboration: A connected PLM infrastructure allows for better collaboration between different teams and departments, as all product-related information is stored in a central location and can be accessed by authorized personnel. This can improve communication and decision-making within the organization.

- Enhanced visibility: A model-based PLM system provides a single source of truth for all product-related data, giving management a clear and comprehensive view of the product development process. This can help identify bottlenecks and areas for improvement.

- Reduced risk: By keeping all product-related information in a centralized location, the risk of data loss or inconsistencies is reduced. This can help ensure that the product is developed in accordance with regulatory requirements and company standards.

- Increased competitiveness: A model-based and connected PLM infrastructure can help companies bring new products to market faster and with fewer errors, giving them a competitive advantage in their industry.

The text in italics was created by ChatGPT. After three learning cycles, this was the best answer I got. What we are missing in this answer is the innovative and transformative part that modern PLM can bring. Where is the concept of different ways of working, and new business models, both drivers for digitalization in many businesses?

Expert knowledge related to Federated PLM (or Killing the PLM Monolith) is something you will not find through AI. This is, for me, the most interesting part to explore.

We see the need but lack a common understanding of the HOW.

Algorithms will not innovate; for that, you need Tacit intelligence & Curiosity instead of Artificial Intelligence. More exploration of Federated PLM this year.

PLM and Sustainability

Last year, as part of the PLM Green Global Alliance, we spoke with six different PLM solution providers to understand their sustainability goals, targets, and planned support for Sustainability. All of them confirmed that Sustainability had become an important issue for their customers in 2022. Sustainability is on everyone’s agenda.

Why is PLM important for Sustainability?

PLM is important for Sustainability because a PLM helps organizations manage the entire lifecycle of a product, from its conception and design to its manufacture, distribution, use, and disposal. PLM can be important for Sustainability because it can help organizations make more informed decisions about the environmental impacts of their products and take steps to minimize those impacts throughout the product’s lifecycle.

For example, using PLM, an organization can consider the environmental impacts of the materials that are used in a product, the energy consumption of the manufacturing process, the product’s end-of-life disposal, and other factors that may affect its overall Sustainability. By considering these factors early in the design process, organizations can make more sustainable choices that reduce the environmental impact of their products over their lifecycle.

In addition, PLM can help organizations track and measure the Sustainability of their products over time, allowing them to continuously improve and optimize their products for Sustainability. This can be particularly important for organizations that are looking to meet regulatory requirements or consumer demand for more sustainable products.

Again, not a wrong answer, but there is no mention of supporting the concepts of the circular economy, the potential of using digital twins at the various lifecycle stages of the products to perform trade-off studies in the virtual world, or to analyze the product behavior from the physical world in the virtual world for optimization.

This is (again), for me, the most interesting part to explore.

We see the need but lack the sense of urgency on the NOW.

More on Sustainability this year.

PLM Education at all levels

Historically, PLM was framed as an “engineering thing” or a system needed for R&D/Engineering. Modern PLM is much broader and, unfortunately, harder to grasp. I support the CIMdata definition:

CIMdata defines PLM as:

- A strategic business approach that applies a consistent set of business solutions that support the collaborative creation, management, dissemination, and use of product definition information

- Supporting the extended enterprise (customers, design and supply partners, etc.)

- Spanning from concept to end of life of a product or plant

- Integrating people, processes, business systems, and information

And even here, I would say this definition is challenging as the scope is extensive and could touch a lot of activities inside an enterprise. As I wrote last year in my series of posts related to PLM and Education, there is:

- Strategy education (what are the trends/potential of digital transformation in the business domain related to the company’s products)

- Methodology/Academic Education (independent from tools, what are the best practices, where they reside, potential lessons learned and pitfalls to avoid)

- Organisational Change Management (the Education and support needed to enable a business transformation – modern PLM is a transformation enabler)

Asking ChatGPT for its opinion, we learn the following:

Product Lifecycle Management (PLM) education refers to training and development programs that focus on teaching individuals how to effectively manage the lifecycle of a product within an organization. This can include a wide range of topics, such as product design, development, and engineering; supply chain management; quality control and assurance; and product retirement and disposal.

Some specific types of PLM education that a company may need to provide to its employees include:

- Introduction to PLM: This type of training is designed to provide a general overview of PLM concepts and practices and may be suitable for employees who are new to the field or who have limited experience with PLM.

- PLM software training: Many companies use specialized PLM software to manage their product lifecycle processes. Employees who will be using this software will need training on how to use it effectively.

- Industry-specific PLM training: Depending on the industry in which a company operates, there may be specific PLM practices and regulations that employees need to be familiar with. For example, a company in the aerospace industry may need to provide training on PLM practices that are specific to the aerospace industry.

- Leadership and management training: For employees who are responsible for leading and managing PLM projects or teams, additional training in leadership and project management may be necessary.

Overall, the types of PLM education that a company needs will depend on the specific needs and goals of the organization, as well as the roles and responsibilities of its employees.

In general, we see from the answer that strategy and organizational change management beyond the tool are not considered.

This is precisely the area where a PLM Expert can help.

We see the need for Education, but we lack the willingness to invest in it.

Conclusion

It was an exciting exercise to combine my blogging thoughts with the answers from OpenAI. I am impressed by the given answers, knowing that the topics discussed about PLM are not obvious. On the other hand, I am not worried that AI will take over the job of the PLM consultant. As I mentioned before, the difference between Explicit Knowledge and Tacit Knowledge is clear, and business transformations will largely depend on the usage of Tacit knowledge.

I am curious about your experiences and will follow the topics mentioned in this post and write about them with great interest.
