This blog post is written especially for our PLM Green Global Alliance LinkedIn members: a message from a “boomer” to the next generation of PLM enthusiasts.

If you belong to that next generation, please read until the end and share your thoughts.

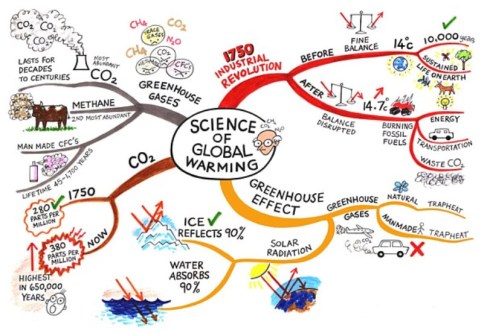

With last week’s announcement, the US government no longer treats greenhouse gas emissions as a threat to the planet or climate.

We see a push to remove regulations that limit companies from continuing or expanding business without considering the broader consequences for other countries and future generations.

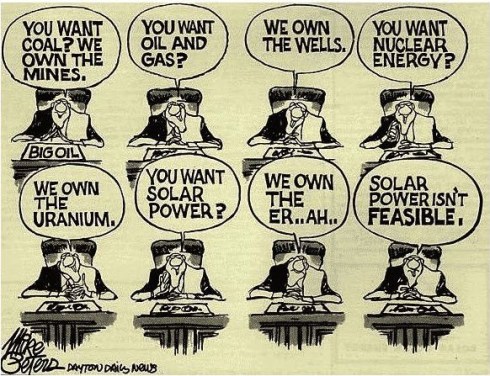

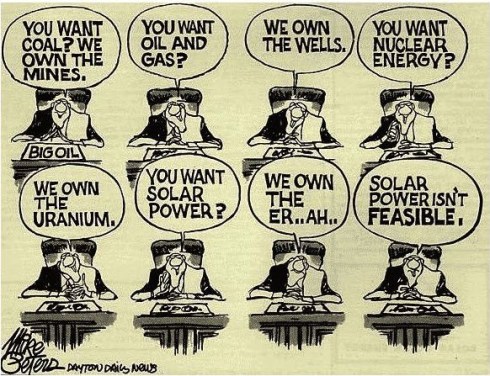

It feels like a short-term, greedy decision, largely influenced by those who benefit from fossil-carbon economies. Decisions like this make the energy transition harder, because the path of least resistance is always the easiest to follow.

Transitions are never simple. But when science is ignored, data is removed, and opinions replace facts, we are no longer supporting a transition — we are actively working against it.

My Story

When I started working in the PLM domain in 1999, climate change already existed in the background of society. The 1972 Limits to Growth report by the Club of Rome had created waves long before, encouraging some people to rethink business and lifestyle choices.

For me, however, it stayed outside my daily focus. I was at the beginning of my career, excited about the new challenges.

It is also important to note that connecting to the internet with a 28k modem was the standard; it was a world without social media constantly reminding us of global issues.

I enjoyed my role as the “Flying Dutchman,” travelling around the world to support PLM implementations and discussions. Flying was simply part of the job. Real communication meant being in the same room; early phone and video calls were expensive, awkward, and often ineffective. PLM was — and still is — a human business.

Back then, the effects of carbon emissions and global warming felt distant, almost abstract. Only around 2014 did the conversation become more mainstream for me, helped by social media, before algorithms and bots began driving polarization.

In 2015, while writing about PLM and global warming, I realized something that still resonates today: even when we understand change is needed, we often stick to familiar habits, because investments in the future rarely deliver immediate ROI for ourselves or our shareholders.

The PLM Green Global Alliance

When Rich McFall approached me in 2019 with the idea of creating an alliance where people and companies could share ideas and experiences around sustainability in the PLM domain, I was immediately interested — for two reasons.

- First, there was a certain sense of responsibility related to my past activities as the Flying Dutchman. Not guilt — life is about learning and gaining insight — but awareness that I needed to change, even if the past could not be changed.

- Second, and more importantly, the PLM Green Global Alliance offered a way to contribute. It gave me a reason to act — for personal peace of mind and for future generations. Not only for my children or grandchildren, but for all those who will share this planet with them.

In the first years of the PGGA, we saw strong engagement from younger professionals. Over time, however, we noticed that career priorities often came first — which is understandable.

Like me at the start of my career, many focus first on building their future. Career and sustainability can coexist, but investing extra time in long-term change is not easy when daily responsibilities already demand so much.

Your Chance to Work on the Future

The real challenge lies with those willing to go the extra mile — staying focused on today’s business while also investing energy in the long-term future.

At the same time, I understand that not everyone is in a position to speak out or dedicate time to sustainability initiatives. Circumstances differ. For many, current responsibilities leave little space for additional commitments.

Still, for those willing to join us, we have two requests to better understand your expectations.

Two weeks ago, I connected with our 40 newest members of the PLM Green Global Alliance. We are now close to 1,600 members — up from around 1,500 in September 2025, as mentioned in Working on the Long Term.

That post was a gentle call to action. Seeing our PGGA membership continue to grow is encouraging — and naturally raises a question:

1. What motivates people to join the PGGA LinkedIn group?

So far, only a small number of the recent new members have completed a survey that was especially sent to them to explore changing priorities. Due to the low response, we extended the invitation to all members. We are curious about your expectations — and quietly hopeful about your involvement.

If you haven’t filled in the survey yet, please click here and share your feedback. The survey is anonymous unless you choose to leave your details for follow-up. We will share the results in approximately 2 weeks from now.

2. Design for Sustainability – your contribution?

Last year, Erik Rieger and Matthew Sullivan launched a new workgroup within the PLM Green Global Alliance focused on Design for Sustainability. While the initial energy was strong, changes in personal priorities meant the team could not continue at the pace they hoped. Since many new members have joined since last May, we decided to relaunch the initiative.

If you are interested in contributing to the revival of Design for Sustainability, please take five minutes to complete the short survey. Your input will help shape the direction of the DfS working group and frame future discussions.

Note: If you are worried about clicking on the links for the survey, you can always contact us directly (in private) to share your ambition.

Conclusion

The outside world often pushes us to focus only on daily business. In some places, there is even active pressure to avoid long-term sustainability investments. Remember that pressure often comes from those invested in keeping the current system unchanged.

If you care about the future — your generation and those that follow — stay engaged. Small actions by millions of people can create meaningful change.

We look forward to your input and participation.

— says the boomer who still cares 😉

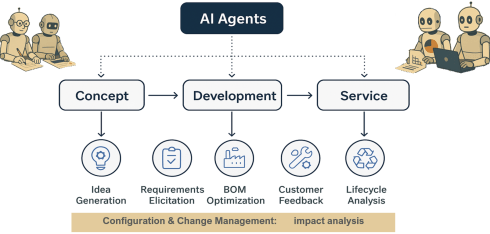

Over the last month, it seems that people in my ecosystem are incredibly focused on “THE BOM” combined with AI agents working around the clock. One of the reasons I have this impression, of course, is my irregular participation in the Future of PLM panel discussions, moderated and organized by Michael Finocchiaro.

Yesterday, the continuously growing Future of PLM team held another interesting discussion: “A BOMversation”. You can watch the replay and the comments during the debate here: To BOM or Not to BOM: A BOMversation

On the other hand, there is Prof. Jorg Fischer with his provocative post: 📌 2026 – The year we have to unlearn BOMs! –

Sounds like a dramatic opening, but when you read his post and my post below, you will learn that there is a lot of (conceptual) alignment.

Then there are PLM vendors who announce “next-generation BOM management,” startup companies that promise AI-powered configuration engines, and consultants who explain how the BOM has become the foundation of digital transformation. (I do not think so)

And as Anup Karumanchi states, BOMs can be the reason production keeps breaking.

I must confess that I also have a strong opinion about the various BOMs and their application in multiple industries.

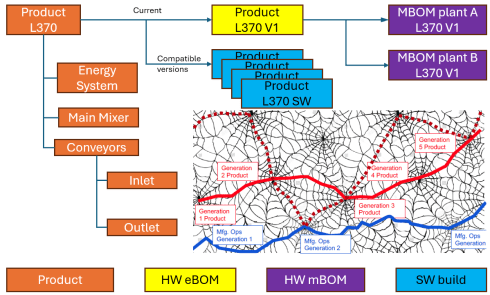

My 2019 blog post, The importance of EBOM and MBOM, is in the top 3 of my most-read posts. BOM discussions, single BOM, multiview BOM, etc., always attract an audience.

I continuously observe a big challenge at the companies I am working with – the difference between theory and reality.

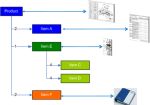

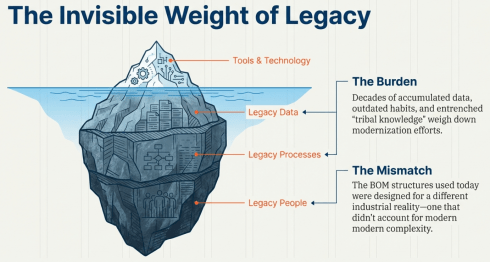

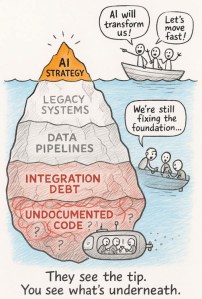

If the BOM is so important, why do so many organizations still struggle to make it work across engineering, manufacturing, supply chain, and service?

The answer is two-fold: LEGACY DATA, PROCESSES and PEOPLE, and the understanding that the BOM we are using today was designed for a different industrial reality.

Let me share my experiences, which take longer to digest than an entertaining webinar.

Some BOM history and theory

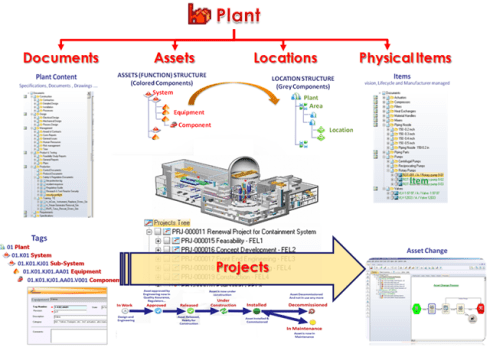

Historically, the BOM was a production artifact. It described what was needed to build something and in what quantities. When PLM systems emerged, the 3D CAD model structure became the authoritative structure representing product definition, driven mainly by the PLM vendors with dominant 3D CAD tools in their portfolio.

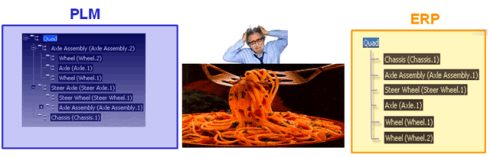

As the various disciplines in the company were not integrated at all, the BOM structure derived from the 3D CAD model was often a simplified way to prepare a BOM for ERP. The transfer to ERP was done manually (retyping the structure in ERP), semi-automated (using Excel export and import with some manipulation), or more advanced through an “intelligent” interface.

There are still a lot of companies working this way, probably because, due to the siloed organization, there is no one owning or driving a smooth flow of information in the company.

The need for an eBOM and mBOM

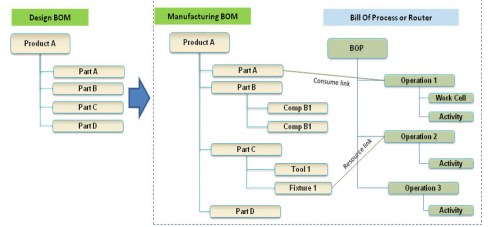

When companies become more mature and start to implement a PLM system, they will discover, depending on their core business processes, that it makes sense to split the BOM concept into a specification structure, the eBOM, and a manufacturing structure for ERP, the mBOM.

The advantage of this split is that the engineering specification can remain stable over time, as it provides a functional view of the product with its functional assemblies and part definitions.

This definition needs to be resolved and adapted for a specific plant with its local suppliers and resources. PLM systems often support the transformation from the eBOM to a proposed mBOM and, if done more completely, to a Bill of Process as well.

The advantages of a split in an eBOM and an mBOM are:

- A reduced number of engineering changes when supplier parts change

- Centralized control of all product IP related to its specifications (eBOM/3DCAD)

- Efficient support for modularity, as each module has its own lifecycle and can be used in multiple products.
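The split can be illustrated with a small sketch. It is only an illustration under simple assumptions, not how any particular PLM system resolves an mBOM; the part numbers, plant names, and the `resolve_mbom` helper are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EbomLine:
    """One eBOM line: a specification item, not a supplier part."""
    spec_part: str
    quantity: int


# Hypothetical eBOM for one module: stable and supplier-independent.
EBOM = [
    EbomLine("SPEC-MOTOR-24V", 1),
    EbomLine("SPEC-BOLT-M8", 4),
]

# Hypothetical plant-specific sourcing: which supplier part fulfils each spec.
SOURCING = {
    "plant_nl": {"SPEC-MOTOR-24V": "SUP-A-MOTOR-001", "SPEC-BOLT-M8": "SUP-B-BOLT-M8"},
    "plant_us": {"SPEC-MOTOR-24V": "SUP-C-MOTOR-778", "SPEC-BOLT-M8": "SUP-B-BOLT-M8"},
}


def resolve_mbom(ebom, plant):
    """Propose an mBOM for a plant by resolving each spec item to a local supplier part."""
    sourcing = SOURCING[plant]
    return [(sourcing[line.spec_part], line.quantity) for line in ebom]


mbom_nl = resolve_mbom(EBOM, "plant_nl")
mbom_us = resolve_mbom(EBOM, "plant_us")
```

In this sketch, a supplier change at one plant only touches the sourcing table; the eBOM stays unchanged, which is the first advantage in the list above.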

Implementing an eBOM/mBOM concept

The theory, the methodology and implementation are clear, and you can ask ChatGPT and others to support you in this step.

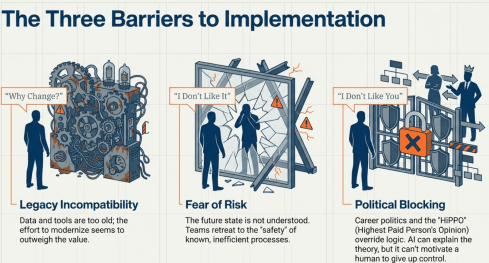

However, where ChatGPT or service providers often fail is in motivating a company to move to this next step, as either their legacy data and tools are incompatible (WHY CHANGE?), the future is not understood and feels risky (I DON’T LIKE IT), or for political career reasons a change is blocked (I DON’T LIKE YOU or the HIPPO says differently).

Extending to the sBOM

Companies that sell products in large volumes, like cars or consumer products, have discovered and organized a well-established service business, as the margins are high here.

Companies that sell almost unique solutions for customers, batch-size 1 or small series, are also discovering the service business, or are asked by their customers to come up with service plans and related pricing.

The challenge for these companies is that there is a lot of guesswork to be done, as the service business was not planned in their legacy business. A quick and dirty solution was to use the mBOM in ERP as the source of information. However, the ERP system usually does not provide any context information, such as where the part is located and what potential other parts need to be replaced—a challenging job for service engineers.

A less quick and still a little dirty solution was to create a new structure in the PLM system, which provided the service kits and service parts for the defined product, preferably based on the eBOM, if an eBOM exists.
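To make the missing context concrete, here is a minimal sketch of a service structure that records, per item, where it is located and which other parts should be replaced with it. The item names, the `replace_with` attribute, and the `service_kit` helper are hypothetical and only serve as an illustration.

```python
# Hypothetical eBOM items with the context a service engineer needs.
EBOM_ITEMS = {
    "SPEC-SEAL-12": {"location": "pump housing, front", "replace_with": ["SPEC-ORING-3"]},
    "SPEC-ORING-3": {"location": "pump housing, front", "replace_with": []},
    "SPEC-MOTOR-24V": {"location": "drive unit", "replace_with": ["SPEC-SEAL-12"]},
}


def service_kit(part):
    """Collect the part plus everything that should be replaced along with it."""
    kit, todo = [], [part]
    while todo:
        current = todo.pop()
        if current not in kit:
            kit.append(current)
            todo.extend(EBOM_ITEMS[current]["replace_with"])
    return kit


motor_kit = service_kit("SPEC-MOTOR-24V")
```

A flat mBOM in ERP would list the same parts, but without the location and replacement relations that the kit is derived from.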

The ideal solution would be that service engineers are working in parallel and in the same environment as the other engineers, but this requires an organisational change.

The organization often becomes the blocker.

As long as the PLM system is considered a tool for engineering, advanced extensions to other disciplines will be hard to achieve.

A linear organization aligned with a traditional release process will have difficulties changing to work with a common PLM backbone that satisfies engineering, manufacturing engineering and service engineering at the same time.

Now, the term PLM becomes Product Lifecycle MANAGEMENT and this brings us to the core issue: the BOM is too often reduced to a parts list without understanding the broader context of the product, needed for service or operation support where artifacts can be hardware and software in a system.

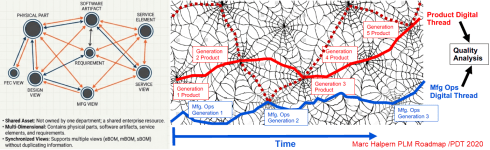

What is really needed is an extended data model with at least a logical product structure that can represent multiple views of the same product: engineering intent, manufacturing reality, service configuration, software composition, and operational context. These views should not be separate silos connected by fragile integrations. They should be derived from a shared, consistent digital infrastructure. This is what I extract from Prof. Jorg Fischer’s post, albeit that he comes with a strong SAP background and a focus on CTO+.

Most companies are still organized around linear processes with a focus on mechanical products: engineering hands over to manufacturing, manufacturing hands over to service, and feedback loops are weak or nonexistent.

Changing the BOM without changing the organization is like repainting a house with structural cracks. It may look better, but the underlying issues remain.

Listen to this snippet from the BOMversation where Patrick Hilberg touches on this point too.

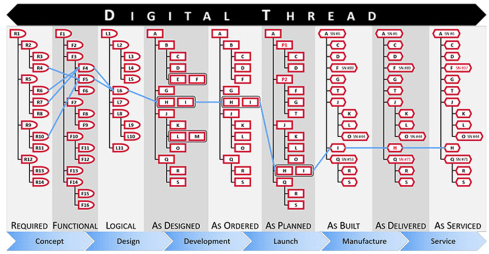

With this approach, the digital thread becomes more than a buzzword. A digital thread must provide digital continuity, which means that changes propagate across domains, that data is contextualized, and that lifecycle feedback flows back into product development. Without this continuity, digital twin concepts remain isolated models rather than living representations of real products.

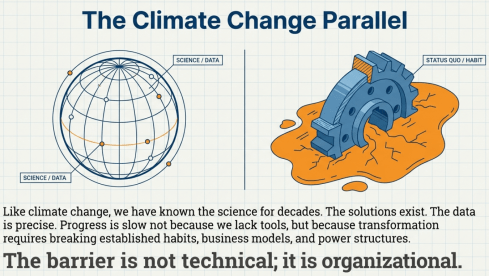

However, the most significant barrier is not technical. It is organizational. There is an interesting parallel with how we address climate change and are willing to take action against it.

For decades, we have known what needs to change. The science is precise. The solutions exist. Yet progress is slow because transformation requires breaking established habits, business models, and power structures.

Digital transformation in product lifecycle management follows a similar pattern. Everyone agrees that data silos are a problem. Everyone wants “end-to-end visibility.” Yet few organizations are willing to rethink ownership of product data and processes fundamentally.

So what does the future BOM look like?

It is not a single hierarchical tree. It is part of a maze; some will say it is a graph. It is a connected network of product-related information: physical components, software artifacts, service elements, configurations, requirements, and operational data. It supports multiple synchronized views without duplicating information. It evolves as products change when operated in the field.
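A minimal sketch of this idea, assuming a tiny in-memory graph rather than a real PLM data model: every item is stored once, relations are typed, and each view is derived by following only the relation types that matter for it. The item names, relation names, and the `view` helper are hypothetical.

```python
# Each item is stored once; names and kinds are hypothetical.
ITEMS = {
    "pump": {"kind": "product"},
    "motor": {"kind": "hardware"},
    "controller": {"kind": "hardware"},
    "firmware": {"kind": "software"},
    "seal-kit": {"kind": "service"},
}

# Typed relations between items: (source, relation, target).
EDGES = [
    ("pump", "has_part", "motor"),
    ("pump", "has_part", "controller"),
    ("controller", "runs", "firmware"),
    ("motor", "serviced_by", "seal-kit"),
]


def view(root, relations):
    """Return the items reachable from root when following only the given relation types."""
    found, todo = [], [root]
    while todo:
        current = todo.pop(0)
        for source, relation, target in EDGES:
            if source == current and relation in relations and target not in found:
                found.append(target)
                todo.append(target)
    return found


# Three views derived from the same data, without copying any item.
engineering_view = view("pump", {"has_part"})
service_view = view("pump", {"has_part", "serviced_by"})
software_view = [
    item for item in view("pump", {"has_part", "runs"})
    if ITEMS[item]["kind"] == "software"
]
```

The point of the sketch is that a change to one item, for example a new firmware release, is made in one place and shows up in every view that includes it.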

Most importantly, it is not owned by one department. It becomes a shared enterprise asset – with shared accountability for various datasets. But we should not abandon the BOM concept. On the contrary, the BOM remains essential and managing BOMs consistently is already a challenge.

But its role must shift from being a collection of static structures to becoming part of the digital product definition infrastructure, extended by a logical product structure and beyond – the MBSE question.

The BOM is not dead. But the traditional BOM mindset is no longer sufficient. The question is not whether the BOM will change. It is already changing. The real question is whether organizations are ready to change with it.

Conclusion

Inspired by various BOMversations and AI graphical support, I tried to reflect the business reality, observed for over 10++ years. Technology and the Academic truth do not create breakthroughs in organisations due to the big legacy and fear of failure. Will AI fix this gap, as many software vendors believe, or do we need a new generation with no legacy PLM experience, as some others suggest? Your thoughts?

p.s. My trick to join the BOMversation without being thrown from the balcony 🙃

This post is for the 1,564 members of the PLM Green Global Alliance LinkedIn group—and for those wondering why they should join.

Since October 2019, when Rich McFall launched the group alongside the PLM Green Global Alliance website, we’ve been building a shared space to consolidate insights, discussions, and lessons learned.

Today, the core team—Jos Voskuil, Klaus Brettschneider, Mark Reisig, Evgeniya Burimskaya, and Erik Rieger—continues to contribute deep expertise across sustainability, LCA, energy, circular economy, and design.

In November 2025, we marked our fifth anniversary with a webinar reflecting on past learnings and looking ahead to 2026. The conversation is still worth revisiting.

Now, as 2026 unfolds, the mood may feel mixed. Progress is never automatic. There are ups, downs, and work to be done.

Rich McFall stepping back!

In early December, it became clear that Rich would no longer be able to support the PGGA for personal reasons. We respect his decision and thank Rich for the energy and private money he has put into setting up the website, pushing the moderators to remain active and publishing the newsletter every month. From the frequency of the newsletter over the last year, you might have noticed Rich struggled to be active.

Rich, we all wish you a happy and healthy continuation of your life and hope we will see you back once in a while as a contributor.

Meanwhile, Klaus Brettschneider is working on taking over and upgrading the PGGA website to bring our mission to the next level.

PGGA – the challenge is in the name

When you launch a product or start an alliance, the name can be excellent at the start, but later it might work against you. I believe we are facing this situation too with our PGGA (PLM Green Global Alliance).

Let’s analyze the name.

The P stands for PLM.

Whether a business delivers products or services, most of the environmental impact is locked in during the design phase, often quoted at close to 80%. That makes design a strategic responsibility, and not only for engineering.

Any company with a long-term vision—call it sustainable in the broad sense—must understand the environmental footprint of its offerings across manufacturing, operation, and repurposing.

Today’s “we don’t care” wave may be loud and egoistic, but it lacks durability. Forward-looking countries and companies already know this.

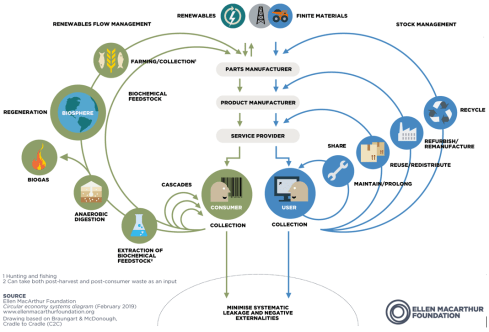

Moving toward a circular, fossil-free economy demands innovation. In that future, waste and recycling are costly options, pushed out by regulation and resource scarcity alike. Better to change your product or service delivery to align with a circular economy where possible. You can read more in my 2024 post: The Product Service System and a Circular Economy

That changing business models is only possible by changing your PLM approach is often overlooked at the board level. PLM as a strategy shapes outcomes and ultimately drives business success.

Therefore, the P remains our primary focus area.

The G for Green

Green has gradually acquired a negative connotation, weakened by early marketing hype and repeated greenwashing exposures. For many, green has lost its attractiveness.

After the 2015 United Nations Climate Change Conference, countries began exploring how to contribute to a healthier planet, increasingly at risk from rising carbon emissions caused by fossil fuels. This momentum led Europe, in 2020, to launch the Green Deal.

The spirit was right, but as with every new direction, failures followed. Lessons were learned, and perhaps too much academic work was done without involving businesses or the populations affected.

It resembles old, failing PLM projects, where management launches a big-bang implementation without grasping the friction and complexity of a fundamental transformation. Behavioral change, as always, is hard, as the iconic Share PLM image illustrates – click on the image for the details.

Meanwhile, threatened industries stirred resistance, science came under pressure, and a brown movement began pushing the green one aside.

The good news is that sustainability is still alive, progressing quietly. Long-term sustainability means reducing dependence on fossil fuels, while a new battle emerges over scarce materials for the energy transition. Those who understand this are already acting—now calling it future risk avoidance.

Green has gone, replaced by proactive business sustainability.

The G for Global

When reading or listening to the news, it seems that globalization is over and imperialism is back with a primary focus on economic control. For some countries, this means even control over people’s information and thoughts, by restricting access to information, deleting scientific data and meanwhile dividing humanity into good and bad people.

And while these countries might control 25% of the world’s population, the majority of people still have the opportunity, within their reach, to fight climate change and to work on a more sustainable economy and world.

As a global community, we have to fight to ensure that what we do is based on science; sharing facts and findings is crucial. By coincidence, while writing this post, I came across an article by my fellow Dutchman Robert Dijkgraaf, who is a leader in research and policy in many roles, including as Minister of Education, Culture, and Science of the Netherlands (2022-2024) and director of the Institute for Advanced Study in Princeton (2012-2022).

His article: The fight to keep science global fits the mindset of our PGGA – take the time to read it and reflect on it.

Global remains our goal, and given the global population, we are looking for more voices from Asia and Africa.

The A for Alliance

With more than 1,500 members in our LinkedIn group, we are curious why you joined the PLM Green Global Alliance. Assuming your positive intent to contribute to a sustainable future, where can we help you best?

There are the contributions from the leading PLM software vendors (PTC, Dassault Systèmes, SAP, Siemens, Aras, Autodesk), solution providers (Sustaira, Makersite, aPriori, Direktin) and service partners (CIMPA, ecoPLM, CIMdata) that focus on providing capabilities, methodologies and consultancy to support the transition to a more sustainable economy and bearable climate.

Let us know your questions, struggles, and intentions, either through personalized or anonymized interactions on LinkedIn – together we are an alliance.

Conclusion

Although the political climate is currently in the same condition as the planetary climate, there is still work to be done. Being an observer is the worst option, and changing the world as an individual is also a mission impossible.

However, with our niche group, The PLM Green Global Alliance, we can be part of the generation that actively pursues a sustainable future for ourselves and the next generations.

And as you can learn from this podcast, you are not alone.

Last week, I wrote about the first day of the crowded PLM Roadmap/PDT Europe conference.

You can still read my post here in case you missed it: A very long week after PLM Roadmap / PDT Europe 2025

My conclusion from that post was that day 1 was a challenging day if you are a newbie in the domain of PLM and data-driven practices. We discussed and learned about relevant standards that support a digital enterprise, as well as the need for ontologies and semantic models to give data meaning and serve as a foundation for potential AI tools and use cases.

This post will focus on the other aspects of product lifecycle management – the evolving methodologies and the human side.

Note: I try to avoid the abbreviation PLM, as many of us in the field associate PLM with a system. For me, the system is more of an IT solution, whereas the strategy and practices are best named product lifecycle management.

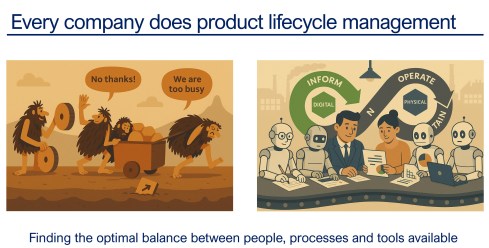

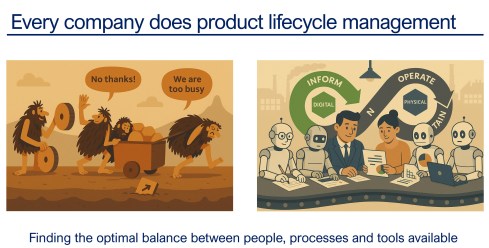

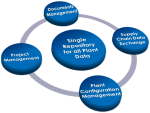

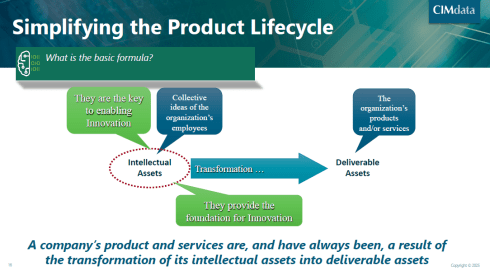

And as a reminder, I used the image above in other conversations. Every company does product lifecycle management; only the number of people, their processes, or their tools might differ. As Peter Bilello mentioned in his opening speech, the products are why the company exists.

Unlocking Efficiency with Model-Based Definition

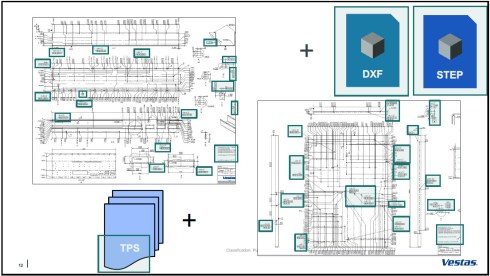

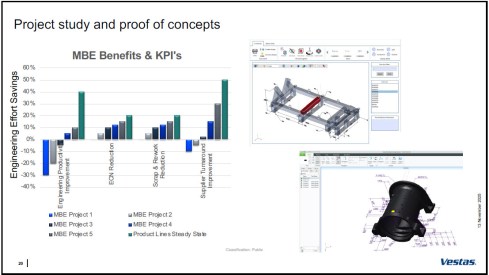

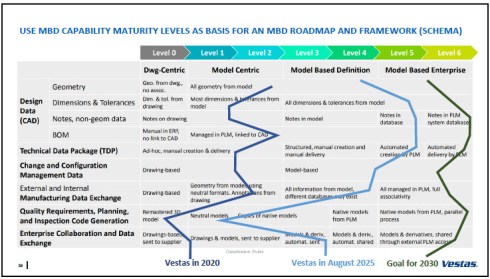

Day 2 started energetically with Dennys Gomes’ keynote, which introduced model-based definition (MBD) at Vestas, a world-leading OEM for wind turbines.

Personally, I consider MBD as one of the stepping stones to learning and mastering a model-based enterprise, although do not be confused by the term “model”. In MBD, we use the 3D CAD model as the source to manage and support a data-driven connection among engineering, manufacturing, and suppliers. The business benefits are clear, as reported by companies that follow this approach.

However, it also involves changes in technology, methodology, skills, and even contractual relations.

Dennys started by sharing the analysis they conducted on the amount of information in current manufacturing drawings. The image below shows that only the information marked in green was used, so the time and effort spent creating the drawings were wasted.

It was an opportunity to explore model-based definition, and the team ran several pilots to learn how to handle MBD, improve their skills, methodologies, and tool usage. As mentioned before, it is a profound change to move from coordinated to connected ways of working; it does not happen by simply installing a new tool.

The image above shows the learning phases and the ultimate benefits accomplished. Besides moving to a model-based definition of the information, Dennys mentioned they used the opportunity to simplify and automate the generation of the information.

Vestas is on a clear path, and it is interesting to see their ambition in the MBD roadmap below.

An inspirational story, hopefully motivating other companies to make this first step to a model-based enterprise. Perhaps difficult at the beginning from the people’s perspective, but as a business, it is a profitable and required direction.

Bridging The Gap Between IT and Business

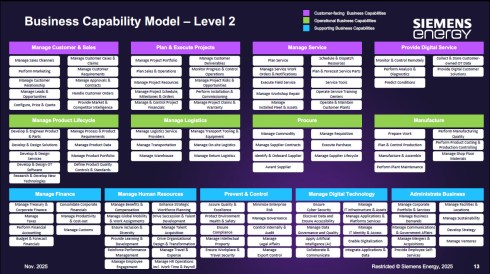

It was a great pleasure to listen again to Peter Vind from Siemens Energy, who first explained to the audience how to position the role of an enterprise architect in a company compared to society. He mentioned he has to deal with the unicorns at the C-level, who, like politicians in a city, sometimes have the most “innovative” ideas – can they be realized?

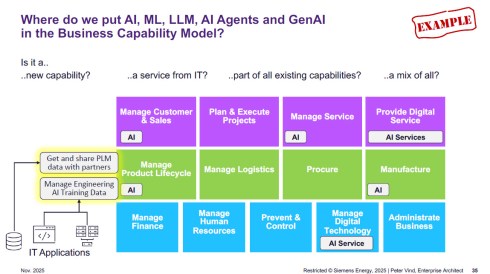

To answer these questions, Peter is referring to the Business Capability Model (BCM) he uses as an Enterprise Architect.

Business Capabilities define ‘what’ a company needs to do to execute its strategy, are structured into logical clusters, and should be the foundation for the enterprise, on which both IT and business can come to a common approach.

The detailed image above is worth studying if you are interested in the levels and the mappings of the capabilities. The BCM approach was beneficial when the company became disconnected from Siemens AG, enabling it to rationalize its application portfolio.
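
To make the idea of rationalizing an application portfolio against business capabilities a bit more tangible, here is a minimal sketch in Python. It is not Peter's model or any Siemens Energy tooling; the capability names, the application names, and both helper functions are hypothetical and only illustrate the principle that a capability served by several applications is a candidate for a closer look.

```python
from collections import defaultdict

# Hypothetical capability-to-application mapping; all names are illustrative.
capability_map = {
    "Manage Product Requirements": ["ReqTool A", "ReqTool B"],
    "Manage Engineering Change": ["PLM System"],
    "Plan Service Operations": ["Service App", "Legacy Planner", "ERP"],
}


def rationalization_candidates(cap_map):
    """Return the capabilities that are served by more than one application."""
    return {cap: apps for cap, apps in cap_map.items() if len(apps) > 1}


def capabilities_per_application(cap_map):
    """Invert the mapping: which capabilities does each application support?"""
    inverted = defaultdict(list)
    for cap, apps in cap_map.items():
        for app in apps:
            inverted[app].append(cap)
    return dict(inverted)


candidates = rationalization_candidates(capability_map)
by_app = capabilities_per_application(capability_map)

for capability, apps in candidates.items():
    print(f"{capability}: {len(apps)} applications -> {', '.join(apps)}")
```

The point of the sketch is that the capability, the "what", is the stable key. Applications come and go, and the same lookup can later be used to ask where an AI use case would actually land.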

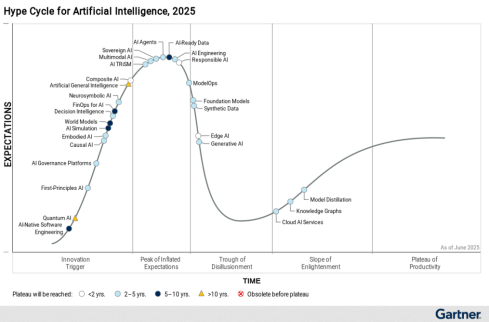

Next, Peter zoomed in on some of the examples of how a BCM and structured application portfolio management can help to rationalize the AI hype/demand – where is it applicable, where does AI have impact – and as he illustrated, it is not that simple. With the BCM, you have a base for further analysis.

Other future-relevant topics he shared included how to address the introduction of the digital product passport and how the BCM methodology supports the shift in business models toward a modern “Power-as-a-Service” model.

He concludes that having a Business Capability Model gives you a stable foundation for managing your enterprise architecture now and into the future. The BCM complements other methodologies that connect business strategy to (IT) execution. See also my 2024 post: Don’t use the P** word! – 5 lessons learned.

Holistic PLM in Action.

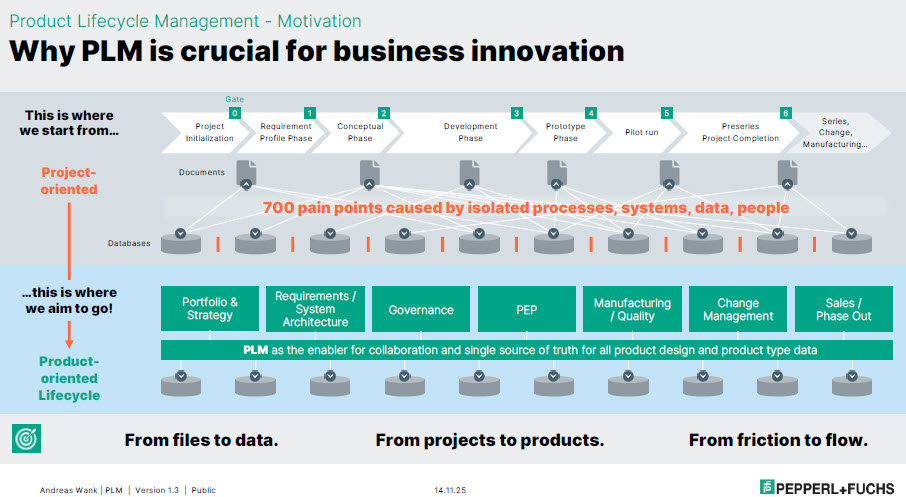

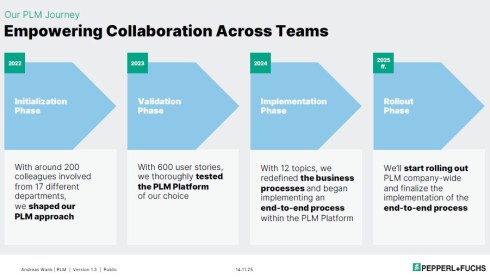

For companies struggling with their digital transformation in the PLM domain, Andreas Wank, Head of Smart Innovation at Pepperl+Fuchs SE, shared his journey so far. All the essential aspects of such a transformation were mentioned. Pepperl+Fuchs has a portfolio of approximately 15,000 products that combine hardware and software.

It started with the WHY. With such a massive portfolio, business innovation is under pressure without a PLM infrastructure. Too many changes, fragmented data, no single source of truth, and siloed ways of working lead to much rework, errors, and iterations that keep the company busy while missing the global value drivers.

Next, the journey!

The above image is an excellent way to communicate the why, what, and how to a broader audience. All the main messages are in the image, which helps people align with them.

The first phase of the project, creating digital continuity, is also an excellent example of digital transformation in traditional document-driven enterprises. From files to data aligns with the From Coordinated To Connected theme.

Next, the focus was to describe these new ways of working with all stakeholders involved before starting the selection and implementation of PLM tools. This approach is so crucial, as one of my big lessons learned from the past is: “Never start a PLM implementation in R&D.”

If you start in R&D, the priority shifts away from the easy flow of data between all stakeholders; it becomes an R&D System that others will have to live with.

You never get a second, first impression!

Pepperl+Fuchs spends a long time validating its PLM selection – something you might only see in privately owned companies that are not driven by shareholder demands, but take the time to prepare and understand their next move.

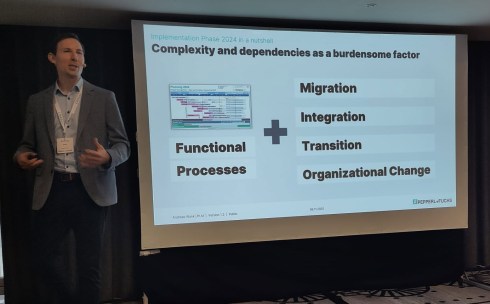

As Andreas also explained, it is not only about the functional processes. As the image shows, migration (often the elephant in the room) and integration with the other enterprise systems also need to be considered. And all of this is combined with managing the transition and the necessary organizational change.

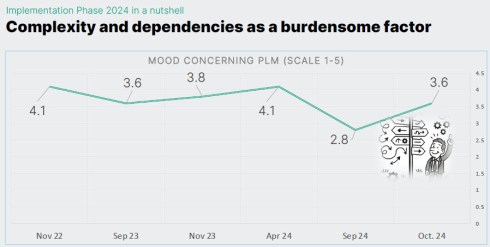

Andreas shared some best practices illustrating the focus on the transition and human aspects. They have implemented a regular survey to measure the PLM mood in the company. And when the mood dropped sharply in Sept 24, from 4.1 to 2.8 on a scale of 1 to 5, it was time to act.

They used one week at a separate location, where 30 of his colleagues worked on the reported issues in one room, leading to 70 decisions that week. And the result was measurable, as shown in the image below.

Andreas’s story was such a perfect fit for the discussions we have in the Share PLM podcast series that we asked him to tell it in more detail, also for those who have missed it. Subscribe and stay tuned for the podcast, coming soon.

Trust, Small Changes, and Transformation.

Ashwath Sooriyanarayanan and Sofia Lindgren, both active at the corporate level in the PLM domain at Assa Abloy, came with an interesting story about their PLM lessons learned.

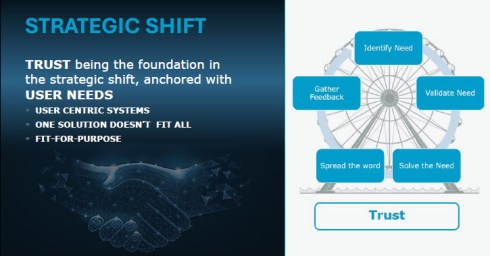

To understand their story, it is essential to see Assa Abloy as a special company, as the image below explains. With over 1000 sites, 200 production facilities, and, last year, a new acquisition every two weeks on average, it is hard for a corporate organization to standardize the company.

However, this is precisely what Assa Abloy has been trying to do over the past few years. Working towards a single PLM system with generic processes for all, while spending a lot of time integrating and migrating data from the different entities, became a mission impossible.

To increase user acceptance, they fell into the trap of customizing the system ever more to meet many user demands. A dead end, as many other companies have probably experienced similarly.

And then they made a strategic shift. Instead of holding on to the past and the money invested in technology, they shifted to the human side.

The PLM group became a trusted organisation supporting the individual entities. Instead of telling them what to do (Top-Down), they talked with the local business and provided standardized PLM knowledge and capabilities where needed (Bottom-Up).

This “modular” approach made the PLM group the trusted partner of the individual business. A unique approach, making us realize that the human aspect remains part of implementing PLM.

Humans cannot be transformed

Given the length of this blog post, I will not spend too much text on my closing presentation at the conference. After a technical start on DAY 1, we gradually moved to broader, human-related topics in the latter part.

You can find my presentation here on SlideShare as usual, and perhaps the best summary from my session was given in this post from Paul Comis. Enjoy his conclusion.

Conclusion

Two and a half intensive days in Paris again at the PLM Roadmap / PDT Europe conference, where some of the crucial aspects of PLM were shared in detail. The value of the conference lies in the stories and discussions with the participants. Slides alone do not provide enough education. You need to be curious and active to discover the best perspective.

For those celebrating: Wishing you a wonderful Thanksgiving!

For those of you following my blog over the years, there is, every time after the PLM Roadmap PDT Europe conference, one or two blog posts, where the first starts with “The weekend after ….”

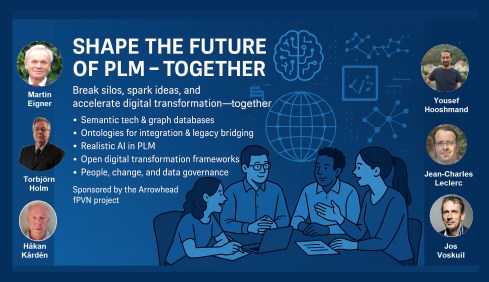

This time, November has been hectic for me, starting with the engaging workshop “Shape the future of PLM – together” – you can read about it in my blog post or the latest post from Arrowhead fPVN, the sponsor of the workshop.

Last week, I celebrated with the core team from the PLM Green Global Alliance our 5th anniversary, during which we discussed sustainability in action. The term sustainability is currently under the radar, but if you want to learn what is happening, read this post with a link to the webinar recording.

Last week, I was also active at the PTC/User Benelux conference, where I had many interesting discussions about PTC’s strategy and portfolio. A big and well-organized event in the town where I grew up in the world of teaching and data management.

And now it is time for the PLM Roadmap / PDT Europe conference review.

The conference

The conference is my favorite technical conference 😉 for learning what is happening in the field. Over the years, we have seen reports from the Aerospace & Defense PLM Action Groups, which systematically work on various themes related to a digital enterprise. The usage of standards, MBSE, Supplier Collaboration, Digital Thread & Digital Twin are all topics discussed.

This time, the conference was sold out with 150+ attendees, just fitting in the conference space, and the two-day program started with a challenging day 1 of advanced topics, and on day 2 we saw more company experiences.

Combined with the traditional dinner in the middle, it was again a great networking event to charge the brain. We still need the brain besides AI. Some of the highlights of day 1 follow in this post.

PLM’s Integral Role in Digital Transformation

As usual, Peter Bilello, CIMdata’s President & CEO, kicked off the conference, and his message has not changed over the years. PLM should be understood as a strategic, enterprise-wide approach that manages intellectual assets and connects the entire product lifecycle.

I like the image below explaining the WHY behind product lifecycle management.

It enables end-to-end digitalization, supports digital threads and twins, and provides the backbone for data governance, analytics, AI, and skills transformation.

Peter walked us briefly through CIMdata’s Critical Dozen (a YouTube recording is available here), all of which are relevant to the scope of digital transformation. Without strong PLM foundations and governance, digital transformation efforts will fail.

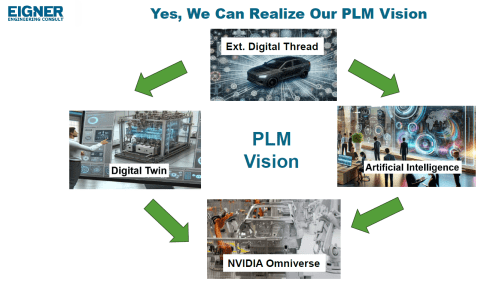

The Digital Thread as the Foundation of the Omniverse

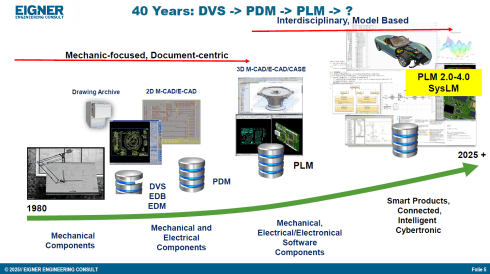

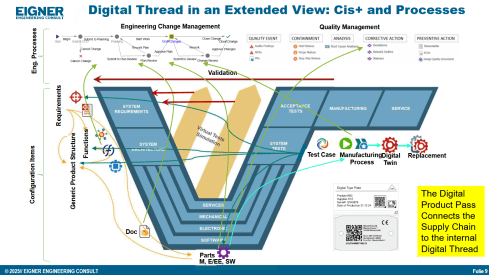

Prof. Dr.-Ing. Martin Eigner, well known for his lifetime passion and vision in product lifecycle management (PDM and PLM tools & methodology), shared insights from his 40-year journey, highlighting the growing complexity and ever-increasing fragmentation of customer solution landscapes.

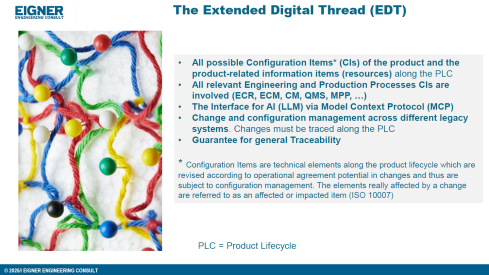

In his current eco-system, ERP (read SAP) is playing a significant role as an execution platform, complemented by PDM or ECTR capabilities. Few of his customers go for the broad PLM systems, and therefore, he stresses the importance of the so-called Extended Digital Thread.

Prof. Eigner describes the EDT more precisely as an overlaying infrastructure implemented by a graph database that serves as a performant knowledge graph of the enterprise.

The EDT serves as the foundation for AI-driven applications, supporting impact analysis, change management, and natural-language interaction with product data. The presentation also provides a detailed view of Digital Twin concepts, ranging from component to system and process twins, and demonstrates how twins enhance predictive maintenance, sustainability, and process optimization.
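
To illustrate why a graph is a natural fit for impact analysis, here is a minimal sketch in plain Python. It is not Prof. Eigner's EDT and it does not use a graph database; the artifact identifiers, the `edges` structure, and the `impacted_by` function are hypothetical. It only shows the basic principle: once the links between artifacts are stored as a graph, "what is affected by this change?" becomes a traversal.

```python
from collections import deque

# Hypothetical lifecycle artifacts; an edge A -> B means "B depends on A".
edges = {
    "REQ-12": ["PART-100"],
    "PART-100": ["ASSY-7", "NC-PROGRAM-3"],
    "ASSY-7": ["SERVICE-MANUAL-2"],
    "NC-PROGRAM-3": [],
    "SERVICE-MANUAL-2": [],
}


def impacted_by(graph, changed):
    """Breadth-first traversal listing every artifact downstream of `changed`."""
    seen = set()
    order = []
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for dependent in graph.get(node, []):
            if dependent not in seen:
                seen.add(dependent)
                order.append(dependent)
                queue.append(dependent)
    return order


impact = impacted_by(edges, "PART-100")
print(impact)
```

In a real environment, the edges would come from the connected systems (PDM, ERP, service documentation) rather than from a hand-written dictionary, and that is where the hard work sits.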

This is combined with the NVIDIA Omniverse as the next step toward immersive, real-time collaboration and simulation, enabling virtual factories and physics-accurate visualization. The outlook emphasizes that combining EDT, Digital Twin, AI, and Omniverse moves the industry closer to the original PLM vision: a unified, consistent Single Source of Truth 😮 that boosts innovation, efficiency, and ROI.

For me, hearing and reading the term Single Source of Truth still creates discomfort with reality and humanity, so we still have something to discuss.

Semantic Digital Thread for Enhanced Systems Engineering in a Federated PLM Landscape

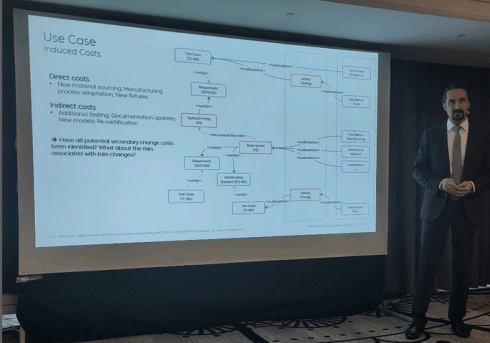

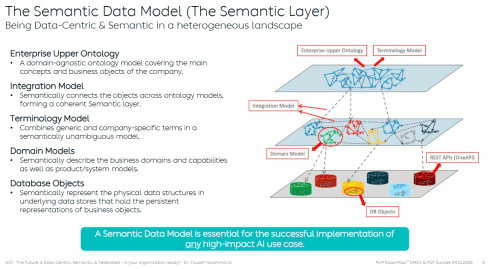

Dr. Yousef Hooshmand‘s presentation was a great continuation of the Extended Digital Thread theme discussed by Dr. Martin Eigner. Where the core of Martin’s EDT is based on traceability between artifacts and processes throughout the lifecycle, Yousef introduced a (for me) totally new concept: starting with managing and structuring the data to manage the knowledge, rather than starting from the models and tools to understand the knowledge.

It is a fundamentally different approach to addressing the same problem of complexity. During our pre-conference workshop “Shape the future of PLM – together,” I already got a bit familiar with this approach, and Yousef’s recently released paper provides all the details.

All the relevant information can be found in his recent LinkedIn post here.

In his presentation during the conference, Yousef illustrated the value and applicability of the Semantic Digital Thread approach by presenting it in an automotive use case: Impact Analysis and Cost Estimation (image above)

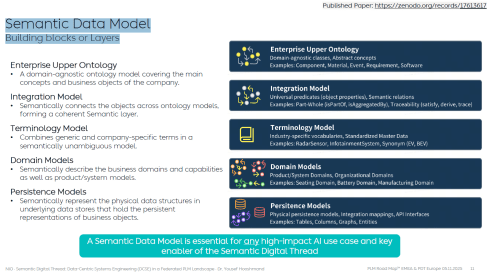

To understand the Semantic Digital Thread, it is essential to understand the Semantic Data Model and its building blocks or layers, as illustrated in the image below:

In addition, such an infrastructure is ideal for AI applications and avoids vendor- or tool lock-in, providing a significant long-term advantage.

I am sure it will take time to digest the content if you are entering the domain of a data-driven enterprise (the connected approach) instead of a document-driven enterprise (the coordinated approach).

However, as many of the other presentations on day 1 also stated: “data without context is worthless – then they become just bits and bytes.” For advanced and future scenarios, you cannot avoid working with ontologies, semantic models and graph databases.

Where is your company on the path to becoming more data-driven?

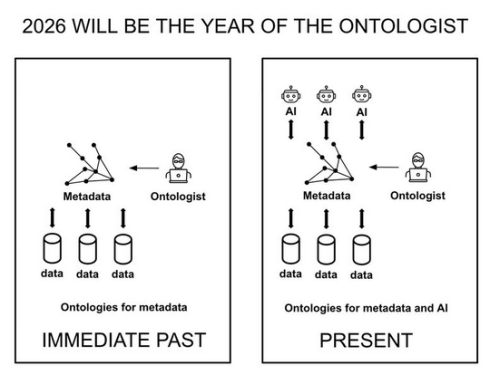

Note: I just saw this post and the image above, which emphasizes the importance of the relationship between ontologies and the application of AI agents.

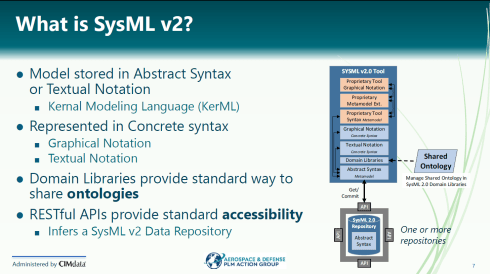

Evaluation of SysML v2 for use in Collaborative MBSE between OEMs and Suppliers

It was interesting to hear Chris Watkins’ speech, which presented the findings from the AD PLM Action Group MBSE Collaboration Working Group on digital collaboration based on SysML v2.

The problem they are researching is that there are currently no common methods and standards across the industry for exchanging digital model-based requirements and architecture deliverables for the design, procurement, and acceptance of aerospace systems equipment.

The action group explored the value of SysML v2 for data-driven collaboration between OEMs and suppliers, particularly in the early concept phases.

Chris started with a brief explanation of what SysML v2 is – image below:

As the image illustrates, SysML v2-ready tools allow people to work in their proprietary interfaces while sharing results in common, defined structures and ontologies.

When analyzing various collaboration scenarios, one of the main challenges remained managing changes, the required ontologies, and working in a shared IT environment.

👉You can read the full report here: AD PAG reports: Model-Based Systems Engineering.

An interesting point of discussion here is that, in the report, participants note that, despite calling out significant gaps and concerns, a substantial majority of the industry indicated that their MBSE solution provider is a good partner. At the same time, only a small minority expressed a negative view.

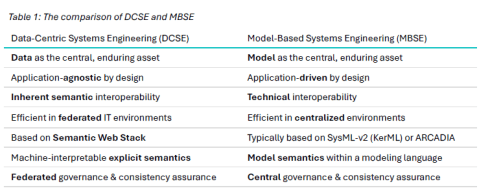

Would Data-Centric Systems Engineering change the discussion? See table 1 below from Yousef’s paper:

An illustration that there was enough food for discussion during the conference.

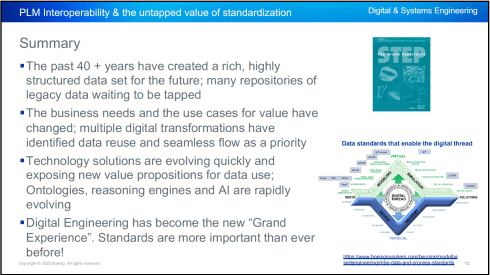

PLM Interoperability and the Untapped Value of 40 Years in Standardization

In the context of collaboration, two sessions fit together perfectly.

First, Kenny Swope from Boeing. Kenny is a longtime Boeing engineering leader and global industrial-data standards expert who oversees enterprise interoperability efforts, chairs ISO/TC 184/SC 4, and mentors youth in technology through 4-H and FIRST programs.

Kenny shared that over the past 40+ years, the understanding and value of this approach have become increasingly apparent, especially as organizations move toward a digital enterprise. In a digital enterprise, these standards are needed for efficient interoperability between various stakeholders. And the next session was an example of this.

Unlocking Enterprise Knowledge

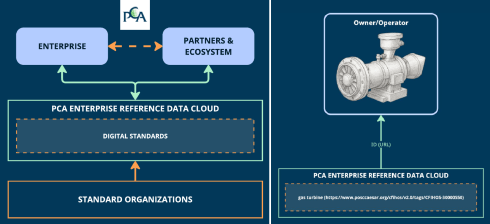

Fredrik Anthonisen, the CTO of the POSC Caesar Association (PCA), started his story about the potential value of efficient standard use.

According to a Siemens report, “The true costs of downtime,” $1.4 trillion is lost to unplanned downtime.

The root cause is that, most of the time, the information needed to support the MRO activity is inaccessible or incomplete.

Making data available using standards can provide part of the answer, but static documents and slow consensus processes can’t keep up with the pace of change.

Therefore, PCA established the PCA enterprise reference data cloud, where all stakeholders in enterprise collaboration can relate their data to digitally exposed standards, as the left side of the image shows.

Fredrik shared a use case (on the right side of the image) as an example. Also, he mentioned that the process for defining and making the digital reference data available to participants is ongoing. The reference data needs to become the trusted resource for the participants to monetize the benefits.

Summary

Day 1 had many more interesting and advanced concepts related to standards and the potential usage of AI.

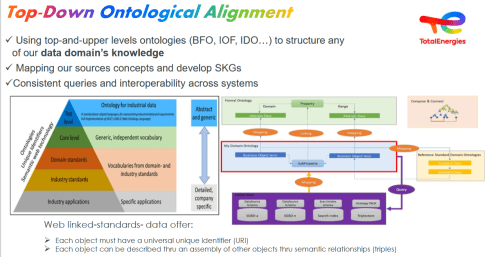

Jean-Charles Leclerc, Head of Innovation & Standards at TotalEnergies, in his session, “Bringing Meaning Back To Data,” elaborated on the need to provide data in the context of the domain for which it is intended, rather than “indexed” LLM data.

Very much aligned with Yousef’s statement that there is a need to apply semantic technologies, and especially ontologies, to turn the data into knowledge.

More details can also be found in the “Shape the future of PLM – together” post, where Jean-Charles was one of the leading voices.

The panel discussion at the end of day 1 was free of people jumping on the hype. Yes, benefits are envisioned across the product lifecycle management domain, but to be valuable, the foundation needs to be more structured than it has been in the past.

“Reliable AI comes from a foundation that supports knowledge in its domain context.”

Conclusion

For the casual user, day 1 was tough – digital transformation in the product lifecycle domain requires skills that might not yet exist in smaller organizations. Understanding the need for ontologies (generic/domain-specific) and semantic models is essential to benefit from what AI can bring – a challenging and enjoyable journey to follow!

On November 11th, we celebrated our 5th anniversary of the PLM Green Global Alliance (PGGA) with a webinar where ♻️ Jos Voskuil (me) interviewed the five other PGGA core team members about developments and experiences in their focus domain, potentially allowing for a broader discussion.

In our discussion, we focused on the trends and future directions of the PLM Green Global Alliance, emphasizing the intersection of Product Lifecycle Management (PLM) and sustainability.

Probably, November 11th was not the best day for broad attendance, and therefore, we hope that the recording of this webinar will allow you to connect and comment on this post.

Enjoy the discussion – watch it, or listen to it, as this time we did not share any visuals in the debate. Still, we hope to get your reflections and feedback on the interview related to the LinkedIn post.

Short Summary

♻️ Rich McFall shared his motivations for founding the alliance, highlighting the need for a platform that connects individuals committed to sustainability and addresses the previously limited discourse on PLM’s role in promoting environmental responsibility. He noted a significant variance in vendor engagement with sustainability, indicating that while some companies are proactive, others remain hesitant.

The conversation delved into the growing awareness and capabilities of how to perform a Life Cycle Assessment (LCA) with ♻️ Klaus Brettschneider, followed by the importance of integrating sustainability into PLM strategies, with ♻️ Mark Reisig discussing the ongoing energy transition and the growing investments in green technologies, particularly in China and Europe.

♻️ Evgeniya Burimskaya raised concerns about implementing circular economy principles in the aerospace industry, emphasizing the necessity of lifecycle analysis and the upcoming digital product passport requirements. The dialogue also touched on the Design for Sustainability initiative, led by ♻️ Erik Rieger, which aims to embed sustainability into the product design phase, necessitating a cultural shift in engineering education to prioritize sustainability.

Conclusion

We concluded by acknowledging the urgent realities of climate change, but also by advocating for an optimistic mindset in the face of challenges – it is perhaps not as bad as it seems in the news media. There are significant investments in green energy, serving as a beacon of hope, which encourage people to remain committed to collaborative efforts in advancing sustainable practices.

We agreed on the long-term nature of behavioral change within organizations and the role of the Green Alliance in fostering this transformation, concluding with a positive outlook on the potential for future generations to drive necessary changes in sustainability.

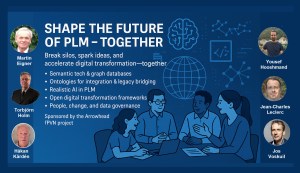

Together with Håkan Kårdén, we had the pleasure of bringing together 32 passionate professionals on November 4th to explore the future of PLM (Product Lifecycle Management) and ALM (Asset Lifecycle Management), inspired by insights from four leading thinkers in the field. Please, click on the image for more details.

The meeting had two primary purposes.

- Firstly, we aimed to create an environment where these concepts could be discussed and presented to a broader audience, comprising academics, industrial professionals, and software developers. The group’s feedback could serve as a benchmark for them.

- The second goal was to bring people together and create a networking opportunity, either during the PLM Roadmap/PDT Europe conference, the day after, or through meetings established after this workshop.

Personally, it was a great pleasure to meet some people in person whose LinkedIn articles I had admired and read.

The meeting was sponsored by the Arrowhead fPVN project, a project I discussed in a previous blog post related to the PLM Roadmap/PDT Europe 2024 conference last year. Together with the speakers, we have begun working on a more in-depth paper that describes the similarities and the lessons learned that are relevant. This activity will take some time.

Therefore, this post only includes the abstracts from the speakers and links to their presentations. It concludes with a few observations from some attendees.

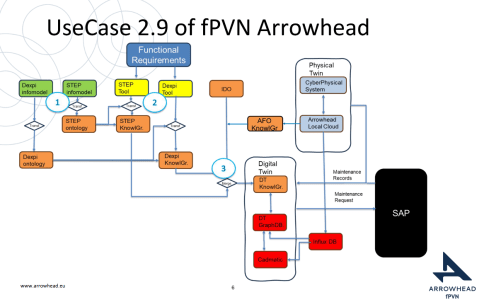

Reasoning Machines: Semantic Integration in Cyber-Physical Environments

Torbjörn Holm / Jan van Deventer: The presentation discussed the transition from requirements to handover and operations, emphasizing the role of knowledge graphs in unifying standards and technologies for a flexible product value network.

The presentation outlines the phases of the product and production lifecycle, including requirements, specification, design, build-up, handover, and operations. It raises a question about unifying these phases and their associated technologies and standards, emphasizing that the most extended phase, which involves operation, maintenance, failure, and evolution until retirement, should be the primary focus.

It also discusses seamless integration, outlining a partial list of standards and technologies categorized into three sections: “Modelling & Representation Standards,” “Communication & Integration Protocols,” and “Architectural & Security Standards.” Each section contains a table listing various technology standards, their purposes, and references. Additionally, the presentation includes a “Conceptual Layer Mapping” table that details the different layers (Knowledge, Service, Communication, Security, and Data), along with examples, functions, and references.

The presentation outlines an approach for utilizing semantic technologies to ensure interoperability across heterogeneous datasets throughout a product’s lifecycle. Key strategies include using OWL 2 DL for semantic consistency, aligning domain-specific knowledge graphs with ISO 23726-3, applying W3C Alignment techniques, and leveraging Arrowhead’s microservice-based architecture and Framework Ontology for scalable and interoperable system integration.

The presentation also covers the software architecture used, which consists of three main sections, “Functional Requirements,” “Physical Twin,” and “Digital Twin,” each containing various interconnected components. Today the architecture includes several Knowledge Graphs (KG): a DEXPI KG, a STEP (ISO 10303) KG, and an Arrowhead Framework KG, with the CFIHOS Semantics Ontology in progress, all aligned.
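To make the idea of aligned knowledge graphs more tangible, here is a minimal sketch in plain Python. It is not how the presenters implemented it: a real setup would use the actual ontologies and an OWL 2 DL reasoner. All identifiers below (`dexpi:Pump-101`, `step:Product-P101`, the property names) are invented for illustration. The sketch only shows the principle: once two identifiers are declared to denote the same object, facts from both graphs can be read as one description.

```python
# Hypothetical data: none of these identifiers come from DEXPI, STEP, or Arrowhead.
dexpi_kg = {
    ("dexpi:Pump-101", "dexpi:hasNominalFlow", "120 m3/h"),
}
step_kg = {
    ("step:Product-P101", "step:hasMaterial", "Stainless steel"),
}
# Alignment statements: pairs of identifiers asserted to denote the same thing.
alignment = {("dexpi:Pump-101", "step:Product-P101")}


def canonical_ids(pairs):
    """Map each aligned identifier to one representative of its group (union-find)."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for a, b in pairs:
        ra, rb = find(a), find(b)
        if ra != rb:
            lo, hi = sorted((ra, rb))
            parent[hi] = lo  # the smaller string becomes the representative
    return {x: find(x) for x in list(parent)}


def merge(graphs, pairs):
    """Union of all triples, with aligned identifiers rewritten to their representative."""
    canon = canonical_ids(pairs)
    return {
        (canon.get(s, s), p, canon.get(o, o))
        for graph in graphs
        for (s, p, o) in graph
    }


def describe(graph, subject):
    """Collect all property values known for one subject."""
    return {p: o for (s, p, o) in graph if s == subject}


merged = merge([dexpi_kg, step_kg], alignment)
print(describe(merged, "dexpi:Pump-101"))
```

After the merge, the pump carries both its process property and its material, although each fact originated in a different graph.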

👉The presentation: W3C Major standard interoperability_Paris

Beyond Handover: Building Lifecycle-Ready Semantic Interoperability

Jean-Charles Leclerc argued that industrial data standards must evolve beyond the narrow scope of handover and static interoperability. To truly support digital transformation, they must embrace lifecycle semantics or, at the very least, be designed for future extensibility.

This shift enables technical objects and models to be reused, orchestrated, and enriched across internal and external processes, unlocking value for all stakeholders and managing the temporal evolution of properties throughout the lifecycle. A key enabler is the “pattern of change”, a dynamic framework that connects data, knowledge, and processes over time. It allows semantic models to reflect how things evolve, not just how they are delivered.

By grounding semantic knowledge graphs (SKGs) in such rigorous logic and aligning them with W3C standards, we ensure they are both robust and adaptable. This approach supports sustainable knowledge management across domains and disciplines, bridging engineering, operations, and applications.

Ultimately, it’s not just about technology; it’s about governance.

Being Sustainab’OWL (Web Ontology Language) by Design! means building semantic ecosystems that are reliable, scalable, and lifecycle-ready by nature.

Additional Insight: From Static Models to Living Knowledge

To transition from static information to living knowledge, organizations must reassess how they model and manage technical data. Lifecycle-ready interoperability means enabling continuous alignment between evolving assets, processes, and systems. This requires not only semantic precision but also a governance framework that supports change, traceability, and reuse, turning standards into operational levers rather than compliance checkboxes.

👉The presentation: Beyond Handover – Building Lifecycle Ready Semantic Interoperability

The first two presentations had a lot in common as they both come from the Asset Lifecycle Management domain and focus on an infrastructure to support assets over a long lifetime. This is particularly visible in the usage and references to standards such as DEXPI, STEP, and CFIHOS, which are typical for this domain.

How can we achieve our vision of PLM – the Single Source of Truth?

Martin Eigner stated that Product Lifecycle Management (PLM) has long promised to serve as the Single Source of Truth for organizations striving to manage product data, processes, and knowledge across their entire value chain. Yet, realizing this vision remains a complex challenge.

Achieving a unified PLM environment requires more than just implementing advanced software systems—it demands cultural alignment, organizational commitment, and seamless integration of diverse technologies. Central to this vision is data consistency: ensuring that stakeholders across engineering, manufacturing, supply chain, and service have access to accurate, up-to-date, and contextualized information along the Product Lifecycle. This involves breaking down silos, harmonizing data models, and establishing governance frameworks that enforce standards without limiting flexibility.

Emerging technologies and methodologies, such as Extended Digital Thread, Digital Twins, cloud-based platforms, and Artificial Intelligence, offer new opportunities to enhance collaboration and integrated data management.

However, their success depends on strong change management and a shared understanding of PLM as a strategic enabler rather than a purely technical solution. By fostering cross-functional collaboration, investing in interoperability, and adopting scalable architectures, organizations can move closer to a trustworthy single source of truth. Ultimately, realizing the vision of PLM requires striking a balance between innovation and discipline—ensuring trust in data while empowering agility in product development and lifecycle management.

👉The presentation: Martin – Workshop PLM Future 04_10_25

The Future is Data-Centric, Semantic, and Federated … Is your organization ready?

Yousef Hooshmand, who is currently working at NIO as PLM & R&D Toolchain Lead Architect, discussed the must-have relations between a data-centric approach, semantic models and a federated environment as the image below illustrates:

Why This Matters for the Future?

- Engineering is under unprecedented pressure: products are becoming increasingly complex, customers are demanding personalization, and development cycles must be accelerated to meet these demands. Traditional, siloed methods can no longer keep up.

- The way forward is a data-centric, semantic, and federated approach that transforms overwhelming complexity into actionable insights, reduces weeks of impact analysis to minutes, and connects fragmented silos to create a resilient ecosystem.

- This is not just an evolution, but a fundamental shift that will define the future of systems engineering. Is your organization ready to embrace it?
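As an illustration of why connected data can shorten impact analysis, here is a minimal Python sketch. It assumes the relations between items are already available as explicit links; the item names (`REQ-12`, `PART-A`, and so on) and the `affects` structure are hypothetical and not taken from the presentation. With such links in place, finding everything downstream of a change becomes a simple graph traversal.

```python
from collections import deque

# Hypothetical links, read as "a change to X affects Y".
affects = {
    "REQ-12": ["PART-A"],
    "PART-A": ["ASSY-1", "SIM-7"],
    "ASSY-1": ["BOM-2024"],
    "SIM-7": [],
    "BOM-2024": [],
}


def impacted_by(start, edges):
    """Breadth-first walk returning every item reachable from `start`, nearest first."""
    seen = {start}
    queue = deque([start])
    order = []
    while queue:
        node = queue.popleft()
        for nxt in edges.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append(nxt)
    return order


print(impacted_by("REQ-12", affects))
```

The hard part in practice is not the traversal but having trustworthy, semantically meaningful links across silos in the first place.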

👉The presentation: The Future is Data-Centric, Semantic, and Federated.

Some first impressions

👉 Bhanu Prakash Ila from Tata Consultancy Services (you can find his original comment in this LinkedIn post)

Key points:

- Traditional PLM architectures struggle with the fundamental challenge of managing increasingly complex relationships between product data, process information, and enterprise systems.

- Ontology-Based Semantic Models – The Way Forward for PLM Digital Thread Integration: Ontology-based semantic models address this by providing explicit, machine-interpretable representations of domain knowledge that capture both concepts and their relationships. These lay the foundations for AI-related capabilities.

It’s clear that as AI, semantic technologies, and data intelligence mature, the way we think and talk about PLM must evolve too – from system-centric to value-driven, from managing data to enabling knowledge and decisions.
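To illustrate what “explicit, machine-interpretable” can mean in the key points above, here is a small Python sketch of a class hierarchy with a simple inference step. The class and instance names are hypothetical, and a real ontology would be expressed in a language such as OWL and queried with a reasoner; this sketch only shows the principle that relationships stated once can answer questions nobody entered explicitly.

```python
# Hypothetical mini-ontology; class and instance names are illustrative only.
subclass_of = {
    "CentrifugalPump": "Pump",
    "Pump": "RotatingEquipment",
    "Motor": "RotatingEquipment",
    "RotatingEquipment": "Asset",
}
instance_of = {"P-101": "CentrifugalPump", "M-201": "Motor", "T-301": "Tank"}


def ancestors(cls):
    """All superclasses of `cls`, found by following subclass_of upward."""
    result = []
    while cls in subclass_of:
        cls = subclass_of[cls]
        result.append(cls)
    return result


def instances_of(cls):
    """Instances typed directly as `cls` or as any of its subclasses."""
    return sorted(
        item
        for item, item_cls in instance_of.items()
        if item_cls == cls or cls in ancestors(item_cls)
    )


print(instances_of("RotatingEquipment"))
```

Nobody recorded that P-101 is rotating equipment; it follows from the stated class relationships.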

A quick & temporary conclusion

Typically, I conclude my blog posts with a summary. However, this time the conclusion is not there yet. There is work to be done to align concepts and understand for which industry they are most applicable. Using standards or avoiding standards as they move too slowly for the business is a point of ongoing discussion. The takeaway for everyone in the workshop was that data without context has no value. Ontologies, semantic models and domain-specific methodologies are mandatory for modern data-driven enterprises. You cannot avoid this learning path by just installing a graph database.

This week is busy for me as I am finalizing several essential activities related to my favorite hobby, product lifecycle management or is it PLM😉?

And most of these activities will result in lengthy blog posts, starting with:

“The week(end) after <<fill in the event>>”.

Here are the upcoming actions:

Click on each image if you want to see the details:

In this Future of PLM Podcast series, moderated by Michael Finocchiaro, we will continue the debate on how to position PLM (as a system or a strategy) and move away from an engineering framing. Personally, I never saw PLM as a system and started talking more and more about product lifecycle management (the strategy) versus PLM/PDM (the systems).

Note: the intention is to be interactive with the audience, so feel free to post questions/remarks in the comments, either upfront or during the event.

You might have seen in the past two weeks some posts and discussions I had with the Share PLM team about a unique offering we are preparing: the PLM Awareness program. From our field experience, PLM is too often treated as a technical issue, handled by a (too) small team.

We believe every PLM program should start by fostering awareness of what people can expect nowadays, given the technology, experiences, and possibilities available. If you want to work with motivated people, you have to involve them and give them all the proper understanding to start with.

Join us for the online event to understand the value and ask your questions. We are looking forward to your participation.

This is another event related to the future of PLM; however, this time it is an in-person workshop, where, inspired by four PLM thought leaders, we will discuss and work on a common understanding of what is required for a modern PLM framework. The workshop, sponsored by the Arrowhead fPVN project, will be held in Paris on November 4th, preceding the PLM Roadmap/PDT Europe conference.

We will not discuss the term PLM; we will discuss business drivers, supporting technologies and more. My role as a moderator of this event is to assist with the workshop, and I will share its findings with a broader audience that wasn’t able to attend.

Be ready to learn more in the near future!

If you have followed my blog posts for the past 10 years, you know this conference is always a place to get inspired, whether by leading companies across industries or by innovative and engaging new developments. This conference has always inspired me and helped me gain a better understanding of digital transformation in the PLM domain and how larger enterprises are addressing their challenges.

This time, I will conclude the conference with a lecture focusing on the challenging side of digital transformation and AI: we humans cannot transform ourselves, so we need help.

At the end of this year, we will “celebrate” our fifth anniversary of the PLM Green Global Alliance. When we started the PGGA in 2020, there was an initial focus on the impact of carbon emissions on the climate, and in the years that followed, climate disasters around the world caused serious damage to countries and people.

How could we, as a PLM community, support each other in developing and sharing best practices for innovative, lower-carbon products and processes?

In parallel, driven by regulations, there was also a need to improve current PLM practices to efficiently support ESG reporting, lifecycle analysis, and, soon, the Digital Product Passport. These regulations push for a modern data-driven infrastructure, and we discussed this with the major PLM vendors and related software or solution partners. See our YouTube channel @PLM_Green_Global_Alliance

In this online Zoom event, we invite you to join us to discuss the topics mentioned in the announcement. Join us in this event and help us celebrate!

I am closing that week at the PTC/User Benelux event in Eindhoven, the Netherlands, with a keynote speech about digital transformation in the PLM domain. Eindhoven is the city where I grew up, completed my amateur soccer career, ran my first and only marathon, and started my career in PLM with SmarTeam. The city and location feel like home. I am looking forward to discussing and meeting with the PTC user community to learn how they experience product lifecycle management, or is it PLM😉?

With all these upcoming events, I did not have the time to focus on a new blog post; however, luckily, in the 10x PLM discussion started by Oleg Shilovitsky, there was an interesting comment from Rob Ferrone that triggered my mind. Quote:

The big breakthrough will come from 1. advances in human-machine interface and 2. less % of work executed by human in the loop. Copy/paste, typing, voice recognition are all significant limits right now. It’s like trying to empty a bucket of water through a drinking straw. When tech becomes more intelligent and proactive then we will see at least 10x.

This remark reminded me of one of my first blog posts in 2008, when I was trying to predict what PLM would look like in 2050. I thought it was a nice moment to read it (again). Enjoy!

PLM in 2050

As the year ends, I decided to take my crystal ball to see what would happen with PLM in the future. It felt like a virtual experience, and this is what I saw:
