The title of this post was inspired by one of Jan Bosch’s daily reflections, #156: Hype-as-a-Service. You can read his full reflection here.

His post reminded me of a topic that I frequently raise when discussing modern PLM concepts with companies and peers in my network: Data Quality and Data Governance, sometimes in the context of the connected digital thread and, more recently, in the context of applying AI in the PLM domain.

I’ve noticed that when I emphasize the importance of data quality and data governance, there is always a lot of agreement from the audience. However, when discussing these topics with companies, the details become vague.

Yes, there is a desire to improve data quality, and yes, we push our people to improve the quality processes of the information they produce. Still, I was curious whether companies have an overall strategy.

And who better to talk to than Rob Ferrone, well known as “The original Product Data PLuMber”? Together, we will discuss data quality and governance in two posts. Here is part one: defining the playground.

The need for Product Data People

During the Share PLM Summit, I was inspired by Rob’s theatre play, “The Engineering Murder Mystery.” Thanks to the presence of Michael Finocchiaro, you might have seen the play already on LinkedIn – if you have 20 minutes, watch it now.

Rob’s ultimate plea was to add product data people to your company to make the data reliable and keep it flowing. So, for me, he is the right person to explain what data quality and data governance mean in reality, or whether they are still hype.

What is data?

Hi Rob, thank you for having this conversation. Before discussing quality and governance, could you share with us what you consider ‘data’ within our PLM scope? Is it all the data we can imagine?

I propose that relevant PLM data encompasses all product-related information across the lifecycle, from conception to retirement. Core data includes part or item details, usage, function, revision/version, effectivity, suppliers, attributes (e.g., cost, weight, material), specifications, lifecycle state, configuration, and serial number.

Secondary data supports lifecycle stages and includes requirements, structure, simulation results, release dates, orders, delivery tracking, validation reports, documentation, change history, inventory, and repair data.

Tertiary data, such as customer information, can provide valuable support for marketing or design insights. HR data is generally outside the scope, although it may be referenced when evaluating the impact of PLM on engineering resources.

What is data quality?

Now that we have a data scope in mind, I imagine there is also some nuance in the term ‘data quality’. Do we strive for 100% correct data, or is “100% correct” perhaps too ambitious? How would you define and address data quality?

You shouldn’t just want data quality for data quality’s sake. You should want it because your business processes depend on it. As for 100%, not all data needs to be accurate and available simultaneously. It’s about having the proper maturity of data at the right time.

For example, when you begin designing a component, you may not need to have a nominated supplier, and estimated costs may be sufficient. However, missing supplier nomination or estimated costs would count against data quality when it is time to order parts.

And these deliverable timings will vary across components, so 100% quality might only be achieved when the last standard part has been identified and ordered.
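Rob’s point that the required data maturity depends on the moment in the process can be illustrated with a small Python sketch. It assumes a hypothetical `REQUIRED_FIELDS` table listing the mandatory attributes per lifecycle stage; the stage names and attributes simply mirror the supplier and cost example above and are not a standard.

```python
from typing import Dict, List

# Hypothetical mapping: which attributes must be filled in at each lifecycle stage.
REQUIRED_FIELDS: Dict[str, List[str]] = {
    "concept": ["part_number", "description"],
    "design": ["part_number", "description", "material", "estimated_cost"],
    "ordering": ["part_number", "description", "material",
                 "estimated_cost", "supplier"],
}

def missing_fields(part: dict, stage: str) -> List[str]:
    """Return the attributes required at `stage` that are absent or empty."""
    return [f for f in REQUIRED_FIELDS[stage] if part.get(f) in (None, "")]

bracket = {
    "part_number": "P-1001",
    "description": "Mounting bracket",
    "material": "AL6061",
    "estimated_cost": 12.5,  # an estimate is good enough during design
    "supplier": None,        # not yet nominated
}

print(missing_fields(bracket, "design"))    # []
print(missing_fields(bracket, "ordering"))  # ['supplier']
```

The same part counts as complete during design and incomplete at ordering time, which is exactly the nuance a single “100% correct” target would miss.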

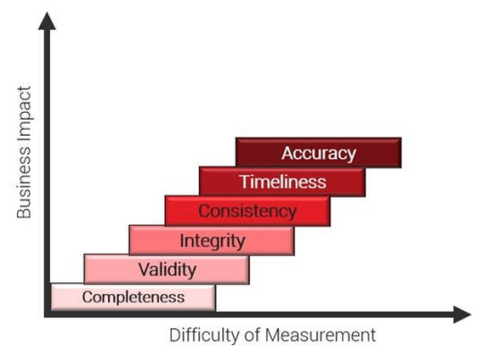

It is more important to know when you have reached the required data quality objective for the top-priority content. The image above explains the data quality dimensions:

- Completeness (Are all required fields filled in?)
  KPI example: % of product records that include all mandatory fields (e.g., part number, description, lifecycle status, unit of measure)

- Validity (Do values conform to expected formats, rules, or domains?)
  KPI example: % of customer addresses that conform to ISO 3166 country codes and contain no invalid characters

- Integrity (Do relationships between data records hold?)
  KPI example: % of BOM records where all child parts exist in the Parts Master and are not marked obsolete

- Consistency (Is data consistent across systems or domains?)
  KPI example: % of product IDs with matching descriptions and units across PLM and ERP systems

- Timeliness (Is data available and updated when needed?)
  KPI example: % of change records updated within 24 hours of approval or effective date

- Accuracy (Does the data reflect real-world truth?)
  KPI example: % of asset location records that match actual GPS coordinates from service technician visits
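As a rough illustration of how such KPIs could be computed, the Python sketch below scores five of the dimensions over a few made-up PLM, ERP, BOM, address, and change records. All identifiers, field names, and the tiny country-code subset are hypothetical; accuracy is left out because it needs a real-world reference, such as the technicians’ GPS readings mentioned above.

```python
import re
from datetime import datetime, timedelta

# Hypothetical extracts from PLM and ERP; identifiers and values are made up.
plm_parts = {
    "P-1": {"description": "Bracket", "uom": "EA", "lifecycle": "Released"},
    "P-2": {"description": "Bolt M6", "uom": "EA", "lifecycle": "Obsolete"},
    "P-3": {"description": "", "uom": "EA", "lifecycle": "In Work"},
}
erp_parts = {
    "P-1": {"description": "Bracket", "uom": "EA"},
    "P-2": {"description": "Bolt M6", "uom": "PCS"},
}
bom_lines = [("A-1", "P-1"), ("A-1", "P-2"), ("A-1", "P-9")]  # (parent, child)
addresses = [
    {"street": "Main Street 1", "country": "NL"},
    {"street": "Hauptstr. 5", "country": "GER"},  # not an alpha-2 code
    {"street": "Rue #?! 3", "country": "FR"},     # invalid characters
]
t0 = datetime(2025, 1, 6, 9, 0)
changes = [(t0, t0 + timedelta(hours=3)), (t0, t0 + timedelta(hours=30))]  # (approved, updated)

MANDATORY = ["description", "uom", "lifecycle"]
COUNTRY_CODES = {"NL", "DE", "FR", "US"}  # tiny illustrative subset of ISO 3166-1 alpha-2

def pct(hits: int, total: int) -> float:
    """Percentage rounded to one decimal; an empty population counts as 100%."""
    return round(100.0 * hits / total, 1) if total else 100.0

def completeness() -> float:
    ok = sum(all(p.get(f) for f in MANDATORY) for p in plm_parts.values())
    return pct(ok, len(plm_parts))

def validity() -> float:
    ok = sum(
        a["country"] in COUNTRY_CODES
        and re.fullmatch(r"[\w .,-]+", a["street"]) is not None
        for a in addresses
    )
    return pct(ok, len(addresses))

def integrity() -> float:
    # A BOM child must exist in the parts master and must not be obsolete.
    ok = sum(child in plm_parts and plm_parts[child]["lifecycle"] != "Obsolete"
             for _parent, child in bom_lines)
    return pct(ok, len(bom_lines))

def consistency() -> float:
    # Only parts known to both systems can be compared.
    shared = plm_parts.keys() & erp_parts.keys()
    ok = sum(plm_parts[k]["description"] == erp_parts[k]["description"]
             and plm_parts[k]["uom"] == erp_parts[k]["uom"] for k in shared)
    return pct(ok, len(shared))

def timeliness(limit: timedelta = timedelta(hours=24)) -> float:
    ok = sum(updated - approved <= limit for approved, updated in changes)
    return pct(ok, len(changes))

print(completeness(), validity(), integrity(), consistency(), timeliness())
# 66.7 33.3 33.3 50.0 50.0
```

Each function answers one of the questions in the list; which records and thresholds matter should come from the business process, not from the code.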

Define data quality KPIs based on business process needs, ensuring they drive meaningful actions aligned with project goals.

While defining quality is one challenge, detecting issues is another. Data quality problems vary in severity and detection difficulty, and their importance can shift depending on the development stage. It’s vital not to prioritize one measure over the others; for example, timely data is not necessarily validated data.

Like the VUCA framework, effective data quality management begins by understanding the nature of the issue: is it volatile, uncertain, complex, or ambiguous?

Not all “bad” data is flawed; some of it may be valid estimates, in-progress changes, or system-driven anomalies. Each scenario requires a tailored response; treating all issues the same can lead to wasted effort or overlooked insights.
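One way to picture this tailored response is a triage step that runs before anyone “fixes” a flagged record. The sketch assumes hypothetical metadata flags (`value_is_estimate`, `open_change_id`, `sync_pending`) that a given PLM system may or may not provide; the point is the routing, not the flag names.

```python
from enum import Enum

class Finding(Enum):
    """Possible explanations for a record that failed a quality rule."""
    ESTIMATE = "valid estimate: track until a firm value is due"
    PENDING_CHANGE = "in-flight change: re-check after release"
    SYSTEM_ANOMALY = "integration or sync artefact: fix the interface, not the record"
    DEFECT = "genuine error: route to the data owner"

def triage(record: dict) -> Finding:
    """Classify a flagged record using hypothetical metadata flags.

    The checks run from most to least benign, so a record is only
    treated as a defect when no other explanation applies.
    """
    if record.get("value_is_estimate"):
        return Finding.ESTIMATE
    if record.get("open_change_id"):
        return Finding.PENDING_CHANGE
    if record.get("sync_pending"):
        return Finding.SYSTEM_ANOMALY
    return Finding.DEFECT

for rec in [
    {"part": "P-1", "value_is_estimate": True},
    {"part": "P-2", "open_change_id": "ECO-42"},
    {"part": "P-3", "sync_pending": True},
    {"part": "P-4"},
]:
    print(rec["part"], triage(rec).name)
```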

Furthermore, data quality goes beyond the data itself—it also depends on clear definitions, ownership, monitoring, maintenance, and governance. A holistic approach ensures more accurate insights and better decision-making throughout the product lifecycle.

KPIs?

In many (smaller) companies, KPIs do not exist; they adjust their business based on experience and financial results. Are companies ready for these KPIs, or do they need to establish a data governance baseline first?

Many companies already use data to run parts of their business, often with little or no data governance. They may track program progress, but rarely systematically monitor data quality. Attention tends to focus on specific data types during certain project phases, often employing audits or spot checks without establishing baselines or implementing continuous monitoring.

This reactive approach means issues are only addressed once they cause visible problems.
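The difference between spot checks and continuous monitoring can be sketched in a few lines of Python: measure a KPI regularly, derive a baseline, and flag deviations early. The baseline rule (mean of the first four measurements) and the five-point tolerance are assumptions for illustration, not recommended values.

```python
from statistics import mean
from typing import List, Tuple

def baseline(history: List[float], window: int = 4) -> float:
    """Baseline = mean of the first `window` measurements (an assumption)."""
    return mean(history[:window])

def alerts(history: List[float], window: int = 4,
           tolerance: float = 5.0) -> List[Tuple[int, float]]:
    """Return (week index, KPI value) for every later week that falls more
    than `tolerance` percentage points below the baseline."""
    base = baseline(history, window)
    return [(i, v) for i, v in enumerate(history[window:], start=window)
            if v < base - tolerance]

# Hypothetical weekly completeness KPI (%), e.g. from the checks described above.
weekly_completeness = [92.0, 93.5, 91.0, 92.5, 91.8, 88.0, 84.5, 90.0]
print(baseline(weekly_completeness))  # 92.25
print(alerts(weekly_completeness))    # [(6, 84.5)]
```

A single spot check on the 88.0 reading would still look acceptable; it is the repeated comparison against a baseline that flags the 84.5 reading before it turns into a visible problem.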

When data problems emerge, trust in the system declines. Teams revert to offline analysis, build parallel reports, and generate conflicting data versions. A lack of trust worsens data quality and wastes time resolving discrepancies, making it difficult to restore confidence. Leaders begin to question whether the data can be trusted at all.

Data governance typically evolves; it’s challenging to implement from the start. Organizations must understand their operations before they can govern data effectively.

Start-ups face a different challenge. While they benefit from a clean slate, their fast-paced, prototype-driven environment prioritizes innovation over stable governance. Unlike established OEMs with mature processes, start-ups focus on agility, which makes structured governance hard to implement in the early stages.

Data governance is a business strategy, similar to Product Lifecycle Management.

Before they go on the journey of creating data management capabilities, companies must first understand:

- The cost of not doing it.

- The value of doing it.

- The cost of doing it.

What is the cost associated with not doing data quality and governance?

Similar to configuration management, companies might find it a bureaucratic overhead that is hard to justify. As long as things are going well (enough) and the company’s revenue or reputation is not at risk, why add this extra work?

Product data quality is either a tax or a dividend. In Part 2, I will discuss the benefits; in this first part, I will focus on the cost of not doing it.

Every business has stories of costly failures caused by incorrect part orders, uncommunicated changes, or outdated service catalogs. It’s a systemic disease in modern, complex organisations and part of our day-to-day working lives: multiple files with slightly different names, important data hidden in lengthy email chains, and various sources for the same information (where the value differs across sources), among other challenges.

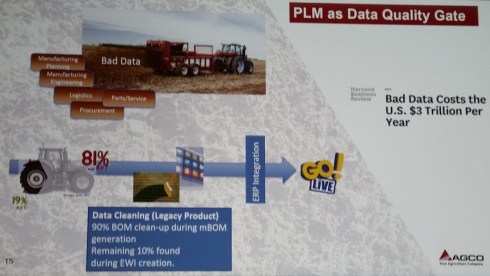

The image above is from Susan Lauda’s presentation at the PLMx 2018 conference in Hamburg, where she shared the hidden costs of poor data. You can read more about it in my blog post: The weekend after PLMx Hamburg.

Poor product data can impact more than most teams realize. It wastes time—people chase missing info, duplicate work, and rerun reports. It delays builds, decisions, and delivery, hurting timelines and eroding trust. Quality drops due to incorrect specifications, resulting in rework and field issues. Financial costs manifest as scrap, excess inventory, freight, warranty claims, and lost revenue.

Worse, poor data leads to poor decisions: wrong platforms, bad supplier calls, and unrealistic timelines. It also creates compliance risks and traceability gaps that can trigger legal trouble. When supply chain visibility is lost, the consequences aren’t just internal; they become public.

For example, in Tony’s Chocolonely’s case, despite their ethical positioning, they were removed from the Slave Free Chocolate list after 1,700 child labour cases were discovered in their supplier network.

The good news is that most of these unwanted costs are preventable. There are often very early indicators that something is going to become a problem; they are just not being looked at.

Better data governance equals better decision-making power.

Visibility prevents the inevitable.

Conclusion of part 1

Thanks to Rob’s answers, I am confident that you now have a better understanding of what Data Quality and Data Governance mean in the context of your business. In addition, we discussed the cost of doing nothing. In Part 2, we will explore how to implement it in your company, and Rob will share some examples of the benefits.

Feel free to post your questions for the original Product Data PLuMber in the comments.

Four years ago, during the COVID-19 pandemic, we discussed the critical role of a data plumber.

In the last two weeks, I had some interesting observations and discussions related to the need for a (PLM) vision. I placed the word PLM in parentheses because PLM is no longer an isolated topic in an organization. A PLM strategy should align with the business strategy and vision.

To be clear, if you or your company wants to survive in the future, you need a sustainable vision and a matching strategy as the times they are a changing, again!

I love the text: “Don’t criticize what you can’t understand” – a timeless quote.

First, there was Rob Ferrone’s article Multi-view. Perspectives that shape PLM, a must-read to understand whom to talk to about which dimension of PLM. It is worth browsing through the comments too; there you will find the discussions that help you understand the PLM players.

Note: it is time that AI-generated images become more creative 😉

Next, there is still the discussion started by Gareth Webb, Digital Thread and the Knowledge Graph, further stirred by Oleg Shilovitsky.

Based on the likes and comments, it is clearly a topic that creates interaction – people are thinking and talking about it – the Digital Thread as a Service.

One of the remaining points in this debate is still the HOW and WHEN companies decide to implement a Digital Thread, a Knowledge Graph and other modern data concepts.

So far, my impression is that most companies implement their digital enhancements (threads/graphs) bottom-up, not driven by a management vision but as band-aids or in places where they fit well, without a strategy or vision.

The same week, we, Beatriz Gonzáles and I, recorded a Share PLM podcast session with Paul Kaiser from MHP Americas as a guest. Paul is the head of the Digital Core & Technology department, where he leads management and IT consulting services focused on end-to-end business transformation.

During our discussion, Paul mentioned the challenge in engagements when the company has no (PLM) vision. These companies expect external consultants to formulate and implement the vision – a recipe for failure due to wrong expectations.

The podcast can be found HERE, and the session inspired me to write this post.

“We just want to be profitable”

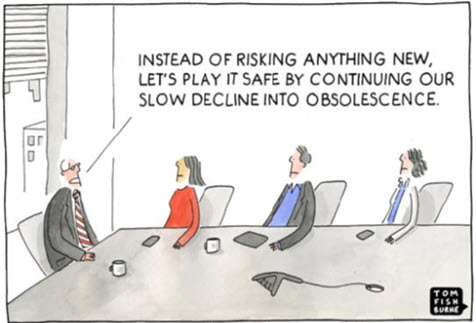

I believe it is a typical characteristic of small and medium enterprises that people are busy with their day-to-day activities. In addition, these companies rarely appoint new top management, which could shake up the company in a positive direction. These companies evolve…

You often see a stable management team with members who grew up with the company and now monitor and guide it, watching its finances and competition. They know how the current business is running.

Based on these findings, there will be classical efficiency plans, i.e., cutting costs somewhere, dropping some non-performing products, or investing in new technology they cannot resist. Still, beyond minor process changes, fundamental organizational changes are not expected.

Most of the time, the efficiency plans provide single-digit benefits.

Everyone is happy when the company feels stable and profitable, even if the margins are under pressure. The challenge for this type of company without a vision is that they navigate in the dark when the outside world changes – like nowadays.

The world is changing drastically.

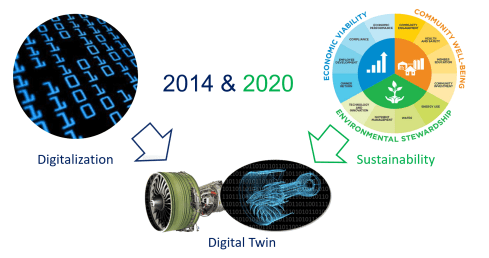

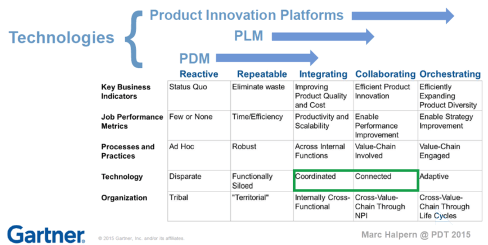

Since 2014, I have advocated for digital transformation in the PLM domain and explained it simply using the statement: From Coordinated to Connected, which already implies much complexity.

This means moving from documents and files to datasets and models, from a linear delivery model to a DevOps model, from waterfall to agile, and many other From-To statements.

Moving From-To is a transformational journey, which means you will learn and adapt to new ways of working during the journey. Still, the journey should have a target, directed by a vision.

However, not many companies have started this journey because they just wanted to be profitable.

“Why should we go in an unknown direction?”

With the emergence of sustainability regulations, e.g., GHG and ESG reporting, carbon taxes, material reporting, and the Digital Product Passport (which goes beyond RoHS and REACH and applies to many more industries), came the realization that product lifecycle processes and data need to be digitized beyond documents. Manual analysis and validation are too expensive and unreliable.

At this stage, there is already a visible shift between companies that have proactively implemented a digitally connected infrastructure and companies that still see compliance with regulations as an additional burden. The first group brings products to the market faster and more sustainably than the second group because sustainability is embedded in their product lifecycle management.

And just when companies felt they could manage the transition from Coordinated to Coordinated and Connected, there was the fundamental disruption of embedded AI in everything, including the PLM domain.

- Large Language Models (LLMs) can go through all structured and unstructured data, providing real-time access to information that would take experts years of learning to acquire. Suddenly, everyone can act like an experienced expert.

- The rigidity of traditional databases can be complemented by graph databases, which visualize knowledge that can be added and discovered on the fly without IT experts. Suddenly, an enterprise is no longer a collection of interfaced systems but a digital infrastructure where data flows; some call it Digital Thread as a Service (DTaaS).

- Suddenly, people feel overwhelmed by complexity, leading to fear and inaction, a killing attitude.
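To make the graph idea in the second bullet more tangible, here is a minimal Python sketch that stands in for a graph database with a plain adjacency list. It is not the API of any graph product; the node names and relation types are hypothetical. It shows how a traceability question, such as “what is affected downstream of this requirement?”, becomes a simple breadth-first traversal.

```python
from collections import deque
from typing import Dict, List, Tuple

# Hypothetical digital-thread triples: (source, relation, target).
EDGES: List[Tuple[str, str, str]] = [
    ("REQ-7", "satisfied_by", "PART-100"),
    ("PART-100", "made_of", "MAT-AL6061"),
    ("PART-100", "supplied_by", "SUP-ACME"),
    ("PART-100", "installed_as", "SN-0042"),
    ("SN-0042", "has_repair", "REPAIR-311"),
]

Graph = Dict[str, List[Tuple[str, str]]]

def build_graph(edges: List[Tuple[str, str, str]]) -> Graph:
    """Index the triples as an adjacency list: node -> [(relation, target)]."""
    graph: Graph = {}
    for src, rel, dst in edges:
        graph.setdefault(src, []).append((rel, dst))
    return graph

def downstream(graph: Graph, start: str) -> List[str]:
    """Breadth-first traversal returning every node reachable from `start`."""
    seen = {start}
    order: List[str] = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for _relation, target in graph.get(node, []):
            if target not in seen:
                seen.add(target)
                order.append(target)
                queue.append(target)
    return order

graph = build_graph(EDGES)
print(downstream(graph, "REQ-7"))
# ['PART-100', 'MAT-AL6061', 'SUP-ACME', 'SN-0042', 'REPAIR-311']
```

Adding knowledge on the fly is a matter of appending another `(source, relation, target)` triple, for example linking the material to a carbon-footprint record, after which the same traversal picks it up without a schema change.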

I cannot predict what will happen in the next 5 to 10 years, but I am sure the current change is one we have never seen before. Be prepared and flexible to act—to be on top of the wave, you need the skills to get there.

Building the vision

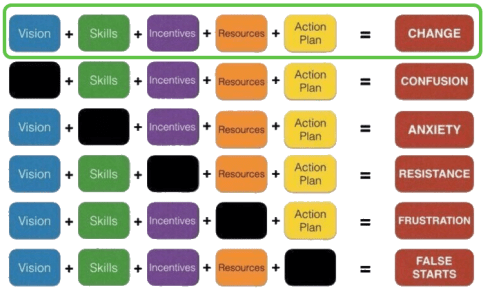

The image above might not be new to you, but it illustrates how companies could manage a complex change.

I will focus only on the first two elements, Vision and Skills, as they are the two elements we as individuals can influence. The other elements are partly related to financial and business constraints.

Vision and Skills are closely related: you can have a fantastic vision, but to realize it, you need a strategy driven by the relevant skills to define and implement it. With the rise of AI, traditional knowledge-based skills will suddenly no longer guarantee future jobs.

AI brings a new dimension for everyone working in a company. To remain relevant, you must develop your unique human skills that make you different from robots or libraries. The importance of human skills might not be new, but now it has become apparent with the explosion of available AI tools.

Look at this 2013 table about predicted skills for the future. You can read the details in the paper The Future of Employment by Carl Benedikt Frey & Michael Osborne (2013).

In my 2015 PLM lectures, I joked when showing this image that my job as a PLM coach was secure, because a PLM coach is a recreational therapist and a firefighter combined.

It has become a reality: many of my coaching engagements nowadays focus on explaining and helping companies formulate and understand their possible path forward, helping them align and develop a vision for progressing in a volatile world. The technology is there; the skills and the vision are often not yet there.

Combining business strategy with in-depth PLM concepts is a relatively unique approach in our domain. Many of my peers have other primary goals, as Rob Ferrone’s article Multi-view. Perspectives that shape PLM explains.

And then there is …..

The Share PLM Summit 2025

Modern times need new types of information building and sharing, and therefore, I am eager to participate in the upcoming Share PLM Summit at the end of May in Jerez (Spain).

See the link to the event here: The Share PLM Summit 2025 – with the theme: Where People Take Center Stage to Drive Human-Centric Transformations in PLM and Lead the Future of Digital Innovation.

In my lecture, I will focus on how humans can participate in/anticipate this digital AI-based transformation. But even more, I look forward to the lectures and discussions with other peers, as more people-centric thought leaders and technology leaders will join us:

Quoting Oleg Shilovitsky:

PLM was built to manage data, but too often, it makes people work for the data instead of working the other way around. At Share PLM Summit 2025, I’ll discuss how PLM must evolve from rigid, siloed systems to intelligent, connected, and people-centric data architectures.

We need both, and I hope to see you at the end of May at this unique PLM conference.

Conclusion

We are at a decisive point in the digital transformation, as AI will challenge people’s skills, knowledge, and existing ways of working. Combined with a turbulent world order, this means we need to be flexible and resilient. Therefore, instead of focusing on current best practices, we need to prepare for the future: a vision developed by skilled people. How will you or your company work on that? Join us if you have questions or ideas.

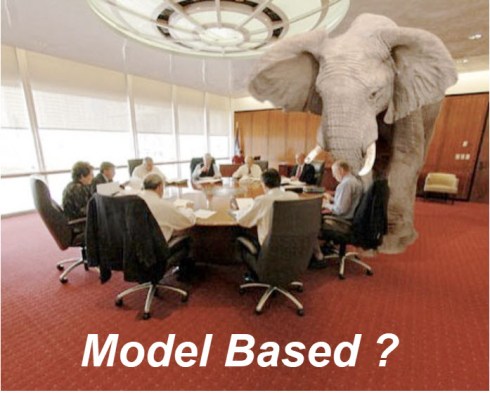

Four years ago, I wrote a series of posts with the common theme: The road to model-based and connected PLM. I discussed the various aspects of model-based and the transition from considering PLM as a system towards considering PLM as a strategy to implement a connected infrastructure.

Since then, a lot has happened. The terminology of Digital Twin and Digital Thread has become better understood. The difference between Coordinated and Connected ways of working has become more apparent. Spoiler: You need both ways. And at this moment, Artificial Intelligence (AI) has become a new hype.

Many current discussions in the PLM domain are about structures and data connectivity, Bills of Materials (BOM), or Bills of Information (BOI), combined with the new term Digital Thread as a Service (DTaaS) introduced by Oleg Shilovitsky and Rob Ferrone. Here, we envision a digitally connected enterprise based on connected services.

A lot can be explored in this direction. Also relevant is Lionel Grealou’s article on Engineering.com, RIP SaaS, long live AI-as-a-service, and the follow-up discussions related to this topic. I chimed in with Data, Processes and AI.

However, we also need to focus on the term model-based or model-driven. When we talk about models today, Large Language Models (LLMs) are the hype, and if you work in the design space, 3D CAD models might be your first association.

There is still confusion in the PLM domain: what do we mean by model-based, and where are we progressing with working model-based?

A topic I want to explore in this post.

It is not only Model-Based Definition (MBD)

Before I started The Road to Model-Based series, there was already the misunderstanding that model-based means 3D CAD model-based. See my post from that time: Model-Based – the confusion.

Model-Based Definition (MBD) is an excellent first step in understanding information continuity, in this case primarily between engineering and manufacturing, where the annotated model is used as the source for manufacturing.

In this way, there is no need for separate 2D drawings with manufacturing details, reducing the extra need to keep the engineering and manufacturing information in sync and, in addition, reducing the chance of misinterpretations.

MBD is common practice in aerospace and, in particular, in the automotive industry. Other industries struggle to introduce MBD, either because the OEM is not ready or willing to share information in a format other than 3D models plus 2D drawings, or because their suppliers consider MBD too complex compared to their current document-driven approach.

In its current practice, we must remember that MBD is part of a coordinated approach.

Companies exchange technical data packages based on potential MBD standards (ASME Y14.47/ISO 16792, but also JT and 3D PDF). It is not yet part of the connected enterprise, but it connects engineering and manufacturing using the 3D model as the core information carrier.

As I wrote, learning to work with MBD is a stepping stone in understanding a modern model-based and data-driven enterprise. See my 2022 post: Why Model-based Definition is important for us all.

To conclude on MBD, Model-based definition is a crucial practice to improve collaboration between engineering, manufacturing, and suppliers, and it might be parallel to collaborative BOM structures.

MBD is also transformational; ChatGPT reports the following benefits:

- Up to 30% faster in product development cycles due to reduced need for 2D drawings and fewer design iterations. Boeing reported a 50% reduction in engineering change requests by using MBD.

- Companies using MBD see a 20–50% reduction in manufacturing errors caused by misinterpretations of 2D drawings. Caterpillar reported a 30% improvement in first-pass yield due to better communication between design and manufacturing teams.

- MBD can reduce product launch time by 20–50% by eliminating bottlenecks related to traditional drawings and manual data entry.

- 20–30% reduction in documentation costs by eliminating or reducing 2D drawings. Up to 60% savings on rework and scrap costs by reducing errors and inconsistencies.

Over five years, Lockheed Martin achieved a $300 million cost savings by implementing MBD across parts of its supply chain.

MBSE is not a silo.

For many people, Model-Based Systems Engineering (MBSE) seems irrelevant to their business, or it is a discipline for a small group of specialists who practice systems engineering not in the traditional document-driven V-shaped approach but in an iterative process that still follows the V-shape, using models along the way to predict and verify assumptions.

And what is its value when connected in a PLM environment?

A quick heads up – what is a model

A model is a simplified representation of a system, process, or concept used to understand, predict, or optimize real-world phenomena. Models can be mathematical, computational, or conceptual.

We need models to:

- Simplify Complexity – Break down intricate systems into manageable components and focus on the main components.

- Make Predictions – Forecast outcomes in science, engineering, and economics by simulating behavior – Large Language Models, Machine Learning.

- Optimize Decisions – Improve efficiency in fields like AI, finance, and logistics by running simulations and finding the best virtual solution to apply.

- Test Hypotheses – Evaluate scenarios without real-world risks or costs, for example, a virtual crash test.

It is important to realize that models are only as accurate as the data they run on: every modeling practice needs base data, be it measurements, formulas, or statistics.

I watched and listened to an interesting podcast in which Jonathan Scott and Pat Coulehan discuss this topic: Bridging MBSE and PLM: Overcoming Challenges in Digital Engineering. If you have time, watch it to grasp the challenges.

The challenge in an MBSE environment is that it is not a single tool with a single version of the truth; rather, it is a federated environment of shared datasets that modeling applications interpret to understand and define the behavior of a product.

In addition, an interesting article from Nicolas Figay might help you understand the value for a broader audience. Read his article: MBSE: Beyond Diagrams – Unlocking Model Intelligence for Computer-Aided Engineering.

Ultimately, and this is the consensus I found at many PLM conferences, we agree that MBSE practices are the foundation for downstream processes and operations.

We need a data-driven modeling environment to implement Digital Twins, which can span multiple systems and diagrams.

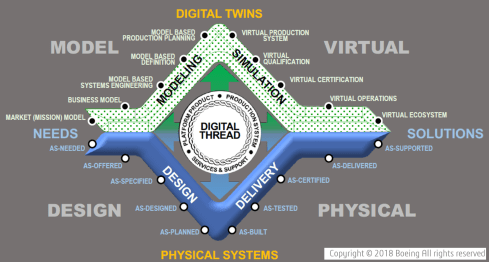

In this context, I like the Boeing diamond presented by Don Farr at the 2018 PLM Roadmap EMEA conference. It is a model view of a system in which data flows through a digital thread between the virtual and the physical world.

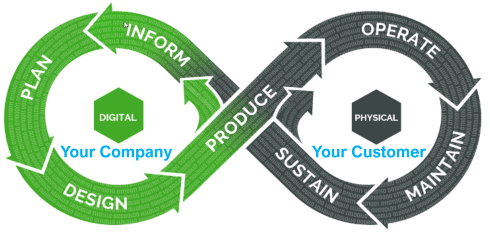

Where this image describes a model-based, data-driven infrastructure to deliver a solution, we can also apply the DevOps approach to the bigger picture for solutions in operation, as depicted by the PTC image below.

Model-based: the foundation of the digital twins

To conclude on MBSE, I hope it is clear why I promote MBSE not only as the environment to conceptualize a solution but also as the foundation for a digital enterprise where information is connected through digital threads and AI models (**new**).

The data borders between traditional system domains will disappear. The paradigm of the single source of change and the nearest source of truth, as well as Prof. Dr. Jörg Fischer’s post The Big Blocks of Future Lifecycle Management, are all about data domains.

However, accessible data from all kinds of modern data sources and tools is necessary to build digital twins, either to simulate and predict a physical solution or to analyze a physical solution and, based on the analysis, adjust the solution or improve your virtual simulations.

Digital Twins at any stage of the product life cycle are crucial to developing and maintaining sustainable solutions, as I discussed in previous lectures. See the image below:

Conclusion

Data quality and architecture are the future of a modern digital enterprise: they are the building blocks. There is a lot of discussion about Artificial Intelligence, but AI will only work when we master the methodology and practices of a data-driven and sustainable approach using models. MBD is not new, and MBSE is perhaps still new; both are building blocks for a model-based approach. Where are you in your lifecycle?

First, I wish you all a prosperous 2025 and hope you will take the time to digest information beyond headlines.

Taking time to digest information is my number one principle now, which means you will see fewer blog posts from my side and potentially more podcast recordings.

My theme for 2025: “It is all about people, data, a sustainable business and a smooth digital transformation”.

Fewer blog posts

Fewer blog posts because, although AI might be a blessing for content writers, the result becomes as exciting as a Wikipedia page. Here, I think differently from Oleg Shilovitsky, whose posts brought innovative thoughts to our PLM community – “Just my thoughts”.

Now Oleg endorses AI, as you can read in his post: PLM in 2025: A new chapter of blogging transformation. I asked ChatGPT to summarize my post in 50 words, and this is the answer I got – it saves you reading the rest:

The author’s 2025 focus emphasizes digesting information deeply, reducing blog posts, and increasing podcast recordings exploring real-life PLM applications. They stress balancing people and data-centric strategies, sustainable digital transformation, AI’s transformative role, and forward-looking concepts like Fusion Strategy. Success requires prioritizing business needs, people, and accurate data to harness AI’s potential.

Summarizing blog posts with AI saves you time. Thinking about AI-generated content, I understand that when you work in marketing, you want to create visibility for your brand or offer.

Do we need a blogging transformation? I am used to browsing through marketing content and then looking for the reality beyond it – facts and figures. Now it will be harder to discover innovative thoughts in this AI-generated domain.

Am I old-fashioned? Time will tell.

More podcast recordings

As I wrote in a recent post, “PLM in real life and Gen AI”, I believe we can learn much from exploring real-life examples. You can always find the theory somewhere, and many of the articles make sense and address common points. Some random examples:

- Top 4 Reasons Why PLM Implementations Fail

- 13 Common PLM Implementation Problems And How to Avoid Them

- 10 steps to a Successful PLM implementation

- 11 Essential Product Lifecycle Management Best Practices for Success

Similar recommendations exist for topics like ERP, MES, CRM or Digital Transformation (one of the most hyped terms).

They all describe WHAT to do or not to do. The challenge, however, is HOW to apply this knowledge in your unique environment, considering people, skills, politics and culture.

With the focus on the HOW, I worked with Helena Gutierrez last year on the Share PLM podcast series 2. In this series, we interviewed successful individuals from various organizations to explore HOW they approached PLM within their companies. Our goal was to gain insights from their experiences, particularly those moments when things didn’t go as planned, as these are often the most valuable learning opportunities.

I am excited to announce that the podcast will continue this year with Series 3! Joining me this season will be Beatriz Gonzales, Share PLM’s co-founder and new CEO. For Series 3, we’ve decided to broaden the scope of our interviews. In addition to featuring professionals working within companies, we’ll also speak with external experts, such as coaches and implementation partners, who support organizations in their PLM journey.

Our goal is to uncover not only best practices from these experts but also insights into emerging “next practices.”

Stay tuned for series 3!

#datacentric or #peoplecentric ?

The title of this paragraph covers topics from the previous paragraphs, and it was also the theme of a recent LinkedIn post from Lionel Grealou: Driving Transformation: Data or People First?

We all agree here that it is not either one or the other, and as the discussion related to the post further clarifies, it is about a business strategy that leads to both of these aspects.

This is the challenge with strategies: a strategy can be excellent on paper, but success comes from the execution.

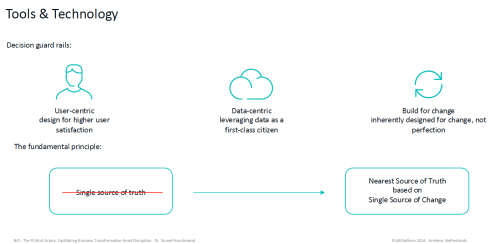

This discussion reminds me of the lecture Yousef Hooshmand gave at the PLM platform in the Netherlands last year – two of his images that could cover the whole debate:

Whatever you implement starts from the user experience, giving the data-centric approach the highest priority and designing the solution for change, meaning avoiding embedded hard-coded ways of working.

While companies strive to standardize processes to provide efficiency and traceability, the processes should be reconfigurable or adaptable when needed, based on reliable data sources.

Jan Bosch shared this last thought too in his Digital Reflection #5: Cog in the Machine. My favorite quote from this reflection:

“However, in a world where change is accelerating, we need to organize ourselves in ways that make it easy to incorporate change and not ulcer-inducing hard. How do we get there?”

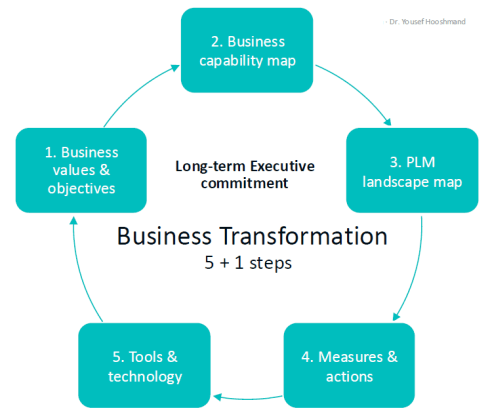

Of course, before we reach tools and technology, the other image Yousef Hooshmand shared below gives a guiding principle that I believe everyone should follow in their context.

It starts with having a C-level long-term commitment when you want to perform a business transformation, and then, in an MVP approach, you start from the business, which will ultimately lead you to the tools and technologies.

The challenge seen in this discussion is that:

most manufacturing companies are still too focused on investing in what they are good at now and do not explore the future enough.

This behavior is why Industry 4.0 is still far from being implemented, and the current German manufacturing industry is in a crisis.

It requires an organization that understands the big picture and has a (fusion) strategy.

Fusion Strategy?

Is the Fusion Strategy the next step, as Steef Klein often mentions in our PLM discussions? The Fusion Strategy, introduced by world-renowned innovation guru Vijay Govindarajan (The Three Box Solution) and digital strategy expert Venkat Venkatraman (Fusion Strategy), offers a roadmap that will help industrial companies combine what they do best – creating physical products – with what digital technology companies do best – capturing and analyzing data through algorithms and AI.

It is a topic I want to explore this year, and I want to see how to connect it to companies in my ecosystem. For them, it is still an unknown phenomenon, as most of them struggle with building a data-driven foundation and the related skills, and with focusing on the right AI applications.

The End of SaaS?

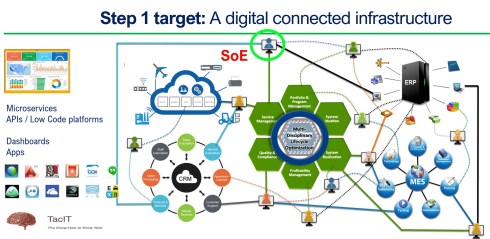

Another potentially interesting trend, also related to AI, that I want to clarify further is the modern enterprise architecture. Over the past two years, we have seen a growing understanding that we should not think in systems connected through interfaces but towards a digitally connected infrastructure where APIs, low-code platforms or standardized interfaces will be responsible for real-time collaboration.

I wrote about these concepts in my PLM Roadmap / PDT Europe review. Look at the section: R-evolutionizing PLM and ERP and Heliple. At that time, I shared the picture below, which illustrates the digital enterprise.

The five platforms depicted in the image (IIoT, CRM, PLM, ERP, MES) are not necessarily single systems. They can be an ecosystem of applications and services providing capabilities in that domain. In modern ways of thinking, each platform could be built upon a SaaS portfolio, ensuring optimal and scalable collaboration based on the company’s needs.

Implementing such an enterprise based on a combination of SaaS offerings might be a strategy for companies to eliminate IT overhead.

However, well-known forward thinkers see it differently, like Vijay Govindarajan and Venkat Venkatraman with their Fusion Strategy. Satya Nadella, CEO of Microsoft, also imagines, instead of connected platforms, a future with an AI layer taking care of the context of the information: the Microsoft Copilot message. Some of his statements:

This transformation is poised to disrupt traditional tools and workflows, paving the way for a new generation of applications.

The business logic is all going to these AI agents. They’re not going to discriminate between what the backend is — they’ll update multiple databases, and all the logic will be in the AI tier.

Software as a Business Weapon?

Interesting thoughts to follow and to combine with this Forbes article, The End Of The SaaS Era: Rethinking Software’s Role In Business by Josipa Majic Predin. She introduces the New Paradigm: Software as a Business Weapon.

Quote:

Instead of focusing solely on selling software subscriptions, innovative companies are using software to enhance and transform existing businesses. The goal is to leverage technology to make certain businesses significantly more valuable, efficient, and competitive.

This approach involves developing software that can improve the operations of “real world” businesses by 20-30% or more. By creating such powerful tools, technology companies can position themselves to acquire or partner with the businesses they’ve enhanced, thereby capturing a larger share of the value they’ve created.

It is interesting to see these thoughts popping up, usually 10 to 20 years before companies adopt them. However, I believe that with AI’s unleashed power, this is where we should be active and learn. It is an exciting area where terms like eBOM or mBOM sound hackneyed.

Sustainability?

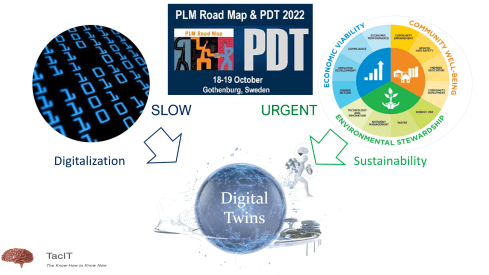

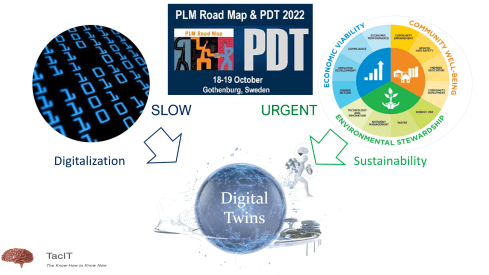

As a PLM Green Global Alliance member, I will continue to explore topics related to PLM and how they can serve sustainability. They are connected, as the image from the 2022 PLM Roadmap/PDT Europe conference indicates:

I will keep on focusing on separate areas within my PGGA network.

Conclusion

I believe 2025 will be the year to focus on understanding the practical applications of AI. Amid the hype and noise, there lies significant potential to re-imagine our PLM landscape and vision. However, success begins with prioritizing the business, empowering people, and ensuring accurate data.

Another year passed, and as usual, I took the time to look back. I always feel that things are going so much slower than expected. But that’s reality – there is always friction, and in particular, in the PLM domain, there is so much legacy we cannot leave behind.

It is better to plan what we can do in 2024 to be prepared for the next steps or, if we are lucky, even start implementing them.

In this post, I will discuss four significant areas of attention (AI – DATA – PEOPLE – SUSTAINABILITY) in alphabetical order, not by priority.

Here are some initial thoughts. In the upcoming weeks I will elaborate further on them and look forward to your input.

AI (Artificial Intelligence)

Where would I be without talking about AI?

When you look at the image below, the Gartner Hype Cycle for AI in 2023, you see the potential coming on the left, with Generative AI at the peak.

Part of the hype comes from the availability of generative AI tools in the public domain, allowing everyone to experiment with them or use them. Some barriers are gone, but what does it mean? Many AI tools can make our lives easier, and they are certainly no threat if our job does not depend on standard practices.

AI and People

When I was teaching physics in high school, the pocket calculator was being introduced, replacing the slide rule. You needed skill to use a slide rule; now there was a device that gave immediate answers. Was this bad for the pupils?

If you do not know the slide rule: its replacement by the pocket calculator was an example of new technology replacing old tools, freeing up time for other details. Read more about the slide rule here on Wikipedia.

Or would you today ask ChatGPT about the slide rule? Does generative AI mean the end of Wikipedia? Or does generative AI need the common knowledge of sites like Wikipedia?

AI can empower people in legacy environments when they work with disconnected systems. AI will be a threat to people and companies that rely on people and processes to bring information together without adding value. These activities will soon disappear, and you must consider using this innovative approach.

During the recent holiday period, there was an interesting discussion about why companies are reluctant to change and implement better solution concepts. Initially launched by Alex Bruskin here on LinkedIn, the debate spilled over into the topic of TECHNICAL DEBT, well addressed here by Lionel Grealou.

Both articles and the related discussions in the comments are worth following and learning from.

AI and Sustainability

Similar to the introduction of Bitcoin using blockchain technology, some people are warning about the vast energy consumption required for training and interaction with Large Language Models (LLM), as Sasha Luccioni explains in her interesting TED talk when addressing sustainability.

She proposes that tech companies be more transparent on this topic, as the size and type of the LLM matter, as the indicative picture below illustrates.

Carbon Emissions of LLMs compared

In addition, I found an interesting article discussing the pros and cons of AI related to Sustainability. The image below from the article Risks and Benefits of Large Language Models for the Environment illustrates nicely that we must start discussing and balancing these topics.

To conclude, in discussing AI related to sustainability, I see the significant advantage of using generative AI for ESG reporting.

ESG reporting is currently a very fragmented activity for organizations, relying on the goodwill of (marketing) people, and these reports are not always evidence-based.

Data

The transformation from a coordinated, document-driven enterprise towards a hybrid coordinated/connected enterprise using a data-driven approach became increasingly visible in 2023. I expect this transformation to grow faster in 2024 – the momentum is here.

We saw last year that the discussions related to Federated PLM nicely converged at the PLM Roadmap / PDT Europe conference in Paris. I shared most of the topics in this post: The week after PLM Roadmap / PDT Europe 2023. In addition, there is now the Heliple Federated PLM LinkedIn group with regular discussions planned.

In addition, if you read here Jan Bosch’s reflection on 2023, he mentions (quote):

… 2023 was the year where many of the companies in the center became serious about the use of data. Whether it is historical analysis, high-frequency data collection during R&D, A/B testing or data pipelines, I notice a remarkable shift from a focus on software to a focus on data. The notion of data as a product, for now predominantly for internal use, is increasingly strong in the companies we work with

I am a big fan of Jan’s posts. Coming from the software world, he describes the same issues we have in the PLM world, except that he does not carry as much hardware legacy and can therefore act faster than we can in the PLM world.

An interesting illustration of the slow pace towards a data-driven environment is the revival of the PLM and ERP integration discussion. Prof. Jörg Fischer and Martin Eigner contributed to the broader debate on a modern enterprise infrastructure, based not on systems (PLM, ERP, MES, …) but on the flow of data through the lifecycle and the organization.

It is a great restart of the debate, showing we should care more about data semantics and the flow of information.

The articles The Future of PLM & ERP: Bridging the Gap. An Epic Battle of Opinions! and Is part master in PLM and ERP equal or not, combined with the comments on these posts, are a must-read to follow this change towards a more connected flow of information.

While I was writing this post, Andreas Lindenthal expanded the discussion with his post: PLM and Configuration Management Best Practices: Part Traceability and Revisions. Again, thanks to data-driven approaches, support is extending to the entire product lifecycle. Product Lifecycle Management, Configuration Management and AIM (Asset Information Management) have come together.

PLM and CM are overlapping more and more, as I discussed some time ago with Martijn Dullaart, Maxime Gravel and Lisa Fenwick in The future of Configuration Management. This topic will be “hot” in 2024.

People

From the people’s perspective towards AI, DATA and SUSTAINABILITY, there is a noticeable divide between generations. Of course, for the sake of the article, I am generalizing, assuming most people do not like to change their habits or want to reprogram themselves.

Unfortunately, we have to adapt our skills as our environment changes. Most of my generation was brought up with the single-source-of-truth idea, documented and supported by scientific papers.

In my terminology, information processing takes place in our head by combining all the information we learned or collected through documents/books/newspapers – the coordinated approach.

For people living in this mindset, AI can become a significant threat, as their brain is no longer needed to make a judgment, and they are not used to distinguishing between facts and fake news, as they were never trained to do so.

The same is valid for practices like the model-based approach, working data-centric, or considering sustainability. It is not in the DNA of the older generations and, therefore, hard to change.

The older generation is mostly part of an organization’s higher management, so we are returning to the technical debt discussion.

Later generations that grew up as digital natives are used to almost real-time interaction, and when applied consistently in a digital enterprise, people will benefit from the information available to them in a rich context – in my terminology – the connected approach.

AI is a blessing for people living in this mindset as they do not need to use old-fashioned methods to acquire information.

“Let ChatGPT write my essay.”

However, their challenge could be what I would call “processing time”. Just because data is available does not mean it is the correct information. For that reason, it remains important to spend time digesting the impact of the information you are reading: don’t click “Like” based on the title; read the full article and then decide.

Experience is what you get when you don’t get what you expect.

In other words, you only become experienced if you learn from failures.

Sustainability

Unfortunately, sustainability is not only the last topic in alphabetical order; as the image below shows, discussions related to sustainability are currently in slight decline at C-level.

I see the same in my engagements when discussing sustainability with the companies I interact with.

The PLM software and services providers are all on a trajectory of providing tools and an infrastructure to support a transition to a more circular economy and better traceability of materials and carbon emissions.

In the PLM Global Green Alliance, we talked with Aras, Autodesk, Dassault Systems, PTC, SAP, Sustaira, TTPSC (Green PLM) and more to come in 2024. The solution offerings in the PLM domain are available to get started; now it is up to the people and processes.

For sure, AI tools will help companies better understand their sustainability efforts. As mentioned before, AI could help companies understand their environmental impact and build more accurate ESG reports.

Next, being DATA-driven will be crucial, as discussed during the latest PLM Roadmap/PDT Europe conference: The Need for a Governance Digital Thread.

And regarding PEOPLE, the good news is that younger generations want to take care of their future. They are in a position to choose the company to work for or influence companies by their consumer behavior. Unfortunately, climate disasters will remind us continuously in the upcoming decades that we are in a critical phase.

With the PLM Global Green Alliance, we strive to bring people together with a PLM mindset, sharing news and information on how to move forward to a sustainable future.

Mark Reisig (CIMdata – moderator for Sustainability & Energy) and Patrice Quencez (CIMPA – moderator for the Circular Economy) joined the PGGA last year, and you will see their input this year.

Conclusion

As you can see from this long post, there is so much to learn. The topics described are all current, and each requires education and experience (successes & failures), combined with an understanding of the technology concepts. Make sure you consider all of them, as focusing on a single topic will not make you move forward faster: they are all related. Please share your experiences this year. Happy New Year of Learning!
