Those who have been following my blog posts over the past two years may have discovered that I consistently use the terms “System of Engagement” and “System of Record” in the context of a Coordinated and Connected PLM infrastructure.

Understanding the distinction between ‘System of Engagement’ and ‘System of Record’ is crucial for comprehending the type of collaboration and business purpose in a PLM infrastructure. When explored in depth, these terms will reveal the underlying technology.

The concept

A year ago, I had an initial discussion with three representatives of a typical system of engagement. I spoke with Andre Wegner from Authentise, MJ Smith from CoLab and Oleg Shilovitsky from OpenBOM. You can read and see the interview here: The new side of PLM? Systems of Engagement!

As a follow-up, I had a more detailed interview with Taylor Young, the Chief Strategy Officer of CoLab, early this year.

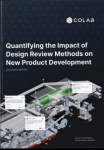

CoLab introduced the term Design Engagement System (DES), a new TLA. Based on a survey among 250 global engineering leaders, we discussed the business impact and value of their DES System of Engagement.

You can read the discussion here: Where traditional PLM fails.

The business benefits

I like that CoLab’s external messaging focuses on the business capabilities and opportunities, which reminded me of the old Steve Jobs recording: Don’t talk about the product!

There are so many discussions on LinkedIn about the usage of various technologies and concepts without a connection to the business. I’ll let you explore and decide.

It’s worth noting that while the ‘System of Engagement’ offers significant business benefits, it’s not a standalone solution. The right technology is crucial for translating these benefits into tangible business results.

This was a key takeaway from my follow-up discussion with MJ Smith, CMO at CoLab, about the difference between Configuration and Customization.

Why configurability?

Hello MJ, it has been a while since we spoke, and this time, I am curious to learn how CoLab fits in an enterprise PLM infrastructure, zooming in on the aspects of configuration and customization.

Using configurability, we can make a smaller number of features work for more use cases or business processes. Users do not want to learn and adopt many different features, and a system of engagement should make it easy to participate in a business process, even for infrequent or irregular users.

In design review, this means cross-functional teams and suppliers who are not the core users of CAD or PLM.

I agree, and for that reason, we see the discussion of Systems of Record (not user-friendly and working in a coordinated mode) and Systems of Engagement (focus on the end-user and working in a connected mode). How do you differentiate with CoLab?

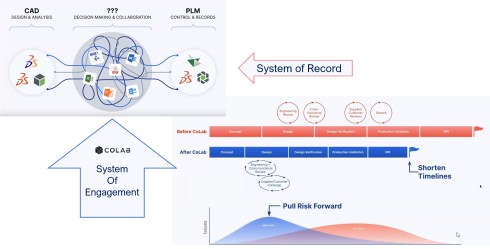

From a technology perspective, as a System of Engagement, CoLab wants to eliminate complex, multi-step workflows that require users to navigate between 5-10+ different point solutions to complete a review.

For example:

- SharePoint for sending data

- CAD viewers for interrogating models

- PowerPoint for documenting markups – using screenshots

- Email or Teams meetings for discussing issues

- Spreadsheets for issue tracking

- Traditional PLM systems for consolidation

As mentioned before, the participants can be infrequent or irregular users from different companies. This gap exists today, with only 20% of suppliers and 49% of cross-functional teams providing valuable design feedback (see the 2023 report here). To prevent errors and increase design quality, NPD teams must capture helpful feedback from these SMEs, many of whom only participate in 2 to 3 design reviews each year.

Configuration and Customization

Back to the interaction between the System of Engagement (CoLab) and Systems of Record, in this case, probably the traditional PLM system. I think it is important to define the differences between Configuration and Customization first.

These would be my definitions:

- Configuration involves setting up standard options and features in software to meet specific needs without altering the code, such as adjusting settings or using built-in tools.

- Customization involves modifying the software’s code or adding new features to tailor it more precisely to unique requirements, which can include creating custom scripts, plugins, or changes to the user interface.

Both configuration and customization activities can be complex depending on the system we are discussing.
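To make the two definitions more tangible, here is a minimal Python sketch. It assumes a purely hypothetical `ReviewTool` with two built-in options and a plugin hook. None of these names come from CoLab or any PLM product; they only illustrate where configuration ends and customization begins.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ReviewTool:
    """Hypothetical review tool, for illustration only."""

    # Configuration: built-in options, changed without altering code.
    settings: dict = field(default_factory=lambda: {
        "require_signoff": False,
        "allowed_file_types": ["step", "pdf"],
    })
    # Customization: extension points where customer-specific code is added.
    plugins: list[Callable[[dict], dict]] = field(default_factory=list)

    def configure(self, **options) -> None:
        # Only options the vendor already ships can be configured.
        unknown = set(options) - set(self.settings)
        if unknown:
            raise KeyError(f"not a built-in option: {sorted(unknown)}")
        self.settings.update(options)

    def customize(self, plugin: Callable[[dict], dict]) -> None:
        # New behavior that the standard product does not offer.
        self.plugins.append(plugin)

    def process(self, review: dict) -> dict:
        result = dict(review)
        result["needs_signoff"] = self.settings["require_signoff"]
        for plugin in self.plugins:
            result = plugin(result)
        return result


def add_supplier_tag(review: dict) -> dict:
    # Example of custom code written for one customer.
    return {**review, "supplier_review": True}


tool = ReviewTool()
tool.configure(require_signoff=True)   # configuration
tool.customize(add_supplier_tag)       # customization
print(tool.process({"part": "bracket"}))
```

In this sketch, a customization that proves broadly useful could later be promoted to a key in `settings`, which is one way to read the remark that customizations can become configurable features.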

It’s also interesting to consider how configurability and customization can go hand in hand. What starts as a customization for one customer could become a configurable feature later.

For software providers like CoLab, the key is to stay close to your customers so that you can understand the difference between a niche use case – where customization may be the best solution – vs. something that could be broadly applicable.

In my definition of customization, I first thought of connecting to the various PLM and CAD systems. Are these interfaces standardized, or are they open to configuration and customization?

CoLab offers out-of-the-box integrations with PLM systems, including Windchill, Teamcenter, and 3DX Enovia. By integrating PLM with CoLab, companies can share files straight from PLM to CoLab without having to export or convert to a neutral format like STP.

By sharing CAD from PLM to CoLab, companies make it possible for non-PLM users, inside the company and outside (e.g., suppliers, customers), to participate in design reviews. This use case is an excellent example of how a system of engagement can be used as the connection point between two companies, each with its own system of record.

CoLab can also send data back to PLM. For example, you can see whether there is an open review on a part from within Windchill PLM and how many unresolved comments exist on a file shared with CoLab from PLM. Right now, there are some configurable aspects to our integrations – such as file-sharing controls for Windchill users.

We plan to invest more in the configurability of the PLM to DES interface. We will also invest in our REST API, which customers can use to build custom integrations if they like, instead of using our OOTB integrations.

To get an impression, look at this 90-second demo of CoLab’s Windchill integration.

IP security is a topic that always comes up when companies interact with each other, particularly in a connected mode. Can you tell us more about how CoLab deals with IP protection?

CoLab has enterprise customers, like Schaeffler, implementing attribute-based access controls so that users can only access files in CoLab that they would otherwise have access to in Windchill.

We also have customers who integrate CoLab with their ERP system to auto-provision guest accounts for suppliers so they can participate in design reviews.

This means that the OEM is responsible for identifying which data is shared within CoLab. I am curious: Are these kinds of IP-sharing activities standardized because you have a configurable interface to the PLM/ERP, or is this still a customization?

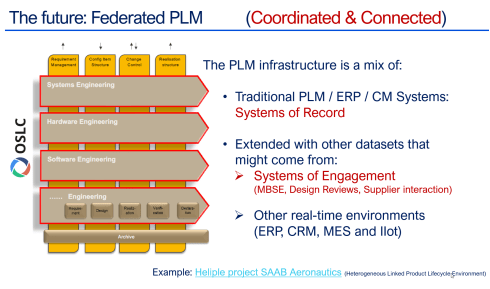

I am referring to this point in the Federated PLM Interest Group. We discuss using OSLC as one of the connecting interfaces between the System of Record and the System of Engagement (Modules)—it’s still in the early days, as you can read in this article—but we see encouraging similar results. Is this a topic of attention for CoLab, too?

The interface between CoLab and PLM is the same for every customer (not custom) but can be configured with attribute-based access controls. End users who have access must explicitly share files. Further access controls can also be put in place on the CoLab side to protect IP.
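As a rough illustration of what such an attribute-based check could look like, here is a minimal Python sketch. The `User` and `SharedFile` types, the attribute strings, and the `can_access` rule are all assumptions made for this example; they do not describe CoLab's or Windchill's actual implementation. The sketch combines the two conditions mentioned in the answer: a file must be explicitly shared, and the user must hold the attributes that the system of record would require.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    attributes: frozenset  # e.g. {"project:alpha", "role:supplier"} (hypothetical)


@dataclass(frozen=True)
class SharedFile:
    name: str
    required_attributes: frozenset  # assumed to be mirrored from the system of record
    shared_with: frozenset          # users the file was explicitly shared with


def can_access(user: User, file: SharedFile) -> bool:
    """Grant access only if the file was explicitly shared with the user
    AND the user holds every attribute the file requires."""
    explicitly_shared = user.name in file.shared_with
    has_attributes = file.required_attributes <= user.attributes
    return explicitly_shared and has_attributes


housing = SharedFile(
    name="housing.step",
    required_attributes=frozenset({"project:alpha"}),
    shared_with=frozenset({"anna", "supplier-bob"}),
)
anna = User("anna", frozenset({"project:alpha", "role:engineer"}))
bob = User("supplier-bob", frozenset({"role:supplier"}))

print(can_access(anna, housing))  # shared and attributes match
print(can_access(bob, housing))   # shared, but lacks the project attribute
```

The point of the sketch is that the rule itself stays identical for every customer; only the attribute values differ, which is what makes it a configuration rather than a customization.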

We are taking a similar approach to integrating as outlined by OSLC. The OSLC concept is interesting to us, as it appears to provide a framework for better-supporting concepts such as versions and variants. The interface delegation concepts are also of interest.

Conclusion

It was great to dive deeper into the complementary value of CoLab as a typical System of Engagement. Their customers are end-users who want to collaborate efficiently during design reviews. By letting their customers work in a dedicated but connected environment, they are released from working in a traditional, more administrative PLM system.

Interfacing between these two environments will be an interesting topic to follow in the future. Will it be, for example, OSLC-based, or do you see other candidates to standardize?

Two weeks ago, I shared my first post about PDM/PLM migration challenges on LinkedIn: How to avoid Migration Migraine – part 1. Most of the content discussed was about data migrations.

The topics ranged from moving data stored in relational databases to modern object-oriented environments (the technology upgrade) to the challenges a company can have when merging different data siloes (CAD and BOM related) into a single PLM backbone to extend the support of product data beyond engineering.

Luckily, the post generated a lot of reactions and feedback through LinkedIn and personal interactions last week.

The amount of interaction illustrated the relevance of the topic for people; they recognized the elephant in the room, too.

Working with a partner

Data migrations and consolidation are typically not part of a company’s core business, so it is crucial to find the right partner for a migration project. The challenge with migrations is that there is potentially a lot to do technically, but only your staff can assess the quality and value of migrations.

Therefore, when planning a migration, make sure you work on it iteratively with an experienced partner who can provide a set of tools and best practices. Often, vendors or service partners have migration tools that still need to be tuned to your As-Is and To-Be environment.

To get an impression of what a PLM service partner can do and which topics or tools are relevant in the context of mid-market PLM, you can watch this xLM webinar on YouTube. So make sure you select a partner who is familiar with your PDM/PLM infrastructure and who has the experience to assess complexity.

Migration lessons learned

In my PLM coaching career, I have seen many migrations. In the early days, they were more related to technology upgrades, consolidation of data and system replacements. Nowadays, the challenges are more related to becoming more data-driven. Here are 5 lessons that I learned in the past twenty years:

- A fixed price for the migration can be a significant risk as the quality of the data and the result are hard to comprehend upfront. In case of a fixed price, either you would pay for the moon (taking all the risk), or your service partner would lose a lot of money. In a sustainable business model, there should be no losers.

- Start (even now) with checking and fixing your data quality. For example, when you are aware of a mismatch between CAD assemblies and BOM data, analyze and fix discrepancies even before the migration.

- One immediate action to take when moving from CAD assemblies to BOM structures is to check or fill the properties in the CAD system to support a smooth transition. Filling properties might be a temporary action, as later, when becoming more data-driven, some of these properties, e.g., material properties or manufacturer part numbers, should not be maintained in the CAD system anymore. However, they might help migration tools to extract a richer dataset.

- Focus on implementing an environment ready for the future. Don’t let your past data quality add complexity to the new environment. Instead, learn to live with legacy issues that will be fixed only when needed. A 100% matching migration is not likely to happen because the source data might also be incorrect, even after further analysis.

- The product should probably not be configured in the CAD environment, even though the CAD tool allows it. I had this experience with SolidWorks in the past. PDM became the enemy because the users managed all configuration options in the assembly files, making it hard to use them on the BOM or Product level (the connected digital thread).
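The second lesson, checking for mismatches between CAD assemblies and BOM data before the migration, can be sketched in a few lines of Python. This is only a sketch under simple assumptions: the CAD assembly is given as a flat list of part numbers (one entry per occurrence) and the BOM as `(part_number, quantity)` pairs. Real exports from a CAD or PDM system will look different, and the function name `compare_cad_to_bom` is hypothetical.

```python
from collections import Counter


def compare_cad_to_bom(cad_components, bom_lines):
    """Return the part numbers whose quantities differ between a CAD
    assembly and a BOM, including parts missing on either side."""
    cad = Counter(cad_components)  # part number -> occurrences in the assembly
    bom = Counter()
    for part_number, quantity in bom_lines:
        bom[part_number] += quantity

    report = {}
    for part in sorted(set(cad) | set(bom)):
        # A Counter returns 0 for parts it has never seen.
        if cad[part] != bom[part]:
            report[part] = {"cad": cad[part], "bom": bom[part]}
    return report


cad_assembly = ["P-100", "P-100", "P-200", "P-300"]
bom = [("P-100", 2), ("P-200", 3), ("P-400", 1)]

for part, counts in compare_cad_to_bom(cad_assembly, bom).items():
    print(part, counts)
```

A report like this does not fix anything by itself, but it gives your own staff, who are the only ones able to judge the data, a concrete list to work through before the migration starts.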

The future is data-driven

In addition, these migration discussions made me aware again that so many companies are still in the early phases of creating a unified PLM infrastructure in their company and implementing the coordinated approach – an observation I shared in my report on the PDSFORUM 2024 conference.

Due to sustainability-related regulations and the need to understand product behavior in the field (Digital Twin / Product As A Service), becoming data-driven is an unavoidable target in the near future. Implementing a connected digital thread is crucial to remaining competitive and sustainable in business.

However, the first step is to gain insights about the available data (formats and systems) and its quality. Therefore, implementing a coordinated PLM backbone should immediately contain activities to improve data quality and implement a data governance policy to avoid upcoming migration issues.

Data-driven environments, the Systems of Engagement, bring the most value when connected through a digital thread with the Systems of Record (PLM, ERP and others). Therefore, design your processes, even current ones, to be user-centric, data-centric and built for change (see Yousef Hooshmand’s story in this post – also image below).

The data-driven Future is not a migration.

The last part of this article will focus on what I believe is a future PLM architecture for companies. To be more precise, it is not only a PLM architecture anymore. It should become a business architecture based on connected platforms (the systems of record) and inter-platform connected value streams (the systems of engagement).

The discussion is ongoing, and from the technical and business side, I recommend reading Prof Dr. Jorg Fischer’s recent articles, for example, The Crisis of Digitalization – Why We All Must Change Our Mindset! or The MBOM is the Steering Wheel of the Digital Supply Chain! A lot of academic work has been done in the context of Teamcenter and SAP.

Also, Martin Eigner recently described in The Constant Conflict Between PLM and ERP a potential digital future of enterprise within the constraints of existing legacy systems.

In my terminology, they are describing a hybrid enterprise dominated by major Systems of Record complemented by Systems of Engagement to support optimized digital value streams.

Whereas Oleg Shilovitsky, coming from the System of Engagement side with OpenBOM, describes the potential technologies to build a digital enterprise as you can read from one of his recent posts: How to Unlock the Future of Manufacturing by Opening PLM/ERP to Connect Processes and Optimize Decision Support.

All three thought leaders talk about the potential of connected aspects in a future enterprise. For those interested in the details there is a lot to learn and understand.

For the sake of the migration story, I will stay out of the details. However, it is interesting to note that they also do not mention data migration. Is it the elephant in the room?

I believe moving from a coordinated enterprise to an integrated (coordinated and connected) enterprise is not a migration, as we are no longer talking about a single system that serves the whole enterprise.

The future of a digital enterprise is a federated environment where existing systems need to become more data-driven, and additional collaboration environments will have their internally connected capabilities to support value streams.

With this in mind, you can understand the 2017 McKinsey article – Our insights/toward an integrated technology operating model – with its leading image below:

And when it comes to realization of such a concept, I have described the Heliple-2 project a few times before as an example of such an environment, where the target is to have a connection between the two layers through standardized interfaces, starting from OSLC. Or visit the Heliple Federated PLM LinkedIn group.

Data architecture and governance are crucial.

The image above generalizes the federated PLM concept and illustrates the two different systems connected through data bridges. As data must flow between the two sides without human intervention, the chosen architecture must be well-defined.

Here, I want to use a famous quote from Yousef Hooshmand’s paper From a Monolithic PLM Landscape to a Federated Domain and Data Mesh. Click on the image to listen to the Share PLM podcast with Yousef.

From a Single Source of Truth towards a principle of the Nearest Source of Truth based on a Single Source of Change

- If you agree with this quote, you have a future mindset of federated PLM.

- If you still advocate the Single Source of Truth, you are still in the Monolithic PLM phase.

It’s not a problem if you are aware that the next step should be federated and you are not ready yet.

However, environmental regulations and sustainability initiatives, in particular, can only be performed in data-driven, federated environments. Think about the European Green Deal with its upcoming Ecodesign for Sustainable Products Regulation (ESPR), which demands digital traceability of products, their environmental impact, and reuse/recycle options, expressed in the Digital Product Passport.

Reporting, Greenhouse Gas Reporting and ESG reporting are becoming more and more mandatory for companies, either by regulations or by the customers. Only a data-driven connected infrastructure can deal with this efficiently. Sustaira, a company we interviewed with the PLM Green Global Alliance last year, delivers such a connected infrastructure.

Read the challenges they meet in their blog post: Is inaccurate sustainability data holding you back?

Finally, to perform Life Cycle Assessments for design options or Life Cycle Analyses for operational products, you need connections to data sources in real-time. The virtual design twin or the digital twin in operation does not run on documents.

Conclusion

Data migration and consolidation to modern systems is probably a painful and challenging process. However, the good news is that with the right mindset to continue and with a focus on data quality and governance, the next step to an integrated coordinated and connected enterprise will not be that painful. It can be an evolutionary process, as the McKinsey article describes it.

One year ago, I wrote the post: Time to Split PLM, which reflected a noticeable trend in 2022 and 2023.

If you still pursue the Single Source of Truth or think that PLM should be supported by a single system, the best you can buy, then you are living in the past.

It is now the time to move from a Monolithic PLM Landscape to a Federated Domain and Data Mesh (Yousef Hooshmand) or the Heliple Federated PLM project (Erik Herzog) – you may have read about these concepts.

When moving from a traditional coordinated, document-driven business towards a modern, connected, and data-driven business, there is a paradigm shift. In many situations, we still need the document-driven approach to manage baselines for governance and traceability, where we create the required truth for manufacturing, compliance, and configuration management.

However, we also need an infrastructure for multidisciplinary collaboration nowadays. Working with systems, a combination of hardware and software, requires a model-based approach and multidisciplinary collaboration. This infrastructure needs to be data-driven to remain competitive despite more complexity, connecting stakeholders along value streams.

Traditional PLM vendors still push all functionality into one system, often leading to frustration among the end-users, complaining about lack of usability, bureaucracy, and the challenge of connecting to external stakeholders, like customers, suppliers, design or service partners.

Systems of Engagement

It is in modern PLM infrastructures where I started the positioning of Systems of Record (the traditional enterprise siloes – PDM/PLM, ERP, CM) and the Systems of Engagement (modern environments designed for close to real-time collaboration between stakeholders within a domain/value stream). In the Heliple project (image below), the Systems of Record are the vertical bars, and the Systems of Engagement are the horizontal bars.

The power of a System of Engagement is the data-driven connection between stakeholders, even when working in different enterprises. Last year, I had a discussion with Andre Wegner from Authentise, MJ Smith from CoLab, and Oleg Shilovitsky from OpenBOM.

They all focus on modern, data-driven, multidisciplinary collaboration. You can find the discussion here: The new side of PLM? Systems of Engagement!

Where is the money?

Business leaders usually are not interested in a technology or architectural discussion, as it involves too many details and too much complexity; they look for the business case. Look at this recent post and comments on LinkedIn – “When you try to explain PLM to your C-suite, and they just don’t get it.”

It is hard to build evidence for the need for systems of engagement, as the concepts are relatively new and experiences from the field are bubbling up slowly. With the Heliple team, we are now working on building the business case for Federated PLM in the context of the Heliple project scope.

Therefore, I was excited to read the results of this survey: Quantifying the impact of design review methods on NPD, a survey among 250 global engineering leaders initiated by CoLab.

CoLab is one of those companies that focus on providing a System of Engagement, and their scope is design collaboration. In this post, I am discussing the findings of this survey with Taylor Young, Chief Strategy Officer of CoLab.

CoLab – the company / the mission

Taylor, thanks for helping me explain the complementary value of CoLab based on some of the key findings from the survey. But first of all, can you briefly introduce CoLab as a company and the unique value you are offering to your clients?

Hi Jos, CoLab is a Design Engagement System – we exist to help engineering teams make design decisions.

Product decision-making has never been more challenging, or more essential to get right. That’s why we built CoLab. In today’s world of product complexity, excellent decision-making requires specialized expertise. That means decision-making is no longer just about people engaging with product data – it’s about people engaging with other people.

PLM provides a strong foundation where product data is controlled (and therefore reliable). But PLM has a rigid architecture that’s optimized for data (and for human-to-data interaction). To deal with increased complexity in product design, engineers now need a system that’s built for human-to-human interaction, complementary to PLM.

CoLab allows you to interrogate a rich dataset, even with an extended team outside your company borders, in real-time or asynchronously. With CoLab, decisions are made with context, input from the right people, and as early as possible in the process. Reviews and decision-making get tracked automatically and can be synced back to PLM. Engineers can do what they do best, and CoLab will support them by documenting everything in the background.

Design Review Quality

It is known that late-stage design errors are very costly, both impacting product launches and profitability. The report shows design review quality has been rated as the #1 most important factor affecting an engineering team’s ability to deliver projects on time.

Is it a severe problem for companies, and what are they trying to do to improve the quality of design reviews? Can you quantify the problem?

Our survey report demonstrated that late-stage design errors delay product launches for 90% of companies. The impact varies significantly from organization to organization, but we know that for large manufacturing companies, just one late-stage design error can be a six to seven-figure problem.

There are a few factors that lead to a “quality” design review – some of the most important ones we see leading manufacturing companies doing differently are:

- Who they include – the highest performing teams include manufacturing, suppliers, and customers within the proper context.

- When they happen – the highest performing teams focus on getting CAD to these stakeholders early in the process (during detailed design) and paralleling these processes (i.e., they don’t wait for one group to sign off before getting early info to the next)

- Rethinking the Design Review meeting – the highest performing teams aren’t having issue-generation meetings – they have problem-solving meetings. Feedback is collected from a broad audience upfront, and meetings are used to solve nuanced problems – not to get familiar with the project for the first time.

Multidisciplinary collaboration

An interesting observation is that providing feedback to engineers mainly comes from peers or within the company. Having suppliers or customers involved seems to be very difficult. Why do you think this is happening, and how can we improve their contribution?

When we talk to manufacturing companies about “collaboration” or how engineers engage with other engineers – however good or bad the processes are internally, it almost always is significantly more challenging/less effective when they go external. External teams often use different CAD systems, work in different time zones, speak other first languages, and have varying levels of access to core engineering information.

However, as we can read from the HBR article What the Most Productive Companies Do Differently, we know that the most productive manufacturing companies “collaborate with suppliers and customers to form new ecosystems that benefit from agglomeration effects and create shared pools of value”.

They leverage the expertise of their suppliers and customers to do things more effectively. But manufacturing companies struggle to create engaging, high-value, external collaboration and ‘co-design’ without the tools purpose-built for person-to-person external communication.

Traceability and PLM

One of the findings is that keeping track of the feedback and design issues is failing in companies. One of my recommendations from the past was to integrate Issue management into your PLM systems – why is this not working?

We believe that the task of completing a design review and the task of documenting the output of that review should not be two separate efforts. If we are to reduce the amount of time engineers spend on admin work and decrease the number of issues that are never tracked or documented (43%, according to our survey), we need to introduce a purpose-built, engaging design review system that is self-documenting.

It is crucial for the quality of design reviews that issues aren’t tracked in a separate system from where they are raised/developed, but that instead, they are automatically tracked just by doing the work.
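
To make the "self-documenting" idea concrete, here is a minimal sketch in Python. It is not CoLab's data model or API: the `DesignReview` and `Issue` classes, the part number and the `export_for_plm` method are all hypothetical names chosen for illustration. The only point it tries to show is that raising feedback and recording it can be one and the same action.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Issue:
    author: str
    part: str
    comment: str
    raised_at: datetime
    resolved: bool = False


@dataclass
class DesignReview:
    """Hypothetical review session (an assumption, not a vendor data model)."""
    title: str
    issues: list = field(default_factory=list)

    def raise_issue(self, author, part, comment):
        # Raising feedback and tracking it happen in the same call,
        # so no separate documentation step is needed afterwards.
        issue = Issue(author, part, comment, datetime.now(timezone.utc))
        self.issues.append(issue)
        return issue

    def resolve(self, issue):
        issue.resolved = True

    def export_for_plm(self):
        # Plain records that could later be synced to a System of Record.
        return [
            {
                "part": i.part,
                "author": i.author,
                "comment": i.comment,
                "status": "closed" if i.resolved else "open",
            }
            for i in self.issues
        ]


review = DesignReview("Bracket rev B")
first = review.raise_issue("supplier", "BRKT-001", "Wall too thin for casting")
review.raise_issue("manufacturing", "BRKT-001", "Add draft angle")
review.resolve(first)
records = review.export_for_plm()
```

In this sketch nothing is ever typed into a second system: the list returned by `export_for_plm` is simply a by-product of the review itself.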

Learn More?

Below is the recording of a recent webinar, where Taylor said that your PLM alone isn’t enough: Why you need a Design Engagement System during product development.

- A traditional PLM system is the system of record for product data – from ideation through sustaining engineering.

- However, one set of critical data never makes it to the PLM. For many manufacturing companies today, design feedback and decisions live almost exclusively in emails, spreadsheets, and PowerPoint decks. At the same time, 90% of engineering teams state that product launches are delayed due to late-stage changes.

- Engineering teams need to implement a true Design Engagement System (DES) for more effective product development and a more holistic digital thread.

Conclusion

Traditional PLM systems have always been used to provide quality and data governance along the product lifecycle. However, most end users dislike the PLM system as it is a bureaucratic overhead to their ways of working. CoLab, with its DES solution, provides a System of Engagement focusing on design reviews, speed, and quality of multidisciplinary collaboration complementary to the PLM System of Record – a modern example of how digitization is transforming the PLM domain.

This year started for me with a discussion related to federated PLM. A topic that I highlighted as one of the imminent trends of 2022. A topic relevant for PLM consultants and implementers. If you are working in a company struggling with PLM, this topic might be hard to introduce in your company.

Before going into the discussion’s topics and arguments, let’s first describe the historical context.

The traditional PLM frame.

Historically, PLM has been framed first as a system for engineering to manage their product data. So you could call it PDM first. After that, PLM systems were introduced and used to provide access to product data, upstream and downstream. The most common usage was the relation with manufacturing, leading to EBOM and MBOM discussions.

IT landscape simplification often led to an infrastructure of siloed solutions – PLM, ERP, CRM and later, MES. IT was driving the standardization of systems and defining interfaces between systems. System capabilities were leading, not the flow of information.

As many companies are still in this stage, I would call it PLM 1.0.

PLM 1.0 systems serve mainly as a System of Record for the organization, where disciplines consolidate their data in a given context, the Bills of Information. The Bill of Information then is again the place to connect specification documents, i.e., CAD models, drawings and other documents, providing a Digital Thread.

The actual engineering work is done with specialized tools, MCAD/ECAD, CAE, Simulation, Planning tools and more. Therefore, each person could work in their discipline-specific environment and synchronize their data to the PLM system in a coordinated manner.

However, this interaction is not easy for some of the end-users. For example, the usability of CAD integrations with the PLM system is constantly debated.

Many of my implementation discussions with customers were in this context. For example, if your products are relatively simple, or your company is relatively small, the opinion is that the System of Record approach is overkill.

That’s why many small and medium enterprises do not see the value of a PLM backbone.

This may have been true until recently. However, the threats to this approach are digitization and regulations.

Customers, partners, and regulators all expect more accurate and fast responses on specific issues, preferably instantly. In addition, sustainability regulations might push your company to implement a System of Record.

PLM as a business strategy

For the past fifteen years, we have discussed PLM more as a business strategy implemented with business systems and an infrastructure designed for sharing. Therefore, I choose these words carefully to avoid overhanging the expression: PLM as a business strategy.

The reason for this prudence is that, in reality, I have seen many PLM implementations fail due to the ambiguity of PLM as a system or strategy. Many enterprises have previously selected a preferred PLM Vendor solution as a starting point for their “PLM strategy”.

One of the most neglected best practices.

In reality, this means there was no strategy but a hope that with this impressive set of product demos, the company would find a way to support its business needs. Instead of people, process and then tools to implement the strategy, most of the time, it was starting with the tools trying to implement the processes and transform the people. That is not really the definition of business transformation.

In my opinion, this is happening because, at the management level, decisions are made based on financials.

Developing a PLM-related business strategy requires management understanding and involvement at all levels of the organization.

This is often not the case; the middle management has to solve the connection between the strategy and the execution. By design, however, the middle management will not restructure the organization. By design, they will collect the inputs from the end users.

And it is clear what end users want – no disruption in their comfortable way of working.

Halfway conclusion:

Rebranding PLM as a business strategy has not really changed the way companies work. PLM systems remain a System of Record mainly for governance and traceability.

To understand the situation in your company, look at who is responsible for PLM.

- If IT is responsible, then most likely, PLM is not considered a business strategy but more an infrastructure.

- If engineering is responsible for PLM, then you are still in the early days of PLM: an engineering tool to be consulted by others upstream or downstream.

Only when PLM accountability sits at the upper management level might it be a business strategy (assuming upper management understands the details).

Connected is the game changer

Connecting all stakeholders in an engagement has been a game changer in the world. With the introduction of platforms and the smartphone as a connected device, consumers could suddenly benefit from direct responses to desired service requests (Spotify, iTunes, Uber, Amazon, Airbnb, Booking, Netflix, …).

The business change: connecting all stakeholders in real time to deliver rapid results.

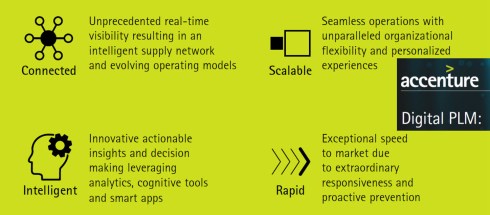

The question was: what would be the game changer in PLM? The image below describes the 2014 Accenture description of digital PLM and its potential benefits.

Is connected PLM a utopia?

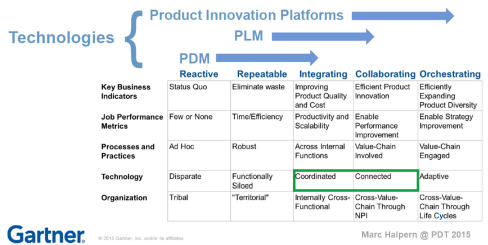

Marc Halpern from Gartner shared in 2015 the slide below that you might have seen many times before. Digital Transformation is really moving from a coordinated to a connected technology, it seems.

The image below gives an impression of an evolution.

I had been following this concept until I was triggered by a 2017 McKinsey publication: “Toward an integrated technology operating model“.

This was the first notion for me that the future should be hybrid, a combination of traditional PLM (system of record) complemented with teams that work digitally connected; McKinsey called them pods that become product-centric (multidisciplinary team focusing on a product) instead of discipline-centric (marketing/engineering/manufacturing/service)

In 2019 I wrote the post: The PLM migration dilemma supporting the “shocking” conclusion “Don’t think about migration when moving to data-driven, connected ways of working. You need both environments.”

One of the main arguments behind this conclusion was that legacy product data and processes were not designed to ensure data accuracy and quality on such a level that it could become connected data. As a result, converting documents into reliable datasets would be a costly, impossible exercise with no real ROI.

The second argument was that the outside world, customers, regulatory bodies and other non-connected stakeholders still need documents as standardized deliverables.

The conclusion led to the image below.

Systems of Record (left) and Systems of Engagement (right)

Splitting PLM?

In 2021 these thoughts became more mature through various publications and players in the PLM domain.

We saw the rise of Systems of Engagement – I discussed OpenBOM, CoLab and potentially Configit in the post: A new PLM paradigm. These systems can be characterized as connected solutions across the enterprise and value chain, focusing on a platform experience for the stakeholders.

These are all environments addressing the needs of a specific group of users as efficiently and as friendly as possible.

A System of Engagement will not fit naturally in a traditional PLM backbone, the System of Record.

Erik Herzog with SAAB Aerospace and Yousef Hooshmand, at that time with Daimler, published papers that year related to “Federated PLM” or “The end of monolithic PLM”. They acknowledged that a company needs to focus on more than a single PLM solution. The presentation from Erik Herzog at the PLM Roadmap/PDT conference was interesting because Erik talked about the Systems of Engagement and the Systems of Record. He proposed using OSLC as the standard to connect these two types of PLM.

It was a clear example of an attempt to combine the two kinds of PLM.

And here comes my question: Do we need to split PLM?

When I look at PLM implementations in the field, almost all are implemented as a System of Record, an information backbone provided by a single-vendor PLM. The various disciplines deliver their content through interfaces to the backbone (Coordinated approach).

However, there is low usability or support for multidisciplinary collaboration; the PLM backbone is not designed for that.

Due to concepts of Model-Based Systems Engineering (MBSE) and Model-Based Definition (MBD), there are now solutions on the market that allow different disciplines to work jointly related to connected datasets that can be manipulated using modeling software (1D, 2D, 3D, 4D,…).

These environments, often a mix of software and hardware tools, are the Systems of Engagement and provide speedy results with high quality in the virtual world. Digital Twins are running on Systems of Engagement, not on Systems of Record.

Systems of Engagement do not need to come from the same vendor, as they serve different purposes. But how do you explain this to your management, who want simplicity? I can imagine the IT organization has a better understanding of this concept as, at the end of 2015, Gartner introduced the concept of the bimodal approach.

Their definition:

Mode 1 is optimized for areas that are more well-understood. It focuses on exploiting what is known. This includes renovating the legacy environment so it is fit for a digital world. Mode 2 is exploratory, potentially experimenting to solve new problems. Mode 2 is optimized for areas of uncertainty. Mode 2 often works on initiatives that begin with a hypothesis that is tested and adapted during a process involving short iterations.

No Conclusion – but a question this time:

At the management level, unfortunately, there is most of the time still the “Single PLM”-mindset due to a lack of understanding of the business. Clearly splitting your PLM seems the way forward. IT could be ready for this, but will the business realize this opportunity?

What are your thoughts?

A month ago, I wrote: It is time for BLM – PLM is not dead, which created an anticipated discussion. It is practically impossible to change a framed acronym. Like CRM and ERP, the term PLM is there to stay.

However, it was also interesting to see that people acknowledge that PLM should have a business scope and deserves a place at the board level.

The importance of PLM at business level is well illustrated by the discussion related to this LinkedIn post from Matthias Ahrens referring to the CIMdata roadmap conference CEO discussion.

My favorite quote:

“Now it’s ‘lifecycle management,’ not just EDM or PDM or whatever they call it. Lifecycle management is no longer just about coming up with new stuff. We’re seeing more excitement and passion in our customers, and I think this is why.”

But it is not that simple

This is a perfect message for PLM vendors to justify their broad portfolio. However, as they do not focus so much on new methodologies and organizational change, their messages remain at the marketing level.

In the field, there is more and more awareness that PLM has a dual role. Just when I planned to write a post on this topic, Adam Keating, CEO and founder of CoLab, wrote the post System of Record meet System of Engagement.

Read the post and the comments on LinkedIn. Adam points to PLM as a System of Engagement, meaning an environment where the actual work is done all the time. The challenge I see for CoLab, like other modern platforms, e.g., OpenBOM, is how it can become an established solution within an organization. Their challenge is they are positioned in the engineering scope.

I believe for these solutions to become established in a broader customer base, we must realize that there is a need for a System of Record AND System(s) of Engagement.

In my discussions related to digital transformation in the PLM domain, I addressed them as separate, incompatible environments.

See the image below:

Now let’s have a closer look at both of them

What is a System of Record?

For me, PLM has always been the System of Record for product information. In the coordinated manner, engineers were working in their own systems. At a certain moment in the process, they needed to publish shareable information, a document (e.g., PDF) or BOM table (e.g., Excel). The PLM system would support New Product Introduction processes and Release and Change processes, and it would be the single point of reference for product data.

The reason I use the bin-image is that companies, most of the time, do not have an advanced information-sharing policy. If the information is in the bin, the experts will find it. Others might recreate the same information elsewhere, due to a lack of awareness.

Most of the time, engineers did not like PLM systems because of the integrations with their tools. Suddenly they were losing a lot of freedom due to check-in / check-out, naming conventions, attributes and more. Current PLM systems are good for a relatively stable product, but what happens when the product has a lot of parallel iterations (hardware & software, for example)? How to deal with Work In Progress?

Last week I visited the startup company PAL-V in the context of the Dutch PDM Platform. As you can see from the image, PAL-V is working on the world’s first Flying Car Production Model. Their challenge is to be certified for flying (here, the focus is on the design) and to be certified for driving (here, the focus is on manufacturing reliability/quality).

During the PDM platform session, they showed their current Windchill implementation, which focused on managing and providing evidence for certification. For this type of company, the System of Record is crucial.

Their (mainly) SolidWorks users are trained to work in a controlled environment. The Aerospace and Automotive industries have started this way, which we can see reflected in current PLM systems.

And to finish with a PLM buzzword: modern systems of record provide a digital thread.

What is a System of Engagement?

The characteristic of a system of engagement is that it supports the user in real-time. This could be an environment for work in progress. Still, more importantly, all future concepts from MBSE, Industry 4.0 and Digital Twins rely on connected and real-time data.

As I previously mentioned, Digital Twins do not run on documents; they run on reliable data.

A system of engagement is an environment where different disciplines work together, using models and datasets. I described such an environment in my series The road to model-based and connected PLM. The System of Engagement environment must be user-friendly enough for these experts to work.

Due to the different targets of a system of engagement, I believe we have to talk about Systems of Engagement as there will be several engagement models on a connected (federated) set of data.

Yousef Hooshmand shared the Daimler paper: “From a Monolithic PLM Landscape to a Federated Domain and Data Mesh” in that context. Highly recommended to read if you are interested in a potential PLM future infrastructure.

Let’s look at two typical Systems of Engagement without going into depth.

The MBSE System of Engagement

In this environment, systems engineering is performed in a connected manner, building connected artifacts that should be available in real-time, allowing engineers to perform analysis and simulations to construct the optimal virtual solution before committing to physical solutions.

It is an iterative environment. Click on the image for an impression.

The MBSE space will also be the place where sustainability needs to start. Environmental impact, the planet as a stakeholder, should be added to the engineering process. Life Cycle Assessment (LCA) defining the process and material choices will be fed by external data sources, for example, managed by ecoinvent, Higg and others to come. It is a new emergent market.

The Digital Twin

In any phase of the product lifecycle, we can consider a digital twin, a virtual data-driven environment to analyze, define and optimize a product or a process. For example, we can have a digital twin for manufacturing, fulfilling the Industry 4.0 dreams.

We can have a digital twin for operation, analyzing, monitoring and optimizing a physical product in the field. These digital twins will only work if they use connected and federated data from multiple sources. Otherwise, the operating costs for such a digital twin will be too high (due to the effort required to obtain accurate data).

In the end, you would like to have these digital twins running in a connected manner. To visualize the high-level concept, I like Boeing’s diamond presented by Don Farr at the PDT conference in 2018 – Image below:

Combined with the Daimler paper “From a Monolithic PLM Landscape to a Federated Domain and Data Mesh” or the latest post from Oleg Shilovitsky, How PLM Can Build Ontologies?, we can start to imagine a Systems of Engagement infrastructure.

You need both

And now the unwanted message for companies – you need both: a system of record and potentially one or more systems of engagement. A System of Record will remain as long as we are not all connected in a blockchain manner. So we will keep producing reports, certificates and baselines to share information with others.

It looks like the Gartner bimodal approach.

An example: If you manage your product requirements in your PLM system as connected objects to your product portfolio, you can still generate a product specification document to share with a supplier, a development partner or a certification company.
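
As a rough illustration of this example, the sketch below models requirements as small connected objects and renders a specification document from them on demand. The `Requirement` class, the `build_specification` function and the sample product names are hypothetical; no real PLM system or API is implied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    req_id: str
    text: str
    product: str  # link to a product in the portfolio (hypothetical)


def build_specification(product, requirements):
    """Render the requirements linked to one product as a plain-text document."""
    lines = [f"Product specification: {product}", ""]
    for req in sorted(requirements, key=lambda r: r.req_id):
        if req.product == product:
            lines.append(f"{req.req_id}  {req.text}")
    return "\n".join(lines)


# Made-up sample data: the connected objects are the source,
# the document is a generated deliverable.
requirements = [
    Requirement("REQ-002", "Operating temperature -20 to 60 C", "Pump-A"),
    Requirement("REQ-001", "Maximum mass 12 kg", "Pump-A"),
    Requirement("REQ-003", "IP67 enclosure", "Pump-B"),
]
spec = build_specification("Pump-A", requirements)
print(spec)
```

The design choice worth noting is the direction of the flow: the data objects stay the source of truth, and the document is regenerated whenever a non-connected stakeholder needs one.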

So do not throw away your current System of Record. Instead, imagine which types of Systems of Engagement your company needs. Most Systems of Engagement might look like a siloed solution; however, remember they are designed for the real-time collaboration of a certain community – designers, engineers, operators, etc.

The real challenge will be connecting them efficiently with your System of Record backbone, preferably using standard interface protocols and standards.

The Hybrid Approach

For those of you following my digital transformation story related to PLM, this is the point where the McKinsey report from 2017 becomes relevant again.

Conclusion

The concepts are evolving and maturing for a digital enterprise using a System of Record and one or more Systems of Engagement. Early adopters are now needed to demonstrate these concepts to agree on standards and solution-specific needs. It is time to experiment (fast). Where are you in this process of learning?
