Together with Håkan Kårdén, I had the pleasure of bringing together 32 passionate professionals on November 4th to explore the future of PLM (Product Lifecycle Management) and ALM (Asset Lifecycle Management), inspired by insights from four leading thinkers in the field.

The meeting had two primary purposes.

- Firstly, we aimed to create an environment where the speakers’ concepts could be presented to and discussed with a broader audience of academics, industrial professionals, and software developers. The group’s feedback could serve as a benchmark for the speakers.

- The second goal was to bring people together and create a networking opportunity, either during the PLM Roadmap/PDT Europe conference the following day or through meetings arranged after this workshop.

Personally, it was a great pleasure to meet in person some of the people whose LinkedIn articles I had read and admired.

The meeting was sponsored by the Arrowhead fPVN project, a project I discussed in a previous blog post related to the PLM Roadmap/PDT Europe 2024 conference last year. Together with the speakers, we have begun working on a more in-depth paper that describes the similarities between the approaches and the relevant lessons learned. This activity will take some time.

Therefore, this post only includes the abstracts from the speakers and links to their presentations. It concludes with a few observations from some attendees.

Reasoning Machines: Semantic Integration in Cyber-Physical Environments

Torbjörn Holm / Jan van Deventer: The presentation discussed the transition from requirements to handover and operations, emphasizing the role of knowledge graphs in unifying standards and technologies for a flexible product value network.

The presentation outlines the phases of the product and production lifecycle, including requirements, specification, design, build-up, handover, and operations. It raises a question about unifying these phases and their associated technologies and standards, emphasizing that the longest phase, which involves operation, maintenance, failure, and evolution until retirement, should be the primary focus.

It also discusses seamless integration, outlining a partial list of standards and technologies categorized into three sections: “Modelling & Representation Standards,” “Communication & Integration Protocols,” and “Architectural & Security Standards.” Each section contains a table listing various technology standards, their purposes, and references. Additionally, the presentation includes a “Conceptual Layer Mapping” table that details the different layers (Knowledge, Service, Communication, Security, and Data), along with examples, functions, and references.

The presentation outlines an approach for utilizing semantic technologies to ensure interoperability across heterogeneous datasets throughout a product’s lifecycle. Key strategies include using OWL 2 DL for semantic consistency, aligning domain-specific knowledge graphs with ISO 23726-3, applying W3C Alignment techniques, and leveraging Arrowhead’s microservice-based architecture and Framework Ontology for scalable and interoperable system integration.
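To make the idea of aligning domain-specific knowledge graphs a little more tangible, here is a minimal sketch in plain Python. It is my own illustration, not the Arrowhead implementation: the triples, the identifiers (such as `design:PumpItem` and `ops:Pump`) and the alignment pairs are all invented, and a real setup would use RDF/OWL tooling and a reasoner rather than sets of tuples. The sketch only shows the basic effect of an alignment: once two identifiers are declared equivalent, facts from both graphs can be queried as one.

```python
# Toy triples (subject, predicate, object). All identifiers are invented for
# illustration; they are not terms from DEXPI, STEP, CFIHOS, or ISO 23726-3.
design_kg = {
    ("plant:P-101", "rdf:type", "design:PumpItem"),
    ("plant:P-101", "design:ratedFlow", "120"),
}
operations_kg = {
    ("asset:0042", "rdf:type", "ops:Pump"),
    ("asset:0042", "ops:lastInspection", "2025-06-30"),
}

# Alignment: pairs of identifiers that are declared to denote the same thing.
alignment = [
    ("design:PumpItem", "ops:Pump"),
    ("plant:P-101", "asset:0042"),
]


def build_canonical_map(pairs):
    """Map every aligned identifier to one representative (simple union-find)."""
    parent = {}

    def find(term):
        parent.setdefault(term, term)
        while parent[term] != term:
            parent[term] = parent[parent[term]]  # path compression
            term = parent[term]
        return term

    for left, right in pairs:
        root_left, root_right = find(left), find(right)
        if root_left != root_right:
            # Attach the lexicographically larger root under the smaller one
            # so the chosen representative is deterministic.
            parent[max(root_left, root_right)] = min(root_left, root_right)
    return {term: find(term) for term in parent}


def merge(graphs, canonical):
    """Rewrite subjects and objects to their representative and union the graphs."""
    merged = set()
    for graph in graphs:
        for s, p, o in graph:
            merged.add((canonical.get(s, s), p, canonical.get(o, o)))
    return merged


def describe(graph, subject):
    """Return all (predicate, object) pairs known for a subject, sorted."""
    return sorted((p, o) for s, p, o in graph if s == subject)


canonical = build_canonical_map(alignment)
merged_kg = merge([design_kg, operations_kg], canonical)
facts = describe(merged_kg, canonical["plant:P-101"])

for predicate, obj in facts:
    print(predicate, obj)
```

After the merge, the design-time rated flow and the operational inspection date are attached to the same item, which is the kind of cross-lifecycle question an aligned set of knowledge graphs is meant to answer. Note that this treats every alignment as strict equivalence; real ontology alignments also express weaker relations, such as one class being a specialization of another.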

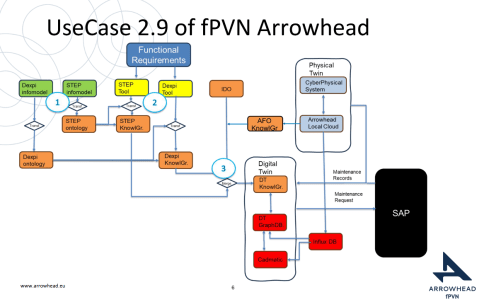

The presentation also shows the software architecture used, which comprises three main sections, “Functional Requirements,” “Physical Twin,” and “Digital Twin,” each containing various interconnected components. Today, the architecture includes several aligned Knowledge Graphs (KGs): a DEXPI KG, a STEP (ISO 10303) KG, and an Arrowhead Framework KG, with the CFIHOS Semantics Ontology currently in progress.

👉The presentation: W3C Major standard interoperability_Paris

Beyond Handover: Building Lifecycle-Ready Semantic Interoperability

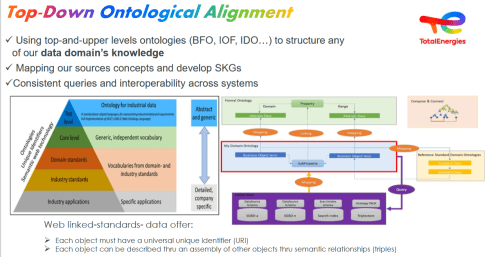

Jean-Charles Leclerc argued that industrial data standards must evolve beyond the narrow scope of handover and static interoperability. To truly support digital transformation, they must embrace lifecycle semantics or, at the very least, be designed for future extensibility.

This shift enables technical objects and models to be reused, orchestrated, and enriched across internal and external processes, unlocking value for all stakeholders and managing the temporal evolution of properties throughout the lifecycle. A key enabler is the “pattern of change”, a dynamic framework that connects data, knowledge, and processes over time. It allows semantic models to reflect how things evolve, not just how they are delivered.

By grounding semantic knowledge graphs (SKGs) in such rigorous logic and aligning them with W3C standards, we ensure they are both robust and adaptable. This approach supports sustainable knowledge management across domains and disciplines, bridging engineering, operations, and applications.

Ultimately, it’s not just about technology; it’s about governance.

Being “Sustainab’OWL by Design” (OWL: Web Ontology Language) means building semantic ecosystems that are reliable, scalable, and lifecycle-ready by nature.

Additional Insight: From Static Models to Living Knowledge

To transition from static information to living knowledge, organizations must reassess how they model and manage technical data. Lifecycle-ready interoperability means enabling continuous alignment between evolving assets, processes, and systems. This requires not only semantic precision but also a governance framework that supports change, traceability, and reuse, turning standards into operational levers rather than compliance checkboxes.

👉The presentation: Beyond Handover – Building Lifecycle Ready Semantic Interoperability

The first two presentations had a lot in common as they both come from the Asset Lifecycle Management domain and focus on an infrastructure to support assets over a long lifetime. This is particularly visible in the usage and references to standards such as DEXPI, STEP, and CFIHOS, which are typical for this domain.

How can we achieve our vision of PLM – the Single Source of Truth?

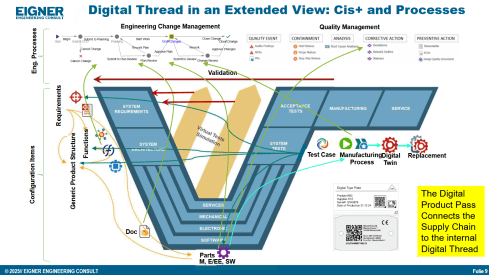

Martin Eigner stated that Product Lifecycle Management (PLM) has long promised to serve as the Single Source of Truth for organizations striving to manage product data, processes, and knowledge across their entire value chain. Yet, realizing this vision remains a complex challenge.

Achieving a unified PLM environment requires more than just implementing advanced software systems—it demands cultural alignment, organizational commitment, and seamless integration of diverse technologies. Central to this vision is data consistency: ensuring that stakeholders across engineering, manufacturing, supply chain, and service have access to accurate, up-to-date, and contextualized information along the Product Lifecycle. This involves breaking down silos, harmonizing data models, and establishing governance frameworks that enforce standards without limiting flexibility.

Emerging technologies and methodologies, such as Extended Digital Thread, Digital Twins, cloud-based platforms, and Artificial Intelligence, offer new opportunities to enhance collaboration and integrated data management.

However, their success depends on strong change management and a shared understanding of PLM as a strategic enabler rather than a purely technical solution. By fostering cross-functional collaboration, investing in interoperability, and adopting scalable architectures, organizations can move closer to a trustworthy single source of truth. Ultimately, realizing the vision of PLM requires striking a balance between innovation and discipline—ensuring trust in data while empowering agility in product development and lifecycle management.

👉The presentation: Martin – Workshop PLM Future 04_10_25

The Future is Data-Centric, Semantic, and Federated … Is your organization ready?

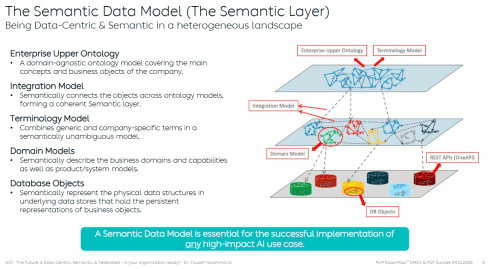

Yousef Hooshmand, who is currently working at NIO as PLM & R&D Toolchain Lead Architect, discussed the must-have relations between a data-centric approach, semantic models and a federated environment as the image below illustrates:

Why This Matters for the Future

- Engineering is under unprecedented pressure: products are becoming increasingly complex, customers are demanding personalization, and development cycles must be accelerated to meet these demands. Traditional, siloed methods can no longer keep up.

- The way forward is a data-centric, semantic, and federated approach that transforms overwhelming complexity into actionable insights, reduces weeks of impact analysis to minutes, and connects fragmented silos to create a resilient ecosystem.

- This is not just an evolution, but a fundamental shift that will define the future of systems engineering. Is your organization ready to embrace it?
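The claim about reducing impact analysis from weeks to minutes becomes easier to picture when the relations between items are explicit and connected across systems. Below is a minimal sketch of that idea, based on my own assumptions and not taken from Yousef’s presentation: the three dictionaries stand in for links held in separate (federated) systems, and all item identifiers such as `REQ-12` are hypothetical. Once the links are traversable as one graph, finding everything affected by a change is a simple breadth-first search.

```python
from collections import deque

# Hypothetical links, each set owned by a different system in the federation.
requirements_links = {"REQ-12": ["FUNC-3"]}
engineering_links = {"FUNC-3": ["PART-7", "SW-2"], "PART-7": ["TEST-9"]}
quality_links = {"SW-2": ["TEST-9"]}


def impacted_items(start, sources):
    """Breadth-first traversal over links coming from several sources.

    Returns the downstream items in the order they are discovered,
    each item only once, excluding the starting item itself.
    """
    seen = {start}
    queue = deque([start])
    discovered = []
    while queue:
        item = queue.popleft()
        for source in sources:
            for target in source.get(item, []):
                if target not in seen:
                    seen.add(target)
                    queue.append(target)
                    discovered.append(target)
    return discovered


impact = impacted_items(
    "REQ-12", [requirements_links, engineering_links, quality_links]
)
print(impact)
```

The traversal itself is trivial. The hard part in practice, and the point of the data-centric and semantic approach, is getting the links out of documents and silos into a form where such a query is possible at all.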

👉The presentation: The Future is Data-Centric, Semantic, and Federated.

Some first impressions

👉 Bhanu Prakash Ila from Tata Consultancy Services (you can find his original comment in this LinkedIn post)

Key points:

- Traditional PLM architectures struggle with the fundamental challenge of managing increasingly complex relationships between product data, process information, and enterprise systems.

- Ontology-Based Semantic Models – The Way Forward for PLM Digital Thread Integration: Ontology-based semantic models address this by providing explicit, machine-interpretable representations of domain knowledge that capture both concepts and their relationships. These lay the foundations for AI-related capabilities.

It’s clear that as AI, semantic technologies, and data intelligence mature, the way we think and talk about PLM must evolve too – from system-centric to value-driven, from managing data to enabling knowledge and decisions.

A quick & temporary conclusion

Typically, I conclude my blog posts with a summary. However, this time the conclusion is not there yet. There is work to be done to align concepts and understand to which industries they are most applicable. Whether to use standards or avoid them because they move too slowly for the business remains a point of ongoing discussion. The takeaway for everyone in the workshop was that data without context has no value. Ontologies, semantic models and domain-specific methodologies are mandatory for modern data-driven enterprises. You cannot avoid this learning path by just installing a graph database.
