For those of you who have followed my blog over the years: every time after the PLM Roadmap / PDT Europe conference, there are one or two blog posts, the first of which starts with “The weekend after ….”

This time, November has been a hectic month for me, starting with the engaging workshop “Shape the future of PLM – together”; you can read about it in my blog post or the latest post from Arrowhead fPVN, the sponsor of the workshop.

Last week, I celebrated with the core team from the PLM Green Global Alliance our 5th anniversary, during which we discussed sustainability in action. The term sustainability is currently under the radar, but if you want to learn what is happening, read this post with a link to the webinar recording.

Last week, I was also active at the PTC/User Benelux conference, where I had many interesting discussions about PTC’s strategy and portfolio. A big and well-organized event in the town where I grew up in the world of teaching and data management.

And now it is time for the PLM Roadmap / PDT conference review.

The conference

The conference is my favorite technical conference 😉 for learning what is happening in the field. Over the years, we have seen reports from the Aerospace & Defense PLM Action Groups, which systematically work on various themes related to a digital enterprise. The usage of standards, MBSE, Supplier Collaboration, Digital Thread & Digital Twin are all topics discussed.

This time, the conference was sold out with 150+ attendees, just fitting in the conference space, and the two-day program started with a challenging day 1 of advanced topics, and on day 2 we saw more company experiences.

Combined with the traditional dinner in the middle, it was again a great networking event to charge the brain. We still need the brain besides AI. This post covers some of the highlights of day 1.

PLM’s Integral Role in Digital Transformation

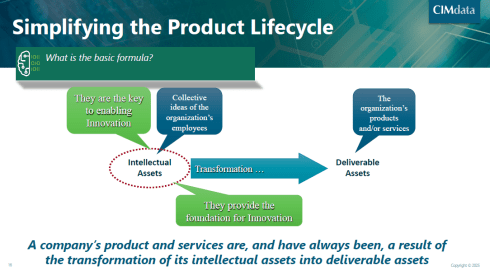

As usual, Peter Bilello, CIMdata’s President & CEO, kicked off the conference, and his message has not changed over the years. PLM should be understood as a strategic, enterprise-wide approach that manages intellectual assets and connects the entire product lifecycle.

I like the image below explaining the WHY behind product lifecycle management.

It enables end-to-end digitalization, supports digital threads and twins, and provides the backbone for data governance, analytics, AI, and skills transformation.

Peter walked us briefly through CIMdata’s Critical Dozen (a YouTube recording is available here), all of which are relevant to the scope of digital transformation. Without strong PLM foundations and governance, digital transformation efforts will fail.

The Digital Thread as the Foundation of the Omniverse

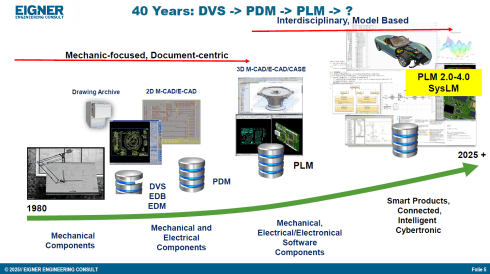

Prof. Dr.-Ing. Martin Eigner, well known for his lifetime passion and vision in product lifecycle management (PDM and PLM tools & methodology), shared insights from his 40-year journey, highlighting the growing complexity and ever-increasing fragmentation of customer solution landscapes.

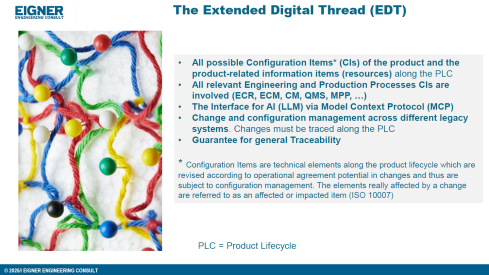

In his current ecosystem, ERP (read SAP) is playing a significant role as an execution platform, complemented by PDM or ECTR capabilities. Few of his customers go for the broad PLM systems, and therefore, he stresses the importance of the so-called Extended Digital Thread (EDT).

Prof. Eigner describes the EDT more precisely as an overlaying infrastructure implemented by a graph database that serves as a performant knowledge graph of the enterprise.

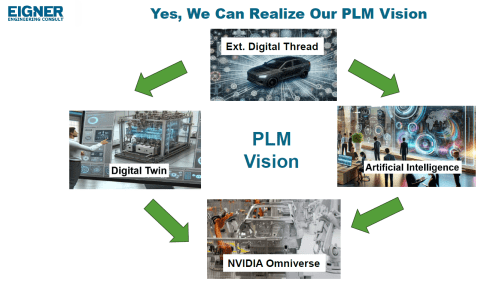

The EDT serves as the foundation for AI-driven applications, supporting impact analysis, change management, and natural-language interaction with product data. The presentation also provides a detailed view of Digital Twin concepts, ranging from component to system and process twins, and demonstrates how twins enhance predictive maintenance, sustainability, and process optimization.
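To make impact analysis on a knowledge graph a bit more tangible, here is a minimal Python sketch. It is not taken from the presentation: a plain dictionary stands in for the graph database, and the artifact names and the `impacted_by` helper are hypothetical, chosen only for illustration.

```python
from collections import deque

# Hypothetical traceability links: each key points to the artifacts that
# depend on it. A plain dict stands in for the graph database; all names
# are invented for illustration.
LINKS = {
    "REQ-001": ["FUNC-010"],
    "FUNC-010": ["PART-100", "SW-200"],
    "PART-100": ["BOM-A", "PROCESS-PLAN-7"],
    "SW-200": ["BOM-A"],
    "BOM-A": [],
    "PROCESS-PLAN-7": [],
}


def impacted_by(changed, links):
    """Return all artifacts reachable from `changed`, in breadth-first order."""
    seen = {changed}
    order = []
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for dependent in links.get(node, []):
            if dependent not in seen:
                seen.add(dependent)
                order.append(dependent)
                queue.append(dependent)
    return order


if __name__ == "__main__":
    # Which artifacts may need review when requirement REQ-001 changes?
    print(impacted_by("REQ-001", LINKS))
```

The point of the sketch is only that once artifacts and their relations live in one graph, "what is affected by this change?" becomes a traversal question instead of a search across separate systems.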

This is combined with NVIDIA Omniverse as the next step toward immersive, real-time collaboration and simulation, enabling virtual factories and physics-accurate visualization. The outlook emphasizes that combining EDT, Digital Twin, AI, and Omniverse moves the industry closer to the original PLM vision: a unified, consistent Single Source of Truth 😮 that boosts innovation, efficiency, and ROI.

For me, hearing and reading the term Single Source of Truth still creates discomfort with reality and humanity, so we still have something to discuss.

Semantic Digital Thread for Enhanced Systems Engineering in a Federated PLM Landscape

Dr. Yousef Hooshmand’s presentation was a great continuation of the Extended Digital Thread theme discussed by Dr. Martin Eigner. Where the core of Martin’s EDT is based on traceability between artifacts and processes throughout the lifecycle, Yousef introduced a (for me) totally new concept: starting with managing and structuring the data to manage the knowledge, rather than starting from the models and tools to understand the knowledge.

It is a fundamentally different approach to addressing the same problem of complexity. During our pre-conference workshop “Shape the future of PLM – together,” I already got a bit familiar with this approach, and Yousef’s recently released paper provides all the details.

All the relevant information can be found in his recent LinkedIn post here.

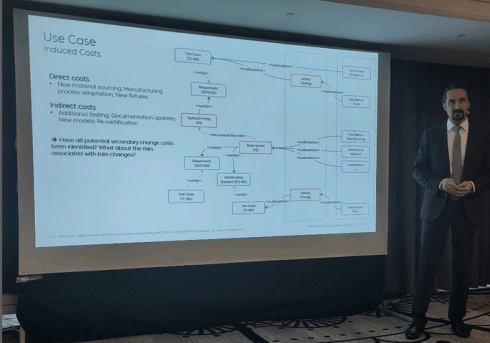

In his presentation during the conference, Yousef illustrated the value and applicability of the Semantic Digital Thread approach by presenting it in an automotive use case: Impact Analysis and Cost Estimation (image above).

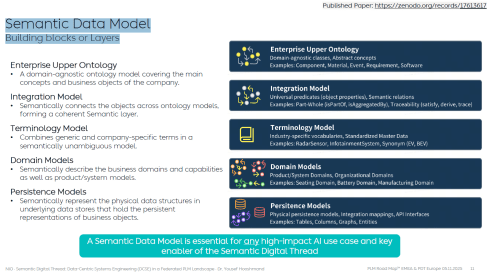

To understand the Semantic Digital Thread, it is essential to understand the Semantic Data Model and its building blocks or layers, as illustrated in the image below:

In addition, such an infrastructure is ideal for AI applications and avoids vendor- or tool lock-in, providing a significant long-term advantage.

I am sure it will take time to digest the content if you are just entering the domain of a data-driven enterprise (the connected approach) instead of a document-driven enterprise (the coordinated approach).

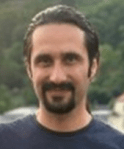

However, as many of the other presentations on day 1 also stated: “data without context is worthless – then they become just bits and bytes.” For advanced and future scenarios, you cannot avoid working with ontologies, semantic models and graph databases.
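A small Python sketch can illustrate what "context" means here. It is an assumption-laden toy, not the semantic data model from Yousef's paper: the triples, the vocabulary (`is_a`, `subclass_of`, `has_unit`) and the `describe` helper are all hypothetical. The bare value "16" says nothing on its own; linked to a unit and a class hierarchy, it becomes usable knowledge.

```python
# Hypothetical subject-predicate-object triples; the vocabulary is invented
# for illustration and does not follow any particular standard ontology.
TRIPLES = [
    ("Pump-P101", "is_a", "CentrifugalPump"),
    ("CentrifugalPump", "subclass_of", "Pump"),
    ("Pump-P101", "has_max_pressure", "16"),
    ("has_max_pressure", "has_unit", "bar"),
]


def objects(subject, predicate, triples):
    """Return all objects stored for a given subject and predicate."""
    return [o for s, p, o in triples if s == subject and p == predicate]


def describe(subject, predicate, triples):
    """Return a value together with its unit and the subject's class chain."""
    value = objects(subject, predicate, triples)[0]
    unit = objects(predicate, "has_unit", triples)
    classes = []
    current = objects(subject, "is_a", triples)
    while current:
        classes.append(current[0])
        current = objects(current[0], "subclass_of", triples)
    return {"value": value, "unit": unit[0] if unit else None, "classes": classes}


if __name__ == "__main__":
    print(describe("Pump-P101", "has_max_pressure", TRIPLES))
```

In a real landscape, the triples would live in a graph database and the vocabulary would come from a shared ontology, which is exactly where the effort lies.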

Where is your company on the path to becoming more data-driven?

Note: I just saw this post and the image above, which emphasizes the importance of the relationship between ontologies and the application of AI agents.

Evaluation of SysML v2 for use in Collaborative MBSE between OEMs and Suppliers

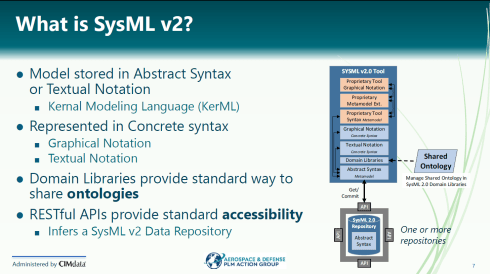

It was interesting to hear Chris Watkins’ speech, which presented the findings from the AD PLM Action Group MBSE Collaboration Working Group on digital collaboration based on SysML v2.

The working group addresses the problem that there are currently no common methods and standards across the industry for exchanging digital model-based requirements and architecture deliverables for the design, procurement, and acceptance of aerospace systems equipment.

The action group explored the value of SysML v2 for data-driven collaboration between OEMs and suppliers, particularly in the early concept phases.

Chris started with a brief explanation of what SysML v2 is – image below:

As the image illustrates, SysML v2-ready tools allow people to work in their proprietary interfaces while sharing results in common, defined structures and ontologies.

When analyzing various collaboration scenarios, one of the main challenges remained managing changes, the required ontologies, and working in a shared IT environment.

👉You can read the full report here: AD PAG reports: Model-Based Systems Engineering.

An interesting point of discussion here is that, in the report, participants note that, despite calling out significant gaps and concerns, a substantial majority of the industry indicated that their MBSE solution provider is a good partner. At the same time, only a small minority expressed a negative view.

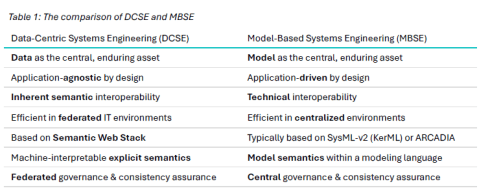

Would Data-Centric Systems Engineering change the discussion? See table 1 below from Yousef’s paper:

An illustration that there was enough food for discussion during the conference.

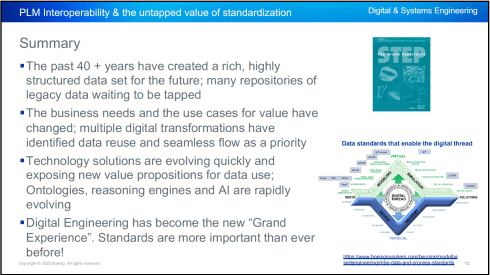

PLM Interoperability and the Untapped Value of 40 Years in Standardization

In the context of collaboration, two sessions fit together perfectly.

First, Kenny Swope from Boeing. Kenny is a longtime Boeing engineering leader and global industrial-data standards expert who oversees enterprise interoperability efforts, chairs ISO/TC 184/SC 4, and mentors youth in technology through 4-H and FIRST programs.

Kenny shared that over the past 40+ years, the understanding and value of standardization have become increasingly apparent, especially as organizations move toward a digital enterprise. In a digital enterprise, these standards are needed for efficient interoperability between various stakeholders. And the next session was an example of this.

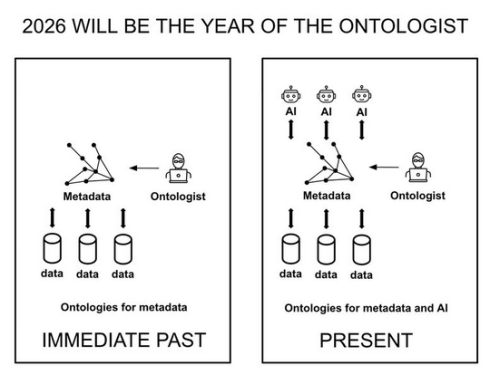

Unlocking Enterprise Knowledge

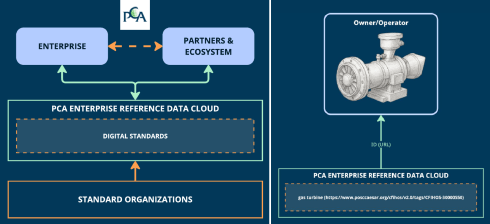

Fredrik Anthonisen, the CTO of the POSC Caesar Association (PCA), started his story about the potential value of efficient standard use.

According to a Siemens report, “The true costs of downtime,” $1.4 trillion is lost to unplanned downtime.

The root cause is that, most of the time, the information needed to support the MRO activity is inaccessible or incomplete.

Making data available using standards can provide part of the answer, but static documents and slow consensus processes can’t keep up with the pace of change.

Therefore, PCA established the PCA enterprise reference data cloud, where all stakeholders in enterprise collaboration can relate their data to digitally exposed standards, as the left side of the image shows.

Fredrik shared a use case (on the right side of the image) as an example. Also, he mentioned that the process for defining and making the digital reference data available to participants is ongoing. The reference data needs to become the trusted resource for the participants to monetize the benefits.

Summary

Day 1 had many more interesting and advanced concepts related to standards and the potential usage of AI.

Jean-Charles Leclerc, Head of Innovation & Standards at TotalEnergies, in his session, “Bringing Meaning Back To Data,” elaborated on the need to provide data in the context of the domain for which it is intended, rather than “indexed” LLM data.

Very much aligned with Yousef’s statement that there is a need to apply semantic technologies, and especially ontologies, to turn the data into knowledge.

More details can also be found in the “Shape the future of PLM – together” post, where Jean-Charles was one of the leading voices.

The panel discussion at the end of day 1 was free of people jumping on the hype. Yes, benefits are envisioned across the product lifecycle management domain, but to be valuable, the foundation needs to be more structured than it has been in the past.

“Reliable AI comes from a foundation that supports knowledge in its domain context.”

Conclusion

For the casual user, day 1 was tough – digital transformation in the product lifecycle domain requires skills that might not yet exist in smaller organizations. Understanding the need for ontologies (generic/domain-specific) and semantic models is essential to benefit from what AI can bring – a challenging and enjoyable journey to follow!
