Due to other activities, I could not immediately share the second part of the review related to the PLM Roadmap / PDT Europe conference, held on 23-24 October in Gothenburg. You can read my first post, mainly about Day 1, here: The weekend after PLM Roadmap/PDT Europe 2024.

There were several interesting sessions which I will not mention here as I want to focus on forward-looking topics with a mix of (federated) data-driven PLM environments and the applicability of AI, staying around 1500 words.

R-evolutionizing PLM and ERP and Heliple

Cristina Paniagua from the Luleå University of Technology closed the first day of the conference, giving us food for thought to discuss over dinner. Her session, describing the Arrowhead fPVN project, fitted nicely with the concepts of the Federated PLM Heliple project presented by Erik Herzog on Day 2.

They are both research projects related to the future state of a digital enterprise. Therefore, it makes sense to treat them together.

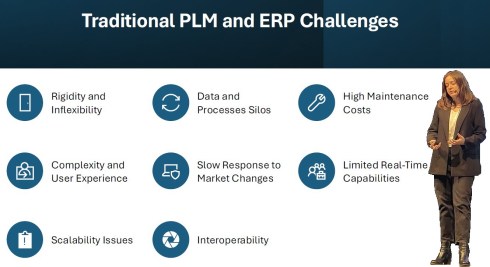

Cristina’s session started with sharing the challenges of traditional PLM and ERP systems:

These statements align with the drivers of the Heliple project. The PLM and ERP systems—Systems of Record—provide baselines and traceability. However, Systems of Record have not historically been designed to support real-time collaboration or to create an attractive user experience.

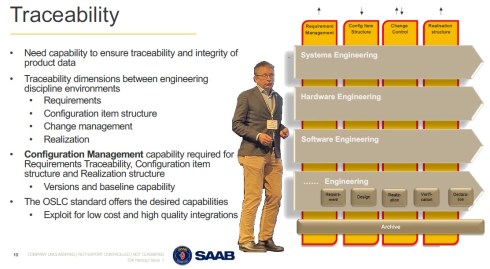

The Heliple project focuses on connecting various modules—the horizontal bars—for systems engineering, hardware engineering, etc., as real-time collaboration environments that can be highly customized and replaceable if needed. The Heliple project explored the usage of OSLC to connect these modules, the Systems of Engagement, with the Systems of Record.

By using Lynxwork as a low-code wrapper to develop the OSLC connections and map them to the needed business scenarios, the team concluded that this approach is affordable for businesses.

Now, the Heliple team aims to expand its research with industry-scale validation through the Demoiple project (validating that the Heliple-2 technology can be implemented and accredited in Saab Aeronautics’ operational IT), combined with the Nextiple project, where they will investigate the role of heterogeneous information models/ontologies for heterogeneous analysis.

If you are interested in participating in Nextiple, don’t hesitate to contact Erik Herzog.

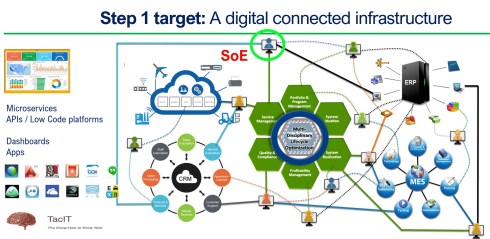

Cristina’s Arrowhead flexible Production Value Network (fPVN) project aims to provide autonomous and evolvable information interoperability through machine-interpretable content for fPVN stakeholders. In less academic words: building a digital, data-driven infrastructure.

The resulting technology is projected to impact manufacturing productivity and flexibility substantially.

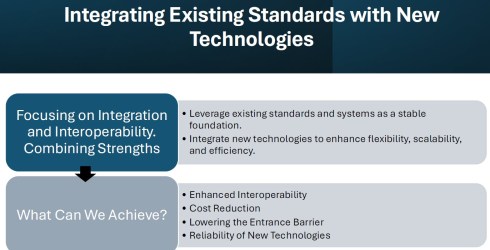

The exciting starting point of the Arrowhead project is that it wants to use existing standards and systems as a foundation and, on top of that, create a business- and user-oriented layer. This layer uses modern technologies such as micro-services to support real-time processing, and semantic technologies, ontologies, system modeling, and AI for data translations and learning. It is a much broader and more ambitious scope than the Heliple project.

I believe that in our PLM domain, this resonates with current discussions you will find on LinkedIn, too. @Oleg Shilovitsky, @Dr. Yousef Hooshmand, @Prof. Dr. Jörg W. Fischer and Martin Eigner are a few of those steering these discussions. I consider it a perfect match for one of the images I shared about the future of the digital enterprise.

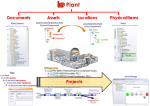

Potentially, there are five platforms with their own internal ways of working, a mix of systems of record and systems of engagement, supported by an overlay of several Systems of Engagement environments.

I previously described these dedicated environments, e.g., OpenBOM, Colab, Partful, and Authentise. These solutions could also be dedicated apps supporting a specific ecosystem role.

See below my artist’s impression of how a Service Engineer would work in their app connected to CRM, PLM and ERP platform datasets:

The exciting part of the Arrowhead fPVN project is that it wants to explore the interactions between systems and user roles based on existing mature standards instead of leaving the connections to software developers.

Cristina mentioned some of these standards below:

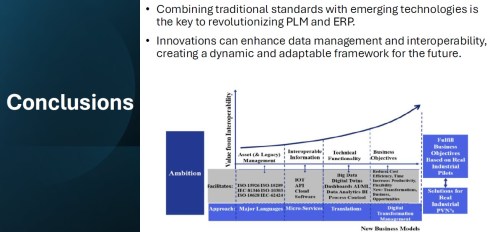

I greatly support this approach as, historically, much knowledge and effort has been put into developing standards to support interoperability. Maybe not in real-time, but the embedded knowledge in these standards will speed up the broader usage. Therefore, I concur with the concluding slide:

A final comment: Industrial users must push for these standards if they do not want a future vendor lock-in. Vendors will do what the majority of their customers ask for but will also keep their customers’ data in proprietary formats to prevent them from switching to another system.

Accelerated Product Development Enabled by Digitalization

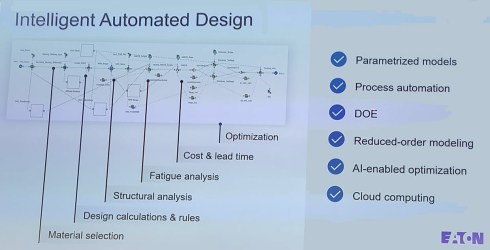

The keynote session on Day 2, delivered by Uyiosa Abusomwan, Ph.D., Senior Global Technology Manager – Digital Engineering at Eaton, was a visionary story about the future of engineering.

With its broad range of products, Eaton is exploring new, innovative ways to accelerate product design by modeling the design process and applying AI to narrow design decisions and customer-specific engineering work. The picture below shows the areas of attention needed to model the design processes. Uyiosa mentioned the significant beneficial results that have already been reached.

Together with generative design, Eaton works towards modern digital engineering processes built on models and knowledge. His session was complementary to the Heliple and Arrowhead story. To reach such a contemporary design engineering environment, it must be data-driven and built upon open PLM and software components to fully use AI and automation.

“Next Gen” Life Cycle Management in Next-Gen Nuclear Power and LTO Legacy Plants

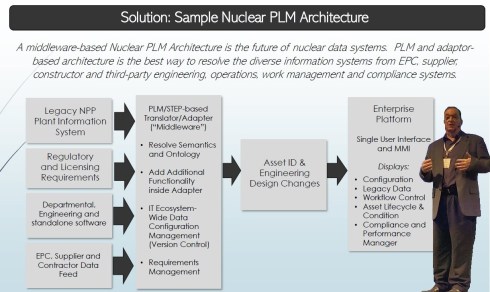

Kent Freeland’s presentation was a trip down memory lane when he discussed the issues with Long Term Operations of legacy nuclear plants.

I spent several years in Ringhals (Sweden) discussing and piloting the setup of a PLM front-end next to the MRO (Maintenance Repair Overhaul) system. Nuclear plants developed in the sixties turned out to require a longer than anticipated lifecycle. That requires access to the right design and operational data, and maintenance and upgrade changes in the plant need to be planned and controlled. The design data is often lacking; it resides at the EPC or has been stored in a document management system with limited retrieval capabilities.

See also my 2019 post: How PLM, ALM, and BIM converge thanks to the digital twin.

Kent described the same challenges I experienced (we must have worked in parallel universes) and explained that, for the future, we need a digitally connected infrastructure for both plant design and maintenance artifacts, as envisioned below:

The solution reminded me of a lecture I saw at the PI PLMx 2019 conference, where the Swedish ESS facility demonstrated its Asset Lifecycle Data Management solution based on the 3DEXPERIENCE platform.

You can still find the presentation here: Henrik Lindblad Ola Nanzell ESS – Enabling Predictive Maintenance Through PLM & IIOT.

Kent also focused on the relevant standards to support a “Single Source of Truth” concept. After all the federated PLM discussions, I would rather go for:

“The nearest source of truth and a single source of Change”

assuming this makes more sense in a digitally connected enterprise.

Why do you need to be SMART when contracting for information?

Rob Bodington’s presentation was complementary to Kent Freeland’s presentation. Rob, a technical fellow at Eurostep, described the challenge of information acquisition when working with large assets that require access to the correct data once the asset is in operation. The large asset could be a nuclear plant or an aircraft carrier.

In the ideal world, the asset owner wants to have a digital twin of the asset fed by different data sources through a digital thread. Of course, this environment will only be reliable when accurate data is used and presented.

Getting accurate data starts with the information acquisition process, and Rob explained that this needed to be done SMARTly – see the image below:

Rob zoomed in on the SMART keywords and the challenge the various standards provide to make the information SMARTly accessible, like the ISO 10303 / PLCS standard, the CFIHOS exchange standard and more. And then there is the ISO 8000 standard about data quality.

Rob believes that AI might be the silver bullet as it might help understand the data quality, ontology and context of the data and even improve contracting, generating data clauses for contracting….

And there was a lot of AI ….

There was a dazzling presentation from Gary Langridge, engineering manager at Ocado, explaining their Ocado Smart Platform (OSP), which leverages AI, robotics, and automation to tackle the challenges of online grocery and allow their clients to excel in performance and customer responsiveness.

There was a significant AI component in his presentation, and if you are tired of reading, watch this video

But there was more AI: of the 25 sessions in this conference, 19 mentioned the potential or usage of AI somewhere in their speech. This is more than 75 %!

There was a dedicated closing panel discussion on the real business value of Artificial Intelligence in the PLM domain, moderated by Peter Bilello, with selected speakers from the conference on the panel: Sandeep Natu (CIMdata), Lars Fossum (SAP), Diana Goenage (Dassault Systemes) and Uyiosa Abusomwan (Eaton).

The discussion was realistic and helpful for the audience. It is clear that to reap the benefits, companies must explore the technology and use it to create valuable business scenarios. One could argue that many AI tools are already available, but the challenge remains that they have to run on reliable data. The data foundation is crucial for a successful outcome.

An interesting point in the discussion was the statement from Diana Goenage, who repeatedly warned that using LLM-based solutions has an environmental impact due to the amount of energy they consume.

We have a similar debate in the Netherlands – do we want the wind energy consumed by data centers (the big tech companies with a minimum workforce in the Netherlands), or should the Dutch citizens benefit from renewable energy resources?

Conclusion

There were even more interesting presentations during these two days, and you might have noticed that I did not advertise my content. This is because I have already reached 1600 words, but I also want to spend more time on the content separately.

It was about PLM and Sustainability, a topic often covered in this conference. Unfortunately, only 25 % of the presentations touched on sustainability, and the AI hype is overshadowing the topic.

Hopefully, it is not a sign of the times?
