Last week, my memory was triggered by this LinkedIn post and discussion started by Oleg Shilovitsky: Rethinking the Data vs. Process Debate in the Age of Digital Transformation and AI.

me, 1989

In the past twenty years, the debate in the PLM community has changed a lot. PLM started as a central file repository, combined with processes to ensure the correct status and quality of the information.

Then, digital transformation in the PLM domain became achievable and there was a focus shift towards (meta)data. Now, we are entering the era of artificial intelligence, reshaping how we look at data.

In this technology evolution, there are lessons learned that are still valid for 2025, and I want to share some of my experiences in this post.

In addition, it was a pleasure to read Martin Eigner’s great reflection on the past 40 years of PDM/PLM. Martin shared his experiences and insights, not directly focusing on the data and processes debate, but very complementary and helpful for understanding the future.

It started with processes (for me 2003-2014)

In the early days when I worked with SmarTeam, one of my main missions was to develop templates on top of the flexible toolkit SmarTeam.

For those who do not know SmarTeam, it was one of the first Windows PDM/PLM systems, and thanks to its open API (COM-based), companies could easily customize and adapt it. It came with standard data elements and behaviors like Projects, Documents (CAD-specific and Generic), Items and later Products.

On top of this foundation, almost every customer implemented their business logic (current practices).

And that is where the problems started …

The implementations too often became highly customized environments, not necessarily well thought through, as every customer worked differently based on their (paper) history. Thanks to what I learned in the field while supporting stalled implementations, I was also assigned to develop templates (e.g. SmarTeam Design Express) and a standard methodology (the FDA toolkit), as the mid-market customers requested. The focus was on standard processes.

You can read my 2009 observations here: Can chaos become order through PLM?

The need for standardization?

When developing templates (the right data model and processes), it was also essential to provide template processes for releasing a product and controlling the status and product changes – from Engineering Change Request to Engineering Change Order. Many companies had their processes described in their ISO 900x manual, but were they followed correctly?

In 2010, I wrote ECR/ECO for Dummies, and it has been my second most-read post over the years. Only the 2019 post The importance of EBOM and MBOM in PLM (reprise) had more readers. These statistics show that many people are, and were, seeking education on general PLM processes and data model principles.

It was also the time when the PLM communities discussed out-of-the-box versus flexible processes, as Oleg referred to in his post.

You would expect companies to follow these best practices, and many small and medium enterprises that started with PLM did so. However, I discovered there was, and still is, a challenge with legacy (people and process), particularly in larger enterprises.

The challenge with legacy

The technology was there; the usability was not. Many implementations of a PLM system go through a critical stage. Are companies willing to change their methodology and habits to align with common best practices, or do they still want to implement their unique ways of working (from the past)?

“The embedded process is limiting our freedom, we need to be flexible”

is an often-heard statement. When every step is micro-managed in the PLM system, you create a bureaucracy detested by the user. In general, when the processes are implemented in a way first focusing on crucial steps with the option to improve later, you will get the best results and acceptance. Nowadays, we could call it an MVP approach.

I have seen companies that created a task or issue for every single activity a person should do. Managers loved the (demo) dashboard. It never led to success, as the approach created frustration at the end-user level when their To-Do lists grew and grew.

Another example of the micro-management mindset is when I worked with a company that had the opposite definition of Version and Revision in their current terminology. Initially, they insisted that the new PLM system should support this, meaning that everywhere the interface mentioned Revision it should say Version, and vice versa.

Can you imagine the cost of implementing and maintaining this legacy per upgrade?

And then came data (for me 2014 – now)

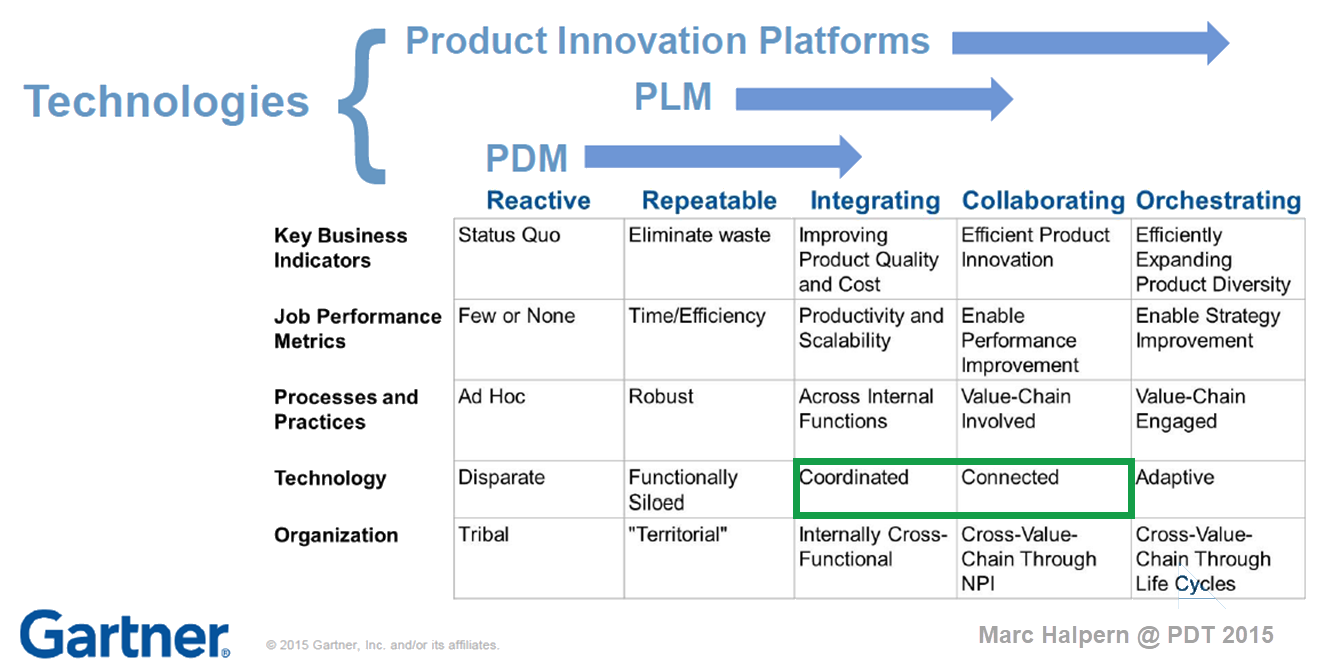

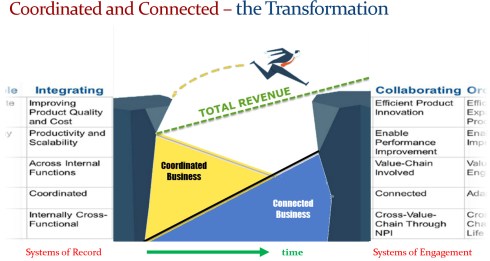

In 2015, the pivotal PLM Roadmap/PDT conference on Product Innovation Platforms brought the idea of framing digital transformation in the PLM domain in a single sentence: From Coordinated to Connected. See the original image from Marc Halpern below; those who have read my posts over the years have seen this terminology’s evolution. Now I would say (till 2024): From Coordinated to Coordinated and Connected.

A data-driven approach was not new at that time. Roughly speaking, around 2006 – close to the introduction of the smartphone – there was already a trend spurred by better global data connectivity at lower cost. Easy connectivity allowed PLM to expand into industries that were not closely connected to 3D CAD systems (CATIA, CREO or NX). Agile PLM, Aras, and SAP PLM became visible – PLM was no longer only for design management but also for go-to-market governance in the CPG and apparel industries.

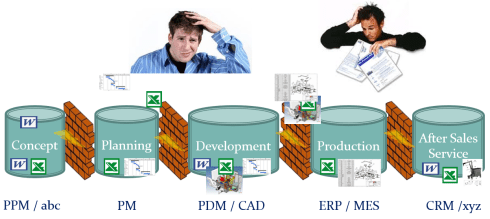

However, a data-driven approach was still rare in mainstream manufacturing companies, where drawings, office documents, email and Excel were the main information carriers next to the dominant ERP system.

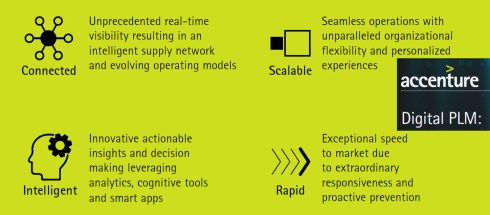

A data-driven approach was a consultant’s dream, and when looking at the impact of digital transformation in other parts of the business, why not for PLM, too? My favorite and still valid 2014 image is the one below from Accenture describing Digital PLM. Here business and PLM come together – the WHY!

Again, the challenge with legacy

At that time, I saw a few companies linking their digital transformation to implementing a new PLM system. Those were the days when the PLM vendors were battling for the big enterprise deals, sometimes motivated by an IT mindset that unifying the existing PDM/PLM systems would fulfill the digital dream. Emotion, not science, was winning. Read the PLM blame game – still relevant.

One of my key observations is that companies struggle when they approach PLM transformation with a migration mindset. Moving from Coordinated to Connected isn’t just about technology—it’s about fundamentally changing how we work. Instead of a document-driven approach, organizations must embrace a data-driven, connected way of working.

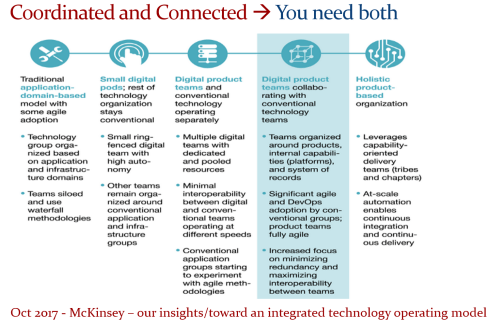

The PLM community increasingly agrees that PLM isn’t a single system; it’s a strategy that requires a federated approach—whether through SaaS or even beyond it.

Before AI became the hype, we discussed the digital thread, digital twins, graph databases, ontologies, and data meshes. Legacy – people (skills), processes (rigid) and data (not reliable) – is the elephant in the room. Yet, the biggest challenge remains: many companies see PLM transformation as just buying new tools.

A fundamental transformation requires a hybrid approach—maintaining traditional operations while enabling multidisciplinary, data-driven teams. However, this shift demands new skills and creates the need to learn and adapt, and many organizations hesitate to take that risk.

In his Product Data Plumber Perspective on 2025, Rob Ferrone also addressed the challenge of moving forward, and I liked one of his responses in the underlying discussion that says it all – it is hard to get out of your day-to-day comfort (and data):

Rob Ferrone’s quote:

Transformations are announced, followed by training, then communication fades. Plans shift, initiatives are replaced, and improvements are delayed for the next “fix-all” solution. Meanwhile, employees feel stuck, their future dictated by a distant, ever-changing strategy team.

And then there is Artificial Intelligence (2024 ……)

In the past two years, I have been reading and digesting a lot of news related to AI, particularly generative AI.

Initially, I was a little skeptical because of all the hallucinations and hype; however, the progress in this domain is enormous.

I believe that AI has the potential to dramatically change our digital thread and digital twin concepts, where the focus so far has been on the digital continuity of data.

Now this digital continuity might not be required, judging by articles like The End of SaaS (an increasingly loud voice), the use of the Fusion Strategy (the importance of AI) and, on a smaller scale, an (academic) example I learned about last year: the Swedish Arrowhead™ fPVN project.

I hope that five years from now, there will not be a paragraph with the title Pity there was again legacy.

We should have learned from the past that there is always a first wave of tools – they come with big hype and promises – think about the Stargate Project but also DeepSeek.

Still, remember that change comes from doing things differently, not from efficiency gains. To do things differently, you need an educated, visionary management with the power and skills to take a company in a new direction. If not, legacy will win (again).

Conclusion

In my 25 years of working in the data management domain, now known as PLM, I have seen several impressive new developments – from 2D to 3D, from documents to data, from physical prototypes to models and more. All these developments took decades to become mainstream. While the technology was there, the legacy held us back. Will this ever change? Your thoughts?
