In my earlier post, The weekend after PDT Europe, I wrote about the first day of this interesting conference. We ended that day with some food for thought related to a bimodal PLM approach. Now I will take you through the highlights of day 2.

Interoperability and openness in the air (aerospace)

I believe Airbus and Boeing are among the most challenged companies when it comes to PLM. They have to cope with their stakeholders and a massive number of suppliers, constrained by a strong focus on safety and quality. And as airplanes have a long lifetime, keeping data accessible and available for over 75 years is a massive challenge. The morning was opened by presentations from Anders Romare (Airbus) and Brian Chiesi (Boeing), who remarked that they could swap roles as presenters because the situations at Airbus and Boeing are so alike.

Anders Romare started with a presentation called Digital Transformation through an e2e PLM backbone, in which he explained the concept of extracting data from the various silo systems in the company (CRM, PLM, MES, ERP) to make data available across the enterprise. In particular, for its business transformation towards digital capabilities, Airbus needed, and created, a new architecture on top of the existing business systems, focusing on data ("Data is the new oil").

To create a data-driven environment, Airbus extracts and normalizes data from its business systems and provides a data lake with integrated data, on top of which various apps can run to offer digital services to existing and new stakeholders on any type of device. The data-driven environment gives people information in context and in near real time, so they can make the right decisions. Currently, these apps run on top of this data layer.

Now imagine that information captured by these apps could be stored or directed back into the original architecture supporting the standard processes. This would be a real example of the bimodal approach discussed on day 1. As a closing remark, Anders also stated that three years ago digital transformation was not really visible at Airbus; now it is a must.
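
To make the extract-and-normalize idea a bit more concrete, here is a minimal sketch in Python. It is not Airbus's architecture: the two source extracts, their field names and the `FIELD_MAP` vocabulary are hypothetical examples, chosen only to show how records from different silos can be mapped to one shared vocabulary and merged into a single view per part.

```python
from collections import defaultdict

# Hypothetical extracts from two silo systems; each uses its own field names.
PLM_EXTRACT = [{"PartNo": "ab-100 ", "Desc": "Bracket", "Rev": "C"}]
ERP_EXTRACT = [{"material_id": "AB-100", "stock_qty": 42, "plant": "P1"}]

# Hypothetical mapping from each system's fields to one shared vocabulary.
FIELD_MAP = {
    "PLM": {"PartNo": "part_number", "Desc": "description", "Rev": "revision"},
    "ERP": {"material_id": "part_number", "stock_qty": "quantity_on_hand", "plant": "site"},
}


def normalize(system: str, record: dict) -> dict:
    """Rename fields to the shared vocabulary and unify the identifier format."""
    mapping = FIELD_MAP[system]
    out = {mapping[key]: value for key, value in record.items() if key in mapping}
    out["part_number"] = out["part_number"].strip().upper()
    return out


def build_data_layer(extracts: dict[str, list[dict]]) -> dict[str, dict]:
    """Merge normalized records from all systems into one view per part number."""
    layer: dict[str, dict] = defaultdict(lambda: {"sources": []})
    for system, records in extracts.items():
        for record in records:
            norm = normalize(system, record)
            entry = layer[norm["part_number"]]
            entry.update(norm)  # naive: a later system overwrites shared fields
            entry["sources"].append(system)
    return dict(layer)


layer = build_data_layer({"PLM": PLM_EXTRACT, "ERP": ERP_EXTRACT})
print(layer["AB-100"])
```

Both extracts end up in one record keyed by `AB-100`, listing PLM and ERP as sources. The "last write wins" merge is deliberately naive; a real data layer would need agreed ownership per attribute, which is exactly where normalization and standards come in.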

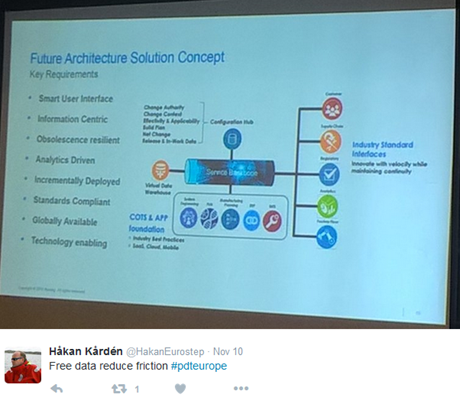

Next, Brian Chiesi from Boeing talked about Data Standards: A strategic lever for Boeing Commercial Airplanes. Brian described the complex landscape at Boeing: 2,500 applications, 5,000 servers and 900 changes annually (about 3 per day) impacting 40,000 users. There is a lot of data replication because many systems need their own proprietary format. Brian estimated that where 12 copies exist now, in an ideal world 2 or 3 would do. Brian presented a future concept similar to Airbus's, where the traditional business systems (Systems Engineering, PLM, MRP, ERP, MES) are all connected through a service backbone. This new architecture is needed to address modern technology capabilities (social, mobile, analytics, cloud, IoT, automation, etc.).

An interesting part of this architecture is that Boeing aims to exchange data with the outside world (customers, regulators, supply chain, analytics, manufacturing) through industry-standard interfaces to achieve an optimal flow of information. Standardization would reduce the number of customized applications, minimize the costs of integration and migration, break the obsolescence cycle and enable future technologies. Brian is convinced that companies need to pull for standards; the vendors will then deliver. Boeing will push for standards in its contracts and will actively work together with five major Aerospace & Defense companies to define the required PLM capabilities and speak with a unified voice to PLM solution providers.

My conclusion on these two aerospace giants is that they express the need to adapt and move towards modern digital business, no longer following the linear approach of the classic airplane programs. Incremental innovation in various domains is the future. The existing systems need to remain in place to support the current fleet for many, many years to come. The new data-driven layer needs to be connected to them through normalization and standardization of data. For the future, a focus on standards is a must.

Simon Floyd from Microsoft talked about The Impact of Digital Transformation in the Manufacturing Enterprise, walking us through digital transformation, IoT and analytics in the product lifecycle, illustrated by examples from the Rolls-Royce turbine engine. It was a good and compelling story that any vendor could use to explain digital transformation and its relation to IoT. Next, Simon walked through the Microsoft portfolio and the solution components that support a modern digital enterprise based on various platform services. At the end, Simon explained how, for example, ShareAspace, built on Microsoft infrastructure and technology, can act as an interface between various PLM environments throughout the product lifecycle.

Simon's presentation was followed by a panel discussion with the theme: When are history and legacy an asset and a barrier to entry, and when do they become a burden and an invitation to future competitors?

Marc Halpern (Gartner) again brought up bimodal thinking here. Aras is bimodal. The classical PLM vendors running in mode 1 will not change radically, and the new mode 2 vendors will need time to build credibility. Other companies mentioned here, such as PropelPLM (PLM on the Salesforce platform) and Onshape, will battle over the next five years to become significant and might disrupt the market.

Simon Floyd (Microsoft) mentioned that, to keep innovation within Microsoft, the company allows startups inside the organization that are initially free of Microsoft's usual constraints. The aim is to keep disruption inside your own company instead of being disrupted from outside. Another point mentioned was that Tesla did not want to wait until COTS software became available for its product development and support platform, so it develops parts of it in-house. Are we going back to the early days of IT?

I believe this is an interesting trend too, provided the building blocks for such a solution architecture are based on open (standardized?) services.

Data Quality

After lunch, the conference split into three streams; I participated in the "Creating and managing information quality" stream. As I discussed in my presentation on day 1, there is a need for accurate data, starting as soon as possible, because, as all the speakers made clear, the future of our businesses will run on data. This is no secret, yet many companies still do not act.

In the context of data quality, Jean Brange from Boost presented the ISO 8000 framework for data and information quality management. This standard is currently under development and will help companies address their digital needs. The challenge of data quality is that data must be stored with the right syntax and semantics to be usable, and in addition it needs to be pragmatic: what are we going to store that will have value? Then there is the challenge of evaluating the content. Empty fields can be discovered, but how do you judge the quality of a field that does have a value? ISO 8000 is a framework, like ISO 9000 for quality management, that allows companies to work methodically towards the data quality they need.
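
The gap between "the field is empty" and "the field has a value, but is it any good?" can be illustrated with a minimal Python sketch. The record layout, the part-number pattern and the list of mass units are hypothetical examples, and this is not an implementation of ISO 8000; it only separates a completeness check from syntactic and semantic checks. The pragmatic question (is this data worth storing at all?) remains a business decision and is not something a validator like this can answer.

```python
import re
from dataclasses import dataclass

# Hypothetical rules for illustration only; not taken from ISO 8000.
PART_NUMBER_PATTERN = re.compile(r"^[A-Z]{2}-\d{5}$")  # assumed syntax rule
ALLOWED_MASS_UNITS = {"kg", "g", "lb"}                  # assumed vocabulary


@dataclass
class Finding:
    field: str
    level: str  # "missing", "syntax" or "semantic"
    message: str


def check_record(record: dict) -> list[Finding]:
    """Return data quality findings for one hypothetical part record."""
    findings = []
    # Completeness: empty fields are easy to discover.
    for field in ("part_number", "mass", "mass_unit"):
        if record.get(field) in (None, ""):
            findings.append(Finding(field, "missing", "field is empty"))
    # Syntax: a value is present, but is it well formed?
    pn = record.get("part_number")
    if pn and not PART_NUMBER_PATTERN.match(pn):
        findings.append(Finding("part_number", "syntax", f"{pn!r} does not match the expected pattern"))
    # Semantics: a well-formed value can still be meaningless in context.
    unit = record.get("mass_unit")
    if unit and unit not in ALLOWED_MASS_UNITS:
        findings.append(Finding("mass_unit", "semantic", f"{unit!r} is not a unit of mass"))
    mass = record.get("mass")
    if isinstance(mass, (int, float)) and mass <= 0:
        findings.append(Finding("mass", "semantic", "mass must be positive"))
    return findings


good = {"part_number": "AB-12345", "mass": 2.5, "mass_unit": "kg"}
bad = {"part_number": "ab12345", "mass": -1.0, "mass_unit": "mm"}
for finding in check_record(bad):
    print(finding.level, finding.field, finding.message)
```

The `bad` record has no empty fields, so a simple completeness report would call it perfect, while the syntactic and semantic checks each flag a problem.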

Magnus Färneland from Eurostep addressed data quality as the foundation for automation, based on the latest developments Eurostep has built on top of the already rich PLCS data model. The PLCS data model is impressive because it already supports all facets of the product lifecycle, from design through development to operations. By introducing soft typing, Eurostep allows more detailed tuning of the data model to ensure configuration management. At which stage of the lifecycle is certain information required (and when does it become mandatory)? Consistent data quality is enforced through business process logic.
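
The idea that information becomes mandatory at a given lifecycle stage, enforced by process logic, can be sketched as a rule table that is checked before an item moves to the next stage. The stage names, the attributes and the `can_advance` gate are hypothetical; this is not Eurostep's soft-typing mechanism or the PLCS data model, only a minimal illustration of the principle.

```python
from enum import IntEnum


class Stage(IntEnum):
    """Hypothetical lifecycle stages, ordered from early to late."""
    DESIGN = 1
    DEVELOPMENT = 2
    PRODUCTION = 3
    OPERATION = 4


# Hypothetical rule table: the stage from which each attribute is mandatory.
MANDATORY_FROM = {
    "name": Stage.DESIGN,
    "material": Stage.DEVELOPMENT,
    "supplier": Stage.PRODUCTION,
    "serial_number": Stage.OPERATION,
}


def missing_for(item: dict, stage: Stage) -> list[str]:
    """List attributes that are mandatory at `stage` but empty on `item`."""
    return sorted(
        attr for attr, required_from in MANDATORY_FROM.items()
        if stage >= required_from and not item.get(attr)
    )


def can_advance(item: dict, target: Stage) -> tuple[bool, list[str]]:
    """Process gate: allow the transition only if the item is complete for `target`."""
    missing = missing_for(item, target)
    return (not missing, missing)


part = {"name": "Bracket", "material": "Ti-6Al-4V"}
print(can_advance(part, Stage.DEVELOPMENT))  # (True, [])
print(can_advance(part, Stage.PRODUCTION))   # (False, ['supplier'])
```

Keeping the rules in a table rather than hard-coding them in the gate mirrors the point of soft typing: the requirements can be tuned per organization or per lifecycle stage without changing the underlying model.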

The conference ended with Marc Halpern making a plea to Take Control of Your Product Data or Lose Control of Your Revenue. Marc painted a future (horror) scenario in which, due to digital transformation, the real "big fish" will be the owner of the digital business ecosystem, and once you are locked in with a vendor, that vendor can raise its prices to save its own business without any respect for your company's business model. Marc gave some examples where a vendor raised prices by up to 40% under a subscription model. Therefore, even when you are just closing a new agreement with a vendor, you should negotiate a price guarantee and an agreed bandwidth for increases. On top of that, you should prepare an exit strategy: prepare your data for migration and keep backups based on standards. Marc also gave examples of billions in extra costs related to poor data quality and data loss. It can hurt! Finally, Marc ended with recommendations to treat master data management and data quality as a necessary company strategy.

Gerard Litjens from CIMdata, as the closing speaker, gave a very comprehensive overview, The Internet of Things: What does it mean for PLM?, based on CIMdata's vision. As every vendor in this space explains the relation between IoT and PLM differently, it was a good presentation to use as a basis for the discussion on how IoT influences our PLM landscape. Because of the length of this blog post, I will not go into further detail here; the overview is worth obtaining.

Concluding: PDT2016 is a crucial PLM conference for people who are interested in the details of PLM. Other conferences might address high-level customer stories; at PDT2016 it is about the details and about sharing the advantages of using standards. Standards are crucial for a data-driven environment, where business platforms, with all their constraints, will be the future. And I saw that more and more companies are working with standards in a pragmatic manner, observing the benefits and pushing for more data standards. It is not just theory.

See you next year?

1 comment

November 22, 2016 at 10:41 am

Håkan Kårdén (@HakanEurostep)

Jos,

Well captured. A change is needed; will it be driven by aerospace for the reasons you mention? I guess they will at least be strong influencers. Great presentations, and a panel with openness from the speakers.

I agree with you: Marc Halpern (Gartner) presented what could be a future horror scenario. But how many end-user companies take notice?

Best Regards,

Håkan

Håkan hi, I agree that aerospace, and potentially nuclear new build, have the highest need to manage data in a safe and secure manner over a long lifetime, and therefore realize that standards are a way to mitigate obsolescence and the isolation of data in silos. As for your second remark: yes, only a few companies have an exit strategy; they go with the flow (which reminds me of the book Thinking, Fast and Slow by Daniel Kahneman).

best regards

Jos
